10.20

In Defense of Killer Robots

Rosa Brooks

Rosa Brooks (b. 1970) is a law professor at Georgetown University, where she teaches courses on international relations and national security. She has also served in the U.S. Defense Department and been a consultant to many organizations, including Human Rights Watch. She writes a regular opinion column for Foreign Policy, from which this piece is taken.

Robots just can’t catch a break. If we’re not upbraiding them for taking our jobs, we’re lambasting their alleged tendency to seize control of spaceships, computer systems, the Earth, the galaxy or the universe beyond. The Bad Robot has long been a staple of film and fiction, from HAL (“I’m sorry, Dave, I’m afraid I can’t do that”) to the Terminator, but recently, bad robots have migrated from the screen to the world of military ethics and human rights campaigns.

Specifically, a growing number of ethicists and rights advocates are calling for a global ban on the development, production, and use of fully autonomous weapons systems, which are, according to Human Rights Watch, “also” — and rather conveniently — “known as killer robots.” (Not to their mothers, I’m sure!)

The term does tend to have a chilling effect even upon those harboring a soft spot for R2-

Let’s review the case against the robots. The core concern relates to military research into weapons systems that are “fully autonomous,” meaning that they can “select and engage targets without human intervention.” Today, even our most advanced weapons technologies still require humans in the loop. Thus, Predator drones can’t decide for themselves whom to kill: it takes a human being — often dozens of human beings in a complex command chain — to decide that it’s both legal and wise to launch missiles at a given target. In the not-

5 According to the Campaign to Stop Killer Robots, this would be bad, because a) killer robots might not have the ability to abide by the legal obligation to distinguish between combatants and civilians; and b) “Allowing life or death decisions to be made by machines crosses a fundamental moral line” and jeopardizes fundamental principles of “human dignity.”

Neither of these arguments makes much sense to me. Granted, the thought of an evil robot firing indiscriminately into a crowd is dismaying, as is the thought of a rogue robot, sparks flying from every rusting joint, going berserk and turning its futuristic super-

Arguably, computers will be far better than human beings at complying with international humanitarian law. Face it: we humans are fragile and panicky creatures, easily flustered by the fog of war. Our eyes face only one direction; our ears register only certain frequencies; our brains can process only so much information at a time. Loud noises make us jump, and fear floods our bodies with powerful chemicals that can temporarily distort our perceptions and judgment.

As a result, we make stupid mistakes in war, and we make them all the time. We misjudge distances; we forget instructions; we misconstrue gestures. We mistake cameras for weapons, shepherds for soldiers, friends for enemies, schools for barracks, and wedding parties for terrorist convoys.

In fact, we humans are fantastically bad at distinguishing between combatants and civilians — and even when we can tell the difference, we often make risk-

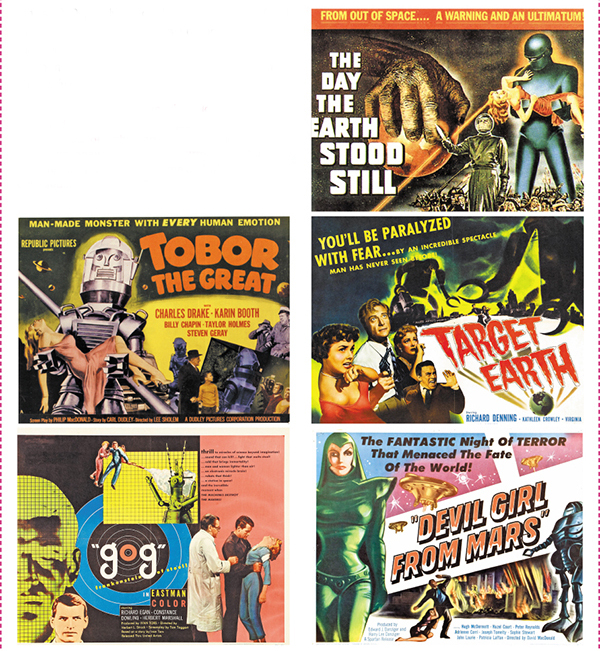

Seeing Connections

In the 1950s, Hollywood released a number of “killer robot” movies, most likely in response to fears about the technology that had produced the atomic weapons used in World War II, weapons that were also driving the Cold War with the Soviet Union.

Look at these posters for movies released during this time, and explain how their images illustrate this fear of technology. Then, identify movies or TV shows today that reflect current societal fears about technology. What has stayed the same, and what has changed since the 1950s?

10 Computers, in contrast, are excellent in crisis and combat situations. They don’t get mad, they don’t get scared, and they don’t act out of sentimentality. They’re exceptionally good at processing vast amounts of information in a short time and rapidly applying appropriate decision rules. They’re not perfect, but they’re a good deal less flawed than those of us cursed with organic circuitry.

We assure ourselves that we humans have special qualities no machine can replicate: we have “judgment” and “intuition,” for instance. Maybe, but computers often seem to have better judgment. This has already been demonstrated in dozens of different domains, from aviation to anesthesiology. Computers are better than humans at distinguishing between genuine and faked expressions of pain; Google’s driverless cars are better at avoiding accidents than cars controlled by humans. Given a choice between relying on a human to comply with international humanitarian law and relying on a well-

Opponents of autonomous weapons ask whether there’s a legal and ethical obligation to refrain from letting machines make decisions about who should live and who should die. If it turns out, as it may, that machines are better than people at applying the principles of international humanitarian law, we should be asking an entirely different question: Might there be a legal and ethical obligation to use “killer robots” in lieu of — well, “killer humans”?

Confronted with arguments about the technological superiority of computers over human brains, those opposed to the development of autonomous weapons systems argue that such consequentialist reasoning is insufficient. Ultimately, as a 2014 joint report by Human Rights Watch and Harvard’s International Human Rights Clinic argues, it would simply be “morally wrong” to give machines the power to “decide” who lives and who dies: “As inanimate machines, fully autonomous weapons could truly comprehend neither the value of individual life nor the significance of its loss. Allowing them to make determinations to take life away would thus conflict with the principle of [human] dignity.”

I suppose the idea here is that any self-

15 I’m not buying it. Death is death, and I don’t imagine it gives the dying any consolation to know their human killer feels kind of bad about the whole affair.

Let’s not romanticize humans. As a species, we’re capable of mercy and compassion, but we also have a remarkable propensity for violence and cruelty. We’re a species that kills for pleasure: every year, more than half a million people around the globe die as a result of intentional violence, and many more are injured, starved, or intentionally deprived of shelter, medicine, or other essentials. In the United States alone, more than 16,000 people are murdered each year, and another million-

Plug in the right lines of code, and robots will dutifully abide by the laws of armed conflict to the best of their technological ability. In this sense, “killer robots” may be capable of behaving far more “humanely” than we might assume. But the flip-

In the 1960s, experiments by Yale psychologist Stanley Milgram demonstrated the terrible ease with which ordinary humans could be persuaded to inflict pain on complete strangers; since then, other psychologists have refined and extended his work. Want to program an ordinary human being to participate in genocide? Both history and social psychology suggest that it’s not much more difficult than creating a new iPhone app.

“But wait!” you say, “That’s all very well, but aren’t you assuming obedient robots? What if the killer robots are overcome by bloodlust or a thirst for power? What if intelligent, autonomous robots decide to override the code that created them, and turn upon us all?”

20 Well: if that happens, killer robots will finally be able to pass the Turing Test. When the robots go rogue — when they start killing for sport or out of hatred, when they start accruing power and wealth for fun — they’ll have ceased to be robots in any meaningful sense. For all intents and purposes, they will have become humans — and it’s humans we’ve had reason to fear, all along.

Understanding and Interpreting

Identify Rosa Brooks’s central claim about why she is not opposed to killer robots.

Reread paragraphs 4 and 5, and paraphrase the objections that Brooks claims some people have to killer robots.

According to Brooks, what human flaws make robots, even killer ones, more effective in situations like the “fog of war” (par. 7)?

What significant assumptions does Brooks make about future developments in robot technology and programming?

Summarize the concerns that Human Rights Watch has about killer robots (par. 13) and explain how Brooks responds to those concerns.

Brooks claims that humans can be programmed to behave like robots (par. 18). What evidence does she use to support this claim?

Analyzing Language, Style, and Structure

Reread the first paragraph. How does this opening act as a hook for the reader?

In an argumentative piece like this one, an author will often begin by laying out the evidence that supports his or her position. But notice that beginning with paragraph 4, Brooks chooses to “review the case against the robots.” What does she achieve by beginning with the counterarguments instead of her own position?

Brooks often uses a sarcastic tone toward those who are afraid of killer robots. Identify one or more places where she does so, and explain how this tone helps her achieve her purpose.

In paragraph 6, Brooks uses descriptive language and a series of rhetorical questions. How does she use these devices to help prove her point about killer robots?

Reread paragraph 17 and explain how Brooks’s language choices and repetition help her build her argument.

Summarize the point Brooks makes in her conclusion, and explain how it becomes one more piece of evidence to support her claim.

Skim back through the argument, looking for the kinds of evidence that Brooks uses. Overall, does she rely more on pathos or logos to support her claim? Explain.

Connecting, Arguing, and Extending

Do you agree or disagree with Brooks when she says that she would take her “chances with the killer robot any day” (par. 11)? Why?

Research the Milgram experiment that Brooks refers to in paragraph 18, and explain whether you agree or disagree with the conclusion she draws from it: that “humans can behave far more like machines than we generally assume” (par. 17).

Research the Turing Test that Brooks refers to in paragraph 20, and explain how close scientists are to designing a robot that can pass the test.

Read the following statement by the Campaign to Stop Killer Robots, which is an international coalition that is working to preemptively ban the research and development of fully autonomous weapons systems. Write an argument in which you support, oppose, or qualify the coalition’s claim.

The Campaign to Stop Killer Robots calls for a preemptive and comprehensive ban on the development, production, and use of fully autonomous weapons. The prohibition should be achieved through an international treaty, as well as through national laws and other measures. “Allowing life or death decisions on the battlefield to be made by machines crosses a fundamental moral line and represents an unacceptable application of technology,” said Nobel Peace Laureate Jody Williams of the Nobel Women’s Initiative. “Human control of autonomous weapons is essential to protect humanity from a new method of warfare that should never be allowed to come into existence.”