7.2 Operant Conditioning

Classical conditioning is just one form of learning. A second is operant conditioning, in which behavior is modified by its consequences.

The core idea behind operant conditioning is that the consequences that follow any bit of behavior alter the likelihood that the behavior will be performed in the future. This, in principle, is true for virtually any behavior by any organism. For instance, if your dog sits up and there is a positive consequence (a dog treat), it will be more likely to sit up in the future. If you party the night before an exam and there is a negative consequence (you fail), you will be less likely to party the night before an exam in the future.

What positive consequence would you like to experience as a result of reading this chapter?

Operant Conditioning in Everyday Life

Preview Question

What is an example of operant conditioning in everyday life?

Examples of operant conditioning are abundant in everyday life. Suppose that you see a parent arguing with a small child and eventually giving in to the child’s demands. You may realize that the child, as a result, is more likely to argue in the future. The parent’s giving in is a positive consequence that raises the likelihood of the child repeating the behavior.

Alternatively, imagine you have a friend you’d like to know better. You hope the person will “open up” by talking more about personal thoughts and feelings. You know that, for this to occur, you need to pay attention and display interest whenever your friend shows the slightest sign of discussing personal topics. Your attention and interest are consequences that raise the likelihood that he or she will continue to open up. If, instead, you yawn and look at your watch, these consequences will make that behavior less likely.

Consequences alter behavior. The challenge for psychological science is to understand the exact processes through which learning from consequences occurs.

WHAT DO YOU KNOW?…

Question 8

True or False?

According to the concept of operant conditioning, behaviors followed by negative consequences are likely to recur.

A. True

B. False

Comparing Classical Conditioning to Operant Conditioning

Preview Question

What are the two main differences between classical conditioning and operant conditioning?

Operant conditioning and classical conditioning differ in two main ways: (1) the order of the stimulus and the behavior, and (2) the type of behavior that is learned.

Order of stimulus and behavior: In classical conditioning, a stimulus comes first and triggers a response, that is, some behavior. For example, a bell rings (the stimulus) and then the dog salivates (the behavior). In operant conditioning, this order is reversed: A stimulus follows a behavior. You buy a raffle ticket (the behavior) and then you win a TV (a desired stimulus). You try a new strategy in a video game (the behavior) and your video game character is blown to bits (an undesired stimulus). Because the stimuli follow responses, they are called response consequences. Operant conditioning researchers examine how different types of consequences affect the tendency to perform behaviors again in the future.

Type of behavior learned: In classical conditioning, researchers study behaviors that are immediately triggered by an environmental stimulus. A bell rings, and it triggers salivation in Pavlov’s dog. The rat appears, and it triggers fear in Little Albert. In operant conditioning, researchers study actions that appear to be “spontaneous”; that is, they occur even when no triggering stimulus is in sight. You may suddenly decide to text a friend, even though she is not there asking you to send a text. Or you may spontaneously turn off a TV and start writing a paper for class, even though your professor is not standing around reminding you to work on the paper. Importantly, these behaviors affect the external world (including other people). The text to your friend causes her to cheer up. The work you put into the paper causes your professor to be impressed by your writing and to grade the paper as an A. Most behaviors influence, or “operate on,” the environment; this is why these behaviors are called operants and why the process through which people learn them is called operant conditioning (Skinner, 1953).

Operant conditioning research, then, greatly expands the scope of learning processes beyond those studied by Pavlov. Let’s now look, in detail, at psychological processes in operant conditioning.

In what ways have you “operated on” the environment today?

WHAT DO YOU KNOW?…

Question 9

Which of the following is an example of learning via classical conditioning and which is an example of learning via operant conditioning?

a. You help your boss out of a jam and get a bonus.

b. After receiving the bonus and otherwise being treated well by your boss, you feel happy whenever you see her.

a. operant, b. classical

Thorndike’s Puzzle Box

Preview Questions

What was the main research result in Thorndike’s studies of how animals learn to escape from puzzle boxes?

What is the law of effect?

Research on operant conditioning has a long history in psychology. It can be traced to work by an American psychologist named Edward L. Thorndike (1874–1949).

At about the same time that Pavlov began to study classical conditioning in Russia, Thorndike started experiments on a different type of learning at Columbia University in New York. He placed cats in puzzle boxes, enclosures the cat could escape from by performing a relatively simple behavior (Figure 7.9). For example, the puzzle box might have a door to which a string was attached; the cat could escape by pulling the string, which opened the door. To provide the cats with an incentive to escape, Thorndike put them in the puzzle boxes when they were hungry and placed some food outside the puzzle box (Chance, 1999).

TRIAL-

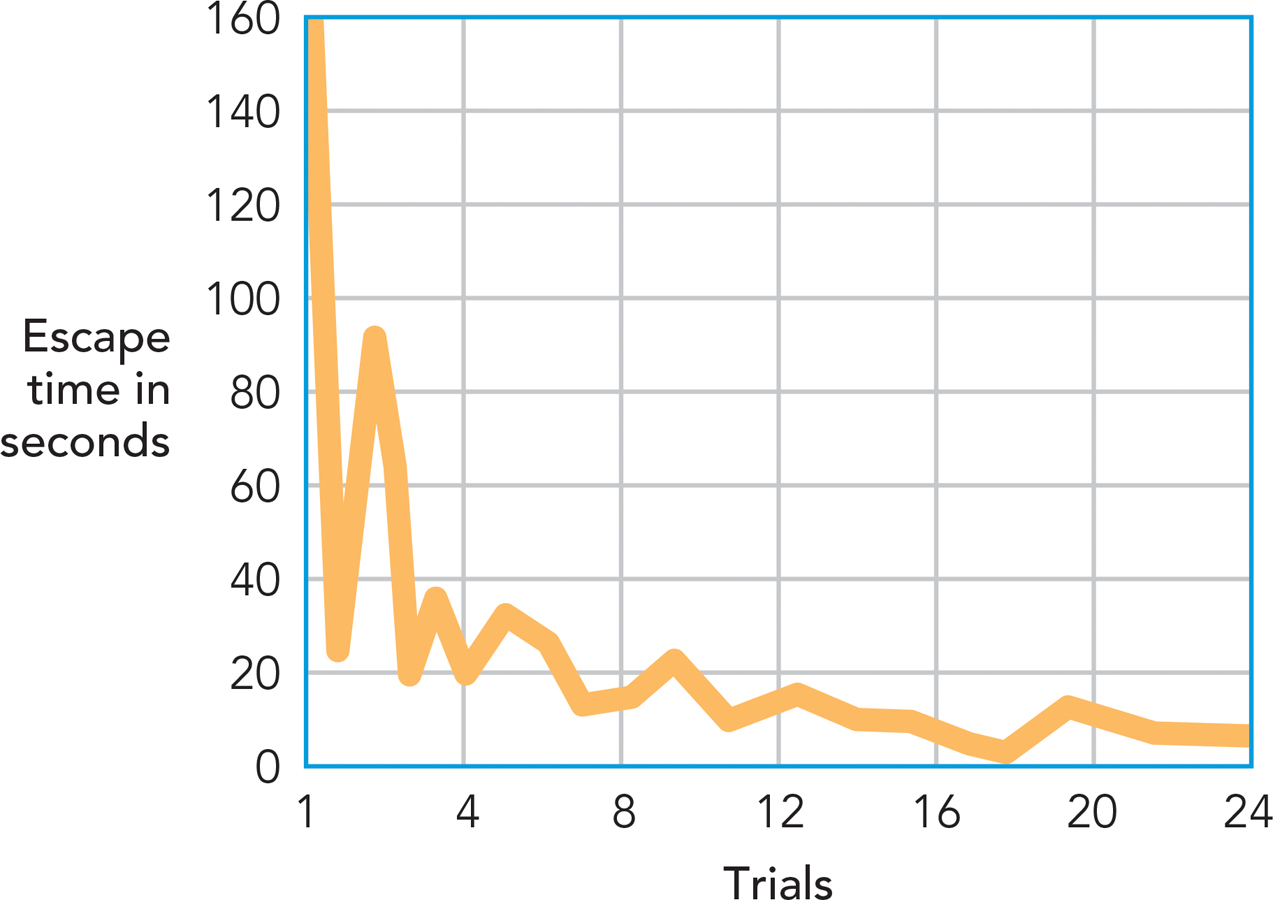

Thorndike discovered that the cats gradually escaped more quickly (Figure 7.10). Initially, it would take them a couple of minutes. They would waste time on behaviors that didn’t help them to get out of the box, such as clawing at the wall. But after a few trials, their behavior became more focused. They spent almost all their time performing actions (such as trying to pull the string connected to the door) that, on previous trials, had enabled them to escape. Eventually, they escaped the puzzle box in just a matter of seconds.

Thorndike found, then, that the cats rapidly learned. Their behavior changed as a result of their experience in the puzzle box. Over time, they learned to perform only those behaviors that, in the past, had been followed by successful escape.

THE LAW OF EFFECT. Thorndike realized that his discovery did not pertain merely to cats in boxes. He had discovered a general principle of learning that he called the law of effect. The law describes relations among (1) the situation the animal is in, (2) a behavior it performs, and (3) an outcome that follows the behavior. The law of effect states that when an organism performs a behavior that leads to a satisfying outcome in a given situation (i.e., an outcome that is pleasurable or beneficial), it will be more likely to perform that behavior when it encounters the same situation in the future. The likelihood of the behavior, then, goes up when the response consequence—

The law of effect explains the cat’s behavior. Each time the cat escapes the box, this satisfying outcome strengthens the connection between the stimulus (the puzzle box with its door closed) and the behavior that enabled the cat to escape (pulling the string attached to the door). When placed back in the puzzle box, the cat is thus likely to perform the behavior again.

THINK ABOUT IT

If you play a video game, you adapt your strategies, doing more of what works best. The law of effect explains this: Behavior that leads to good outcomes becomes more frequent. But if you so excel at the game that it becomes easy, you grow bored with it and stop playing. Can the law of effect explain this change?

WHAT DO YOU KNOW?…

Question 10

The following information is incorrect. Explain why: “Thorndike’s work with cats in puzzle boxes led him to conclude that cats, like most organisms, cannot learn through their experiences. This led him to formulate the law of effect, which states that the likelihood of a behavior is not tied to consequences.”

Skinner and Operant Conditioning

Preview Questions

In Skinner’s analysis of operant conditioning, what is a reinforcer?

What is the difference between positive and negative reinforcement?

How does reinforcement differ from punishment?

After Thorndike, many psychologists explored how animals learn from experience (Bower & Hilgard, 1981); indeed, the study of learning came to dominate American psychology from the 1920s through the early 1960s. Among these many researchers, one became the most eminent psychologist of the twentieth century: B. F. Skinner (Haggbloom et al., 2002).

Skinner (1904–

CONSEQUENCES SHAPE BEHAVIOR. Skinner’s central insight was the same as Thorndike’s: Behavior is shaped by its consequences. When you do something, a consequence usually follows. Use a GPS, and you find the place you’re looking for. Tell dirty jokes to socially conservative friends, and you find they are not amused. These consequences not only influence your emotional reactions, as Pavlov noted; they also determine whether you perform these behaviors again in the future. Thanks to the positive consequence, finding your desired location, you start using your GPS more. Due to the negative consequence, the displeasure of your conservative friends, you tell them dirty jokes less often.

Although Skinner’s insight was the same as Thorndike’s, his overall contributions were far broader. Skinner not only generated experimental results showing that consequences influence behavior, but he also developed a new technology for conducting research on these influences (see Research Toolkit) that was widely adopted in psychology laboratories around the world. Furthermore, he advanced an entire school of thought, called behaviorism, which argues that all actions and experiences of all organisms must be explained by referring to the environmental influences that a given organism has encountered (Skinner, 1974). Skinner forcefully argued that behaviorism and the principles of operant conditioning could explain most of the phenomena in the field of psychology because most of psychology is concerned with behaviors that are followed by response consequences.

Central to Skinner’s theoretical system was his analysis of how two types of response consequences, reinforcers and punishments, shape behavior. Let’s examine these now.

REINFORCEMENT. In operant conditioning, the stimulus of central interest is called a reinforcer. A reinforcer is any stimulus that occurs after a response and raises the future probability of that response. Skinner’s research analyzed how reinforcers raise the probability that a reinforced behavior will occur again, thereby shaping behavior.

Skinner identified two types of reinforcement, positive and negative.

Psychologists distinguish among four types of response consequences that affect the frequency with which people perform behaviors. The frequency of behavior is increased by two types of reinforcement: positive (a reinforcing stimulus is presented) and negative (an aversive stimulus is removed). The frequency of behavior is decreased by two types of punishment: positive (an aversive stimulus is presented) and negative (a desired stimulus is taken away).

Table 7.1 Reinforcement and Punishment: Effects on Behavior

| | Increase frequency of behavior | Decrease frequency of behavior |
|---|---|---|
| Present stimulus | Positive reinforcement | Positive punishment |
| Remove stimulus | Negative reinforcement | Negative punishment |
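Because Table 7.1 is a two-by-two grid, its labeling rule can be written as a short function. This is a minimal Python sketch for illustration only; the function name `classify_consequence` and its string arguments are hypothetical, not standard terminology. Note that it asks how the future frequency of the behavior changed, not whether the stimulus was pleasant, in keeping with the definition of a reinforcer as any stimulus that raises the future probability of a response.

```python
def classify_consequence(stimulus_change: str, behavior_effect: str) -> str:
    """Label a response consequence using the 2 x 2 grid of Table 7.1.

    stimulus_change: "present" (a stimulus is added) or "remove" (one is taken away)
    behavior_effect: "increase" or "decrease" in the future frequency of the behavior
    """
    if stimulus_change not in ("present", "remove"):
        raise ValueError("stimulus_change must be 'present' or 'remove'")
    if behavior_effect not in ("increase", "decrease"):
        raise ValueError("behavior_effect must be 'increase' or 'decrease'")
    # "Positive" and "negative" mean adding vs. removing a stimulus, not good vs. bad.
    sign = "Positive" if stimulus_change == "present" else "Negative"
    # Reinforcement raises the frequency of behavior; punishment lowers it.
    kind = "reinforcement" if behavior_effect == "increase" else "punishment"
    return f"{sign} {kind}"


# Examples drawn from this section
print(classify_consequence("present", "increase"))  # dog treat after sitting up: Positive reinforcement
print(classify_consequence("remove", "increase"))   # loud music stops: Negative reinforcement
print(classify_consequence("present", "decrease"))  # scolding a child: Positive punishment
```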

What particular reinforcer would make it more likely that you would leave your residence early enough to get to school on time? Is this a positive or negative reinforcer?

Here’s another example; see if you can tell whether it’s a positive or negative reinforcer. Imagine that you have a neighbor who plays music too loudly. You yell, “Quiet!” and the neighbor turns the music off. What kind of reinforcer was the cessation of music? It was a negative reinforcer: the removal of an aversive stimulus (the loud music) makes it more likely that, the next time the music is too loud, you will yell “Quiet!” again.

Notice that “reinforcer” is defined in terms of the effects of a stimulus on the probability of behavior, not in terms of whether people like, or enjoy, the stimulus. Some stimuli that people do not like are effective positive reinforcers. Suppose you live in a region that periodically is struck with natural disasters (e.g., hurricanes or earthquakes). You don’t like the disasters, yet they are positive reinforcers. Their occasional occurrence powerfully raises the probability of some behaviors, such as purchasing electrical generators and stocking up on emergency water supplies.

PUNISHMENT. Skinner recognized that behavior is affected not only by reinforcement but also by punishment. A punishment is a stimulus that lowers the future likelihood of behavior. Scolding a misbehaving child, ticketing a speeding driver, and booing an athlete who fails to hustle are all cases of punishment; these response consequences tend to lower the likelihood of the behavior they follow.

As with reinforcement, one can identify “positive” and “negative” versions of punishment. Positive punishment is the presentation of an aversive stimulus, and negative punishment is the taking away of a desired stimulus. Table 7.1 summarizes the two types of reinforcement and punishment.

An everyday setting that illustrates the distinction between positive and negative punishment occurs in child care. When a child misbehaves, a caretaker could opt for positive punishment by presenting an aversive stimulus (e.g., scolding the child). There is, however, a popular negative-

What memorable positive and negative punishments did you experience as a child?

Punishment can get results. Yet Skinner devoted almost all his research efforts to the study of reinforcement rather than punishment. He did so for two reasons, involving effectiveness and ethics. First, although punishment can work, he regarded it as a relatively ineffective way to change behavior because people may act against someone who is punishing them. Second, Skinner judged that rewarding desirable behavior is ethically superior to punishing undesirable action (e.g., Skinner, 1948). Let’s follow Skinner’s lead and focus in detail on reinforcement processes.

WHAT DO YOU KNOW?…

Question 11

Match the example of operant conditioning on the left with its correct label on the right.

| This situation: | is an example of this kind of operant conditioning: | because: |
|---|---|---|
| 1. Your car stopped making an annoying sound after you put on your seatbelt. | a. Positive punishment | e. Applying an aversive stimulus decreased the likelihood of your repeating that behavior. |
| 2. Your teacher yelled at you for being late to class. | b. Negative reinforcement | f. Removing a desirable stimulus decreased the likelihood of your repeating that behavior. |
| 3. Your friend took you out to lunch in appreciation of your listening attentively to her problems. | c. Negative punishment | g. Removing an aversive stimulus increased the likelihood of your repeating that behavior. |
| 4. Your cell phone was taken away because you missed curfew. | d. Positive reinforcement | h. Applying a desirable stimulus increased the likelihood of your repeating that behavior. |

Four Basic Operant Conditioning Processes

Preview Questions

What is a schedule of reinforcement?

How do different reinforcement schedules affect behavior?

What is a discriminative stimulus?

How does research on discriminative stimuli explain people’s tendency to act differently in different situations?

How can organisms learn complex behaviors through operant conditioning?

Skinner discovered a number of systematic, and sometimes surprising, relations between reinforcement and behavior. As you’ll see in this section, the discoveries involved four basic operant conditioning processes: schedules of reinforcement, extinction, discriminative stimuli, and the shaping of complex behavior.

Skinner believed that his research on each process was highly relevant to human behavior (Skinner, 1953). Note, however, that his studies were conducted on animals. Why animals? According to Skinner, just as principles of physics apply to all physical objects, principles of learning apply to all organisms. Thus, for ease of conducting research, he studied relatively simple organisms (especially pigeons and rats) in a relatively simple experimental setting, a Skinner box (see Research Toolkit).

RESEARCH TOOLKIT

The Skinner Box

When scientists conduct research, they often isolate their object of study from the surrounding environment. Chemists put chemical substances into a test tube. Biologists put cell cultures into a Petri dish. This isolation is required to discover lawful scientific processes. Suppose you left a cell culture sitting out in the open instead of protecting it in a Petri dish. Dust, germs, and other objects would then become mixed together with the biological material, contaminating your experiment.

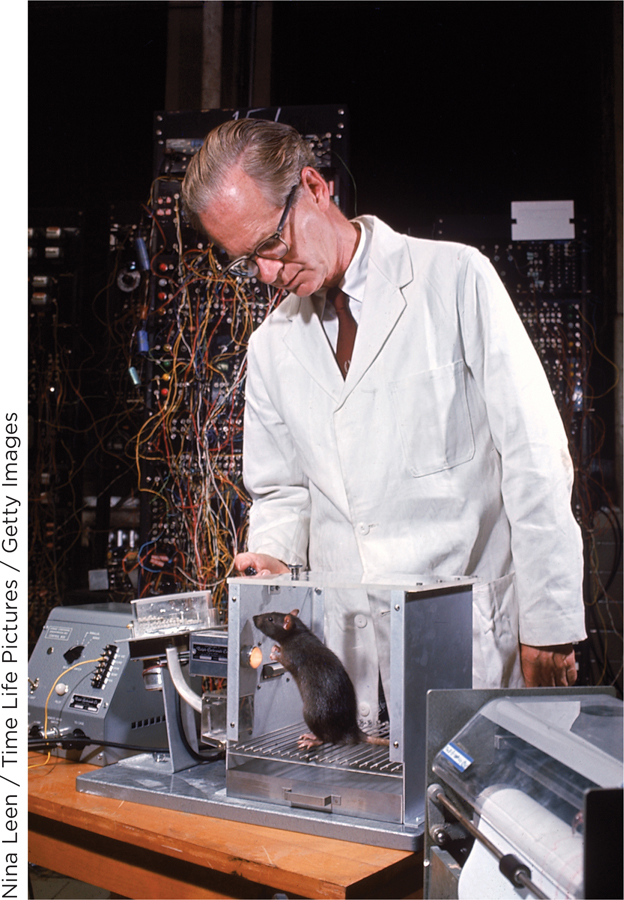

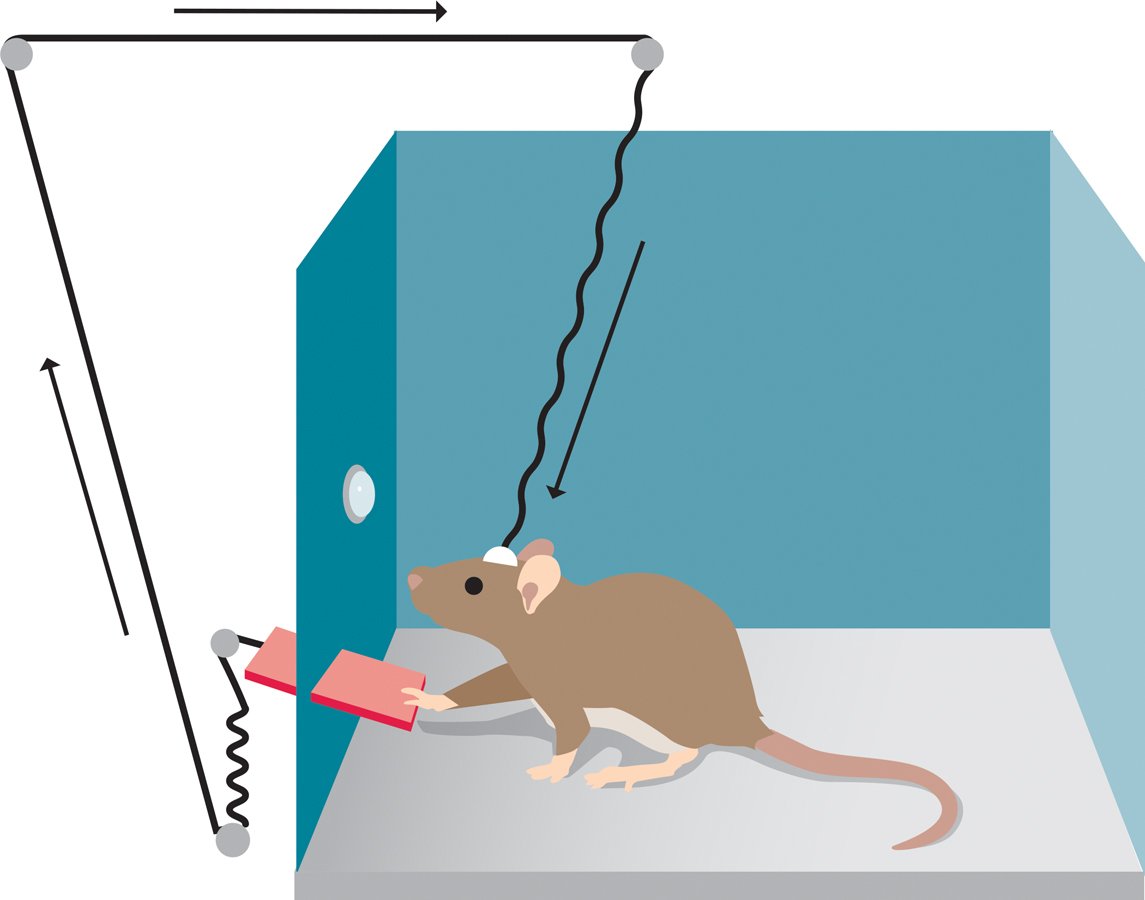

Like other scientists, psychologists who study learning need to isolate their object of study from environmental variables that could contaminate their experiments. What they need is a controlled environment in which an animal can roam freely while the researchers control variables that might influence its behavior. This is what B. F. Skinner provided by inventing the Skinner box (which he referred to as an “operant conditioning chamber”).

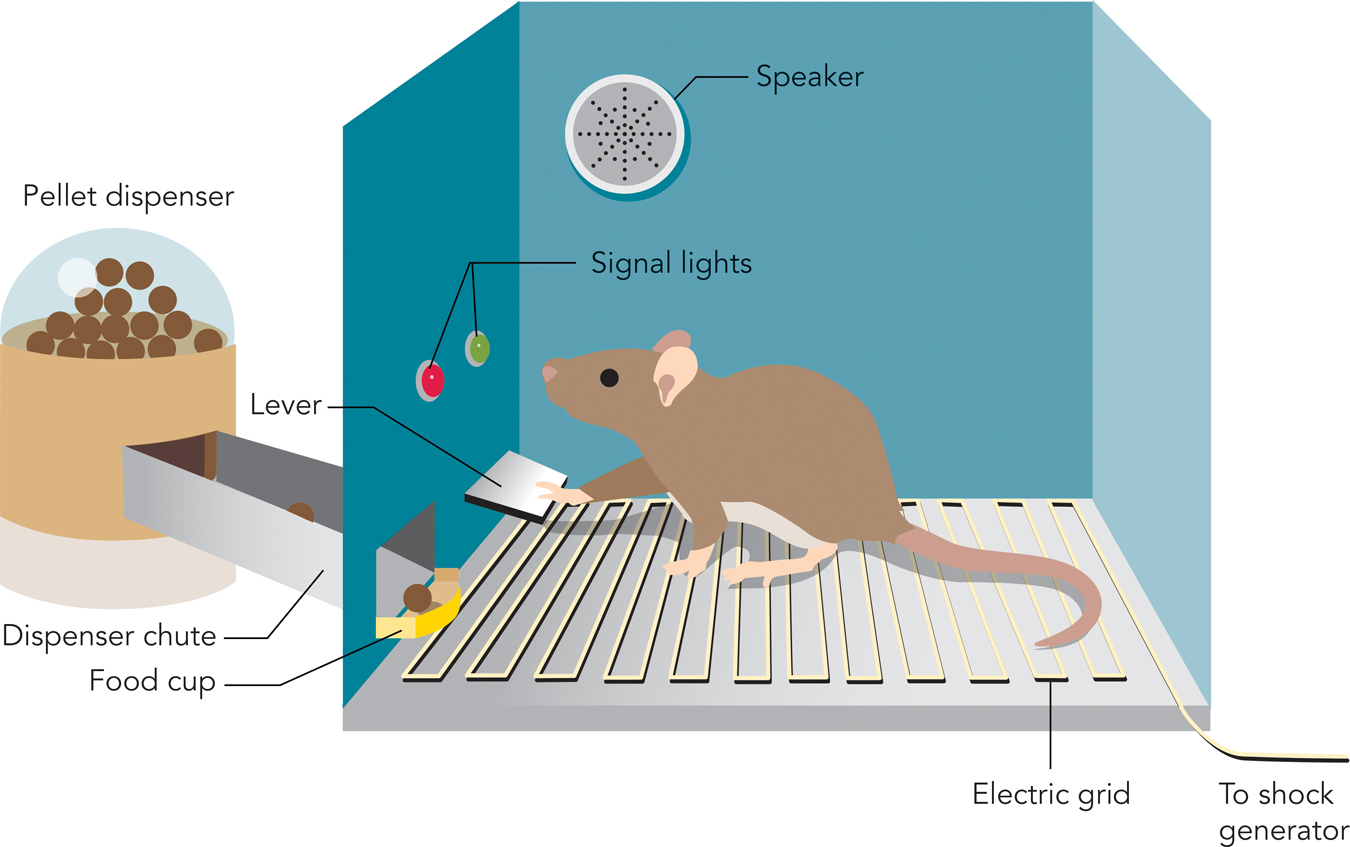

The Skinner box is a piece of laboratory apparatus that is used in the study of operant conditioning. Its main virtue is simplicity. It has only three main elements (Figure 7.11):

A device that the animal can act upon. If the Skinner box is designed for research with rats, this device is usually a lever that the rat can press. For research with pigeons, it is a small disk on a wall that the pigeon can peck. The device records the number of times the animal responds (i.e., the number of lever presses or pecks).

A mechanism for delivering reinforcers. This is usually a small opening through which food or water can be delivered. For studies on the effects of punishment, rather than reinforcement, the box might also contain an electric grid that delivers shocks that punish the animal’s behavior.

A signal that can serve as a discriminative stimulus. This is usually a light that turns on or off. Sometimes it is a speaker that delivers a tone.

Because the Skinner box is so simple, the experimenter can control all the variables in the animal’s environment. The experimenter can decide whether to reinforce the animal’s behavior, how frequently to deliver reinforcements, and whether to provide the animal with a signal (e.g., a light) indicating whether a behavior will be reinforced. And that’s it—

By controlling all the variables in the environment, Skinner was able to obtain remarkably powerful experimental results. Skinner box studies produce results that are so reliable that the basic pattern of findings (discussed in the next section) is replicated, time and time again, with essentially every individual animal that is placed into the box.

WHAT DO YOU KNOW?…

Question 12

The simplicity of the operant conditioning chamber gave Skinner control. Control, in turn, increased his ability to ______ his findings.

THINK ABOUT IT

Do reinforcers affect behavior in the same way in humans as in animals (e.g., laboratory rats)? What about the role of expectations? Humans daydream about reinforcers they might experience in the future. Rats do not. Might some psychological processes in reinforcement be unique to humans?

SCHEDULES OF REINFORCEMENT. In both the laboratory and everyday life, the relation between behaviors and reinforcers varies. Sometimes reinforcers occur consistently and predictably. Every time you pay the cashier at a grocery store, the behavior is reinforced (you get a bag of food). Other times, however, reinforcers are sporadic and unpredictable. You always root, root, root for your home team, but often the behavior is not reinforced (sometimes they lose).

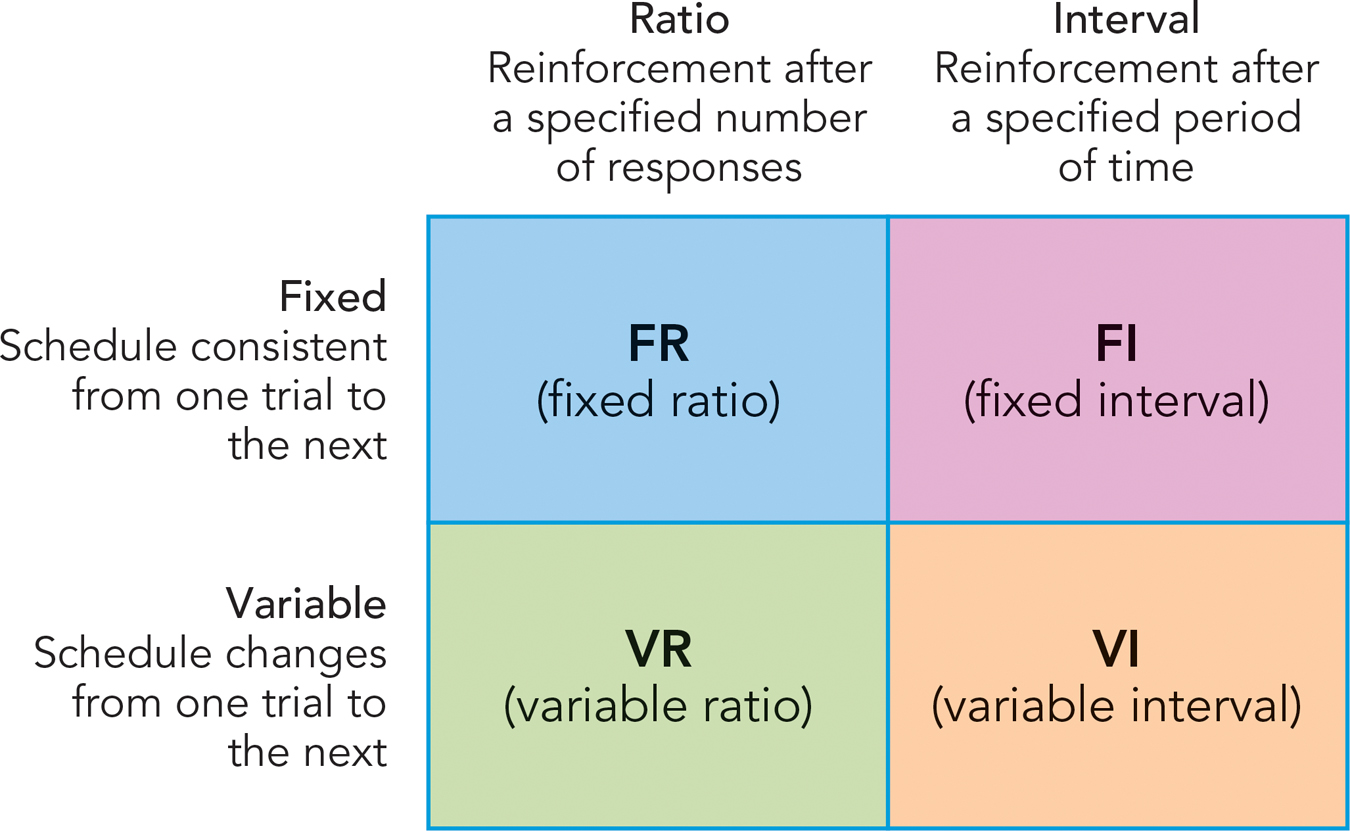

Skinner recognized that different schedules of reinforcement exist. A schedule of reinforcement is a timetable that indicates when reinforcers occur, in relation to the occurrence of behavior. Different schedules of reinforcement produce different patterns of behavior (Ferster & Skinner, 1957). Schedules of reinforcement differ in two ways: (1) the consistency of the reinforcement schedule and (2) whether time is a factor in the delivery of reinforcers (see Figure 7.12).

Regarding consistency, sometimes the schedule with which reinforcers are delivered remains the same, or is “fixed,” from one trial to the next. (A trial in Skinner’s experiments is any sequence in which a behavior or series of behaviors is followed by a reinforcer.) In fixed schedules of reinforcement, the delivery of reinforcers is consistent across trials. In variable schedules of reinforcement, the delivery of reinforcers is inconsistent; it changes unpredictably from one trial to the next.

Regarding time, sometimes an organism must wait for a period of time before a reinforcer is delivered. In interval schedules of reinforcement, a reinforcer is delivered for the first response the organism makes after a specified period of time has elapsed. There is nothing the organism can do to make the reinforcement occur more quickly. In other schedules, however, time is not a determining factor in the scheduling of reinforcements. In ratio schedules of reinforcement, the organism gets a reinforcer after performing a certain number of responses. If it responds faster, it is reinforced sooner.

On which schedule of reinforcement is your texting reinforced?

As shown in Figure 7.12, these two distinctions combine to create four types of reinforcement schedules: fixed interval (FI), variable interval (VI), fixed ratio (FR), and variable ratio (VR). Let’s look at each one, examining both Skinner’s laboratory results and their relevance to everyday life.

In a fixed interval (FI) schedule, reinforcers occur after a consistent period of time has elapsed from one trial to the next. For example, suppose a rat is pressing a lever under an FI schedule, and the interval is 1 minute. This means that, once the rat presses the lever and is given a reinforcer, it cannot get another one for 60 seconds. After the full minute elapses, the first time the rat presses the lever, it receives another reinforcer.

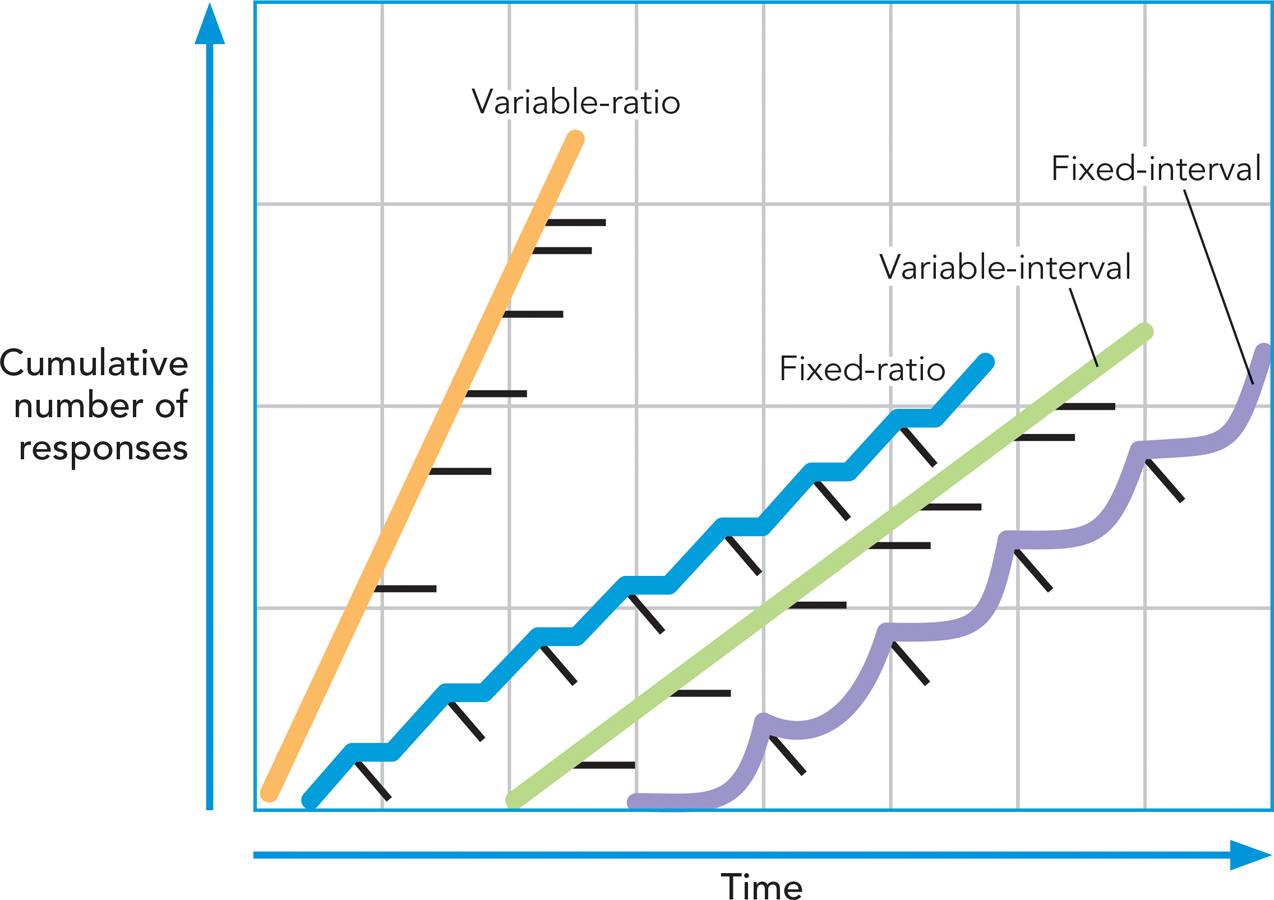

FI schedules produce relatively low rates of behavior (Figure 7.13). Once a reinforcer is presented, an animal tends not to respond for a while. The animal generally exhibits high rates of response only in the few seconds before the end of the interval. As a result, the animal’s overall level of behavior is low in comparison to that of animals reinforced under any of the other three reinforcement schedules.

FI schedules are common in everyday life. One example of a reinforcer that often occurs on an FI schedule is classroom exams; you might be in a class that has exams on a fixed schedule, maybe once every four weeks. (Exams are reinforcers; they reinforce studying. If there were no exams, most people would study less than they do in classes with exams.) So exams in your class are reinforcers that occur on a predictable, fixed schedule. What, then, would Skinner’s theory predict about the behavior of students in a course with regularly scheduled exams?

Because the exams occur on an FI schedule, Skinner would predict that students’ frequency of studying for the course would vary across time, just like the behavior of a rat in a Skinner box (see Figure 7.13). He would expect that, after one exam occurs, students would not do much studying for a while. Rather, they would exhibit high rates of studying only in the few days and hours just prior to the next exam. Of course, for many students, this is exactly what occurs.

In a variable interval (VI) schedule, reinforcements occur after a period of time that is unpredictable to the organism in the Skinner box. For example, the interval might be set to 1 minute on average, but could vary from 10 seconds to 110 seconds from one trial to the next. VI schedules produce patterns of behavior that occur at a higher rate, and more consistently, than behavior under an FI schedule (see Figure 7.13). A simple change in our cramming example above shows how this works in everyday life. Suppose you had pop quizzes in a course, instead of scheduled exams. You likely would study at a relatively consistent rate, always keeping up on readings and reviewing lecture notes, so that you’d always be ready for a quiz. This type of consistency in behavior is exactly what occurs in Skinner boxes, under VI schedules.
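The FI and VI rules described above can be written down as a small timing procedure. The following is a minimal Python sketch, not a model of how an animal actually behaves: it only decides which responses, made at given times, would earn a reinforcer. The function names are hypothetical, timing is assumed to start at 0 seconds, and drawing VI intervals uniformly between 10 and 110 seconds (an average of 1 minute, matching the example above) is an assumption made for illustration.

```python
import random
from typing import Callable, List


def interval_schedule(response_times: List[float],
                      next_interval: Callable[[], float]) -> List[int]:
    """Return the indices of responses that earn a reinforcer.

    A reinforcer becomes available once the current interval has elapsed;
    the first response after that point is reinforced and a new interval
    starts. response_times must be in ascending order (seconds from 0).
    """
    reinforced = []
    available_at = next_interval()
    for i, t in enumerate(response_times):
        if t >= available_at:
            reinforced.append(i)
            available_at = t + next_interval()  # the next wait begins now
    return reinforced


def fixed_interval(seconds: float) -> Callable[[], float]:
    # FI: the same wait on every trial.
    return lambda: seconds


def variable_interval(low: float, high: float, rng: random.Random) -> Callable[[], float]:
    # VI: the wait changes unpredictably; a uniform draw is an assumption here.
    return lambda: rng.uniform(low, high)


slow = [float(t) for t in range(0, 201, 10)]  # one press every 10 s for 200 s
fast = [float(t) for t in range(0, 201)]      # one press every second for 200 s
print(len(interval_schedule(slow, fixed_interval(60))))  # 3
print(len(interval_schedule(fast, fixed_interval(60))))  # 3
print(interval_schedule(fast, variable_interval(10, 110, random.Random(1))))
```

Pressing ten times as often under the 1-minute FI schedule earns no extra reinforcers, which is the sense in which nothing the organism does can make the reinforcement arrive sooner.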

The ratio schedules of reinforcement yield higher rates of response than either of the interval schedules (see Figure 7.13). In the fixed ratio (FR) schedule, a reinforcer is delivered after an organism performs a certain number of responses, and that number stays consistent from one trial to the next. For instance, a rat might be reinforced after every 20th lever press. With an FR schedule, the reinforcement occurs after a certain number of responses no matter how long, or short, a period of time the animal takes to perform these responses. Under an FR schedule, organisms get more reinforcements if they respond more quickly (unlike the FI schedule).

To see the power of an FR schedule, imagine two types of jobs: one in which you get paid by the hour, and the other—

In a variable ratio (VR) schedule, reinforcements are delivered after an organism performs a certain number of responses on average. The exact number of responses required on a given trial varies unpredictably from one trial to the next. For example, a rat’s lever-

Under a VR schedule, animals get reinforced only sporadically. An animal might, on some trials, have to press the lever 50 times or more just to receive one reinforcer. You might expect these schedules to produce low rates of response. But instead, the opposite occurs! VR schedules produce the highest response rates (see Figure 7.13). A remarkable finding from Skinner’s lab is that, while getting very few reinforcements, animals on a VR schedule will exhibit very high rates of response.
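Ratio schedules count responses instead of measuring time. Here is a minimal Python sketch of the FR and VR delivery rules, under the same caveats as any illustration: the helper names are hypothetical, and drawing each VR requirement uniformly from 1 to 39 presses (an average of 20) is an assumption chosen only for illustration.

```python
import random
from typing import Callable, List


def ratio_schedule(n_responses: int, next_requirement: Callable[[], int]) -> List[int]:
    """Return the 0-based indices of responses that earn a reinforcer.

    Only the number of responses matters; how long they take is irrelevant.
    """
    reinforced = []
    required = next_requirement()
    count = 0
    for i in range(n_responses):
        count += 1
        if count >= required:
            reinforced.append(i)
            count = 0                      # start counting toward the next reinforcer
            required = next_requirement()
    return reinforced


def fixed_ratio(n: int) -> Callable[[], int]:
    # FR: e.g., every 20th lever press is reinforced.
    return lambda: n


def variable_ratio(low: int, high: int, rng: random.Random) -> Callable[[], int]:
    # VR: the requirement varies unpredictably from trial to trial; a uniform
    # draw between low and high (inclusive) is an assumption for illustration.
    return lambda: rng.randint(low, high)


print(ratio_schedule(100, fixed_ratio(20)))  # [19, 39, 59, 79, 99]
print(ratio_schedule(100, variable_ratio(1, 39, random.Random(7))))
```

Because only the count matters, a rat that presses twice as fast reaches each requirement in half the time, so faster responding earns reinforcers sooner.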

The power of the VR schedule explains the power of gambling devices, such as slot machines. Slot machines reinforce a sequence of behavior: putting money in the machine and pulling a lever (an action that, ironically, looks quite like the behavior of a rat pressing a lever in a Skinner box). Critically, they reinforce the behavior only sporadically, on a variable ratio schedule. Large payouts do occur, but only once every few hundred or thousand plays. Despite the low frequency of reinforcement, the device is addictive. Whether you’re a pigeon in a Skinner box or a human in a casino, the VR schedule can seize control of your behavior and cause you to lose your nest egg.

EXTINCTION. Earlier in this chapter, you learned about extinction in classical conditioning. In operant conditioning, extinction refers to the pattern of behavior that results when a behavior that previously was reinforced is no longer followed by any reinforcers. For instance, suppose an experimenter had reinforced the lever-

What happens to the animal’s behavior during extinction? The pattern of extinction depends on the schedule of reinforcement that the animal had experienced previously. If its behavior had been reinforced consistently (e.g., on an FR schedule with a reinforcement occurring after every few responses), extinction is rapid. Animals quickly stop responding when reinforcers cease. However, if the behavior had been reinforced on an irregular, random schedule (e.g., on a VR schedule with large numbers of behaviors sometimes being required to obtain one reinforcer), extinction is very slow. An animal might perform a large number of behaviors while receiving no reinforcement at all.

Extinction, then, is fast if an animal has been reinforced consistently but slow if an animal has been reinforced only intermittently. Compare the following two human cases in terms of extinction:

You put money into a vending machine, press a button indicating your selection, but there is no reinforcer: The machine fails to deliver your snack.

You buy a lottery ticket at a local convenience store, but there is no reinforcer: You do not win the lottery.

In the vending machine example, one trial in which you don’t get a reinforcer is enough: You stop putting money into that machine. In the lottery ticket example, one trial in which you don’t get a reinforcer has hardly any effect: Lottery players often buy tickets repeatedly, for months or years, without winning. The properly functioning vending machine reinforces behavior on an FR schedule, whereas the lottery reinforces behavior on a VR schedule, and—

DISCRIMINATIVE STIMULI. Your behavior commonly changes from one situation to the next. You’re talkative with friends, yet quiet in class or in church. You work hard on some courses, but “blow off” others. You speak formally to your professor and casually to your roommate. Operant conditioning explains these variations in behavior in terms of discriminative stimuli. A discriminative stimulus is one that provides information about the type of consequences that are likely to follow a given type of behavior. Discriminative stimuli may signal that, in one situation, a behavior likely will be reinforced, whereas in another situation, that same behavior may be punished.

An example of a discriminative stimulus in social interactions is a “shhh” signal (see photo). The gesture indicates that the typical consequences of talking do not hold. Typically, if you talk about some topic, your behavior is reinforced by the attention of the people listening to you. The “shhh” signal indicates that, instead, this same behavior is likely to be punished; something in the typical environment has changed (e.g., someone nearby, who shouldn’t hear your conversation, is listening in), and the behavior will result in negative consequences.

Many of the everyday situations you encounter contain discriminative stimuli—

Have you ever failed to notice a discriminative stimulus? At what cost?

THIS JUST IN

Reinforcing Pain Behavior

“Ouch.”

“What’s wrong? Are you OK?”

“Ouch. Ow. It hurts. Ow.”

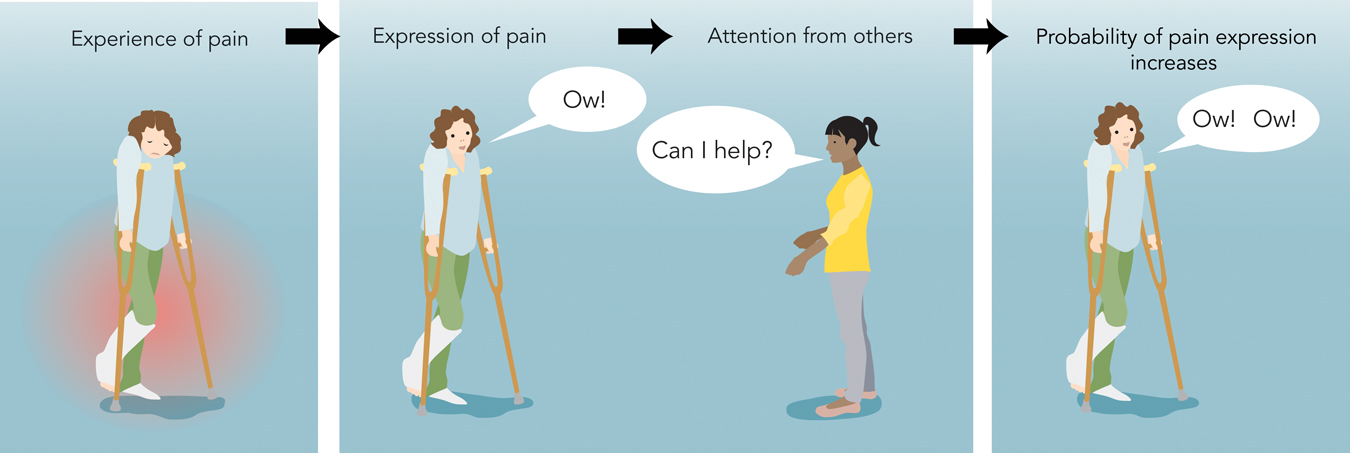

Pain is internal. It’s an inner feeling created by receptors within your body that detect harmful stimuli, such as cuts or burns (see Chapter 5). But expressions of pain—

A major lesson of this chapter is that the external environment shapes behavior. Some environmental stimuli, called reinforcers, increase the frequency of the behavior that precedes them. Classic research by Skinner shows that when rats press a lever, reinforcers increase the frequency of their lever-

A recent operant learning theory of pain behavior outlines four steps through which this happens (Figure 7.14; Gatzounis et al., 2012). After experiencing pain (Step 1), people may exhibit a pain behavior (Step 2). That pain behavior brings attention from other people (Step 3). The extra attention can reinforce the pain behavior, making it more frequent in the future (Step 4).

Research supports the theory (Kunz, Rainville, & Lautenbacher, 2011). Research participants experienced, across a series of trials, a very warm stimulus that was painful (but not harmful). In different experimental conditions, the researchers reinforced different types of reactions to the painful stimulus. On some trials, participants received a reinforcer whenever they displayed a neutral facial expression during the stimulation. On other trials, they received a reinforcer whenever they displayed a painful facial expression. The study produced two key results:

Reinforcers increased expressions of pain. Researchers observed that participants appeared to be in greater pain during the trials in which pain expressions were reinforced.

Pain expressions were positively correlated with pain experiences. When the researchers asked participants how much pain they actually were experiencing, people who had displayed more pain on their faces also reported experiencing more pain.

What does this mean for your everyday experiences? Imagine you’re with someone experiencing minor pain—

WHAT DO YOU KNOW?…

Question 13

Name something a well-

THE SHAPING OF COMPLEX BEHAVIOR. A rat placed in a Skinner box does not immediately start pressing the lever. Lever pressing, from the rat’s perspective, is a somewhat complex behavior. The rat has to locate the lever, balance to reach it, and learn to press down with sufficient force. How can one teach the rat to do this complex act?

In operant conditioning, organisms learn complex behaviors through shaping. Shaping is a step-

Did you ever wonder how animal trainers teach lions to jump through hoops, seals to balance balls on their noses, and parrots to ride bicycles? They do it by shaping the animals’ behavior through operant conditioning. Suppose you want to train a parrot to ride a bicycle. You can’t just sit around waiting for your parrot to hop on the bike and start pedaling, and only then give it a reinforcer. That’s never going to happen. Instead, you have to begin with something simple. You might at first reinforce the bird merely when it walks in the direction of the bike. Next, you might reinforce it only when it gets closer to the bike, then only when it touches the bike, and so forth. After a potentially painstaking series of shaping experiences, your parrot eventually will become a cyclist.

Much human learning also is shaped gradually. When, as a child, you learned proper manners for eating, adults first reinforced simple actions, such as picking up and using a fork. They didn’t wait until you made it through a formal meal, complete with proper use of salad fork and butter knife, before reinforcing your behavior. Similarly, in a foreign language class, the instructor’s goal is to teach you how to converse in complete sentences. But first, she reinforces something much simpler: the speaking of simple words and phrases. In both cases, behavior is gradually shaped. Over time, the behavior that is reinforced becomes more complex.

THINK ABOUT IT

Much human behavior is learned by shaping. But is all of it? What about driving? When you learned to drive, did you at first make a lot of mistakes—

WHAT DO YOU KNOW?…

Question 14

Of ratio and interval schedules of reinforcement, _____ schedules yield higher rates of response because the reinforcements depend on the organism doing something. Of fixed and variable ratio schedules, _____ ratio schedules produce the highest rates of response, surprisingly. Behaviors reinforced on a _____ ratio schedule tend to extinguish slowly, especially if the behavior itself was reinforced very intermittently, as in gambling. A discriminative stimulus indicates whether a given behavior will be reinforced or _____ in a given situation. Simple organisms can learn complex responses by being _____ for behaviors that approximate a desired behavior.

TRY THIS!

Earlier, in this chapter’s Try This! Activity, you attempted to teach a pigeon to peck on a wall. Try it again. Now that you have learned the principles of operant conditioning, you should be able to get a higher score than you did previously. In particular, apply what you have learned about schedules of reinforcement, and how they can increase rates of response, to improve your score.

Beyond Skinner in the Study of Operant Conditioning

Preview Questions

What does it mean to say that there are “biological constraints on learning”?

Do research findings in the study of biological constraints on learning confirm, or call into question, the principles of learning developed by Skinner?

Why might rewards sometimes lower people’s tendency to engage in an activity?

Skinner believed that his principles of operant conditioning would be comprehensive. In theory, they would explain all operant behavior, by all organisms, in all environments. Skinner made this claim explicitly. His first book (Skinner, 1938) was The Behavior of Organisms—

Research has shown, however, that Skinner’s claim is not correct. His principles of learning are sometimes insufficient. Skinner failed to attend to some factors that have proven important to learning and behavior. Two such factors are (1) biological constraints on learning that are based in the evolutionary history of the species being studied, and (2) the way in which rewards alter not only people’s actions, but also their thoughts about their actions.

BIOLOGICAL CONSTRAINTS ON OPERANT CONDITIONING. Why did Skinner choose to conduct experiments on the lever-

On this point, Skinner turned out to be wrong. The choice of organism and behavior sometimes does make a difference. The differences reflect animals’ innate biology, which produces biological constraints on learning (Domjan & Galef, 1983; Öhman & Mineka, 2001). A biological constraint on learning is an evolved predisposition that makes it difficult, if not impossible, for a given species to learn a certain type of behavior when reinforced with a certain type of reward.

Consider two operant conditioning experiments with a pigeon:

A pigeon is reinforced with food whenever it pecks a particular spot on a wall.

A pigeon is reinforced with food whenever it flaps its wings.

Skinner would have predicted that the two experiments should work the same way; the reinforcer, food, would raise the probability of responding. However, the experiments actually turn out differently (Rachlin, 1976). The pecking experiment works as Skinner expected, but the flapping experiment does not. Food reinforcers do not raise the probability of wing flapping. Why not?

The answer lies in the pigeons’ biological predispositions. For a pigeon, pecking and food naturally are biologically connected. Wing flapping and food, however, are not. In fact, wing flapping and eating were usually disconnected during pigeons’ evolution. For a pigeon in the presence of food, flapping its wings is a bad idea: It flies away and gets no food. Pigeons’ evolutionary history, then, constrains learning; they find it difficult to learn the connection between the behavior, wing flapping, and the reinforcer, food.

This is only one of many cases in which a species’ evolutionary past constrains its ability to learn through operant conditioning. In another (Breland & Breland, 1961), researchers reinforced a raccoon for picking up two coins and dropping them in a box. The raccoon picked up the coins. But instead of dropping them in the box, it rubbed them together, dipped them into the box, pulled them out, rubbed them some more, and never let go. The researchers had never reinforced these behaviors. Yet the raccoon performed them repeatedly. Why? Its actions were a case of instinctual drift, in which animals exhibit behavior that reflects their species’ evolved biological predispositions, rather than their individual learning experiences. Raccoons instinctively moisten their paws and food, and rub their food before eating. In the experiment, the raccoon instinctively did the same thing with the coins, even though these actions were never reinforced.

Evolution also plays a role in human learning. Consider language. Shaping through operant conditioning cannot explain human language learning (Chomsky, 1959). Young children quickly learn not only the words of their language, but also the language’s syntax, that is, the rules through which sentences are formed. They do so despite the fact that parents rarely provide explicit reinforcements for syntax use (Brown, 1973). Humans are biologically predisposed to learn language; we inherit capabilities that make it easy for us to learn to understand and produce sentences (Pinker, 1994).

REWARDS AND PERSONAL INTEREST IN ACTIVITIES. Skinner thought that the effects of reinforcement on behavior were the same for all species. No matter who you are—

Yet there is a complicating factor to which Skinner gave little thought. Rats and pigeons do not think about themselves and the causes of their behavior. (“Am I really the sort of rat who should be stuck in a box pressing a lever all day long?”) People, on the other hand, think about themselves incessantly. Sometimes their thoughts can cause rewards to “backfire”—decreasing rather than increasing behavior.

In a classic study, researchers tested the effects of rewards on preschool children who were intrinsically interested in drawing, that is, who naturally liked to draw (Lepper, Greene, & Nisbett, 1973). In one experimental condition, the children were asked to draw pictures for a chance to win a “Good Player Award.” After they finished, the children received the award. In a second condition, children were asked to draw pictures but with no mention of an award. A few days later, there was a free period during which the children could do anything they wanted, including drawing. Did the Good Player Awards increase the probability of drawing during this period, as Skinner would have predicted? No. The very opposite occurred: Children in the no-award condition spent more time drawing. Why?

The rewards changed children’s thoughts about their behavior (Lepper, Greene, & Nisbett, 1973). At the start of the study, the children were interested in drawing; they knew they drew pictures out of personal interest. But the Good Player Awards caused them to think that they drew pictures to get external rewards. As a result, the children became less interested in drawing when no rewards were available.

A summary of more than 100 studies confirms that rewards can reduce children’s interest in activities (Deci, Koestner, & Ryan, 1999). The research shows that rewards can backfire—

In summary, these two scientific findings—

What activities do you engage in that you find intrinsically interesting? When have you been rewarded for doing something you already enjoy?

WHAT DO YOU KNOW?…

Question 15

Both statements below are incorrect. Explain why:

“The fact that it’s just as easy to teach a pigeon to peck as it is to flap its wings indicates that our evolutionary history has no effect on our ability to learn through operant conditioning.”

“Just as Skinner would have predicted, rewards always increase the likelihood of a behavior, even when people are intrinsically motivated to do something.”

a. This statement is incorrect because it is, in fact, easier to teach a pigeon to peck than to flap its wings; this indicates that our evolutionary history constrains our ability to learn through operant conditioning.

b. This statement is incorrect because, contrary to what Skinner would have predicted, rewards can actually decrease the likelihood of a behavior when people are intrinsically motivated to do something.

Biological Bases of Operant Conditioning

Preview Question

How do psychologists know that the brain contains a “reward center”?

Now that we’ve learned about psychological processes in operant learning, we can move to a biological level of analysis. What brain mechanisms allow organisms to learn through operant conditioning?

A first step in answering this question was taken more than a half-

Research has advanced considerably since these early days. Rather than identifying a general area of the brain that is involved in reward, researchers now search for the specific neurons responsible for operant conditioning. This research has progressed thanks to the study of a simple organism you learned about earlier, Aplysia (Rankin, 2002). Aplysia eat by biting. Researchers (Brembs et al., 2002) reinforced biting by electrically stimulating a reward area of the Aplysia nervous system. This reinforcer changed the Aplysia’s behavior; it increased their frequency of biting. The researchers then explored neuron-

Further research with Aplysia has shown that the precise cellular changes that occur in operant conditioning differ from those that occur in classical conditioning (Lorenzetti et al., 2006). This means that classical conditioning and operant conditioning, which differ psychologically, also differ biologically.

In more complex organisms, the situation is more complicated. In humans, for instance, rewards have a number of effects—

Despite this greater complexity, research conducted with animals (e.g., laboratory rats) has identified a biological substance in mammals that is key to their ability to learn through rewards: dopamine, one of the brain’s neurotransmitters. Different types of findings suggest that dopamine is central to reward processes. The presentation of rewarding stimuli (e.g., food, water, or opportunities for sex) increases levels of dopamine in the brain (Schultz, 2006; Wise, 2004). Furthermore, when the normal functioning of dopamine is blocked, rewards no longer control animals’ behavior.

In one study (Wise et al., 1978), rats were reinforced by food for lever pressing. After learning to press the lever, they were assigned to different experimental conditions. In one, reinforcement for lever pressing ceased; as you might expect, these rats gradually stopped pressing the lever. In a second condition, reinforcement continued; these rats kept pressing. Finally, the key experimental condition combined two features: (1) Lever pressing was reinforced, but (2) rats received an injection of a drug that blocks the neurotransmitter effects of dopamine. These rats stopped pressing the lever. Their behavior resembled that of the rats in the no-

WHAT DO YOU KNOW?…

Question 16

Research with Aplysia indicates that operant and classical conditioning, which are psychologically different, are also _____ different. The neurotransmitter _____ is central to reward processes.