4.2 Correlation

In the previous section we saw that a scatterplot can be used to graph quantitative bivariate data. A scatterplot provides us with a good visual representation to begin investigating whether or not an association exists between the two variables under consideration.

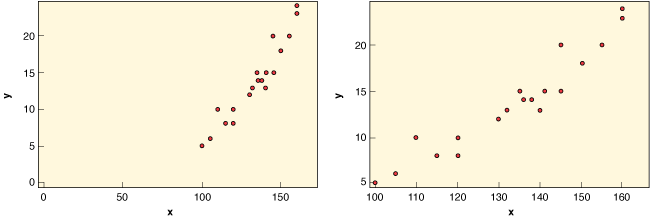

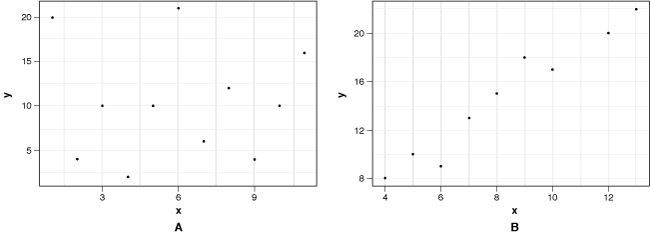

When an association does exist, we typically describe its form, direction, and strength. When reporting the strength of a linear association solely based on a scatterplot, subjectivity can be a concern. The following example illustrates this point. Take a look at the two graphs shown in Figure 4.4. In which case is the association between x and y more strongly linear?

By examining the graphs you might (at first glance) say that there is less scatter in the graph on the left; in this case you would conclude that the linear association is stronger in this graph. It turns out that the strength of association is exactly the same for the two graphs because they are plots of the same data. The scatterplot on the left appears more linear because it uses a different x-axis scale than the graph on the right. Changing the scale here distorts the image; whether intended or not, such differences in perspective can be misleading.

So, in order to find a more objective way to describe a linear association between two variables, we’ll introduce a numerical calculation (called the correlation coefficient). Once we have determined graphically that a linear association exists, this value should confirm our assessment of the association’s strength and direction. (Note that we proceeded in a similar fashion when identifying outliers—first looking at a graph, and then using a numerical outlier criterion.)

The video Snapshots: Correlation and Causation. illustrates strong linear relationships between sets of variables, and shows how the correlation can be used to describe such relationships.

4.2.1 Interpreting the Correlation Coefficient

The correlation coefficient, r, is a measure of the strength and direction of the linear association between two quantitative variables. Its formula is a bit complicated, so for now we’ll let software such as CrunchIt! compute it and instead we'll concentrate on interpretation. Here are some facts about r:

- r is always a number between –1 and 1, inclusive.

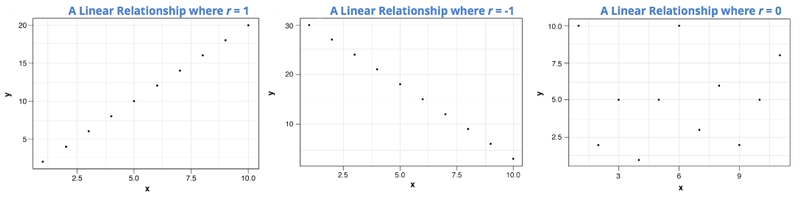

- There are three extreme cases for the values of r:

- r = +1: The points in the scatterplot fall on a line with positive slope. This indicates a perfect positive linear association between x and y.

- r = –1: The points in the scatterplot fall on a line with negative slope. This indicates a perfect negative linear association between x and y.

- r = 0: There is no linear association between the two variables. The scatterplot exhibits lots of scatter or a strong nonlinear pattern.

In most situations involving real data, r is never exactly equal to –1, 0, or 1. If r > 0, then there is a positive association between the two variables. When r is close to 1, the relationship is said to be strong; the farther the value of r is from 1, the weaker the positive linear relationship is said to be.

If r < 0, then there is a negative association between the two variables. When r is close to –1, the relationship is said to be strong; the farther the value of r is from –1, the weaker the negative linear relationship is said to be.

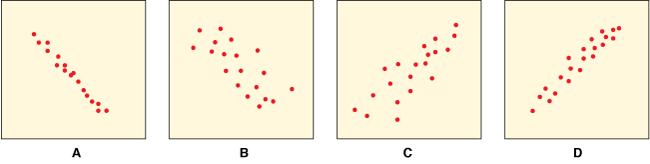

Let’s take a look at a few examples of scatterplots and their corresponding values of r.

Notice that in all four graphs some sort of linear relationship is present, but to varying degrees, and in different directions. These two characteristics (direction and strength) are reflected in the value of the correlation coefficient. Graphs A and B display a negative trend whereas graphs C and D show a positive trend. Although A and B both have a negative trend, there is more scatter in graph B than A. Therefore the correlation coefficient for A is closer to –1 than it is for graph B. Similarly, although graphs C and D both have a positive trend, there is more scatter in graph C than D. Therefore the correlation coefficient is closer to +1 in graph D than it is in graph C.

Now Try This 4.4

For each of the scatterplots shown below determine which of the following statements is true.

1. For Graph A:

| A. |

| B. |

| C. |

| D. |

| E. |

2. For Graph B:

| A. |

| B. |

| C. |

| D. |

| E. |

Now that we (hopefully) have a better idea about how the value of r tells us something about strength and direction of the linear association, let’s take a look at several other facts about r.

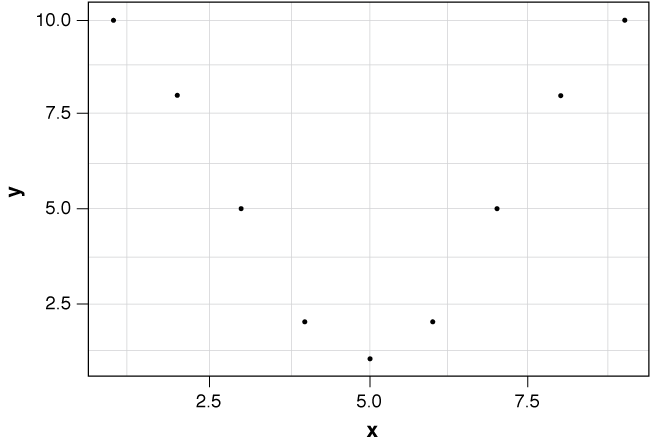

- A correlation coefficient value of 0 does not imply that there is no association between x and y; rather it means that there is no linear association between x and y. The following graph has a correlation coefficient of 0; although there is a very clear (and strong) association between x and y, the association is not linear.

Figure 4.7 A Very Strong Nonlinear Relationship

The moral of this story is to always plot the data first. If a linear relationship is not present, it does not make sense to compute r.

- The value of r does not depend on each variable’s units. If we are interested in measuring the correlation between the heights (in inches) and the weights (in pounds) of a group of college students, the correlation coefficient will be exactly the same number if instead we measured the heights in centimeters and the weights in kilograms.

- The value of r does not depend on which variable is selected as the explanatory variable and which is selected as the response variable. The correlation coefficient r measures the strength and direction of the linear relationship between the two variables. While the scatterplot looks different if you interchange the explanatory and response variables, the strength and direction of the linear relationship between the variables remains the same, and r does not change.

Now Try This 4.5

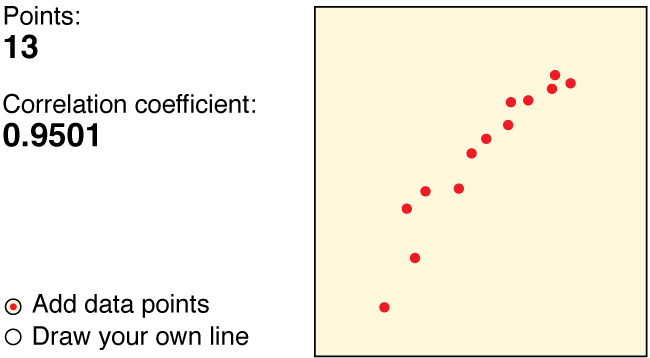

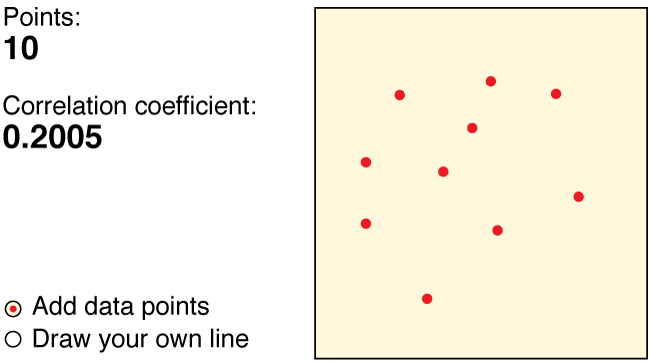

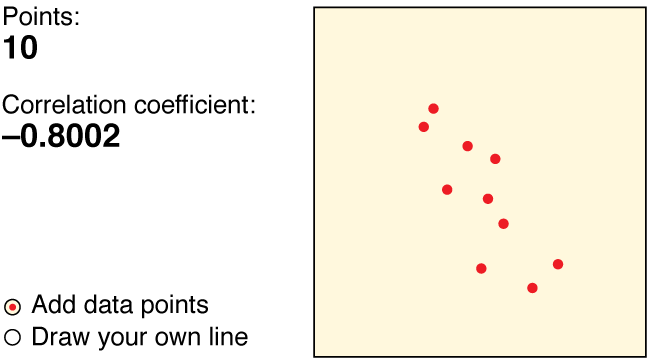

Use the Correlation and Regression applet to create a data set that has the following correlation coefficients:

A. r = 0.95

B. r = 0.20

C. r = -0.80

Although answers will vary, here are sample solutions.

Describe how your graphs compare to the samples.

| A. |

| B. |

4.2.2 Correlation in Practice

Photo Credit: Roel Burgler/Redux

The following table contains data collected based on several variables for the ten countries in the world with the largest population in 2012. Variables measured include population estimates (in millions) for 2012, fertility rates, percentage of the population below 15 years old, percentage of the population who are 65+ years old, average life expectancy, percentage of urban residents, percentage of women 15-49 years old using contraception, and the projected population estimates (in millions) for 2050.

| Country | 2012 Pop (mill) | Fertility Rate | % < 15 years old | % 65+ years | Avg. Life Expectancy at birth (years) | % urban | % using contraception | 2050 Pop (mill) |

|---|---|---|---|---|---|---|---|---|

| China | 1350 | 1.5 | 16 | 9 | 75 | 56 | 54 | 1311 |

| India | 1260 | 2.5 | 31 | 5 | 65 | 31 | 54 | 1691 |

| USA | 314 | 1.9 | 20 | 13 | 79 | 79 | 79 | 423 |

| Indonesia | 241 | 2.3 | 27 | 6 | 72 | 43 | 61 | 309 |

| Brazil | 194 | 1.9 | 24 | 7 | 74 | 84 | 80 | 213 |

| Pakistan | 180 | 3.6 | 35 | 4 | 65 | 35 | 27 | 314 |

| Nigeria | 170 | 5.6 | 44 | 3 | 51 | 51 | 15 | 402 |

| Bangladesh | 153 | 2.3 | 31 | 5 | 69 | 25 | 61 | 226 |

| Russia | 143 | 1.6 | 15 | 13 | 69 | 74 | 80 | 128 |

| Japan | 128 | 1.4 | 13 | 24 | 83 | 86 | 54 | 96 |

Source: Population Reference Bureau

There are several quantitative variables contained in this data set. Perhaps you are interested in seeing if population size is related to the percentage of residents who are over 65 years old or maybe you’d like to see if there is an association between a country’s fertility rate and the percentage of women aged 15-49 who use contraception.

To answer questions such as these, we begin by determining if it makes sense to designate one of the variables as explanatory and the other as response (if not, arbitrarily put one variable on the x-axis and the other on the y-axis.) Next graph the data using a scatterplot. If a linear trend is apparent, use statistical software to determine the correlation coefficient.

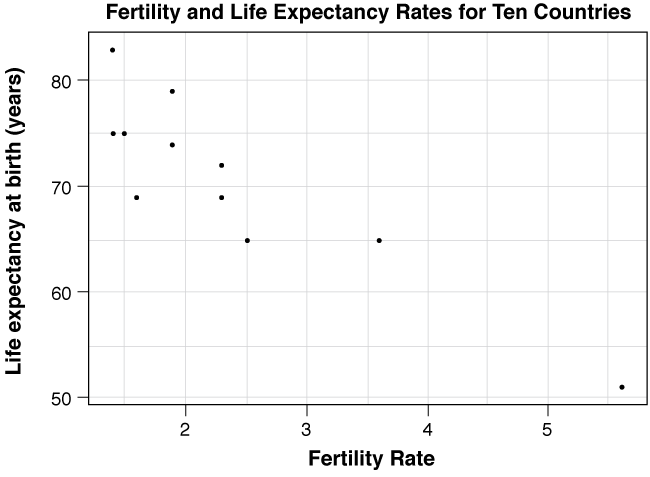

Let’s try an example. Is there an association between the fertility rate of a country and the life expectancy at birth (in years) for that country? Here is the scatterplot of the data for the ten most populated countries in 2012:

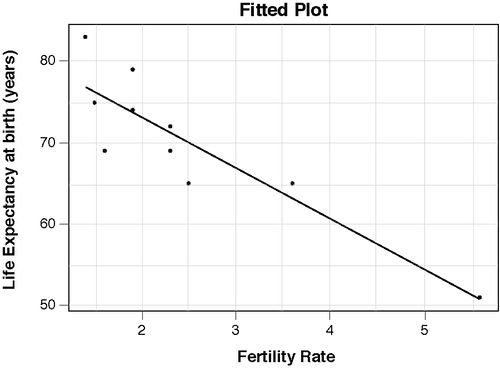

In a plot such as this one, we can imagine a line drawn roughly through the middle of this set of points, such as the one shown below.

Because there is a little scatter around this line and the slope of the line is negative, we say that the scatterplot shows a moderately strong linear association between the two variables. When we compute the correlation coefficient using technology, we find that r = –0.8918. This confirms our theory that there is a moderately strong negative association between a country’s life expectancy and its fertility rate.

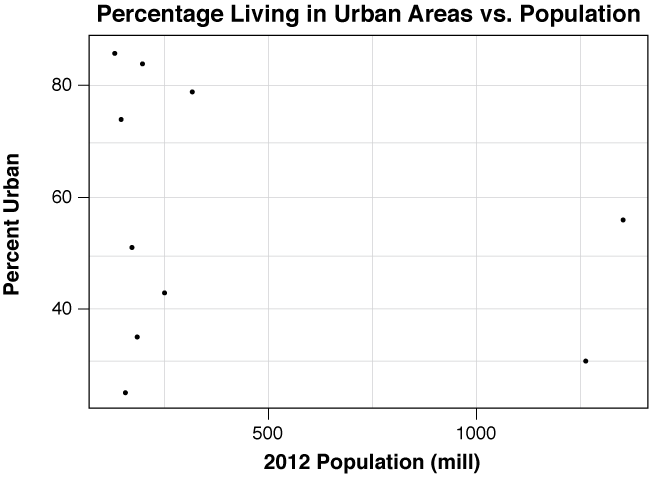

Is there association between 2012 population estimates and the percentage of the population living in an urban area? We’ll let population be the explanatory variable and the percentage of the population living in an urban area be the response variable.

In this plot, it is much more difficult to imagine a line going through the middle of these points. Our inability to visualize an appropriate line leads us to believe that the relationship between these variables is not linear. Indeed, the value of the correlation coefficient is r = –0.2714. This indicates that there is (at best) a very weak linear relationship between the percentage of people living in urban areas and 2012 population estimates.

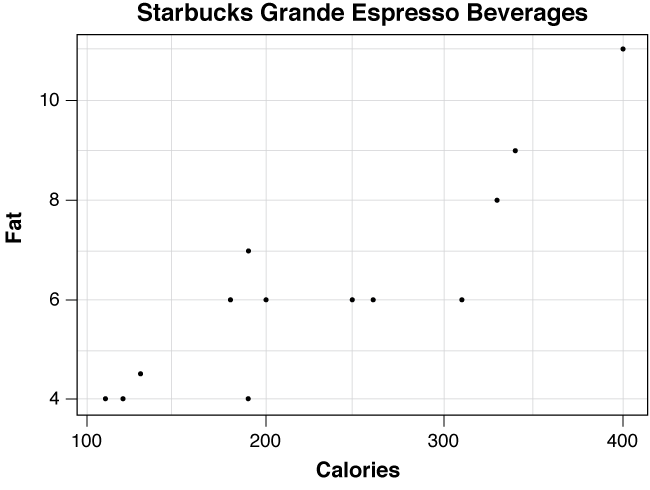

Here is another example to consider. Figure 4.11 contains the fat (g) and calories for a large sample of grande (16 oz) cups of hot espresso offered by Starbucks.

Photo Credit: © whiteboxmedia limited / Alamy

It is easy to imagine a line through the middle of this scatterplot. The plot displays a strong positive linear association between fat grams and calories in grande hot espresso coffees at Starbucks. Those that are high in fat tend to have a large number of calories and those that have lower fat amounts tend to have fewer calories. Because there appears to be a linear trend, we compute the correlation and find that r = 0.873, again confirming a strong positive linear association between fat grams and calories.

4.2.3 How outliers affect r

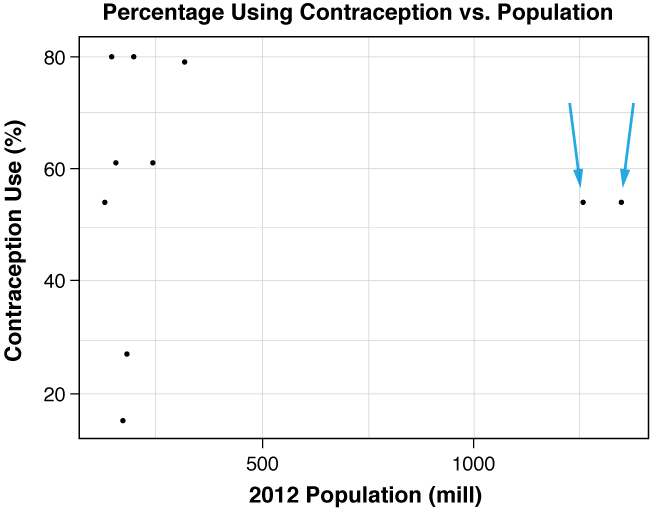

In Table 4.3 we looked at several variables measured on the top ten highest populated countries in 2012. Here is the scatterplot showing the relationship between population and the percentage of women aged 15-49 who use contraception, with potential outliers identified.

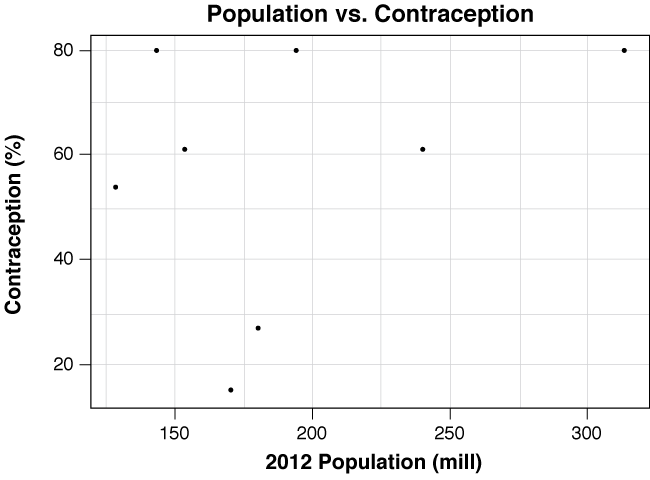

The correlation coefficient for the data shown in Figure 4.12 is –0.02644. In a scatterplot, an outlier is a point outside the overall pattern of the plot. On the left of this plot we see a roughly linear pattern, with the two points on the right falling far outside that pattern. Although the percentage of women aged 15-49 using contraception in China and India are in the range of the other countries, their population numbers are about four times the size of the other countries; therefore both counties are certainly outliers in the x-direction. Let’s remove these data points, re-examine the scatterplot, and recalculate the correlation coefficient.

This scatterplot looks different (more clearly showing a positive but weak association), and the correlation coefficient also changed significantly. The recalculated correlation coefficient is 0.2975. This example illustrates that the correlation coefficient is not resistant to outliers. This means that removing outliers from a data set will certainly alter the correlation coefficient’s value.

To investigate further how outliers impact the correlation coefficient, open the Correlation and Regression applet. Plot some points, drag one of the points, and observe how the value of r changes.

However, you should not remove outliers from your data set merely to improve the strength of the linear association between your variables. As we pointed out in Section 3.3.2, when you suspect problems with data values, you should report that, and you may remove outliers if you are certain that they have occurred because of data collection or entry errors. In the case of the population/life expectancy data, the reported values are correct. China and India represent outliers due to populations that are very much larger than those of other populous countries. So the question then becomes, what do you intend to do with a proposed model? If your goal is to make predictions about countries whose populations are similar to the remainder of the data, then removing the values for China and India is reasonable. If you are looking for a model to describe the whole set of data, you should not remove the outliers. In this case, however, the scatterplot provides ample warning that even the best linear model is unlikely to yield reasonable predictions.

Now Try This 4.6

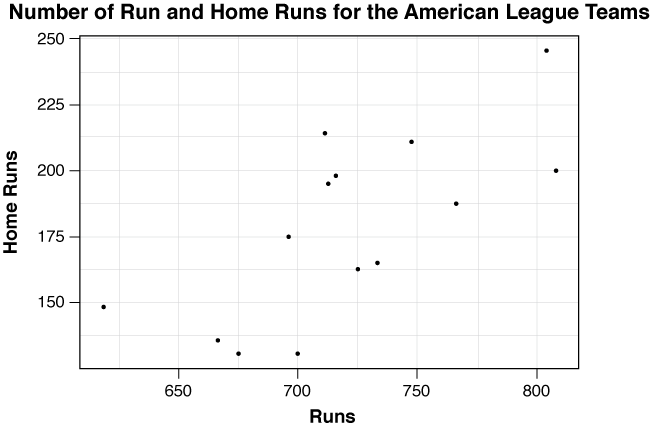

The following table shows the number of runs and the number of home runs for each team in the American League during the 2012 season.

Source: sports.espn.go.com

1. The scatterplot below shows home runs versus runs.

The form of the association between a team’s number of runs and number of home runs is ; the direction of the association is ; the strength of the association is .

2. Report the correlation coefficient, rounding your answer to four decimal places.

4.2.4 The Formula for r

Photo Credit: Ryan R Fox/Shutterstock

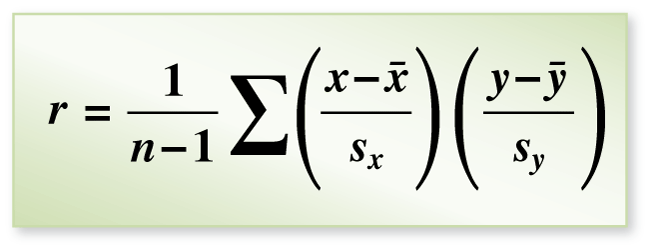

We have seen so far that the correlation coefficient, r, is a measure of the strength and direction of the linear association between two quantitative variables, x and y, but up to this point we have not introduced its mathematical formula. For those of you who are curious to know how r is calculated, read on. The formula for r is the following:

Although this formula might look mysterious at first glance, let’s take a closer look and try to make sense of all of its parts. The formula for r is just a type of average of products. Recall that for each individual, two measurements are taken, one designated the x-value and the other designated the y-value. To compute r, first we calculate the z-score for each of the x-values (x−¯xsx), and then we calculate a z-score for each of the observed y-values (y−¯ysy) . (Recall that a z-score measures how many standard deviations an observation is from its mean.) Next, for each ordered pair (x, y), we multiply the corresponding z-scores for the x- and y-values. The final part of the calculation requires us to add these products and then divide by n – 1.

Here’s a small example to illustrate the steps that are part of this calculation. Recent studies have shown that a person’s waist size is a better predictor of the likelihood that he will develop type 2 diabetes than body mass index (BMI). Here are waist and BMI data for four men.

| Individual | Waist in inches (x) | BMI (y) |

|---|---|---|

| 1 | 34 | 21.6 |

| 2 | 40 | 25.9 |

| 3 | 42 | 28.8 |

| 4 | 38 | 25.2 |

Source: www.news-medical.net

Let’s compute the correlation coefficient for this data set. First, for each ordered pair (x, y), we need to find the z-score corresponding to the x-value (waist measurement) and the z-score corresponding to the y-value (BMI). To determine the z-scores we need to calculate the mean and standard deviation for each variable. These summary statistics are presented in the table below.

| Column | Mean | Std. Dev. |

|---|---|---|

| Waist (x) | 38.5 | 3.4156504 |

| BMI (y) | 25.375 | 2.960152 |

The following table shows the calculations for the z-scores for each ordered pair as well as the product of each pair's z-scores.

| Row | Waist (x) | BMI (y) | z-score (x) | z-score (y) | Product |

|---|---|---|---|---|---|

| 1 | 34 | 21.6 | 34−38.53.4157=−1.317 | 21.6−25.3752.9602=−1.275 | −1.317∗−1.275=1.6801 |

| 2 | 40 | 25.9 | 40−38.53.4157=0.439 | 25.9−25.3752.9602=0.1774 | 0.439∗0.1774=0.0779 |

| 3 | 42 | 28.8 | 42−38.53.4157=1.025 | 28.8−25.3752.9602=1.157 | 1.025∗1.157=1.1856 |

| 4 | 38 | 25.2 | 38−38.53.4157=−0.1464 | 25.2−25.3752.9602=−0.0591 | −0.1464∗−0.0591=0.0087 |

The correlation coefficient is found by summing the products and dividing by n – 1. Therefore,

r=13(1.6801+0.0779+1.1856+0.0087)=0.9841

For the remainder of this chapter we will not calculate r by hand. You shouldn’t either. Use CrunchIt! to calculate r, and instead focus your energy on interpreting and understanding the meaning of r.

4.2.5 Some Further Thoughts About r

In English, correlation is a synonym for association or relationship. In statistics, correlation refers to the correlation coefficient r, which measures the strength and direction of the linear relationship between two quantitative variables. To us, correlation is a number; you should not say that there is a correlation between two variables, but rather that there is an association or a relationship between them.

In addition, correlation is a calculation based on the numerical values of the variables observed. We cannot calculate a correlation coefficient if either (or both) of the variables studied are categorical, so it makes no sense at all to use the word correlation in these settings. (Later in this course we will study a way to determine whether an association exists between two categorical variables and also if one exists when one variable is quantitative and the other is categorical.)

Even when we can calculate a correlation coefficient, whether a particular association is moderate or strong is open to interpretation. Researchers are often quite delighted with an r of 0.5, which might not seem very strong to us. In order to give us a common vocabulary for describing the strength of an association, we will use the following guidelines:

- if |r|≥0.8, we will say that the linear association is strong;

- if 0.5≤|r| <0.8, we will say that the linear association is moderate;

- if 0.2≤|r|<0.5, we will say that the linear association is weak, and

- if |r|<0.2, we will say that there is essentially no linear association between the two variables.

Remember that the sign of the correlation coefficient always indicates the direction of the linear association, rather than its strength. Thus, we would say that an r = –0.6 represents a moderate negative linear association, whereas an r of 0.3 represents a weak positive linear association.

This correspondence between values of r and adjectives describing the strength of the relationship represents neither a universal standard nor an unbreakable rule. It is presented merely to give you some guidance as you begin to work with correlation. Should you be collecting and analyzing your own data in the future, or evaluating the analysis of others, keep in mind that interpretations of r can be subjective.

And most importantly, do not confuse correlation with causation. The fact that an explanatory variable and a response variable have a strong association does not indicate that the explanatory variable causes the response. In the example above, waist size and BMI show a strong (indeed, a very strong) linear relationship, but waist size does not cause BMI. Similarly, if we were to make a scatterplot of the shoe size and reading level for several elementary-school students we would no doubt see a strong positive association between these two variables. Here it should be clear that having bigger feet doesn’t make you a better reader. Rather, both variables are responding to the lurking variable, age.

This warning bears repeating. Correlation does not imply causation. The clearest way to establish a cause-and-effect relationship, as we discussed in Chapter 2, is to conduct a randomized, comparative experiment. In such a situation, subjects are randomized into treatment groups so that differences in outcomes are most likely caused by differences in treatments.

However, when human subjects are involved, researchers frequently cannot conduct experiments due to practical or ethical considerations. In attempting to establish smoking as a cause of lung cancer, it was not possible to divide subjects into two groups, forcing one group to smoke and one to abstain from cigarettes. The link between smoking and lung cancer was confirmed only because many, many observational studies over dozens of years showed a very strong association between cigarette smoking and lung cancer.

The article Correlation, causation, and association - What does it all mean??? provides additional discussion of the challenges involved in establishing causation.

Now that we have both graphical and numerical measures available to assess the association between two quantitative variables, we’ll move on to finding models to describe the relationship between the variables. In the next section we’ll look at quantitative bivariate data sets that exhibit a moderate to strong linear association, and develop methods to find appropriate models for the data. These models will allow us to predict values of the response variable, given an explanatory variable value.