Operant Conditioning

ASSOCIATING BEHAVIORS AND CONSEQUENCES

KEY THEME

Operant conditioning deals with the learning of active, voluntary behaviors that are shaped and maintained by their consequences.

KEY QUESTIONS

How did Edward Thorndike study the acquisition of new behaviors, and what conclusions did he reach?

What were B.F. Skinner’s key assumptions?

How are positive reinforcement and negative reinforcement similar, and how are they different?

Classical conditioning can help explain the acquisition of many learned behaviors, including emotional and physiological responses. However, recall that classical conditioning involves reflexive behaviors that are automatically elicited by a specific stimulus. Most everyday behaviors don’t fall into this category. Instead, they involve nonreflexive, or voluntary, actions that can’t be explained with classical conditioning.

The investigation of how voluntary behaviors are acquired began with a young American psychology student named Edward L. Thorndike. A few years before Pavlov began his extensive studies of classical conditioning, Thorndike was using cats, chicks, and dogs to investigate how voluntary behaviors are acquired. Thorndike’s pioneering studies helped set the stage for the later work of another American psychologist named B.F. Skinner. It was Skinner who developed operant conditioning, another form of conditioning that explains how we acquire and maintain voluntary behaviors.

Thorndike and the Law of Effect

Edward L. Thorndike was the first psychologist to systematically investigate animal learning and how voluntary behaviors are influenced by their consequences. At the time, Thorndike was only in his early 20s and a psychology graduate student. He conducted his pioneering studies to complete his dissertation and earn his doctorate in psychology.

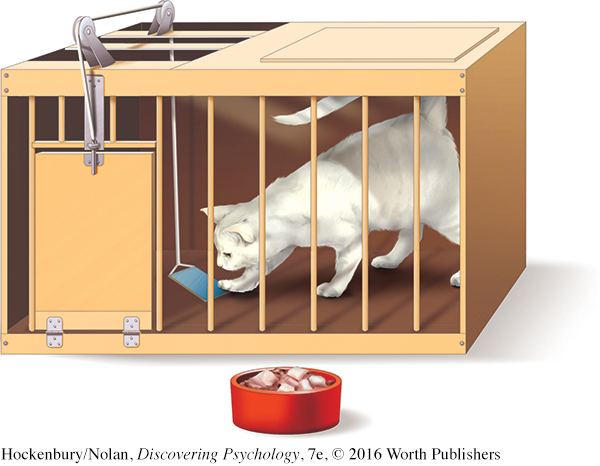

Thorndike’s dissertation focused on the issue of whether animals, like humans, use reasoning to solve problems (Dewsbury, 1998). In an important series of experiments, Thorndike (1898) put hungry cats in specially constructed cages that he called “puzzle boxes.” A cat could escape the cage by a simple act, such as pulling a loop or pressing a lever that would unlatch the cage door. A plate of food was placed just outside the cage, where the hungry cat could see and smell it.

Thorndike found that when the cat was first put into the puzzle box, it would engage in many different, seemingly random behaviors to escape. For example, the cat would scratch at the cage door, claw at the ceiling, and try to squeeze through the wooden slats (not to mention complain at the top of its lungs). Eventually, however, the cat would accidentally pull on the loop or step on the lever, opening the door latch and escaping the box. After several trials in the same puzzle box, a cat could get the cage door open very quickly.

Thorndike (1898) concluded that the cats did not display any humanlike insight or reasoning in unlatching the puzzle box door. Instead, he explained the cats’ learning as a process of trial and error (Chance, 1999). The cats gradually learned to associate certain responses with successfully escaping the box and gaining the food reward. According to Thorndike, these successful behaviors became “stamped in,” so that a cat was more likely to repeat these behaviors when placed in the puzzle box again. Unsuccessful behaviors were gradually eliminated.

Thorndike’s observations led him to formulate the law of effect: Responses followed by a “satisfying state of affairs” are “strengthened” and more likely to occur again in the same situation. Conversely, responses followed by an unpleasant or “annoying state of affairs” are “weakened” and less likely to occur again.
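The law of effect can be pictured as a simple update rule. The sketch below is only an illustration, not a model Thorndike proposed: the response names, the starting strengths, and the step size `step` are hypothetical values chosen for the example, and treating an unsuccessful scratch as an "annoying" outcome is likewise an assumption.

```python
def apply_law_of_effect(strengths, response, satisfying, step=0.2):
    """Return a new strength table after one trial.

    A response followed by a satisfying outcome is strengthened;
    a response followed by an annoying outcome is weakened.
    The step size is an arbitrary illustrative value.
    """
    updated = dict(strengths)
    change = step if satisfying else -step
    # Strength never drops below zero in this toy model.
    updated[response] = max(0.0, updated[response] + change)
    return updated


# Hypothetical responses a cat might emit in a puzzle box.
strengths = {"scratch door": 1.0, "claw ceiling": 1.0, "pull loop": 1.0}

for _ in range(3):
    # Pulling the loop opens the door, so it counts as satisfying.
    strengths = apply_law_of_effect(strengths, "pull loop", satisfying=True)
    # Scratching leaves the cat in the box (assumed annoying here).
    strengths = apply_law_of_effect(strengths, "scratch door", satisfying=False)

most_likely = max(strengths, key=strengths.get)
print(most_likely)
```

After a few trials the successful response has the highest strength, which mirrors the way the cats' successful behaviors became "stamped in" while unsuccessful ones faded.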

Thorndike’s description of the law of effect was an important first step in understanding how active, voluntary behaviors can be modified by their consequences. Thorndike, however, never developed his ideas on learning into a formal model or system (Hearst, 1999). Instead, he applied his findings to education, publishing many books on educational psychology (Mayer & others, 2003). Some 30 years after Thorndike’s famous puzzle-

B.F. Skinner and the Search for “Order in Behavior”

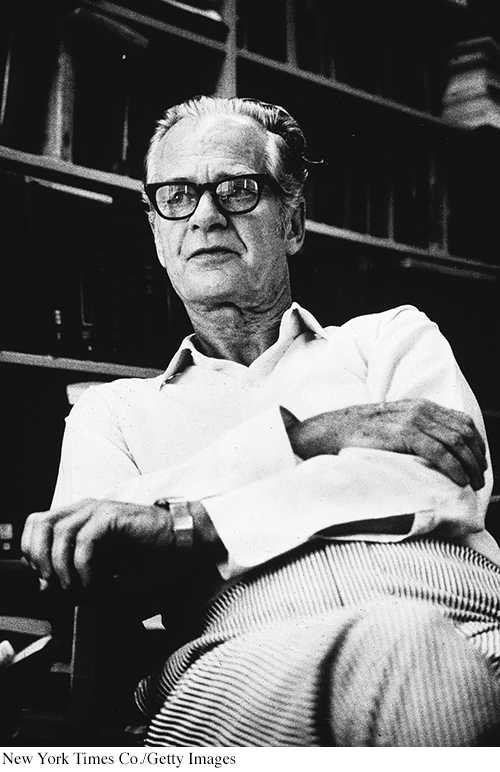

From the time he was a graduate student in psychology until his death, the famous American psychologist B.F. Skinner searched for the “lawful processes” that would explain “order in behavior” (Skinner, 1956, 1967). Like John Watson, Skinner was a staunch behaviorist. Skinner strongly believed that psychology should restrict itself to studying only phenomena that could be objectively measured and verified—

Skinner acknowledged that Pavlov’s classical conditioning could explain the learned association of stimuli in certain reflexive responses (Iversen, 1992). But classical conditioning was limited to existing behaviors that were reflexively elicited. To Skinner, the most important form of learning was demonstrated by new behaviors that were actively emitted by the organism, such as the active behaviors produced by Thorndike’s cats in trying to escape the puzzle boxes.

Skinner (1953) coined the term operant to describe any “active behavior that operates upon the environment to generate consequences.” In everyday language, Skinner’s principles of operant conditioning explain how we acquire the wide range of voluntary behaviors that we perform in daily life. But as a behaviorist who rejected mentalistic explanations, Skinner avoided the term voluntary because it would imply that behavior was due to a conscious choice or intention.

Skinner defined operant conditioning concepts in very objective terms and he avoided explanations based on subjective mental states (Moore, 2005b). We’ll closely follow Skinner’s original terminology and definitions.

Reinforcement

INCREASING FUTURE BEHAVIOR

In a nutshell, Skinner’s operant conditioning explains learning as a process in which behavior is shaped and maintained by its consequences. One possible consequence of a behavior is reinforcement. Reinforcement is said to occur when a stimulus or an event follows an operant and increases the likelihood of the operant being repeated. Notice that reinforcement is defined by the effect it produces—

Let’s look at reinforcement in action. Suppose you put your money into a soft-

In this example, pushing the coin return lever is the operant—the active response you emitted. The shower of coins is the reinforcing stimulus, or reinforcer—the stimulus or event that is sought in a particular situation. In everyday language, a reinforcing stimulus is typically something desirable, satisfying, or pleasant. Skinner, of course, avoided such terms because they reflected subjective emotional states.

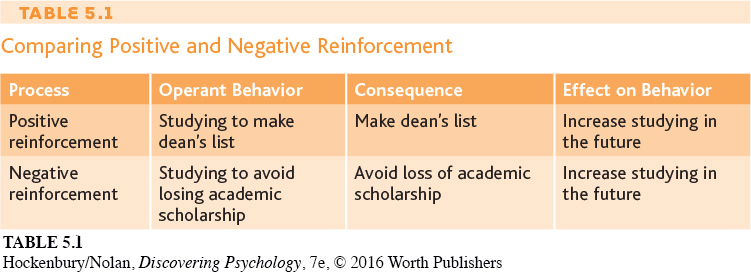

POSITIVE AND NEGATIVE REINFORCEMENT

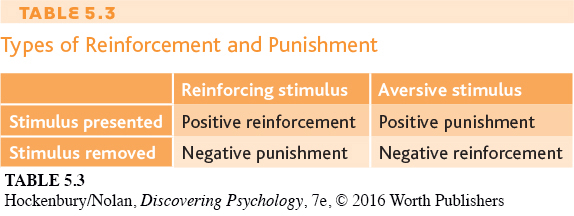

There are two forms of reinforcement: positive reinforcement and negative reinforcement. Both affect future behavior, but they do so in different ways (see Table 5.1). It’s easier to understand these differences if you note at the outset that Skinner did not use the terms positive and negative in their everyday sense of meaning “good” and “bad” or “desirable” and “undesirable.” Instead, think of the words positive and negative in terms of their mathematical meanings. Positive is the equivalent of a plus sign (+), meaning that something is added. Negative is the equivalent of a minus sign (–), meaning that something is subtracted or removed. If you keep that distinction in mind, the principles of positive and negative reinforcement should be easier to understand.

Positive reinforcement involves following an operant with the addition of a reinforcing stimulus. In positive reinforcement situations, a response is strengthened because something is added or presented. Everyday examples of positive reinforcement in action are easy to identify. Here are some examples:

Your backhand return of the tennis ball (the operant) is low and fast, and your tennis coach yells “Excellent!” (the reinforcing stimulus).

You watch a student production of Hamlet and write a short paper about it (the operant) for 10 bonus points (the reinforcing stimulus) in your literature class.

You reach your sales quota at work (the operant) and you get a bonus check (the reinforcing stimulus).

In each example, if the addition of the reinforcing stimulus has the effect of making you more likely to repeat the operant in similar situations in the future, then positive reinforcement has occurred.

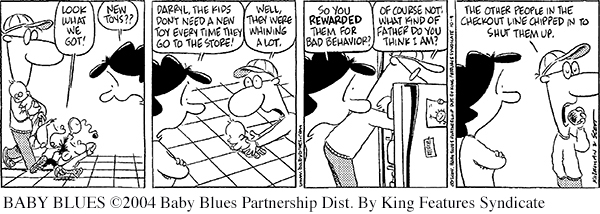

It’s important to point out that what constitutes a reinforcing stimulus can vary from person to person, species to species, and situation to situation. While gold stars and stickers may be reinforcing to a third-

It’s also important to note that the reinforcing stimulus is not necessarily something we usually consider positive or desirable. For example, most teachers would not think of a scolding as being a reinforcing stimulus to children. But to children, adult attention can be a powerful reinforcing stimulus. If a child receives attention from the teacher only when he misbehaves, then the teacher may unwittingly be reinforcing misbehavior. The child may actually increase disruptive behavior in order to get the sought-


Negative reinforcement involves an operant that is followed by the removal of an aversive stimulus. In negative reinforcement situations, a response is strengthened because something is being subtracted or removed. Remember that the word negative in the phrase negative reinforcement is used like a mathematical minus sign (–).

For example, you take two aspirin (the operant) to remove a headache (the aversive stimulus). Thirty minutes later, the headache is gone. Are you now more likely to take aspirin to deal with bodily aches and pain in the future? If you are, then negative reinforcement has occurred.

Aversive stimuli typically involve physical or psychological discomfort that an organism seeks to escape or avoid. Consequently, behaviors are said to be negatively reinforced when they let you either: (1) escape aversive stimuli that are already present or (2) avoid aversive stimuli before they occur. That is, we’re more likely to repeat the same escape or avoidance behaviors in similar situations in the future. The headache example illustrates the negative reinforcement of escape behavior. By taking two aspirin, you “escaped” the headache. Paying your electric bill on time to avoid a late charge illustrates the negative reinforcement of avoidance behavior. Here are some more examples of negative reinforcement involving escape or avoidance behavior:

You make backup copies of important computer files (the operant) to avoid losing the data if the computer’s hard drive should fail (the aversive stimulus).

You dab some hydrocortisone cream on an insect bite (the operant) to escape the itching (the aversive stimulus).

You install a new battery (the operant) in the smoke detector to escape the annoying beep (the aversive stimulus).

In each example, if escaping or avoiding the aversive event has the effect of making you more likely to repeat the operant in similar situations in the future, then negative reinforcement has taken place.

PRIMARY AND CONDITIONED REINFORCERS

Skinner also distinguished two kinds of reinforcing stimuli: primary and conditioned. A primary reinforcer is one that is naturally reinforcing for a given species. That is, even if an individual has not had prior experience with the particular stimulus, the stimulus or event still has reinforcing properties. For example, food, water, adequate warmth, and sexual contact are primary reinforcers for most animals, including humans.

A conditioned reinforcer, also called a secondary reinforcer, is one that has acquired reinforcing value by being associated with a primary reinforcer. The classic example of a conditioned reinforcer is money. Money is reinforcing not because those flimsy bits of paper and little pieces of metal have value in and of themselves, but because we’ve learned that we can use them to acquire primary reinforcers and other conditioned reinforcers. Awards, frequent-

Conditioned reinforcers need not be as tangible as money or college degrees. Conditioned reinforcers can be as subtle as a smile, a touch, or a nod of recognition. Looking back at the Prologue, for example, Fern was reinforced by the laughter of her friends and relatives each time she told “the killer attic” tale—

Punishment

USING AVERSIVE CONSEQUENCES TO DECREASE BEHAVIOR

KEY THEME

Punishment is a process that decreases the future occurrence of a behavior.

KEY QUESTIONS

How does punishment differ from negative reinforcement?

What factors influence the effectiveness of punishment?

What effects are associated with the use of punishment to control behavior, and what are some alternative ways to change behavior?

What are discriminative stimuli?

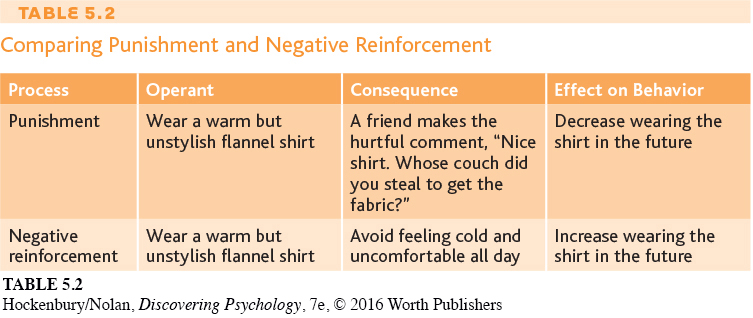

Positive and negative reinforcement are processes that increase the frequency of a particular behavior. The opposite effect is produced by punishment. Punishment is a process in which a behavior is followed by an aversive consequence that decreases the likelihood of the behavior’s being repeated. Many people tend to confuse punishment and negative reinforcement, but these two processes produce entirely different effects on behavior (see Table 5.2). Negative reinforcement always increases the likelihood that an operant will be repeated in the future. Punishment always decreases the future performance of an operant.

Skinner (1953) identified two types of aversive events that can act as punishment. Positive punishment, also called punishment by application, involves a response being followed by the presentation of an aversive stimulus. The word positive in the phrase positive punishment signifies that something is added or presented in the situation. In this case, it’s an aversive stimulus. Here are some everyday examples of punishment by application:

An employee wears shorts to work (the operant) and is reprimanded by his supervisor for dressing inappropriately (the punishing stimulus).

Your dog jumps up on a visitor (the operant), and you smack him with a rolled-up newspaper (the punishing stimulus).

You are late to class (the operant), and your instructor responds with a sarcastic remark (the punishing stimulus).

In each of these examples, if the presentation of the punishing stimulus has the effect of decreasing the behavior it follows, then punishment has occurred. Although the punishing stimuli in these examples were administered by other people, punishing stimuli also occur as natural consequences for some behaviors. Inadvertently touching a live electrical wire, a hot stove, or a sharp object (the operant) can result in a painful injury (the punishing stimulus).

The second type of punishment is negative punishment, also called punishment by removal. The word negative indicates that some stimulus is subtracted or removed from the situation (see Table 5.3). In this case, it is the loss or withdrawal of a reinforcing stimulus following a behavior. That is, the behavior’s consequence is the loss of some privilege, possession, or other desirable object or activity. Here are some everyday examples of punishment by removal:

After she speeds through a red light (the operant), her driver’s license is suspended (loss of reinforcing stimulus).

Because he was flirting with another woman (the operant), a guy gets dumped by his girlfriend (loss of reinforcing stimulus).

In each example, if the behavior decreases in response to the removal of the reinforcing stimulus, then punishment has occurred. It’s important to stress that, like reinforcement, punishment is defined by the effect it produces. In everyday usage, people often refer to a particular consequence as a punishment when, strictly speaking, it’s not. Why? Because the consequence has not reduced future occurrences of the behavior. Hence, many consequences commonly thought of as punishments—

MYTH SCIENCE

Is it true that punishment is an effective way to teach new behaviors?

Why is it that aversive consequences don’t always function as effective punishments? Skinner (1953) and other researchers have noted that several factors influence the effectiveness of punishment (Horner, 2002). For example, punishment is more effective if it immediately follows a response than if it is delayed. Punishment is also more effective if it consistently, rather than occasionally, follows a response (Lerman & Vorndran, 2002; Spradlin, 2002). Though speeding tickets and prison sentences are commonly referred to as punishments, these aversive consequences are inconsistently applied and often administered only after a long delay. Thus, they don’t always effectively decrease specific behaviors.

However, many studies have demonstrated that physical punishment is associated with increased aggressiveness, delinquency, and antisocial behavior in the child (Gershoff, 2002; Knox, 2010; MacKenzie & others, 2012). In one study of almost 2,500 children, those who had been spanked at age three were more likely to be aggressive at age five (Taylor & others, 2010). Other negative effects include poor parent–

Even when punishment works, its use has several drawbacks (see B. Smith, 2012). First, punishment may decrease a specific response, but it doesn’t necessarily teach or promote a more appropriate response to take its place. Second, punishment that is intense may produce undesirable results, such as complete passivity, fear, anxiety, or hostility (Lerman & Vorndran, 2002). Finally, the effects of punishment are likely to be temporary (Estes & Skinner, 1941; Skinner, 1938). A child who is sent to her room for teasing her little brother may well repeat the behavior when her mother’s back is turned. As Skinner (1971) noted, “Punished behavior is likely to reappear after the punitive consequences are withdrawn.” For some suggestions on how to change behavior without using a punishing stimulus, see the In Focus box, “Changing the Behavior of Others: Alternatives to Punishment.”

IN FOCUS

Changing the Behavior of Others: Alternatives to Punishment

Although punishment may temporarily decrease the occurrence of a problem behavior, it doesn’t promote more desirable or appropriate behaviors in its place. Throughout his life, Skinner remained strongly opposed to the use of punishment. Instead, he advocated the greater use of positive reinforcement to strengthen desirable behaviors (Dinsmoor, 1992; Skinner, 1971). Here are four strategies that can be used to reduce undesirable behaviors without resorting to punishment.

Strategy 1: Reinforce an Incompatible Behavior

The best method to reduce a problem behavior is to reinforce an alternative behavior that is both constructive and incompatible with the problem behavior. For example, if you’re trying to decrease a child’s whining, respond to her requests (the reinforcer) only when she talks in a normal tone of voice.

Strategy 2: Stop Reinforcing the Problem Behavior

Technically, this strategy is called extinction. The first step in effectively applying extinction is to observe the behavior carefully and identify the reinforcer that is maintaining the problem behavior. Then eliminate the reinforcer.

Suppose a friend keeps interrupting you while you are trying to study, asking you if you want to play a video game or just hang out. You want to extinguish his behavior of interrupting your studying. In the past, trying to be polite, you’ve responded to his behavior by acting interested (a reinforcer). You could eliminate the reinforcer by acting uninterested and continuing to study while he talks.

It’s important to note that when the extinction process is initiated, the problem behavior often temporarily increases. This situation is more likely to occur if the problem behavior has only occasionally been reinforced in the past. Thus, once you begin, be consistent in nonreinforcement of the problem behavior.

Strategy 3: Reinforce the Non-

This strategy involves setting a specific time period after which the individual is reinforced if the unwanted behavior has not occurred.

For example, if you’re trying to reduce bickering between children, set an appropriate time limit, and then provide positive reinforcement if they have not squabbled during that interval.
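The interval rule in Strategy 3 can be written out as a short procedure. This is a minimal sketch under stated assumptions: the function name, the use of minutes as the time unit, and the sample session are all hypothetical, and the right interval length depends on the individual and the behavior.

```python
def intervals_earning_reinforcement(problem_times, session_length, interval):
    """Return the (start, end) intervals in which no problem behavior occurred.

    problem_times: minutes at which the unwanted behavior occurred.
    session_length, interval: both in minutes.
    """
    earned = []
    start = 0
    while start + interval <= session_length:
        end = start + interval
        occurred = any(start <= t < end for t in problem_times)
        if not occurred:
            # The whole interval passed without the unwanted behavior.
            earned.append((start, end))
        start = end
    return earned


# Hypothetical one-hour session: squabbles at minutes 4 and 27,
# checked in 15-minute intervals.
earned = intervals_earning_reinforcement([4, 27], session_length=60, interval=15)
print(earned)
```

In this example the first two intervals each contain a squabble, so reinforcement would be delivered only at the end of the third and fourth intervals.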

Strategy 4: Remove the Opportunity to Obtain Positive Reinforcement

It’s not always possible to identify and eliminate all the reinforcers that maintain a behavior. For example, a child’s obnoxious behavior might be reinforced by the social attention of siblings or classmates.

In a procedure called time-

Enhancing the Effectiveness of Positive Reinforcement

Often, these four strategies are used in combination. However, remember the most important behavioral principle: Positively reinforce the behaviors that you want to increase. There are several ways in which you can enhance the effectiveness of positive reinforcement:

Make sure that the reinforcer is strongly reinforcing to the individual whose behavior you’re trying to modify.

The positive reinforcer should be delivered immediately after the preferred behavior occurs.

The positive reinforcer should initially be given every time the preferred behavior occurs. When the desired behavior is well established, gradually reduce the frequency of reinforcement.

Use a variety of positive reinforcers, such as tangible items, praise, special privileges, recognition, and so on. Minimize the use of food as a positive reinforcer.

Capitalize on what is known as the Premack principle—a more preferred activity (e.g., painting) can be used to reinforce a less preferred activity (e.g., picking up toys).

Encourage the individual to engage in self-reinforcement in the form of pride, a sense of accomplishment, and feelings of self-control.

Discriminative Stimuli


SETTING THE OCCASION FOR RESPONDING

Another component of operant conditioning is the discriminative stimulus—the specific stimulus in the presence of which a particular operant is more likely to be reinforced. For example, a ringing phone is a discriminative stimulus that sets the occasion for a particular response—

This example illustrates how we’ve learned from experience to associate certain environmental cues or signals with particular operant responses. We’ve learned that we’re more likely to be reinforced for performing a particular operant response when we do so in the presence of the appropriate discriminative stimulus. Thus, you’ve learned that you’re more likely to be reinforced for screaming at the top of your lungs at a football game (one discriminative stimulus) than in the middle of class (a different discriminative stimulus).

In this way, according to Skinner (1974), behavior is determined and controlled by the stimuli that are present in a given situation. In Skinner’s view, an individual’s behavior is not determined by a personal choice or a conscious decision. Instead, individual behavior is determined by environmental stimuli and the person’s reinforcement history in that environment. Skinner’s views on this point have some very controversial implications, which are discussed in the Critical Thinking box, “Is Human Freedom Just an Illusion?”

CRITICAL THINKING

Is Human Freedom Just an Illusion?

Skinner was intensely interested in human behavior and social problems (Bjork, 1997). He believed that operant conditioning principles could, and should, be applied on a broad scale to help solve society’s problems. Skinner’s most radical—

Skinner argued that behavior is not simply influenced by the environment but is determined by it. Control the environment, he said, and you will control human behavior. As he bluntly asserted in his controversial best-

Such views did not sit well with the American public (Rutherford, 2003). Following the publication of Beyond Freedom and Dignity, one member of Congress denounced Skinner for “advancing ideas which threaten the future of our system of government by denigrating the American tradition of individualism, human dignity, and self-

Skinner’s ideas clashed with the traditional American ideals of personal responsibility, individual freedom, and self-

To understand Skinner’s point of view, it helps to think of society as a massive, sophisticated Skinner box. From the moment of birth, the environment shapes and determines your behavior through reinforcing or punishing consequences. Taking this view, you are no more personally responsible for your behavior than is a rat in a Skinner box pressing a lever to obtain a food pellet. Just like the rat’s behavior, your behavior is simply a response to the unique patterns of environmental consequences to which you have been exposed. On the one hand, it may seem convenient to blame your history of environmental consequences for your failures and mistakes. On the other hand, that means you can’t take any credit for your accomplishments and good deeds, either!

Skinner (1971) proposed that “a technology of behavior” be developed, one based on a scientific analysis of behavior. He believed that society could be redesigned using operant conditioning principles to produce more socially desirable behaviors—

Critics charged Skinner with advocating a totalitarian state. They asked who would determine which behaviors were shaped and maintained (Rutherford, 2000; Todd & Morris, 1992). As Skinner pointed out, however, human behavior is already controlled by various authorities: parents, teachers, politicians, religious leaders, employers, and so forth. Such authorities regularly use reinforcing and punishing consequences to shape and control the behavior of others. Skinner insisted that it is better to control behavior in a rational, humane fashion than to leave the control of behavior to the whims and often selfish aims of those in power.

Skinner’s ideas may seem radical or far-

CRITICAL THINKING QUESTIONS

If Skinner’s vision of a socially engineered society using operant conditioning principles were implemented, would such changes be good or bad for society?

Are human freedom and personal responsibility illusions? Or is human behavior fundamentally different from a rat’s behavior in a Skinner box? If so, how?

Is your behavior almost entirely the product of environmental conditioning? Think about your answer carefully. After all, exactly why are you reading this box?

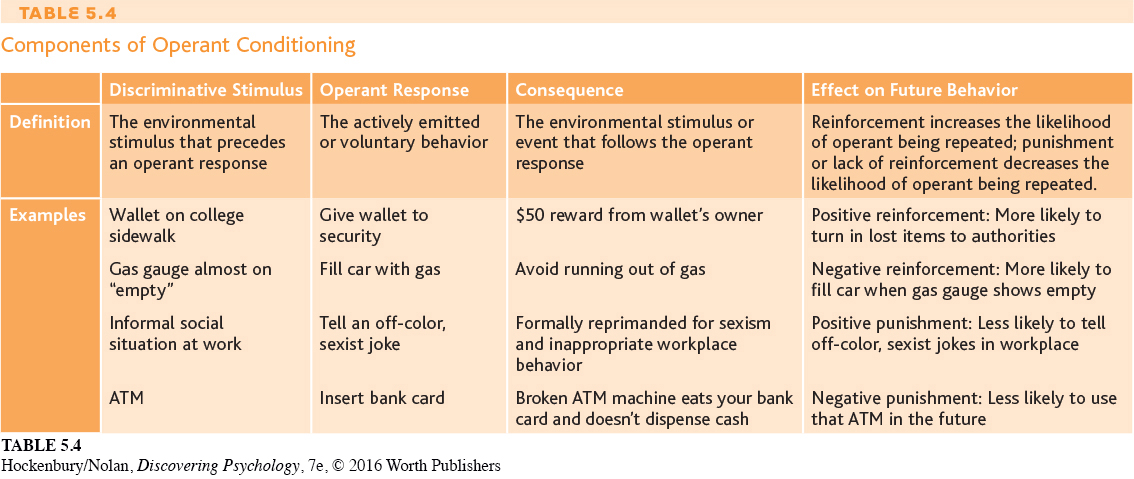

We have now discussed all three fundamental components of operant conditioning (see Table 5.4). In the presence of a specific environmental stimulus (the discriminative stimulus), we emit a particular behavior (the operant), which is followed by a consequence (reinforcement or punishment). If the consequence is either positive or negative reinforcement, we are more likely to repeat the operant when we encounter the same or similar discriminative stimuli in the future. If the consequence is some form of punishment, we are less likely to repeat the operant when we encounter the same or similar discriminative stimuli in the future.
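Because each process is defined by two features (whether a stimulus is added or removed, and whether the behavior then becomes more or less likely), the four consequences can be laid out as a lookup table. The sketch below is one way to express that; the function name and the string labels for its arguments are hypothetical.

```python
def classify_consequence(stimulus_change, behavior_change):
    """Name the operant process from its two defining features.

    stimulus_change: "added" or "removed" (what follows the operant).
    behavior_change: "increases" or "decreases" (effect on future behavior).
    """
    table = {
        ("added", "increases"): "positive reinforcement",
        ("removed", "increases"): "negative reinforcement",
        ("added", "decreases"): "positive punishment",
        ("removed", "decreases"): "negative punishment",
    }
    key = (stimulus_change, behavior_change)
    if key not in table:
        raise ValueError(f"unknown combination: {key}")
    return table[key]


# Taking aspirin removes a headache, and aspirin use becomes more likely.
print(classify_consequence("removed", "increases"))
# A license is suspended after speeding, and speeding becomes less likely.
print(classify_consequence("removed", "decreases"))
```

Note that the second argument is the observed effect on behavior: a consequence counts as reinforcement or punishment only if the behavior actually changes in that direction.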

Next, we’ll build on the basics of operant conditioning by considering how Skinner explained the acquisition of complex behaviors.

Shaping and Maintaining Behavior

KEY THEME

New behaviors are acquired through shaping and can be maintained through different patterns of reinforcement.

KEY QUESTIONS

How does shaping work?

What is the partial reinforcement effect, and how do the four schedules of reinforcement differ in their effects?

What is behavior modification?

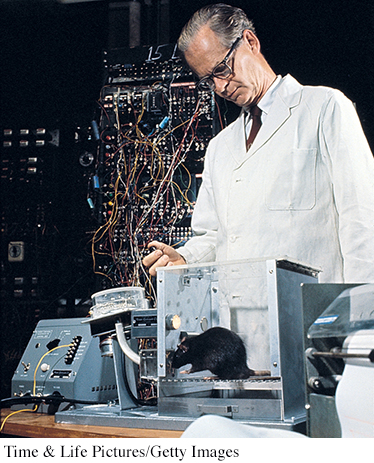

To scientifically study the relationship between behavior and its consequences in the laboratory, Skinner invented the operant chamber, more popularly known as the Skinner box. An operant chamber is a small cage with a food dispenser. Attached to the cage is a device that automatically records the number of operants made by an experimental animal, usually a rat or pigeon. For a rat, the typical operant is pressing a bar; for a pigeon, it is pecking at a small disk. Food pellets are usually used for positive reinforcement. Often, a light in the cage functions as a discriminative stimulus. When the light is on, pressing the bar or pecking the disk is reinforced with a food pellet. When the light is off, these responses do not result in reinforcement.
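The logic of the chamber described above can be sketched in a few lines. This is a toy model only: the class and attribute names are hypothetical, and a real operant chamber records responses over time rather than as simple counts.

```python
class OperantChamber:
    """Toy model of a Skinner box with a light as the discriminative stimulus."""

    def __init__(self):
        self.light_on = False
        self.responses = 0  # cumulative count of bar presses
        self.pellets = 0    # food pellets delivered

    def press_bar(self):
        """Record one bar press; return True if it was reinforced."""
        self.responses += 1
        if self.light_on:
            # Pressing is reinforced only while the light is on.
            self.pellets += 1
            return True
        return False


chamber = OperantChamber()
chamber.light_on = True
results_on = [chamber.press_bar() for _ in range(3)]
chamber.light_on = False
results_off = [chamber.press_bar() for _ in range(2)]
print(chamber.responses, chamber.pellets)
```

All five presses are recorded, but only the three made while the light was on produce a pellet.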

When a rat is first placed in a Skinner box, it typically explores its new environment, occasionally nudging or pressing the bar in the process. The researcher can accelerate the rat’s bar-

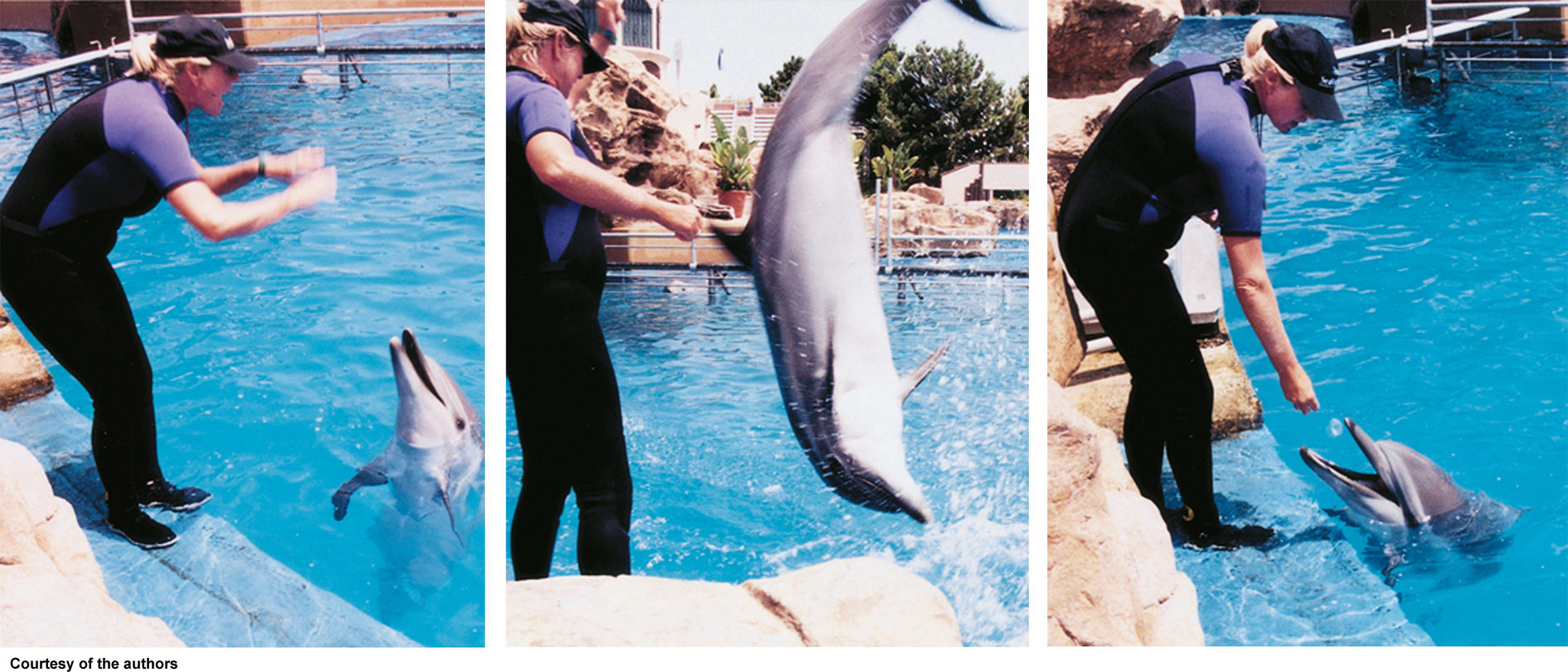

Skinner believed that shaping could explain how people acquire a wide variety of abilities and skills—

THE PARTIAL REINFORCEMENT EFFECT

BUILDING RESISTANCE TO EXTINCTION

Once a rat had acquired a bar-

Now suppose that despite all your hard work, your basketball skills are dismal. If practicing free throws was never reinforced by making a basket, what would you do? You’d probably eventually quit playing basketball. This is an example of extinction. In operant conditioning, when a learned response no longer results in reinforcement, the likelihood of the behavior’s being repeated gradually declines.

Skinner (1956) first noticed the effects of partial reinforcement when he began running low on food pellets one day. Rather than reinforcing every bar press, Skinner tried to stretch out his supply of pellets by rewarding responses only periodically. He found that the rats not only continued to respond, but actually increased their rate of bar pressing.
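To see why reinforcing only some responses stretches a limited supply of pellets, compare how many pellets the same number of bar presses would consume. In this sketch, reinforcing every nth response is an assumption chosen for simplicity, not a description of Skinner's actual procedure, and the function and parameter names are hypothetical.

```python
def pellets_delivered(bar_presses, reinforce_every=1):
    """Count pellets delivered when every nth bar press is reinforced.

    reinforce_every=1 means every press is reinforced; larger values
    are one simple way to reinforce only some presses.
    """
    if reinforce_every < 1:
        raise ValueError("reinforce_every must be at least 1")
    return bar_presses // reinforce_every


continuous = pellets_delivered(100)
partial = pellets_delivered(100, reinforce_every=5)
print(continuous, partial)
```

With these illustrative numbers, 100 bar presses use 100 pellets when every press is reinforced but only 20 when every fifth press is reinforced.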

MYTH SCIENCE

Is it true that the most effective way to teach a new behavior is to reward it each time it is performed?

One important consequence of partially reinforcing behavior is that partially reinforced behaviors tend to be more resistant to extinction than are behaviors conditioned using continuous reinforcement. This phenomenon is called the partial reinforcement effect. For example, when Skinner shut off the food-

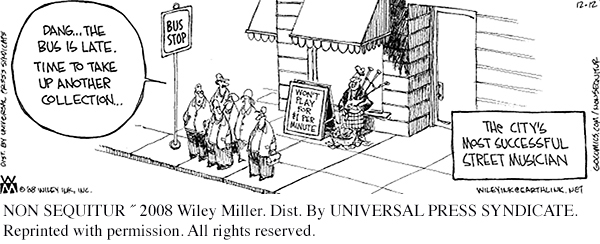

Skinner (1948b) pointed out that superstitions may result when a behavior is accidentally reinforced—

In everyday life, the partial reinforcement effect is reflected in behaviors that persist despite the lack of reinforcement. Gamblers may persist despite a string of losses, writers will persevere in the face of repeated rejection slips, and the family dog will continue begging for the scraps of food that it has only occasionally received at the dinner table in the past.

THE SCHEDULES OF REINFORCEMENT

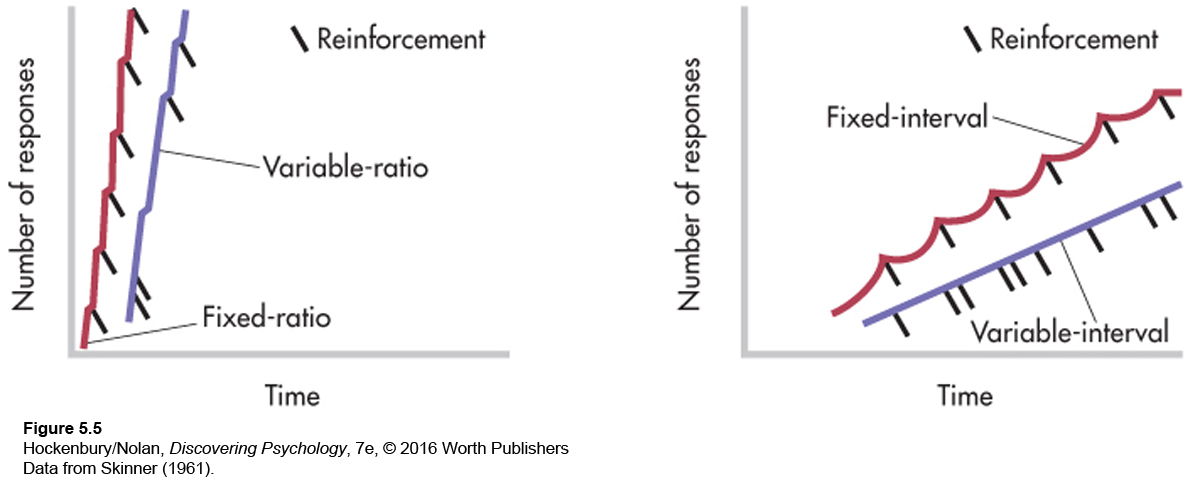

Skinner (1956) found that specific preset arrangements of partial reinforcement produced different patterns and rates of responding. Collectively, these different reinforcement arrangements are called schedules of reinforcement. As we describe the four basic schedules of reinforcement, it will be helpful to refer to Figure 5.5, which shows the typical pattern of responses produced by each schedule.

With a fixed-

With a variable-

Variable-

On a fixed-

Fixed-

On a variable-

Generally, the unpredictable nature of variable-

Applications of Operant Conditioning

The In Focus box on alternatives to punishment earlier in the chapter described how operant conditioning principles can be applied to reduce and eliminate problem behaviors. These examples illustrate behavior modification, the application of learning principles to help people develop more effective or adaptive behaviors. Most often, behavior modification involves applying the principles of operant conditioning to bring about changes in behavior.

Behavior modification techniques have been successfully applied in many different settings (see Kazdin, 2008). Coaches, parents, teachers, and employers all routinely use operant conditioning. For example, behavior modification has been used to reduce public smoking by teenagers (Jason & others, 2009), improve student behavior in school cafeterias (McCurdy & others, 2009), reduce problem behaviors in schoolchildren (Dunlap & others, 2010; Schanding & Sterling-

Businesses also use behavior modification. For example, one large retailer increased productivity by allowing employees to choose their own reinforcers. A casual dress code and flexible work hours proved to be more effective reinforcers than money (Raj & others, 2006). In each of these examples, the systematic use of reinforcement, shaping, and extinction increased the occurrence of desirable behaviors and decreased the incidence of undesirable behaviors. In Chapter 14 on therapies, we’ll look at behavior modification techniques in more detail.

The principles of operant conditioning have also been used in the specialized training of animals, such as the Labrador shown at right, to help people who are physically challenged. Other examples are Seeing Eye dogs and capuchin monkeys who assist people who are severely disabled.