Contemporary Views of Operant Conditioning

KEY THEME

In contrast to Skinner, today’s psychologists acknowledge the importance of both cognitive and evolutionary factors in operant conditioning.

KEY QUESTIONS

How did Tolman’s research demonstrate the involvement of cognitive processes in learning?

What are cognitive maps, latent learning, and learned helplessness?

How do an animal’s natural behavior patterns affect the conditioning of operant behaviors?

In our discussion of classical conditioning, we noted that contemporary psychologists acknowledge the important roles played by cognitive factors and biological predispositions. The situation is much the same with operant conditioning. The basic principles of operant conditioning have been confirmed in thousands of studies. However, our understanding of operant conditioning has been broadened by considering cognitive factors and recognizing the importance of natural behavior patterns.

Cognitive Aspects of Operant Conditioning

RATS! I THOUGHT YOU HAD THE MAP!

In Skinner’s view, explanations of operant conditioning did not need to invoke cognitive factors to account for the acquisition of operant behaviors. Words such as expect, prefer, choose, and decide could not be used to explain how behaviors were acquired, maintained, or extinguished. Similarly, Thorndike and other early behaviorists believed that complex, active behaviors were no more than a chain of stimulus–

However, not all learning researchers agreed with Skinner and Thorndike. Edward C. Tolman firmly believed that cognitive processes played an important role in the learning of complex behaviors—

Much of Tolman’s research involved rats in mazes. When Tolman began his research in the 1920s, many studies of rats in mazes had been done. In a typical experiment, a rat would be placed in the “start” box. A food reward would be put in the “goal” box at the end of the maze. The rat would initially make many mistakes in running the maze. After several trials, it would eventually learn to run the maze quickly and with very few errors.

But what had the rats learned? According to traditional behaviorists, the rats had learned a sequence of responses, such as “first corner—

Tolman (1948) disagreed with that view. He noted that several investigators had reported as incidental findings that their maze-

As an analogy, think of the route you typically take to get to your psychology classroom. If a hallway along the way were blocked off for repairs, you would use your cognitive map of the building to come up with an alternative route to class. Tolman showed experimentally that rats, like people, seem to form cognitive maps (Tolman, 1948). And, like us, rats can use their cognitive maps to come up with an alternative route to a goal when the customary route is blocked (Tolman & Honzik, 1930a).

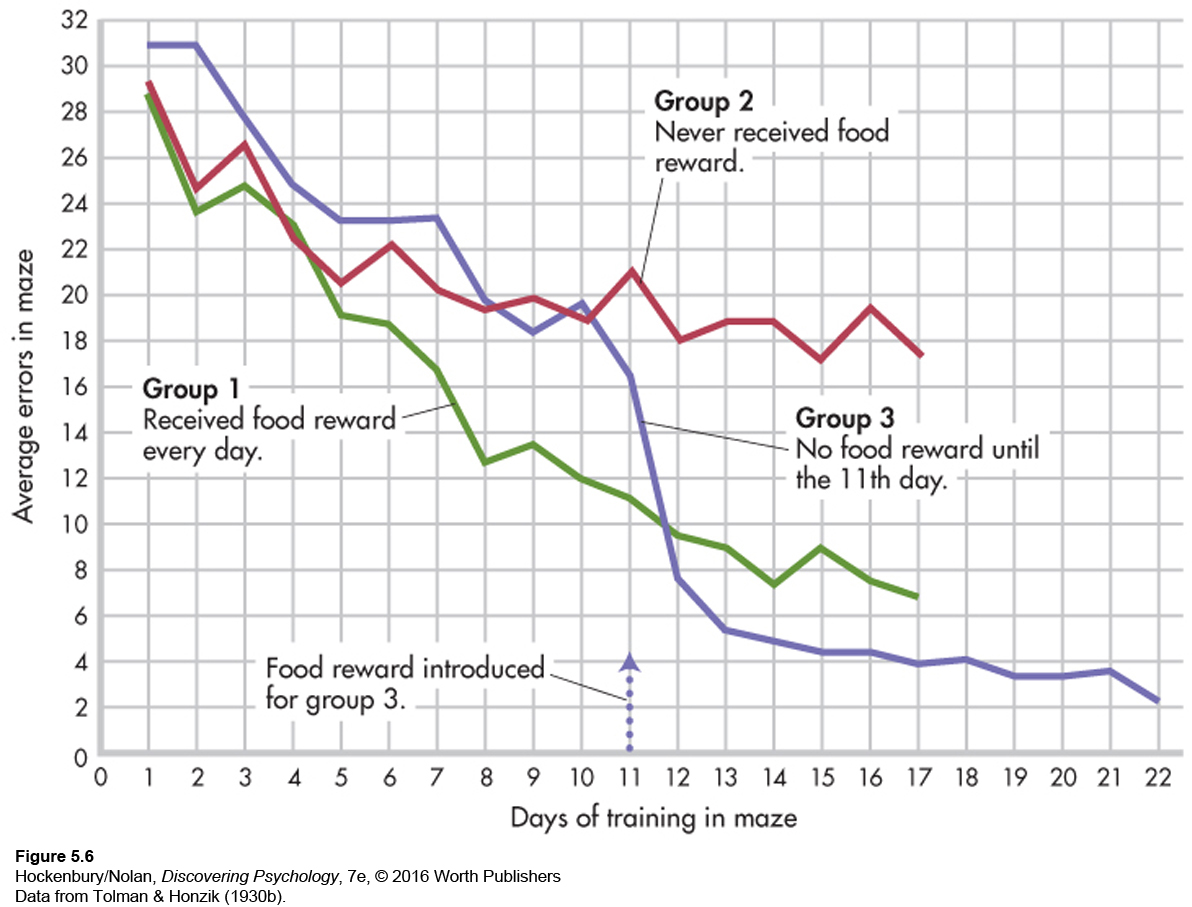

Tolman challenged the prevailing behaviorist model on another important point. According to Thorndike, for example, learning would not occur unless the behavior was “strengthened,” or “stamped in,” by a rewarding consequence. But Tolman showed that this was not necessarily the case. In a classic experiment, three groups of rats were put in the same maze once a day for several days (Tolman & Honzik, 1930b). For group 1, a food reward awaited the rats at the end of the maze. Their performance in the maze steadily improved; the number of errors and the time it took the rats to reach the goal box showed a steady decline with each trial. The rats in group 2 were placed in the maze each day with no food reward. They consistently made many errors, and their performance showed only slight improvement. The performance of the rats in groups 1 and 2 was exactly what the traditional behaviorist model would have predicted.

Now consider the behavior of the rats in group 3. These rats were placed in the maze with no food reward for the first 10 days of the experiment. Like the rats in group 2, they made many errors as they wandered about the maze. But, beginning on day 11, they received a food reward at the end of the maze. As you can see in Figure 5.6, there was a dramatic improvement in group 3’s performance from day 11 to day 12. Once the rats had discovered that food awaited them at the end of the maze, they made a beeline for the goal. On day 12, the rats in group 3 ran the maze with very few errors, improving their performance to the level of the rats in group 1 that had been rewarded on every trial!

Tolman concluded that reward—or reinforcement—

From these and other experiments, Tolman concluded that learning involves the acquisition of knowledge rather than simply changes in outward behavior. According to Tolman (1932), an organism essentially learns “what leads to what.” It learns to “expect” that a certain behavior will lead to a particular outcome in a specific situation.

Tolman is now recognized as an important forerunner of modern cognitive learning theorists (Gleitman, 1991; Olton, 1992). Many contemporary cognitive learning theorists follow Tolman in their belief that operant conditioning involves the cognitive representation of the relationship between a behavior and its consequence. Today, operant conditioning is seen as involving the cognitive expectancy that a given consequence will follow a given behavior (Bouton, 2007; Dickinson & Balleine, 2000).

Learned Helplessness

EXPECTATIONS OF FAILURE AND LEARNING TO QUIT

Cognitive factors, particularly the role of expectation, are involved in another learning phenomenon, called learned helplessness. Learned helplessness was discovered by accident. Psychologists were trying to find out if classically conditioned responses would affect the process of operant conditioning in dogs. The dogs were strapped into harnesses and then exposed to a tone (the neutral stimulus) paired with an unpleasant but harmless electric shock (the UCS), which elicited fear (the UCR). After conditioning, the tone alone—

In the classical conditioning setup, the dogs were unable to escape or avoid the shock. But the next part of the experiment involved an operant conditioning procedure in which the dogs could escape the shock. The dogs were transferred to a special kind of operant chamber called a shuttlebox, which has a low barrier in the middle that divides the chamber in half. In the operant conditioning setup, the floor on one side of the cage became electrified. To escape the shock, all the dogs had to do was learn a simple escape behavior: Jump over the barrier when the floor was electrified. Normally, dogs learn this simple operant very quickly.

However, when the classically conditioned dogs were placed in the shuttlebox and one side became electrified, the dogs did not try to jump over the barrier. Rather than perform the operant to escape the shock, they just lay down and whined. Why?

To Steven F. Maier and Martin Seligman, two young psychology graduate students at the time, the explanation of the dogs’ passive behavior seemed obvious. During the tone–

To test this idea, Seligman and Maier (1967) designed a simple experiment. Dogs were arranged in groups of three. The first dog received shocks that it could escape by pushing a panel with its nose. The second dog was “yoked” to the first and received the same number of shocks. However, nothing the second dog did could stop the shock—

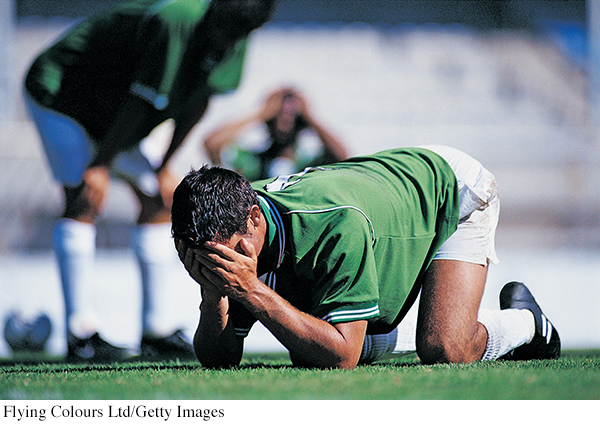

After this initial training, the dogs were transferred to the shuttlebox. As Seligman and Maier had predicted, the first and third dogs quickly learned to jump over the barrier when the floor became electrified. But the second dog, the one that had learned that nothing it did would stop the shock, made no effort to jump over the barrier. Because the dog had developed the cognitive expectation that its behavior would have no effect on the environment, it had become passive (Seligman & Maier, 1967). This phenomenon is called learned helplessness: exposure to inescapable and uncontrollable aversive events produces passive behavior (Maier & others, 1969).

Since these early experiments, learned helplessness has been demonstrated in many different species, including primates, cats, rats, and fish (LoLordo, 2001). Even cockroaches demonstrate learned helplessness in a cockroach-

In humans, numerous studies have found that exposure to uncontrollable, aversive events can produce passivity and learned helplessness. For example, college students who have experienced failure in previous academic settings may feel that academic tasks and setbacks are beyond their control. Thus, when faced with the demands of exams, papers, and studying, rather than rising to the challenge, they may experience feelings of learned helplessness (Au & others, 2010). If a student believes that academic tasks are unpleasant, unavoidable, and beyond her control, even the slightest setback can trigger a sense of helpless passivity. Such students may be prone to engage in self-

How can learned helplessness be overcome? In their early experiments, Seligman and Maier discovered that if they forcibly dragged the dogs over the shuttlebox barrier when the floor on one side became electrified, the dogs would eventually overcome their passivity and begin to jump over the barrier on their own (LoLordo, 2001; Seligman, 1992). For students who experience academic learned helplessness, establishing a sense of control over their schoolwork is the first step. Learning about course requirements and assignments, and setting modest goals that can be successfully met, can help students begin to acquire a sense of mastery over environmental challenges (Glynn & others, 2005).

Since the early demonstrations of learned helplessness in dogs, the notion of learned helplessness has undergone several revisions and refinements (Abramson & others, 1978; Gillham & others, 2001). Learned helplessness has been shown to play a role in psychological disorders, particularly depression, and in the ways that people respond to stressful events. Learned helplessness has also been applied in such diverse fields as management, sales, and health psychology (Wise & Rosqvist, 2006). In Chapter 12 on stress, health, and coping, we will take up the topic of learned helplessness again.

Operant Conditioning and Biological Predispositions

MISBEHAVING CHICKENS

Skinner and other behaviorists firmly believed that the general laws of operant conditioning applied to all animal species—

Pigeon, rat, monkey, which is which? It doesn’t matter. Of course, these species have behavioral repertoires which are as different as their anatomies. But once you have allowed for differences in the ways in which they make contact with the environment, and in the ways in which they act upon the environment, what remains of their behavior shows astonishingly similar properties.

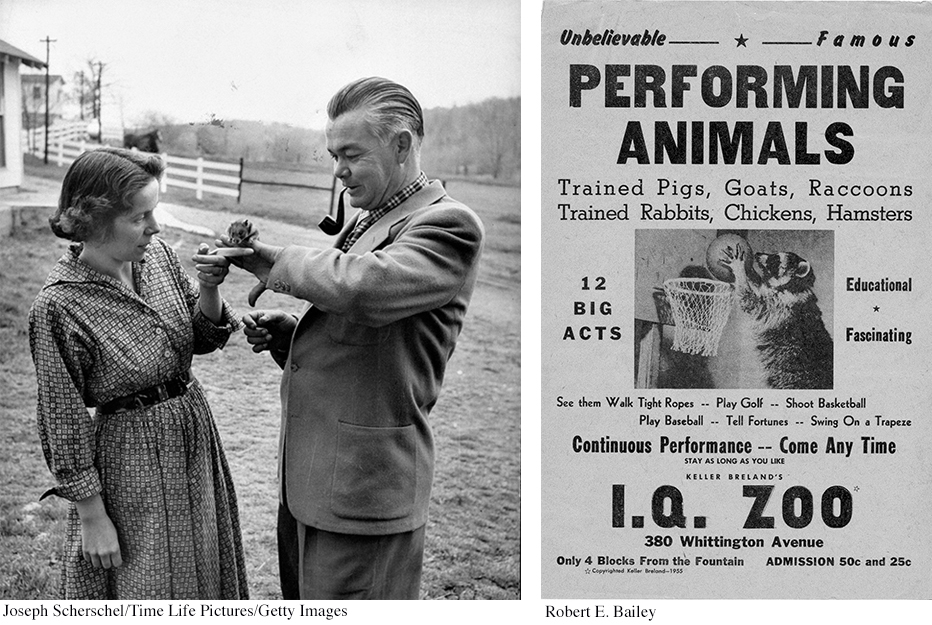

However, psychologists studying operant conditioning, like those studying classical conditioning, found that an animal’s natural behavior patterns could influence the learning of new behaviors. Consider the experiences of Keller and Marian Breland, two of Skinner’s students at the University of Minnesota. The Brelands established a successful business training animals for television commercials, trade shows, fairs, and even displays in department stores (Breland & Breland, 1961). Using operant conditioning, the Brelands trained thousands of animals of many different species to perform all sorts of complex tricks (Bihm & others, 2010a, b).

But the Brelands weren’t always successful in training the animals. For example, they tried to train a chicken to play baseball. The chicken learned to pull a loop that activated a swinging bat. After hitting the ball, the chicken was supposed to run to first base. The chicken had little trouble learning to pull the loop, but instead of running to first base, the chicken would chase the ball.

The Brelands also tried to train a raccoon to pick up two coins and deposit them into a metal box. The raccoon easily learned to pick up the coins but seemed to resist putting them into the box. Like a furry little miser, it would rub the coins together. And rather than dropping the coins in the box, it would dip the coins in the box and take them out again. As time went on, this behavior became more persistent, even though the raccoon was not being reinforced for it. In fact, the raccoon’s “misbehavior” was actually preventing it from getting reinforced for correct behavior (Bailey & Bailey, 1993).

The Brelands noted that such nonreinforced behaviors seemed to reflect innate, instinctive responses. The chicken chasing the ball was behaving like a chicken chasing a bug. Raccoons in the wild instinctively clean and moisten their food by dipping it in streams or rubbing it between their forepaws. These natural behaviors interfered with the operant behaviors the Brelands were attempting to condition—

The biological predisposition to perform such natural behaviors was strong enough to overcome the lack of reinforcement. These instinctual behaviors also prevented the animals from engaging in the learned behaviors that would result in reinforcement. Clearly, reinforcement is not the sole determinant of behavior, and inborn or instinctive behavior patterns can interfere with the operant conditioning of arbitrary responses.

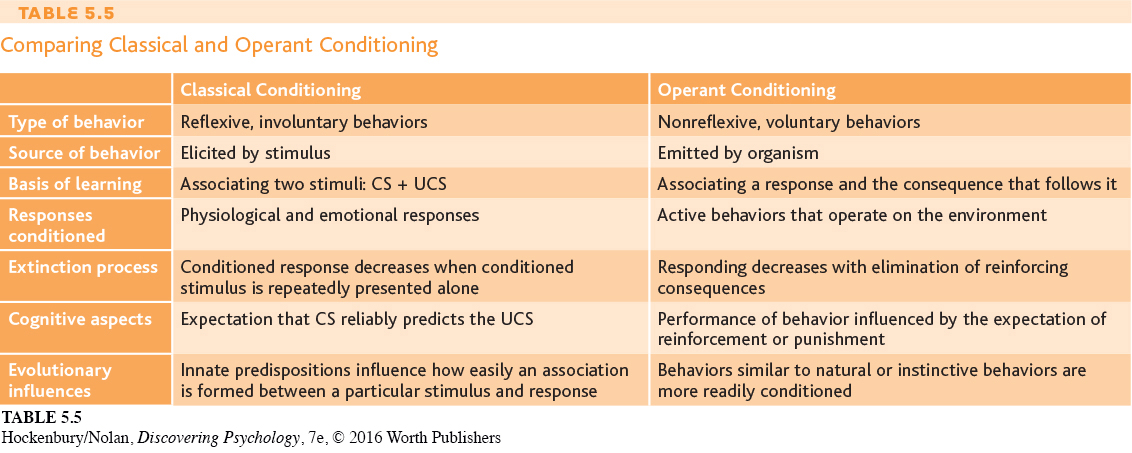

Before you go on to the next section, take a few minutes to review Table 5.5 and make sure you understand the differences between classical and operant conditioning.
