1.2 Research Methods Used by Psychologists

Regardless of their perspective, psychology researchers use the same research methods. These methods fall into three categories—

Descriptive Methods

descriptive methods Research methods whose main purpose is to provide objective and detailed descriptions of behavior and mental processes.

There are three types of descriptive methods: observational techniques, case studies, and survey research. The main purpose of all three methods is to provide objective and detailed descriptions of behavior and mental processes. However, these descriptive data only allow the researcher to speculate about cause–

naturalistic observation A descriptive research method in which the behavior of interest is observed in its natural setting, and the researcher does not intervene in the behavior being observed.

Observational techniques. Observational techniques exactly reflect their name. The researcher directly observes the behavior of interest. Such observation can be done in the laboratory. For example, children’s behavior can be observed using one-

participant observation A descriptive research method in which the observer becomes part of the group being observed.

Observational techniques do have a major potential problem, though. The observer may influence or change the behavior of those being observed. This is why observers must remain as unobtrusive as possible, so that the results won’t be contaminated by their presence. To overcome this possible shortcoming, researchers use participant observation. In participant observation, the observer becomes part of the group being observed. Sometimes naturalistic observation studies that start out with unobtrusive observation end up as participant observation studies. For example, Dian Fossey’s study of gorillas turned into participant observation when she was finally accepted as a member of the group. However, in most participant observation studies, the observer begins the study as a participant, whether in a laboratory or natural setting. You can think of this type of study as comparable to doing undercover work. A famous example of such a study involved a group of people posing as patients with symptoms of a major mental disorder to see if doctors at psychiatric hospitals could distinguish them from real patients (Rosenhan, 1973). According to Rosenhan, it turned out that the doctors and staff couldn’t do so, but the patients could. Once admitted, these “pseudopatients” acted normally and asked to be released. We will find out what happened to them in Chapter 10, on abnormal psychology.

case study A descriptive research method in which the researcher studies an individual in depth over an extended period of time.

Case studies. Detailed observation is also involved in a case study. In a case study, the researcher studies an individual in depth over an extended period of time. In brief, the researcher attempts to learn as much as possible about the individual being studied. A life history for the individual is developed, and data for a variety of tests are collected. The most common use of case studies is in clinical settings with patients suffering specific deficits or problems. The main goal of a case study is to gather information that will help in the treatment of the patient. The results of a case study cannot be generalized to the entire population. They are specific to the individual that has been studied. However, case study data do allow researchers to develop hypotheses that can then be tested in experimental research. A famous example of such a case study is that of the late Henry Molaison, a person with amnesia (Scoville & Milner, 1957). He was studied by nearly 100 investigators (Corkin, 2002) and is often referred to as the most studied individual in the history of neuroscience (Squire, 2009). For confidentiality purposes while he was alive (he died in 2008 at the age of 82), only his initials, H. M., were used to identify him in the hundreds of studies that he participated in for over five decades. Thus, we will refer to him as H. M. His case will be discussed in more detail in Chapter 5, Memory, but let’s consider some of his story here to illustrate the importance of case studies in the development of hypotheses and the subsequent experimental work to test these hypotheses.

For medical reasons, H. M. had his hippocampus (a part of the brain below the cortex) and the surrounding areas surgically removed at a young age. His case study included testing his memory capabilities in depth after the operation. Except for some amnesia for a period preceding his surgery (especially events in the days immediately before the surgery), he appeared to have normal memory for information that he had learned before the operation, but he didn’t seem to be able to form any new memories. For example, if he didn’t know you before his operation, he would never be able to remember your name regardless of how many times you met with him. He could read a magazine over and over again without ever realizing that he had read it before. He couldn’t remember what he had eaten for breakfast. Such memory deficits led to the hypothesis that the hippocampus plays an important role in the formation of new memories; later experimental research confirmed this hypothesis (Cohen & Eichenbaum, 1993). We will learn exactly what role the hippocampus plays in memory in Chapter 5. Remember, researchers cannot make cause–

survey research A descriptive research method in which the researcher uses questionnaires and interviews to collect information about the behavior, beliefs, and attitudes of particular groups of people.

Survey research. The last descriptive method is one that you are most likely already familiar with, survey research. You have probably completed surveys either online, over the phone, via the mail, or in person during an interview. Survey research uses questionnaires and interviews to collect information about the behavior, beliefs, and attitudes of particular groups of people. It is assumed in survey research that people are willing and able to answer the survey questions accurately. However, the wording, order, and structure of the survey questions may lead the participants to give biased answers (Schwartz, 1999). For example, survey researchers need to be aware of the social desirability bias, our tendency to respond in socially approved ways that may not reflect what we actually think or do. This means that questions need to be constructed carefully to minimize such biases. Developing a well-

population The entire group of people that a researcher is studying.

sample The subset of a population that actually participates in a research study.

Another necessity in survey research is surveying a representative sample of the relevant population, the entire group of people being studied. For many reasons (such as time and money), it is impossible to survey every person in the population. This is why the researcher only surveys a sample, the subset of people in a population participating in a study. For these sample data to be meaningful, the sample has to be representative of the larger relevant population. If you don’t have a representative sample, then generalization of the survey findings to the population is not possible.

One ill-

random sampling A sampling technique that obtains a representative sample of a population by ensuring that each individual in a population has an equal opportunity to be in the sample.

In random sampling, each individual in the population has an equal opportunity of being in the sample. To understand the “equal opportunity” part of the definition, think about selecting names from a hat, where each name has an equal chance of being selected. In actuality, statisticians have developed procedures for obtaining a random sample that parallel selecting names randomly from a hat. Think about how you would obtain a random sample of first-

Correlational Studies

correlational study A research study in which two variables are measured to determine if they are related (how well either one predicts the other).

variable Any factor that can take on more than one value.

In a correlational study, two variables are measured to determine if they are related (how well either one predicts the other). A variable is any factor that can take on more than one value. For example, age, height, grade point average, and intelligence test scores are all variables. In conducting a correlational study, the researcher first gets a representative sample of the relevant population. Next, the researcher takes the two measurements on the sample. For example, the researcher could measure a person’s height and their weight.

correlation coefficient A statistic that tells us the type and the strength of the relationship between two variables. The sign of the coefficient (+ or –) indicates the type of correlation—

positive correlation A direct relationship between two variables.

The correlation coefficient and predictability. To see if the variables are related, the researcher calculates the correlation coefficient, a statistic that tells us the type and the strength of the relationship between two variables. Correlation coefficients range in value from –1.0 to +1.0. The sign of the coefficient, + or –, tells us the type of relationship, positive or negative. A positive correlation indicates a direct relationship between two variables—

negative correlation An inverse relationship between two variables.

A negative correlation is an inverse relationship between two variables—

The second part of the correlation coefficient is its absolute value, from 0 to 1.0. The strength of the correlation is indicated by its absolute value. Zero and absolute values near zero indicate no relationship. As the absolute value increases to 1.0, the strength of the relationship increases. Please note that the sign of the coefficient does not tell us anything about the strength of the relationship. Coefficients do not function like numbers on the number line, where positive numbers are greater than negative numbers. With correlation coefficients, only the absolute value of the number tells us about the relationship’s strength. For example, –.50 indicates a stronger relationship than +.25. As the strength of the correlation increases, researchers can predict the relationship with more accuracy. If the coefficient is + (or –) 1.0, we have perfect predictability. A correlation of +1.0 means that every change in one variable is accompanied by an equivalent change in the other variable in the same direction, and a correlation of –1.0 means that every change in one variable is accompanied by an equivalent change in the other variable in the opposite direction. Virtually all correlation coefficients in psychological research have an absolute value less than 1.0. Thus, we usually do not have perfect predictability so there will be exceptions to these relationships, even the strong ones. These exceptions do not, however, invalidate the general trends indicated by the correlations. They only indicate that the relationships are not perfect. Because the correlations are not perfect, such exceptions have to exist.

regression toward the mean The tendency for extreme or unusual values on one variable to be matched on average with less extreme values on the other variable when the two variables are not perfectly correlated.

mean The numerical average for a set of values.

It is also important to realize that when two variables are correlated, but imperfectly so, extreme values on one of the variables tend to be matched on average with less extreme values on the other variable. This is called regression toward the mean (the mean is the statistical name for the numerical average of a set of values). Probability theory tells us that an extreme value, either far above or below average, is likely to be followed by a value that is more consistent with the long-

Although regression toward the mean seems straightforward, we do not seem to recognize it when it is operating in our everyday lives. A good example is the widespread belief in the “Sports Illustrated jinx”—that it is bad luck for an athlete to be pictured on the cover of Sports Illustrated magazine because it will lead to a decline in the athlete’s performance afterward (Gilovich, 1991). Many athletes do suffer declines in their performance after being pictured on the cover, but the cause of this decline is regression toward the mean and not a jinx. The athletes pictured on the cover have accomplished outstanding performances in their respective sports. This is why they are on the cover. Regression toward the mean would predict that this extremely high level of performance would be followed by a more normal level of performance because performance levels of an athlete over time vary and thus are not perfectly correlated. Hence, such regression in performance would be expected. Failure to recognize regression toward the mean is not only the reason for belief in the Sports Illustrated jinx but also the reason for other superstitious beliefs that people hold and many other events that we all experience in our everyday lives (Kruger, Savitsky, & Gilovich, 1999; Lawson, 2002). Extreme events tend to be followed by more ordinary ones. When this happens, the important point to remember is that regression toward the mean is likely operating, and no extraordinary explanation is necessary. Not only do people fail to recognize regression toward the mean when it occurs, they also tend to make nonregressive or insufficiently regressive predictions (Gilovich, 1991; Kahneman & Tversky, 1973). Thus, when trying to explain or predict changes in scores, levels of performance, and other events over time, remember regression toward the mean is likely operating so extreme or extraordinary events will tend to be followed by more normal ones.

scatterplot A visual depiction of correlational data in which each data point represents the scores on the two variables for each participant.

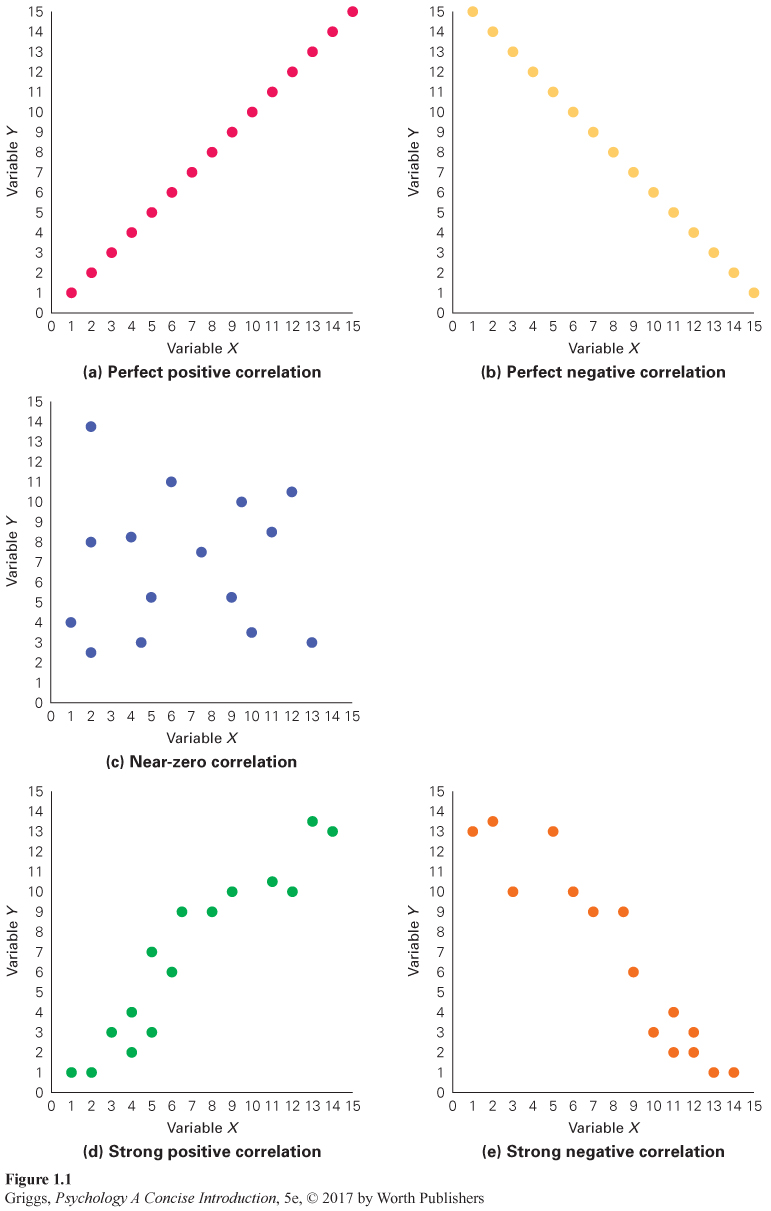

Scatterplots. A good way to understand the predictability of a coefficient is to examine a scatterplot—a visual depiction of correlational data. In a scatterplot, each data point represents the scores on two variables for each participant. Several sample scatterplots are presented in Figure 1.1. Correlational studies usually involve a large number of participants; therefore, there are usually a large number of data points in a scatterplot. Because those in Figure 1.1 are just examples to illustrate how to interpret scatterplots, there are only 15 points in each one. This means there were 15 participants in each of the hypothetical correlational studies leading to these scatterplots.

The scatterplots in Figure 1.1(a) and (b) indicate perfect 1.0 correlations—

The scatterplot in Figure 1.1(c) indicates no relationship between the two variables. There is no direction to the data points in this scatterplot. They are scattered all over in a random fashion. This means that we have a correlation near 0 and minimal predictability. Now consider Figure 1.1(d) and (e). First, you should realize that (d) indicates a positive correlation because of the direction of the data points from the bottom left to the top right and that (e) indicates a negative correlation because of the direction of the scatter from the top left to the bottom right. But what else does the scatter of the data points tell us? Note that the data points in (d) and (e) neither fall on the same line as in (a) and (b), nor are they scattered all about the graph with no directional component as in (c). Thus, scatterplots (d) and (e) indicate correlations with strengths somewhere between 0 and 1.0. As the amount of scatter of the data points increases, the strength of the correlation decreases. So, how strong would the correlations represented in (d) and (e) be? They would be fairly strong because there is not much scatter. Remember, as the amount of scatter increases, the predictability also decreases. When the scatter is maximal as in (c), the strength is near 0, and we have little predictability.

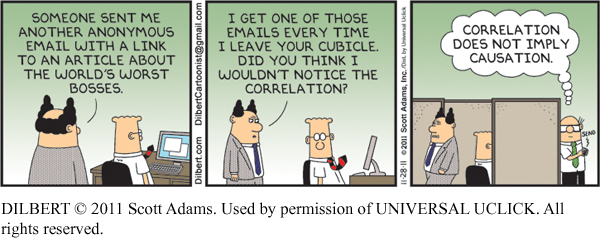

The third-

third-

To understand this point, let’s consider the negative correlation between self-

To make sure you understand the third-

spurious correlation A correlation in which the variables are related through their relationship with one or more other variables but not through a causal mechanism.

Now that you are aware of the third-

Given the examples of spurious correlations that we have discussed, such as ice cream sales and forest fires, you may think that such correlations do not have any significance in the real world, but they do. To help you understand why they do, I’ll describe a famous example from medical research in which the researchers were led astray by a spurious correlation in their search for the cause of a deadly disease, pellagra. In the early 1900s, pellagra victims (pellagrins) had a mortality rate of 1 in 3, and overall, the disease was killing roughly 100,000 people a year in the southeastern United States (Bronfenbrenner & Mahoney, 1975; Kraut, 2003). Medical researchers were scrambling to find its cause so that a treatment could be found. One correlational study found that the incidence of the disease was negatively correlated with the quality of sanitary conditions (Stanovich, 2010). In geographical areas with higher quality plumbing and sewerage, there was hardly any disease, and in areas with poor plumbing and sewerage, there was an extremely high incidence of the disease. Because this correlation fit the infectious disease model in which the disease is spread by a microorganism, these researchers forgot that there could be a spurious correlation stemming from the third-

But why should a dietary deficiency hypothesis be preferred over an infectious disease hypothesis? Both were congruent with the available correlational data. Most medical researchers ignored Goldberger’s proposal and continued to pursue the infectious disease hypothesis. Given the urgency of the situation, with thousands dying from the disease, a frustrated Goldberger felt compelled to squash that hypothesis once and for all by exposing himself and some volunteers to its proposed cause in a series of dramatic demonstrations. He, his wife, and 14 volunteers engaged in a series of what were termed “filth parties” because the participants were “feasting on filth” (Kraut, 2003, p. 147). Urine, fecal matter, and liquid feces were taken from pellagrins and then were mixed with wheat flour and formed into dough balls that were swallowed by Goldberger and the volunteers (Kraut, 2003). For complete refutation of the infectious disease hypothesis, they also mixed scabs taken from the rashes of pellagrins into the dough balls, allowed themselves to be injected with blood taken from pellagrins, and applied secretions from the throats and noses of pellagrins with swabs to their own noses and throats. What happened? No one developed pellagra. The infectious disease hypothesis was put to rest. You are likely wondering why Goldberger and these volunteers had so much faith in the dietary deficiency hypothesis that they would participate in these “filthy” demonstrations. Goldberger had collected enough supporting data from dietary studies that he had conducted at orphanages, a state prison, and a state asylum to convince his wife and the volunteers, but not his opponents, that he was right (Kraut, 2003). Sadly, Goldberger died from kidney cancer in 1929 before the specific dietary cause of pellagra, a niacin deficiency, was identified in the 1930s.

In sum, it is important to realize that correlational studies only allow researchers to develop hypotheses about cause–

Experimental Research

random assignment A control measure in which participants are randomly assigned to groups in order to equalize participant characteristics across the various groups in an experiment.

The key aspect of experimental research is that the researcher controls the experimental setting. The only factor that varies is what the researcher manipulates. It is this control that allows the researcher to make cause–

Please note the differences between random assignment and random sampling. Random sampling is a technique in which a sample of participants that is representative of a population is obtained. Hence it is used not only in experiments but also in other research methods such as correlational studies and surveys. Random assignment is only used in experiments. It is a control measure in which the researcher randomly assigns the participants in the sample to the various groups or conditions in an experiment. Random sampling allows you to generalize your results to the relevant population; random assignment controls for possible influences of individual characteristics of the participants on the behavior(s) of interest in an experiment. These differences between random sampling and random assignment are summarized in Table 1.2.

| Random Sampling | Random Assignment |

|---|---|

| A sampling technique in which a sample of participants that is representative of the population is obtained | A control measure in which participants in a sample are randomly assigned to the groups or conditions in an experiment |

| Used in experiments and some other research methods such as correlational studies and surveys | Used only in experiments |

| Allows researcher to generalize the findings to the relevant population | Allows researcher to control for possible influences of individual characteristics of the participants on the behavior(s) of interest |

independent variable In an experiment, the variable that is a hypothesized cause and thus is manipulated by the experimenter.

dependent variable In an experiment, a variable that is hypothesized to be affected by the independent variable and thus is measured by the experimenter.

experiment A research method in which the researcher manipulates one or more independent variables and measures their effect on one or more dependent variables while controlling other potentially relevant variables.

Designing an experiment. When a researcher designs an experiment, the researcher begins with a hypothesis (the prediction to be tested) about the cause–

experimental group In an experiment, the group exposed to the independent variable.

control group In an experiment, the group not exposed to the independent variable.

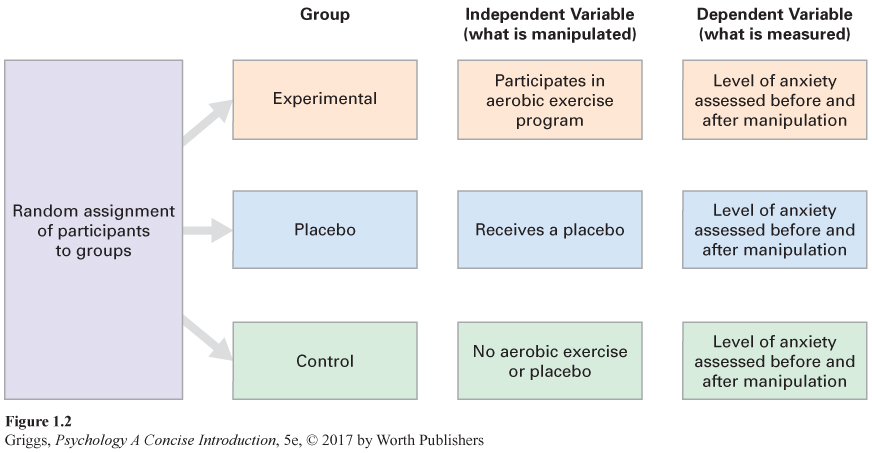

Let’s consider the simplest experiment first—

operational definition A description of the operations or procedures that a researcher uses to manipulate or measure a variable.

The independent and dependent variables in an experiment must be operationally defined. An operational definition is a description of the operations or procedures the researcher uses to manipulate or measure a variable. In our sample experiment, the operational definition of aerobic exercise would include the type and the duration of the activity. For level of anxiety, the operational definition would describe the way the anxiety variable was measured (for example, a participant’s score on a specified anxiety scale). Operational definitions not only clarify a particular experimenter’s definitions of variables but also allow other experimenters to attempt to replicate the experiment more easily, and replication is the cornerstone of science (Moonesinghe, Khoury, & Janssens, 2007).

placebo effect Improvement due to the expectation of improving because of receiving treatment.

placebo An inactive pill or a sham treatment that has no known effects.

Let’s go back to our aerobic exercise experiment. We have our experimental group and our control group, but this experiment really requires a second control group. The first control group (the group not participating in the aerobic exercise program) provides a baseline level of anxiety to which the anxiety of the experimental group can then be compared. In other words, it controls for changes over time in the level of anxiety not due to aerobic exercise, such as regression toward the mean. However, we also need to control for what is called the placebo effect—improvement due to the expectation of improving because of receiving treatment. The treatment involved in the placebo effect, however, only involves receiving a placebo—an inactive pill or a sham treatment that has no known effects. The placebo effect can arise not only from a conscious belief in the treatment but also from subconscious associations between recovery and the experience of being treated (Niemi, 2009). For example, stimuli that a patient links with getting better, such as a doctor’s white lab coat or the smell of an examining room, may induce some improvement in the patient’s condition. In addition, giving a placebo a popular medication brand name, prescribing more frequent doses, using placebo injections rather than pills, or indicating that it is expensive can boost the effect of a placebo (Niemi, 2009; Stewart-

You might be puzzled by physicians prescribing placebos, but consider the following. Combining the research finding that the placebo response to pain is very large (Kam-

nocebo effect A negative placebo effect due to the expectation of adverse consequences from receiving treatment.

The placebo (Latin for “I will please”) effect, however, does have an evil twin, the nocebo (Latin for “I will harm”) effect, whereby expectation of a negative outcome due to treatment leads to adverse effects (Benedetti, Lanotte, Lopiano, & Colloca, 2007; Kennedy, 1961). Expectations can also do harm; hence, the nocebo effect is sometimes referred to as a negative placebo effect. For example, when a patient anticipates a drug’s possible side effects, he can suffer them even if the drug is a placebo. Because of ethical reasons, the nocebo effect has not been studied nearly as much as the placebo effect, but recently Häuser, Hansen, and Enck (2012) reviewed 31 studies that clearly demonstrated the nocebo effect in clinical practice. They concluded that the nocebo effect creates an ethical dilemma for physicians and therapists—

placebo group A control group of participants who believe they are receiving treatment, but who are only receiving a placebo.

The reduction of anxiety in the experimental group participants in our aerobic exercise experiment may be partially or completely due to a placebo effect. This is why researchers add a control group called the placebo group to control for the possible placebo effect. A placebo group is a group of participants who believe they are receiving treatment, but they are not. They get a placebo. For example, the participants in a placebo group in the aerobic exercise experiment would be told that they are getting an antianxiety drug, but they would only get a placebo (in this case, a pill that has no active ingredient). The complete design for the aerobic exercise experiment, including the experimental, placebo, and control groups, is shown in Figure 1.2 For the experimenter to conclude that there is a placebo effect, the reduction of anxiety in the placebo group would have to be significantly greater than the reduction for the control group. This is because the control group provides a baseline of the reduction in symptom severity over time caused by factors other than that caused by the placebo (Benedetti, 2014). A good example of such a factor is one that we discussed earlier in the chapter, regression toward the mean. In this example, it would translate to your anxiety regressing naturally toward its normal level over time. If a control group was not included in the study, then the measurement of the placebo effect would be confounded because it would include the improvement due to these other factors and not just the placebo. Similarly, for the experimenter to conclude that the reduction of anxiety in the experimental group is due to aerobic exercise and not a placebo effect or other factors leading to a reduction in anxiety, the reduction would have to be significantly greater than that observed for the placebo and control groups because the reduction in the experimental group is due not only to the effect of the aerobic exercise but also to a placebo effect created by the expectation of getting better by engaging in the aerobic exercise and to natural factors leading the body to reduce anxiety and return to its normal state.

inferential statistical analyses Statistical analyses that allow researchers to draw conclusions about the results of a study by determining the probability that the results are due to random variation (chance). The results are statistically significant if this probability is .05 or less.

Now you may be wondering what is meant by “significantly greater.” This is where statistical analysis enters the scene. We use what are called inferential statistical analyses—statistical analyses that allow researchers to draw conclusions about the results of their studies. Such analyses tell the researcher the probability that the results of the study are due to random variation (chance). Obviously, the experimenter would want this probability to be low. Remember, the experimenter’s hypothesis is that the manipulation of the independent variable (not chance) is what causes the dependent variable to change. The scientific community has agreed that a “significant” finding is one that has a probability of .05 (1/20) or less that it is due to chance. Possibly, this acceptance standard is too lenient. A recent study that attempted to replicate 100 research findings reported in top cognitive and social psychology journals failed to replicate over 60% of the findings, and when the findings were replicated, the size of the observed effects were on average smaller (Open Science Collaboration, 2015; cf. Gilbert, King, Pettigrew, & Wilson, 2016). Although many factors are involved in this large-

Statistical significance tells us the probability that a finding did not occur by chance, but it does not insure that the finding has practical significance or value in our everyday world. A statistically significant finding with little practical value sometimes occurs when very large samples are used in a study. With such samples, very small differences among groups may be significant. Belmont and Marolla’s (1973) finding of a birth-

double-

The aerobic exercise experiment would also need to include another control measure, the double-

meta-

Now let’s think about experiments that are more complex than our sample experiment with its one independent variable (aerobic exercise) and two control groups. In most experiments, the researcher examines multiple values of the independent variable. With respect to the aerobic exercise variable, the experimenter might examine the effects of different amounts or different types of aerobic exercise. Such manipulations would provide more detailed information about the effects of aerobic exercise on anxiety. An experimenter might also manipulate more than one independent variable. For example, an experimenter might manipulate diet as well as aerobic exercise. Different diets (high-

The various research methods that have been discussed are summarized in Table 1.3. Their purposes and data-

| Research Method | Goal of Method | How Data Are Collected |

|---|---|---|

| Laboratory observation | Description | Unobtrusively observe behavior in a laboratory setting |

| Naturalistic observation | Description | Unobtrusively observe behavior in its natural setting |

| Participant observation | Description | Observer becomes part of the group whose behavior is being observed |

| Case study | Description | Study an individual in depth over an extended period of time |

| Survey | Description | Representative sample of a group completes questionnaires or interviews to determine behavior, beliefs, and attitudes of the group |

| Correlational study | Prediction | Measure two variables to determine whether they are related |

| Experiment | Explanation | Manipulate one or more independent variables in a controlled setting to determine their impact on one or more measured dependent variables |

Section Summary

Research methods fall into three categories—

Descriptive methods only allow description, but correlational studies allow the researcher to make predictions about the relationships between variables. In a correlational study, two variables are measured and these measurements are compared to see if they are related. A statistic, the correlation coefficient, tells us both the type of the relationship (positive or negative) and the strength of the relationship. The sign of the coefficient (+ or –) tells us the type, and the absolute value of the coefficient (0 to 1.0) tells us the strength. Zero and values near zero indicate no relationship. As the absolute value approaches 1.0, the strength increases. Correlational data may also be depicted in scatterplots. A positive correlation is indicated by data points that extend from the bottom left of the plot to the top right. Scattered data points going from the top left to the bottom right indicate a negative correlation. The strength is reflected in the amount of scatter—

To draw cause–

2

Question 1.3

.

Explain why the results of a case study cannot be generalized to a population.

The results of a case study cannot be generalized to a population because they are specific to the individual who has been studied. To generalize to a population, you need to include a representative sample of the population in the study. However, the results of a case study do allow the researcher to develop hypotheses about cause–

Question 1.4

.

Explain the differences between random sampling and random assignment.

Random sampling is a method for obtaining a representative sample from a population. Random assignment is a control measure for assigning the members of a sample to groups or conditions in an experiment. Random sampling allows the researcher to generalize the results from the sample to the population; random assignment controls for individual characteristics across the groups in an experiment. Random assignment is used only in experiments, but random sampling is used in experiments and some other research methods such as correlational studies and surveys.

Question 1.5

.

Explain how the scatterplots for correlation coefficients of +.90 and –.90 would differ.

There would be the same amount of scatter of the data points in each of the two scatterplots because they are equal in strength (.90). In addition, because they are strong correlations, there would not be much scatter. However, the scatter of data points in the scatterplot for +.90 would go from the bottom left of the plot to the top right; the scatter for –.90 would go from the top left of the plot to the bottom right. Thus, the direction of the scatter would be different in the two scatterplots.

Question 1.6

.

Waldman, Nicholson, Adilov, and Williams (2008) found that autism prevalence rates among school-

Some possible third variables that could serve as environmental triggers for autism among genetically vulnerable children stem from the children being in the house more and spending less time outdoors because of the high rates of precipitation. According to the authors of the study, such variables would include increased television and video viewing, decreased vitamin D levels because of less exposure to sunlight, and increased exposure to household chemicals. In addition, there may be chemicals in the atmosphere that are transported to the surface by the precipitation. All of these variables could serve as third variables and possibly account for the correlation.

Question 1.7

.

Explain why a double-

The double-