1.3 The Scientific Method

KEY THEME

The scientific method is a set of assumptions, attitudes, and procedures that guides all scientists, including psychologists, in conducting research.

KEY QUESTIONS

What assumptions and attitudes are held by psychologists?

What characterizes each step of the scientific method?

How does a hypothesis differ from a theory?

Whatever their approach or specialty, psychologists who do research are scientists. And, like other scientists, they rely on the scientific method to guide their research. The scientific method refers to a set of assumptions, attitudes, and procedures that guide researchers in creating questions to investigate, in generating evidence, and in drawing conclusions.

scientific method

A set of assumptions, attitudes, and procedures that guide researchers in creating questions to investigate, in generating evidence, and in drawing conclusions.

Like all scientists, psychologists are guided by the basic scientific assumption that events are lawful. When this scientific assumption is applied to psychology, it means that psychologists assume that behavior and mental processes follow consistent patterns. Psychologists are also guided by the assumption that events are explainable. Thus, psychologists assume that behavior and mental processes have a cause or causes that can be understood through careful, systematic study.

Psychologists are also open-minded. They are willing to consider new or alternative explanations of behavior and mental processes. However, their open-minded attitude is tempered by a healthy sense of scientific skepticism. That is, psychologists critically evaluate the evidence for new findings, especially those that seem contrary to established knowledge.

The Steps in the Scientific Method

SYSTEMATICALLY SEEKING ANSWERS

Like any science, psychology is based on verifiable or empirical evidence—evidence that is the result of objective observation, measurement, and experimentation. As part of the overall process of producing empirical evidence, psychologists follow the four basic steps of the scientific method. In a nutshell, these steps are:

1. Formulate a specific question that can be tested

2. Design a study to collect relevant data

3. Analyze the data to arrive at conclusions

4. Report the results

empirical evidence

Verifiable evidence that is based upon objective observation, measurement, and/or experimentation.

Following the basic guidelines of the scientific method does not guarantee that valid conclusions will always be reached. However, these steps help guard against bias and minimize the chances for error and faulty conclusions. Let’s look at some of the key concepts associated with each step of the scientific method.

STEP 1. FORMULATE A TESTABLE HYPOTHESIS

Once a researcher has identified a question or an issue to investigate, he or she must formulate a hypothesis that can be tested empirically. Formally, a hypothesis is a tentative statement that describes the relationship between two or more variables. A hypothesis is often stated as a specific prediction that can be empirically tested, such as “strong social networks are associated with greater well-being in college students.”

hypothesis

(high-POTH-uh-sis) A tentative statement about the relationship between two or more variables; a testable prediction or question.

A variable is simply a factor that can vary, or change. These changes must be capable of being observed, measured, and verified. The psychologist must provide an operational definition of each variable to be investigated. An operational definition defines the variable in very specific terms as to how it will be measured, manipulated, or changed. Operational definitions are important because many of the concepts that psychologists investigate—such as memory, happiness, or stress—can be measured in more than one way.

variable

A factor that can vary, or change, in ways that can be observed, measured, and verified.

operational definition

A precise description of how the variables in a study will be manipulated or measured.

For example, how would you test the hypothesis that “strong social networks are associated with greater well-being in college students”? To test that specific prediction, you would need to formulate an operational definition of each variable. How could you operationally define social networks? Well-being? What could you objectively observe and measure?

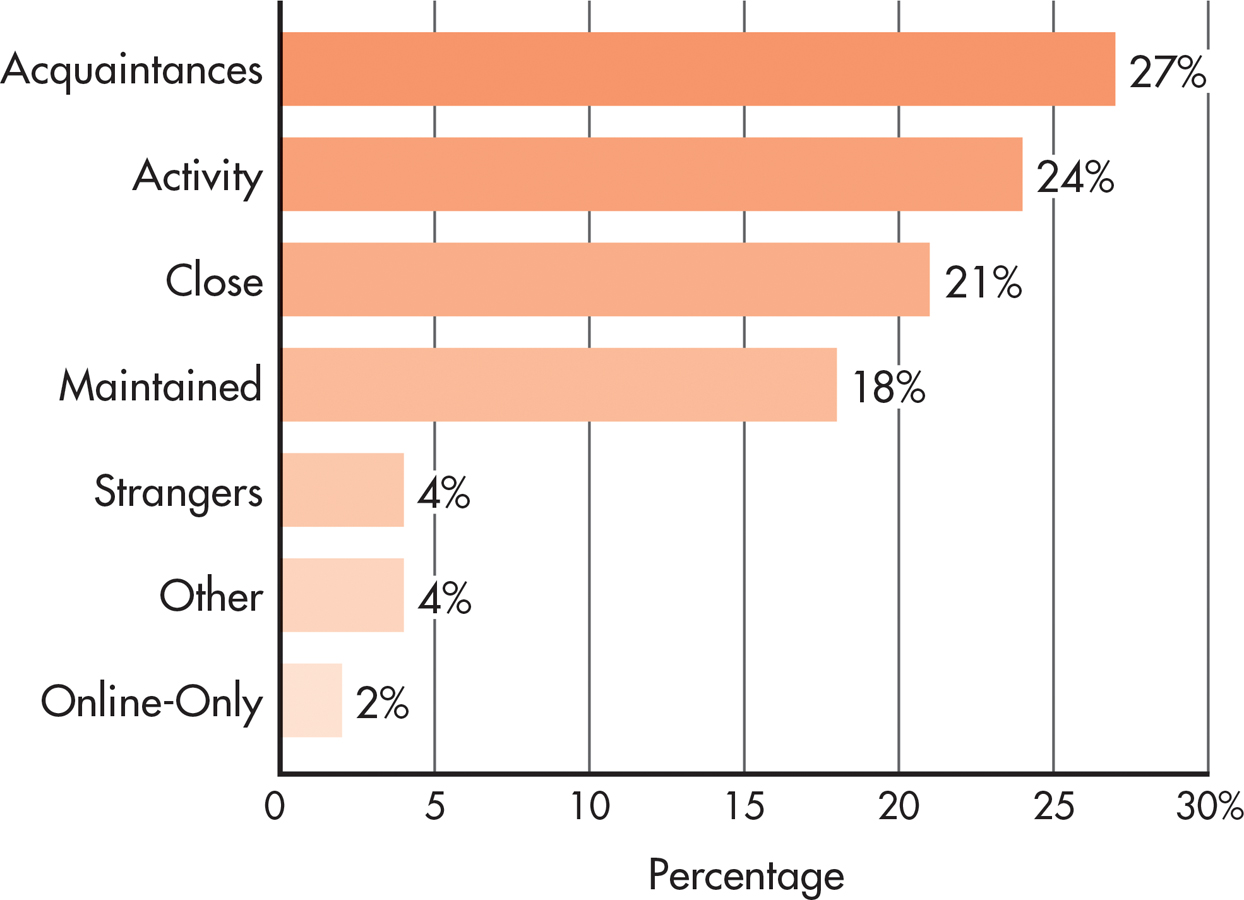

To investigate the impact of social networks on college students, Adriana Manago and her colleagues (2012) used Facebook data. They operationally defined network size as the participant’s number of Facebook friends. They asked participants to classify their Facebook friends into different categories, such as “close friends,” “acquaintances,” and “online” friends. FIGURE 1.2 shows the percentage of each type of friend in the participants’ social networks.

Source: Data from Manago & others (2012).

How was well-being operationally defined? Manago and her colleagues (2012) used a standard scale that measured life satisfaction. Students rated their agreement on a 5-point scale for nine statements like “I have a good life” and “I like the way things are going for me.” And what did Manago and her colleagues find? Students with larger networks were significantly happier with their lives.
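To see how operational definitions turn abstract concepts into numbers, consider the minimal Python sketch below. It is only an illustration, not the procedure Manago and her colleagues used: the participant data are invented, and the helper names `well_being_score` and `pearson_r` are hypothetical. Network size is defined as the number of Facebook friends, and well-being as the average of nine ratings on a 5-point scale. Averaging the ratings and using a Pearson correlation are both assumptions made for this example.

```python
from math import sqrt

# Hypothetical participants (invented for illustration): number of Facebook
# friends, plus ratings of nine life-satisfaction statements on a 1-5 scale.
participants = [
    (150, [3, 3, 2, 3, 4, 3, 3, 2, 3]),
    (320, [4, 3, 4, 4, 3, 4, 4, 3, 4]),
    (480, [4, 5, 4, 4, 5, 4, 4, 5, 4]),
    (90,  [2, 3, 2, 2, 3, 2, 3, 2, 2]),
    (610, [5, 4, 5, 4, 5, 5, 4, 5, 5]),
]

def well_being_score(ratings):
    """Operational definition of well-being: the mean of the nine ratings."""
    return sum(ratings) / len(ratings)

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Each variable is now a list of numbers that can be observed and compared.
network_size = [friends for friends, _ in participants]
well_being = [well_being_score(ratings) for _, ratings in participants]

r = pearson_r(network_size, well_being)
print(f"r = {r:.2f}")
```

Once each variable has been defined this precisely, another researcher could collect the same measurements and check the result. A different operational definition, such as counting only “close friends,” would change the numbers and possibly the conclusion.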

STEP 2. DESIGN THE STUDY AND COLLECT THE DATA

This step involves deciding which research method to use for collecting data. There are two basic types of designs used in research—descriptive and experimental. Each research approach answers different kinds of questions and provides different kinds of evidence.

Descriptive research includes research strategies for observing and describing behavior, including identifying the factors that seem to be associated with a particular phenomenon. The study by Adriana Manago and her colleagues (2012) on social networks and student well-being is just one example of descriptive research. Descriptive research answers the who, what, where, and when kinds of questions about behavior. Who engages in a particular behavior? What factors or events seem to be associated with the behavior? Where does the behavior occur? When does the behavior occur? How often? In the next section, we’ll discuss commonly used descriptive methods, including naturalistic observation, surveys, case studies, and correlational studies.

In contrast, experimental research is used to show that one variable causes change in a second variable. In an experiment, the researcher deliberately varies one factor, then measures the changes produced in a second factor. Ideally, all experimental conditions are kept as constant as possible except for the factor that the researcher systematically varies. Then, if changes occur in the second factor, those changes can be attributed to the variations in the first factor.

STEP 3. ANALYZE THE DATA AND DRAW CONCLUSIONS

Once observations have been made and measurements have been collected, the raw data need to be analyzed and summarized. Researchers use the methods of a branch of mathematics known as statistics to analyze, summarize, and draw conclusions about the data they have collected.

statistics

A branch of mathematics used by researchers to organize, summarize, and interpret data.

Researchers rely on statistics to determine whether their results support their hypotheses. They also use statistics to determine whether their findings are statistically significant. If a finding is statistically significant, it means that the results are not very likely to have occurred by chance. As a rule, statistically significant results in the predicted direction are taken as support for the hypothesis, although no single result proves it. Appendix A provides a more detailed discussion of the use of statistics in psychology research.

statistically significant

A mathematical indication that research results are not very likely to have occurred by chance.
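What does “not very likely to have occurred by chance” look like in practice? One way to picture it is a permutation test, sketched below in Python. This is just one of several possible techniques, chosen here because it makes the logic visible. The scores are invented, and the function name `permutation_p_value` is hypothetical. The idea is to shuffle the group labels many times and count how often chance alone produces a difference at least as large as the one actually observed.

```python
import random

def permutation_p_value(group_a, group_b, n_shuffles=10_000, seed=0):
    """Estimate how often random relabeling gives a mean difference at
    least as large as the observed one (two-sided)."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    n_a = len(group_a)

    def mean_gap(values):
        first, second = values[:n_a], values[n_a:]
        return abs(sum(first) / len(first) - sum(second) / len(second))

    pooled = list(group_a) + list(group_b)
    observed = mean_gap(pooled)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)  # pretend group membership was arbitrary
        # Small tolerance guards against floating-point rounding.
        if mean_gap(pooled) >= observed - 1e-12:
            at_least_as_extreme += 1
    return at_least_as_extreme / n_shuffles

# Hypothetical life-satisfaction scores (invented for illustration).
larger_networks = [4.1, 3.8, 4.4, 4.0, 4.6, 3.9, 4.3, 4.2]
smaller_networks = [3.2, 3.6, 3.0, 3.5, 3.1, 3.7, 3.3, 3.4]

p = permutation_p_value(larger_networks, smaller_networks)
print(f"p = {p:.4f}")
```

The value of `p` estimates the probability of seeing a difference this large if group membership made no real difference. Researchers often treat values below a conventional cutoff such as .05 as statistically significant. That cutoff is a convention assumed here, not a rule stated in this section.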

Keep in mind that even though a finding is statistically significant, it may not be practically significant. If a study involves a large number of participants, even small differences among groups of subjects may result in a statistically significant finding. But the actual average differences may be so small as to have little practical significance or importance.

For example, Reynol Junco (2012) surveyed nearly two thousand college students and found a statistically significant relationship between GPA and amount of time spent on Facebook: Students who spent a lot of time on Facebook tended to have lower grades than students who spent less time. However, the practical, real-world significance of this relationship was low: It turned out that a student had to spend 93 minutes per day more than the average of 106 minutes for the increased time to have even a small (.12 percentage points) impact on GPA. So remember that a statistically significant result is simply one that is not very likely to have occurred by chance. Whether the finding is significant in the everyday sense of being important is another matter altogether.
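The gap between statistical and practical significance can be sketched with a little arithmetic. The Python example below uses invented numbers, not Junco's data. It applies a simple two-group z-test, which assumes equal group sizes and a known, shared standard deviation. The function name `two_group_z_test` is hypothetical. The same small difference between groups is tested twice, once with a small sample and once with a very large one.

```python
from math import sqrt
from statistics import NormalDist

def two_group_z_test(mean_diff, sd, n_per_group):
    """Two-sided p-value for a difference between two group means,
    assuming equal group sizes and a known, shared standard deviation."""
    standard_error = sd * sqrt(2 / n_per_group)
    z = mean_diff / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical numbers: a difference of 0.1 standard deviations between groups.
mean_diff, sd = 0.1, 1.0
for n in (50, 5000):
    z, p_value = two_group_z_test(mean_diff, sd, n)
    effect_size = mean_diff / sd  # identical for both sample sizes
    print(f"n per group = {n}: effect size = {effect_size:.1f} SD, "
          f"z = {z:.2f}, p = {p_value:.4g}")
```

The size of the difference is the same in both runs. Only the number of participants changes, yet the larger sample produces a far smaller p-value. A tiny effect can therefore be statistically significant without being important in any everyday sense.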

A statistical technique called meta-analysis is sometimes used in psychology to analyze the results of many research studies on a specific topic. Meta-analysis involves pooling the results of several studies into a single analysis. By creating one large pool of data to be analyzed, meta-analysis can help reveal overall trends that may not be evident in individual studies.

meta-analysis

A statistical technique that involves combining and analyzing the results of many research studies on a specific topic in order to identify overall trends.

Meta-analysis is especially useful when a particular issue has generated a large number of studies with inconsistent results. For example, many studies have looked at the factors that predict success in college. British psychologists Michelle Richardson and her colleagues (2012) pooled the results of over 200 research studies investigating personal characteristics that were associated with success in college. “Success in college” was operationally defined as cumulative grade-point average (GPA). They found that motivational factors were the strongest predictor of college success, outweighing test scores, high school grades, and socioeconomic status. Especially important was a trait they called performance self-efficacy, the belief that you have the skills and abilities to succeed at academic tasks. We’ll talk more about self-efficacy in Chapter 8 on motivation and emotion.
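The pooling step in a meta-analysis can be sketched in a few lines of Python. The example below uses one common approach, fixed-effect inverse-variance weighting, in which more precise studies count for more. It is not necessarily the method Richardson and her colleagues used. The study values are invented, and the function name `pool_fixed_effect` is hypothetical.

```python
from math import sqrt

def pool_fixed_effect(studies):
    """Combine (effect_size, standard_error) pairs with inverse-variance
    weights, so that more precise studies carry more weight."""
    weights = [1 / se ** 2 for _, se in studies]
    total_weight = sum(weights)
    pooled = sum(w * effect for w, (effect, _) in zip(weights, studies)) / total_weight
    pooled_se = sqrt(1 / total_weight)
    return pooled, pooled_se

# Hypothetical studies with inconsistent results: (effect size, standard error).
studies = [(0.45, 0.20), (0.10, 0.15), (0.30, 0.05), (-0.05, 0.25)]

pooled, pooled_se = pool_fixed_effect(studies)
print(f"pooled effect = {pooled:.2f} (SE = {pooled_se:.3f})")
```

Notice that the individual studies disagree, and one even points in the opposite direction. The pooled estimate is pulled toward the most precise study, and its standard error is smaller than that of any single study. This is how a meta-analysis can reveal an overall trend that no individual study shows clearly.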

STEP 4. REPORT THE FINDINGS

For advances to be made in any scientific discipline, researchers must share their findings with other scientists. In addition to reporting their results, psychologists provide a detailed description of the study itself, including who participated in the study, how variables were operationally defined, how data were analyzed, and so forth.

Describing the precise details of the study makes it possible for other investigators to replicate, or repeat, the study. Replication is an important part of the scientific process. When a study is replicated and the same basic results are obtained again, scientific confidence that the results are accurate is increased. Conversely, if the replication of a study fails to produce the same basic findings, confidence in the original findings is reduced.

replicate

To repeat or duplicate a scientific study in order to increase confidence in the validity of the original findings.

Psychologists present their research at academic conferences or write a paper summarizing the study and submit it to one of the many psychology journals for publication. Before accepting papers for publication, most psychology journals send the paper to other knowledgeable psychologists to review and evaluate. If the study conforms to the principles of sound scientific research and contributes to the existing knowledge base, the paper is accepted for publication.

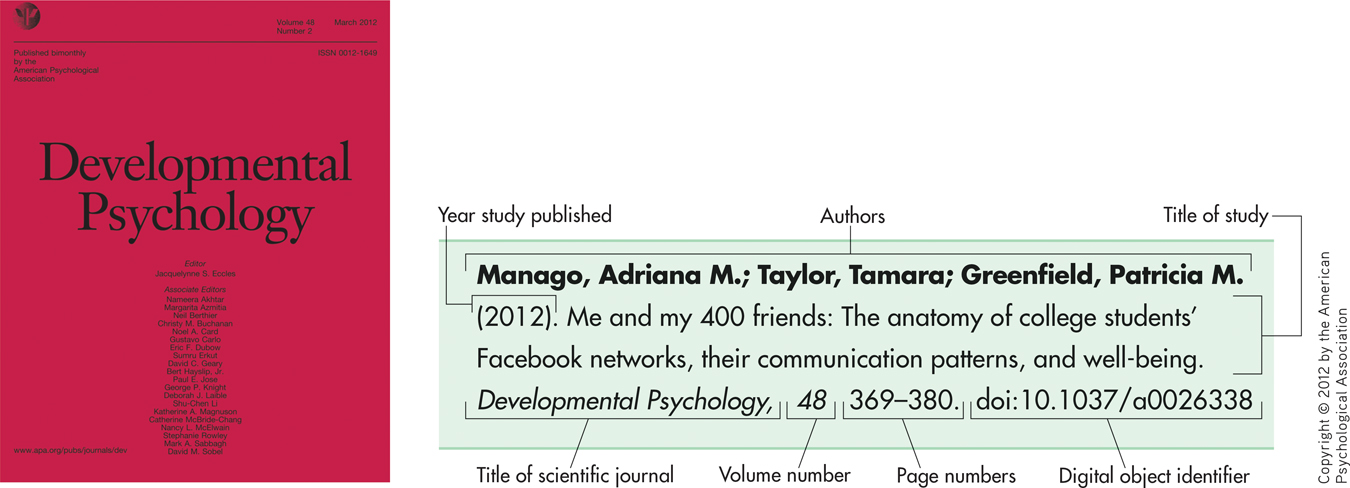

Throughout this text, you’ll see citations that look like the one you encountered in the discussion above on social networks and well-being: “(Manago & others, 2012).” These citations identify the sources of the research and ideas that are being discussed. The citation tells you the author or authors (Manago & others) of the study and the year (2012) in which the study was published. You can find the complete reference in the alphabetized References section at the back of this text. The complete reference lists the authors’ full names, the article title, the journal or book in which the article was published, and the DOI, or digital object identifier. The DOI is a permanent Internet “address” for journal articles and other digital works posted on the Internet.

FIGURE 1.3 shows you how to decipher the different parts of a typical journal reference.

Building Theories

INTEGRATING THE FINDINGS FROM MANY STUDIES

As research findings accumulate from individual studies, eventually theories develop. A theory, or model, is a tentative explanation that tries to account for diverse findings on the same topic. Note that theories are not the same as hypotheses. A hypothesis is a specific question or prediction to be tested. In contrast, a theory integrates and summarizes numerous research findings and observations on a particular topic. Along with explaining existing results, a good theory often generates new predictions and hypotheses that can be tested by further research (Higgins, 2004).

theory

A tentative explanation that tries to integrate and account for the relationship of various findings and observations.

As you encounter different theories, try to remember that theories are tools for explaining behavior and mental processes, not statements of absolute fact. Like any tool, the value of a theory is determined by its usefulness. A useful theory is one that furthers the understanding of behavior, allows testable predictions to be made, and stimulates new research. Often, more than one theory proves to be useful in explaining a particular area of behavior or mental processes, such as the development of personality or the experience of emotion.

It’s also important to remember that theories often reflect the self-correcting nature of the scientific enterprise. In other words, when new research findings challenge established ways of thinking about a phenomenon, theories are expanded, modified, and even replaced. Thus, as the knowledge base of psychology evolves and changes, theories evolve and change to produce more accurate and useful explanations of behavior and mental processes.

While the conclusions of psychology rest on empirical evidence gathered using the scientific method, the same is not true of pseudoscientific claims (J. C. Smith, 2010). As you’ll read in the Science Versus Pseudoscience box below, pseudosciences often claim to be scientific while ignoring the basic rules of science.

SCIENCE VERSUS PSEUDOSCIENCE

What Is a Pseudoscience?

The word pseudo means “fake” or “false.” Thus, a pseudoscience is a fake science. More specifically, a pseudoscience is a theory, method, or practice that promotes claims in ways that appear to be scientific and plausible even though supporting empirical evidence is lacking or nonexistent (Matute & others, 2011). Surveys have found that pseudoscientific beliefs are common among the general public (National Science Board, 2010).

pseudoscience

Fake or false science that makes claims based on little or no scientific evidence.

Do you remember Tyler from our Prologue? He wanted to know whether a magnetic bracelet could help him concentrate or improve his memory. We’ll use what we learned about magnet therapy to help illustrate some of the common strategies used to promote pseudosciences.

Magnet Therapy: What’s the Attraction?

The practice of applying magnets to the body to supposedly treat various conditions and ailments is called magnet therapy. Magnet therapy has been around for centuries. Today, Americans spend an estimated $500 million each year on magnetic bracelets, belts, vests, pillows, and mattresses. Worldwide, the sale of magnetic devices is estimated to be $5 billion per year (Winemiller & others, 2005).

The Internet has been a bonanza for those who market products like magnet therapy. Web sites hail the “scientifically proven healing benefits” of magnet therapy for everything from improving concentration and athletic prowess to relieving stress and curing Alzheimer’s disease and schizophrenia (e.g., Johnston, 2008; Parsons, 2007). Treating pain is the most commonly marketed use of magnet therapy. However, reviews of scientific research on magnet therapy consistently conclude that there is no evidence that magnets can relieve pain (National Standard, 2009; National Center for Complementary and Alternative Medicine, 2009).

But proponents of magnet therapy, like those of other pseudoscientific claims, use very effective strategies to create the illusion of scientifically validated products or procedures. Each of the ploys below should serve as a warning sign that you need to engage your critical and scientific thinking skills.

Strategy 1: Testimonials rather than scientific evidence

Pseudosciences often use testimonials or personal anecdotes as evidence to support their claims. Although they may be sincere and often sound compelling, testimonials are not acceptable scientific evidence. Testimonials lack the basic controls used in scientific research. Many different factors, such as the simple passage of time, could account for a particular individual’s response.

Strategy 2: Scientific jargon without scientific substance

Pseudoscientific claims are often packed with scientific jargon to make them seem more credible. An ad may be littered with scientific-sounding terms, such as “bio-magnetic balance,” that turn out to be meaningless when examined.

Strategy 3: Combining established scientific knowledge with unfounded claims

Pseudosciences often mention well-known scientific facts to add credibility to their unsupported claims. For example, the magnet therapy spiel often starts by referring to the properties of the earth’s magnetic field, the fact that blood contains minerals and iron, and so on. Unfortunately, it turns out that the iron in red blood cells is not attracted to magnets (Ritchie & others, 2012). Established scientific procedures may also be mentioned, such as magnetic resonance imaging (MRI). For the record, MRI scanners rely on an extremely powerful magnetic field combined with radio-frequency pulses, which has little in common with the weak static magnets found in magnetic jewelry.

Strategy 4: Irrefutable or nonfalsifiable claims

Consider this claim: “Magnet therapy restores the natural magnetic balance required by the body’s healing process.” How could you test that claim? An irrefutable or nonfalsifiable claim is one that cannot be disproved or tested in any meaningful way. The irrefutable claims of pseudosciences typically take the form of broad or vague statements that are essentially meaningless.

Strategy 5: Confirmation bias

Scientific conclusions are based on converging evidence from multiple studies, not a single study. Pseudosciences ignore this process and instead trumpet the findings of a single study that seems to support their claims. In doing so, they do not mention all the other studies that tested the same thing but yielded results that failed to support the claim. This illustrates confirmation bias—the tendency to seek out evidence that confirms an existing belief while ignoring evidence that contradicts or undermines the belief (J. C. Smith, 2010). When disconfirming evidence is pointed out, it is ignored, rationalized, or dismissed.

confirmation bias

The tendency to seek out evidence that confirms an existing belief while ignoring evidence that might contradict or undermine the belief.

Strategy 6: Shifting the burden of proof

In science, the responsibility for proving the validity of a claim rests with the person making the claim. Many pseudosciences, however, shift the burden of proof to the skeptic. If you express skepticism about a pseudoscientific claim, the pseudoscience advocate will challenge you to disprove their claim.

Strategy 7: Multiple outs

What happens when pseudosciences fail to deliver on their promised benefits? Typically, multiple excuses are offered. Privately, Tyler admitted that he hadn’t noticed any improvement in his ability to concentrate while wearing the bracelet his girlfriend gave him. But his girlfriend insisted that he simply hadn’t worn the bracelet long enough for the magnets to “clear his energy field.” Other reasons given when magnet therapy fails to work:

Magnets act differently on different body parts.

The magnet was placed in the wrong spot.

The magnets were the wrong type, size, shape, etc.

One of our goals in this text is to help you develop your scientific thinking skills so you’re better able to evaluate claims about behavior or mental processes, especially claims that seem farfetched or too good to be true. In this chapter, we’ll look at the scientific methods used to test hypotheses and claims. And in the Science Versus Pseudoscience boxes in later chapters, you’ll see how various pseudoscience claims have stood up to scientific scrutiny. We hope you enjoy this feature!