Part 1. Media and Culture 12e Extended Case Study

Introduction

Can We Trust Facebook with Our Personal Data?


The first half of 2018 was a rough one for Facebook. On the surface, things appeared to be going well. The company had more than 2.2 billion monthly users.1 Its 2017 revenue was $40.65 billion, more than twice as much as just two years earlier. Along with Google, Facebook had become a digital advertising powerhouse, with about 98 percent of its revenue coming from advertising.

Yet in recent years, there has been increasing public discussion in America about digital media companies growing too big and becoming too lax about protecting the security and privacy of their users. USA Today, for example, wrote that the big digital companies “have grown too big, too influential, too unmonitored—too much the Big Brother that dystopian stories warn about.”2

Of all the major digital companies, Facebook was the one with the most obvious missteps, and those missteps became serious stumbles by 2018.

First came the problem of Russian interference in the 2016 presidential campaign in the United States. In February 2018, the U.S. Justice Department’s special counsel indicted thirteen individuals connected to a Russian internet troll farm that placed at least three thousand Facebook ads to influence American voters and increase polarization on certain social issues. The Russian trolls were responsible for eighty thousand posts reaching 126 million people on Facebook.3

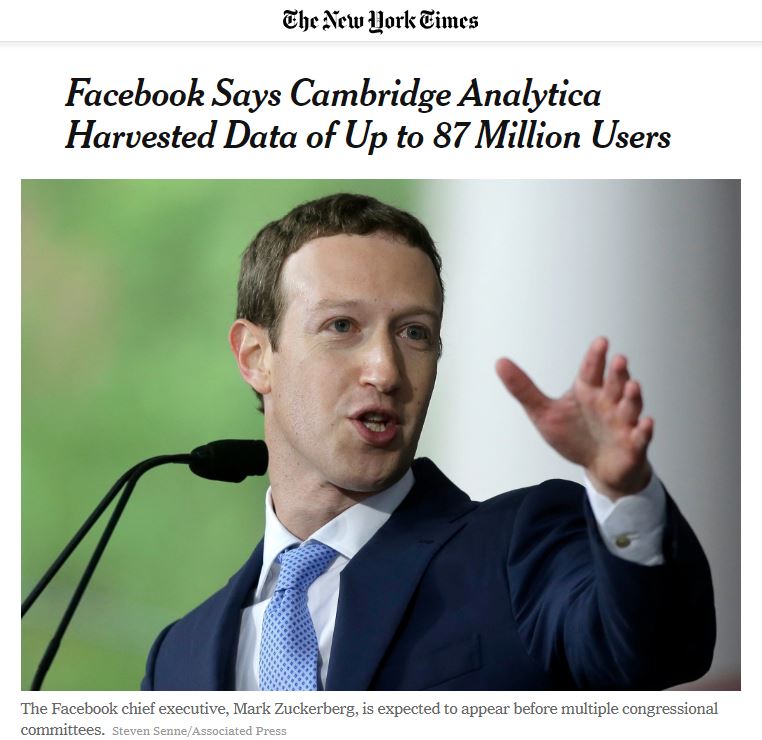

Then, in March 2018, the New York Times and the Observer revealed a huge unauthorized use of Facebook user data by Cambridge Analytica, a British firm that consulted for Donald Trump’s 2016 presidential campaign. According to a whistleblower who had worked with Cambridge Analytica, the company “used personal information taken without authorization in early 2014 to build a system that could profile individual US voters, in order to target them with personalized political advertisements.”4 It began in 2014, when 270,000 Facebook users took a survey through an app and consented to have their data accessed for academic use. What followed was a violation of privacy for millions of Facebook users: without authorization, Cambridge Analytica and its partners bought the data set, which also included information scraped from the survey participants’ friends, ultimately gaining access to information on about 87 million Facebook users.5

In April 2018, Facebook revealed that most of its two billion users could have had their user information accessed without authorization through a search tool that let people look up users by phone number or e-mail address, and that it would disable the tool. Bloomberg reported that this was yet more “evidence of the ways the social-media giant failed to protect people’s privacy while generating billions of dollars in revenue from the information.”6

Mark Zuckerberg, Facebook’s founder and CEO and the fifth-richest person in the world (with a net worth of $71 billion), was called to testify before the U.S. Congress and the European Parliament. By most accounts, Zuckerberg got off easy, fielding largely clueless questions from older elected officials. As the Guardian put it, “At times Zuckerberg resembled the polite teenager who visits his grandparents, only to spend the afternoon showing them how to turn on the wifi.”7 (Zuckerberg’s testimony did generate an avalanche of critical memes, though.)8 But one candid comment from a European official in May 2018 had a sharp point:

“In total you apologized 15 or 16 times in the last decade,” Guy Verhofstadt, leader of the Alliance of Liberals and Democrats for Europe, said after Zuckerberg apologized, again. “Every year you have one or another wrongdoing with your company. . . . Are you able to fix it? And if you’ve already confronted so many dysfunctions, there clearly has to be a problem.”9

Dylan Byers, a technology reporter for CNN, argued that “Facebook’s problem is Facebook.” In other words, the very way Facebook operates creates problems: “Facebook’s data privacy problem isn’t a glitch, it’s the central feature. Facebook succeeds by collecting, harvesting and profiting off your data. In fact, many of Facebook’s proposed solutions to its myriad problems are structured to give them more access to your data.”10 By July 2018, even investors were alarmed by Facebook’s ongoing privacy problems and its long-term prospects for growth. In a single day, Facebook’s shares plunged about 20 percent, the largest one-day loss of market value for any company in Wall Street history.

Is Facebook’s business model of profiting from our personal data also an ever-present problem for our data privacy and security? Are Facebook’s constant apologies for violating the privacy of our data evidence of a serious problem? For this extended case study, we will look at news stories about instances of unauthorized data use and data breaches at Facebook and what Facebook promised to do in its apologies.

As developed in Chapter 1, a media-literate perspective involves mastering five overlapping critical stages that build on one another: (1) description: paying close attention, taking notes, and researching the subject under study; (2) analysis: discovering and focusing on significant patterns that emerge from the description stage; (3) interpretation: asking and answering the “What does that mean?” and “So what?” questions about your findings; (4) evaluation: arriving at a judgment about whether something is good, bad, poor, or mediocre, which involves subordinating one’s personal views to the critical assessment resulting from the first three stages; and (5) engagement: taking some action that connects our critical interpretations and evaluations with our responsibility as citizens.

1. “Stats,” Facebook, March 31, 2018, https://newsroom.fb.com/company-info.

2. Marco della Cava, Elizabeth Weise, and Jessica Guynn, “Are Facebook, Google and Amazon Too Big? Why That Question Keeps Coming Up,” USA Today, September 25, 2017, www.usatoday.com/story/tech/2017/09/25/facebook-google-and-amazon-too-big-question-comes-right-and-left/689879001.

3. Devlin Barrett, Sari Horwitz, and Rosalind S. Helderman, “Russian Troll Farm, 13 Suspects Indicted in 2016 Election Interference,” Washington Post, February 16, 2018, www.washingtonpost.com/world/national-security/russian-troll-farm-13-suspects-indicted-for-interference-in-us-election/2018/02/16/2504de5e-1342-11e8-9570-29c9830535e5_story.html; and Leslie Shapiro, “Anatomy of a Russian Facebook Ad,” Washington Post, November 1, 2017, www.washingtonpost.com/graphics/2017/business/russian-ads-facebook-anatomy.

4. Carole Cadwalladr and Emma Graham-Harrison, “Revealed: 50 Million Facebook Profiles Harvested for Cambridge Analytica in Major Data Breach,” Guardian, March 17, 2018, www.theguardian.com/news/2018/mar/17/cambridge-analytica-facebook-influence-us-election.

5. Matthew Rosenberg, Nicholas Confessore, and Carole Cadwalladr, “How Trump Consultants Exploited the Facebook Data of Millions,” New York Times, March 17, 2018, www.nytimes.com/2018/03/17/us/politics/cambridge-analytica-trump-campaign.html.

6. Sarah Frier, “Facebook Says Data on Most of Its 2 Billion Users Is Vulnerable,” Bloomberg, April 4, 2018, www.bloomberg.com/news/articles/2018-04-04/facebook-says-data-on-87-million-people-may-have-been-shared.

7. Jonathan Freedland, “Zuckerberg Got Off Lightly. Why Are Politicians So Bad at Asking Questions?” Guardian, April 11, 2018, www.theguardian.com/commentisfree/2018/apr/11/mark-zuckerberg-facebook-congress-senate.

8. Emma Grey Ellis, “Mark Zuckerberg’s Testimony Birthed an Oddly Promising Memepocalypse,” Wired, April 11, 2018, www.wired.com/story/mark-zuckerberg-memepocalypse.

9. Dylan Byers, “Facebook’s Problem Is Facebook,” CNNTech, May 22, 2018, http://money.cnn.com/2018/05/22/technology/pacific-newsletter/index.html.

10. Ibid.

Step 1: Description

Given our research question, in the description phase you would look at news stories that reveal data-security problems at Facebook.

A good starting point is 2011, when the Federal Trade Commission charged Facebook with eight violations in which Facebook told consumers their information would be private but made it public to advertisers and third-party applications. At the time, the chair of the FTC said, “Facebook’s innovation does not have to come at the expense of consumer privacy.”11 Facebook settled with the FTC by fixing the problems and agreeing to submit to privacy audits through 2031. “Today’s announcement formalizes our commitment to providing you with control over your privacy and sharing—and it also provides protection to ensure that your information is only shared in the way you intend,” Zuckerberg said in his statement about the settlement.12

Since that time, what are some other unauthorized uses of data or data breaches at Facebook, and how has the company responded to them?

- In 2013, Facebook reported that it “inadvertently exposed six million users’ phone numbers and e-mail addresses to unauthorized viewers over the last year.” The company also noted, “It’s still something we’re upset and embarrassed by, and we’ll work doubly hard to make sure nothing like this happens again.”13

- In 2014, Facebook faced criticism from European regulators after it disclosed “that it deliberately manipulated the emotional content of the news feeds by changing the posts displayed to nearly 700,000 users to see if emotions were contagious. The company did not seek explicit permission from the affected people—roughly one out of every 2,500 users of the social network at the time of the experiment—and some critics have suggested that the research violated its terms of service with its customers. Facebook has said that customers gave blanket permission for research as a condition of using the service.” In a news report, “Richard Allan, Facebook’s director of policy in Europe, said that it was clear that people had been upset by the study. ‘We want to do better in the future and are improving our process based on this feedback,’ he said in a statement.”14

- In 2016, WhatsApp (the world’s largest messaging service, which Facebook purchased in 2014) said it would begin to share users’ information with Facebook. Regulators in Hamburg, Germany, objected and ordered Facebook to stop collecting the data and to delete any information from WhatsApp’s thirty-five million German users that had already been shared with Facebook. “‘It has to be their decision, whether they want to connect their account with Facebook,’ Johannes Caspar, the Hamburg data protection commissioner, said.”15 According to the report, “Facebook said . . . after the order had been issued, that it had complied with Europe’s privacy rules and that it was willing to work with the regulator to address its concerns.”16

- In June 2018 (shortly after Zuckerberg’s appearance before the European Parliament), the New York Times revealed that Facebook had agreements with at least sixty digital device makers, including Apple, Amazon, Microsoft, and Samsung, allowing them “access to vast amounts of its users’ personal information,” including information about users’ friends. According to the article, “The partnerships, whose scope has not previously been reported, raise concerns about the company’s privacy protections and compliance with a 2011 consent decree with the Federal Trade Commission.”17 In a statement on Facebook titled “Why We Disagree with the New York Times,” Facebook’s vice president of product partnerships responded that “friends’ information, like photos, was only accessible on devices when people made a decision to share their information with those friends. We are not aware of any abuse by these companies.” He further noted that Facebook had earlier announced that it would be “winding down” the software-sharing agreements with the digital device makers.18

- Just a few days later in June 2018, another New York Times investigative report revealed that “Facebook has data-sharing partnerships with at least four Chinese electronics companies, including a manufacturing giant that has a close relationship with China’s government.” The agreements dated back to at least 2010, the paper reported.19 According to the Times, “Facebook officials said in an interview that the company would wind down the Huawei deal by the end of the week.” Huawei, one of the four Chinese partners, is a telecommunications company that “has been flagged by American intelligence officials as a national security threat” to the United States.20 Facebook did not post a statement on its website responding to this article.

11. “Facebook Settles FTC Charges That It Deceived Consumers by Failing to Keep Privacy Promises,” Federal Trade Commission, November 29, 2011, www.ftc.gov/news-events/press-releases/2011/11/facebook-settles-ftc-charges-it-deceived-consumers-failing-keep.

12. Mark Zuckerberg, “Our Commitment to the Facebook Community,” Facebook, November 29, 2011, https://newsroom.fb.com/news/2011/11/our-commitment-to-the-facebook-community.

13. Reuters, “Facebook Says Technical Flaw Exposed 6 Million Users,” New York Times, June 21, 2013, www.nytimes.com/2013/06/22/business/facebook-says-technical-flaw-exposed-6-million-users.html.

14. Vindu Goel, “After Uproar, European Regulators Question Facebook on Psychological Testing,” New York Times, July 2, 2014, https://bits.blogs.nytimes.com/2014/07/02/facebooks-secret-manipulation-of-user-emotions-under-british-inquiry.

15. Mark Scott, “Facebook Ordered to Stop Collecting Data on WhatsApp Users in Germany,” New York Times, September 27, 2016, www.nytimes.com/2016/09/28/technology/whatsapp-facebook-germany.html.

16. Ibid.

17. Gabriel J. X. Dance, Nicholas Confessore, and Michael LaForgia, “Facebook Gave Device Makers Deep Access to Data on Users and Friends,” New York Times, June 3, 2018, www.nytimes.com/interactive/2018/06/03/technology/facebook-device-partners-users-friends-data.html.

18. Ime Archibong, “Why We Disagree with the New York Times,” Facebook, June 3, 2018, https://newsroom.fb.com/news/2018/06/why-we-disagree-with-the-nyt.

19. Michael LaForgia and Gabriel J. X. Dance, “Facebook Gave Data Access to Chinese Firm Flagged by U.S. Intelligence,” New York Times, June 5, 2018, www.nytimes.com/2018/06/05/technology/facebook-device-partnerships-china.html.

20. Ibid.

Step 2: Analysis

In the second stage of the critical process, you isolate patterns that call for closer attention. What patterns emerged from the news stories just discussed? For example, was there a consistent pattern of unauthorized data use by Facebook, or of Facebook pushing the boundaries of data collection? Are Facebook’s policies on personal data clear to its users and the public? Did Facebook have a consistent pattern in dealing with concerns and complaints about its ability to secure users’ data and privacy?

Step 3: Interpretation

In the interpretation stage, you determine the larger meanings of the patterns you have analyzed. The most difficult stage in criticism, interpretation demands an answer to the questions “So what?” and “What does all this mean?”

One interpretation might be (to quote Dylan Byers) that “Facebook’s problem is Facebook.” Facebook has regularly fallen short on transparency about how it uses data and on its commitments to users’ privacy and data security, particularly given the provisions of its 2011 agreement with the FTC. Facebook’s main method of making money, appealing to advertisers by providing substantial information about its users, seems to motivate it to constantly develop new ways to harvest data. Thus, the company is always pushing the boundaries of privacy rules, and by sharing user information with its clients, it puts users’ data security at risk. (The Cambridge Analytica case illustrates this: Cambridge Analytica did violate Facebook’s policies, but sharing user data with so many third-party developers increases the opportunities for such violations to happen.)

Step 4: Evaluation

The evaluation stage of the critical process is about making informed judgments. Building on description, analysis, and interpretation, you can better evaluate Facebook’s performance.

Based on your critical research, what would you conclude about Facebook’s record in terms of privacy and security? Remember that for any problems you identify in your evaluation, you will need to weigh them against the value of Facebook: What good does this particular social medium offer you, your community, the country, and the world?

Step 5: Engagement

The fifth stage of the critical process—engagement—encourages you to take action, adding your own voice to the process of shaping our culture and environment.

Facebook has been around for more than a decade, so a number of ideas for engagement have already emerged. At the global level, the General Data Protection Regulation (or GDPR) became effective in May 2018. The new European law establishes common rules for how digital companies handle user data and is backed by heavy fines for violations. European Parliament member Viviane Reding explained that with the GDPR, “you cannot hand over the personal data of citizens without having asked if the citizens agree that you hand it over. And you cannot steal it and just tell them after. That is not possible anymore, according to the new law. If you do, then the penalties will be very, very severe.”21

Because all of the major digital companies, including Amazon, Apple, Facebook, Google, and Microsoft, do business in Europe, they have effectively adopted the higher standards of the GDPR for their operations in the United States and other countries rather than follow multiple sets of rules. (Users in the United States might remember receiving notices from their digital services about changes to user agreements when the GDPR went into effect.) The GDPR exists because of European citizens’ concerns about data privacy. You might also work to ensure that the new standards are followed in the United States. And if you have a complaint about your personal data being misused on a social media platform, you can file an online report with the FTC Complaint Assistant (www.ftccomplaintassistant.gov).

You can also switch to other social media platforms. Ironically, a New York Times writer suggested that users upset with Facebook’s shortcomings might find a solution in one of Facebook’s own subsidiaries: Instagram. He argued that the two platforms amount to a near side-by-side test. Facebook is “designed as a giant megaphone, with an emphasis on public sharing and an algorithmic feed capable of sending posts rocketing around the world in seconds.” Conversely, Instagram is “more minimalist, designed for intimate sharing rather than viral broadcasting. Users of this app, many of whom have private accounts with modest followings, can post photos or videos, but external links do not work and there is no re-share button, making it harder for users to amplify one another’s posts.”22

Finally, you can tell people what you think: you can respond directly to Facebook on its help pages (www.facebook.com/help), you can monitor the sharing settings on Facebook and your other social media accounts and set them to their most restrictive levels, you can weigh in on social media privacy with your elected officials (you can find them at www.govtrack.us/congress/members), and you can share your ideas with friends on social media, including Facebook.

21. Julia Powles, “The G.D.P.R., Europe’s New Privacy Law, and the Future of the Global Data Economy,” New Yorker, May 25, 2018, www.newyorker.com/tech/elements/the-gdpr-europes-new-privacy-law-and-the-future-of-the-global-data-economy.

22. Kevin Roose, “What If a Healthier Facebook Is Just . . . Instagram?” New York Times, January 22, 2018, www.nytimes.com/2018/01/22/technology/facebook-instagram.html.