6.2 Vision: Sensory and Perceptual Processing


Light Energy and Eye Structures


Our eyes receive light energy and transduce (transform) it into neural messages that our brain then processes into what we consciously see. How does such a taken-

The Stimulus Input: Light Energy

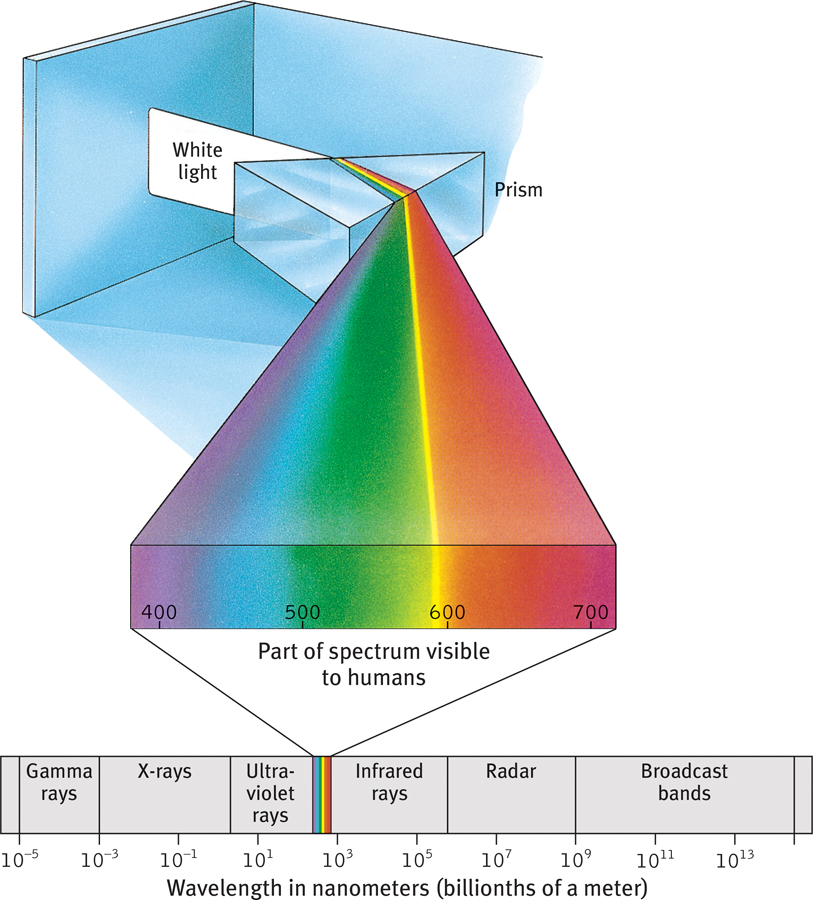

When you look at a bright red tulip, the stimuli striking your eyes are not particles of the color red but pulses of electromagnetic energy that your visual system perceives as red. What we see as visible light is but a thin slice of the whole spectrum of electromagnetic energy, ranging from imperceptibly short gamma waves to the long waves of radio transmission (FIGURE 6.12). Other organisms are sensitive to differing portions of the spectrum. Bees, for instance, cannot see what we perceive as red but can see ultraviolet light.

Figure 6.12 The wavelengths we see. What we see as light is only a tiny slice of a wide spectrum of electromagnetic energy, which ranges from gamma rays as short as the diameter of an atom to radio waves over a mile long. The wavelengths visible to the human eye (shown enlarged) extend from the shorter waves of blue-

wavelength the distance from the peak of one light or sound wave to the peak of the next. Electromagnetic wavelengths vary from the short blips of cosmic rays to the long pulses of radio transmission.

hue the dimension of color that is determined by the wavelength of light; what we know as the color names blue, green, and so forth.

intensity the amount of energy in a light wave or sound wave, which influences what we perceive as brightness or loudness. Intensity is determined by the wave’s amplitude (height).

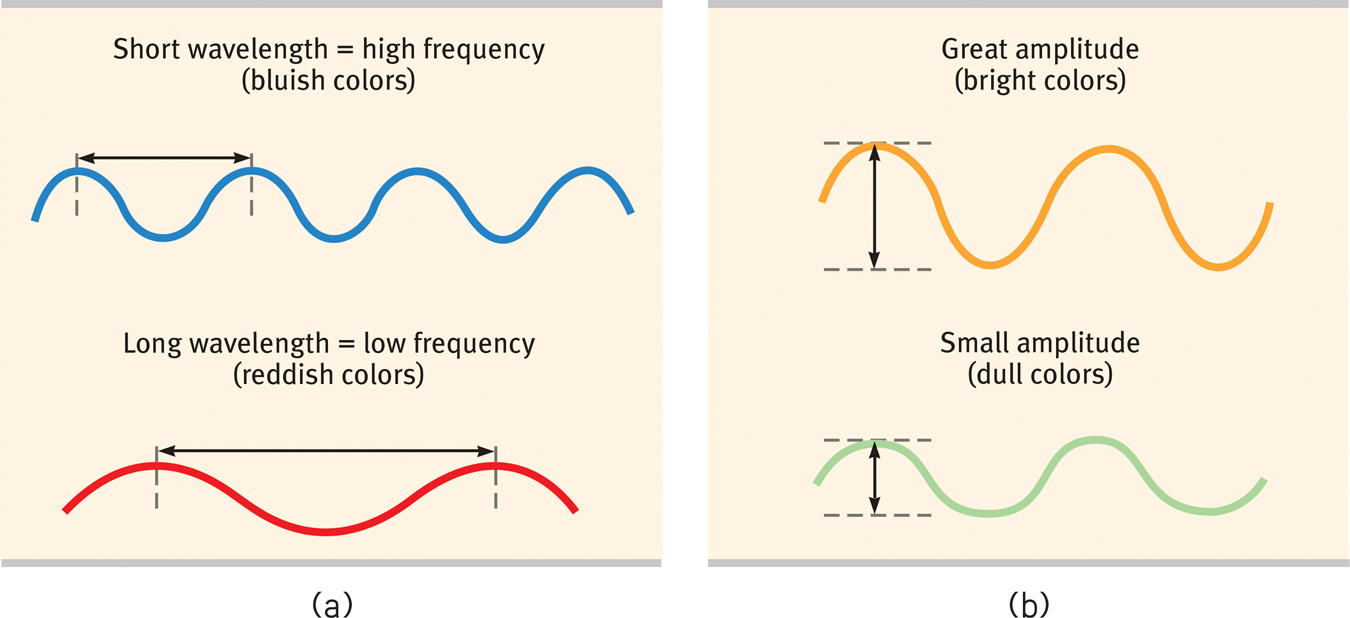

Two physical characteristics of light help determine our sensory experience. Light’s wavelength—the distance from one wave peak to the next (FIGURE 6.13a below)—determines its hue (the color we experience, such as the tulip’s red petals or green leaves). Intensity—the amount of energy in light waves (determined by a wave’s amplitude, or height)—influences brightness (FIGURE 6.13b). To understand how we transform physical energy into color and meaning, consider the eye.

Figure 6.13 The physical properties of waves. (a) Waves vary in wavelength (the distance between successive peaks). Frequency, the number of complete wavelengths that can pass a point in a given time, depends on the wavelength. The shorter the wavelength, the higher the frequency. Wavelength determines the perceived color of light. (b) Waves also vary in amplitude (the height from peak to trough). Wave amplitude influences the perceived brightness of colors.
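The caption's rule that shorter wavelengths mean higher frequencies follows from one fact: all light travels at the same speed, about $3.00 \times 10^{8}$ meters per second in a vacuum. Here is a worked example. It assumes the visible range runs from roughly 400 to 700 nanometers, which is a common approximation; Figure 6.12 gives the exact span:

$$
f = \frac{c}{\lambda}, \qquad
f_{700\ \text{nm}} = \frac{3.00 \times 10^{8}\ \text{m/s}}{700 \times 10^{-9}\ \text{m}} \approx 4.3 \times 10^{14}\ \text{Hz}, \qquad
f_{400\ \text{nm}} = \frac{3.00 \times 10^{8}\ \text{m/s}}{400 \times 10^{-9}\ \text{m}} = 7.5 \times 10^{14}\ \text{Hz}
$$

Here $f$ is frequency, $c$ is the speed of light, and $\lambda$ is wavelength. The shorter 400-nanometer waves pass a point $700/400 = 1.75$ times as often as the 700-nanometer waves.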

pupil the adjustable opening in the center of the eye through which light enters.


The Eye

Light enters the eye through the cornea, which bends light to help provide focus (FIGURE 6.14). The light then passes through the pupil, a small adjustable opening. Surrounding the pupil and controlling its size is the iris, a colored muscle that dilates or constricts in response to light intensity—

Figure 6.14 The eye. Light rays reflected from a candle pass through the cornea, pupil, and lens. The curvature and thickness of the lens change to bring nearby or distant objects into focus on the retina. Rays from the top of the candle strike the bottom of the retina, and those from the left side of the candle strike the right side of the retina. The candle’s image on the retina thus appears upside down and reversed.
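The inversion described in the caption follows from simple geometry. The sketch below is a toy model. It treats the eye as an ideal pinhole with the retina a fixed distance behind it. The `retina_depth` value and the function name are hypothetical, and a real eye uses a lens, but the way rays cross over is the same.

```python
"""Toy pinhole model of why the retinal image is upside down and reversed.

Assumption: the eye is an ideal pinhole at the origin, with the retina a
fixed (hypothetical) distance behind it. A real eye focuses with a lens.
"""


def project_to_retina(x: float, y: float, z: float,
                      retina_depth: float = 1.7) -> tuple[float, float]:
    """Project a point (x to the right, y up, z distance ahead) onto the retina.

    Rays travel straight through the pinhole, so both coordinates flip sign:
    up becomes down and left becomes right. retina_depth is in the same
    units as z (a hypothetical value of about 1.7 cm).
    """
    if z <= 0:
        raise ValueError("the point must be in front of the eye")
    scale = retina_depth / z
    return (-x * scale, -y * scale)


# A candle 50 cm away: flame tip 10 cm above the line of sight,
# left edge 2 cm to the left of it.
flame_top = project_to_retina(0.0, 10.0, 50.0)
left_edge = project_to_retina(-2.0, 0.0, 50.0)
print(flame_top)  # negative y: the top of the candle lands low on the retina
print(left_edge)  # positive x: the left side lands on the right
```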

iris a ring of muscle tissue that forms the colored portion of the eye around the pupil and controls the size of the pupil opening.

lens the transparent structure behind the pupil that changes shape to help focus images on the retina.

retina the light-

accommodation the process by which the eye’s lens changes shape to focus near or far objects on the retina.

Behind the pupil is a transparent lens that focuses incoming light rays into an image on the retina, a multilayered tissue on the eyeball’s sensitive inner surface. The lens focuses the rays by changing its curvature and thickness in a process called accommodation.

For centuries, scientists knew that when an image of a candle passes through a small opening, it casts an inverted mirror image on a dark wall behind. If the image passing through the pupil casts this sort of upside-

Today’s answer: The retina doesn’t “see” a whole image. Rather, its millions of receptor cells convert particles of light energy into neural impulses and forward those to the brain. There, the impulses are reassembled into a perceived, upright-


Information Processing in the Eye and Brain

rods retinal receptors that detect black, white, and gray; necessary for peripheral and twilight vision, when cones don’t respond.

Retinal Processing

cones retinal receptor cells that are concentrated near the center of the retina and that function in daylight or in well-


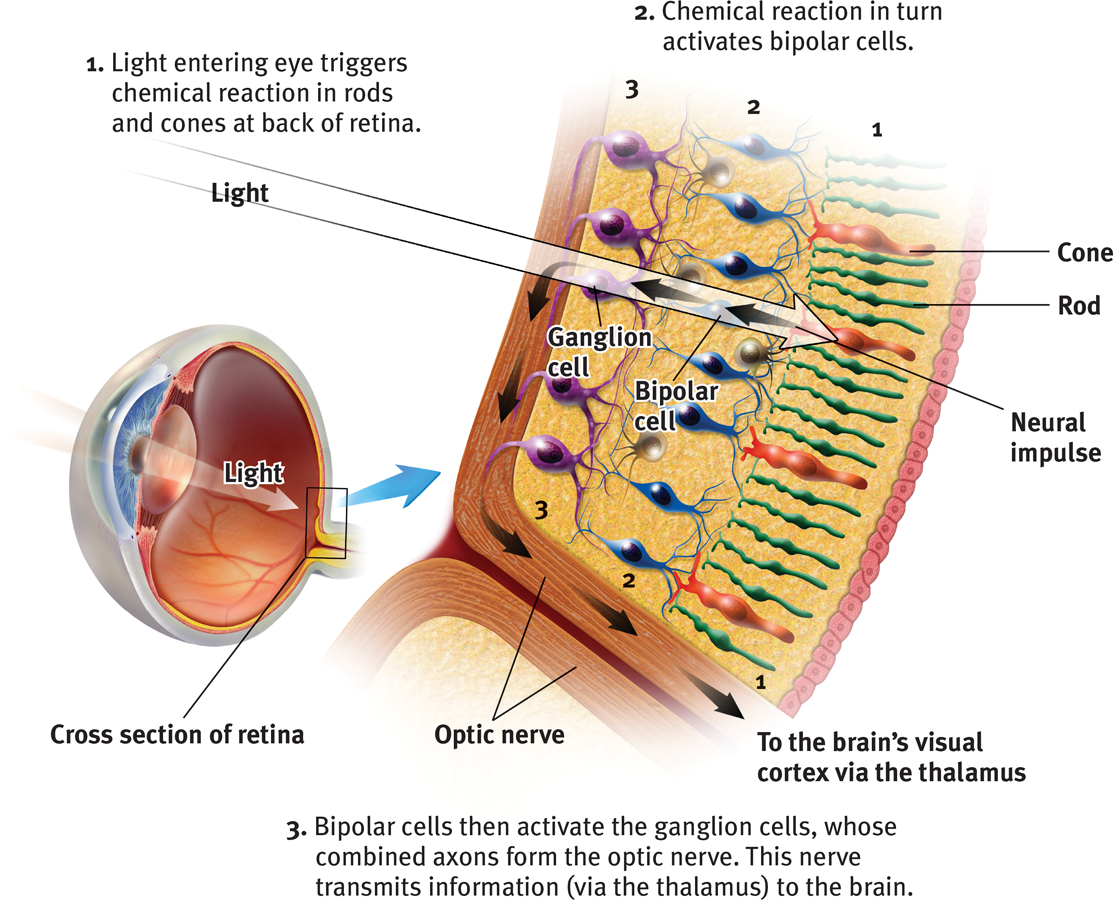

Imagine that you could follow behind a single light-

Figure 6.15 The retina’s reaction to light

optic nerve the nerve that carries neural impulses from the eye to the brain.

blind spot the point at which the optic nerve leaves the eye, creating a “blind” spot because no receptor cells are located there.

RETRIEVAL PRACTICE

Figure 6.16 The blind spot

- There are no receptor cells where the optic nerve leaves the eye. This creates a blind spot in your vision. To demonstrate, first close your left eye, look at the spot above, and move your face away to a distance at which one of the cars disappears. (Which one do you predict it will be?) Repeat with your right eye closed, and note that now the other car disappears. Can you explain why?

Your blind spot is on the nose side of each retina, which means that objects to your right may fall onto the right eye’s blind spot. Objects to your left may fall on the left eye’s blind spot. The blind spot does not normally impair your vision, because your eyes are moving and because one eye catches what the other misses.

fovea the central focal point in the retina, around which the eye’s cones cluster.


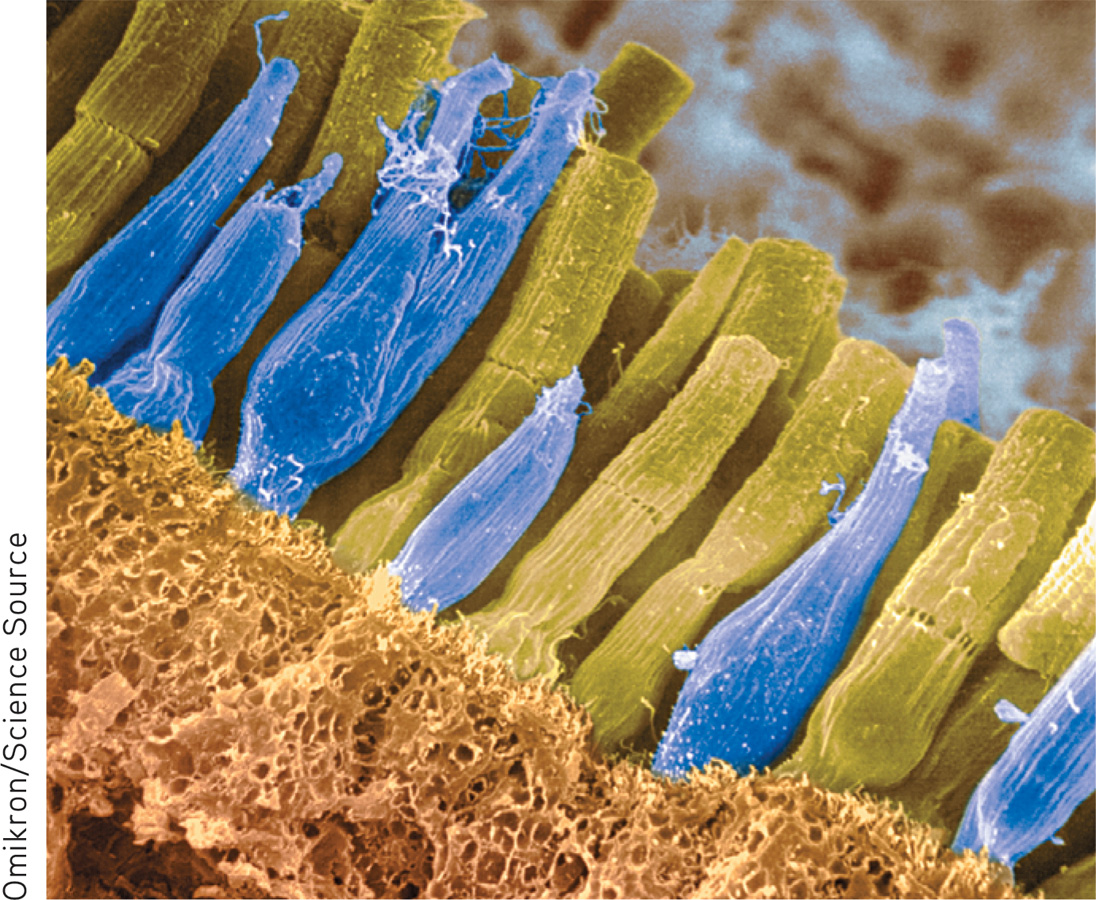

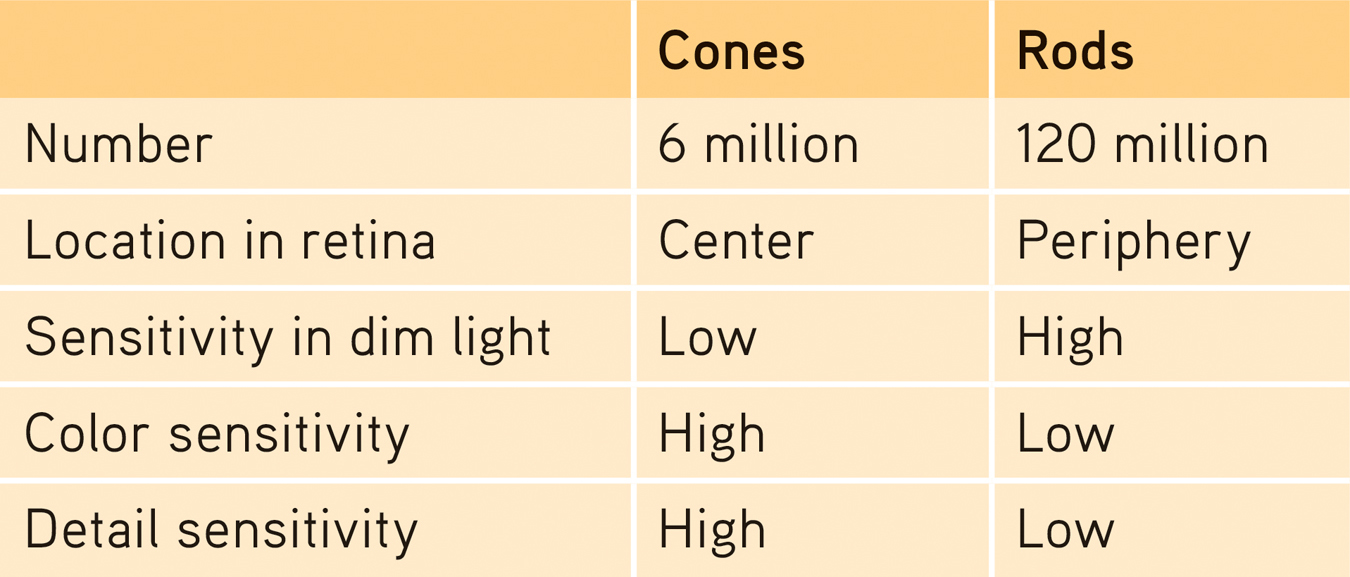

Rods and cones differ in where they’re found and in what they do (TABLE 6.1). Cones cluster in and around the fovea, the retina’s area of central focus (see Figure 6.14). Many cones have their own hotline to the brain: Each cone transmits its message to a single bipolar cell. That cell helps relay the cone’s individual message to the visual cortex, which devotes a large area to input from the fovea. These direct connections preserve the cones’ precise information, making them better able to detect fine detail.
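The cones' one-to-one wiring can be contrasted with the rods' shared bipolar cells, which the next paragraph describes. The toy sketch below shows the trade-off. It assumes each receptor outputs a number proportional to the light it catches, and that a bipolar cell signals once its summed input reaches a fixed threshold. The threshold, the pool size, and the function names are all hypothetical, and real retinal circuits are far more complex.

```python
"""Toy contrast between cone 'hotlines' and rods pooling onto shared bipolar cells.

Assumptions (illustrative only): receptor output is proportional to the light
caught, and a bipolar cell signals when its summed input reaches THRESHOLD.
"""

THRESHOLD = 1.0  # hypothetical firing threshold for a bipolar cell


def cone_pathway(inputs: list[float]) -> list[bool]:
    """One bipolar cell per cone: each location is reported separately."""
    return [signal >= THRESHOLD for signal in inputs]


def rod_pathway(inputs: list[float], pool_size: int) -> list[bool]:
    """Several rods share one bipolar cell: their signals are summed per pool."""
    return [
        sum(inputs[i:i + pool_size]) >= THRESHOLD
        for i in range(0, len(inputs), pool_size)
    ]


dim_light = [0.3] * 8  # weak light falling evenly on eight receptors
print(cone_pathway(dim_light))               # no single cone reaches threshold
print(rod_pathway(dim_light, pool_size=4))   # pooled rods do (4 x 0.3 >= 1.0)

spot = [0, 0, 2.0, 0, 0, 0, 0, 0]            # a bright point on the third receptor
print(cone_pathway(spot))                    # cones report exactly where it fell
print(rod_pathway(spot, pool_size=4))        # rods report only which pool was hit
```

Pooling buys sensitivity in dim light at the cost of detail, which matches the text's account of why cones are better at fine detail.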

Table 6.1 Receptors in the Human Eye: Rod-

Rods have no such hotline. Instead, several rods share a single bipolar cell, which sends their combined message. To experience this rod-

Cones also enable you to perceive color. In dim light they become ineffectual, so you see no colors. Rods, which enable black-

When you enter a darkened theater or turn off the light at night, your eyes adapt. Your pupils dilate to allow more light to reach your retina, but it typically takes 20 minutes or more before your eyes fully adapt. You can demonstrate dark adaptation by closing or covering one eye for up to 20 minutes. Then make the light in the room not quite bright enough to read this book with your open eye. Now open the dark-

To summarize: The retina’s neural layers don’t just pass along electrical impulses. They also help to encode and analyze sensory information. (The third neural layer in a frog’s eye, for example, contains the “bug detector” cells that fire only in response to moving fly-

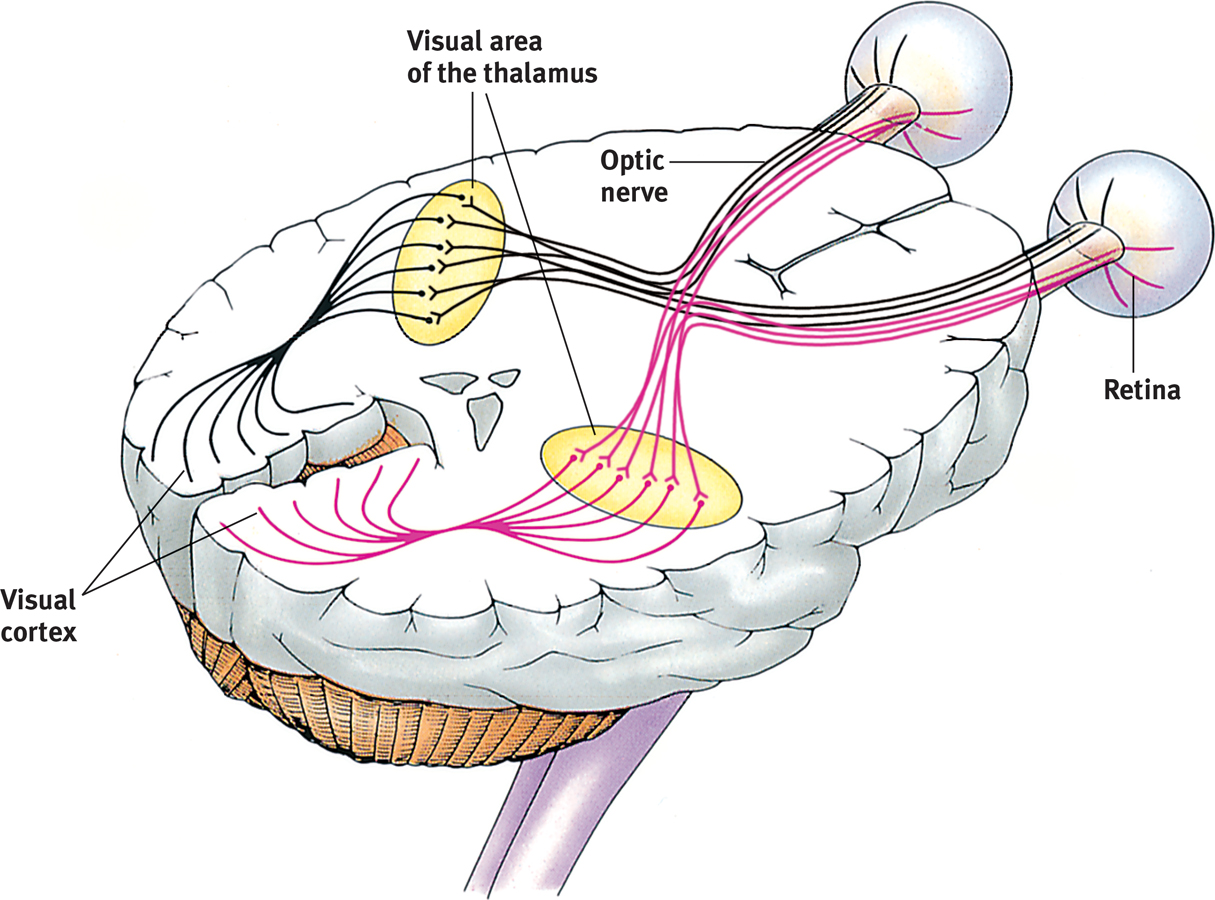

- After processing by your retina’s nearly 130 million receptor rods and cones, information travels forward again, to your bipolar cells.

- From there, it moves to your eye’s million or so ganglion cells, and through their axons making up the optic nerve to your brain.

- After a momentary stop-off in the thalamus, the information travels to your visual cortex. Any given retinal area relays its information to a corresponding location in your visual cortex, in the occipital lobe at the back of your brain (FIGURE 6.17).

Figure 6.17 Pathway from the eyes to the visual cortex. Ganglion axons forming the optic nerve run to the thalamus, where they synapse with neurons that run to the visual cortex.

The same sensitivity that enables retinal cells to fire messages can lead them to misfire, as you can demonstrate. Turn your eyes to the left, close them, and then gently rub the right side of your right eyelid with your fingertip. Note the patch of light to the left, moving as your finger moves.

Why do you see light? Why at the left? This happens because your retinal cells are so responsive that even pressure triggers them. But your brain interprets their firing as light. Moreover, it interprets the light as coming from the left—


RETRIEVAL PRACTICE

- Some nocturnal animals, such as toads, mice, rats, and bats, have impressive night vision thanks to having many more ______________ (rods/cones) than ______________ (rods/cones) in their retinas. These creatures probably have very poor ______________ (color/black-and-white) vision.

rods; cones; color

- Cats are able to open their ______________ much wider than we can, which allows more light into their eyes so they can see better at night.

pupils

Color Processing


One of vision’s most basic and intriguing mysteries is how we see the world in color. In everyday conversation, we talk as though objects possess color: “A tomato is red.” Recall the old question, “If a tree falls in the forest and no one hears it, does it make a sound?” We can ask the same of color: If no one sees the tomato, is it red?

The answer is No. First, the tomato is everything but red, because it rejects (reflects) the long wavelengths of red. Second, the tomato’s color is our mental construction. As Isaac Newton (1704) noted, “The [light] rays are not colored.” Like all aspects of vision, our perception of color resides not in the object itself but in the theater of our brains, as evidenced by our dreaming in color.

“Only mind has sight and hearing; all things else are deaf and blind.”

Epicharmus, Fragments, 550 b.c.e.

Young-

How, from the light energy striking the retina, does our brain construct our experience of color—

Modern detective work on the mystery of color vision began in the nineteenth century, when Hermann von Helmholtz built on the insights of an English physicist, Thomas Young. Any color can be created by combinations of different amounts of light waves of three primary colors—

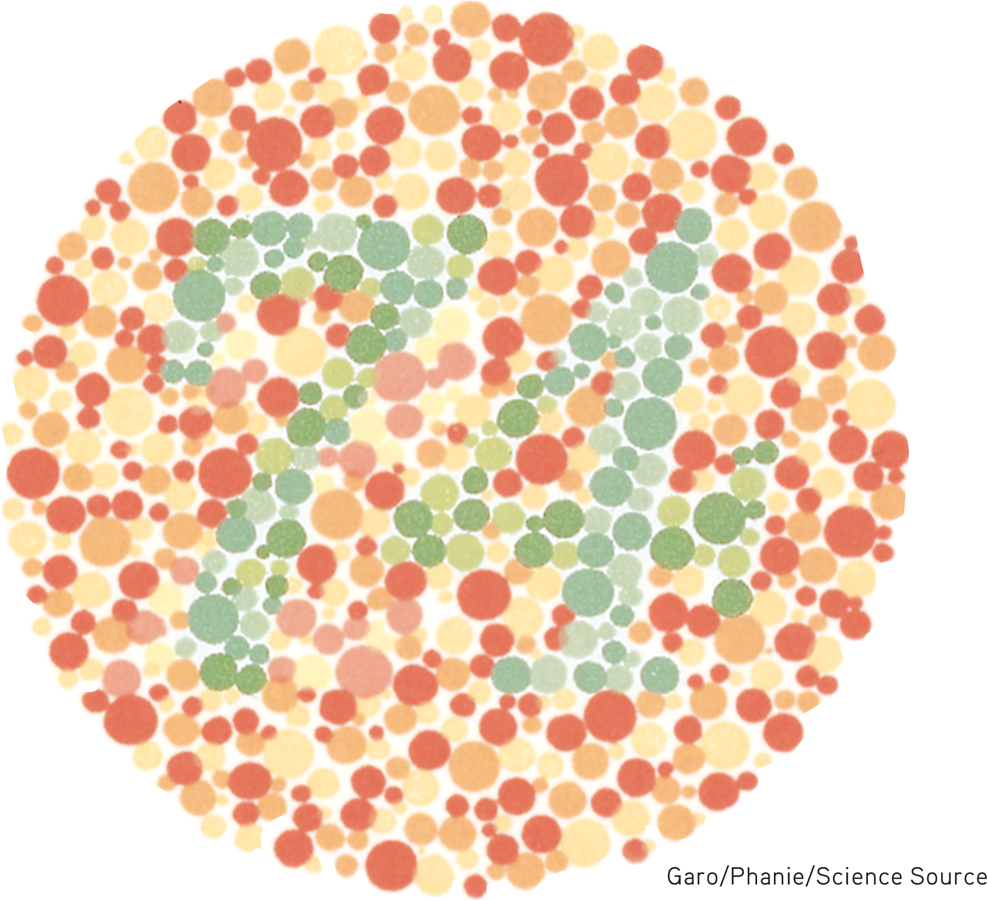

Most people with color-

Figure 6.18 Color-

But why do people blind to red and green often still see yellow? And why does yellow appear to be a pure color and not a mixture of red and green, the way purple is of red and blue? Trichromatic theory left some parts of the color vision mystery unsolved, and this sparked researcher Ewald Hering’s curiosity.


Hering, a physiologist, found a clue in afterimages. Stare at a green square for a while and then look at a white sheet of paper, and you will see red, green’s opponent color. Stare at a yellow square and its opponent color, blue, will appear on the white paper. (To experience this, try the flag demonstration in FIGURE 6.19.) Hering formed another hypothesis: There must be two additional color processes, one responsible for red-

Figure 6.19 Afterimage effect. Stare at the center of the flag for a minute and then shift your eyes to the dot in the white space beside it. What do you see? (After tiring your neural response to black, green, and yellow, you should see their opponent colors.) Stare at a white wall and note how the size of the flag grows with the projection distance.

opponent-

Indeed, a century later, researchers also confirmed Hering’s opponent-

For an interactive review and demonstration of these color vision principles, visit LaunchPad’s PsychSim 6: Colorful World.


So how do we explain afterimages, such as in the flag demonstration? By staring at green, we tire our green response. When we then stare at white (which contains all colors, including red), only the red part of the green-

The present solution to the mystery of color vision is therefore roughly this: Color processing occurs in two stages.

- The retina’s red, green, and blue cones respond in varying degrees to different color stimuli, as the Young-Helmholtz trichromatic theory suggested.

- The cones’ responses are then processed by opponent-process cells, as Hering’s theory proposed.
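The two stages above can be sketched as a toy model. The channel formulas are simplifying assumptions chosen for illustration, not the textbook's model or measured physiology: red-green is taken as L minus M, where L, M, and S are the "red," "green," and "blue" cones. The fatigue rule is also assumed. With these assumptions, the sketch shows how tiring the green response, as in the flag demonstration, tilts a white input toward red.

```python
"""Toy two-stage model of color vision: cone responses feed opponent channels.

Assumptions: L, M, S stand for the "red", "green", and "blue" cones; the
channel formulas and the fatigue rule are illustrative simplifications.
"""


def opponent_channels(l: float, m: float, s: float) -> dict[str, float]:
    """Stage 2: combine cone outputs into opponent signals.

    red_green > 0 reads as red, < 0 as green;
    blue_yellow > 0 reads as blue, < 0 as yellow.
    """
    return {
        "red_green": l - m,
        "blue_yellow": s - (l + m) / 2,
        "white_black": (l + m + s) / 3,
    }


def adapted_response(stimulus: tuple[float, float, float],
                     fatigue: tuple[float, float, float]) -> tuple[float, ...]:
    """Stage 1 with fatigue: a tired cone type responds more weakly."""
    return tuple(signal * (1 - tired) for signal, tired in zip(stimulus, fatigue))


WHITE = (1.0, 1.0, 1.0)  # roughly equal stimulation of all three cone types

print(opponent_channels(*WHITE))  # red_green is 0: white looks neither red nor green

# After staring at green, assume the M ("green") cones are 40 percent fatigued.
after_green = adapted_response(WHITE, fatigue=(0.0, 0.4, 0.0))
print(opponent_channels(*after_green))  # red_green > 0: a reddish afterimage
```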

RETRIEVAL PRACTICE

- What are two key theories of color vision? Are they contradictory or complementary?

The Young-

feature detectors nerve cells in the brain that respond to specific features of the stimulus, such as shape, angle, or movement.

Feature Detection


Once upon a time, scientists believed that the brain was like a movie screen, on which the eye projected images. But then along came David Hubel and Torsten Wiesel (1979), who showed that our brain’s computing system deconstructs visual images and then reassembles them. Hubel and Wiesel received a Nobel Prize for their work on feature detectors, nerve cells in the brain that respond to a scene’s specific features—


Using microelectrodes, they had discovered that some neurons fired actively when cats were shown lines at one angle, while other neurons responded to lines at a different angle. They surmised that these specialized neurons in the occipital lobe’s visual cortex—

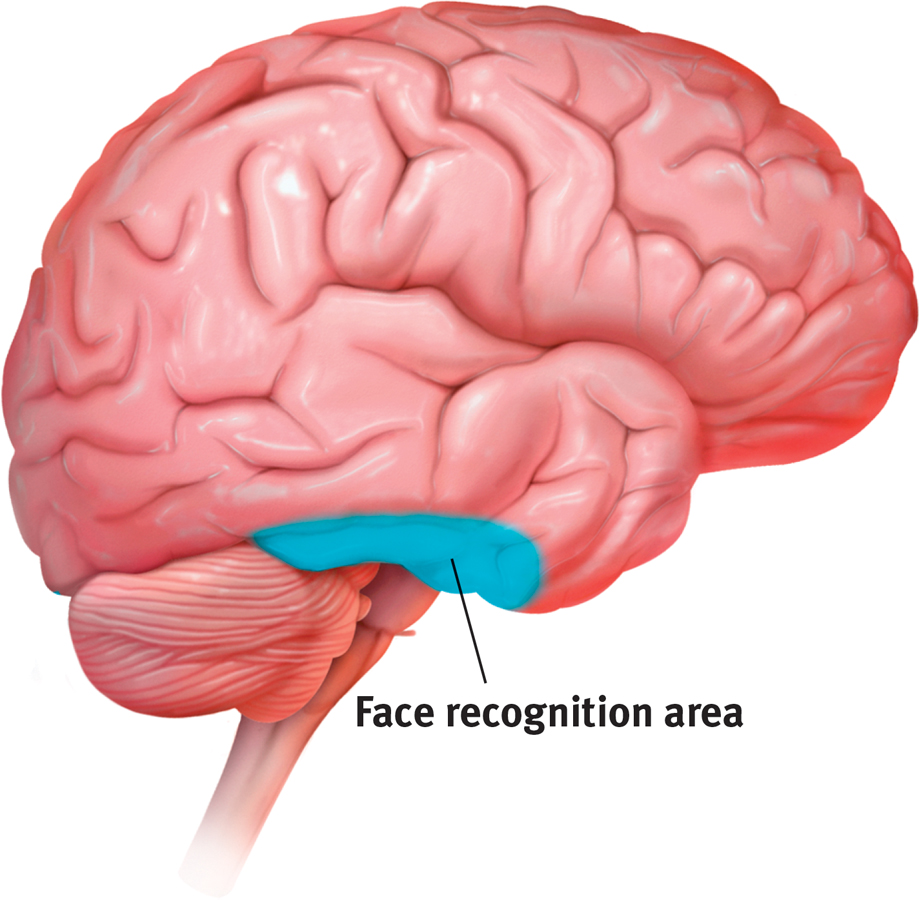

One temporal lobe area by your right ear (FIGURE 6.20) enables you to perceive faces and, thanks to a specialized neural network, to recognize them from varied viewpoints (Connor, 2010). If stimulated in this area, you might spontaneously see faces. If this region were damaged, you might recognize other forms and objects, but not familiar faces.

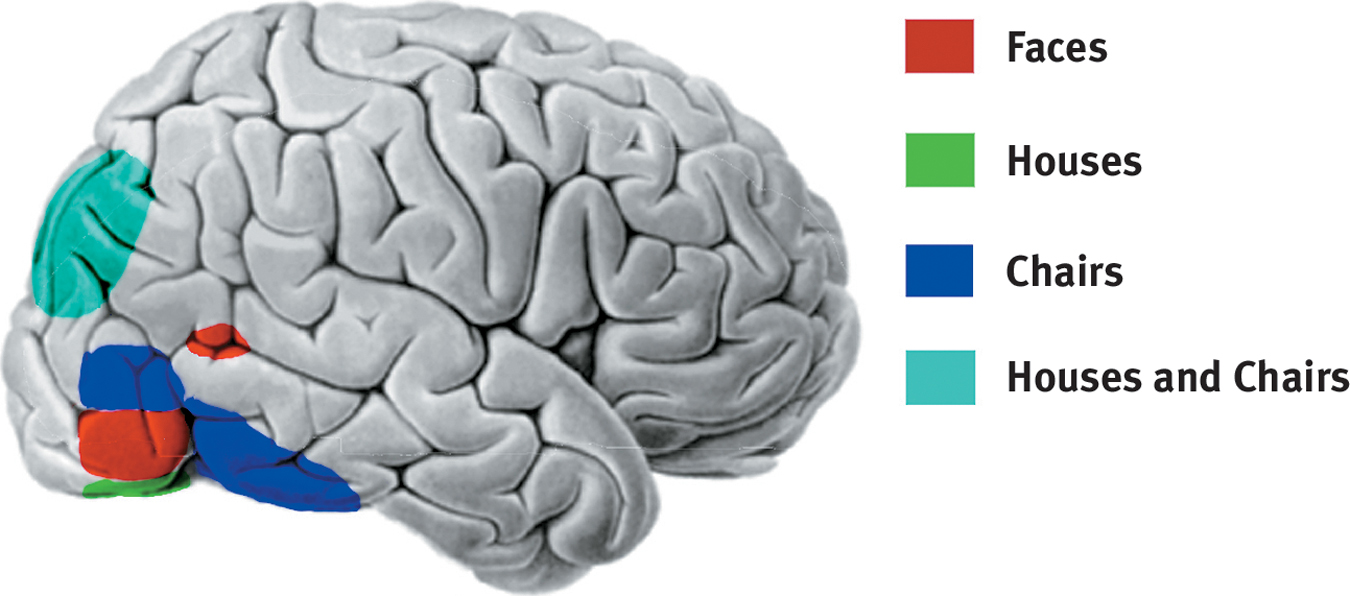

Figure 6.20 Face recognition processing. In social animals such as humans, a large right temporal lobe area (shown here in a right-

Researchers can temporarily disrupt the brain’s face-

Figure 6.21 The telltale brain. Looking at faces, houses, and chairs activates different brain areas in this right-

For biologically important objects and events, monkey brains (and surely ours as well) have a “vast visual encyclopedia” distributed as specialized cells (Perrett et al., 1988, 1992, 1994). These cells respond to one type of stimulus, such as a specific gaze, head angle, posture, or body movement. Other supercell clusters integrate this information and fire only when the cues collectively indicate the direction of someone’s attention and approach. This instant analysis, which aided our ancestors’ survival, also helps a soccer player anticipate where to strike the ball, and a driver anticipate a pedestrian’s next movement.


parallel processing the processing of many aspects of a problem simultaneously; the brain’s natural mode of information processing for many functions, including vision.

Parallel Processing


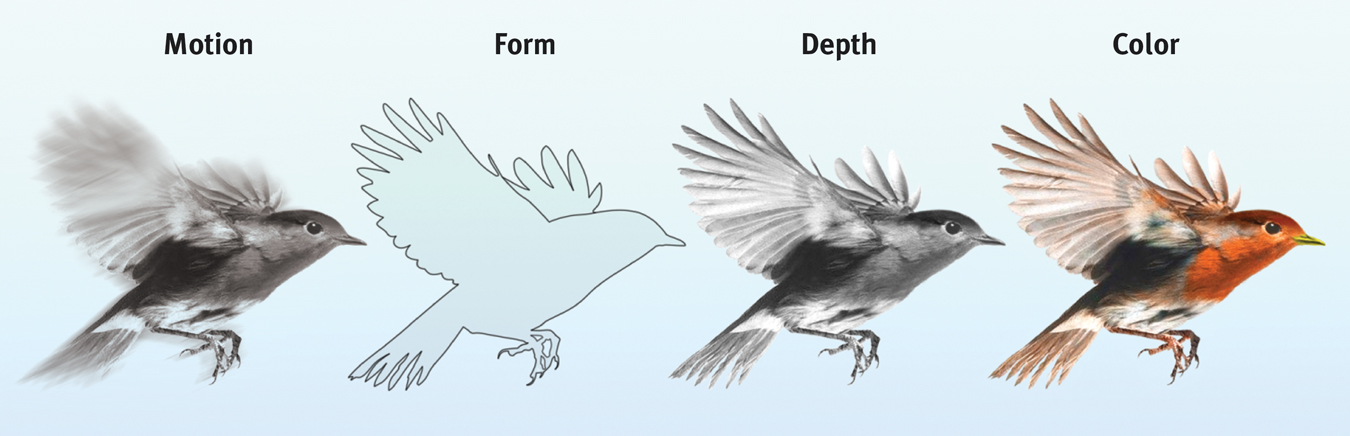

Our brain achieves these and other remarkable feats by parallel processing: doing many things at once. To analyze a visual scene, the brain divides it into subdimensions—

Figure 6.22 Parallel processing. Studies of patients with brain damage suggest that the brain delegates the work of processing motion, form, depth, and color to different areas. After taking a scene apart, the brain integrates these subdimensions into the perceived image. How does the brain do this? The answer to this question is the Holy Grail of vision research.

To recognize a face, your brain integrates information projected by your retinas to several visual cortex areas, compares it to stored information, and enables you to recognize the face: Grandmother! Scientists have debated whether this stored information is contained in a single cell or, more likely, distributed over a vast network of cells. Some supercells—

Destroy or disable a neural workstation for a visual subtask, and something peculiar results, as happened to “Mrs. M.” (Hoffman, 1998). Since a stroke damaged areas near the rear of both sides of her brain, she has been unable to perceive movement. People in a room seem “suddenly here or there but I have not seen them moving.” Pouring tea into a cup is a challenge because the fluid appears frozen—

After stroke or surgery has damaged the brain’s visual cortex, others have experienced blindsight. Shown a series of sticks, they report seeing nothing. Yet when asked to guess whether the sticks are vertical or horizontal, their visual intuition typically offers the correct response. When told, “You got them all right,” they are astounded. There is, it seems, a second “mind”—a parallel processing system—

For a 4-


***

“I am…wonderfully made.”

King David, Psalm 139:14

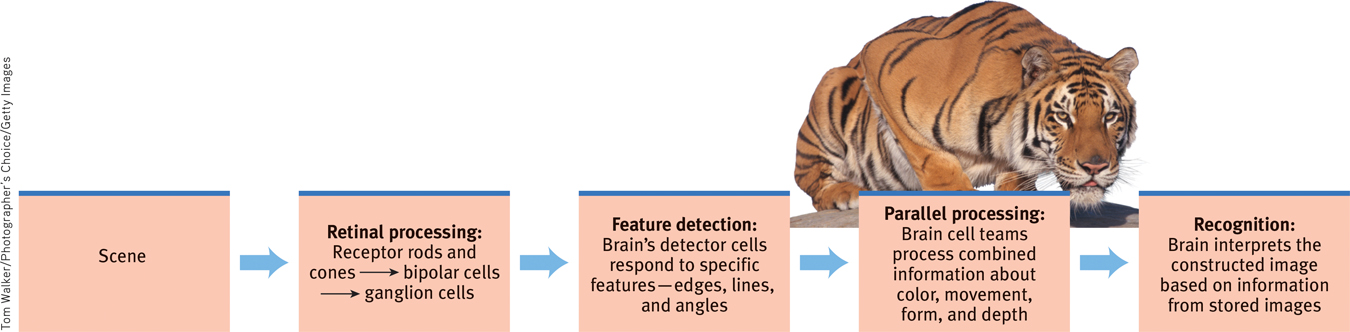

Think about the wonders of visual processing. As you read this page, the letters are transmitted by reflected light rays onto your retina, which triggers a process that sends formless nerve impulses to several areas of your brain, which integrates the information and decodes meaning, thus completing the transfer of information across time and space from my mind to your mind (FIGURE 6.23). That all of this happens instantly, effortlessly, and continuously is indeed awesome. As Roger Sperry (1985) observed, the “insights of science give added, not lessened, reasons for awe, respect, and reverence.”

Figure 6.23 A simplified summary of visual information processing


RETRIEVAL PRACTICE

- What is the rapid sequence of events that occurs when you see and recognize a friend?

Light waves reflect off the person and travel into your eye, where the receptor cells in your retina convert the light waves’ energy into neural impulses sent to your brain. Your brain processes the subdimensions of this visual input–

Perceptual Organization

gestalt an organized whole. Gestalt psychologists emphasized our tendency to integrate pieces of information into meaningful wholes.


It’s one thing to understand how we see colors and shapes. But how do we organize and interpret those sights so that they become meaningful perceptions—

Early in the twentieth century, a group of German psychologists noticed that when given a cluster of sensations, people tend to organize them into a gestalt, a German word meaning a “form” or a “whole.” As we look straight ahead, we cannot separate the perceived scene into our left and right fields of view. It is, at every moment, one whole, seamless scene. Our conscious perception is an integrated whole.

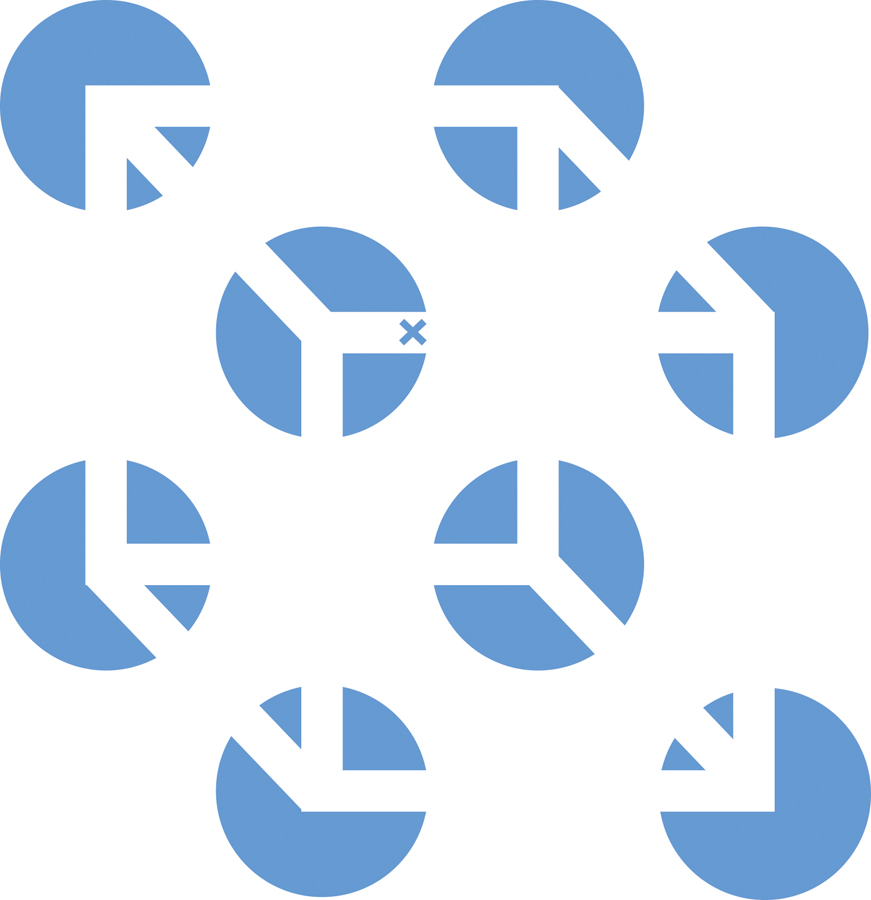

Consider FIGURE 6.24: The individual elements of this figure, called a Necker cube, are really nothing but eight blue circles, each containing three converging white lines. When we view these elements all together, however, we see a cube that sometimes reverses direction. This phenomenon nicely illustrates a favorite saying of Gestalt psychologists: In perception, the whole may exceed the sum of its parts, rather as water differs from its hydrogen and oxygen parts.

Figure 6.24 A Necker cube. What do you see: circles with white lines, or a cube? If you stare at the cube, you may notice that it reverses location, moving the tiny X in the center from the front edge to the back. At times, the cube may seem to float forward, with circles behind it. At other times, the circles may become holes through which the cube appears, as though it were floating behind them. There is far more to perception than meets the eye. (From Bradley et al., 1976.)

Over the years, the Gestalt psychologists demonstrated many principles we use to organize our sensations into perceptions (Wagemans et al., 2012a,b). Underlying all of them is a fundamental truth: Our brain does more than register information about the world. Perception is not just opening a shutter and letting a picture print itself on the brain. We filter incoming information and construct perceptions. Mind matters.

Form Perception

Imagine designing a video-

Figure and Ground To start with, the video-

Figure 6.25 Reversible figure and ground

figure-

grouping the perceptual tendency to organize stimuli into coherent groups.


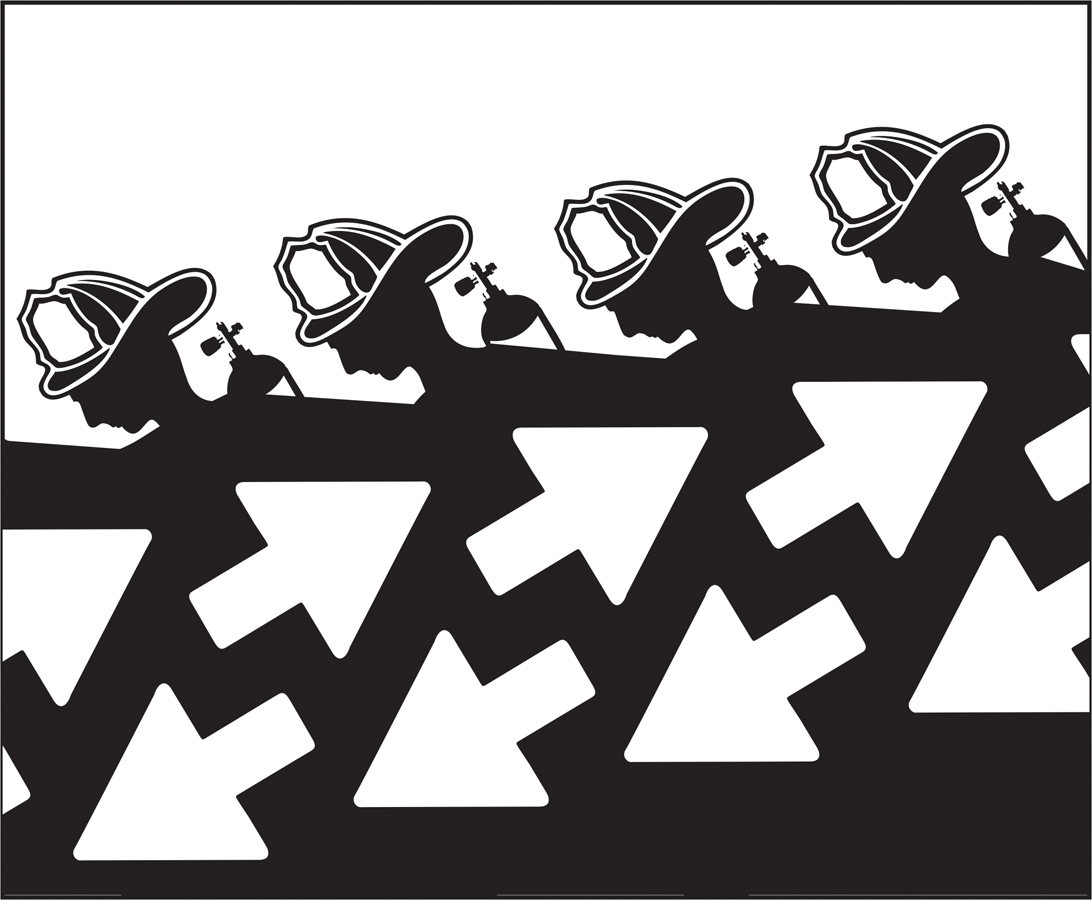

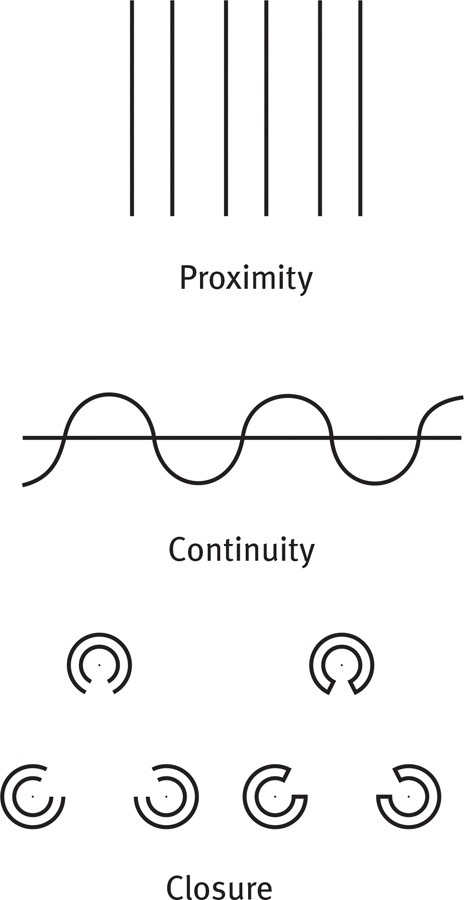

Grouping Having discriminated figure from ground, we (and our video-

- Proximity We group nearby figures together. We see not six separate lines, but three sets of two lines.

- Continuity We perceive smooth, continuous patterns rather than discontinuous ones. This pattern could be a series of alternating semicircles, but we perceive it as two continuous lines: one wavy, one straight.

- Closure We fill in gaps to create a complete, whole object. Thus we assume that the circles on the left are complete but partially blocked by the (illusory) triangle. Add nothing more than little line segments to close off the circles and your brain stops constructing a triangle.
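The proximity principle can be mimicked by a simple rule: start a new group whenever the gap to the previous mark is large. The sketch below is only an analogy for the principle, not a model of how the brain groups. The line positions and the `max_gap` threshold are made-up numbers chosen to match the "six lines seen as three pairs" example.

```python
"""Toy version of the proximity principle: group marks separated by small gaps.

Assumption: positions and the gap threshold are made-up numbers; this is an
analogy for the grouping principle, not a model of neural processing.
"""


def group_by_proximity(positions: list[float], max_gap: float) -> list[list[float]]:
    """Start a new group whenever the gap to the previous mark exceeds max_gap."""
    groups: list[list[float]] = []
    for x in sorted(positions):
        if groups and x - groups[-1][-1] <= max_gap:
            groups[-1].append(x)   # close to the last mark: same group
        else:
            groups.append([x])     # far from the last mark: new group
    return groups


# Six vertical lines: members of a pair are 1 unit apart, pairs are 4 units apart.
lines = [0, 1, 5, 6, 10, 11]
print(group_by_proximity(lines, max_gap=2))  # [[0, 1], [5, 6], [10, 11]]
```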

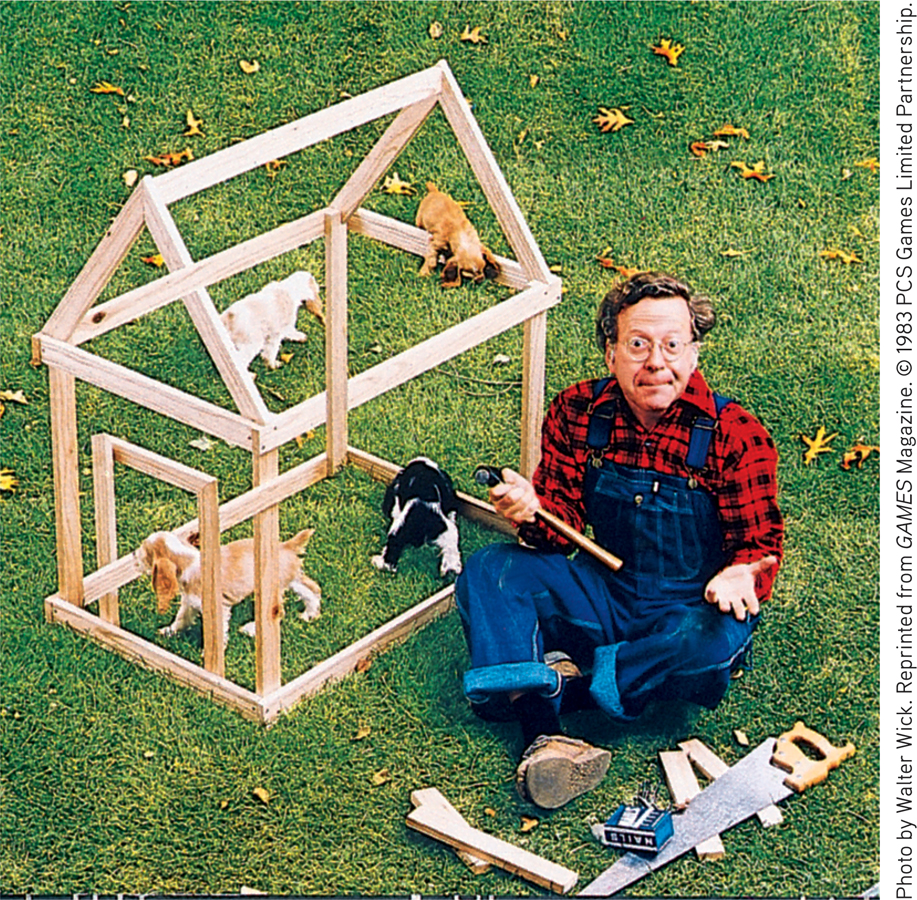

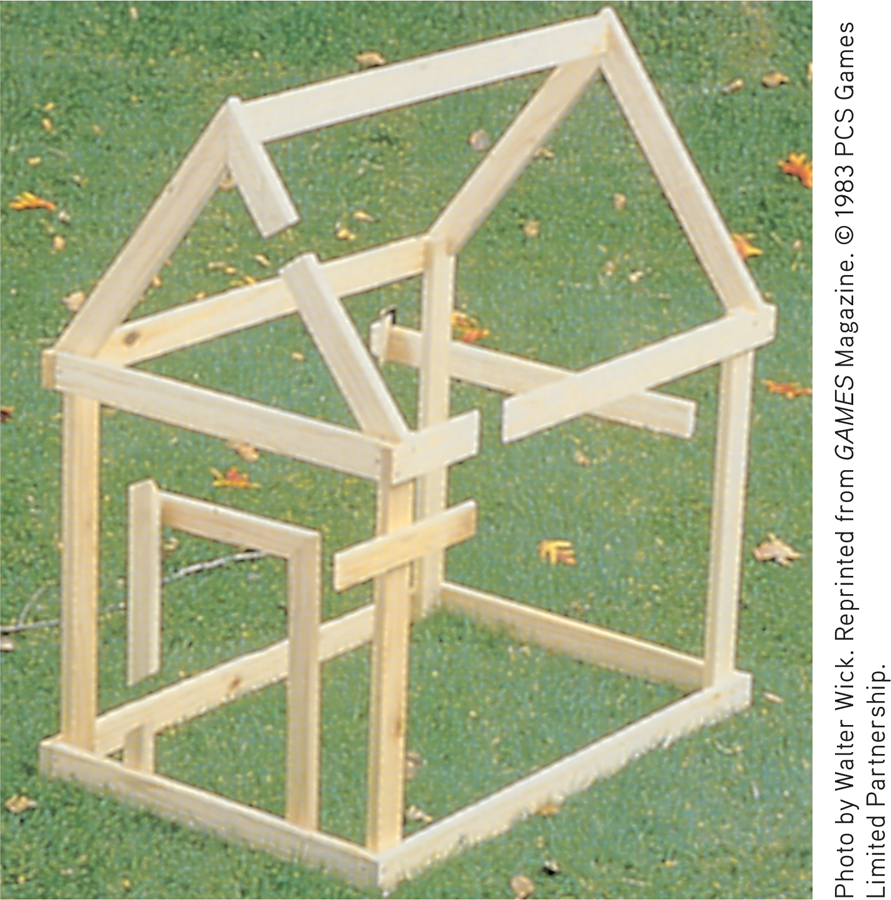

Such principles usually help us construct reality. Sometimes, however, they lead us astray, as when we look at the doghouse in FIGURE 6.26.

Figure 6.26 Grouping principles. What’s the secret to this impossible doghouse? You probably perceive this doghouse as a gestalt—

RETRIEVAL PRACTICE

- In terms of perception, a band’s lead singer would be considered ___________ (figure/ground), and the other musicians would be considered ___________ (figure/ground).

figure; ground

- What do we mean when we say that, in perception, the whole may exceed the sum of its parts?

Gestalt psychologists used this saying to describe our perceptual tendency to organize clusters of sensations into meaningful forms or coherent groups.


depth perception the ability to see objects in three dimensions although the images that strike the retina are two-

Depth Perception

visual cliff a laboratory device for testing depth perception in infants and young animals.


From the two-

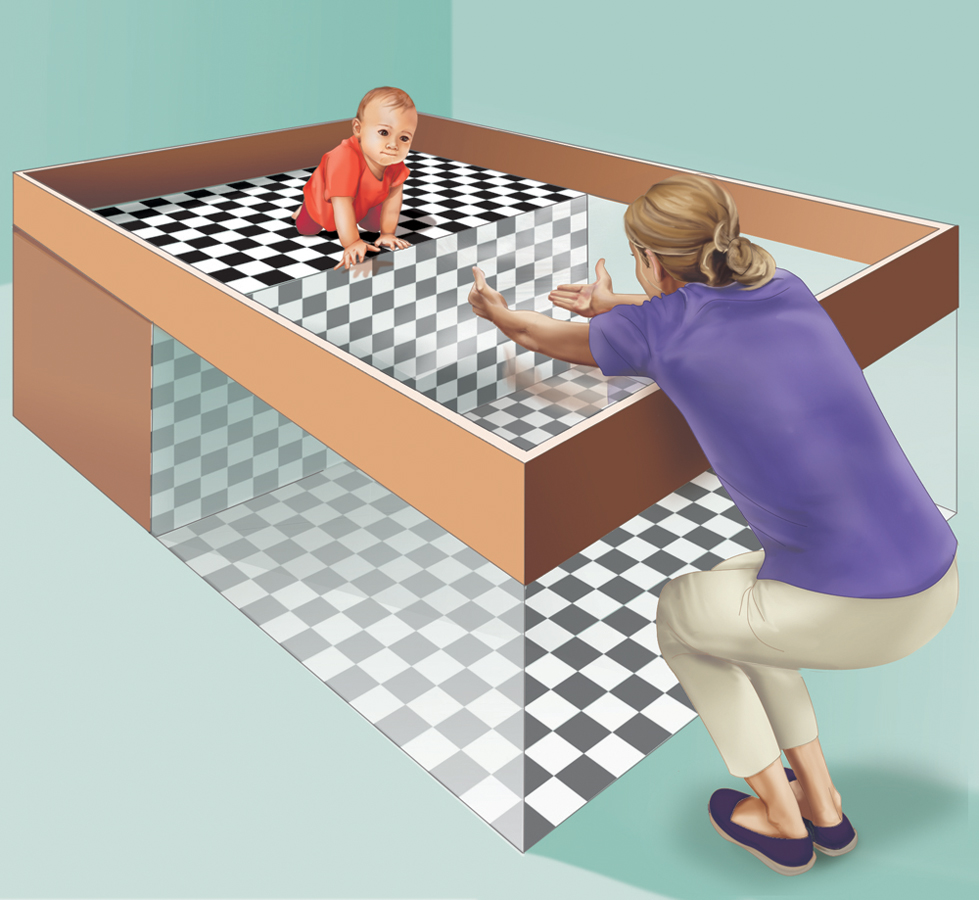

Back in their Cornell University laboratory, Gibson and Walk placed 6-

Figure 6.27 Visual cliff. Eleanor Gibson and Richard Walk devised this miniature cliff with a glass-

binocular cues depth cues, such as retinal disparity, that depend on the use of two eyes.

Had they learned to perceive depth? Learning seems to be part of the answer because crawling, no matter when it begins, seems to increase infants’ wariness of heights (Campos et al., 1992). As infants become mobile, their experience leads them to fear heights (Adolph et al., 2014).

How do we do it? How do we transform two differing two-

retinal disparity a binocular cue for perceiving depth: By comparing images from the retinas in the two eyes, the brain computes distance—

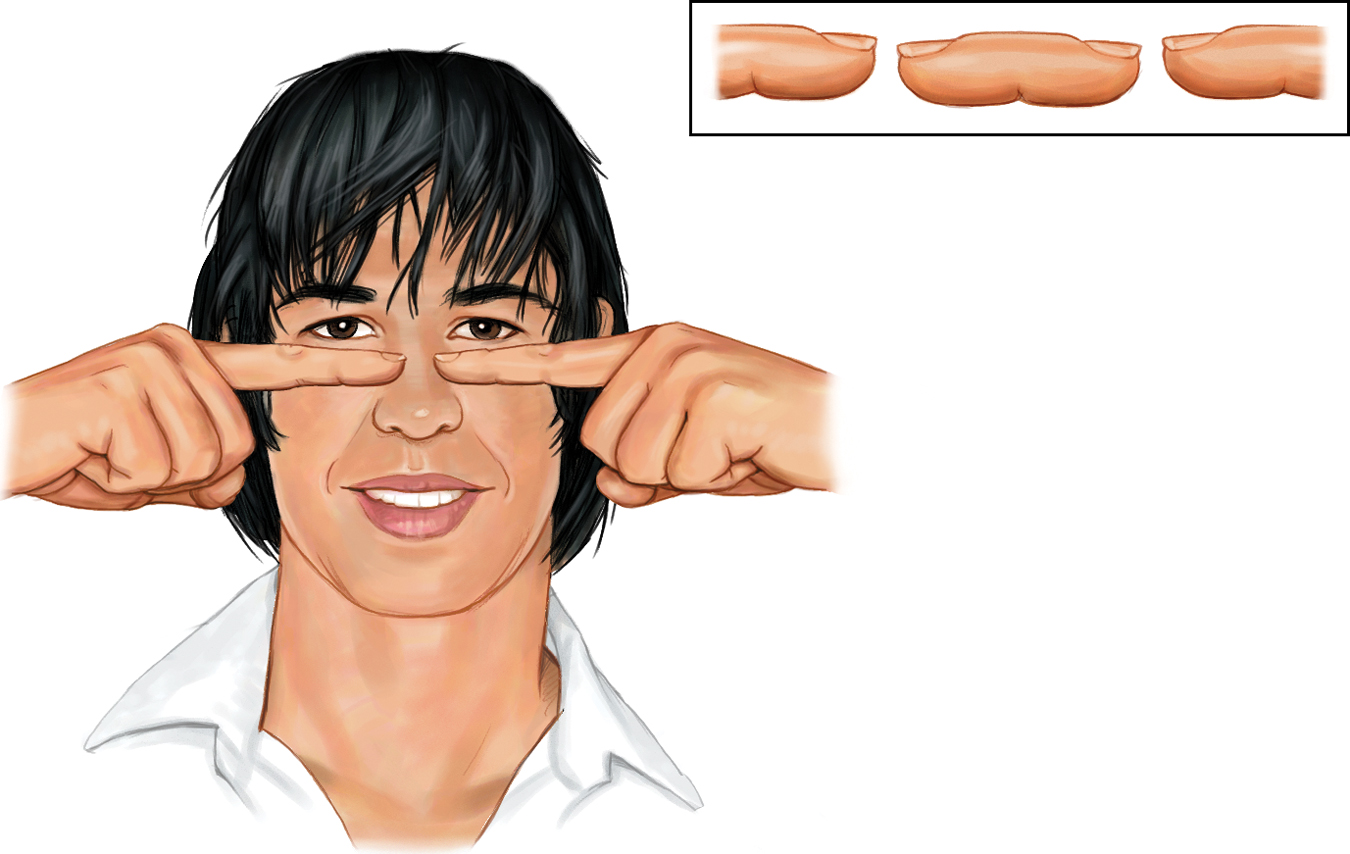

Binocular Cues Try this: With both eyes open, hold two pens or pencils in front of you and touch their tips together. Now do so with one eye closed. With one eye, the task becomes noticeably more difficult, demonstrating the importance of binocular cues in judging the distance of nearby objects. Two eyes are better than one.

Because your eyes are about 2½ inches apart, your retinas receive slightly different images of the world. By comparing these two images, your brain can judge how close an object is to you. The greater the retinal disparity, or difference between the two images, the closer the object. Try it. Hold your two index fingers, with the tips about half an inch apart, directly in front of your nose, and your retinas will receive quite different views. If you close one eye and then the other, you can see the difference. (Bring your fingers close and you can create a finger sausage, as in FIGURE 6.28.) At a greater distance—

Figure 6.28 The floating finger sausage. Hold your two index fingers about 5 inches in front of your eyes, with their tips half an inch apart. Now look beyond them and note the weird result. Move your fingers out farther and the retinal disparity—


Carnivorous animals, including humans, have eyes that enable forward focus on prey and offer binocular vision-

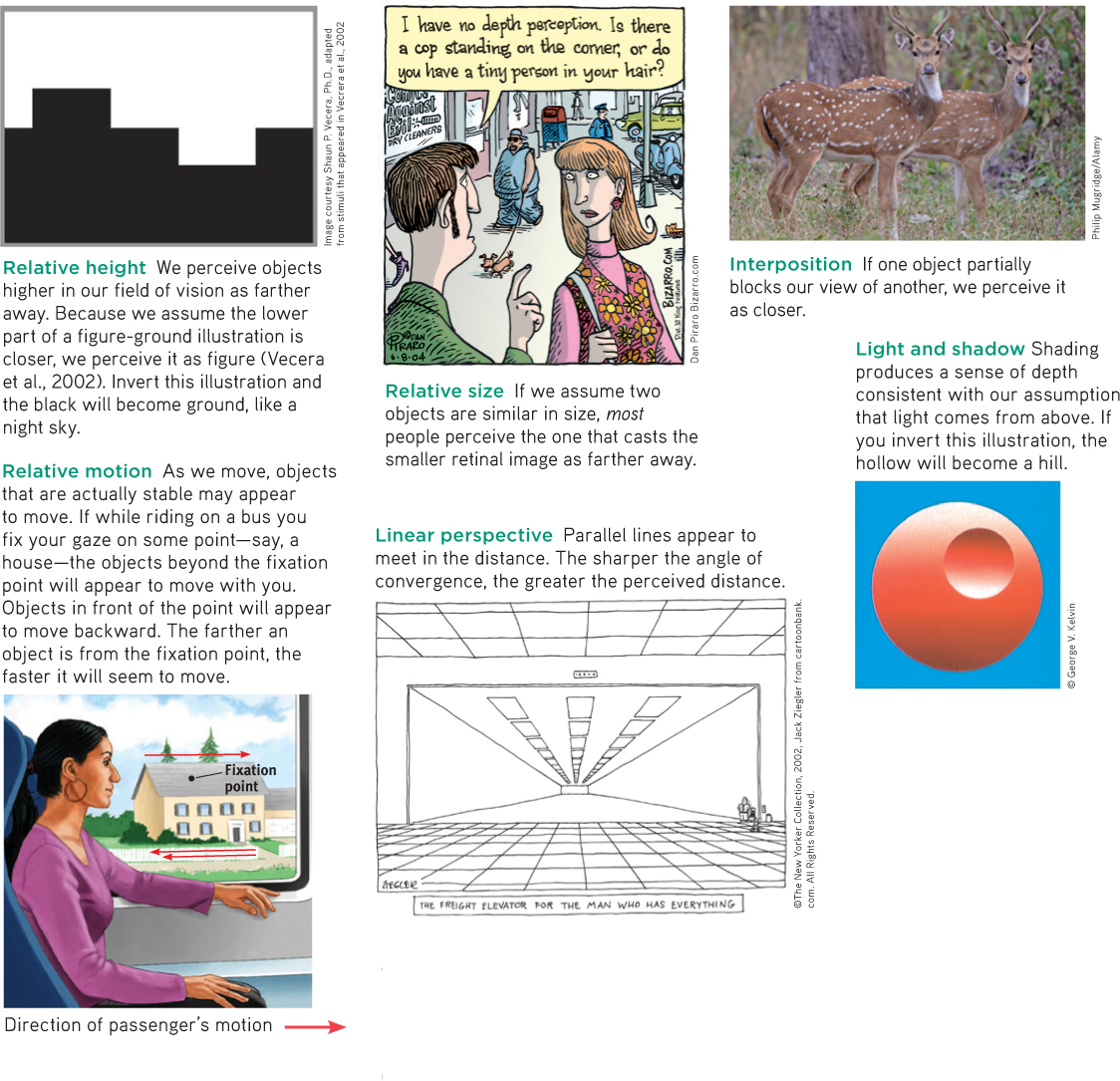

monocular cues depth cues, such as interposition and linear perspective, available to either eye alone.

We could easily build this feature into our video-

For animated demonstrations and explanations of these cues, visit LaunchPad’s Concept Practice: Depth Cues.


Monocular Cues How do we judge whether a person is 10 or 100 meters away? Retinal disparity won’t help us here, because there won’t be much difference between the images cast on our right and left retinas. At such distances, we depend on monocular cues (depth cues available to each eye separately). See FIGURE 6.29 for some examples.
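Why retinal disparity fades at a distance can be made concrete with a little geometry. The sketch assumes the eyes are 6.35 cm (about 2½ inches, as stated earlier) apart and that the object is straight ahead. It uses the angle between the two eyes' lines of sight as a simple proxy for how different the two retinal images are. That proxy, and the names below, are illustrative assumptions.

```python
"""How the difference between the two eyes' views shrinks with distance.

Assumptions: eyes 6.35 cm apart (about 2.5 inches) and an object straight
ahead. The angle between the two lines of sight serves as a rough proxy for
the disparity between the two retinal images.
"""

import math

EYE_SEPARATION_M = 0.0635  # about 2.5 inches


def convergence_angle_deg(distance_m: float) -> float:
    """Angle (degrees) between the two eyes' lines of sight to a point straight ahead."""
    return math.degrees(2 * math.atan((EYE_SEPARATION_M / 2) / distance_m))


for distance in (0.13, 10, 100):  # roughly 5 inches, then 10 and 100 meters
    print(f"{distance:>6} m: {convergence_angle_deg(distance):.3f} degrees")
```

The angle falls off roughly in proportion to 1/distance. Between 10 and 100 meters, the two eyes' views differ by only a small fraction of a degree, so monocular cues must do the work.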

Figure 6.29 Monocular depth cues

RETRIEVAL PRACTICE

- How do we normally perceive depth?

We are normally able to perceive depth thanks to (1) binocular cues (which are based on our retinal disparity), and (2) monocular cues (which include relative height, relative size, interposition, linear perspective, light and shadow, and relative motion).


Motion Perception

phi phenomenon an illusion of movement created when two or more adjacent lights blink on and off in quick succession.

Imagine that you could perceive the world as having color, form, and depth but that you could not see motion. Not only would you be unable to bike or drive, you would have trouble writing, eating, and walking.

Normally your brain computes motion based partly on its assumption that shrinking objects are retreating (not getting smaller) and enlarging objects are approaching. But you are imperfect at motion perception. In young children, this ability to correctly perceive approaching (and enlarging) vehicles is not yet fully developed, which puts them at risk for pedestrian accidents (Wann et al., 2011). But it’s not just children who have occasional difficulties with motion perception. Our adult brains are sometimes tricked into believing what they are not seeing. When large and small objects move at the same speed, the large objects appear to move more slowly. Thus, trains seem to move slower than cars, and jumbo jets seem to land more slowly than little jets.

Our brain also perceives a rapid series of slightly varying images as continuous movement (a phenomenon called stroboscopic movement). As film animators know well, a superfast slide show of 24 still pictures a second will create an illusion of movement. We construct that motion in our heads, just as we construct movement in blinking marquees and holiday lights. We perceive two adjacent stationary lights blinking on and off in quick succession as one single light moving back and forth. Lighted signs exploit this phi phenomenon with a succession of lights that creates the impression of, say, a moving arrow.
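A lighted sign's "moving" arrow is just a timed sequence of stationary lamps switching on and off. The sketch below generates such a chase sequence. The lamp count and the function name are hypothetical. The 1/24-second tick echoes the film frame rate mentioned above, and real signs use a range of timings.

```python
"""Sketch of a 'chasing lights' sign that relies on the phi phenomenon.

Assumptions: a hypothetical row of lamps, one lit at a time, advancing one
position per tick; the tick length borrows the 24-frames-per-second film rate.
"""

FRAME_SECONDS = 1 / 24  # about 42 milliseconds per still image


def chase_frames(num_lamps: int, num_ticks: int) -> list[str]:
    """Return one text frame per tick: '*' is a lit lamp, '.' is a dark one."""
    frames = []
    for tick in range(num_ticks):
        lit = tick % num_lamps  # the lit position steps right, then wraps around
        frames.append("".join("*" if i == lit else "." for i in range(num_lamps)))
    return frames


for tick, frame in enumerate(chase_frames(num_lamps=5, num_ticks=6)):
    print(f"t = {tick * FRAME_SECONDS * 1000:5.1f} ms  {frame}")
```

No lamp ever moves. Each frame simply lights a neighboring lamp, and the viewer's brain constructs a single light sliding along the row.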

Perceptual Constancy


“From there to here, from here to there, funny things are everywhere.”

Dr. Seuss, One Fish, Two Fish, Red Fish, Blue Fish, 1960

perceptual constancy perceiving objects as unchanging (having consistent color, brightness, shape, and size) even as illumination and retinal images change.

So far, we have noted that our video-

“Sometimes I wonder: Why is that Frisbee getting bigger? And then it hits me.”

Anonymous

color constancy perceiving familiar objects as having consistent color, even if changing illumination alters the wavelengths reflected by the objects.

Color and Brightness Constancies Our experience of color depends on an object’s context. This would be clear if you viewed an isolated tomato through a paper tube over the course of a day. The tomato’s color would seem to change as the light—

Though we take color constancy for granted, this ability is truly remarkable. A blue poker chip under indoor lighting reflects wavelengths that match those reflected by a sunlit gold chip (Jameson, 1985). Yet bring a bluebird indoors and it won’t look like a goldfinch. The color is not in the bird’s feathers. You and I see color thanks to our brain’s computations of the light reflected by an object relative to the objects surrounding it.
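Computing color "relative to the objects surrounding it" can be illustrated with a simple normalization. The sketch below uses a von Kries-style correction, which divides each color channel by that channel's average across the scene. This is a textbook simplification swapped in for illustration, not a claim about the exact computation the brain performs. All RGB values and the lamp's tint are made up.

```python
"""Toy color-constancy computation: judge a surface relative to its surroundings.

Assumption: a von Kries-style correction (divide each channel by the scene
average), swapped in as an illustration. All RGB numbers are made up.
"""


def relative_color(surface: tuple[float, float, float],
                   scene: list[tuple[float, float, float]]) -> tuple[float, ...]:
    """Scale each channel of `surface` by that channel's average across `scene`."""
    n = len(scene)
    averages = [sum(s[c] for s in scene) / n for c in range(3)]
    return tuple(round(surface[c] / averages[c], 2) for c in range(3))


# A blue chip (first entry) and its surroundings in daylight.
daylight_scene = [(0.2, 0.3, 0.8), (0.5, 0.5, 0.5), (0.7, 0.6, 0.4)]
# The same scene under a hypothetical yellowish lamp: more red, less blue reflected.
lamp_scene = [(r * 1.6, g * 1.2, b * 0.5) for r, g, b in daylight_scene]

print(lamp_scene[0])  # the raw light from the chip has shifted toward yellow
print(relative_color(daylight_scene[0], daylight_scene))
print(relative_color(lamp_scene[0], lamp_scene))  # same relative color as in daylight
```

The raw wavelengths reaching the eye change with the lighting. The chip's color relative to its surroundings does not change.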

Figure 6.30 The solution. Another view of the impossible doghouse in Figure 6.26 reveals the secrets of this illusion. From the photo angle in Figure 6.26, the grouping principle of closure leads us to perceive the boards as continuous.

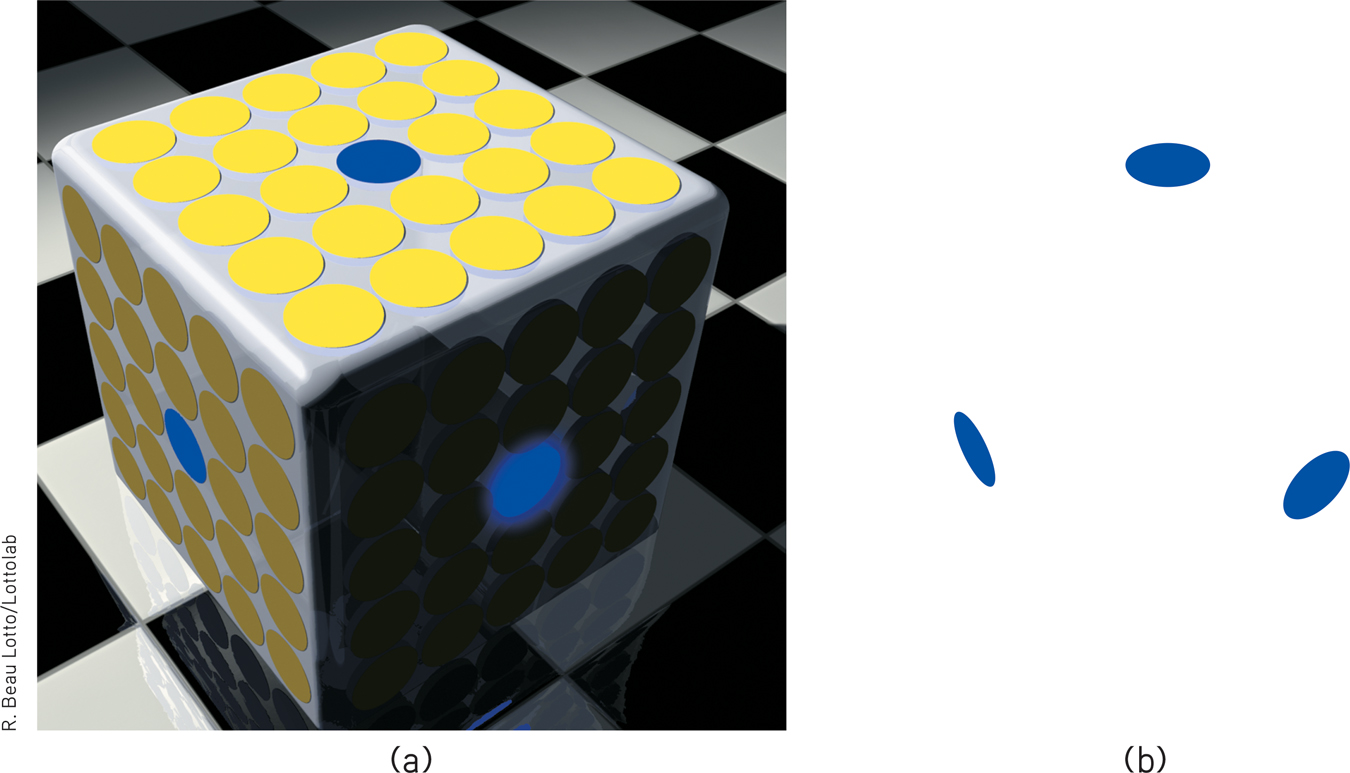

FIGURE 6.31 below dramatically illustrates the ability of a blue object to appear very different in three different contexts. Yet we have no trouble seeing these disks as blue. Nor does knowing the truth—

Figure 6.31 Color depends on context. (a) Believe it or not, these three blue disks are identical in color. (b) Remove the surrounding context and see what results.

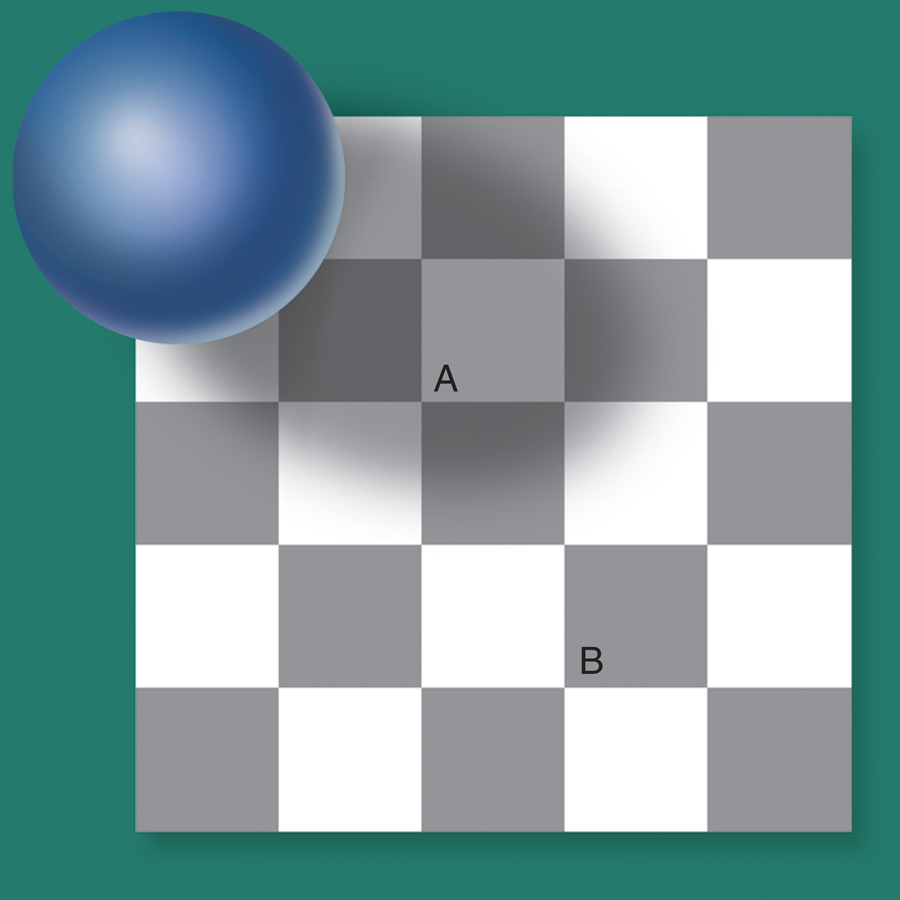

Brightness constancy (also called lightness constancy) similarly depends on context. We perceive an object as having a constant brightness even while its illumination varies. This perception of constancy depends on relative luminance—the amount of light an object reflects relative to its surroundings (FIGURE 6.32). White paper reflects 90 percent of the light falling on it; black paper, only 10 percent. Although a black paper viewed in sunlight may reflect 100 times more light than does a white paper viewed indoors, it will still look black (McBurney & Collings, 1984). But if you view sunlit black paper through a narrow tube so nothing else is visible, it may look gray, because in bright sunshine it reflects a fair amount of light. View it without the tube and it is again black, because it reflects much less light than the objects around it.
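The paragraph's numbers imply how much brighter sunlight must be than indoor light for the comparison to hold. Let $E$ stand for the illumination falling on the paper. Use the stated reflectances (10 percent for black, 90 percent for white) and treat the "100 times" figure as an exact ratio, which is an assumption for this sketch:

$$
0.10\,E_{\text{sun}} = 100 \times 0.90\,E_{\text{indoor}}
\quad\Longrightarrow\quad
\frac{E_{\text{sun}}}{E_{\text{indoor}}} = \frac{100 \times 0.90}{0.10} = 900
$$

Under any single illumination, though, the ratio between the two papers stays fixed: $(0.90\,E)/(0.10\,E) = 9$. That stable ratio to the surroundings, rather than the absolute amount of light, is the kind of relative information the paragraph says our perception relies on.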

Figure 6.32 Relative luminance. Because of its surrounding context, we perceive Square A as lighter than Square B. But believe it or not, they are identical. To channel comedian Richard Pryor, “Who you gonna believe: me, or your lying eyes?” If you believe your lying eyes—


This principle—

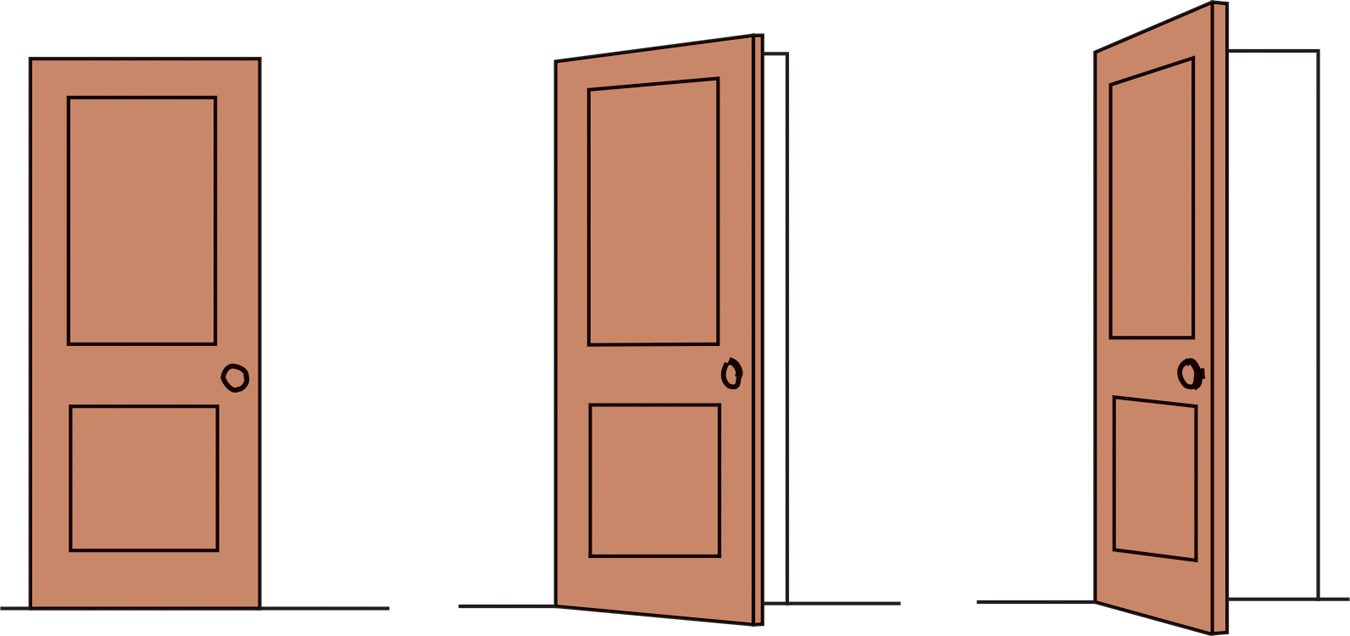

Shape and Size Constancies Sometimes an object whose actual shape cannot change seems to change shape with the angle of our view (FIGURE 6.33). More often, thanks to shape constancy, we perceive the form of familiar objects, such as the door in FIGURE 6.34, as constant even while our retinas receive changing images of them. Our brain manages this feat thanks to visual cortex neurons that rapidly learn to associate different views of an object (Li & DiCarlo, 2008).

Figure 6.33 Perceiving shape. Do the tops of these tables have different dimensions? They appear to. But—

Thanks to size constancy, we perceive objects as having a constant size, even while our distance from them varies. We assume a car is large enough to carry people, even when we see its tiny image from two blocks away. This assumption also illustrates the close connection between perceived distance and perceived size. Perceiving an object’s distance gives us cues to its size. Likewise, knowing its general size—

Even in size-

Figure 6.34

Figure 6.34 Shape constancy. A door casts an increasingly trapezoidal image on our retinas as it opens. Yet we still perceive it as rectangular.

For at least 22 centuries, scholars have wondered (Hershenson, 1989). One reason is that monocular cues to objects’ distances make the horizon Moon seem farther away. If it’s farther away, our brain assumes, it must be larger than the Moon high in the night sky (Kaufman & Kaufman, 2000). Take away the distance cue by looking at the horizon Moon through a paper tube, and the Moon will immediately shrink.

Size-

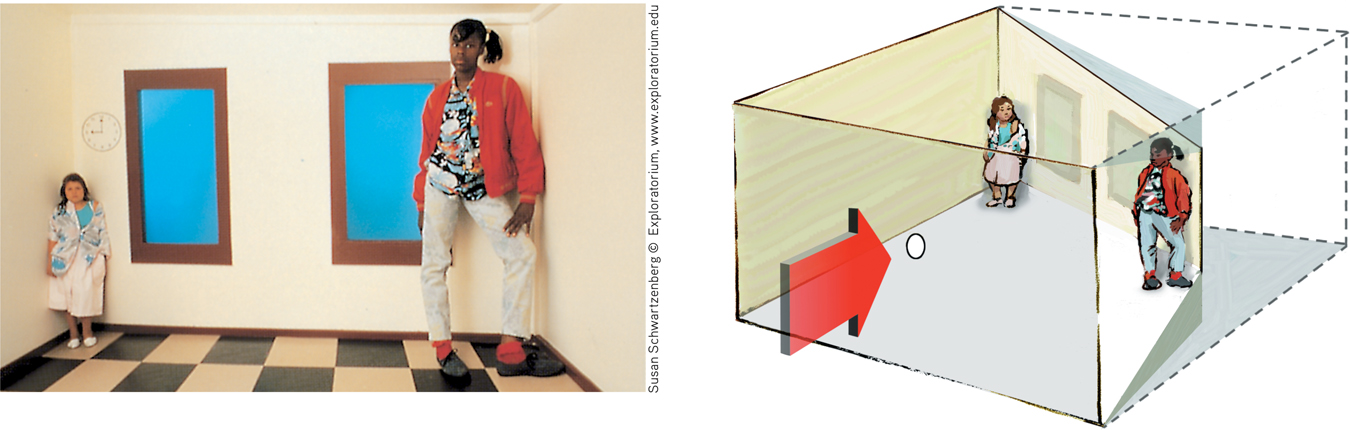

Figure 6.35

Figure 6.35 The illusion of the shrinking and growing girls. This distorted room, designed by Adelbert Ames, appears to have a normal rectangular shape when viewed through a peephole with one eye. The girl in the right corner appears disproportionately large because we judge her size based on the false assumption that she is the same distance away as the girl in the far corner.

Perceptual illusions reinforce a fundamental lesson: Perception is not merely a projection of the world onto our brain. Rather, our sensations are disassembled into information bits that our brain then reassembles into its own functional model of the external world. During this reassembly process, our assumptions—

To experience more visual illusions, and to understand what they reveal about how you perceive the world, visit LaunchPad’s PsychSim 6: Visual Illusions.

***

Form perception, depth perception, motion perception, and perceptual constancies illuminate how we organize our visual experiences. Perceptual organization applies to our other senses, too. Listening to an unfamiliar language, we have trouble hearing where one word stops and the next one begins. Listening to our own language, we automatically hear distinct words. This, too, reflects perceptual organization. But it is more, for we even organize a string of letters—

Perceptual Interpretation

“Let us then suppose the mind to be, as we say, white paper void of all characters, without any ideas: How comes it to be furnished?…To this I answer, in one word, from EXPERIENCE.”

John Locke, An Essay Concerning Human Understanding, 1690

Philosophers have debated whether our perceptual abilities should be credited to our nature or our nurture. To what extent do we learn to perceive? German philosopher Immanuel Kant (1724–

Experience and Visual Perception

6-

Restored Vision and Sensory Restriction Writing to John Locke, William Molyneux wondered whether “a man born blind, and now adult, taught by his touch to distinguish between a cube and a sphere” could, if made to see, visually distinguish the two. Locke’s answer was No, because the man would never have learned to see the difference.

Molyneux’s hypothetical case has since been put to the test with a few dozen adults who, though blind from birth, later gained sight (Gregory, 1978; von Senden, 1932). Most were born with cataracts—

Seeking to gain more control than is provided by clinical cases, researchers have outfitted infant kittens and monkeys with goggles through which they could see only diffuse, unpatterned light (Wiesel, 1982). After infancy, when the goggles were removed, these animals exhibited perceptual limitations much like those of humans born with cataracts. They could distinguish color and brightness, but not the form of a circle from that of a square. Their eyes had not degenerated; their retinas still relayed signals to their visual cortex. But lacking stimulation, the cortical cells had not developed normal connections. Thus, the animals remained functionally blind to shape. Experience guides, sustains, and maintains the brain’s neural organization that enables our perceptions.

In both humans and animals, similar sensory restrictions later in life do no permanent harm. When researchers cover the eye of an adult animal for several months, its vision will be unaffected after the eye patch is removed. When surgeons remove cataracts that develop during late adulthood, most people are thrilled at the return to normal vision.

The effect of sensory restriction on infant cats, monkeys, and humans suggests that for normal sensory and perceptual development, there is a critical period—an optimal period when exposure to certain stimuli or experiences is required. Surgery on blind children in India reveals that children blind from birth can benefit from the removal of cataracts. But the younger they are, the more they will benefit, and even then their visual acuity (sharpness) may never be normal (Sinha, 2013). Early nurture sculpts what nature has endowed. In less dramatic ways, it continues to do so throughout our lives. Our visual experience matters. For example, despite concerns about their social costs, playing action video games sharpens spatial skills such as visual attention, eye-

Experiments on the perceptual limitations and advantages produced by early sensory deprivation provide a partial answer to the enduring question about experience: Does the effect of early experience last a lifetime? For some aspects of perception, the answer is clearly Yes: “Use it soon or lose it.” We retain the imprint of some early sensory experiences far into the future.

perceptual adaptation in vision, the ability to adjust to an artificially displaced or even inverted visual field.

Perceptual Adaptation Given a new pair of glasses, we may feel slightly disoriented, even dizzy. Within a day or two, we adjust. Our perceptual adaptation to changed visual input makes the world seem normal again. But imagine a far more dramatic new pair of glasses—

Could you adapt to this distorted world? Baby chicks cannot. When fitted with such lenses, they continue to peck where food grains seem to be (Hess, 1956; Rossi, 1968). But we humans adapt to distorting lenses quickly. Within a few minutes your throws would again be accurate, your stride on target. Remove the lenses and you would experience an aftereffect: At first your throws would err in the opposite direction, sailing off to the right; but again, within minutes you would readapt.

Indeed, given an even more radical pair of glasses—

At first, when Stratton wanted to walk, he found himself searching for his feet, which were now “up.” Eating was nearly impossible. He became nauseated and depressed. But he persisted, and by the eighth day he could comfortably reach for an object in the right direction and walk without bumping into things. When Stratton finally removed the headgear, he readapted quickly.

In later experiments, people wearing the optical gear have even been able to ride a motorcycle, ski the Alps, and fly an airplane (Dolezal, 1982; Kohler, 1962). The world around them still seemed above their heads or on the wrong side. But by actively moving about in these topsy-