27.3 Forming Good and Bad Decisions and Judgments

27-

When making each day’s hundreds of judgments and decisions (Is it worth the bother to take a jacket? Can I trust this person? Should I shoot the basketball or pass to the player who’s hot?), we seldom take the time and effort to reason systematically. We just follow our intuition, our fast, automatic, unreasoned feelings and thoughts. After interviewing policy makers in government, business, and education, social psychologist Irving Janis (1986) concluded that they “often do not use a reflective problem-

The Availability Heuristic

When we need to act quickly, the mental shortcuts we call heuristics enable snap judgments. Thanks to our mind’s automatic information processing, intuitive judgments are instantaneous. They also are usually effective (Gigerenzer & Sturm, 2012). However, research by cognitive psychologists Amos Tversky and Daniel Kahneman (1974) showed how these generally helpful shortcuts can lead even the smartest people into dumb decisions.3 The availability heuristic operates when we estimate the likelihood of events based on how mentally available they are—

“Kahneman and his colleagues and students have changed the way we think about the way people think.”

American Psychological Association President Sharon Brehm, 2007

The availability heuristic can distort our judgments of other people, too. Anything that makes information pop into mind—

“In creating these problems, we didn’t set out to fool people. All our problems fooled us, too.” Amos Tversky (1985)


“Intuitive thinking [is] fine most of the time…. But sometimes that habit of mind gets us in trouble.” Daniel Kahneman (2005)


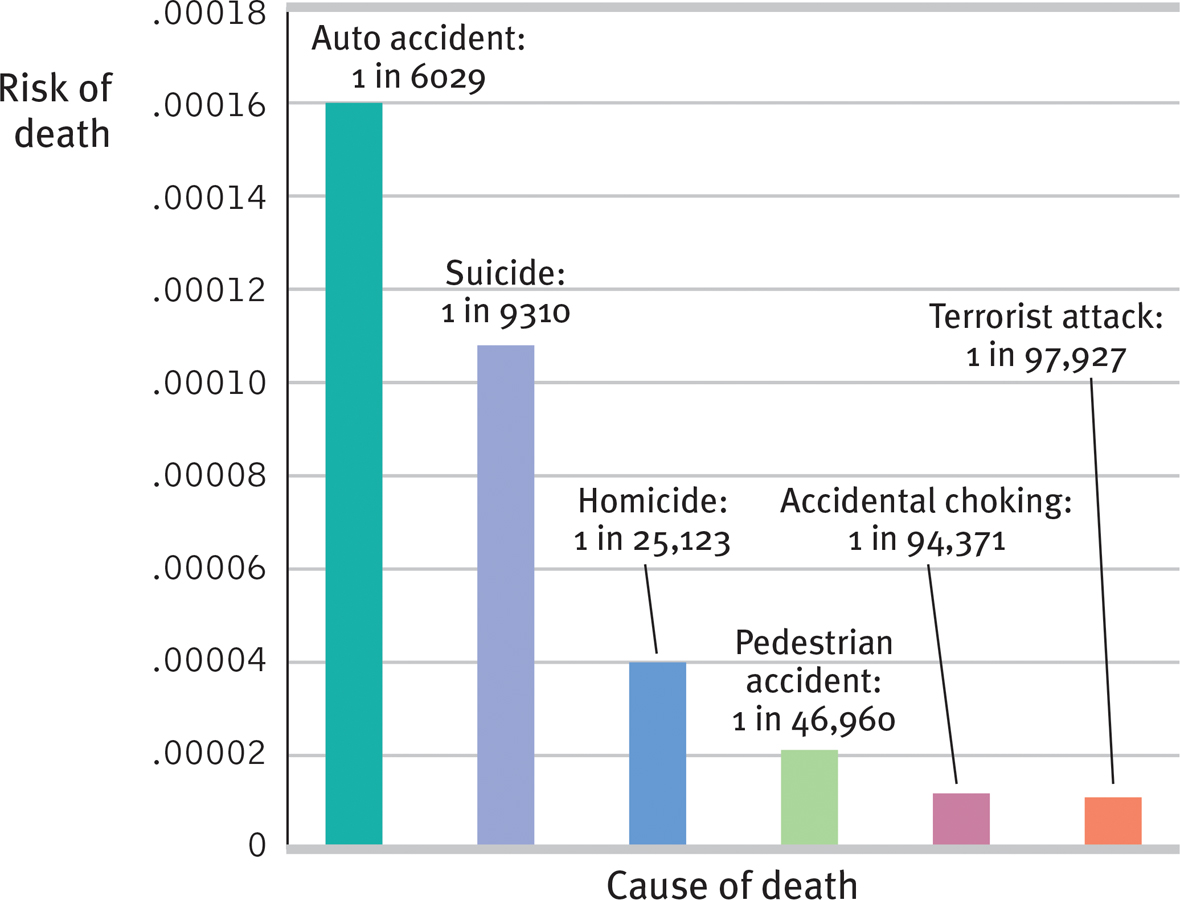

Even during that horrific year, terrorist acts claimed comparatively few lives. Yet when the statistical reality of greater dangers (see FIGURE 27.6) was pitted against the 9/11 terror, the memorable case won: Emotion-

Figure 27.6 Risk of death from various causes in the United States, 2001 (Data assembled from various government sources by Randall Marshall et al., 2007.)

“Don’t believe everything you think.”

Bumper sticker

We often fear the wrong things (see Thinking Critically About: The Fear Factor, below). We fear flying because we visualize air disasters. We fear letting our sons and daughters walk to school because we see mental snapshots of abducted and brutalized children. We fear swimming in ocean waters because we replay Jaws with ourselves as victims. Even just passing by a person who sneezes and coughs heightens our perceptions of various health risks (Lee et al., 2010). And so, thanks to such readily available images, we come to fear extremely rare events.

THINKING CRITICALLY ABOUT: The Fear Factor—Why We Fear the Wrong Things

27-4 What factors contribute to our fear of unlikely events?

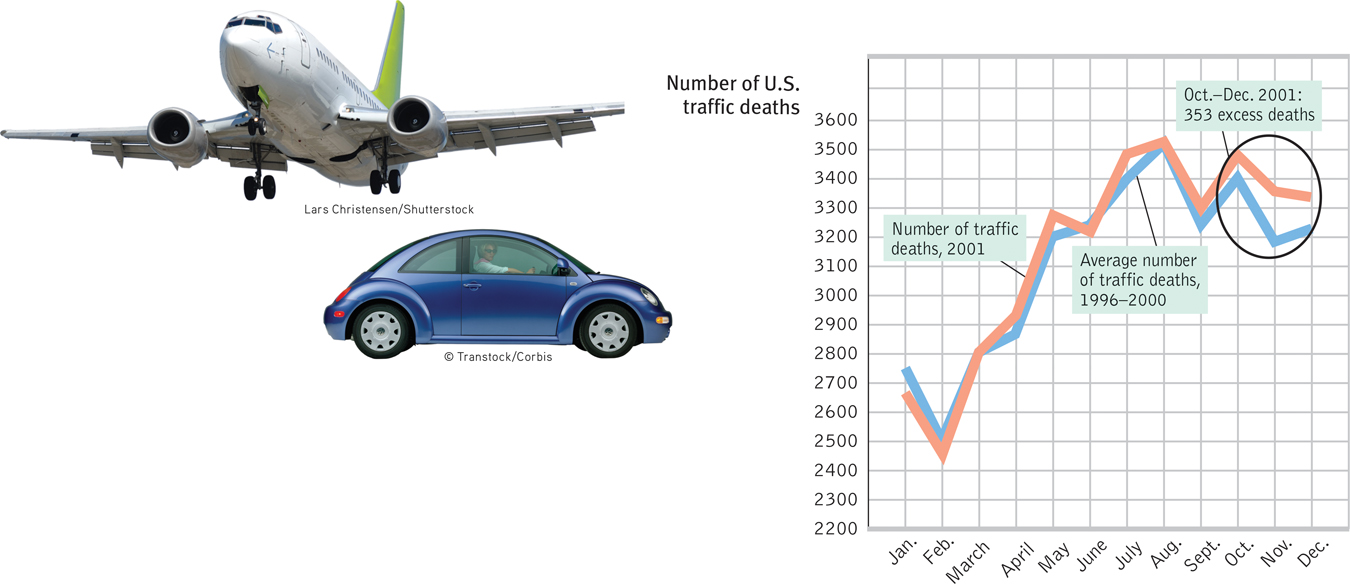

After the 9/11 attacks, many people feared flying more than driving. In a 2006 Gallup survey, only 40 percent of Americans reported being “not afraid at all” to fly. Yet from 2009 to 2011 Americans were—

In a late 2001 essay, I [DM] calculated that if—

Figure 27.7 Scared onto deadly highways. Images of 9/11 etched a sharper image in American minds than did the millions of fatality-

Why do we in so many ways fear the wrong things? Why do so many American parents fear school shootings, when their child is more likely to be killed by lightning (Ripley, 2013)? Psychologists have identified four influences that feed fear and cause us to ignore higher risks.

- We fear what our ancestral history has prepared us to fear. Human emotions were road tested in the Stone Age. Our old brain prepares us to fear yesterday’s risks: snakes, lizards, and spiders (which combined now kill a tiny fraction of the number killed by modern-day threats, such as cars and cigarettes). Yesterday’s risks also prepare us to fear confinement and heights, and therefore flying.

- We fear what we cannot control. Driving we control; flying we do not.

- We fear what is immediate. The dangers of flying are mostly telescoped into the moments of takeoff and landing. The dangers of driving are diffused across many moments to come, each trivially dangerous.

- Thanks to the availability heuristic, we fear what is most readily available in memory. Vivid images, like that of United Flight 175 slicing into the World Trade Center, feed our judgments of risk. Thousands of safe car trips have extinguished our anxieties about driving. Shark attacks kill about one American per year, while heart disease kills 800,000—but it’s much easier to visualize a shark bite, and thus many people fear sharks more than cigarettes (Daley, 2011). Similarly, we remember (and fear) widespread disasters (hurricanes, tornadoes, earthquakes) that kill people dramatically, in bunches. But we fear too little the less dramatic threats that claim lives quietly, one by one, continuing into the distant future. Horrified citizens and commentators renewed calls for U.S. gun control in 2012, after 20 children and 6 adults were slain in a Connecticut elementary school, even though more Americans than that are murdered by guns every day, less dramatically, one by one. Philanthropist Bill Gates has noted that each year a half-million children worldwide die from rotavirus. This is the equivalent of four 747s full of children dying every day, and we hear nothing of it (Glass, 2004).
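The rotavirus comparison in the last item can be checked with quick arithmetic. The sketch below is only a rough illustration: it takes the half-million annual figure and the four planes per day from the text, assumes a 365-day year with deaths spread evenly across it, and works out how many children each daily plane would have to carry. The function and variable names are hypothetical.

```python
def deaths_per_day(deaths_per_year: int, days_per_year: int = 365) -> float:
    """Spread an annual death toll evenly across the days of a year."""
    return deaths_per_year / days_per_year


rotavirus_per_year = 500_000  # "a half-million children" (from the text)
planes_per_day = 4            # "four 747s ... every day" (from the text)

per_day = deaths_per_day(rotavirus_per_year)
per_plane = per_day / planes_per_day

print(f"{per_day:.0f} deaths per day, about {per_plane:.0f} per plane")
# prints "1370 deaths per day, about 342 per plane"
```

Under these assumptions each plane would carry roughly 340 children, which is in the general range of a large passenger jet's seating, so the image works as an order-of-magnitude comparison rather than an exact count.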

The news, and our own memorable experiences, can make us disproportionately fearful of infinitesimal risks. As one risk analyst explained, “If it’s in the news, don’t worry about it. The very definition of news is ‘something that hardly ever happens’” (Schneier, 2007).

“Fearful people are more dependent, more easily manipulated and controlled, more susceptible to deceptively simple, strong, tough measures and hard-

Media researcher George Gerbner to U.S. Congressional Subcommittee on Communications, 1981

RETRIEVAL PRACTICE

- Why can news be described as “something that hardly ever happens”? How does knowing this help us assess our fears?

If a tragic event such as a plane crash makes the news, it is noteworthy and unusual, unlike much more common bad events, such as traffic accidents. Knowing this, we can worry less about unlikely events and think more about improving the safety of our everyday activities. (For example, we can wear a seat belt when in a vehicle and use the crosswalk when walking.)

To offer a vivid depiction of climate change, Cal Tech scientists created an interactive map of global temperatures over the past 120 years (see www.tinyurl.com/TempChange).

Meanwhile, the lack of comparably available images of global climate change—

Dramatic outcomes make us gasp; probabilities we hardly grasp. As of 2013, some 40 nations—

Overconfidence

Sometimes our judgments and decisions go awry simply because we are more confident than correct. Across various tasks, people overestimate their performance (Metcalfe, 1998). If 60 percent of people correctly answer a factual question, such as “Is absinthe a liqueur or a precious stone?,” they will typically average 75 percent confidence (Fischhoff et al., 1977). (It’s a licorice-

It was an overconfident BP that, before its exploded drilling platform spewed oil into the Gulf of Mexico, downplayed safety concerns, and then downplayed the spill’s magnitude (Mohr et al., 2010; Urbina, 2010). It is overconfidence that drives stockbrokers and investment managers to market their ability to outperform stock market averages (Malkiel, 2012). A purchase of stock X, recommended by a broker who judges this to be the time to buy, is usually balanced by a sale made by someone who judges this to be the time to sell. Despite their confidence, buyer and seller cannot both be right.

Overconfidence can also feed extreme political views. People with a superficial understanding of proposals for cap-

Hofstadter’s Law: It always takes longer than you expect, even when you take into account Hofstadter’s Law.

Douglas Hofstadter, Gödel, Escher, Bach: The Eternal Golden Braid, 1979

Classrooms are full of overconfident students who expect to finish assignments and write papers ahead of schedule (Buehler et al., 1994, 2002). In fact, the projects generally take about twice the number of days predicted. We also overestimate our future leisure time (Zauberman & Lynch, 2005). Anticipating how much more we will accomplish next month, we happily accept invitations and assignments, only to discover we’re just as busy when the day rolls around. The same “planning fallacy” (underestimating time and money) appears everywhere. Boston’s mega-

“When you know a thing, to hold that you know it; and when you do not know a thing, to allow that you do not know it; this is knowledge.”

Confucius (551–479 B.C.E.), Analects

Overconfidence can have adaptive value. People who err on the side of overconfidence live more happily. They seem more competent than others (Anderson et al., 2012). Moreover, given prompt and clear feedback, as weather forecasters receive after each day’s predictions, we can learn to be more realistic about the accuracy of our judgments (Fischhoff, 1982). The wisdom to know when we know a thing and when we do not is born of experience.

Belief Perseverance

Our overconfidence is startling; equally so is our belief perseverance—our tendency to cling to our beliefs in the face of contrary evidence. One study of belief perseverance engaged people with opposing views of capital punishment (Lord et al., 1979). After studying two supposedly new research findings, one supporting and the other refuting the claim that the death penalty deters crime, each side was more impressed by the study supporting its own beliefs. And each readily disputed the other study. Thus, showing the pro-

To rein in belief perseverance, a simple remedy exists: Consider the opposite. When the same researchers repeated the capital-

The more we come to appreciate why our beliefs might be true, the more tightly we cling to them. Once we have explained to ourselves why we believe a child is “gifted” or has a “specific learning disorder,” we tend to ignore evidence undermining our belief. Once beliefs form and get justified, it takes more compelling evidence to change them than it did to create them. Prejudice persists. Beliefs often persevere.

The Effects of Framing

Framing—the way we present an issue—

Similarly, 9 in 10 college students rated a condom as effective if told it had a supposed “95 percent success rate” in stopping HIV. Only 4 in 10 judged it effective when told it had a “5 percent failure rate” (Linville et al., 1992). To scare people even more, frame risks as numbers, not percentages. People told that a chemical exposure was projected to kill 10 of every 10 million people (imagine 10 dead people!) felt more frightened than did those told the fatality risk was an infinitesimal .000001 (Kraus et al., 1992).
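What makes these framing results striking is that each pair of frames describes exactly the same quantity. The short sketch below, offered only as an illustration with hypothetical variable names, uses exact fractions to confirm that the numbers quoted in the paragraph above are equivalent.

```python
from fractions import Fraction

# Condom example: the success and failure frames are complements.
success_rate = Fraction(95, 100)   # "95 percent success rate"
failure_rate = 1 - success_rate    # the same fact, stated as failures
same_condom_fact = failure_rate == Fraction(5, 100)

# Chemical-exposure example: a head count versus a bare decimal.
count_frame = Fraction(10, 10_000_000)   # "10 of every 10 million people"
decimal_frame = Fraction(1, 1_000_000)   # ".000001"
same_chemical_risk = count_frame == decimal_frame

print(same_condom_fact, same_chemical_risk)  # prints "True True"
```

Only the wording differs between the frames, which is why the shift in people's judgments is attributed to presentation rather than to the underlying risk.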

Framing can be a powerful persuasion tool. Carefully posed options can nudge people toward decisions that could benefit them or society as a whole (Benartzi & Thaler, 2013; Thaler & Sunstein, 2008):

- Why choosing to be an organ donor depends on where you live. In many European countries as well as the United States, those renewing their driver’s license can decide whether they want to be organ donors. In some countries, the default option is Yes, but people can opt out. Nearly 100 percent of the people in opt-out countries have agreed to be donors. In the United States, Britain, and Germany, the default option is No, but people can “opt in.” There, less than half have agreed to be donors (Hajhosseini et al., 2013; Johnson & Goldstein, 2003).

- How to help employees decide to save for their retirement. A 2006 U.S. pension law recognized the framing effect. Before that law, employees who wanted to contribute to a 401(k) retirement plan typically had to choose a lower take-home pay, which few people will do. Companies can now automatically enroll their employees in the plan but allow them to opt out (which would raise the employees’ take-home pay). In both plans, the decision to contribute is the employee’s. But under the new “opt-out” arrangement, enrollments in one analysis of 3.4 million workers soared from 59 to 86 percent (Rosenberg, 2010).

- How to help save the planet. Although a “carbon tax” may be the most effective way to curb greenhouse gases, many people oppose new taxes. But they are more supportive of funding clean energy development with a “carbon offset” fee (Hardisty et al., 2010).

The point to remember: Those who understand the power of framing can use it to nudge our decisions.

The Perils and Powers of Intuition

27-

The perils of intuition—

So, are our heads indeed filled with straw? Good news: Cognitive scientists are also revealing intuition’s powers. Here is a summary of some of the high points:

- Intuition is analysis “frozen into habit” (Simon, 2001). It is implicit knowledge—what we’ve learned and recorded in our brains but can’t fully explain (Chassy & Gobet, 2011; Gore & Sadler-Smith, 2011). Chess masters display this tacit expertise in “blitz chess,” where, after barely more than a glance, they intuitively know the right move (Burns, 2004). We see this expertise in the smart and quick judgments of experienced nurses, firefighters, art critics, car mechanics, and musicians. Skilled athletes can react without thinking. Indeed, conscious thinking may disrupt well-practiced movements such as batting or shooting free throws. For all of us who have developed some special skill, what feels like instant intuition is an acquired ability to perceive and react in an eyeblink.

- Intuition is usually adaptive, enabling quick reactions. Our fast and frugal heuristics let us intuitively assume that fuzzy looking objects are far away—which they usually are, except on foggy mornings. If a stranger looks like someone who previously harmed or threatened us, we may—without consciously recalling the earlier experience—react warily. People’s automatic, unconscious associations with a political position can even predict their future decisions before they consciously make up their minds (Galdi et al., 2008). Newlyweds’ automatic associations—their gut reactions—to their new spouses likewise predict their future marital happiness (McNulty et al., 2013). Our learned associations surface as gut feelings, the intuitions of our two-track mind.

- Intuition is huge. Today’s cognitive science offers many examples of unconscious, automatic influences on our judgments (Custers & Aarts, 2010). Consider: Most people guess that the more complex the choice, the smarter it is to make decisions rationally rather than intuitively (Inbar et al., 2010). Actually, Dutch psychologists have shown that in making complex decisions, we benefit by letting our brain work on a problem without thinking about it (Strick et al., 2010, 2011). In one series of experiments, three groups of people read complex information (about apartments or roommates or art posters or soccer football matches). One group stated their preference immediately after reading information about each of four options. The second group, given several minutes to analyze the information, made slightly smarter decisions. But wisest of all, in study after study, was the third group, whose attention was distracted for a time, enabling their minds to engage in automatic, unconscious processing of the complex information. The practical lesson: Letting a problem “incubate” while we attend to other things can pay dividends (Sio & Ormerod, 2009). Facing a difficult decision involving lots of facts, we’re wise to gather all the information we can, and then say, “Give me some time not to think about this.” By taking time to sleep on it, we let our unconscious mental machinery work. Thanks to our active brain, nonconscious thinking (reasoning, problem solving, decision making, planning) is surprisingly astute (Creswell et al., 2013; Hassin, 2013).

Hmm … male or female? When acquired expertise becomes an automatic habit, as it is for experienced chicken sexers, it feels like intuition. At a glance, they just know, yet cannot easily tell you how they know.

Critics of this research remind us that deliberate, conscious thought also furthers smart thinking (Lassiter et al., 2009; Payne et al., 2008). In challenging situations, superior decision makers, including chess players, take time to think (Moxley et al., 2012). And with many sorts of problems, deliberative thinkers are aware of the intuitive option, but know when to override it (Mata et al., 2013). Consider:

A bat and a ball together cost 110 cents.

The bat costs 100 cents more than the ball.

How much does the ball cost?

Most people’s intuitive response—10 cents—is wrong, and a few moments of deliberate thinking reveals why.4
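One way to work through the puzzle deliberately: if the ball costs b cents, the bat costs b + 100, so b + (b + 100) = 110 and b = 5. The sketch below, with illustrative names that are not from the text, tests both the intuitive answer and the algebraic one against the two stated conditions.

```python
TOTAL_CENTS = 110       # the bat and ball together
DIFFERENCE_CENTS = 100  # how much more the bat costs than the ball


def satisfies_puzzle(ball: int) -> bool:
    """Check a candidate ball price against both conditions of the puzzle."""
    bat = ball + DIFFERENCE_CENTS
    return bat + ball == TOTAL_CENTS


intuitive_answer = 10
# ball + (ball + 100) = 110  =>  2 * ball = 10  =>  ball = 5
correct_answer = (TOTAL_CENTS - DIFFERENCE_CENTS) // 2

print(satisfies_puzzle(intuitive_answer))  # False: 110 + 10 = 120 cents
print(satisfies_puzzle(correct_answer))    # True: 105 + 5 = 110 cents
```

The intuitive answer satisfies the total only if the bat costs 100 cents, which would make it just 90 cents more than the ball.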

The bottom line: Our two-