Thinking

Concepts

8-1 What is cognition, and what are the functions of concepts?

Psychologists who study cognition focus on the mental activities associated with thinking, knowing, remembering, and communicating information. One of these activities is forming concepts—mental groupings of similar objects, events, ideas, and people. The concept chair includes many items—a baby’s high chair, a reclining chair, a dentist’s chair.

Concepts simplify our thinking. Imagine life without them. We would need a different name for every person, event, object, and idea. We could not ask a child to “throw the ball” because there would be no concept of throw or ball. We could not say “They were angry.” We would have to describe expressions and words. Concepts such as ball and anger give us much information with little mental effort.

We often form our concepts by developing a prototype—a mental image or best example of a category (Rosch, 1978). People more quickly agree that “a robin is a bird” than that “a penguin is a bird.” For most of us, the robin is the birdier (more prototypical) bird; it more closely resembles our bird prototype. When something closely matches our prototype of a concept, we readily recognize it as an example of the concept.

Sometimes, though, our experiences don’t match up neatly with our prototypes. When this happens, our category boundaries may blur. Is a 17-year-old female a girl or a woman? Is a whale a fish or a mammal? Is a tomato a fruit? Because a tomato fails to match our fruit prototype, we are slower to recognize it as a fruit.

Similarly, when symptoms don’t fit one of our disease prototypes, we are slow to perceive an illness (Bishop, 1991). People whose heart attack symptoms (shortness of breath, exhaustion, a dull weight in the chest) don’t match their heart attack prototype (sharp chest pain) may not seek help. Concepts speed and guide our thinking. But they don’t always make us wise.

Solving Problems

8-2 What strategies help us solve problems, and what tendencies work against us?

One tribute to our rationality is our impressive problem-solving skill. What’s the best route around this traffic jam? How should we handle a friend’s criticism? How can we get in the house without our keys?

Some problems we solve through trial and error. Thomas Edison tried thousands of light bulb filaments before stumbling upon one that worked. For other problems, we use algorithms, step-by-step procedures that guarantee a solution. But following the steps in an algorithm takes time and effort—sometimes a lot of time and effort. To find a word using the 10 letters in SPLOYOCHYG, for example, you could construct a list, with each letter in each of the 10 positions. But your list of 907,200 different combinations would be very long! In such cases, we often resort to heuristics, simpler thinking strategies. Thus, you might reduce the number of options in the SPLOYOCHYG example by grouping letters that often appear together (CH and GY) and avoiding rare combinations (such as YY). By using heuristics and then applying trial and error, you may hit on the answer. Have you guessed it?
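The gap between the algorithm and the heuristic in the SPLOYOCHYG example is easy to check. The Python sketch below counts distinct orderings with the standard formula for arrangements of repeated items (n! divided by k! for each letter that appears k times). Treating CH and GY as fixed chunks is our illustrative reading of the grouping heuristic described above, not a procedure taken from the research literature.

```python
from collections import Counter
from math import factorial


def count_orderings(tokens):
    """Distinct orderings of a collection: n! divided by k! for each item repeated k times."""
    total = factorial(len(tokens))
    for k in Counter(tokens).values():
        total //= factorial(k)
    return total


# Algorithm: list every ordering of all 10 letters (O and Y each appear twice).
all_letters = list("SPLOYOCHYG")

# Heuristic (illustrative assumption): keep CH and GY together as chunks,
# which leaves 8 units to arrange (O still appears twice).
chunked = ["CH", "GY", "S", "P", "L", "O", "Y", "O"]

print(count_orderings(all_letters))  # 907200
print(count_orderings(chunked))      # 20160
```

Grouping just two letter pairs shrinks the list from 907,200 candidates to 20,160. That is why heuristics make a search feel manageable, although, unlike the full algorithm, they carry no guarantee that the answer survives the pruning.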

Sometimes we puzzle over a problem, with no feeling of getting closer to the answer. Then, suddenly the pieces fall together in a flash of insight—an abrupt, true-seeming, and often satisfying solution (Knoblich & Oellinger, 2006; Topolinski & Reber, 2010). Ten-year-old Johnny Appleton had one of these Aha! moments and solved a problem that had stumped many adults. How could they rescue a young robin that had fallen into a narrow, 30-inch-deep hole in a cement-block wall? Johnny’s solution: slowly pour in sand, giving the bird enough time to keep its feet on top of the constantly rising mound (Ruchlis, 1990).

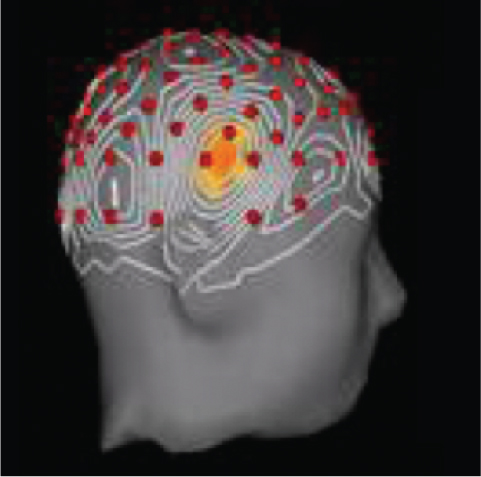

Sudden flashes of insight are associated with bursts of brain activity (Kounios & Beeman, 2009; Sandkühler & Bhattacharya, 2008). In one study (Jung-Beeman et al., 2004), researchers asked people to think of a word that forms a compound word or phrase with each of three words in a set (such as pine, crab, and sauce). When people knew the answer, they were to press a button, which would sound a bell. (Need a hint? The word is a fruit.) About half the solutions came through a sudden Aha! insight. Before the Aha! moment, the problem solvers’ frontal lobes (which are involved in focusing attention) were active. Then, as the insight occurred, there was a burst of activity in their right temporal lobe, just above the ear (FIGURE 8.1).

Insight gives us a sense of satisfaction, a feeling of happiness. The joy of a joke is similarly a sudden “I get it!” reaction to double meaning or a surprise ending: “You don’t need a parachute to skydive. You only need a parachute to skydive twice.” Comedian Groucho Marx was a master at this: “I once shot an elephant in my pajamas. How he got into my pajamas I’ll never know.”

Insightful as we are, other cognitive tendencies may lead us astray. Confirmation bias is our tendency to seek evidence for our ideas more eagerly than we seek evidence against them (Klayman & Ha, 1987; Skov & Sherman, 1986). Peter Wason (1960) demonstrated confirmation bias in a now-classic study. He gave students a set of three numbers (2-4-6) and told them the sequence was based on a rule. Their task was to guess the rule. (It was simple: Each number must be larger than the one before it.) Before giving their answers, students formed their own three-number sets, and Wason told them whether their sets worked with his rule. When they felt certain they had the rule, they were to announce it. The result? They were seldom right but never in doubt. Most students formed a wrong idea (“Maybe it’s counting by twos”) and then searched only for evidence confirming the wrong rule (by testing 6-8-10, 100-102-104, and so forth).
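Wason’s point can be made concrete with a small simulation. In this Python sketch, `true_rule` encodes the rule given above (each number larger than the one before it) and `counting_by_twos` encodes the students’ typical wrong guess. Apart from 6-8-10 and 100-102-104, the test triples are illustrative choices of ours. The key idea: a test is informative only when the hypothesis and the true rule disagree about it.

```python
def true_rule(a, b, c):
    """Wason's hidden rule: each number is larger than the one before it."""
    return a < b < c


def counting_by_twos(a, b, c):
    """A typical wrong hypothesis: each number is 2 more than the one before it."""
    return b - a == 2 and c - b == 2


def informative(tests):
    """Keep only the triples on which the hypothesis and the true rule disagree."""
    return [t for t in tests if counting_by_twos(*t) != true_rule(*t)]


# Tests chosen because they FIT the hypothesis (confirmation-seeking).
confirming = [(6, 8, 10), (100, 102, 104), (1, 3, 5)]
# Tests the hypothesis predicts should FAIL (disconfirmation-seeking).
disconfirming = [(1, 3, 7), (10, 9, 8)]

for triple in confirming + disconfirming:
    print(triple, "fits hypothesis:", counting_by_twos(*triple),
          "fits rule:", true_rule(*triple))

print(informative(confirming))     # []
print(informative(disconfirming))  # [(1, 3, 7)]
```

Every confirming test fits both rules, so none of them can expose the error. Only a triple the hypothesis says should fail but the experimenter accepts, such as 1-3-7, reveals that the real rule is broader.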

In real life, this tendency can have grave results. Once people form a belief—that gun control does (or does not) save lives—they prefer information that supports their belief. Confirmation bias also helped launch a war. The United States invaded Iraq on the belief that dictator Saddam Hussein possessed weapons of mass destruction (WMD) that posed an immediate threat. The belief turned out to be false. A U.S. Senate Select Committee on Intelligence (2004), with members from both political parties, later investigated and found confirmation bias partly to blame. Administration analysts “had a tendency to accept information which supported [their beliefs]…more readily than information which contradicted” them, the report stated. Sources denying such weapons were viewed as “either lying or not knowledgeable about Iraq’s programs.” Sources that reported ongoing WMD activities were seen as “having provided valuable information.”

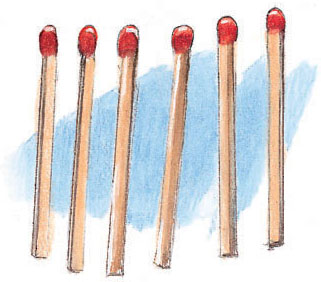

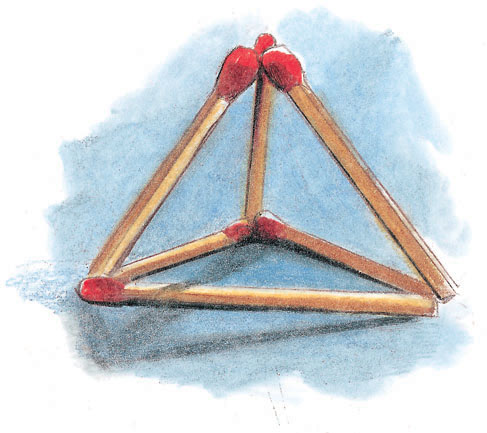

Once we get hung up on an incorrect view of a problem, it’s hard to approach it from a different angle. This obstacle to problem solving is called fixation. Can you solve the matchstick problem in FIGURE 8.2? If not, you may be experiencing fixation. (See the solution in FIGURE 8.3.)

Making Good (and Bad) Decisions and Judgments

8-3 What is intuition, and how can the availability heuristic, overconfidence, belief perseverance, and framing influence our decisions and judgments?

Each day holds hundreds of judgments and decisions. Is it worth the bother to take a jacket? Can I trust this person? Should I shoot the basketball or pass to the player who’s hot? As we judge the odds and make our decisions, we seldom take the time and effort to reason systematically. We just follow our intuition, our fast, automatic, unreasoned feelings and thoughts. After interviewing leaders in government, business, and education, one social psychologist concluded that they often made decisions without considered thought and reflection. How did they usually reach their decisions? “If you ask, they are likely to tell you…they do it mostly by the seat of their pants” (Janis, 1986).

Quick-Thinking Heuristics

When we need to act quickly, the mental shortcuts we call heuristics enable snap judgments. Without conscious awareness, we use automatic, intuitive strategies that are usually both fast and effective. But not always, as research by cognitive psychologists Amos Tversky and Daniel Kahneman (1974) showed. These generally helpful shortcuts sometimes lead even the smartest people into quick but dumb decisions. Consider the availability heuristic, which operates when we estimate how common an event is, based on how easily it comes to mind (its mental availability). Casinos know this. They entice us to gamble by making even small wins mentally available with noisy bells and flashing lights. The big losses are soundlessly invisible.

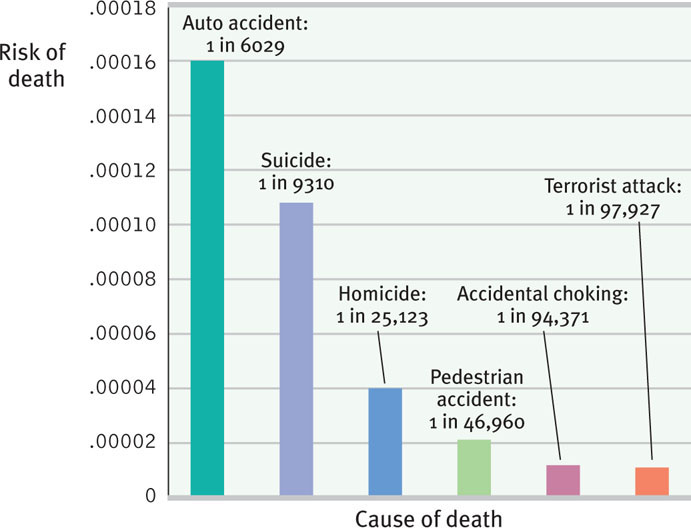

The availability heuristic can distort our judgments of other people. Anything that makes information “pop” into mind can make it seem commonplace. If someone from a particular religious or ethnic group commits a terrorist act, as happened on September 11, 2001, our readily available memory of the dramatic event may shape our impression of the whole group. Even during that horrific year, terrorist acts claimed comparatively few lives. Despite the much greater risk of death from other causes (FIGURE 8.4), the 9/11 terror was more memorable. Emotion-laden images of terror fed our fears (Sunstein, 2007).

We often fear the wrong things (see Thinking Critically About: The Fear Factor). Thanks to readily available images, we may fear extremely rare events. We fear flying because we play old air disaster films in our heads. We fear swimming in ocean waters because we replay Jaws with ourselves as victims. How many shark attacks kill Americans each year? About one. How many Americans die of heart disease each year? 600,000. But the vivid image (a shark bite!) often wins, and thus many of us fear sharks more than cheeseburgers and cigarettes (Daley, 2011).

Dramatic outcomes make us gasp; probabilities we hardly grasp. We overfeel and underthink. In one experiment, donations to a starving 7-year-old were greater when her image appeared alone, without statistics describing the millions of needy African children like her (Small et al., 2007). “If I look at the mass I will never act,” Mother Teresa reportedly said. “If I look at the one, I will.” “The more who die, the less we care,” noted one psychologist (Slovic, 2010). We reason emotionally.

Overconfidence: Was There Ever Any Doubt?

Sometimes our judgments and decisions go awry because we are more confident than correct. When answering factual questions such as “Is absinthe a liqueur or a precious stone?” only 60 percent of people in one study answered correctly. (It’s a licorice-flavored liqueur.) Yet those answering felt, on average, 75 percent confident (Fischhoff et al., 1977). This tendency to overestimate our accuracy is overconfidence.

History is full of leaders who, when waging war, were more confident than correct. And classrooms are full of overconfident students who expect to finish assignments and write papers ahead of schedule (Buehler et al., 1994, 2002). In fact, the projects generally take about twice the number of days predicted.

We often overestimate our future free time (Zauberman & Lynch, 2005). Surely we’ll have more free time next month than we do today. So we happily accept invitations, only to discover we’re just as busy when the day rolls around. And believing we’ll surely have more money next year, we take out loans or buy on credit. Despite our past overconfident predictions, we remain overly confident of our next one.

Overconfidence can have adaptive value. Believing that their decisions are right and they have time to spare, self-confident people tend to live happily. They make tough decisions more easily, and they seem believable (Baumeister, 1989; Taylor, 1989). Moreover, we can learn from our mistakes. When given prompt and clear feedback—as weather forecasters receive after each day’s predictions—we learn to be more realistic about the accuracy of our judgments (Fischhoff, 1982). The wisdom to know when we know a thing and when we do not is born of experience.

Our Beliefs Live On—Sometimes Despite Evidence

Our overconfidence in our judgments is startling. Equally startling is our tendency to cling to our beliefs even when the evidence proves us wrong. This tendency, called belief perseverance, often fuels social conflict. Consider a classic study of people with opposing views of the death penalty (Lord et al., 1979). Both sides were asked to read the same material—two new research reports. One reported that the death penalty lowers the crime rate; the other showed that it has no effect on the crime rate. Each side was impressed by the study supporting its own beliefs, and quick to criticize the other study. Thus, showing the two groups the same mixed evidence actually increased their disagreement about the value of capital punishment.

So how can smart thinkers avoid belief perseverance? A simple remedy is to consider the opposite. In a repeat of the death penalty study, researchers asked some participants to be “as objective and unbiased as possible” (Lord et al., 1984). This plea did nothing to reduce people’s biases. They also asked another group to consider “whether you would have made the same high or low evaluations had exactly the same study produced results on the other side of the issue.” In this group, people’s views did change. After imagining the opposite findings, they judged the evidence in a much less biased way.

The more we come to appreciate why our beliefs might be true, the more tightly we cling to them. Once we have explained to ourselves why we believe a child is “gifted” (or has a “learning disorder”), or why candidate X or Y will be a better commander-in-chief, we tend to ignore evidence that challenges our belief. Prejudice persists. Once beliefs take root, it takes stronger evidence to change them than it did to create them. Beliefs often persevere.

Framing: Let Me Put It This Way…

Framing—the way we present an issue—can be a powerful tool of persuasion.

THINKING CRITICALLY ABOUT

The Fear Factor—Why We Fear the Wrong Things

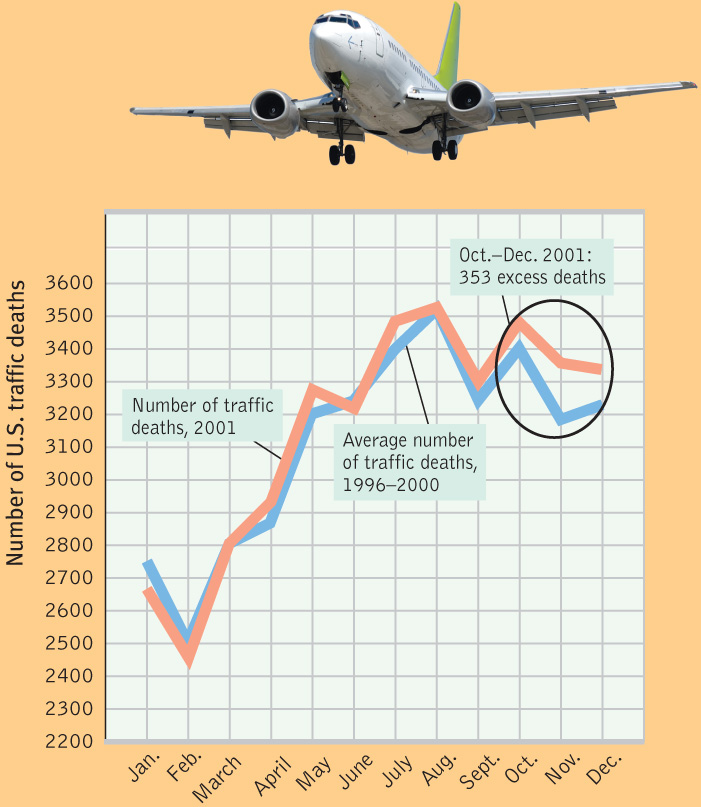

After 9/11, many people feared flying more than driving. In a 2006 Gallup survey, only 40 percent of Americans reported being “not afraid at all” to fly. Yet from 2007 to 2009, Americans were—mile for mile—200 times more likely to die in a motor vehicle accident than on a scheduled flight (National Safety Council, 2012). In 2009 alone, 33,808 Americans were killed in motor vehicle accidents—that’s 650 dead people each week. Meanwhile, in 2009 (as in 2007 and 2008), zero died from accidents on scheduled airline flights.
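The weekly figure follows from simple division. This Python sketch uses only the 2009 total reported above and assumes a 52-week (and 365-day) year; the per-day figure is our own derived number, not one the text reports.

```python
deaths_2009 = 33_808  # U.S. motor vehicle deaths in 2009 (figure from the text)
weeks_per_year = 52   # assumption: a 52-week year
days_per_year = 365   # assumption: a non-leap year

per_week = deaths_2009 / weeks_per_year
per_day = deaths_2009 / days_per_year

print(f"about {per_week:.0f} deaths per week")  # about 650 deaths per week
print(f"about {per_day:.0f} deaths per day")    # about 93 deaths per day
```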

In a late 2001 essay, I calculated that if—because of 9/11—we flew 20 percent less and instead drove half those unflown miles, about 800 more people would die in the year after 9/11 (Myers, 2001). German psychologist Gerd Gigerenzer (2004, 2006; Gaissmaier & Gigerenzer, 2012) later checked this estimate against actual accident data. (Why didn’t I think to do that?) U.S. traffic deaths did indeed increase significantly in the last three months of 2001 (FIGURE 8.5). By the end of 2002, Gigerenzer estimated, 1600 Americans had “lost their lives on the road by trying to avoid the risk of flying.” Despite our greater fear of flying, flying’s greatest danger is, for most people, the drive to the airport.

How can our intuition about risk be so wrong? Psychologists have identified four forces that feed fear and cause us to ignore higher risks.

1. We fear what our ancestral history has prepared us to fear. Human emotions were road-tested in the Stone Age. Our old brain prepares us to fear yesterday’s risks: snakes, lizards, and spiders (which combined now kill a tiny fraction of the number killed by modern-day threats, such as cars and cigarettes). Yesterday’s risks also prepare us to fear confinement and heights, and therefore flying.

2. We fear what we cannot control. Driving we control; flying we do not.

3. We fear what is immediate. The dangers of flying are mostly in the moments of takeoff and landing. The dangers of driving are diffused across many moments to come, each trivially dangerous.

4. Thanks to the availability heuristic, we fear what is most readily available in memory. Powerful, vivid images, like that of United Flight 175 slicing into the World Trade Center, feed our judgments of risk. Thousands of safe car trips lull us into a comfortable, safe feeling. Similarly, we remember (and fear) disasters (hurricanes, tornadoes, school massacres) that kill people dramatically, in bunches. But we fear too little the less dramatic threats that claim lives quietly, one by one, into the distant future. Horrified citizens and commentators renewed calls for U.S. gun control in 2012, after 20 children and 6 adults were slain in a Connecticut elementary school. Yet, even more Americans are murdered with guns one by one every day, though less dramatically. Bill Gates has noted that each year a half-million children worldwide die from rotavirus. This is the equivalent of four 747s full of children dying every day, and we hear nothing of it (Glass, 2004).
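Gates’s comparison can be unpacked the same way. The Python sketch below takes the text’s figures (a half-million rotavirus deaths per year, compared to four 747s a day) at face value and computes only what those two numbers imply. It makes no claim about the actual seating capacity of a 747.

```python
deaths_per_year = 500_000  # rotavirus deaths among children worldwide (figure from the text)
jets_per_day = 4           # the "four 747s" in the comparison
days_per_year = 365        # assumption: a non-leap year

per_day = deaths_per_year / days_per_year
per_jet = per_day / jets_per_day

print(f"about {per_day:.0f} children per day")       # about 1370 children per day
print(f"about {per_jet:.0f} children per jetliner")  # about 342 children per jetliner
```

Spread quietly across every day of the year, these deaths never produce the single vivid image that a plane crash does, which is exactly why the availability heuristic underweights them.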

As one risk analyst explained, “If it’s in the news, don’t worry about it. The very definition of news is ‘something that hardly ever happens’” (Schneier, 2007). But the news, and our own memorable experiences, can make us fear the least likely events. Although many people fear dying in a terrorist attack on an airplane, the first decade of the twenty-first century produced one terrorist attempt for every 10.4 million flights. That’s less than one-twentieth the chance of any one of us being struck by lightning (Silver, 2009).

The point to remember: Critical thinkers—smart thinkers—will check their fears against the facts and resist those who tempt us to fear the wrong things.

RETRIEVE + REMEMBER

Question 8.1

Why can news be described as “something that hardly ever happens”? How does knowing this help us assess our fears?

If a tragic event such as a plane crash makes the news, it is noteworthy and unusual, unlike much more common bad events such as traffic accidents. Knowing this, we can worry less about unlikely events and think more about improving the safety of our everyday activities. (For example, we can wear a seat belt when in a vehicle and use the crosswalk when walking.)

Consider how the framing of options can nudge people toward beneficial decisions (Thaler & Sunstein, 2008):

- Life and death. Imagine two surgeons explaining the risk of surgery. One tells patients that during this surgery 10 percent of people die. The other tells patients that 90 percent survive. The information is the same. The effect is not. In surveys, both patients and physicians said the risk seems greater when they hear that 10 percent will die (Marteau, 1989; McNeil et al., 1988; Rothman & Salovey, 1997).

- Why choosing to be an organ donor depends on where you live. In many European countries, as well as in the United States, people decide whether to be organ donors when renewing their driver’s license. In some countries, the assumed answer is Yes, unless you opt out. Nearly 100 percent of the people in the opt-out countries agree to be donors. In the United States, Britain, and Germany, the assumed answer has been No, unless you opt in. In these countries, only about 25 percent have agreed to be donors (Johnson & Goldstein, 2003).

- How to help employees decide to save for their retirement. A 2006 U.S. pension law recognized the framing effect. Before that law, employees who wanted to contribute to a 401(k) retirement plan typically had to choose a lower take-home pay, which few people will do. Companies can now automatically enroll their employees in the plan but allow them to opt out (which would raise the employees’ take-home pay). In both plans, the decision to contribute is the employee’s. But under the new opt-out arrangement, enrollments among 3.4 million workers soared—from 59 percent to 86 percent (Rosenberg, 2010).
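To see what the jump from 59 to 86 percent means in people rather than percentages, the Python sketch below assumes, as the wording above implies, that both rates apply to the same pool of 3.4 million workers. Integer arithmetic keeps the head counts exact.

```python
workers = 3_400_000               # workers covered (figure from the text)
opt_in_pct, opt_out_pct = 59, 86  # enrollment rates before and after the default changed

# Assumption: both percentages refer to the same 3.4 million workers.
enrolled_opt_in = workers * opt_in_pct // 100
enrolled_opt_out = workers * opt_out_pct // 100

print(enrolled_opt_in, enrolled_opt_out)   # 2006000 2924000
print(enrolled_opt_out - enrolled_opt_in)  # 918000
```

Under that assumption, the same people facing the same choice, framed with a different default, yield roughly 918,000 additional savers.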

The point to remember: Framing influences decisions.

The Perils and Powers of Intuition

8-4 How do smart thinkers use intuition?

We have seen how our unreasoned thinking can plague our efforts to solve problems, assess risks, and make wise decisions. Moreover, these perils of intuition persist even when people are offered extra pay for thinking smart or when asked to justify their answers. And they persist even among those with high intelligence, including expert physicians or clinicians (Shafir & LeBoeuf, 2002; Stanovich & West, 2008).

But psychological science is also revealing intuition’s powers.

- Intuition is analysis “frozen into habit” (Simon, 2001). It is implicit knowledge—what we’ve learned but can’t fully explain. We see this in chess masters who, when playing speed chess, intuitively know the right move (Burns, 2004). We see it in the smart and quick judgments of seasoned nurses, firefighters, art critics, car mechanics, and hockey players. And in you, too, for anything in which you have developed a deep and special knowledge, based on experience. In each case, what feels like instant intuition is an acquired ability to size up a situation in an eyeblink.

- Intuition is usually adaptive. Our fast and frugal heuristics let us intuitively assume that fuzzy-looking objects are far away—which they usually are, except on foggy mornings. Our learned associations surface as gut feelings, right or wrong: Seeing a stranger who looks like someone who has harmed or threatened us in the past, we may automatically react with distrust.

HMM…MALE OR FEMALE? When acquired expertise becomes an automatic habit, it feels like intuition. At a glance, experienced chicken sexers just know, yet they cannot easily tell you how they know.

Jean-Philippe Ksiazek/AFP/Getty

- Intuition is huge. Today’s cognitive science offers many examples of unconscious automatic influences on our judgments (Custers & Aarts, 2010). Imagine participating in an experiment on decision making (Strick et al., 2010). Three groups of participants receive complex information about four apartment options. Those in the first group state their choice immediately after reading the information. Those in the second group analyze the information before choosing one of the options. Your group, the third, is distracted for a time and then asked to give your decision. Which group will make the smartest decision? Most people guess that the more complex the choice, the smarter it is to make decisions rationally rather than intuitively (Inbar et al., 2010). Actually, when making complex decisions, we benefit by letting a problem “incubate” while we attend to other things (Sio & Ormerod, 2009; Strick et al., 2010, 2011). The third group, given the distraction, made the best choice. Facing a decision involving a lot of facts, we’re wise to gather all the information we can, and then say, “Give me some time not to think about this.” By taking time even to sleep on it, we let our unconscious mental machinery work, and then await the intuitive result of this automatic processing.

The bottom line: Our two-track mind makes sweet harmony as smart, critical thinking listens to the creative whispers of our vast unseen mind and then evaluates evidence, tests conclusions, and plans for the future.

Thinking Creatively

8-5 What is creativity, and what fosters it?

Creativity is the ability to produce ideas that are both novel and valuable (Hennessey & Amabile, 2010). Consider Princeton mathematician Andrew Wiles’ incredible, creative moment. Pierre de Fermat (1601–1665), a mischief-loving genius, dared scholars to match his solutions to various number theory problems. Three centuries later, one of those problems continued to baffle the greatest mathematical minds, even after a $2 million prize (in today’s money) had been offered for cracking the puzzle.

Wiles had searched for the answer for more than 30 years and reached the brink of a solution. One morning, out of the blue, an “incredible revelation” struck him. “It was so…beautiful…so simple and so elegant. I couldn’t understand how I’d missed it…. It was the most important moment of my working life” (Singh, 1997, p. 25).

Creativity like Wiles’ requires a certain level of aptitude (ability to learn). Thirteen-year-olds who score exceptionally high on aptitude tests, for example, are later more likely to take advanced degrees in science and math and to create published or patented work (Park et al., 2008; Robertson et al., 2010). But creativity is more than school smarts, and it requires a different kind of thinking. Aptitude tests (such as the SAT® Reasoning Test) typically require convergent thinking—an ability to provide a single correct answer. Creativity tests (How many uses can you think of for a brick?) require divergent thinking—the ability to consider many different options and to think in novel ways.

Robert Sternberg and his colleagues (1988, 2003; Sternberg & Lubart, 1991, 1992) believe creativity has five ingredients.

- Expertise—a solid knowledge base—furnishes the ideas, images, and phrases we use as mental building blocks. The more blocks we have, the more novel ways we have to combine them. Wiles’ well-developed base of mathematical knowledge gave him access to many combinations of ideas and methods.

- Imaginative thinking skills give us the ability to see things in novel ways, to recognize patterns, and to make connections. Wiles’ imaginative solution combined two partial solutions.

- A venturesome personality seeks new experiences, tolerates gray areas, takes risks, and continues despite obstacles. Wiles said he worked in near-isolation from the mathematics community, partly to stay focused and avoid distraction. This kind of focus and dedication is an enduring trait.

- Intrinsic motivation (as explained in Chapter 6) arises internally rather than from outside rewards or external pressures (extrinsic motivation) (Amabile & Hennessey, 1992). Creative people seem driven by the pleasure and challenge of the work itself, not by outside rewards, such as meeting deadlines, impressing people, or making money. As Wiles said, “I was so obsessed by this problem that…I was thinking about it all the time—[from] when I woke up in the morning to when I went to sleep at night” (Singh & Riber, 1997).

- A creative environment sparks, supports, and refines creative ideas. Colleagues are an important part of creative environments. In one study of 2026 leading scientists and inventors, the best known of them had challenging and supportive relationships with colleagues (Simonton, 1992). Many creative environments also minimize stress and foster focused awareness (Byron & Khazanchi, 2011). Jonas Salk solved a problem that led to the polio vaccine while in a monastery. Later, when he designed the Salk Institute, he provided quiet spaces where scientists could think and work without interruption (Sternberg, 2006). Serenity seeds spontaneity.

© The New Yorker Collection, 2013, PC Vey from cartoonbank.com. All Rights Reserved.

For those seeking to boost the creative process, see Close-Up: Fostering Your Own Creativity.

. . .

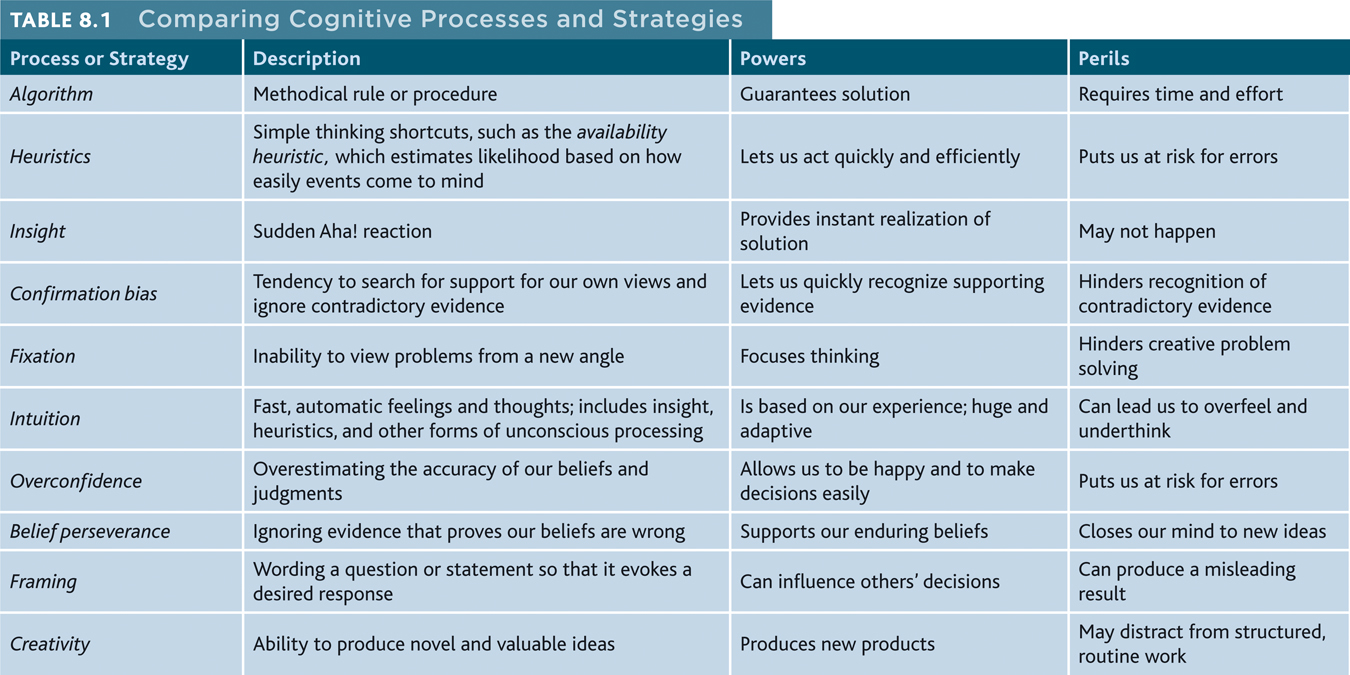

TABLE 8.1 summarizes the cognitive processes and strategies discussed in this section.

CLOSE-UP

Fostering Your Own Creativity

Creative achievement springs from creativity-fostering persons and situations. Here are some tips for growing your own creativity.

- Develop your expertise. Become an expert at something. Ask yourself what you care about and most enjoy and then follow your passion.

© The New Yorker Collection, 2010, Mick Stevens, from cartoonbank.com. All Rights Reserved.

- Allow time for ideas to hatch. A broad base of knowledge provides building blocks that can be combined in new and creative ways. During periods of inattention (“sleeping on a problem”), automatic processing can help associations to form (Zhong et al., 2008). So think hard on a problem, but then set it aside and come back to it later.

- Set aside time for your mind to roam freely. Detach from attention-grabbing television, social networking, and video gaming. Jog, go for a long walk, or meditate.

- Experience other cultures and ways of thinking. Expose yourself to multicultural experiences. Viewing life from a different perspective sets the creative juices flowing. Students who have spent time abroad are more adept at working out creative solutions to problems (Leung et al., 2008; Maddux et al., 2009, 2010). Even if you can’t afford to travel abroad, you can get out of your neighborhood and spend time in a different sort of place.

RETRIEVE + REMEMBER

Question 8.2

1. b, 2. c, 3. e, 4. d, 5. a, 6. f, 7. h, 8. i, 9. g, 10. j

Do Other Species Share Our Cognitive Skills?

8-6 What do we know about thinking in other species?

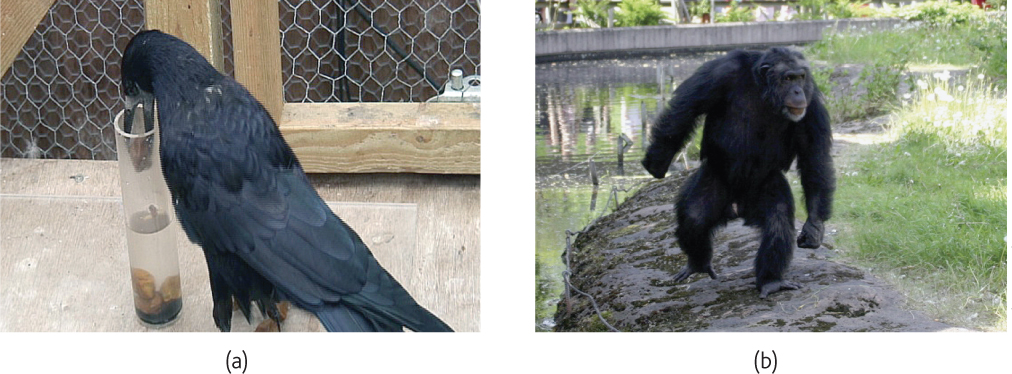

Other animals are smarter than many humans realize. Consider some surprising findings.

USING CONCEPTS AND NUMBERS Even pigeons—mere birdbrains—can sort objects (pictures of cars, cats, chairs, flowers) into categories, or concepts. Shown a picture of a never-before-seen chair, pigeons will reliably peck a key that represents chairs (Wasserman, 1995). The great apes—a group that includes chimpanzees and gorillas—also form concepts, such as cat and dog. After monkeys have learned these concepts, certain frontal lobe neurons in their brains fire in response to new “cat-like” images, others to new “dog-like” images (Freedman et al., 2001).

Until his death in 2007, Alex, an African Grey parrot, displayed jaw-dropping numerical skills. He categorized and named objects (Pepperberg, 2006, 2009). He could comprehend numbers up to 6. He could speak the number of objects. He could add two small clusters of objects and announce the sum. He could indicate which of two numbers was greater. And he gave correct answers when shown various groups of objects. Asked, for example, “What color four?” (meaning “What’s the color of the objects of which there are four?”), he could speak the answer.

DISPLAYING INSIGHT Psychologist Wolfgang Köhler (1925) showed that we are not the only creatures to display insight. He placed a piece of fruit and a long stick outside the cage of a chimpanzee named Sultan, beyond his reach. Inside the cage, he placed a short stick, which Sultan grabbed, using it to try to reach the fruit. After several failed attempts, the chimpanzee dropped the stick and seemed to survey the situation. Then suddenly (as if thinking “Aha!”), Sultan jumped up and seized the short stick again. This time, he used it to pull in the longer stick, which he then used to reach the fruit.

USING TOOLS AND TRANSMITTING CULTURE Various animals have displayed creative tool use. Forest-dwelling chimpanzees select different tools for different purposes—a heavy stick for making holes, a light, flexible stick for fishing for termites (Sanz et al., 2004). They break off the reed or stick, strip off any leaves, and carry it to a termite mound. Then they twist it just so and carefully remove it. Termites for lunch! (This is very reinforcing for a chimpanzee.) One anthropologist, trying to mimic the animal’s deft fishing moves, failed miserably.

Researchers have found at least 39 local customs related to chimpanzee tool use, grooming, and courtship (Whiten & Boesch, 2001). One group may slurp termites directly from a stick; another group may pluck them off individually. One group may break nuts with a stone hammer; another, with a wooden hammer.

Several experiments have brought chimpanzee cultural transmission into the laboratory (Horner et al., 2006). If Chimpanzee A obtains food either by sliding or by lifting a door, Chimpanzee B will then typically do the same to get food. And so will Chimpanzee C after observing Chimpanzee B. Across a chain of six animals, from Chimpanzee A to Chimpanzee F, chimpanzees see, and chimpanzees do.

Other animals have also shown surprising cognitive talents (FIGURE 8.6). In tests, elephants have demonstrated self-awareness by recognizing themselves in a mirror. They have also displayed their abilities to learn, remember, discriminate smells, empathize, cooperate, teach, and spontaneously use tools (Byrne et al., 2009). As social creatures, chimpanzees have shown altruism, cooperation, and group aggression. Like humans, they will kill their neighbors to gain land, and they grieve over dead relatives (Anderson et al., 2010; Biro et al., 2010; Mitani et al., 2010).

Mathias Osvath

There is no question that other species display many remarkable cognitive skills. But one big question remains: Do they, like humans, exhibit language? First, let’s consider what language is, and how it develops.