6.3 Operant Conditioning

LOQ 6-

It’s one thing to classically condition a dog to drool at the sound of a tone, or a child to fear a white rat. To teach an elephant to walk on its hind legs or a child to say please, we must turn to another type of learning: operant conditioning.

Classical conditioning and operant conditioning are both forms of associative learning. But their differences are straightforward:

In classical conditioning, an animal (dog, child, sea slug) forms associations between two events it does not control. Classical conditioning involves respondent behavior: automatic responses to a stimulus (such as salivating in response to meat powder and later in response to a tone).

operant conditioning a type of learning in which a behavior becomes more probable if followed by a reinforcer or is diminished if followed by a punisher.

In operant conditioning, animals associate their own actions with consequences. Actions followed by a rewarding event increase; those followed by a punishing event decrease. Behavior that operates on the environment to produce rewarding or punishing events is called operant behavior.

We can therefore distinguish classical from operant conditioning by asking two questions. Are we learning associations between events we do not control (classical conditioning)? Or are we learning associations between our own behavior and its resulting events (operant conditioning)?

Retrieve + Remember

Question 6.8

•With classical conditioning, we learn associations between events we _____ (do/do not) control. With operant conditioning, we learn associations between our behavior and _____ (resulting/random) events.

ANSWERS: do not; resulting

Skinner’s Experiments

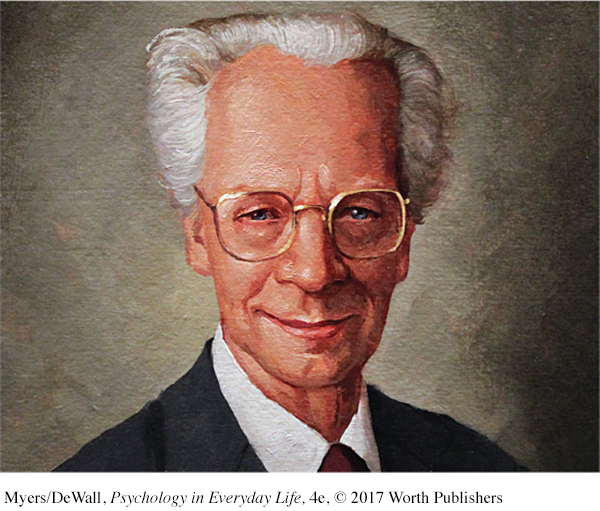

B. F. Skinner (1904–

law of effect Thorndike’s principle that behaviors followed by favorable consequences become more likely, and that behaviors followed by unfavorable consequences become less likely.

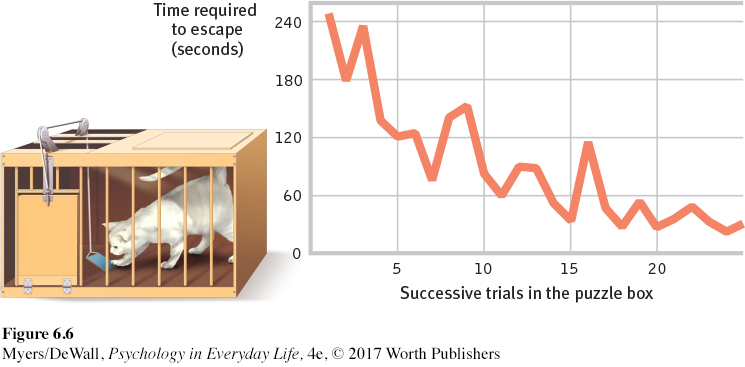

Skinner’s work built on a principle that psychologist Edward L. Thorndike (1874–

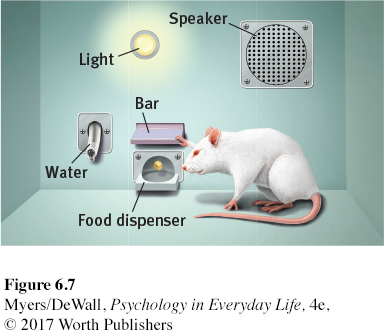

operant chamber in operant conditioning research, a chamber (also known as a Skinner box) containing a bar or key that an animal can manipulate to obtain a food or water reinforcer; attached devices record the animal’s rate of bar pressing or key pecking.

reinforcement in operant conditioning, any event that strengthens the behavior it follows.

For his studies, Skinner designed an operant chamber, popularly known as a Skinner box (FIGURE 6.7). The box has a bar or button that an animal presses or pecks to release a food or water reward. It also has a device that records these responses. This design creates a stage on which rats and other animals act out Skinner’s concept of reinforcement: any event that strengthens (increases the frequency of) a preceding response. What is reinforcing depends on the animal and the conditions. For people, it may be praise, attention, or a paycheck. For hungry and thirsty rats, food and water work well. Skinner’s experiments have done far more than teach us how to pull habits out of a rat. They have explored the precise conditions that foster efficient and enduring learning.

Shaping Behavior

shaping an operant conditioning procedure in which reinforcers guide actions closer and closer toward a desired behavior.

Imagine that you wanted to condition a hungry rat to press a bar. Like Skinner, you could tease out this action with shaping, gradually guiding the rat’s actions toward the desired behavior. First, you would watch how the animal naturally behaves, so that you could build on its existing behaviors. You might give the rat a bit of food each time it approaches the bar. Once the rat is approaching regularly, you would give the treat only when it moves close to the bar, then closer still. Finally, you would require it to touch the bar to get food. By rewarding successive approximations, you reinforce responses that are ever-
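To make the logic of successive approximations concrete, here is a minimal Python sketch. It assumes, purely for illustration, that each of the rat's responses can be scored as a distance from the bar in centimeters (0 means touching it), and that the trainer tightens the requirement by a fixed step once the rat has earned food a few times at the current level. The names `shape`, `shrink`, and `regular` are hypothetical; real shaping relies on a trainer's judgment rather than a fixed rule.

```python
def shape(observed_distances, start_criterion, final_criterion, shrink, regular=3):
    """Reinforce responses by successive approximations (hypothetical model).

    observed_distances: how far (in cm) the rat is from the bar on each response.
    A response is reinforced if it is at least as close as the current criterion.
    After `regular` reinforced responses at one criterion, the criterion is
    tightened by `shrink` cm, never going below `final_criterion`.
    Returns a list of (distance, criterion_in_force, reinforced) tuples.
    """
    criterion = start_criterion
    hits = 0  # reinforced responses at the current criterion
    log = []
    for distance in observed_distances:
        reinforced = distance <= criterion
        log.append((distance, criterion, reinforced))
        if reinforced:
            hits += 1
            # Once the animal meets the current step regularly, ask for a bit more.
            if hits >= regular and criterion > final_criterion:
                criterion = max(final_criterion, criterion - shrink)
                hits = 0
    return log


if __name__ == "__main__":
    distances = [50, 45, 40, 35, 45, 30, 25, 20]
    for distance, criterion, reinforced in shape(distances, 50, 0, 10, regular=2):
        outcome = "food" if reinforced else "nothing"
        print(f"{distance:>3} cm (criterion {criterion:>2} cm): {outcome}")
```

With `regular=2`, this sample run reinforces the rat's first approaches from 50 cm away, then requires 40 cm, then 30 cm, and so on. The one response that falls short of the criterion in force (45 cm when 30 cm is required) earns nothing.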

Shaping can also help us understand what nonverbal organisms perceive. Can a dog see red and green? Can a baby hear the difference between lower-

Skinner noted that we continually reinforce and shape others’ everyday behaviors, though we may not mean to do so. Isaac’s whining annoys his dad, but look how his dad typically responds:

Isaac: Could you take me to the mall?

Dad: (Continues reading paper.)

Isaac: Dad, I need to go to the mall.

Dad: (distracted) Uh, yeah, just a minute.

Isaac: DAAAD! The mall!!

Dad: Show me some manners! Okay, where are my keys . . .

Isaac’s whining is reinforced, because he gets something desirable—

Or consider a teacher who sticks gold stars on a wall chart beside the names of children scoring 100 percent on spelling tests. As everyone can then see, some children always score 100 percent. The others, who take the same test and may have worked harder than the academic all-

Types of Reinforcers

LOQ 6-

positive reinforcement increases behaviors by presenting positive stimuli, such as food. A positive reinforcer is anything that, when presented after a response, strengthens the response.

negative reinforcement increases behaviors by stopping or reducing negative stimuli, such as shock. A negative reinforcer is anything that, when removed after a response, strengthens the response. (Note: Negative reinforcement is not punishment.)

Up to now, we’ve mainly been discussing positive reinforcement, which strengthens a response by presenting a typically pleasurable stimulus immediately afterward. But, as the whining Isaac story shows us, there are two basic kinds of reinforcement (TABLE 6.1). Negative reinforcement strengthens a response by reducing or removing something undesirable or unpleasant. Isaac’s whining was positively reinforced, because Isaac got something desirable—

| Operant Conditioning Term | Description | Examples |
|---|---|---|
| Positive reinforcement | Add a desirable stimulus | Pet a dog that comes when you call it; pay the person who paints your house. |
| Negative reinforcement | Remove an aversive stimulus | Take painkillers to end pain; fasten seat belt to end loud beeping. |

Note that negative reinforcement is not punishment. (Some friendly advice: Repeat the italicized words in your mind.) Rather, negative reinforcement—

Retrieve + Remember

Question 6.9

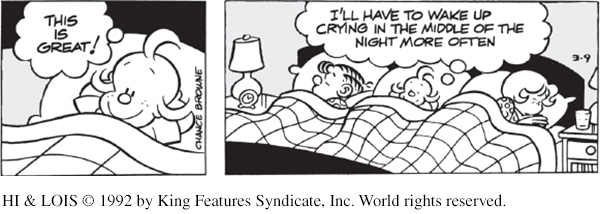

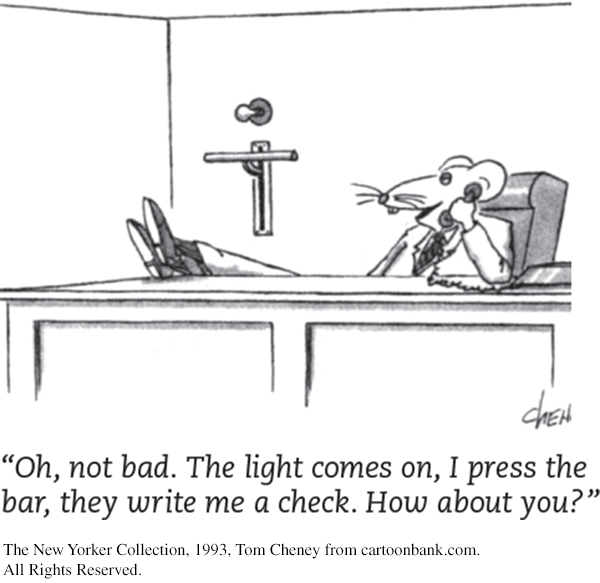

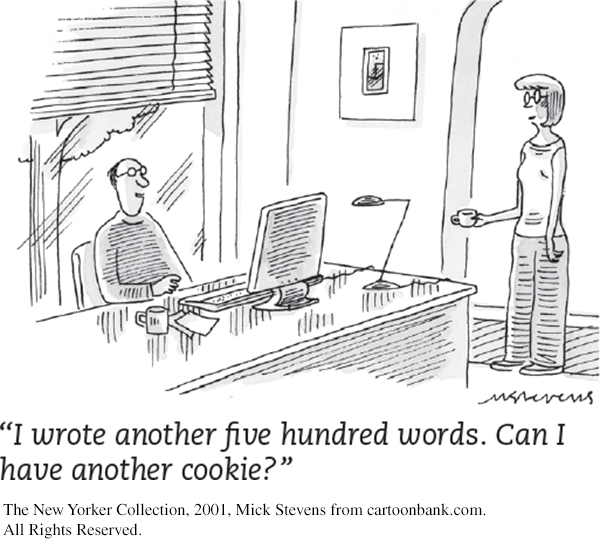

•How is operant conditioning at work in this cartoon?

ANSWER: The baby negatively reinforces her parents’ behavior when she stops crying once they grant her wish. Her parents positively reinforce her cries by letting her sleep with them.

primary reinforcer an event that is innately reinforcing, often by satisfying a biological need.

conditioned reinforcer (also known as secondary reinforcer) an event that gains its reinforcing power through its link with a primary reinforcer.

PRIMARY AND CONDITIONED REINFORCERS Getting food when hungry or having a painful headache go away is innately (naturally) satisfying. These primary reinforcers are unlearned. Conditioned reinforcers, also called secondary reinforcers, get their power through learned associations with primary reinforcers. If a rat in a Skinner box learns that a light reliably signals a food delivery, the rat will work to turn on the light. The light has become a secondary reinforcer linked with food. Our lives are filled with conditioned reinforcers—

IMMEDIATE AND DELAYED REINFORCERS In shaping experiments, rats are conditioned with immediate rewards. You want the rat to press the bar. So, when it sniffs the bar (a step toward the target behavior), you immediately give it a food pellet. If a distraction delays your giving the rat its prize, the rat won’t learn to link the bar sniffing with the food pellet reward.

Unlike rats, humans do respond to delayed reinforcers. We associate the paycheck at the end of the week, the good grade at the end of the semester, the trophy at the end of the season with our earlier actions. Indeed, learning to control our impulses in order to achieve more valued rewards is a big step toward maturity (Logue, 1998a,b). Chapter 3 described a famous finding in which some children did curb their impulses and delay gratification, choosing two marshmallows later over one now. Those same children achieved greater educational and career success later in life (Mischel, 2014).

Sometimes, however, small but immediate pleasures (the enjoyment of watching late-

Reinforcement Schedules

LOQ 6-

reinforcement schedule a pattern that defines how often a desired response will be reinforced.

continuous reinforcement reinforcing a desired response every time it occurs.

In most of our examples, the desired response has been reinforced every time it occurs. But reinforcement schedules vary. With continuous reinforcement, learning occurs rapidly, which makes it the best choice for mastering a behavior. But there’s a catch: Extinction also occurs rapidly. When reinforcement stops—

partial (intermittent) reinforcement reinforcing a response only part of the time; results in slower acquisition but much greater resistance to extinction than does continuous reinforcement.

Real life rarely provides continuous reinforcement. Salespeople don’t make a sale with every pitch. But they persist because their efforts are occasionally rewarded. And that’s the good news about partial (intermittent) reinforcement schedules, in which responses are sometimes reinforced, sometimes not. Learning is slower than with continuous reinforcement, but resistance to extinction is greater. Imagine a pigeon that has learned to peck a key to obtain food. If you gradually phase out the food delivery until it occurs only rarely, in no predictable pattern, the pigeon may peck 150,000 times without a reward (Skinner, 1953). Slot machines reward gamblers in much the same way—

Lesson for parents: Partial reinforcement also works with children. What happens when we occasionally give in to children’s tantrums for the sake of peace and quiet? We have intermittently reinforced the tantrums. This is the best way to make a behavior persist.

Skinner (1961) and his collaborators compared four schedules of partial reinforcement. Some are rigidly fixed, some unpredictably variable (TABLE 6.2).

|  | Fixed | Variable |
|---|---|---|
| Ratio | Every so many: reinforcement after every nth behavior, such as buy 10 coffees, get 1 free, or pay workers per product unit produced | After an unpredictable number: reinforcement after a random number of behaviors, as when playing slot machines or fly fishing |
| Interval | Every so often: reinforcement for behavior after a fixed time, such as Tuesday discount prices | Unpredictably often: reinforcement for behavior after a random amount of time, as when checking our phone for a message |

fixed-

Fixed-

variable-

Variable-

fixed-

Fixed-

variable-

Variable-

In general, response rates are higher when reinforcement is linked to the number of responses (a ratio schedule) rather than to time (an interval schedule). But responding is more consistent when reinforcement is unpredictable (a variable schedule) than when it is predictable (a fixed schedule).
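The four partial schedules in TABLE 6.2 are, at bottom, rules for deciding whether a given response earns a reinforcer, so they can be sketched in a few lines of Python. This is a simplified model, not Skinner's apparatus. It assumes responses arrive at known times (in seconds), that a variable ratio is drawn uniformly so it averages `mean_n` responses, and that a variable interval is drawn uniformly so it averages `mean_interval` seconds. All class and function names are hypothetical.

```python
import random


class FixedRatio:
    """Reinforce every nth response (e.g., buy 10 coffees, get 1 free)."""

    def __init__(self, n):
        self.n = n
        self.count = 0

    def respond(self, t):
        # t is unused: ratio schedules count responses, not time.
        self.count += 1
        if self.count >= self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reinforce after an unpredictable number of responses averaging mean_n."""

    def __init__(self, mean_n, rng):
        self.mean_n = mean_n
        self.rng = rng
        self._reset()

    def _reset(self):
        # Uniform on 1 .. 2*mean_n - 1, whose average is mean_n.
        self.required = self.rng.randint(1, 2 * self.mean_n - 1)
        self.count = 0

    def respond(self, t):
        # t is unused here as well.
        self.count += 1
        if self.count >= self.required:
            self._reset()
            return True
        return False


class FixedInterval:
    """Reinforce the first response made after a fixed time has elapsed."""

    def __init__(self, interval):
        self.interval = interval
        self.available_at = interval

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self.interval  # timer restarts at the payoff
            return True
        return False


class VariableInterval:
    """Reinforce the first response after an unpredictable wait averaging mean_interval."""

    def __init__(self, mean_interval, rng):
        self.mean_interval = mean_interval
        self.rng = rng
        self.available_at = self._wait()

    def _wait(self):
        return self.rng.uniform(0, 2 * self.mean_interval)

    def respond(self, t):
        if t >= self.available_at:
            self.available_at = t + self._wait()
            return True
        return False


def count_reinforcers(schedule, response_times):
    """Feed response times (in seconds, ascending) to a schedule; count payoffs."""
    return sum(schedule.respond(t) for t in response_times)


if __name__ == "__main__":
    steady = [float(i) for i in range(1, 101)]  # 1 response per second for 100 s
    eager = [i * 0.5 for i in range(1, 201)]    # 2 responses per second for 100 s
    rng = random.Random(0)
    for name, make in [
        ("fixed-ratio 10", lambda: FixedRatio(10)),
        ("variable-ratio ~10", lambda: VariableRatio(10, rng)),
        ("fixed-interval 10 s", lambda: FixedInterval(10)),
        ("variable-interval ~10 s", lambda: VariableInterval(10, rng)),
    ]:
        print(name, count_reinforcers(make(), steady), count_reinforcers(make(), eager))
```

In this model, doubling the response rate doubles the payoff on the fixed-ratio schedule (10 reinforcers become 20) but not on the fixed-interval schedule (10 either way). That is one way to see why ratio schedules tend to produce higher response rates than interval schedules.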

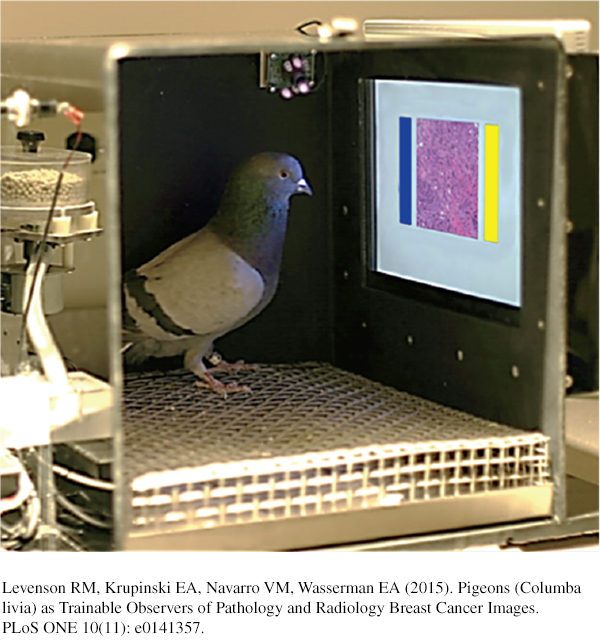

Animal behaviors differ, yet Skinner (1956) contended that the reinforcement principles of operant conditioning are universal. It matters little, he said, what response, what reinforcer, or what species you use. The effect of a given reinforcement schedule is pretty much the same: “Pigeon, rat, monkey, which is which? It doesn’t matter. . . . Behavior shows astonishingly similar properties.”

Retrieve + Remember

Question 6.10

•People who send spam are reinforced by which schedule? Home bakers checking the oven to see if the cookies are done are on which schedule? Coffee shops that offer a free drink after every 10 drinks purchased are using which reinforcement schedule?

ANSWERS: Spammers are reinforced on a variable-

Punishment

LOQ 6-

punishment an event that decreases the behavior it follows.

Reinforcement increases a behavior; punishment does the opposite. A punisher is any consequence that decreases the frequency of the behavior it follows (TABLE 6.3). Swift and sure punishers can powerfully restrain unwanted behaviors. The rat that is shocked after touching a forbidden object and the child who is burned by touching a hot stove will learn not to repeat those behaviors.

| Type of Punisher | Description | Examples |
|---|---|---|
| Positive punishment | Administer an aversive stimulus | Spray water on a barking dog; give a traffic ticket for speeding. |
| Negative punishment | Withdraw a rewarding stimulus | Take away a misbehaving teen’s driving privileges; revoke a library card for nonpayment of fines. |

Criminal behavior, much of it impulsive, is also influenced more by swift and sure punishers than by the threat of severe sentences (Darley & Alter, 2013). Thus, when Arizona introduced an exceptionally harsh sentence for first-

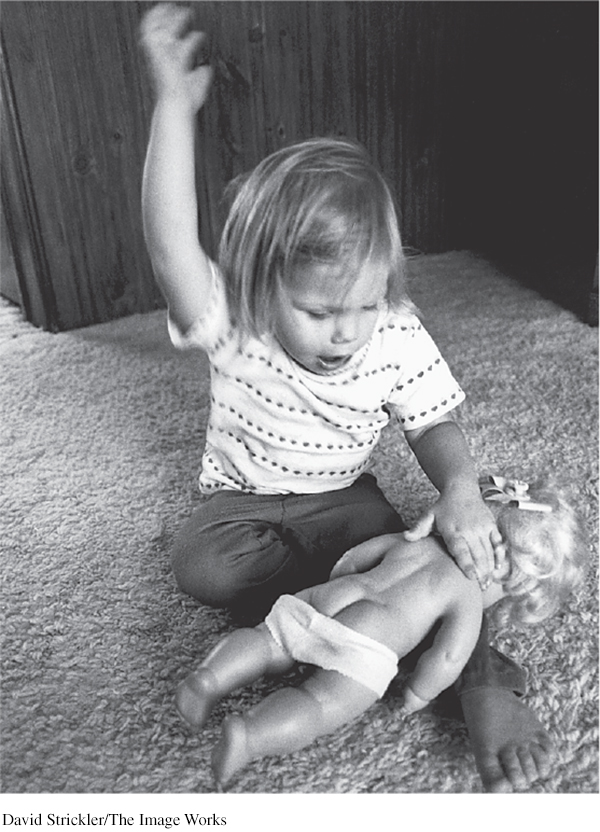

What do punishment studies tell us about parenting practices? Should we physically punish children to change their behavior? Many psychologists and supporters of nonviolent parenting say No, pointing out four major drawbacks of physical punishment (Gershoff, 2002; Marshall, 2002).

Punished behavior is suppressed, not forgotten. This temporary state may (negatively) reinforce parents’ punishing behavior. The child swears, the parent swats, the parent hears no more swearing and feels the punishment successfully stopped the behavior. No wonder spanking is a hit with so many parents, with 70 percent of American adults agreeing that sometimes children need a “good, hard spanking” (Child Trends, 2013).

Punishment teaches discrimination among situations. In operant conditioning, discrimination occurs when we learn that some responses, but not others, will be reinforced. Did the punishment effectively end the child’s swearing? Or did the child simply learn that while it’s not okay to swear around the house, it’s okay elsewhere?

Punishment can teach fear. In operant conditioning, generalization occurs when our responses to similar stimuli are also reinforced. A punished child may associate fear not only with the undesirable behavior but also with the person who delivered the punishment or the place it occurred. Thus, children may learn to fear a punishing teacher and try to avoid school, or may become more anxious (Gershoff et al., 2010). For such reasons, most European countries and most U.S. states now ban hitting children in schools and child-care institutions (EndCorporalPunishment.org). As of 2015, 47 countries outlaw hitting by parents, giving children the same legal protection given to adults.

Physical punishment may increase aggression by modeling violence as a way to cope with problems. Studies find that spanked children are at increased risk for aggression (MacKenzie et al., 2013). We know, for example, that many aggressive delinquents and abusive parents come from abusive families (Straus et al., 1997).

Some researchers question this logic. Physically punished children may be more aggressive, they say, for the same reason that people who have undergone psychotherapy are more likely to suffer depression—

See LaunchPad’s Video: Correlational Studies for a helpful tutorial animation.


The debate continues. Some researchers note that frequent spankings predict future aggression—

Parents of delinquent youths may not know how to achieve desirable behaviors without screaming, hitting, or threatening their children with punishment (Patterson et al., 1982). Training programs can help them translate dire threats (“Apologize right now or I’m taking that cell phone away!”) into positive incentives (“You’re welcome to have your phone back when you apologize”). Stop and think about it. Aren’t many threats of punishment just as forceful, and perhaps more effective, when rephrased positively? Thus, “If you don’t get your homework done, I’m not giving you money for a movie!” could be phrased more positively as . . . .

In classrooms, too, teachers can give feedback by saying “No, but try this . . .” and “Yes, that’s it!” Such responses reduce unwanted behavior while reinforcing more desirable alternatives. Remember: Punishment tells you what not to do; reinforcement tells you what to do.

What punishment often teaches, said Skinner, is how to avoid it. The bottom line: Most psychologists now favor an emphasis on reinforcement. Notice people doing something right and affirm them for it.

Retrieve + Remember

Question 6.11

•Fill in the blanks below with one of the following terms: negative reinforcement (NR), positive punishment (PP), and negative punishment (NP). The first answer, positive reinforcement (PR), is provided for you.

| Type of Stimulus | Give It | Take It Away |
|---|---|---|
| Desired (for example, a teen’s use of the car) | 1. PR | 2. |
| Undesired/aversive (for example, an insult) | 3. | 4. |

ANSWERS: 1. PR (positive reinforcement); 2. NP (negative punishment); 3. PP (positive punishment); 4. NR (negative reinforcement)
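The grid in Question 6.11 follows from two separate yes/no questions: Is a stimulus given (positive) or taken away (negative)? And does the behavior become more likely (reinforcement) or less likely (punishment)? The minimal Python sketch below encodes that reasoning. It assumes, for simplicity, that every consequence involves a stimulus that is clearly either desired or aversive; the function name `classify_consequence` is hypothetical.

```python
def classify_consequence(stimulus, action):
    """Name the operant consequence for a stimulus ('desired' or 'aversive')
    that is either given ('give') or removed ('take away') after a behavior."""
    if stimulus not in ("desired", "aversive"):
        raise ValueError("stimulus must be 'desired' or 'aversive'")
    if action not in ("give", "take away"):
        raise ValueError("action must be 'give' or 'take away'")
    # "Positive" and "negative" describe adding or removing, not good or bad.
    sign = "positive" if action == "give" else "negative"
    # Behavior is strengthened when we gain something desired or escape something aversive.
    strengthens = (stimulus == "desired") == (action == "give")
    return f"{sign} {'reinforcement' if strengthens else 'punishment'}"


if __name__ == "__main__":
    # Examples drawn from TABLES 6.1 and 6.3.
    print(classify_consequence("desired", "give"))        # petting a dog that comes when called
    print(classify_consequence("aversive", "take away"))  # seat belt fastened, beeping stops
    print(classify_consequence("aversive", "give"))       # traffic ticket for speeding
    print(classify_consequence("desired", "take away"))   # revoking a teen's driving privileges
```

Writing the rule this way makes the key point of this section explicit: negative reinforcement (removing something aversive) strengthens behavior, so it is not punishment.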

Skinner’s Legacy

LOQ 6-

B. F. Skinner stirred a hornet’s nest with his outspoken beliefs. He repeatedly insisted that external influences (not internal thoughts and feelings) shape behavior. And he urged people to use operant conditioning principles to influence others’ behavior at school, work, and home. Knowing that behavior is shaped by its results, he argued that we should use rewards to evoke more desirable behavior.

Skinner’s critics objected, saying that by neglecting people’s personal freedom and trying to control their actions, he treated them as less than human. Skinner’s reply: External consequences already control people’s behavior. So why not steer those consequences toward human betterment? Wouldn’t reinforcers be more humane than the punishments used in homes, schools, and prisons? And if it is humbling to think that our history has shaped us, doesn’t this very idea also give us hope that we can shape our future?

To review and experience simulations of operant conditioning, visit LaunchPad’s PsychSim 6: Operant Conditioning and also PsychSim 6: Shaping.


Applications of Operant Conditioning

In later chapters we will see how psychologists apply operant conditioning principles to help people reduce high blood pressure or gain social skills. Reinforcement techniques are also at work in schools, workplaces, and homes, and these principles can support our self-

AT SCHOOL More than 50 years ago, Skinner and others worked toward a day when “machines and textbooks” would shape learning in small steps, by immediately reinforcing correct responses. Such machines and texts, they said, would revolutionize education and free teachers to focus on each student’s special needs. “Good instruction demands two things,” said Skinner (1989). “Students must be told immediately whether what they do is right or wrong and, when right, they must be directed to the step to be taken next.”

Skinner might be pleased to know that many of his ideals for education are now possible. Teachers used to find it difficult to pace material to each student’s rate of learning, and to provide prompt feedback. Online adaptive quizzing, such as the LearningCurve system available with this text, does both. Students move through quizzes at their own pace, according to their own level of understanding. And they get immediate feedback on their efforts, including personalized study plans.

AT WORK Skinner’s ideas also show up in the workplace. Knowing that reinforcers influence productivity, many organizations have invited employees to share the risks and rewards of company ownership. Others have focused on reinforcing a job well done. Rewards are most likely to increase productivity if the desired performance is both well defined and achievable. How might managers successfully motivate their employees? Reward specific, achievable behaviors, not vaguely defined “merit.”

Operant conditioning also reminds us that reinforcement should be immediate. IBM legend Thomas Watson understood. When he observed an achievement, he wrote the employee a check on the spot (Peters & Waterman, 1982). But rewards don’t have to be material, or lavish. An effective manager may simply walk the floor and sincerely praise people for good work, or write notes of appreciation for a completed project. As Skinner said, “How much richer would the whole world be if the reinforcers in daily life were more effectively contingent on productive work?”

IN PARENTING As we have seen, parents can learn from operant conditioning practices. Parent-

To disrupt this cycle, parents should remember the basic rule of shaping: Notice people doing something right and affirm them for it. Give children attention and other reinforcers when they are behaving well (Wierson & Forehand, 1994). Target a specific behavior, reward it, and watch it increase. When children misbehave or are defiant, do not yell at or hit them. Simply explain what they did wrong and give them a time-

TO CHANGE YOUR OWN BEHAVIOR Want to stop smoking? Eat less? Study or exercise more? To reinforce your own desired behaviors and extinguish the undesired ones, psychologists suggest applying operant conditioning in five steps.

Conditioning principles may also be applied in clinical settings. Explore some of these applications in LaunchPad’s IMMERSIVE LEARNING: How Would You Know If People Can Learn to Reduce Anxiety?


State a realistic goal in measurable terms and announce it. You might, for example, aim to boost your study time by an hour a day. Share that goal with friends to increase your commitment and chances of success.

Decide how, when, and where you will work toward your goal. Take time to plan. Those who list specific steps showing how they will reach their goals more often achieve them (Gollwitzer & Oettingen, 2012).

Monitor how often you engage in your desired behavior. You might log your current study time, noting under what conditions you do and don’t study. (When I [DM] began writing textbooks, I logged how I spent my time each day and was amazed to discover how much time I was wasting. I [ND] experienced a similar rude awakening when I started tracking my daily writing hours.)

Reinforce the desired behavior. To increase your study time, give yourself a reward (a snack or some activity you enjoy) only after you finish your extra hour of study. Agree with your friends that you will join them for weekend activities only if you have met your realistic weekly studying goal.

Reduce the rewards gradually. As your new behaviors become habits, give yourself a mental pat on the back instead of a cookie.
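The monitoring, reinforcing, and fading steps above amount to a simple bookkeeping rule, sketched here in Python under loudly hypothetical assumptions: you log your extra study minutes each day, a day counts only if it meets your goal, and tangible rewards fade to a mental pat on the back once the goal has been met several days in a row. The function name, the streak rule, and the reward labels are illustrations, not part of the five-step method itself.

```python
def daily_rewards(study_minutes, goal_minutes, fade_after):
    """Decide each day's reward from a log of extra study minutes (hypothetical rule).

    - Goal missed: no reward, and the streak of successful days resets.
    - Goal met while the habit is new: a tangible reward (a snack).
    - Goal met after `fade_after` successful days in a row: a mental pat on the back.
    """
    streak = 0
    rewards = []
    for minutes in study_minutes:
        if minutes >= goal_minutes:
            streak += 1
            rewards.append("snack" if streak <= fade_after else "pat on the back")
        else:
            streak = 0
            rewards.append(None)  # reward only after the desired behavior
    return rewards


if __name__ == "__main__":
    log = [60, 45, 60, 60, 60, 60]  # extra study minutes on six days
    print(daily_rewards(log, goal_minutes=60, fade_after=2))
```

Resetting the streak after a missed day is one design choice among many; it returns you to tangible rewards whenever the new habit slips.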

Retrieve + Remember

Question 6.12

•Ethan constantly misbehaves at preschool even though his teacher scolds him repeatedly. Why does Ethan’s misbehavior continue, and what can his teacher do to stop it?

ANSWER: If Ethan is seeking attention, the teacher’s scolding may be reinforcing rather than punishing. To change Ethan’s behavior, his teacher could offer reinforcement (such as praise) each time he behaves well. The teacher might encourage Ethan toward increasingly appropriate behavior through shaping, or by rephrasing rules as rewards instead of punishments (“You can have a snack if you play nicely with the other children” [reward] rather than “You will not get a snack if you misbehave!” [punishment]).

Contrasting Classical and Operant Conditioning

LOQ 6-

Both classical and operant conditioning are forms of associative learning (TABLE 6.4). In both, we acquire behaviors that may later become extinct and then spontaneously reappear. We often generalize our responses but learn to discriminate among different stimuli.

|  | Classical Conditioning | Operant Conditioning |
|---|---|---|
| Basic idea | Learning associations between events we don’t control | Learning associations between our own behavior and its consequences |
| Response | Involuntary, automatic | Voluntary, operates on environment |
| Acquisition | Associating events; NS is paired with US and becomes CS | Associating response with a consequence (reinforcer or punisher) |
| Extinction | CR decreases when CS is repeatedly presented alone | Responding decreases when reinforcement stops |
| Spontaneous recovery | The reappearance, after a rest period, of an extinguished CR | The reappearance, after a rest period, of an extinguished response |
| Generalization | Responding to stimuli similar to the CS | Responding to similar stimuli to achieve or prevent a consequence |
| Discrimination | Learning to distinguish between a CS and other stimuli that do not signal a US | Learning that some responses, but not others, will be reinforced |

Classical and operant conditioning also differ: Through classical conditioning, we associate different events that we don’t control, and we respond automatically (respondent behaviors). Through operant conditioning, we link our behaviors—

As we shall see next, our biology and our thought processes influence both classical and operant conditioning.

Retrieve + Remember

Question 6.13

•Salivating in response to a tone paired with food is a(n) _____ behavior; pressing a bar to obtain food is a(n) _____ behavior.

ANSWERS: respondent; operant