From our earlier discussion of critical experiments, you have a sense of how important it is to have a well-designed experiment.

In our initial discussion of experiments, we just described what the experiment was, without examining why the researchers chose to perform the experiment the way they did. In this section, we explore some of the ways to maximize an experiment’s power, and we’ll find that, with careful planning, it is possible to increase an experiment’s ability to discern causes and effects.

First, let’s consider some elements common to most experiments.

1. Treatment: any experimental condition applied to the research subjects. It might be the shaving of one of an individual’s eyebrows, the pattern used to show “suspects” (all at once or one at a time) to the witness of a staged crime, or a dosage of echinacea given to an individual.
2. Experimental group: a group of subjects who are exposed to a particular treatment, for example, the individuals given echinacea rather than placebo in the experiment described above. It is sometimes referred to as the “treatment group.”
3. Control group: a group of subjects who are treated identically to the experimental group, with one exception: they are not exposed to the treatment. An example would be the individuals given placebo rather than echinacea.
4. Variables: the characteristics of an experimental system that are subject to change. They might be, for example, the amount of echinacea a person is given, or a measure of the coarseness of an individual’s hair. When we speak of “controlling” variables (the most important feature of a good experiment), we are describing the attempt to minimize any differences (which are also called “variables”) between a control group and an experimental group other than the treatment itself. That way, any differences between the groups in the outcomes we observe are most likely due to the treatment.

Let’s look at a real-

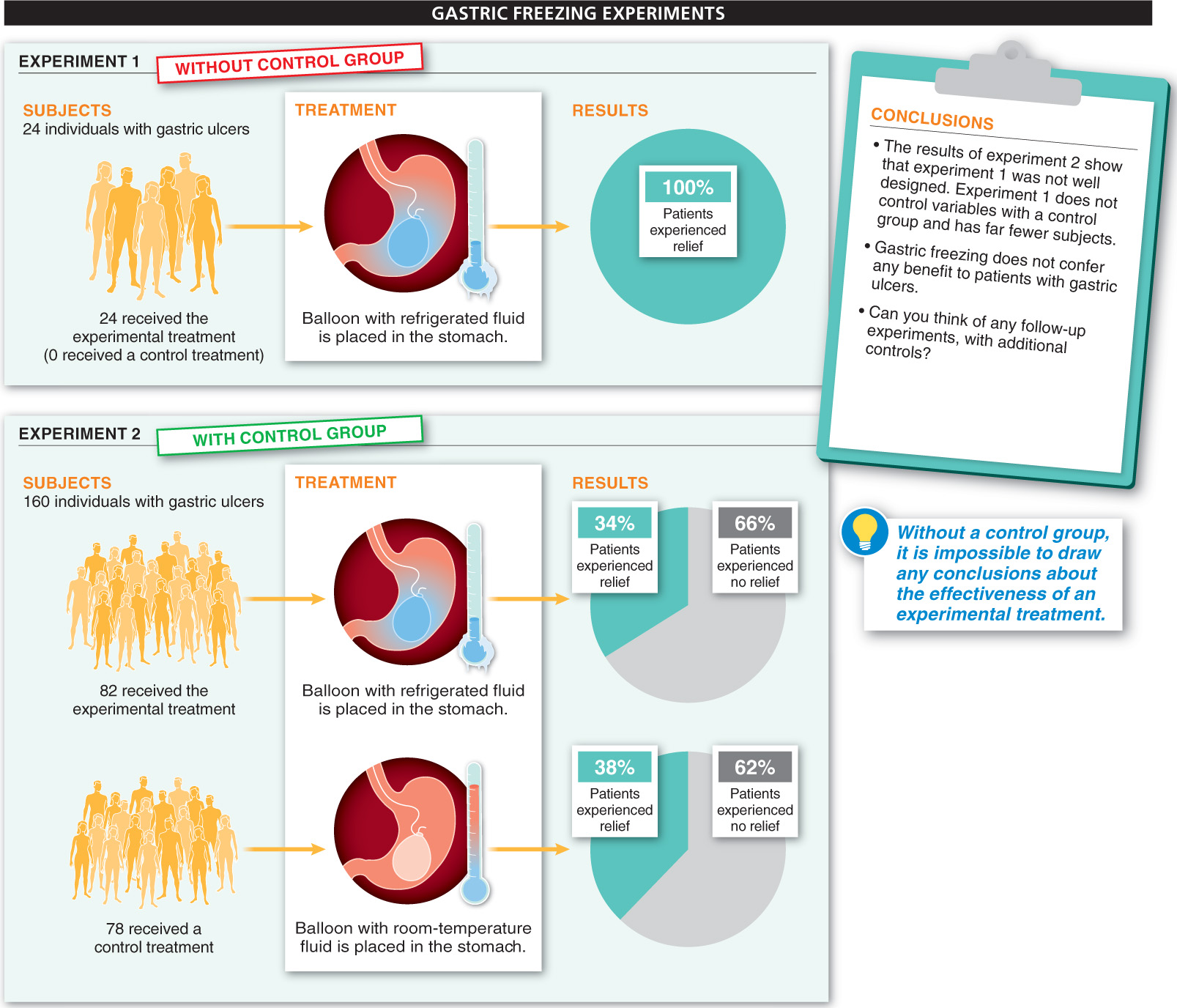

Stomach ulcers are erosions of the stomach lining that can be very painful. In the late 1950s, a doctor reported in the Journal of the American Medical Association that stomach ulcers could be effectively treated by having a patient swallow a balloon connected to some tubes that circulated a refrigerated fluid. He argued that by super-

Although there was a clear hypothesis (“Gastric cooling reduces the severity of ulcers”) and some compelling observations (all 24 patients experienced relief), this experiment was not designed well. In particular, there was no control group with which to compare the patients who received the treatment. In other words, who is to say that simply going to the doctor or having a balloon put into your stomach doesn’t improve ulcers? The results of this doctor’s experiment do not rule out these interpretations.

A few years later, another researcher decided to do a more carefully controlled study. He recruited 160 ulcer patients and gave 82 of them the gastric freezing treatment. The other 78 received a similar treatment in which they swallowed the balloon but had room-temperature fluid, rather than refrigerated fluid, circulated through it.

Surprisingly, although the condition of 34% of the patients in the gastric freezing group improved, so did the condition of 38% of the patients in the control group. These results indicated that gastric freezing conferred no benefit compared with an otherwise identical treatment that did not involve freezing. Not surprisingly, the practice was abandoned.
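The comparison above rests on simple arithmetic: the control group actually did 4 percentage points better. One common way to ask whether a gap like this could plausibly arise by chance (a technique the text itself does not describe) is a two-proportion z-test. The sketch below assumes that test and uses the rounded percentages and group sizes from the study, so the result is approximate; the function name `two_proportion_z_test` is hypothetical.

```python
import math


def two_proportion_z_test(p1: float, n1: int, p2: float, n2: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference p1 - p2,
    using the pooled-proportion normal approximation (an assumed method)."""
    # Pooled improvement rate, assuming the treatment has no effect
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    # Standard error of the difference between the two rates
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Standard normal CDF computed from the error function
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value


# Rounded figures from the controlled gastric freezing study
z, p = two_proportion_z_test(0.34, 82, 0.38, 78)
print(f"difference = {0.34 - 0.38:+.2f}, z = {z:.2f}, p = {p:.2f}")
```

With these inputs the z value comes out near -0.53 and the p-value near 0.6, so a gap of this size in either direction is entirely consistent with gastric freezing having no effect at all, which matches the researcher's conclusion.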

A surprising result from the gastric freezing study—

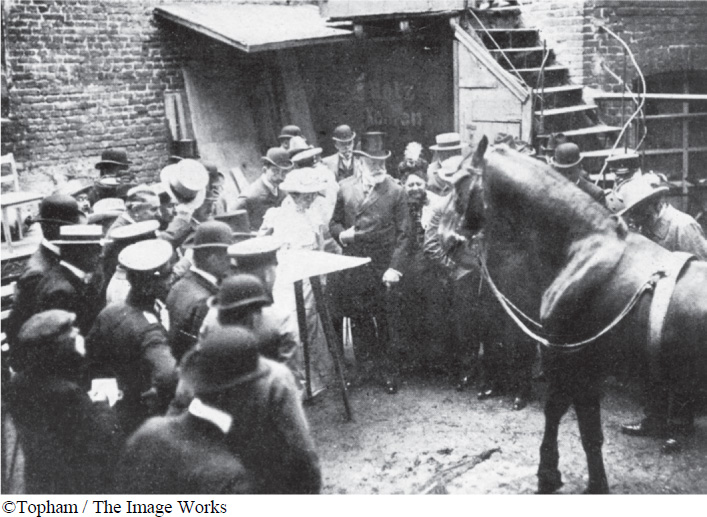

Another pitfall to avoid in designing an experiment is allowing the people conducting the experiment to influence its outcome, which experimenters can do without realizing it. This phenomenon is illustrated by the story of a horse named Clever Hans. Hans was considered clever because his owner claimed that Hans could perform remarkable intellectual feats, including multiplication and division. When given a numerical problem, the horse would tap out the answer with his foot. Controlled experiments, however, demonstrated that Hans could solve problems only when he could see the person asking the question and when that person knew the answer (FIGURE 1-15). It turned out that the questioners, unintentionally and through very subtle body language, revealed the answers.

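The Clever Hans phenomenon highlights the benefits of instituting even greater controls when designing an experiment. In particular, it highlights the value of blind experimental design, in which the experimental subjects do not know which treatment (if any) they are receiving, and double-blind experimental design, in which neither the subjects nor the experimenters who interact with them know which treatment each subject is receiving.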

Another hallmark of an extremely well-

The use of randomized, controlled, double-

Suppose you want to know whether a new drug is effective in fighting the human immunodeficiency virus (HIV), the virus that leads to AIDS. Which experiment would be better, one in which the drug is added to HIV-

Good experimental design is more complex than simply following a single recipe. The only way to determine the quality of an experiment is to assess how well the variables that were not of interest were controlled, and how well the experimental treatment tested the relationship of interest.

TAKE-HOME MESSAGE 1.11

To draw clear conclusions from experiments, it is essential to hold constant all those variables we are not interested in. Control and experimental groups should differ only with respect to the treatment of interest. Differences in outcomes between the groups can then be attributed to the treatment.
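Randomized assignment is one standard way to make control and experimental groups differ only in the treatment: if chance, not the experimenter, decides who goes where, other variables tend to be spread evenly across both groups. The sketch below is a hypothetical illustration, not a procedure from the text. It shuffles subject IDs into two groups and hides which group receives the treatment behind neutral codes "A" and "B"; the helper name `assign_blinded_groups` and the coding scheme are assumptions. Keeping subjects themselves blind would still require a placebo, as in the echinacea and gastric freezing examples.

```python
import random


def assign_blinded_groups(subject_ids, seed=None):
    """Hypothetical helper: randomly split subjects into two groups whose
    sizes differ by at most one, labeled only with neutral codes."""
    rng = random.Random(seed)
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)  # chance, not the experimenter, decides membership
    half = len(shuffled) // 2
    # Staff who give treatments and score outcomes see only "A" or "B"
    assignments = {sid: "A" for sid in shuffled[:half]}
    assignments.update({sid: "B" for sid in shuffled[half:]})
    # Which code means "treatment" is also randomized; this key would be
    # held by someone outside the study until the results are analyzed
    key = dict(zip(("A", "B"), rng.sample(["treatment", "control"], 2)))
    return assignments, key


assignments, key = assign_blinded_groups(range(1, 161), seed=42)
print(sum(1 for code in assignments.values() if code == "A"))
```

For 160 subjects, this prints 80: each code covers half the subjects, and nobody handling the patients can tell from the labels alone which half received the treatment.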

In crafting a well-

First, a treatment is any experimental condition that is applied to the research subjects. Second, the experimental group is the group of subjects who are exposed to a particular treatment. Third, the control group is a group of subjects who are treated identically to the experimental group, except that they are not exposed to the treatment. Finally, variables are the characteristics of an experimental system that are subject to change.