1.4 Return of the Mind: Psychology Expands

Watson, Skinner, and the behaviorists dominated psychology from the 1930s to the 1950s. Psychologist Ulric Neisser recalled the atmosphere when he was a student at Swarthmore in the early 1950s:

Behaviorism was the basic framework for almost all of psychology at the time. It was what you had to learn. That was the age when it was supposed that no psychological phenomenon was real unless you could demonstrate it in a rat. (quoted in Baars, 1986, p. 275)

Behaviorism wouldn’t dominate the field for much longer, however, and Neisser himself would play an important role in developing an alternative perspective. Why was behaviorism replaced? Although behaviorism allowed psychologists to measure, predict, and control behavior, it did this by ignoring some important things. First, it ignored the mental processes that had fascinated psychologists such as Wundt and James and, in so doing, found itself unable to explain some very important phenomena, such as how children learn language. Second, it ignored the evolutionary history of the organisms it studied and was thus unable to explain why, for example, a rat could learn to associate nausea with food much more quickly than it could learn to associate nausea with a tone or a light. As we will see, the approaches that ultimately replaced behaviorism met these kinds of problems head-

The Pioneers of Cognitive Psychology

Even at the height of behaviorist domination, there were a few revolutionaries whose research and writings were focused on mental processes. German psychologist Max Wertheimer (1880–1943) focused on the study of illusions, errors of perception, memory, or judgment in which subjective experience differs from objective reality. In one of Wertheimer’s experiments, a person was shown two lights that flashed quickly on a screen, one after the other. One light was flashed through a vertical slit, the other through a diagonal slit. When the time between two flashes was relatively long (one fifth of a second or more), an observer would see that it was just two lights flashing in alternation. But when Wertheimer reduced the time between flashes to around one twentieth of a second, observers saw a single flash of light moving back and forth (Fancher, 1979; Sarris, 1989). Wertheimer reasoned that the perceived motion could not be explained in terms of the separate elements that cause the illusion (the two flashing lights) but instead that the moving flash of light is perceived as a whole rather than as the sum of its two parts. This unified whole, which in German is called Gestalt, makes up the perceptual experience. Wertheimer’s interpretation of the illusion led to the development of Gestalt psychology, a psychological approach that emphasizes that we often perceive the whole rather than the sum of the parts. In other words, the mind imposes organization on what it perceives, so people don’t see what the experimenter actually shows them (two separate lights); instead, they see the elements as a unified whole (one moving light).


Why might people not see what an experimenter actually showed them?

Another pioneer who focused on the mind was Sir Frederic Bartlett (1886–1969), a British psychologist interested in memory. Bartlett was dissatisfied with existing research, especially the research of German psychologist Hermann Ebbinghaus (1850–1909), who had performed groundbreaking experiments on memory in 1885 (described in the Memory chapter). Serving as his own research subject, Ebbinghaus tried to discover how quickly and how well he could memorize and recall meaningless information, such as the three-

Jean Piaget (1896–1980) was a Swiss psychologist who studied the perceptual and cognitive errors of children in order to gain insight into the nature and development of the human mind. For example, in one of his tasks, Piaget gave a 3-


German psychologist Kurt Lewin (1890–1947) was also a pioneer in the study of thought at a time when thought had been banished from psychology. Lewin (1936) argued that a person’s behavior in the world could be predicted best by understanding the person’s subjective experience of the world. A television soap opera is a meaningless series of unrelated physical movements unless one thinks about the characters’ experiences (how Karen feels about Bruce; what Van was planning to say to Kathy about Emily; and whether Linda’s sister, Nancy, will always hate their mother for meddling in her marriage, etc.). Lewin realized that it was not the stimulus, but rather the person’s construal of the stimulus, that determined the person’s subsequent behavior. A pinch on the cheek can be pleasant or unpleasant depending on who administers it, under what circumstances, and to which set of cheeks. Lewin used a special kind of mathematics called topology to model the person’s subjective experience, and although his topological theories were not particularly influential, his attempts to model mental life and his insistence that psychologists study how people construe their worlds would have a lasting impact on psychology.

How did the advent of computers change psychology?

But, aside from a handful of pioneers such as these, psychologists happily ignored mental processes until the 1950s, when something important happened: the computer. The advent of computers had enormous practical impact, of course, but it also had an enormous conceptual impact on psychology. People and computers differ in important ways, but both seem to register, store, and retrieve information, leading psychologists to wonder whether the computer might be useful as a model for the human mind. Computers are information-

Technology and the Development of Cognitive Psychology

Although the contributions of psychologists such as Wertheimer, Bartlett, Piaget, and Lewin provided early alternatives to behaviorism, they did not depose it. That job required the Army. During World War II, the military turned to psychologists to help understand how soldiers could best learn to use new technologies, such as radar. Radar operators had to pay close attention to their screens for long periods while trying to decide whether blips were friendly aircraft, enemy aircraft, or flocks of wild geese in need of a good chasing (Ashcraft, 1998; Lachman, Lachman, & Butterfield, 1979). How could radar operators be trained to make quicker and more accurate decisions? The answer to this question clearly required more than the swift delivery of pellets to the radar operator’s food tray. It required that those who designed the equipment think about and talk about cognitive processes, such as perception, attention, identification, memory, and decision making. Behaviorism solved the problem by denying it, so some psychologists decided to deny behaviorism and forge ahead with a new approach.


What did psychologists learn from pilots during World War II?

British psychologist Donald Broadbent (1926–1993) was among the first to study what happens when people try to pay attention to several things at once. For instance, Broadbent observed that pilots can’t attend to many different instruments at once and must actively move the focus of their attention from one to another (Best, 1992). Broadbent (1958) showed that the limited capacity to handle incoming information is a fundamental feature of human cognition and that this limit could explain many of the errors that pilots (and other people) made. At about the same time, American psychologist George Miller (1956) pointed out a striking consistency in our capacity limitations across a variety of situations: We can pay attention to, and briefly hold in memory, about seven (give or take two) pieces of information. Cognitive psychologists began conducting experiments and devising theories to better understand the mind’s limited capacity, a problem that behaviorists had ignored.

As you have already read, the invention of the computer in the 1950s had a profound impact on psychologists’ thinking. A computer is made of hardware (e.g., chips and disk drives today; magnetic tapes and vacuum tubes a half century ago) and software (stored on optical disks today; on punch cards a half-

Ironically, the emergence of cognitive psychology was also energized by the appearance of B. F. Skinner’s (1957) book, Verbal Behavior, which offered a behaviorist analysis of language. A linguist at the Massachusetts Institute of Technology (MIT), Noam Chomsky (b. 1928), published a devastating critique of the book in which he argued that Skinner’s insistence on observable behavior had caused him to miss some of the most important features of language. According to Chomsky, language relies on mental rules that allow people to understand and produce novel words and sentences. The ability of even the youngest child to generate new sentences that he or she had never heard before flew in the face of the behaviorist claim that children learn to use language by reinforcement. Chomsky provided a clever, detailed, and thoroughly cognitive account of language that could explain many of the phenomena that the behaviorist account could not (Chomsky, 1959).

These developments during the 1950s set the stage for an explosion of cognitive studies during the 1960s. Cognitive psychologists did not return to the old introspective procedures used during the 19th century, but instead developed new and ingenious methods that allowed them to study cognitive processes. The excitement of the new approach was summarized in a landmark book, Cognitive Psychology, written by someone you met earlier in this chapter, Ulric Neisser. Neisser’s (1967) book provided a foundation for the development of cognitive psychology, which grew and thrived in the years that followed.


The Brain Meets the Mind: The Rise of Cognitive Neuroscience

If cognitive psychologists studied the software of the mind, they had little to say about the hardware of the brain. And yet, as any computer scientist knows, the relationship between software and hardware is crucial: Each element needs the other to get the job done. Our mental activities often seem so natural and effortless—

Karl Lashley (1890–1958), a psychologist who studied with John B. Watson, conducted a famous series of studies in which he trained rats to run mazes, surgically removed parts of their brains, and then measured how well they could run the maze again. Lashley hoped to find the precise spot in the brain where learning occurred. Alas, no one spot seemed to uniquely and reliably eliminate learning (Lashley, 1960). Rather, Lashley simply found that the more of the rat’s brain he removed, the more poorly the rat ran the maze. Lashley was frustrated by his inability to identify a specific site of learning, but his efforts inspired other scientists to take up the challenge. They developed a research area called physiological psychology. Today, this area has grown into behavioral neuroscience, an approach to psychology that links psychological processes to activities in the nervous system and other bodily processes. To learn about the relationship between brain and behavior, behavioral neuroscientists observe animals’ responses as the animals perform specially constructed tasks, such as running through a maze to obtain food rewards. The neuroscientists can record electrical or chemical responses in the brain as the task is being performed, or later remove specific parts of the brain to see how performance is affected.

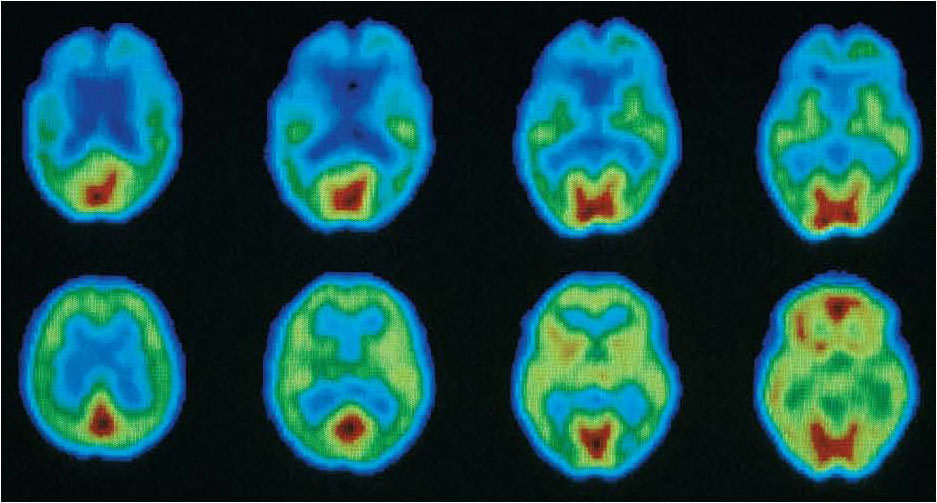

Of course, experimental brain surgery cannot ethically be performed on human beings; thus, psychologists who want to study the human brain often have to rely on nature’s cruel and inexact experiments. Birth defects, accidents, and illnesses often cause damage to particular brain regions, and if that damage disrupts a particular ability, then psychologists infer that the region is involved in producing the ability. For example, in the Memory chapter you’ll learn about a patient whose memory was virtually eliminated by damage to a specific part of the brain, and you’ll see how this tragedy provided scientists with remarkable clues about how memories are stored (Scoville & Milner, 1957). But in the late 1980s, technological breakthroughs led to the development of noninvasive brain scanning techniques that made it possible for psychologists to watch what happens inside a human brain as a person performs a task such as reading, imagining, listening, or remembering. Brain scanning is an invaluable tool because it allows us to observe the brain in action and to see which parts are involved in which operations (see the Neuroscience and Behavior chapter).

What have we learned by watching the brain at work?

For example, researchers used scanning technology to identify the parts of the brain in the left hemisphere that are involved in specific aspects of language, such as understanding or producing words (Peterson et al., 1989). Later scanning studies showed that people who are deaf from birth, but who learn to communicate using American Sign Language (ASL), rely on regions in the right hemisphere (as well as the left) when using ASL. In contrast, people with normal hearing who learned ASL after puberty seemed to rely only on the left hemisphere when using ASL (Newman et al., 2002). These findings suggest that although both spoken and signed language usually rely on the left hemisphere, the right hemisphere also can become involved—

Figure 1.2: PET Scans of Healthy and Alzheimer’s Brains. PET scans are one of a variety of brain imaging technologies that psychologists use to observe the living brain. The four brain images on the top each come from a person suffering from Alzheimer’s disease; the four on the bottom each come from a healthy person of similar age. The red and green areas reflect higher levels of brain activity compared to the blue areas, which reflect lower levels of activity. In each image, the front of the brain is on the top and the back of the brain is on the bottom. You can see that the person with Alzheimer’s disease, compared with the healthy person, shows more extensive areas of lowered activity toward the front of the brain.



The Adaptive Mind: The Emergence of Evolutionary Psychology

Psychology’s renewed interest in mental processes and its growing interest in the brain were two developments that led psychologists away from behaviorism. A third development also pointed them in a different direction. Recall that one of behaviorism’s key claims was that organisms are blank slates on which experience writes its lessons, and hence any one lesson should be as easily written as another. But in experiments conducted during the 1960s and 1970s, psychologist John Garcia and his colleagues showed that rats can learn to associate nausea with the smell of food much more quickly than they can learn to associate nausea with a flashing light (Garcia, 1981). Why should this be? In the real world of forests, sewers, and garbage cans, nausea is usually caused by spoiled food and not by lightning, and although these particular rats had been born in a laboratory and had never left their cages, millions of years of evolution had prepared their brains to learn the natural association more quickly than the artificial one. In other words, it was not only the rat’s learning history but the rat’s ancestors’ learning histories that determined the rat’s ability to learn.

Although that fact was at odds with the behaviorist doctrine, it was the credo for a new kind of psychology. Evolutionary psychology explains mind and behavior in terms of the adaptive value of abilities that are preserved over time by natural selection. Evolutionary psychology has its roots in Charles Darwin’s theory of natural selection, which, as we saw earlier, holds that the features of an organism that help it survive and reproduce are more likely than other features to be passed on to subsequent generations.


This theory inspired the functionalist approaches of William James and G. Stanley Hall because it led them to focus on how mental abilities help people to solve problems and therefore increase their chances of survival. But it is only since the publication in 1975 of Sociobiology, by the biologist E. O. Wilson, that evolutionary thinking has had an identifiable presence in psychology. That presence is steadily increasing (Buss, 1999; Pinker, 1997a, 1997b; Tooby & Cosmides, 2000). Evolutionary psychologists think of the mind as a collection of specialized “modules” that are designed to solve the human problems our ancestors faced as they attempted to eat, mate, and reproduce over millions of years. According to evolutionary psychology, the brain is not an all-

Consider, for example, how evolutionary psychology treats the emotion of jealousy. All of us who have been in romantic relationships have experienced jealousy, if only because we noticed our partner noticing someone else. Jealousy can be a powerful, overwhelming emotion that we might wish to avoid, but according to evolutionary psychology, it exists today because it once served an adaptive function. If some of our hominid ancestors experienced jealousy and others did not, then the ones who experienced it might have been more likely to guard their mates and aggress against their rivals and thus may have been more likely to reproduce their “jealous genes” (Buss, 2000, 2007; Buss & Haselton, 2005).

Critics of the evolutionary approach point out that many current traits of people and other animals probably evolved to serve different functions than those they currently serve. For example, biologists believe that the feathers of birds probably evolved initially to perform such functions as regulating body temperature or capturing prey and only later served the entirely different function of flight. Likewise, people are reasonably adept at learning to drive a car, but nobody would argue that such an ability is the result of natural selection; the learning abilities that allow us to become skilled car drivers must have evolved for purposes other than driving cars.

Complications such as these have led the critics to wonder how evolutionary hypotheses can ever be tested (Coyne, 2000; Sterelny & Griffiths, 1999). We don’t have a record of our ancestors’ thoughts, feelings, and actions, and fossils won’t provide much information about the evolution of mind and behavior. Testing ideas about the evolutionary origins of psychological phenomena is indeed a challenging task, but not an impossible one (Buss et al., 1998; Pinker, 1997a, 1997b).

What evidence suggests that some traits can be inherited?

Start with the assumption that evolutionary adaptations should also increase reproductive success. So, if a specific trait or feature has been favored by natural selection, it should be possible to find some evidence of this in the numbers of offspring that are produced by the trait’s bearers. Consider, for instance, the hypothesis that men tend to have deep voices because women prefer to mate with baritones rather than sopranos. To investigate this hypothesis, researchers studied a group of modern hunter-


Psychologists such as Max Wertheimer, Frederic Bartlett, Jean Piaget, and Kurt Lewin defied the behaviorist doctrine and studied the inner workings of the mind. Their efforts, as well as those of later pioneers such as Donald Broadbent, paved the way for cognitive psychology to focus on inner mental processes such as perception, attention, memory, and reasoning.

Cognitive psychology developed as a field due to the invention of the computer, psychologists’ efforts to improve the performance of the military, and Noam Chomsky’s theories about language.

Cognitive neuroscience attempts to link the brain with the mind by studying individuals with brain damage (connecting the area damaged with the loss of specific abilities) and individuals without brain damage, using brain scanning techniques.

Evolutionary psychology focuses on the adaptive function that minds and brains serve and seeks to understand the nature and origin of psychological processes in terms of natural selection.