4.4 Audition: More Than Meets the Ear

Vision is based on the spatial pattern of light waves on the retina. The sense of hearing, by contrast, is all about sound waves: changes in air pressure unfolding over time. Plenty of things produce sound waves: the collision of a tree hitting the forest floor, the impact of two hands clapping, the vibration of vocal cords during a stirring speech, the resonance of a bass guitar string during a thrash metal concert. Understanding auditory experience requires understanding how we transform changes in air pressure into perceived sounds.

Sensing Sound

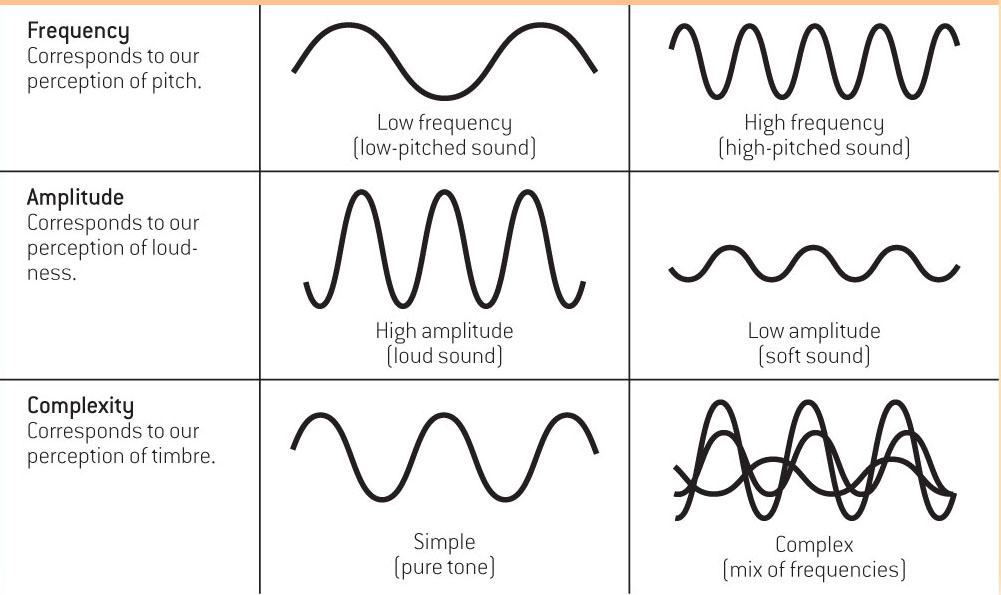

Striking a tuning fork produces a pure tone, a simple sound wave that first increases air pressure and then creates a relative vacuum. This cycle repeats hundreds or thousands of times per second as sound waves travel outward in all directions from the source. Just as there are three dimensions of light waves corresponding to three dimensions of visual perception, so, too, there are three physical dimensions of a sound wave. Frequency, amplitude, and complexity determine what we hear as the pitch, loudness, and quality of a sound (see TABLE 4.4).

The frequency of the sound wave (which is inversely related to its wavelength) depends on how often the peak in air pressure passes the ear or a microphone, measured in cycles per second, or hertz (Hz). Changes in the physical frequency of a sound wave are perceived by humans as changes in pitch, how high or low a sound is.

The amplitude of a sound wave refers to its height, relative to the threshold for human hearing (which is set at zero decibels, or dB). Amplitude corresponds to loudness, or a sound’s intensity. To give you an idea of amplitude and intensity, the rustling of leaves in a soft breeze is about 20 dB, normal conversation is measured at about 40 dB, shouting produces 70 dB, a Slayer concert is about 130 dB, and the sound of the space shuttle taking off 1 mile away registers at 160 dB or more. That’s loud enough to cause permanent damage to the auditory system and is well above the pain threshold; in fact, any sounds above 85 dB can be enough to cause hearing damage, depending on the length and type of exposure.
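To get a feel for how steeply the decibel scale climbs, the following minimal sketch converts between sound pressure and decibels. It assumes the conventional sound-pressure-level formula (20 times the base-10 logarithm of a pressure ratio) and a reference pressure of 20 micropascals for the hearing threshold; neither value is given in the text, and the function names are illustrative.

```python
import math

# Assumption: conventional reference pressure (in pascals) taken to
# approximate the threshold of human hearing, i.e. 0 dB.
P_REF = 20e-6

def pressure_to_db(pressure_pa):
    """Sound pressure level in decibels relative to P_REF."""
    return 20 * math.log10(pressure_pa / P_REF)

def db_to_pressure_ratio(db):
    """How many times larger than the threshold pressure a sound of `db` decibels is."""
    return 10 ** (db / 20)

# Decibel values are the approximate figures quoted in the paragraph above.
for label, db in [("rustling leaves", 20), ("conversation", 40),
                  ("shouting", 70), ("concert", 130)]:
    ratio = db_to_pressure_ratio(db)
    print(f"{label}: {db} dB is about {ratio:,.0f} times the threshold pressure")
```

Because the scale is logarithmic, every additional 20 dB corresponds to a tenfold increase in sound pressure, which is why a concert is vastly more intense than a conversation even though the decibel numbers differ by less than a factor of four.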

Differences in the complexity of sound waves, or their mix of frequencies, correspond to timbre, a listener’s experience of sound quality or resonance. Timbre (pronounced “TAM-ber”) offers us information about the nature of sound. The same note played at the same loudness produces a perceptually different experience depending on whether it was played on a flute versus a trumpet, a phenomenon due entirely to timbre. Many natural sounds also illustrate the complexity of wavelengths, such as the sound of bees buzzing, the tonalities of speech, or the babbling of a brook. Unlike the purity of a tuning fork’s hum, the drone of cicadas is a clamor of many different sound frequencies.
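The idea that timbre reflects a sound's mix of frequencies can be sketched numerically. The example below builds two tones that share the same fundamental frequency (the same "note") but weight their overtones differently, then measures how much energy sits at each component. The harmonic weights are hypothetical values chosen for illustration, not measurements of a real flute or trumpet, and the sample rate and function names are likewise assumptions.

```python
import cmath
import math

SAMPLE_RATE = 8000   # samples per second (illustrative choice)
FUNDAMENTAL = 200    # Hz; the note shared by both tones
N = 400              # 0.05 s of signal, an exact number of cycles

def complex_tone(harmonic_weights):
    """Sum of sine waves at integer multiples of FUNDAMENTAL."""
    return [
        sum(w * math.sin(2 * math.pi * FUNDAMENTAL * (k + 1) * n / SAMPLE_RATE)
            for k, w in enumerate(harmonic_weights))
        for n in range(N)
    ]

def magnitude_at(signal, freq_hz):
    """Amplitude of one frequency component (a single-bin discrete Fourier transform)."""
    total = sum(x * cmath.exp(-2j * math.pi * freq_hz * n / SAMPLE_RATE)
                for n, x in enumerate(signal))
    return 2 * abs(total) / len(signal)

# Hypothetical overtone weights: weak overtones versus strong overtones.
flute_like = complex_tone([1.0, 0.2, 0.05])
trumpet_like = complex_tone([1.0, 0.8, 0.6])

for name, tone in [("flute-like", flute_like), ("trumpet-like", trumpet_like)]:
    spectrum = [round(magnitude_at(tone, FUNDAMENTAL * h), 3) for h in (1, 2, 3)]
    print(name, "amplitudes at 200, 400, 600 Hz:", spectrum)
```

Both tones have the same fundamental, so they would be heard as the same pitch; only the relative strength of the higher components differs, which is the physical difference that timbre tracks.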

Why does one note sound so different on a flute and a trumpet?

Most sounds—

The Human Ear

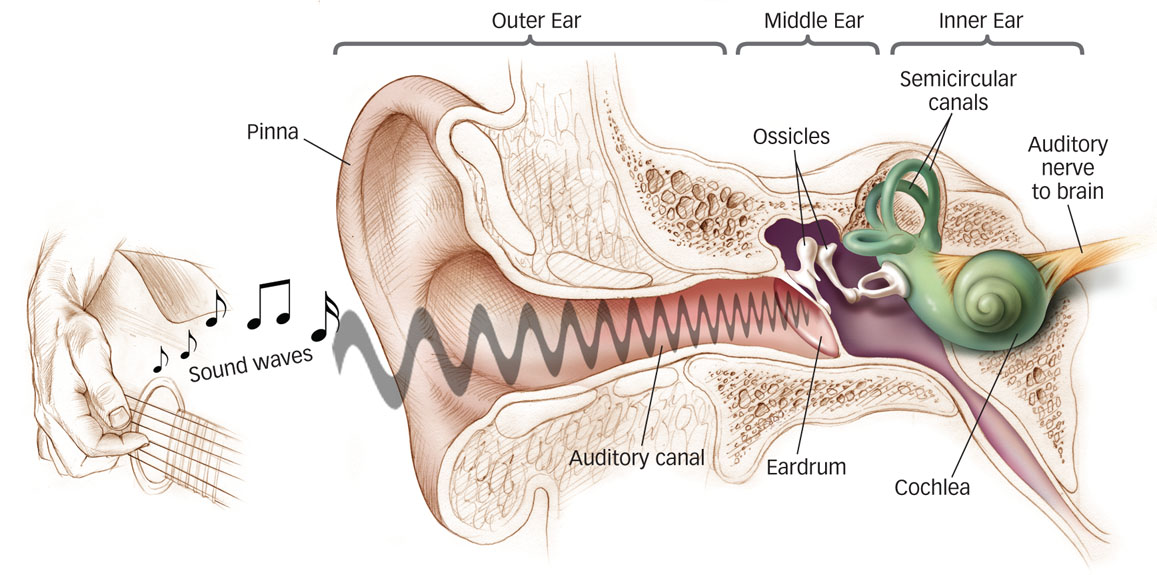

How does the auditory system convert sound waves into neural signals? The process is very different from the visual system, which is not surprising, given that light is a form of electromagnetic radiation, whereas sound is a physical change in air pressure over time: Different forms of energy require different processes of transduction. The human ear is divided into three distinct parts, as shown in FIGURE 4.23. The outer ear collects sound waves and funnels them toward the middle ear, which transmits the vibrations to the inner ear, embedded in the skull, where they are transduced into neural impulses.

Figure 4.23: Anatomy of the Human Ear The pinna funnels sound waves into the auditory canal to vibrate the eardrum at a rate that corresponds to the sound’s constituent frequencies. In the middle ear, the ossicles pick up the eardrum vibrations, amplify them, and pass them along by vibrating a membrane at the surface of the fluid-

How do hair cells in the ear enable us to hear?

The outer ear consists of the visible part on the outside of the head (called the pinna); the auditory canal; and the eardrum, an airtight flap of skin that vibrates in response to sound waves gathered by the pinna and channeled into the canal. The middle ear, a tiny, air-

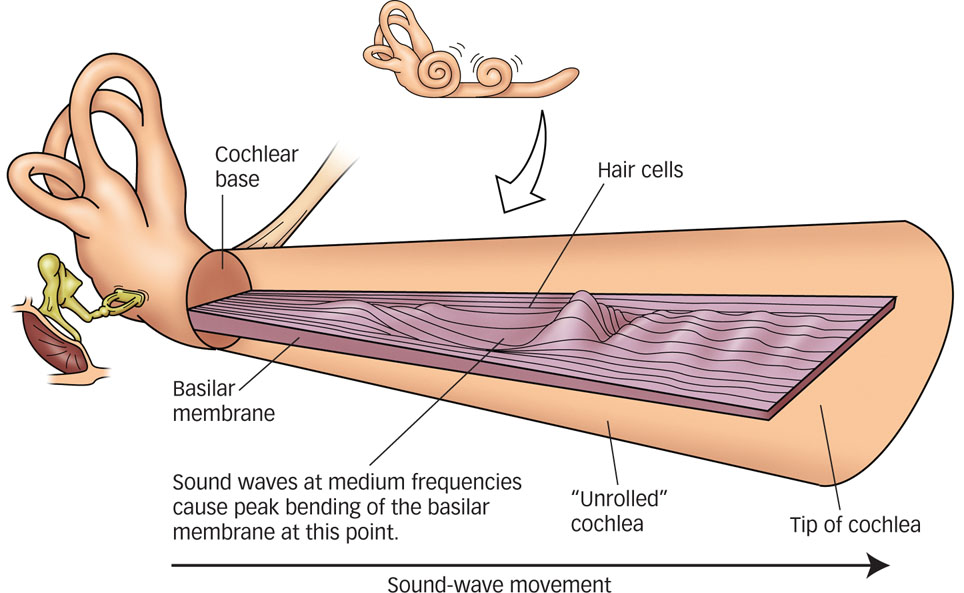

The inner ear contains the spiral-

Figure 4.24: Auditory Transduction Inside the cochlea (shown here as though it were uncoiling) the basilar membrane undulates in response to wave energy in the cochlear fluid. Waves of differing frequencies ripple varying locations along the membrane, from low frequencies at its tip to high frequencies at the base, and bend the embedded hair cell receptors at those locations. The hair cell motion generates impulses in the auditory neurons, whose axons form the auditory nerve that emerges from the cochlea.

Perceiving Pitch

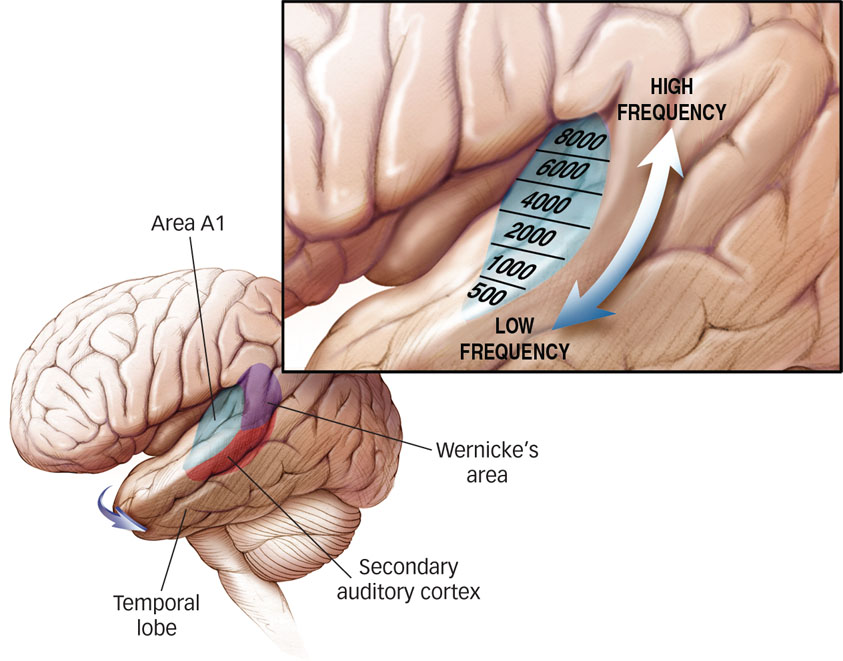

Figure 4.25: Primary Auditory Cortex Area A1 is folded into the temporal lobe beneath the lateral fissure in each hemisphere. The left hemisphere auditory areas govern speech in most people. A1 cortex has a topographic organization (inset), with lower frequencies mapping toward the front of the brain and higher frequencies toward the back, mirroring the organization of the basilar membrane along the cochlea (see FIGURE 4.24).

From the inner ear, action potentials in the auditory nerve travel to the thalamus and ultimately to an area of the cerebral cortex called area A1, a portion of the temporal lobe that contains the primary auditory cortex (see FIGURE 4.25). For most of us, the auditory areas in the left hemisphere analyze sounds related to language and those in the right hemisphere specialize in rhythmic sounds and music. There is also evidence that the auditory cortex is composed of two distinct streams, roughly analogous to the dorsal and ventral streams of the visual system. Spatial (“where”) auditory features, which allow you to locate the source of a sound in space, are handled by areas in the back (caudal) part of the auditory cortex, whereas nonspatial (“what”) features, which allow you to identify the sound, are handled by areas in the lower (ventral) part of the auditory cortex (Recanzone & Sutter, 2008).

Neurons in area A1 respond well to simple tones, and successive auditory areas in the brain process sounds of increasing complexity (see FIGURE 4.25, inset; Rauschecker & Scott, 2009; Schreiner, Read, & Sutter, 2000; Schreiner & Winer, 2007). The human ear is most sensitive to frequencies around 1,000 to 3,500 Hz. But how is the frequency of a sound wave encoded in a neural signal? Our ears have evolved two mechanisms to encode sound-

How does the frequency of a sound wave relate to what we hear?

The place code, used mainly for high frequencies, refers to the process by which different frequencies stimulate neural signals at specific places along the basilar membrane. In a series of experiments carried out from the 1930s to the 1950s, Nobel laureate Georg von Békésy (1899–1972) used a microscope to observe the basilar membrane in the inner ear of cadavers that had been donated for medical research (Békésy, 1960). Békésy found that the movement of the basilar membrane resembles a traveling wave (see FIGURE 4.24). The frequency of the stimulating sound determines the wave’s shape. When the frequency is low, the wide, floppy tip (apex) of the basilar membrane moves the most; when the frequency is high, the narrow, stiff end (base) of the membrane moves the most. The movement of the basilar membrane causes hair cells to bend, initiating a neural signal in the auditory nerve. Auditory nerve axons connected to the hair cells along the area of the basilar membrane that moves the most fire the strongest, and the brain uses information about which axons are the most active to help determine the pitch you “hear.”
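The place code can be pictured as a map from frequency to position along the basilar membrane. The sketch below uses one commonly quoted approximation, Greenwood's frequency-position function, with parameter values often cited for the human cochlea. The function and its constants are not part of this chapter and should be treated as assumptions brought in for illustration.

```python
import math

# Assumed human parameter values for Greenwood's frequency-position
# function; they do not come from the text.
A, ALPHA, K = 165.4, 2.1, 0.88

def best_frequency(position):
    """Frequency (Hz) that moves the membrane most at a relative position,
    where 0.0 is the wide, floppy apex and 1.0 is the narrow, stiff base."""
    return A * (10 ** (ALPHA * position) - K)

def place_of_peak(freq_hz):
    """Inverse map: relative position (0 = apex, 1 = base) of peak movement."""
    return math.log10(freq_hz / A + K) / ALPHA

for freq in (100, 1000, 10000):
    print(f"{freq} Hz peaks at about {place_of_peak(freq):.2f} of the way from apex to base")
```

Under these assumptions, low frequencies land near the apex and high frequencies near the base, matching the pattern Békésy observed.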

A complementary process handles lower frequencies. A temporal code registers relatively low frequencies (up to about 5000 Hz) via the firing rate of action potentials entering the auditory nerve. Action potentials from the hair cells are synchronized in time with the peaks of the incoming sound waves (Johnson, 1980). If you imagine the rhythmic boom-boom-boom of a bass drum, you can probably also imagine the fire-fire-fire of action potentials corresponding to the beats. This process provides the brain with very precise information about pitch that supplements the information provided by the place code.
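The logic of the temporal code can be sketched with an idealized neuron that fires exactly once at every peak of a pure tone. This is a simplification for illustration only (real neurons are noisier and cannot keep up with every peak at higher frequencies), and the function names are hypothetical.

```python
def spike_times(freq_hz, duration_s):
    """Idealized neuron: one action potential at every pressure peak of a sine wave."""
    period = 1.0 / freq_hz
    first_peak = period / 4  # a sine wave that starts at zero peaks a quarter cycle in
    n_peaks = int((duration_s - first_peak) / period) + 1
    return [first_peak + i * period for i in range(n_peaks)]

def frequency_from_spikes(times):
    """Recover the tone's frequency from the average interval between spikes."""
    intervals = [later - earlier for earlier, later in zip(times, times[1:])]
    return 1.0 / (sum(intervals) / len(intervals))

spikes = spike_times(freq_hz=250, duration_s=0.1)
print(f"{len(spikes)} spikes, estimated frequency {frequency_from_spikes(spikes):.1f} Hz")
```

Because the spikes are locked to the peaks of the wave, the time between spikes carries the frequency directly, which is the sense in which timing supplements the place code.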

Localizing Sound Sources

Just as the differing positions of our eyes give us stereoscopic vision, the placement of our ears on opposite sides of the head gives us stereophonic hearing. The sound arriving at the ear closer to the sound source is louder than the sound in the farther ear, mainly because the listener’s head partially blocks sound energy. This loudness difference decreases as the sound source moves from a position directly to one side (maximal difference) to straight ahead (no difference).

Another cue to a sound’s location arises from timing: Sound waves arrive a little sooner at the near ear than at the far ear. The timing difference can be as brief as a few microseconds, but together with the intensity difference, it is sufficient to allow us to perceive the location of a sound. When the sound source is ambiguous, you may find yourself turning your head from side to side to localize it. By doing this, you are changing the relative intensity and timing of sound waves arriving in your ears and collecting better information about the likely source of the sound. Turning your head also allows you to use your eyes to locate the source of the sound—
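The timing cue described above can be estimated with a simple path-difference model: the extra distance to the far ear divided by the speed of sound. The ear separation below is an assumed round figure, and the model ignores the way sound bends around the head, so treat the numbers as rough illustrations rather than measurements.

```python
import math

SPEED_OF_SOUND = 343.0   # meters per second in air at roughly room temperature
EAR_SEPARATION = 0.18    # meters; an assumed, approximate adult value

def interaural_time_difference(azimuth_deg):
    """Seconds by which sound reaches the near ear first.
    0 degrees is straight ahead; 90 degrees is directly to one side."""
    extra_path = EAR_SEPARATION * math.sin(math.radians(azimuth_deg))
    return extra_path / SPEED_OF_SOUND

for angle in (0, 1, 45, 90):
    itd_us = interaural_time_difference(angle) * 1e6
    print(f"source at {angle:>2} degrees: about {itd_us:.0f} microseconds")
```

In this sketch the difference is zero for a source straight ahead and largest for a source directly to one side, paralleling the loudness cue; a source only slightly off center produces a difference of just a few microseconds.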

Hearing Loss

Sound amplification helps in the case of which type of hearing loss?

Broadly speaking, hearing loss has two main causes. Conductive hearing loss arises because the eardrum or ossicles are damaged to the point that they cannot conduct sound waves effectively to the cochlea. The cochlea itself, however, is normal, making this a kind of “mechanical problem” with the moving parts of the ear: the hammer, anvil, stirrup, or eardrum. In many cases, medication or surgery can correct the problem. Sound amplification from a hearing aid also can improve hearing through conduction via the bones around the ear directly to the cochlea.

Sensorineural hearing loss is caused by damage to the cochlea, the hair cells, or the auditory nerve, and it happens to almost all of us as we age. Sensorineural hearing loss can be heightened in people regularly exposed to high noise levels (such as rock musicians or jet mechanics). Simply amplifying the sound does not help because the hair cells can no longer transduce sound waves. In these cases a cochlear implant may offer some relief.

A cochlear implant is an electronic device that replaces the function of the hair cells (Waltzman, 2006). The external parts of the device include a microphone and speech processor, about the size of a USB key, worn behind the ear, and a small, flat, external transmitter that sits on the scalp behind the ear. The implanted parts include a receiver just inside the skull and a thin wire containing electrodes inserted into the cochlea to stimulate the auditory nerve. Sound picked up by the microphone is transformed into electric signals by the speech processor, which is essentially a small computer. The signal is transmitted to the implanted receiver, which activates the electrodes in the cochlea. Cochlear implants are now in routine use and can improve hearing to the point where speech can be understood.

Marked hearing loss is commonly experienced by people as they grow older, but is rare in an infant. However, infants who have not yet learned to speak are especially vulnerable because they may miss the critical period for language learning (see the Learning chapter). Without auditory feedback during this time, normal speech is nearly impossible to achieve, but early use of cochlear implants has been associated with improved speech and language skills for deaf children (Hay-

THE REAL WORLD: Music Training: Worth the Time

Did you learn to play an instrument when you were younger? Maybe for you, music was its own reward (or maybe not). For anyone who practiced mostly to keep Mom and Dad happy, there’s good news. Musical training has a range of benefits. Let’s start with the brain. Musicians have greater plasticity in the motor cortex compared with nonmusicians (Rosenkranz et al., 2007); they have increased grey matter in motor and auditory brain regions compared with nonmusicians (Gaser & Schlaug, 2003; Hannon & Trainor, 2007); and they show differences in brain responses to musical stimuli compared with nonmusicians (Pantev et al., 1998). But musical training also extends to auditory processing in nonmusical domains (Kraus & Chandrasekaran, 2010). Musicians, for example, show enhanced brain responses when listening to speech compared with nonmusicians (Parbery-

Remembering to be careful not to confuse correlation with causation, you may ask: Do differences between musicians and nonmusicians reflect the effects of musical training, or do they reflect individual differences, perhaps genetic ones, that lead some people to become musicians in the first place? Maybe people blessed with enhanced brain responses to musical or other auditory stimuli decide to become musicians because of their natural abilities. Recent experiments support a causal role for musical training. One study demonstrated structural brain differences in auditory and motor areas of elementary schoolchildren after 15 months of musical training (learning to play piano), compared with children who did not receive musical training (Hyde et al., 2009). Furthermore, brain changes in the trained group were associated with improvements in motor and auditory skills. Another study compared two groups of 8-

We don’t yet know all the reasons why musical training has such broad effects on auditory processing, but one likely contributor is that learning to play an instrument demands attention to precise details of sounds (Kraus & Chandrasekaran, 2010). Future studies will no doubt pinpoint additional factors, but the research to date leaves little room for doubt that your hours of practice were indeed worth the time.

Perceiving sound depends on three physical dimensions of a sound wave: The frequency of the sound wave determines the pitch; the amplitude determines the loudness; and differences in the complexity, or mix, of frequencies determine the sound quality or timbre.

Auditory pitch perception begins in the ear, which consists of an outer ear that funnels sound waves toward the middle ear, which in turn sends the vibrations to the inner ear, which contains the cochlea. Action potentials from the inner ear travel along an auditory pathway through the thalamus to the primary auditory cortex (area A1) in the temporal lobe.

Auditory perception depends on both a place code and a temporal code. Our ability to localize sound sources depends critically on the placement of our ears on opposite sides of the head.

Some hearing loss can be overcome with hearing aids that amplify sound. When hair cells are damaged, a cochlear implant is a possible solution.
