4.2 Vision I: How the Eyes and the Brain Convert Light Waves to Neural Signals

You might be proud of your 20/20 vision, even if it is corrected by glasses or contact lenses. 20/20 refers to a measurement associated with a Snellen chart, named after Hermann Snellen (1834–

4.2.1 Sensing Light

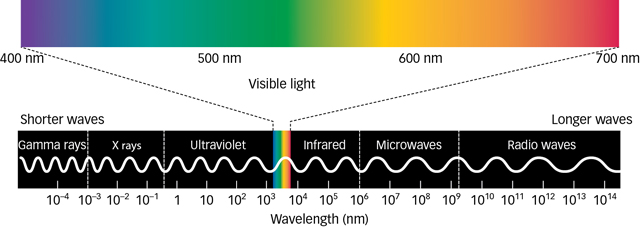

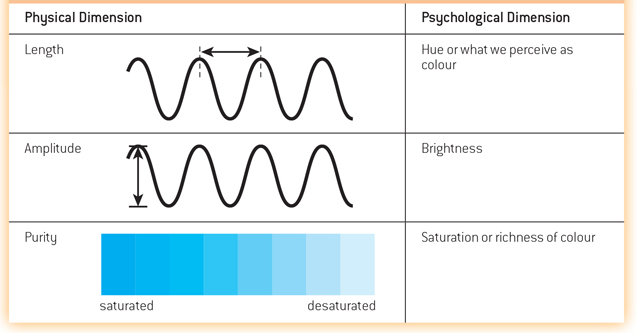

Visible light is simply the portion of the electromagnetic spectrum that we can see, and it is an extremely small slice (see FIGURE 4.3). You can think about light as waves of energy. Like ocean waves, light waves vary in height and in the distance between their peaks, or wavelengths. There are three properties of light waves, each of which has a physical dimension that produces a corresponding psychological dimension (see TABLE 4.3). In other words, light does not need a human to have the properties it does: Length, amplitude, and purity are properties of the light waves themselves. What humans perceive from those properties are colour, brightness, and saturation.

Figure 4.3: Electromagnetic Spectrum The sliver of light waves visible to humans as a rainbow of colours from violet-

The length of a light wave determines its hue, or what humans perceive as colour.

The intensity or amplitude of a light wave (how high the peaks are) determines what we perceive as the brightness of light. Purity is the number of distinct wavelengths that make up the light. Purity corresponds to what humans perceive as saturation, or the richness of colours.

4.2.1.1 The Human Eye

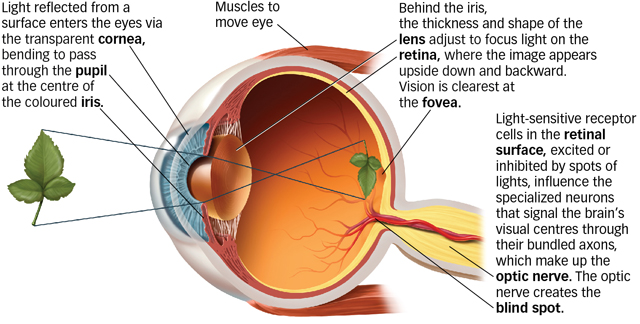

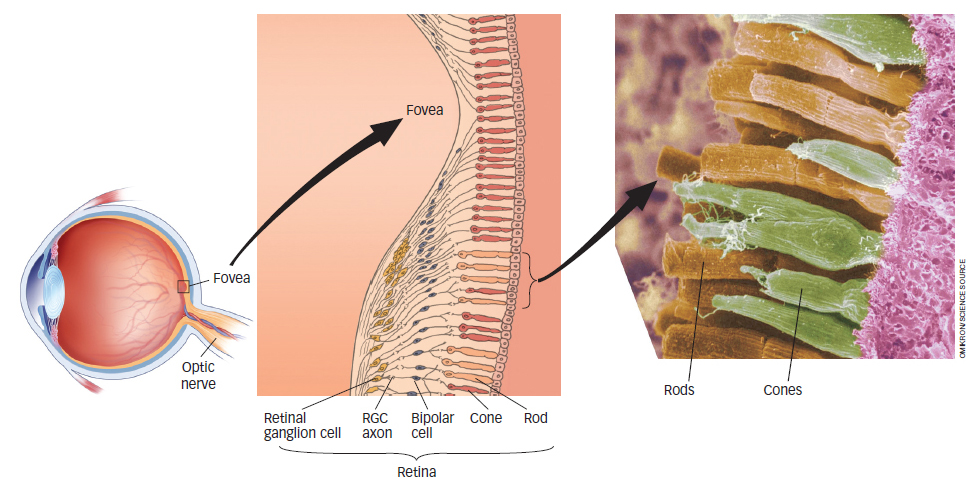

Eyes have evolved as specialized organs to detect light. FIGURE 4.4 shows the human eye in cross-

Immediately behind the iris, muscles inside the eye control the shape of the lens to bend the light again and focus it onto the retina, light-

How do eyeglasses actually correct vision?

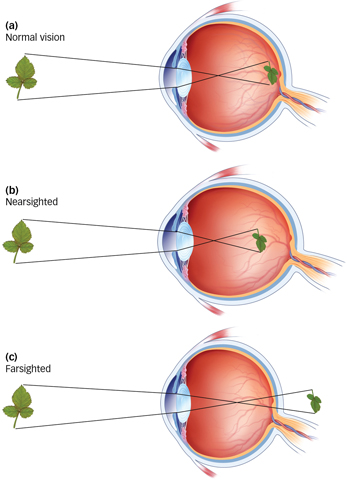

If your eyeballs are a little too long or a little too short, the lens will not focus images properly on the retina. If the eyeball is too long, images are focused in front of the retina, leading to nearsightedness (myopia), which is shown in FIGURE 4.5b. If the eyeball is too short, images are focused behind the retina, and the result is farsightedness (hyperopia), as shown in FIGURE 4.5c. Eyeglasses, contact lenses, and surgical procedures can correct either condition. For example, eyeglasses and contacts both provide an additional lens to help focus light more appropriately, and procedures such as LASIK physically reshape the cornea, the transparent front surface of the eye that bends incoming light.
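The focusing problem described above can be sketched with the thin-lens equation from introductory optics, 1/f = 1/d_o + 1/d_i. This is a rough illustration rather than a model of real eye optics: it treats the eye as a single fixed lens, and the 17 mm focal length, the eyeball lengths, the 6 m viewing distance, and the function names are all assumed values chosen for the example.

```python
def image_distance_mm(focal_length_mm, object_distance_mm):
    """Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for the image distance d_i."""
    return 1.0 / (1.0 / focal_length_mm - 1.0 / object_distance_mm)


def classify_focus(eyeball_length_mm, focal_length_mm=17.0,
                   object_distance_mm=6000.0, tolerance_mm=0.1):
    """Say where a distant object is focused relative to the retina.

    The retina is assumed to sit eyeball_length_mm behind the lens.
    """
    d_i = image_distance_mm(focal_length_mm, object_distance_mm)
    if d_i < eyeball_length_mm - tolerance_mm:
        return "in front of the retina (myopia)"
    if d_i > eyeball_length_mm + tolerance_mm:
        return "behind the retina (hyperopia)"
    return "on the retina"


def corrective_power_diopters(eyeball_length_mm, focal_length_mm=17.0,
                              object_distance_mm=6000.0):
    """Extra lens power needed to move the focus onto the retina.

    Assumes the corrective lens sits in contact with the eye's own lens,
    so the two powers simply add (power in dioptres = 1 / focal length in metres).
    """
    needed = 1000.0 / object_distance_mm + 1000.0 / eyeball_length_mm
    eye = 1000.0 / focal_length_mm
    return needed - eye


if __name__ == "__main__":
    for length in (16.0, 17.05, 18.0):
        print(length, classify_focus(length),
              round(corrective_power_diopters(length), 1))
```

Under these assumptions, the eyeball that is too long needs a negative (diverging) correction and the eyeball that is too short needs a positive (converging) one, which matches the way eyeglasses add a lens to move the point of focus back onto the retina.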

Figure 4.4: Anatomy of the Human Eye Specialized organs of the eye evolved to detect light.

Figure 4.5: Accommodation Inside the eye, the lens changes shape to focus nearby or faraway objects on the retina. People with normal vision focus the image on the retina at the back of the eye, both for near and far objects (a). Nearsighted people see clearly what’s nearby, but distant objects are blurry because light from them is focused in front of the retina, a condition called myopia (b). Farsighted people have the opposite problem: Distant objects are clear, but those nearby are blurry because their point of focus falls beyond the surface of the retina, a condition called hyperopia (c).

Figure 4.6: Close-

4.2.1.2 From the Eye to the Brain

How does a wavelength of light become a meaningful image? The retina is the interface between the world of light outside the body and the world of vision inside the central nervous system. Two types of photoreceptor cells in the retina contain light-

What are the major differences between rods and cones?

Rods are much more sensitive photoreceptors than cones, but this sensitivity comes at a cost. Because all rods contain the same photopigment, they provide no information about colour and sense only shades of grey. Think about this the next time you wake up in the middle of the night and make your way to the bathroom for a drink of water. Using only the moonlight from the window to light your way, do you see the room in colour or in shades of grey? Rods and cones differ in several other ways as well, most notably in their numbers. About 120 million rods are distributed more or less evenly around each retina except in the very centre, the fovea, an area of the retina where vision is the clearest and there are no rods at all. The absence of rods in the fovea decreases the sharpness of vision in reduced light, but it can be overcome. For example, when amateur astronomers view dim stars through their telescopes at night, they know to look a little off to the side of the target so that the image will not fall on the rod-

In contrast to rods, each retina contains only about 6 million cones, which are densely packed in the fovea and much more sparsely distributed over the rest of the retina, as you can see in Figure 4.6. This distribution of cones directly affects visual acuity and explains why objects off to the side, in your peripheral vision, are not so clear. The light reflecting from those peripheral objects is less likely to land in the fovea, making the resulting image less clear. The more fine detail encoded and represented in the visual system, the clearer the perceived image. The process is analogous to the quality of photographs taken with a 6-

The retina is thick with cells. As seen in Figure 4.6, the photoreceptor cells (rods and cones) form the innermost layer. They are beneath a layer of transparent neurons, the bipolar and retinal ganglion cells. The bipolar cells collect neural signals from the rods and cones and transmit them to the outermost layer of the retina, where neurons called retinal ganglion cells (RGCs) organize the signals and send them to the brain.

The bundled RGC axons—

4.2.2 Perceiving Colour

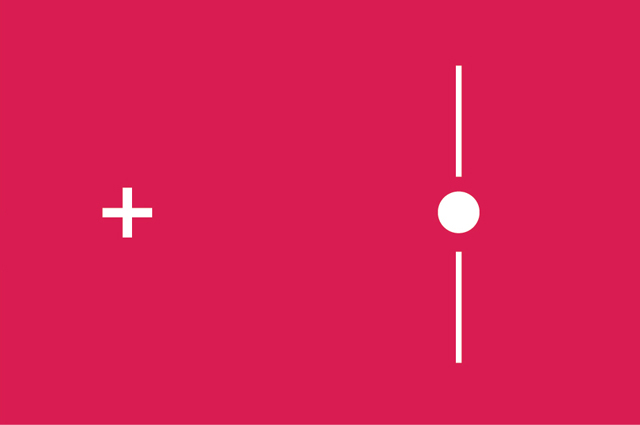

Figure 4.7: Blind Spot Demonstration To find your blind spot, close your left eye and stare at the cross with your right eye. Hold the book 15 to 30 cm away from your eyes and move it slowly toward and away from you until the dot disappears. The dot is now in your blind spot and not visible. At this point the vertical lines may appear as one continuous line because the visual system fills in the area occupied by the missing dot. To test your left eye’s blind spot, turn the book upside down and repeat with your right eye closed.

Sir Isaac Newton pointed out around 1670 that colour is not something “in” light. In fact, colour is nothing but our perception of wavelengths from the spectrum of visible light (see Figure 4.3). We perceive the shortest visible wavelengths as deep purple. As wavelengths increase, the colour perceived changes gradually and continuously to blue, then green, yellow, orange, and, with the longest visible wavelengths, red. This rainbow of hues and accompanying wavelengths is called the visible spectrum, illustrated in FIGURE 4.8.
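The ordering of hues along the visible spectrum can be written down as a simple lookup. The sketch below is only illustrative: the band boundaries are commonly quoted approximations rather than values from this chapter, the name `perceived_hue` is made up for the example, and the sharp cut-offs are a simplification because perceived colour changes gradually and continuously with wavelength.

```python
# Approximate wavelength bands in nanometres (assumed, conventional values).
HUE_BANDS_NM = [
    (380, 450, "violet"),
    (450, 495, "blue"),
    (495, 570, "green"),
    (570, 590, "yellow"),
    (590, 620, "orange"),
    (620, 750, "red"),
]


def perceived_hue(wavelength_nm):
    """Return the rough hue name for a single wavelength, or None if not visible."""
    for lower, upper, hue in HUE_BANDS_NM:
        if lower <= wavelength_nm < upper:
            return hue
    return None  # outside the assumed visible range


if __name__ == "__main__":
    for wavelength in (400, 470, 530, 580, 600, 680, 900):
        print(wavelength, perceived_hue(wavelength))
```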

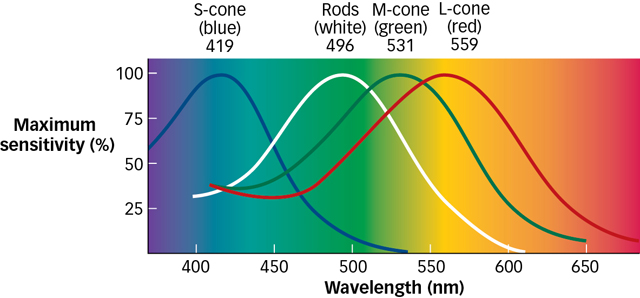

You will recall that all rods are ideal for low-

The fact that three types of cones in the retina respond preferentially to different wavelengths (corresponding to blue, green, or red light) means that the pattern of responding across the three types of cones provides a unique code for each colour. In fact, researchers can “read out” the wavelength of the light entering the eye by working backward from the relative firing rates of the three types of cones (Gegenfurtner & Kiper, 2003). A genetic disorder in which one of the cone types is missing—

Figure 4.8: Seeing in Colour We perceive a spectrum of colour because objects selectively absorb some wavelengths of light and reflect others. Colour perception corresponds to the relative activity of the three types of cones. Each type is most sensitive to a narrow range of wavelengths in the visible spectrum—

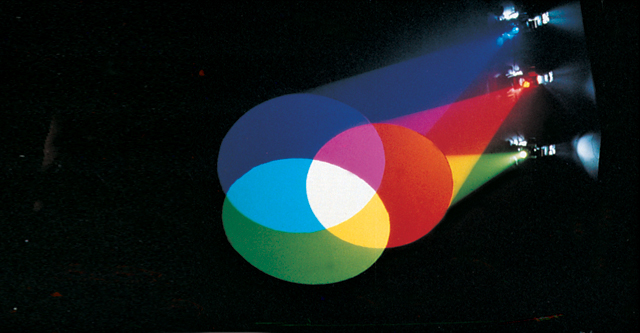

Figure 4.9: Colour Mixing The millions of shades of colour that humans can perceive are products not only of a light’s wavelength, but also of the mixture of wavelengths a stimulus absorbs or reflects. Coloured spotlights work by causing the surface to reflect light of a particular wavelength, which stimulates the red, blue, or green photopigments in the cones. When all visible wavelengths are present, we see white.

What happens when the cones in your eyes get fatigued?

Colour deficiency is often referred to as colour blindness, but in fact, people missing only one type of cone can still distinguish many colours, just not as many as someone who has the full complement of three cone types. You can create a kind of temporary colour deficiency by exploiting the idea of sensory adaptation. Just like the rest of your body, cones need an occasional break too. Staring too long at one colour fatigues the cones that respond to that colour, producing a form of sensory adaptation that results in a colour afterimage. To demonstrate this effect for yourself, follow these instructions for FIGURE 4.10:

Figure 4.10: Colour Afterimage Demonstration Follow the accompanying instructions in the text, and sensory adaptation will do the rest. When the afterimage fades, you can get back to reading the chapter.

Stare at the yellow patch for about 1 minute. Try to keep your eyes as still as possible.

After a minute, look to the right of the yellow patch. You should see a vivid colour aftereffect that lasts for a minute or more. Pay particular attention to the colour in the afterimage.

Were you puzzled that the yellowish patch produces a bluish afterimage? This result reveals something important about colour perception. The explanation stems from the colour-opponent system, where pairs of visual neurons work in opposition: blue-

4.2.3 The Visual Brain

What is the relationship between the right and left eyes, and the right and left visual fields?

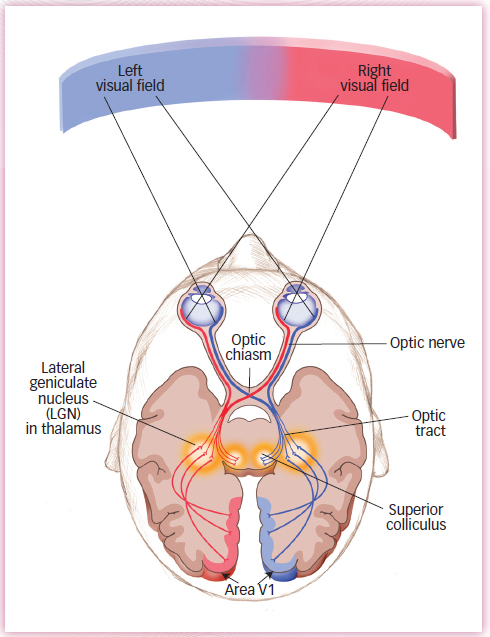

Streams of action potentials (neural impulses) containing information encoded by the retina travel to the brain along the optic nerve. Half of the axons in the optic nerve that leave each eye come from retinal ganglion cells (RGCs) that code information in the right visual field, whereas the other half code information in the left visual field. These two nerve bundles link to the left and right hemispheres of the brain, respectively (see FIGURE 4.11). The optic nerve travels from each eye to the lateral geniculate nucleus (LGN), located in the thalamus. As you will recall from the Neuroscience and Behaviour chapter, the thalamus receives inputs from all of the senses except smell. From there, the visual signal travels to the back of the brain, to a location called area V1, the part of the occipital lobe that contains the primary visual cortex. Here the information is systematically mapped into a representation of the visual scene.

Figure 4.11: Visual Pathway from Eye through Brain Objects in the right visual field stimulate the left half of each retina, and objects in the left visual field stimulate the right half of each retina. The optic nerves, one exiting each eye, are formed by the axons of retinal ganglion cells emerging from the retina. Just before they enter the brain at the optic chiasm, about half the nerve fibres from each eye cross. The left half of each optic nerve (representing the right visual field) runs through the brain’s left hemisphere via the thalamus, and the right half of each optic nerve (representing the left visual field) travels this route through the right hemisphere. So, information from the right visual field ends up in the left hemisphere and information from the left visual field ends up in the right hemisphere.
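The routing described for Figure 4.11 can be traced step by step in a few lines of code. This is a bookkeeping sketch of the wiring as stated in the text, not a model of neural processing; the function name `route` and the dictionary keys are invented for the example.

```python
OPPOSITE = {"left": "right", "right": "left"}


def route(eye, visual_field):
    """Trace a signal from one eye and one visual field to its hemisphere."""
    if eye not in OPPOSITE or visual_field not in OPPOSITE:
        raise ValueError("eye and visual_field must be 'left' or 'right'")
    # Objects in one visual field stimulate the opposite half of each retina.
    retina_half = OPPOSITE[visual_field]
    # The left half of each optic nerve runs to the left hemisphere, and the
    # right half runs to the right hemisphere.
    hemisphere = retina_half
    # A fibre has to cross at the optic chiasm when its eye and its
    # destination hemisphere are on different sides.
    crosses = eye != hemisphere
    return {
        "retina_half": retina_half,
        "crosses_at_chiasm": crosses,
        "relay": "LGN (thalamus)",
        "hemisphere": hemisphere,
        "cortex": "area V1 (occipital lobe)",
    }


if __name__ == "__main__":
    for eye in ("left", "right"):
        for field in ("left", "right"):
            print(eye, field, route(eye, field))
```

For each eye, one of the two visual fields yields a crossing fibre and the other does not, which is consistent with about half of the fibres from each eye crossing at the optic chiasm.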

4.2.3.1 Neural Systems for Perceiving Shape

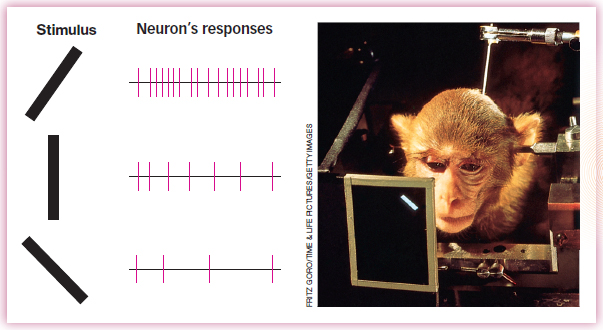

One of the most important functions of vision involves perceiving the shapes of objects; our day-

Figure 4.12: Single-

4.2.3.2 Pathways for What, Where, and How

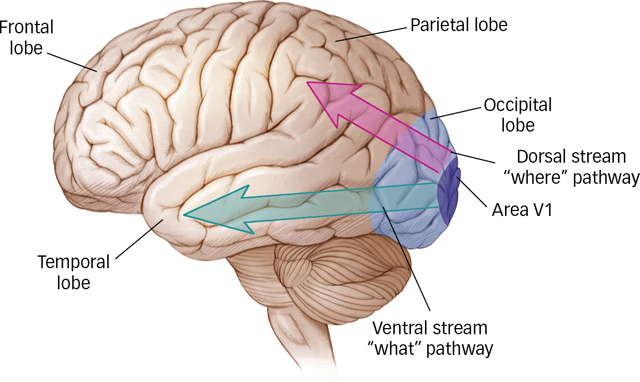

Two functionally distinct pathways, or visual streams, project from the occipital cortex to visual areas in other parts of the brain (see FIGURE 4.13):

Figure 4.13: Visual Streaming One interconnected visual system forms a pathway that courses from the occipital visual regions into the lower temporal lobe. This ventral pathway enables us to identify what we see. Another interconnected pathway travels from the occipital lobe through the upper regions of the temporal lobe into the parietal regions. This dorsal pathway allows us to locate objects, to track their movements, and to move in relation to them.

The ventral (below) stream travels across the occipital lobe into the lower levels of the temporal lobes and includes brain areas that represent an object’s shape and identity. In other words, it represents what the object is, making it essentially a “what” pathway (Kravitz et al., 2013; Ungerleider & Mishkin, 1982).

What are the main jobs of the ventral and dorsal streams?

The dorsal (above) stream travels up from the occipital lobe to the parietal lobes (including some of the middle and upper levels of the temporal lobes), connecting with brain areas that identify the location and motion of an object, in other words, where it is (Kravitz et al., 2011). Because the dorsal stream allows us to perceive spatial relations, researchers originally dubbed it the “where” pathway (Ungerleider & Mishkin, 1982). Neuroscientists from the University of Western Ontario later argued that because the dorsal stream is crucial for guiding movements, such as aiming, reaching, or tracking with the eyes, the “where” pathway should more appropriately be called the “how” pathway (Milner & Goodale, 1995).

How do we know there are two pathways? The most dramatic evidence comes from studying the impairments that result from brain injuries to each of the areas.

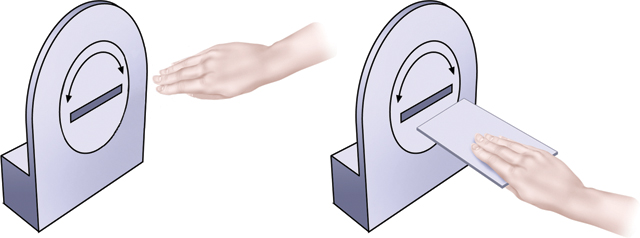

For example, a woman known as D.F. suffered permanent damage to a large region of the lateral occipital cortex, an area in the ventral stream (Goodale et al., 1991). Her ability to recognize objects by sight was greatly impaired, although her ability to recognize objects by touch was normal. This suggests that her visual representation of objects, and not her memory for objects, was damaged. D.F.’s brain damage belongs to a category called visual form agnosia, the inability to recognize objects by sight (Goodale & Milner, 1992, 2004). Oddly, although D.F. could not recognize objects visually, she could accurately guide her actions by sight, as demonstrated in FIGURE 4.14. When D.F. was scanned with fMRI, researchers found that she showed normal activation of regions within the dorsal stream during guided movement (James et al., 2003).

Figure 4.14: Testing Visual Form Agnosia When researchers asked D.F. to orient her hand to match the angle of the slot in the testing apparatus, she was unable to comply (left). However, when asked to insert a card into the slot at various angles (right), D.F. accomplished the task virtually to perfection.

Conversely, other people with brain damage to the parietal lobe, a section of the dorsal stream, have difficulty using vision to guide their reaching and grasping movements (Perenin & Vighetto, 1988). However, their ventral streams are intact, meaning they recognize what objects are.

We can conclude from these two patterns of impairment that the ventral and dorsal visual streams are functionally distinct; it is possible to damage one while leaving the other intact. Still, the two streams must work together during visual perception in order to integrate “what” and “where,” and researchers are starting to examine how they interact. One intriguing possibility is suggested by recent fMRI research indicating that some regions within the dorsal stream are sensitive to properties of an object’s identity, responding differently, for example, to line drawings of the same object in different sizes or viewed from different vantage points (Konen & Kastner, 2008; Sakuraba et al., 2012). This may be what allows the dorsal and ventral streams to exchange information and thus integrate the “what” and “where” (Farivar, 2009; Konen & Kastner, 2008).

Light passes through several layers in the eye to reach the retina. Two types of photoreceptor cells in the retina transduce light into neural impulses: cones, which operate under normal daylight conditions and sense colour; and rods, which are active under low-light conditions for night vision. The neural impulses are sent along the optic nerve to the brain. The retina contains several layers, and the outermost consists of retinal ganglion cells (RGCs) that collect and send signals to the brain. Bundles of RGC axons form the optic nerve.

Light striking the retina causes a specific pattern of response in each of three cone types that are critical to colour perception. These cone types respond preferentially to short-wavelength (bluish) light, medium-wavelength (greenish) light, and long-wavelength (reddish) light. The overall pattern of response across the three cone types results in a unique code for each colour. Information encoded by the retina travels to the brain along the optic nerve, which connects to the lateral geniculate nucleus (LGN) in the thalamus and then to the primary visual cortex, area V1, in the occipital lobe.
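The idea that the pattern of response across three cone types forms a unique code, and that the wavelength can be recovered by working backward from relative responses, can be illustrated with a toy model. Everything numerical here is an assumption made for the sketch: each cone type is given a bell-shaped sensitivity curve with an assumed peak (440, 535, and 565 nm) and an assumed common width, and the function names are hypothetical. Real cone sensitivity curves have different shapes.

```python
import math

# Assumed peak sensitivities (nm) for short-, medium-, and long-wavelength cones.
CONE_PEAKS_NM = {"S": 440.0, "M": 535.0, "L": 565.0}
BANDWIDTH_NM = 40.0  # assumed width of each bell-shaped sensitivity curve


def cone_responses(wavelength_nm):
    """Relative response of each cone type to light of a single wavelength."""
    return {
        name: math.exp(-((wavelength_nm - peak) ** 2) / (2 * BANDWIDTH_NM ** 2))
        for name, peak in CONE_PEAKS_NM.items()
    }


def normalise(responses):
    """Keep only the relative pattern by dividing out the overall response level."""
    total = sum(responses.values())
    return {name: value / total for name, value in responses.items()}


def read_out_wavelength(observed, candidates=range(400, 701)):
    """Work backward: find the wavelength whose pattern best matches the observed one."""
    target = normalise(observed)

    def mismatch(wavelength_nm):
        pattern = normalise(cone_responses(wavelength_nm))
        return sum((pattern[name] - target[name]) ** 2 for name in target)

    return min(candidates, key=mismatch)


if __name__ == "__main__":
    for wavelength in (450, 520, 600):
        pattern = cone_responses(wavelength)
        print(wavelength, normalise(pattern), read_out_wavelength(pattern))
```

Because the readout compares relative rather than absolute responses, doubling every cone's response in this toy model leaves the recovered wavelength unchanged.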

Two functionally distinct pathways project from the occipital lobe to visual areas in other parts of the brain. The ventral stream travels into the lower levels of the temporal lobes and includes brain areas that represent an object’s shape and identity. The dorsal stream goes from the occipital lobes to the parietal lobes, connecting with brain areas that identify the location and motion of an object.