2.3 Explanation: Discovering Why People Do What They Do

It would be interesting to know whether happy people are healthier than unhappy people, but it would be even more interesting to know why. Does happiness make people healthier? Does being healthy make people happier? Does being rich make people healthy and happy? Scientists have developed some clever ways of using their measurements to answer questions like these. In the first section (Correlation), we’ll examine techniques that can tell us whether two variables, such as health and happiness, are related; in the second section (Causation), we’ll examine techniques that can tell us whether this relationship is one of cause and effect; in the third section (Drawing Conclusions), we’ll see what kinds of conclusions these techniques allow us to draw; and in the fourth section (Thinking Critically about Evidence), we’ll discuss the difficulty that most of us have drawing such conclusions.

Correlation

| Participant | Hours of Sleep | No. of Presidents Named |
|---|---|---|
| A | 0 | 11 |
| B | 0 | 17 |
| C | 2.7 | 16 |
| D | 3.1 | 21 |
| E | 4.4 | 17 |
| F | 5.5 | 16 |
| G | 7.6 | 31 |
| H | 7.9 | 41 |
| I | 9 | 40 |
| J | 9.1 | 35 |
| K | 9.6 | 38 |
| L | 9 | 43 |

How much sleep did you get last night? Okay, now, how many U.S. presidents can you name? If you asked a dozen college students those two questions, you’d probably get a pattern of responses like the one shown in TABLE 2.1. Looking at these results, you’d probably conclude that students who got a good night’s sleep tend to be better president-

When you asked college students questions about sleep and presidents, you actually did three things:

- First, you measured a pair of variables, which are properties whose values can vary across individuals or over time. You measured one variable (number of hours slept) whose value could vary from 0 to 24, and you measured a second variable (number of presidents named) whose value could vary from 0 to 43.

variable

A property whose value can vary across individuals or over time.

- Second, you did this again and again. That is, you made a series of measurements, not just one.

- Third, you looked for a pattern in your series of measurements. If you looked at the second and third columns, you would notice that the values generally increase as you move from top to bottom. In other words, the two variables show a correlation (as in “co-relation”), meaning that variations in the value of one variable are synchronized with variations in the value of the other.

correlation

Two variables are said to “be correlated” when variations in the value of one variable are synchronized with variations in the value of the other.


What’s so cool about this is that simply by looking for synchronized patterns of variation, we can use measurement to discover the relationships between variables. For example, you know that people who smoke generally die younger than people who don’t, but this is just a shorthand way of saying that as the value of cigarette consumption increases, the value of longevity decreases. Correlations not only describe the world as it is, they also allow us to predict the world as it will be. For example, given the correlation between smoking and longevity, you can predict with some confidence that a young person who starts smoking today will probably not live as long as a young person who doesn’t. In short, when two variables are correlated, knowledge of the value of one variable allows us to make predictions about the value of the other variable.
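To make the idea of synchronized variation concrete, the sketch below puts a number on the pattern in TABLE 2.1. The text does not name a particular statistic at this point, so the use of Pearson's correlation coefficient and the function name `pearson_r` are assumptions made for illustration only.

```python
from math import sqrt

# Values copied from TABLE 2.1 (participants A through L).
hours_of_sleep = [0, 0, 2.7, 3.1, 4.4, 5.5, 7.6, 7.9, 9, 9.1, 9.6, 9]
presidents_named = [11, 17, 16, 21, 17, 16, 31, 41, 40, 35, 38, 43]


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient for two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations: positive when the variables move together.
    co_variation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return co_variation / sqrt(spread_x * spread_y)


r = pearson_r(hours_of_sleep, presidents_named)
print(f"r = {r:.2f}")
```

A coefficient near +1 means the two variables rise together, a coefficient near -1 means one falls as the other rises, and a coefficient near 0 means there is little linear relationship between them.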

A correlation can be positive or negative. When two variables have a “more-

Causation

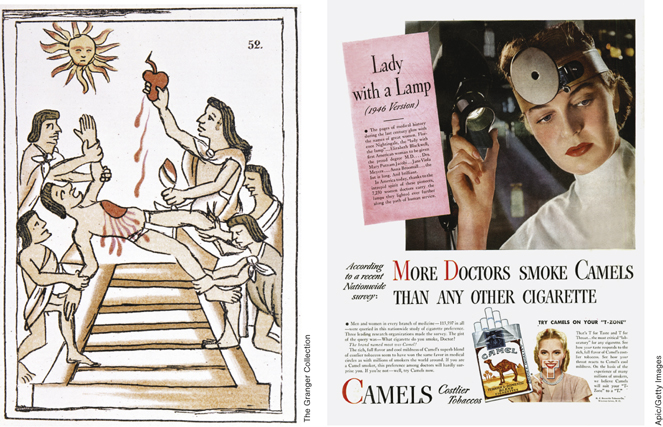

We observe correlations all the time: between automobiles and pollution, between bacon and heart attacks, between sex and pregnancy. Natural correlations are the correlations observed in the world around us, and although such observations can tell us whether two variables have a relationship, they cannot tell us what kind of relationship these variables have. For example, many studies (Anderson & Bushman, 2001; Anderson et al., 2003; Huesmann et al., 2003) have found a positive correlation between the amount of violence to which a child is exposed through media such as television, movies, and video games (variable X) and the aggressiveness of the child’s behavior (variable Y). The more media violence a child is exposed to, the more aggressive that child is likely to be. These variables clearly have a relationship—

natural correlations

The correlations observed in the world around us.


The Third-Variable Problem

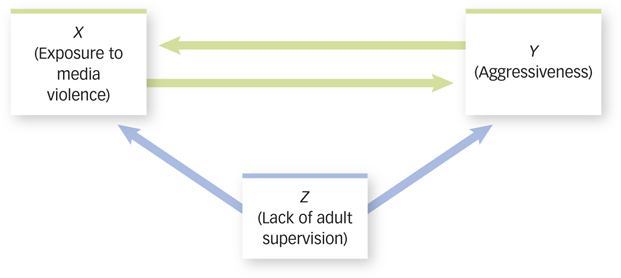

One possibility is that exposure to media violence (X) causes aggressiveness (Y). For example, media violence may teach children that aggression is a reasonable way to vent anger and solve problems. A second possibility is that aggressiveness (Y) causes children to be exposed to media violence (X). For example, children who are naturally aggressive may be especially likely to seek opportunities to play violent video games or watch violent movies. A third possibility is that a third variable (Z) causes children to be aggressive (Y) and to be exposed to media violence (X), neither of which is causally related to the other. For example, lack of adult supervision (Z) may allow children to get away with bullying others and to get away with watching television shows that adults would normally not allow. In other words, the relation between aggressiveness and exposure to media violence may be a case of third-variable correlation, which means that two variables are correlated only because each is causally related to a third variable. FIGURE 2.2 shows three possible causes of any correlation.

third-variable correlation

Two variables are correlated only because each is causally related to a third variable.
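A small simulation can show how a third-variable correlation arises. In the sketch below, Z influences both X and Y, but X and Y never influence each other. Every number in it (the sample size, the noise levels, the seed) is an arbitrary assumption chosen for illustration, and the function names are hypothetical.

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient for two equal-length lists."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    co_variation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return co_variation / sqrt(spread_x * spread_y)


def simulate_third_variable(n=5000, seed=0):
    """Simulate X and Y that are both caused by Z but never by each other."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        z = rng.gauss(0, 1)        # Z: for example, lack of adult supervision
        x = z + rng.gauss(0, 1)    # X: exposure, driven by Z plus unrelated noise
        y = z + rng.gauss(0, 1)    # Y: aggressiveness, driven by Z plus unrelated noise
        xs.append(x)
        ys.append(y)
    return xs, ys


xs, ys = simulate_third_variable()
print(f"correlation between X and Y: {pearson_r(xs, ys):.2f}")
```

Even though no line of the simulation lets X affect Y or Y affect X, the two come out clearly correlated, because each inherits part of its variation from Z.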


What is third-

How can we determine by simple observation which of these three possibilities best explains the relationship between exposure to media violence and aggressiveness? Take a deep breath. The answer is: We can’t. When we observe a natural correlation, the possibility of third-

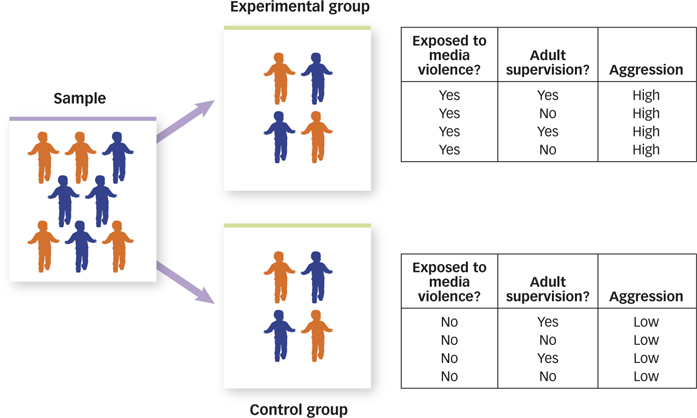

The most straightforward way to determine whether a third variable, such as lack of adult supervision (Z), causes both exposure to media violence (X) and aggressive behavior (Y) is to eliminate differences in adult supervision (Z) among a group of children and see if the correlation between exposure (X) and aggressiveness (Y) is eliminated too. For instance, we could measure children who have different amounts of adult supervision, but we could make sure that for every child we measure who is exposed to media violence and is supervised Q% of the time, we also observe a child who is not exposed to media violence and is supervised Q% of the time, thus ensuring that children who are and are not exposed to media violence have the same amount of adult supervision on average. So if those who were exposed are on average more aggressive than those who were not exposed, we can be sure that lack of adult supervision was not the cause of this difference.
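The matching procedure just described can be sketched with simulated data. In this hypothetical world, supervision (Z) affects both exposure (X) and aggression (Y), and exposure has no effect on aggression at all. The supervision levels, probabilities, and aggression scores are invented for illustration, and the function names are assumptions.

```python
import random
from collections import defaultdict


def simulate_children(n=20000, seed=1):
    """Return (supervised_pct, exposed, aggression) for n hypothetical children."""
    rng = random.Random(seed)
    children = []
    for _ in range(n):
        supervised_pct = rng.choice([0, 25, 50, 75, 100])          # Z
        # Less supervision makes exposure more likely (Z -> X).
        exposed = rng.random() < 0.8 - 0.6 * supervised_pct / 100
        # Less supervision raises aggression (Z -> Y); exposure plays no role.
        aggression = 10 - 0.05 * supervised_pct + rng.gauss(0, 1)
        children.append((supervised_pct, exposed, aggression))
    return children


def mean(values):
    return sum(values) / len(values)


def raw_difference(children):
    """Mean aggression of exposed minus unexposed children, ignoring supervision."""
    exposed = [a for _, e, a in children if e]
    unexposed = [a for _, e, a in children if not e]
    return mean(exposed) - mean(unexposed)


def matched_difference(children):
    """Compare exposed and unexposed children in equal numbers at each supervision level."""
    by_level = defaultdict(lambda: {True: [], False: []})
    for supervised_pct, exposed, aggression in children:
        by_level[supervised_pct][exposed].append(aggression)
    exposed_scores, unexposed_scores = [], []
    for groups in by_level.values():
        k = min(len(groups[True]), len(groups[False]))  # same count from each side
        exposed_scores.extend(groups[True][:k])
        unexposed_scores.extend(groups[False][:k])
    return mean(exposed_scores) - mean(unexposed_scores)


children = simulate_children()
print(f"unmatched difference: {raw_difference(children):.2f}")
print(f"matched difference:   {matched_difference(children):.2f}")
```

In this simulation the unmatched comparison shows a sizable gap in aggression, while the matched comparison shrinks it to nearly zero, which is what we would expect if supervision alone were responsible for the correlation.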


But don’t applaud just yet. Because even if we used this technique to eliminate a particular third variable (such as lack of adult supervision), we would not be able to dismiss all third variables. For example, as soon as we finished making these observations, it might suddenly occur to us that emotional instability could cause children to gravitate toward violent television or video games and to behave aggressively. Emotional instability would be a new third variable (Z), and we would have to design a new test to investigate whether this variable explains the correlation between exposure (X) and aggression (Y). Unfortunately, we could keep dreaming up new third variables all day long without ever breaking a sweat, and every time we dreamed one up, we would have to rush out and do a new test to determine whether this third variable was the cause of the correlation between exposure and aggressiveness. Because we can’t rule out every possible third variable, we can never be absolutely sure that the correlation we observe between X and Y is evidence of a causal relationship between them. The third-variable problem refers to the fact that a causal relationship between two variables cannot be inferred from the naturally occurring correlation between them because of the ever-

third-variable problem

The fact that a causal relationship between two variables cannot be inferred from the naturally occurring correlation between them because of the ever-

experiment

A technique for establishing the causal relationship between variables.


Manipulation

The most important thing to know about experiments is that you’ve been doing them all your life. Imagine that you are scanning Facebook on a laptop when all of a sudden you lose your wireless connection. You suspect that another device—

So how could you test your suspicion? Well, rather than observing the correlation between phone usage and connectivity, you could try to create a correlation by intentionally making calls on your roommate’s phone and observing changes in your laptop’s connectivity as you did so. If you observed that “wireless connection off” only occurred in conjunction with “phone on,” then you could conclude that your roommate’s phone was the cause of your failed connection, and you could sell the phone on eBay and then lie about it when asked. The technique you used to solve the third-

manipulation

Changing a variable in order to determine its causal power.

How does manipulation solve the third-

Manipulation can solve scientific problems too. For example, imagine that our passive observation of children revealed a positive correlation between exposure to violence and aggressive behaviors, and that now we want to design an experiment to determine whether the exposure is the cause of the aggression. We could ask some children to participate in an experiment, have half of them play violent video games for an hour while the other half does not, and then, at the end of the hour, we could measure their aggression and compare the measurements across the two groups. When we compared these measurements, we would essentially be computing the correlation between a variable that we manipulated (exposure) and a variable that we measured (aggression). But because we manipulated rather than measured exposure, we would never have to ask whether a third variable (such as lack of adult supervision) caused children to experience different levels of exposure. After all, we already know what caused that to happen. We did!


Experimentation involves three critical steps (and several ridiculously confusing terms):

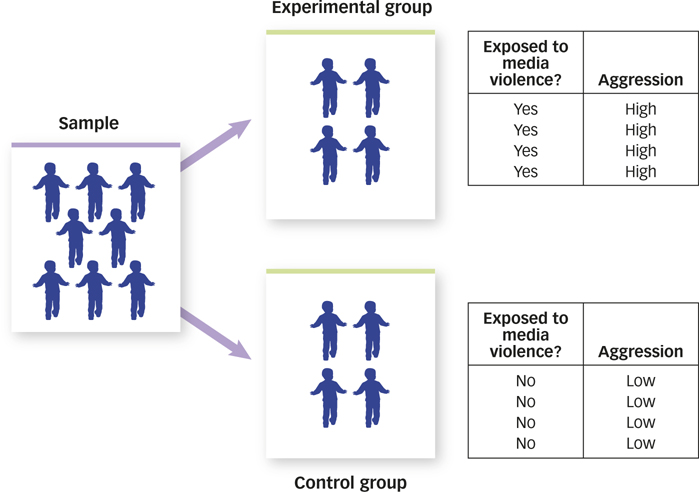

- First, we manipulate. We call the variable that is manipulated the independent variable because it is under our control, and thus it is “independent” of what a participant says or does. When we manipulate an independent variable (such as exposure to media violence), we end up with two groups of participants: an experimental group, which is the group of people who experience a stimulus, and a control group, which is the group of people who do not experience that stimulus.

independent variable

The variable that is manipulated in an experiment.

experimental group

The group of people who are exposed to a particular manipulation, as compared to the control group, in an experiment.

control group

The group of people who are not exposed to the particular manipulation, as compared to the experimental group, in an experiment.

- Second, having manipulated one variable (exposure), we now measure another variable (aggression). We call the variable that is measured the dependent variable because its value “depends” on what the participant says or does.

dependent variable

The variable that is measured in a study.

- Third and finally, we look to see whether our manipulation of the independent variable produced changes in the dependent variable. FIGURE 2.3 shows exactly how manipulation works.

Random Assignment

Why can’t we allow people to select the condition of the experiment in which they will participate?

Once we have manipulated an independent variable and measured a dependent variable, we’ve done one of the two things that experimentation requires. The second thing is a little less intuitive but equally important. Imagine that we began our exposure and aggression experiment by finding a group of children and asking each child whether he or she would like to be in the experimental group or the control group. Imagine that half the children said that they’d like to play violent video games and the other half said they would rather not. Imagine that we let the children do what they wanted to do, measured aggression some time later, and found that the children who had played the violent video games were more aggressive than those who had not. Would this experiment allow us to conclude that playing violent video games causes aggression? Definitely not—

What are the three main steps in doing an experiment?


Because we let the children decide for themselves whether or not they would play violent video games, and children who ask to play such games are probably different in many ways from those who ask not to. They may be older, or stronger, or smarter—

self-selection

A problem that occurs when anything about a person determines whether he or she will be included in the experimental or control group.

If we want to be sure that there is one and only one difference between the children in our study who are and are not exposed to media violence, then their inclusion in the experimental or control groups must be randomly determined. One way to do this is to flip a coin. For example, we could walk up to each child in our experiment, flip a coin, and assign the child to play violent video games if the coin lands heads up and not to play violent video games if the coin lands heads down. Random assignment is a procedure that lets chance assign people to the experimental or the control group.

random assignment

A procedure that lets chance assign people to the experimental or control group.

What would happen if we assigned children to groups with a coin flip? As FIGURE 2.4 shows, we could expect the experimental group and the control group to have roughly equal numbers of supervised kids and unsupervised kids, roughly equal numbers of emotionally stable and unstable kids, roughly equal numbers of big kids and small kids, of active kids, fat kids, tall kids, funny kids, and kids with blue hair named Harry McSweeny. Because the kids in the two groups would be the same on average in terms of height, weight, emotional stability, adult supervision, and every other variable in the known universe except the one we manipulated, we could be sure that the variable we manipulated (exposure) was the one and only cause of any changes in the variable we measured (aggression). After all, if exposure was the only difference between the two groups of children, then it must be the cause of any differences in aggression we observe.
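The balancing effect of a coin flip is easy to try out. The sketch below assigns a hypothetical pool of children to two groups at random and then checks how supervision is distributed. The pool size, the 40% supervision rate, and all names are assumptions made for illustration.

```python
import random


def coin_flip_assignment(children, seed=2):
    """Assign each child to the experimental or control group by a fair coin flip."""
    rng = random.Random(seed)
    experimental, control = [], []
    for child in children:
        if rng.random() < 0.5:       # one side of the coin: experimental group
            experimental.append(child)
        else:                        # the other side: control group
            control.append(child)
    return experimental, control


def share_supervised(group):
    """Fraction of a group whose members are supervised."""
    return sum(1 for child in group if child["supervised"]) / len(group)


# Hypothetical pool of 1,000 children, about 40% of whom are supervised.
pool_rng = random.Random(3)
children = [{"id": i, "supervised": pool_rng.random() < 0.4} for i in range(1000)]

experimental, control = coin_flip_assignment(children)
print(f"supervised in experimental group: {share_supervised(experimental):.0%}")
print(f"supervised in control group:      {share_supervised(control):.0%}")
```

The coin never looks at whether a child is supervised, yet the two groups typically end up with similar shares of supervised children. The same holds for any other characteristic we could have recorded.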


Why is random assignment so useful and important?

Significance

Random assignment is a powerful tool, but like a lot of tools, it doesn’t work every time we use it. If we randomly assigned children to play or not to play violent video games, we could expect the two groups to have roughly equal numbers of supervised and unsupervised kids, roughly equal numbers of emotionally stable and unstable kids, and so on. The key word in that sentence is roughly. Coin flips are inherently unpredictable, and every once in a long while by sheer chance alone, a coin will assign more unsupervised, emotionally unstable kids to play violent video games and more supervised, emotionally stable kids to play none. When this happens, random assignment has failed—

How can we tell when random assignment has failed? Unfortunately, we can’t for sure. But we can calculate the odds that random assignment has failed each time we use it. Psychologists perform a statistical calculation every time they do an experiment, and they do not accept the results of those experiments unless the calculation tells them that there is less than a 5% chance that they would have found differences between the experimental and control groups if random assignment had failed. Such differences are said to be statistically significant, which means they were unlikely to have been caused by a third variable.
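The text does not say which statistical calculation psychologists perform. One simple approach in the same spirit is a permutation test, sketched below: shuffle the group labels many times and count how often chance alone produces a difference at least as large as the one observed. The aggression scores are invented, and the function name and parameters are assumptions.

```python
import random


def permutation_p_value(group_a, group_b, n_shuffles=10000, seed=4):
    """Estimate how often random relabeling yields a mean difference at least as large as observed."""
    rng = random.Random(seed)
    observed = abs(sum(group_a) / len(group_a) - sum(group_b) / len(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    n_b = len(group_b)
    at_least_as_large = 0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)  # mimic a fresh random assignment of the same scores
        diff = abs(sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b)
        if diff >= observed:
            at_least_as_large += 1
    return at_least_as_large / n_shuffles


# Hypothetical aggression scores, invented purely for illustration.
experimental = [7, 9, 8, 6, 9, 8, 7, 9, 8, 7]
control = [5, 6, 4, 6, 5, 7, 5, 4, 6, 5]

p = permutation_p_value(experimental, control)
verdict = "significant at the 5% level" if p < 0.05 else "not significant at the 5% level"
print(f"p = {p:.4f} ({verdict})")
```

If the returned proportion is below 0.05, a difference this large would rarely arise from the luck of the assignment alone, which matches the 5% criterion described above.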

Drawing Conclusions

If we applied all the techniques discussed so far, we would have designed an experiment that had internal validity, which is an attribute of an experiment that allows it to establish causal relationships. When we say that an experiment is internally valid, we mean that everything inside the experiment is working exactly as it must in order for us to draw conclusions about causal relationships. But what exactly are those conclusions? If our imaginary experiment revealed a difference between the aggressiveness of children in the experimental and control groups, then we could conclude that media violence as we defined it caused aggression as we defined it in the people whom we studied. Notice those phrases in italics. Each corresponds to an important restriction on the kinds of conclusions we can draw from an experiment, so let’s consider each in turn.

internal validity

An attribute of an experiment that allows it to establish causal relationships.

Representative Variables

The results of any experiment depend, in part, on how the independent and dependent variables are defined. For instance, we are more likely to find that exposure to media violence causes aggression when we define exposure as “playing Grand Theft Auto for 10 hours” rather than “playing Pro Quarterback for 10 minutes,” or when we define aggression as “interrupting another person” rather than “smacking someone silly with a tire iron.” The way we define variables can have a profound influence on what we find, so which of these is the right way?


The Real World: Oddsly Enough


A recent Gallup survey found that 53% of college graduates believe in extra-

The Nobel laureate Luis Alvarez was reading the newspaper one day, and a particular story got him thinking about an old college friend whom he hadn’t seen in years. A few minutes later, he turned the page and was shocked to see the very same friend’s obituary. But before concluding that he had an acute case of ESP, Alvarez decided to use probability theory to determine just how amazing this coincidence really was.

First he estimated the number of friends an average person has, and then he estimated how often an average person thinks about each of those friends. With these estimates in hand, he did a few simple calculations and determined the likelihood that someone would think about a friend five minutes before learning about that friend’s death. The odds were astonishing. In a country the size of the United States, for example, Alvarez predicted that this amazing coincidence should happen to 10 people every day (Alvarez, 1965).

“In 10 years there are 5 million minutes,” says statistics professor Irving Jack. “That means each person has plenty of opportunity to have some remarkable coincidences in his life” (quoted in Neimark, 2004). For example, 250 million Americans dream for about two hours every night (that’s a half billion hours of dreaming!), so it isn’t surprising that two people sometimes have the same dream, or that we sometimes dream about something that actually happens the next day. As mathematics professor John Allen Paulos (quoted in Neimark, 2004) put it, “In reality, the most astonishingly incredible coincidence imaginable would be the complete absence of all coincidence.”

If all of this seems surprising to you, then you are not alone. Research shows that people routinely underestimate the likelihood of coincidences happening by chance (Diaconis & Mosteller, 1989; Falk & McGregor, 1983; Hintzman, Asher, & Stern, 1978). If you want to profit from this fact, assemble a group of 24 or more people, and bet anyone that at least two of the people share a birthday. The odds are in your favor, and the bigger the group, the better the odds. In fact, in a group of 35, the odds are about 81%. Happy fleecing!
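The birthday bet can be checked with a short calculation. The sketch below assumes 365 equally likely birthdays and ignores leap years and seasonal birth patterns, which is the usual simplification rather than anything stated in the text. The function name is hypothetical.

```python
def shared_birthday_probability(n, days=365):
    """Probability that at least two of n people share a birthday."""
    if n > days:
        return 1.0  # more people than days guarantees a match
    p_all_different = 1.0
    for k in range(n):
        # The (k+1)-th person must avoid the k birthdays already taken.
        p_all_different *= (days - k) / days
    return 1.0 - p_all_different


for group_size in (10, 23, 24, 35, 50):
    print(group_size, f"{shared_birthday_probability(group_size):.1%}")
```

Under these assumptions the probability of a shared birthday first passes one-half at 23 people and is a little over 80% for a group of 35, so the bet favors you well before the group feels large.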


One answer is that we should define variables in an experiment as they are defined in the real world. External validity is an attribute of an experiment in which variables have been defined in a normal, typical, or realistic way. It seems pretty clear that the kind of aggressive behavior that concerns teachers and parents lies somewhere between an interruption and an assault, and that the kind of media violence to which children are typically exposed lies somewhere between sports and felonies. If the goal of an experiment is to determine whether the kinds of media violence to which children are typically exposed cause the kinds of aggression with which societies are typically concerned, then external validity is essential. When variables are defined in an experiment as they typically are in the real world, we say that the variables are representative of the real world.

external validity

An attribute of an experiment in which variables have been defined in a normal, typical, or realistic way.


Hot Science: Do Violent Movies Make Peaceful Streets?


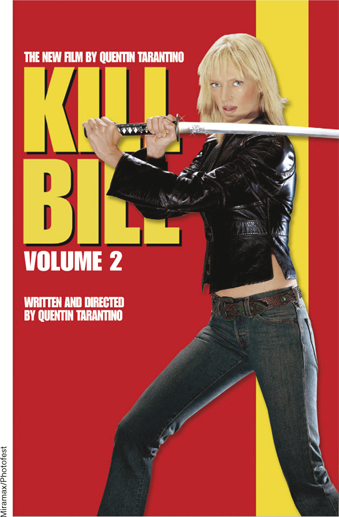

In 2000, the American Academy of Pediatrics and five other public health organizations issued a joint statement warning about the risks of exposure to media violence. They cited evidence from psychological experiments in which children and young adults who were exposed to violent movie clips showed a sharp increase in aggressive behavior immediately afterward. These health organizations noted that “well over 1,000 studies … point overwhelmingly to a causal connection between media violence and aggressive behavior” (American Academy of Pediatrics, 2000).

Given the laboratory results, we might expect to see a correlation in the real world between the number of people who see violent movies in theaters and the number of violent crimes. When economists Gordon Dahl and Stefano Della Vigna (2009) analyzed crime statistics and box office statistics, they found just such a correlation—

Laboratory experiments clearly show that exposure to media violence can cause aggression. But as the movie theater data remind us, experiments are a tool for establishing the causal relationships between variables and are not meant to be miniature versions of the real world, where things are ever so much more complex.

External validity sounds like such a good idea that you may be surprised to learn that most psychology experiments are externally invalid—

Why isn’t external validity always necessary?

Representative People

Our imaginary experiment on exposure to media violence and aggression would allow us to conclude that exposure as we defined it caused aggression as we defined it in the people whom we studied. That last phrase represents another important restriction on the kinds of conclusions we can draw from experiments.

Who are the people whom psychologists study? Psychologists rarely observe an entire population, which is a complete collection of people, such as the population of human beings (about 7 billion), the population of Californians (about 38 million), or the population of people with Down syndrome (about 1 million). Rather, psychologists observe a sample, which is a partial collection of people drawn from a population.

population

A complete collection of participants who might possibly be measured.

sample

A partial collection of people drawn from a population.


What is the difference between a population and a sample?

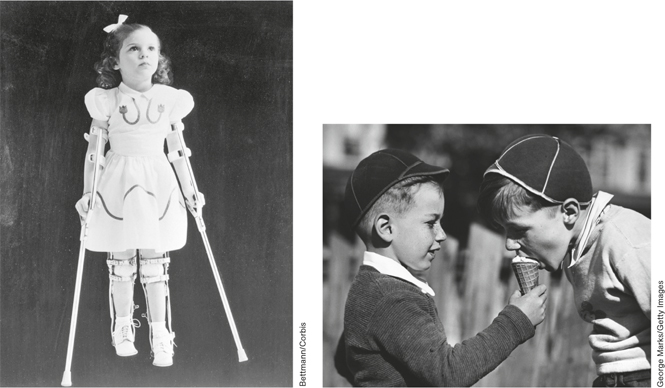

The size of a sample can be as small as 1. For example, some individuals are so remarkable that they deserve close study, and psychologists study them by using the case method, which is a procedure for gathering scientific information by studying a single individual. We can learn a lot about memory by studying Akira Haraguchi, who can recite the first 100,000 digits of pi, or about creativity by studying Jay Greenberg, whose 5th symphony was recorded by the London Symphony Orchestra when he was just 15 years old. Cases like these are not only interesting in their own right, but can also provide important insights into how the rest of us work.

case method

A procedure for gathering scientific information by studying a single individual.

Of course, most of the psychological studies you will read about in this book included samples of ten, a hundred, a thousand, or even several thousand people. So how do psychologists decide which people to include in a sample? One way to select a sample from a population is by random sampling, which is a technique for choosing participants that ensures that every member of a population has an equal chance of being included in the sample. When we randomly sample participants from a population, the sample is said to be representative of the population. Random sampling allows us to generalize from the sample to the population—

random sampling

A technique for choosing participants that ensures that every member of a population has an equal chance of being included in the sample.
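Random sampling can be sketched in a few lines. The population below is entirely made up (10,000 people with invented ages), and the function names are assumptions. The point is only that drawing people at random tends to produce a sample whose average resembles the population's.

```python
import random

# Hypothetical population of 10,000 people with made-up ages.
pop_rng = random.Random(5)
population = [{"id": i, "age": pop_rng.randint(18, 90)} for i in range(10_000)]


def draw_random_sample(people, size, seed=6):
    """Draw `size` people without replacement, each equally likely to be chosen."""
    rng = random.Random(seed)
    return rng.sample(people, size)


def mean_age(people):
    """Average age of a list of people."""
    return sum(person["age"] for person in people) / len(people)


sample = draw_random_sample(population, 500)
print(f"population mean age: {mean_age(population):.1f}")
print(f"sample mean age:     {mean_age(sample):.1f}")
```

Because chance decides who is included, nothing about a person (age, location, willingness to volunteer) can tilt the sample toward one kind of participant.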


Random sampling sounds like such a good idea that you might be surprised to learn that most psychological studies involve nonrandom samples—


So how can we learn anything from psychology experiments? Isn’t the failure to sample randomly a fatal flaw? No, it’s not, and there are three reasons why.

Why is nonrandom sampling not a fatal flaw?

- Sometimes the similarity of a sample and a population doesn’t matter. If one pig flew over the Statue of Liberty just one time, it would instantly disprove the traditional theory of porcine locomotion. It wouldn’t matter if all pigs flew or if any other pigs ever flew. One flying pig is enough. An experimental result can be illuminating even when the sample isn’t typical of the population.

- When the ability to generalize an experimental result is important, psychologists perform new experiments that use the same procedures with different samples. For example, after measuring how a nonrandomly selected group of American children behaved after playing violent video games, we might try to replicate our experiment with Japanese children, or with American teenagers, or with deaf adults. If the results of our study were replicated in these other samples, then we would be more confident (but never completely confident) that the results describe a basic human tendency.

- Sometimes the similarity of the sample and the population is a reasonable starting assumption. Few of us would be willing to take an experimental medicine if a nonrandom sample of seven participants took it and died, and that would be true even if the seven participants were mice. Although these nonrandomly sampled participants would be different from us in many ways (including tails and whiskers), most of us would be willing to generalize from their experience to ours because we know that even mice share enough of our basic biology to make it a good bet that what harms them can harm us too. By this same reasoning, if a psychology experiment demonstrated that some American children behaved violently after playing violent video games, we should ask whether there is any compelling reason to suspect that Ecuadorian college students or middle-aged Australians would behave any differently. If the answer is yes, then experiments provide a way for us to investigate that possibility.

Thinking Critically about Evidence

As you’ve seen in this chapter, the scientific method produces empirical evidence. But empirical evidence is only useful if we know how to think about it, and the fact is that most of us don’t. Using evidence requires critical thinking, which involves asking ourselves tough questions about whether we have interpreted the evidence in an unbiased way, and about whether the evidence tells not just the truth, but the whole truth. Research suggests that most people have trouble doing both of these things and that educational programs designed to teach or improve critical thinking skills are not particularly effective (Willingham, 2007). Why do we have so much trouble thinking critically? There are two reasons: First, we tend to see what we expect to see, and second, we tend to ignore what we can’t see. Let’s explore each of these tendencies in turn.


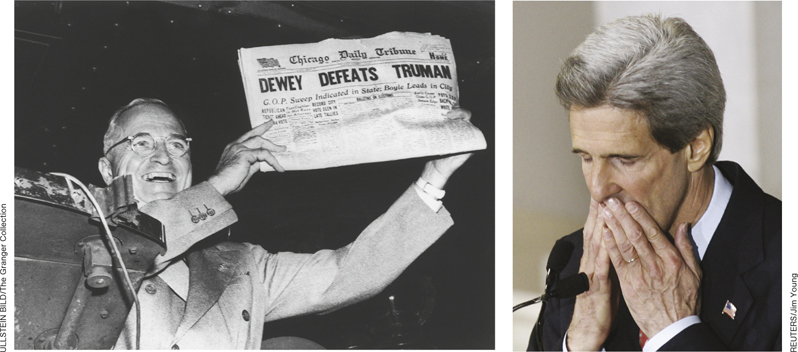

We See What We Expect to See

People’s beliefs can color their views of evidence and cause them to see what they expected to see. For instance, participants in one study (Darley & Gross, 1983) learned about a little girl named Hannah. One group of participants was told that Hannah came from an affluent family, which led the participants to expect her to be a good student. Another group of participants was told that Hannah came from a poor family, which led them to expect her to be a bad student. All participants were then shown a video of Hannah taking a reading test and were asked to rate Hannah’s performance. Although both groups saw exactly the same video, those participants who believed that Hannah was from an affluent family rated her performance more positively than did those who believed that Hannah was from a poor family. What’s more, both groups of participants defended their conclusions by citing evidence from the video. Numerous experiments show that people tend to look for evidence that confirms their beliefs (Hart et al., 2009; Snyder & Swann, 1978) and tend to stop looking when they find it (Kunda, 1990). What’s more, when people do encounter evidence that disconfirms their beliefs, they hold it to a very high standard (Gilovich, 1991; Ross, Lepper, & Hubbard, 1975).

How do our beliefs shape the way we think about evidence?

Because it is so easy to see what we expect to see, the first step in critical thinking is simply to doubt your own conclusions—

We Consider What We See and Ignore What We Don’t

Not only do people see what they expect to see, but they fail to consider what they can’t see. For example, participants in one study (Newman, Wolff, & Hearst, 1980) played a game in which they were shown a set of trigrams, which are three-

Why is it important to consider unseen evidence?

This tendency can cause us to draw all kinds of erroneous conclusions. Consider a study in which participants were randomly assigned to play one of two roles in a game (Ross, Amabile, & Steinmetz, 1977). The “quizmasters” were asked to make up a series of difficult questions, and the “contestants” were asked to answer them. If you give this a quick try, you will discover that it’s very easy to generate questions that you can answer but that most other people cannot. For example, think of the last city you visited. Now give someone the name of the hotel you stayed in and ask him or her what street it’s on. They’re not likely to know.


So participants who were cast in the role of quizmaster naturally asked lots of clever-


The Skeptical Stance

Winston Churchill once said that democracy is the worst form of government, except for all the others. Similarly, science is not an infallible method for learning about the world; it’s just a whole lot less fallible than the other methods. Science is a human enterprise, and humans make mistakes. They see what they expect to see, and they rarely consider what they can’t see at all.

What makes science different from most other human enterprises is that scientists actively seek to discover and remedy these biases. Scientists are constantly striving to make their observations more accurate and their reasoning more rigorous, and they invite anyone and everyone to examine their evidence and challenge their conclusions. As such, science is the ultimate democracy—

So think of the remaining chapters in this book as a report from the field—


SUMMARY QUIZ [2.3]

Question 2.7

1. When we observe a natural correlation, what keeps us from concluding that one variable is the cause and the other is the effect?

- the third-variable problem

- random assignment

- random sampling

- statistical significance

a.

Question 2.8

2. A researcher administers a questionnaire concerning attitudes toward global warming to people of both genders and of all ages who live all across the country. The dependent variable in the study is the participant’s _____________.

- age

- gender

- attitudes toward global warming

- geographic location

c.

Question 2.9

3. The characteristic of an experiment that allows conclusions about causal relationships to be drawn is called

- external validity.

- internal validity.

- generalization.

- self-selection.

b.

Question 2.10

4. When people find evidence that confirms their beliefs, they often

- tend to stop looking.

- seek additional evidence that disconfirms them.

- consider what they cannot see.

- think critically about it.

a.