5.3 Operant Conditioning

Jeremy Lin appears on a 2012 cover of Sports Illustrated. This rookie player amazed the world on February 4 of that same year when he came off the bench and led the New York Knicks to victory against the New Jersey Nets. It was the first game of a Lin-

THE JEREMY LIN SHOW Madison Square Garden, February 4, 2012: The New York Knicks are playing their third game in three nights, hoping to end a losing streak. But they are already trailing far behind the New Jersey Nets in the first quarter. That’s when Jeremy Lin, a third-

Jeremy Lin was an overnight sensation. People around the world were fascinated by him for many reasons. He was the fourth Asian American player in NBA history, the first Harvard graduate to play in the league since the 1950s, and previously a benchwarmer, not even drafted out of college (Beck, 2011, December 28). Everyone was asking the same question: Would Jeremy be a one-

Jeremy ended up leading the Knicks through a seven-

Like any of us, Jeremy is a unique blend of nature and nurture. He was blessed with a hearty helping of physical capabilities (nature), such as speed, agility, and coordination. But he was also shaped by the circumstances of his life (nurture). This second category is where learning comes in. Let’s find out how.

The Shaping of Behavior

Jeremy and his teammates from Palo Alto High School celebrate a victory that led them to the 2006 state finals. Pictured in the center is Coach Peter Diepenbrock, who has maintained a friendship with Jeremy since he graduated. Prior to Jeremy’s sensational season with the Knicks, Diepenbrock helped him with his track workouts.

When Jeremy began his freshman year at Palo Alto High School, he was about 5´3˝ and 125 pounds—

Things changed when Jeremy found himself in a bigger pond, playing basketball at Harvard University. That’s when Coach Diepenbrock says the young player began working harder on aspects of practice he didn’t particularly enjoy, such as weight lifting, ball handling, and conditioning. When Jeremy was picked up by the NBA, his diligence soared to a new level. While playing with the Golden State Warriors, he would eat breakfast at the team’s training facility by 8:30 A.M., three and a half hours before practice. “Then, all of sudden, you’d hear a ball bouncing on the floor,” Keith Smart, a former coach, told The New York Times (Beck, 2012, February 24, para. 14). Between NBA seasons, Jeremy returned to his alma mater, Palo Alto High School, to run track workouts with Coach Diepenbrock. He also trained with a shooting coach and spent “an inordinate amount of time” honing his shot, according to Diepenbrock.

Jeremy’s persistence paid off. The once-

operant conditioning Learning that occurs when voluntary actions become associated with their consequences.

OPERANT CONDITIONING DEFINED What has kept Jeremy working so hard all these years? Psychologists might attribute Jeremy’s ongoing efforts to operant conditioning, a type of learning in which people or animals come to associate their voluntary actions with their consequences. Whether pleasant or unpleasant, the effects of a behavior influence future actions. Think about some of the consequences of Jeremy’s training—

LO 7 Describe Thorndike’s law of effect.

American psychologist Burrhus Frederic Skinner, or simply B. F. Skinner, is one of the most influential psychologists of all time. Skinner believed that every thought, emotion, and behavior (basically anything psychological) is shaped by factors in the environment. Using animal chambers known as “Skinner Boxes,” he conducted carefully controlled experiments on animal behavior.

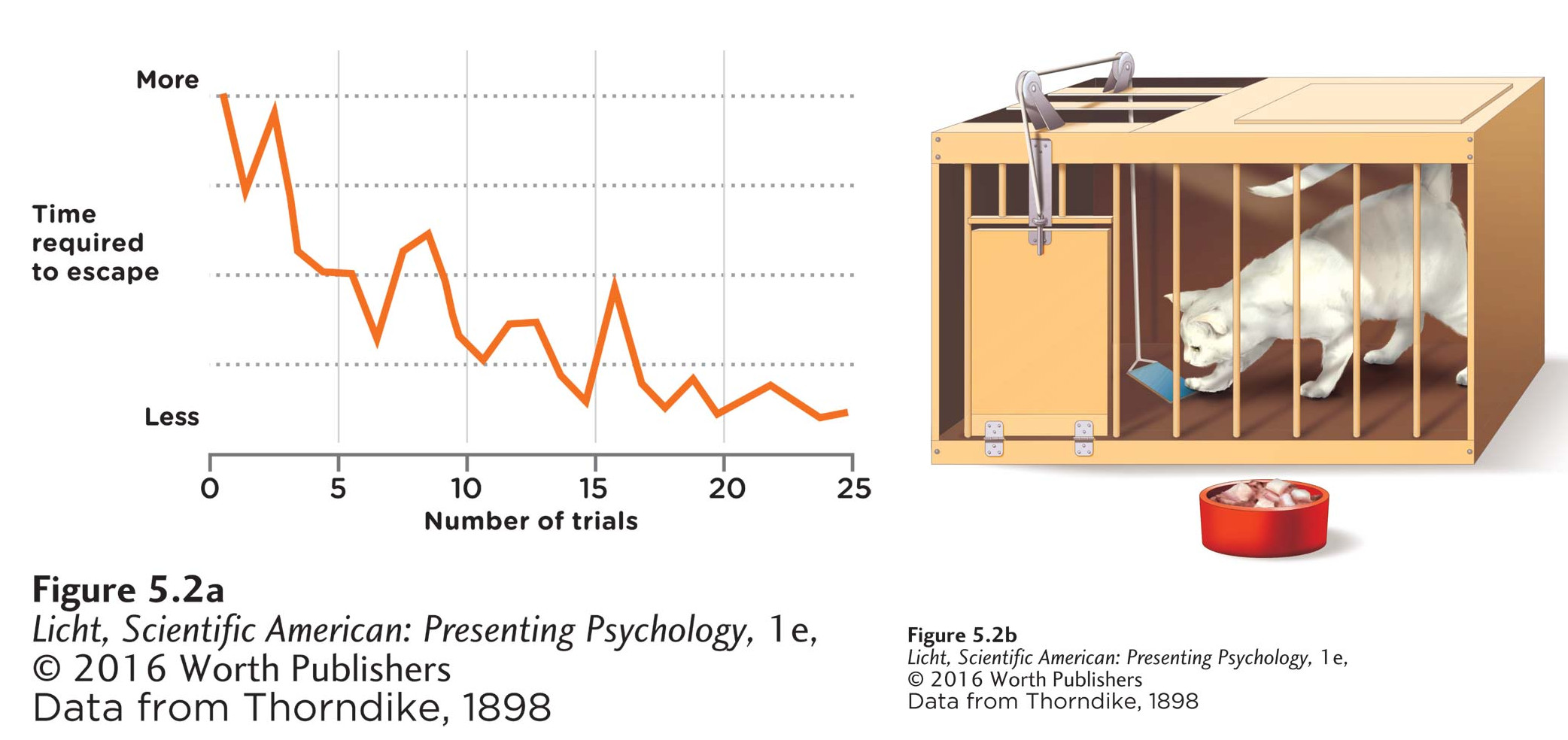

THORNDIKE AND HIS CATS One of the first scientists to objectively study the effect of consequences on behavior was American psychologist Edward Thorndike (1874–1949). Thorndike’s early research focused on chicks and other animals, many of which he kept in his apartment. But after an incubator almost caught fire, his landlady insisted he get rid of the chicks (Hothersall, 2004). It was in the lab that Thorndike conducted his research on cats. His most famous experimental setup involved putting a cat in a latched cage called a “puzzle box” and planting enticing pieces of fish outside the door. When first placed in the box, the cat would scratch and paw around randomly, but after a while, just by chance, it would pop the latch, causing the door to release. The cat would then escape the cage to devour the fish (Figure 5.2). The next time the cat was put in the box, it would repeat this random activity, scratching and pawing with no particular direction. And again, just by chance, the cat would pop the latch that released the door and freed it to eat the fish. Each time the cat was returned to the box, the number of random activities decreased until eventually it was able to break free almost immediately (Thorndike, 1898).

Early psychologist Edward Thorndike conducted his well-

We should highlight a few important issues relating to this early research. First, these cats discovered the solution to the puzzle box accidentally, while exhibiting their naturally occurring behaviors (scratching and exploring). So, they initially obtained the fish treat by accident. The other important point is that the measure of learning was not an exam grade or basketball score, but the amount of time it took the cats to break free.

law of effect Thorndike’s principle stating that behaviors are more likely to be repeated when followed by pleasurable outcomes, and those followed by something unpleasant are less likely to be repeated.

The cats’ behavior, Thorndike reasoned, could be explained by the law of effect, which says that a behavior (opening the latch) is more likely to happen again when followed by a pleasurable outcome (delicious fish). Behaviors that lead to pleasurable outcomes will be repeated, while behaviors that don’t lead to pleasurable outcomes (or are followed by something unpleasant) will not be repeated. The law of effect is not limited to cats. When was the last time your behavior changed as a result of a pleasurable outcome?
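The law of effect can be illustrated with a toy simulation. The sketch below is a simplification under stated assumptions, not Thorndike’s procedure: the action names, the starting weights, and the `boost` parameter are all hypothetical, and counting attempts stands in for the escape time that Thorndike actually measured. Each time the latch-pressing action is followed by fish, its weight grows, so it becomes more likely to be chosen on the next trial.

```python
import random


def run_puzzle_box(trials=30, boost=2.0, seed=0):
    """Toy model of the law of effect (hypothetical parameters throughout)."""
    rng = random.Random(seed)  # seeded so a run can be repeated exactly
    actions = ["scratch", "paw", "meow", "press_latch"]
    # Every action starts out equally likely.
    weights = {action: 1.0 for action in actions}
    attempts_per_trial = []

    for _ in range(trials):
        attempts = 0
        while True:
            attempts += 1
            action = rng.choices(actions, weights=[weights[a] for a in actions])[0]
            if action == "press_latch":
                # Door opens and the cat eats the fish: strengthen this behavior.
                weights[action] += boost
                break
        attempts_per_trial.append(attempts)

    return attempts_per_trial, weights


attempts, final_weights = run_puzzle_box()
print("Attempts needed on each trial:", attempts)
print("Final weights:", final_weights)
```

Because only the latch-pressing action is ever followed by fish, it is the only weight that grows. The attempt counts tend to shrink across trials, though any single run is noisy. The sketch covers only the pleasurable half of the law; it does not weaken behaviors that are followed by something unpleasant.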

reinforcers Consequences, such as events or objects, that increase the likelihood of a behavior reoccurring.

reinforcement Process by which an organism learns to associate a voluntary behavior with its consequences.

Most contemporary psychologists would call the fish in Thorndike’s experiments reinforcers, because the fish increased the likelihood that the preceding behavior (escaping the cage) would occur again. Reinforcers are consequences that follow behaviors, and they are a key component of operant conditioning. Our daily lives abound with examples of reinforcers. Praise, hugs, good grades, enjoyable food, and attention are all reinforcers that increase the probability the behaviors they follow will be repeated. Through the process of reinforcement, targeted behaviors become more frequent. A child praised for sharing a toy is more likely to share in the future. A student who studies hard and earns an A on an exam is more likely to prepare well for upcoming exams.

SKINNER AND BEHAVIORISM Some of the earliest and most influential research on operant conditioning came out of the lab of B. F. Skinner (1904–1990), an American psychologist. Like Pavlov, Skinner had not planned to study learning. Upon graduating from college, Skinner decided to become a writer and a poet, but after a year of trying his hand at writing, he decided he “had nothing to say” (Skinner, 1976). Around this time, he began to read the work of Watson and Pavlov, which inspired him to pursue a graduate degree in psychology. He enrolled at Harvard, took some psychology classes that he found “dull,” and eventually joined a lab in the Department of Biology, where he could study the subject he found most intriguing: animal behavior.

behaviorism The scientific study of observable behavior.

Skinner was devoted to behaviorism, the scientific study of observable behavior. Behaviorists believed that psychology could only be considered a “true science” if it was based on the study of behaviors that could be seen and documented. In relation to learning, Skinner and other behaviorists proposed that all behaviors, thoughts, and emotions are shaped by factors in the external environment.

LO 8 Explain shaping and the method of successive approximations.

Synonyms

Skinner boxes operant chambers

shaping The use of reinforcers to guide behavior to the acquisition of a desired, complex behavior.

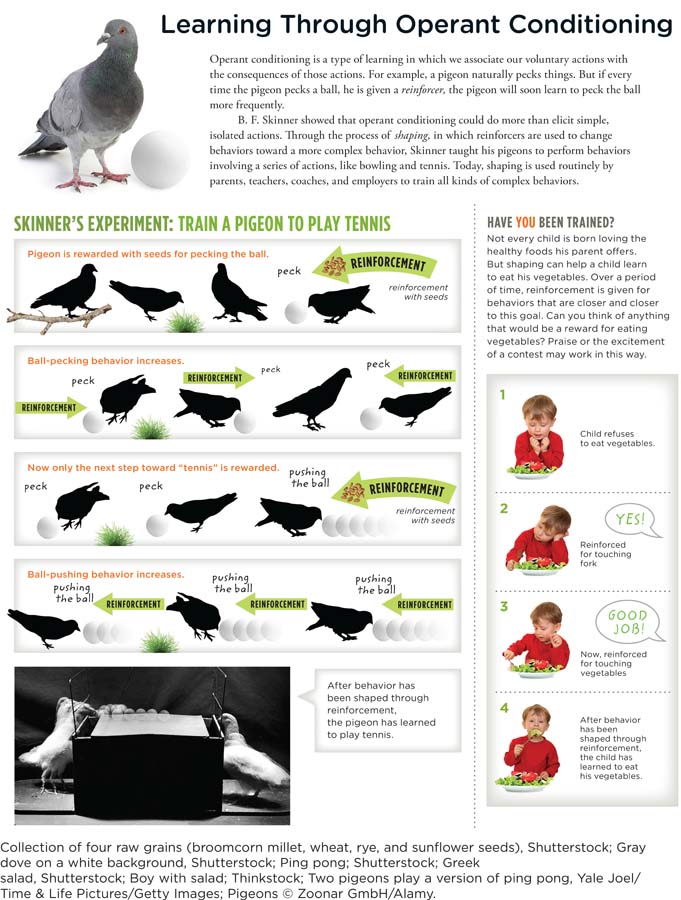

SHAPING AND SUCCESSIVE APPROXIMATIONS Building on Thorndike’s law of effect and Watson’s approach to research, Skinner demonstrated, among other things, that rats can learn to push levers and pigeons can learn to bowl (Peterson, 2004). Since animals can’t be expected to immediately perform such complex behaviors, Skinner employed shaping, the use of reinforcers to change behaviors through small steps toward a desired behavior (see Infographic 5.2 below). Skinner used shaping to teach a rat to “play basketball” (dropping a marble through a hole) and pigeons to “bowl” (nudging a ball down a miniature alley). As you can see in the photo on page 187, Skinner placed animals in chambers, or Skinner boxes, which were outfitted with food dispensers the animals could activate (by pecking a target or pushing on a lever, for instance) and recording equipment to monitor these behaviors. These boxes allowed Skinner to conduct carefully controlled experiments, measuring activity precisely and advancing the scientific and systematic study of behavior.

INFOGRAPHIC 5.2

successive approximations A method of shaping that uses reinforcers to condition a series of small steps that gradually approach the target behavior.

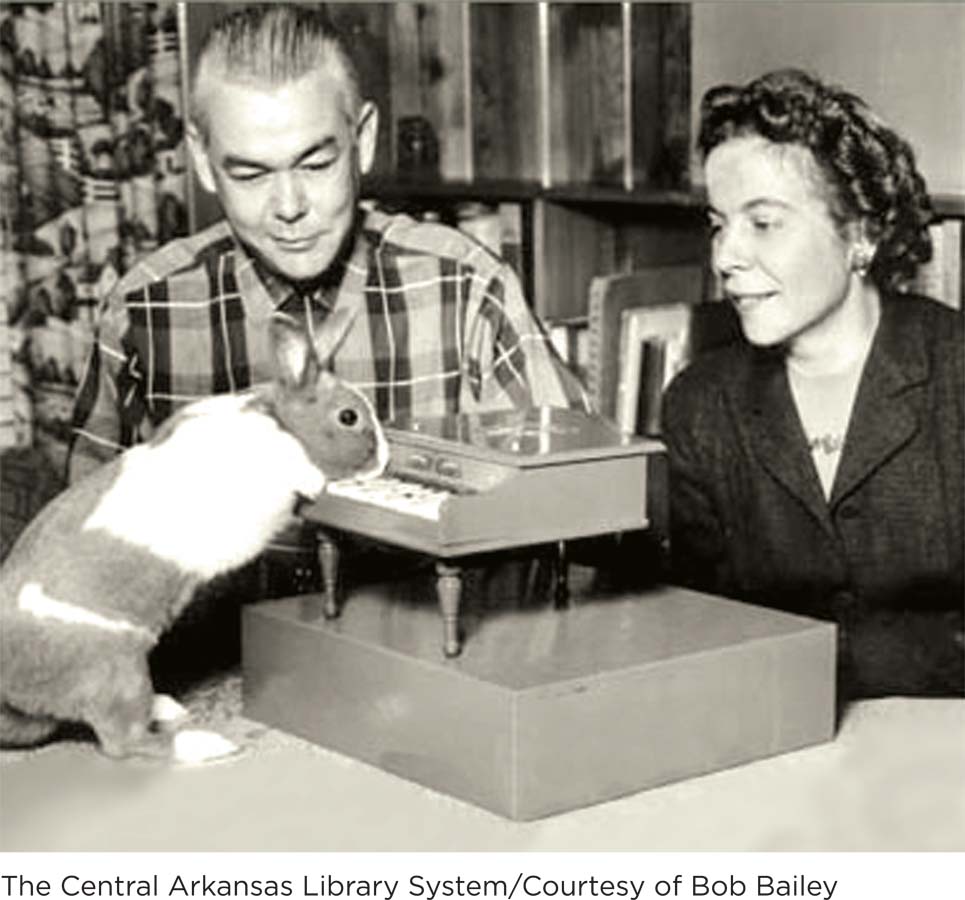

Keller and Marian Breland observe one of their animal performers at the IQ Zoo in Hot Springs, Arkansas, circa 1960. Using the operant conditioning concepts they learned from B. F. Skinner, the Brelands trained ducks to play guitars, raccoons to shoot basketballs, and chickens to tell fortunes. But their animal “students” did not always cooperate; sometimes their instincts interfered with the conditioning process (Bihm, Gillaspy, Lammers, & Huffman, 2010).

How in the world did Skinner get pigeons to bowl? The first task was to break the bowling lessons into small steps that pigeons could accomplish. Next, he introduced reinforcers as consequences for behaviors that came closer and closer to achieving the desired goal—

Successive approximations can also be used with humans, who are sometimes unwilling or unable to change problematic behaviors overnight. For example, psychologists have used successive approximation to change truancy behavior in adolescents (Enea & Dafinoiu, 2009). The truant teens were provided reinforcers for consistent attendance, but with small steps requiring increasingly more days in school.
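One way to picture successive approximations is as a criterion that rises in small steps. The following sketch is loosely modeled on the attendance example above; the function name, the weekly attendance numbers, and the rule of raising the criterion by one day after each reinforced week are illustrative assumptions, not details taken from Enea and Dafinoiu (2009).

```python
def shape_attendance(days_attended_per_week, start_criterion=1, target=5):
    """Reinforce weeks that meet the current criterion, then raise the bar.

    All numbers are hypothetical; the rule is only a sketch of shaping.
    """
    criterion = start_criterion
    log = []
    for days in days_attended_per_week:
        reinforced = days >= criterion
        log.append((days, criterion, reinforced))
        # After a reinforced week, require one more day, up to the target.
        if reinforced and criterion < target:
            criterion += 1
    return log


for days, criterion, reinforced in shape_attendance([1, 2, 2, 3, 4, 5]):
    outcome = "reinforcer given" if reinforced else "no reinforcer"
    print(f"attended {days} day(s), needed {criterion}: {outcome}")
```

In this made-up run, the third week earns no reinforcer because two days of attendance no longer meets the raised criterion of three. The requirement stays put until it is met, then continues climbing toward the target.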

It is amazing that the principles used for training animals can also be harnessed to keep teenagers in school. Is there anything operant conditioning can’t accomplish?

THINK again

Chickens Can’t Play Baseball

Rats can be conditioned to press levers; pigeons can be trained to bowl; and—

BASEBALL? NO. PIANO? YES.

Here’s a rundown of what happened: The Brelands placed a chicken in a cage adjacent to a scaled down “baseball field,” where it had access to a loop attached to a baseball bat. If the chicken managed to swing the bat hard enough to send the ball into the outfield, a food reward was delivered at the other end of the cage. Off the bird would go, running toward its meal dispenser like a baseball player sprinting to first base—

instinctive drift The tendency for animals to revert to instinctual behaviors after a behavior pattern has been learned.

How did the Brelands explain the chickens’ behavior? They believed that the birds were demonstrating instinctive drift, the tendency for instinct to undermine conditioned behaviors. A chicken’s pecking, for example, is an instinctive food-

These examples involve researchers deliberately shaping behaviors with reinforcers in a laboratory setting. Many behaviorists believe behaviors are being shaped all of the time, both in and out of the laboratory. What factors in the environment might be shaping your behavior?

Common Features of Operant and Classical Conditioning

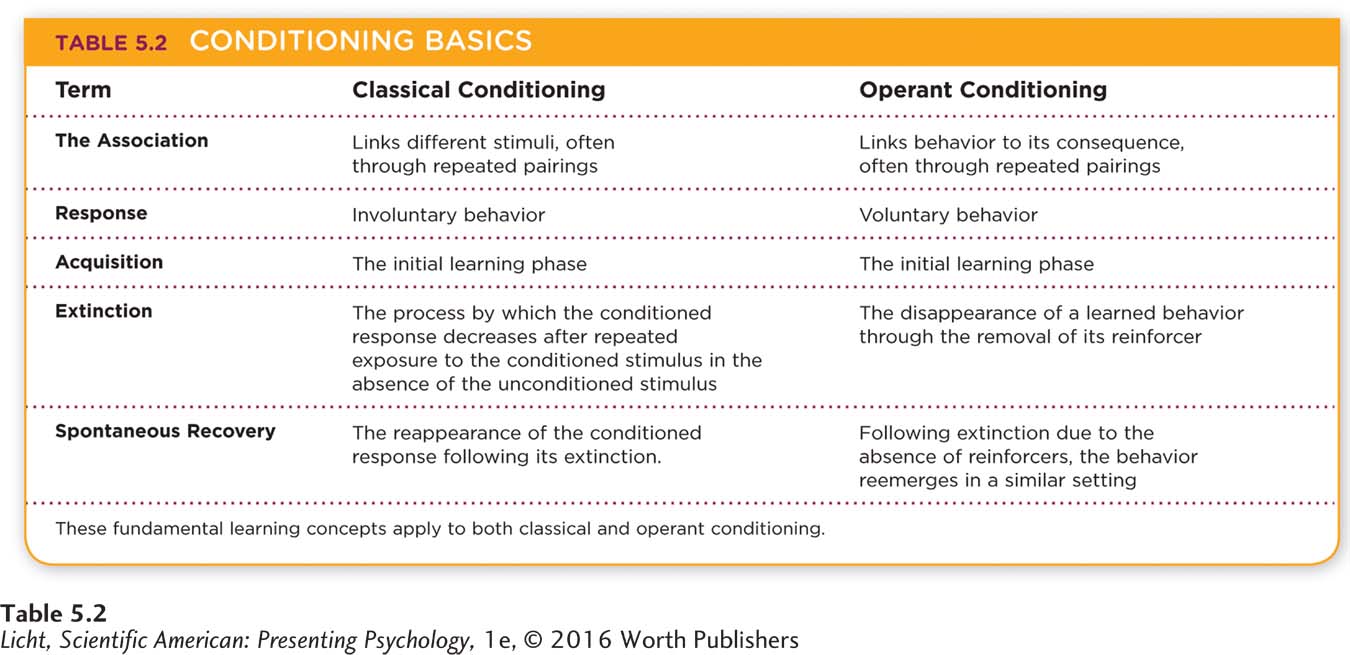

Both operant and classical conditioning are forms of learning, and they share many common principles (Table 5.2). As with classical conditioning, behaviors learned through operant conditioning go through an acquisition phase. Jeremy Lin learned to dunk a basketball when he was a sophomore in high school. The cats in Thorndike’s experiments learned how to escape their puzzle boxes after a certain number of trials. In both cases, the acquisition stage occurred through the gradual process of shaping. Behaviors learned through operant conditioning are also subject to extinction—

With operant conditioning, stimulus generalization is seen when a previously learned response to one stimulus occurs in the presence of a similar stimulus. A rat is conditioned to push a particular type of lever, but it may push a variety of other lever types similar in shape, size, and color. Horses also show stimulus generalization. With successive approximations using a tasty oat-

Stimulus discrimination is also at work in operant conditioning, as organisms can learn to discriminate between behaviors that do and do not result in reinforcement. With the use of reinforcers, turtles can learn to discriminate among black, white, and gray paddles. In one study, researchers rewarded a turtle with morsels of meat when it chose a black paddle over a white one; subsequently, the turtle chose the black paddle over other-

Stimulus discrimination even applies to basketball players. Jeremy Lin certainly has learned to discriminate between teammates and opponents. It’s unlikely he would get reinforcement from the crowd if he mistook an opponent for a teammate and passed the ball to the other team. Making a perfect pass to a teammate, on the other hand, would likely earn approval. This brings us to the next topic, positive reinforcement, where we start to see how classical and operant conditioning differ.

Types of Reinforcement

LO 9 Identify the differences between positive and negative reinforcement.

positive reinforcement The process by which reinforcers are added or presented following a targeted behavior, increasing the likelihood of it occurring again.

POSITIVE REINFORCEMENT With operant conditioning, an organism learns to associate voluntary behaviors with their consequences. Any stimulus that increases a behavior is a reinforcer. What we haven’t addressed is that a reinforcer can be something added or something taken away. In the process of positive reinforcement, reinforcers are presented (added) following the targeted behavior, and reinforcers in this case are generally pleasant (see Infographic 5.3 on page 199). By presenting positive reinforcers following a target behavior, we are increasing the chances that the target behavior will occur again. If the behavior doesn’t increase after the stimulus is presented, that particular stimulus should not be considered a reinforcer. The fish treats that Thorndike’s cats received immediately after escaping the puzzle box and the morsels of bird feed that Skinner’s pigeons got for bowling are examples of positive reinforcement. In both cases, the reinforcers were added following the desired behavior and were pleasurable.

Georgia preschool teacher Inyite (Shell) Adie-

There were also many potential positive reinforcers driving Jeremy Lin. The praise that his coaches gave him for passing a ball to a teammate would be an example of positive reinforcement. Back in high school, Coach Diepenbrock rewarded players with stickers to provide feedback on their performance (“kind of middle schoolish,” he admits, but effective nonetheless). Jeremy averaged the highest sticker score of any player ever. Did the sticker system have an effect on Jeremy’s behavior? Coach Diepenbrock cannot be certain, but if it did, seeing his sticker-

You may be wondering if this approach could be used in a college classroom. The answer would inevitably depend on the people involved. Remember, the definition of a positive reinforcer depends on the organism’s response to its presence (Skinner, 1953). You may love getting stickers, but your classmate may be offended by them.

Keep in mind, too, that not all positive reinforcers are pleasant; when we refer to positive reinforcement, we mean that something has been added. For example, if a child is starved for attention, then any kind of attention (including a reprimand) would be experienced as a positive reinforcer. Every time the child misbehaves, she gets reprimanded, and reprimanding is a form of attention, which the child craves. The scolding reinforces the misbehavior.

negative reinforcement The removal of an unpleasant stimulus following a target behavior, which increases the likelihood of it occurring again.

NEGATIVE REINFORCEMENT We have established that behaviors can be increased or strengthened by the addition of a stimulus. But it is also possible to increase a behavior by taking something away. Behaviors can increase in response to negative reinforcement, through the process of taking away (or subtracting) something unpleasant. Skinner used negative reinforcement to shape the behavior of his rats. The rats were placed in Skinner boxes with floors that delivered a continuous mild electric shock—

Think about some examples of negative reinforcement in your own life. If you try to drive your car without your seat belt, does your car make an annoying beeping sound? If so, the automakers have employed negative reinforcement to increase your use of seat belts. The beeping provides an annoyance (an unpleasant stimulus) that prompts most people to put on their seat belts (the desired behavior increases) to make the beeping stop, and thus remove the unpleasant stimulus. The next time you get in the car, you will be faster to put on your seat belt, because you have learned that buckling up immediately makes the annoying sound go away. For another example of negative reinforcement, picture a dog that constantly begs for treats. The begging (an unpleasant stimulus) stops the moment the dog is given a treat, a pattern that increases your treat-

Notice that with negative reinforcement, the target behaviors increase in order to remove an unwanted condition. Returning to our example of Jeremy Lin, how might a basketball coach use negative reinforcement to increase a behavior? Of course, the coach can’t build an electric grid in the flooring (as Skinner did with his rats) to get his players moving faster. He might, however, start each practice session by whining and complaining (a very annoying stimulus) about how slowly the players are moving. But as soon as their level of activity increases, he stops his annoying behavior. The players then learn to avoid the coach’s whining and complaining simply by running faster and working harder at every practice. Thus, the removal of the annoying stimulus (whining and complaining) increases the desired behavior (running faster). Keep in mind that the goal of negative reinforcement is to increase a desired behavior. Try to remember this when you read the section on punishment.

LO 10 Distinguish between primary and secondary reinforcers.

Synonyms

negative reinforcement omission training

secondary reinforcers conditioned reinforcers

primary reinforcer A reinforcer that satisfies a biological need, such as food, water, physical contact; innate reinforcer.

secondary reinforcer Reinforcers that do not satisfy biological needs but often gain their power through their association with primary reinforcers.

PRIMARY AND SECONDARY REINFORCERS There are two major categories of reinforcers: primary and secondary. The food with which Skinner rewarded his pigeons and rats is considered a primary reinforcer (innate reinforcer), because it satisfies a biological need. Food, water, and physical contact are considered primary reinforcers (for both animals and people) because they meet essential requirements. Many of the reinforcers shaping human behavior are secondary reinforcers, which means they do not satisfy biological needs but often derive their power from their connection with primary reinforcers. Although money is not a primary reinforcer, we know from experience that it gives us access to primary reinforcers, such as food, a safe place to live, and perhaps even the ability to attract desirable mates. Thus, money is a secondary reinforcer. The list of secondary reinforcers is long and varied, because different people find different things and activities to be reinforcing. Listening to music, washing dishes, taking a ride in your car—

Standing before a giant red mailbox, London postal worker Imtiyaz Chawan holds a Guinness World Records certificate. Through the Royal Mail Group’s Payroll Giving Scheme, British postal workers donated money to 975 charitable groups, setting a record for the number of charities supported by a payroll giving scheme (Guinness Book of World Records News, 2012, February 6). Charitable giving is the type of positive behavior that can spread through social networks. Many charities are now using social media for fundraising purposes.

Secondary reinforcers are evident in everyday social interactions. Think about how your behaviors might change in response to praise from a boss, a pat on the back from a coworker, or even a nod of approval from a friend on Facebook or Instagram. Yes, reinforcers can even exert their effects through the digital channels of social media.

SOCIAL MEDIA and psychology

Contagious Behaviors

Why do you keep glancing at your Facebook page, and what compels you to check your phone 10 times an hour? All those little tweets and updates you receive are reinforcing. It feels good to be retweeted, and it’s nice to see people “like” your Instagram posts.

WHY DO YOU CHECK YOUR PHONE 10 TIMES AN HOUR?

With its never-

Now that we have a basic understanding of operant conditioning, let’s take things to the next level and examine its guiding principles.

The Power of Partial Reinforcement

Ivonne runs tethered to her husband, G. John Schmidt. She sets the pace, while he warns her of any changes in terrain, elevation, and direction. When Ivonne started running in 2001, her friends reinforced her with hot chocolate. These days, she doesn’t need sweet treats to keep her coming back. The pleasure she derives from running is reinforcement enough.

LO 11 Describe continuous reinforcement and partial reinforcement.

A year after graduating from Stanford, Ivonne returned to New York City and began looking for a new activity to get her outside and moving. She found the New York Road Runners Club, which connected her with an organization that supports and trains runners with all types of disabilities, including paraplegia, amputation, and cerebral palsy. Having no running experience (apart from jogging on a treadmill), Ivonne showed up at a practice one Saturday morning in Central Park and ran 2 miles with one of the running club’s guides. The next week she came back for more, and then the next, and the next.

continuous reinforcement A schedule of reinforcement in which every target behavior is reinforced.

Why are slot machines so enticing? The fact that they deliver rewards occasionally and unpredictably makes them irresistible to many gamblers. Slot machines take advantage of the partial reinforcement effect, which states that behaviors are more persistent when reinforced intermittently, rather than continuously.

CONTINUOUS REINFORCEMENT When Ivonne first started attending practices, her teammates promised to buy her hot chocolate whenever she increased her distance. “Every time they would try to get me to run further, they’d say, ‘We’ll have hot chocolate afterwards!’” Ivonne remembers. “They actually would follow through with their promise!” The hot chocolate was given in a schedule of continuous reinforcement, because the reinforcer was presented every time Ivonne ran a little farther. Continuous reinforcement can be used in a variety of settings: a child getting praise every time he does the dishes; a dog getting a treat every time it comes when called. You get the commonality: reinforcement every time the behavior is produced.

partial reinforcement A schedule of reinforcement in which target behaviors are reinforced intermittently, not continuously.

PARTIAL REINFORCEMENT Continuous reinforcement comes in handy for a variety of purposes and is ideal for establishing new behaviors during the acquisition phase. But delivering reinforcers intermittently, or every once in a while, works better for maintaining behaviors. We call this approach partial reinforcement. Returning to the examples listed for continuous reinforcement, we can also imagine partial reinforcement being used: The child gets praise almost every time he does the dishes; a dog gets a treat every third time it comes when called. The reinforcer is not given every time the behavior is observed, only some of the time.
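The difference between the two schedules can be made concrete with a short sketch. The function name and the `every_nth` parameter are hypothetical; the example simply lists which responses would earn a reinforcer under continuous reinforcement and under the every-third-time rule from the dog example. Intermittent reinforcement can take other forms too, which this sketch does not cover.

```python
def reinforced_responses(n_responses, every_nth=1):
    """Return the 1-based responses that are followed by a reinforcer.

    every_nth=1 is continuous reinforcement; larger values are one simple
    form of partial reinforcement.
    """
    if every_nth < 1:
        raise ValueError("every_nth must be at least 1")
    return [i for i in range(1, n_responses + 1) if i % every_nth == 0]


print("continuous:", reinforced_responses(6))
print("every third:", reinforced_responses(9, every_nth=3))
```

Under the continuous schedule every one of the six responses is reinforced, while under the every-third rule only responses 3, 6, and 9 are.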

Early on, Ivonne received a reinforcer from her training buddies for every workout in which she increased her mileage. But how might partial reinforcement be used to help a runner increase her mileage? Perhaps instead of hot chocolate on every occasion, the treat could come after every other successful run. Or, a coach might praise the runner’s hard work only some of the time. The amazing thing about partial reinforcement is that it happens to all of us, in an infinite number of settings, and we might never know how many times we have been partially reinforced for any particular behavior. Common to all of these partial reinforcement situations is that the target behavior is exhibited, but the reinforcer is not supplied each time this occurs.

The hard work and reinforcement paid off for Ivonne. In 2003 she ran her first marathon—

Synonyms

partial reinforcement intermittent reinforcement

partial reinforcement effect The tendency for behaviors acquired through intermittent reinforcement to be more resistant to extinction than those acquired through continuous reinforcement.

PARTIAL REINFORCEMENT EFFECT When Skinner put the pigeons in his experiments on partial reinforcement schedules, they would peck at a target up to 10,000 times without getting food before giving up (Skinner, 1953). According to Skinner, “Nothing of this sort is ever obtained after continuous reinforcement” (p. 99). The same seems to be true with humans. In one study from the mid-

Remember, partial reinforcement works very well for maintaining behaviors, but not necessarily for establishing behaviors. Imagine how long it would take Skinner’s pigeons to learn the first step in the shaping process (looking at the ball) if they were rewarded for doing so only 1 in 5 times. The birds learn fastest when reinforced every time, but their behavior will persist longer if they are given partial reinforcement thereafter. Here’s another example: Suppose you are housetraining your puppy. The best plan is to start the process with continuous reinforcement (praise the dog every time it “goes” outside), but then shift to partial reinforcement once the desired behavior is established.

Timing Is Everything: Reinforcement Schedules

LO 12 Name the schedules of reinforcement and give examples of each.

Skinner identified various ways to administer partial reinforcement, or partial reinforcement schedules. As often occurs in scientific research, he stumbled on the idea by chance. Late one Friday afternoon, Skinner realized he was running low on the food pellets he used as reinforcers for his laboratory animals. If he continued rewarding the animals on a continuous basis, the pellets would run out before the end of the weekend. With this in mind, he decided to reinforce only some of the desired behaviors (Skinner, 1956, 1976). The new strategy worked like a charm. The animals kept performing the target behaviors, even though they weren’t given reinforcers every time.

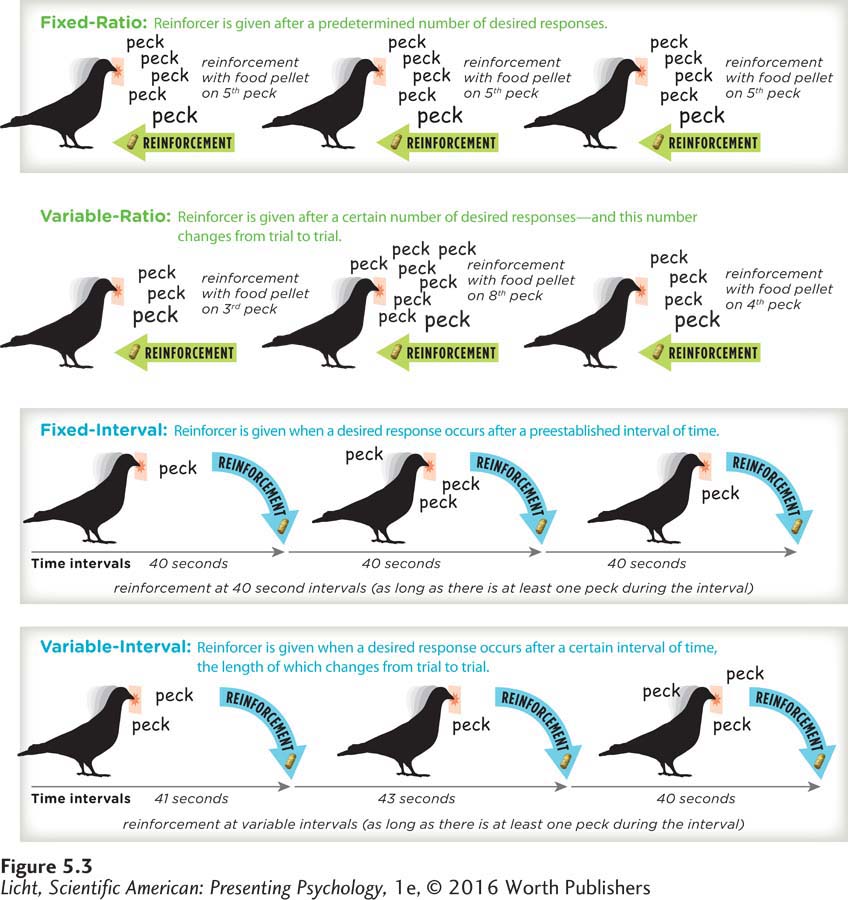

Clearly partial reinforcement is effective, but how exactly should it be delivered? Four different reinforcement schedules can be used: fixed-

Continuous reinforcement is ideal for establishing new behaviors. But once learned, a behavior is best maintained with partial reinforcement. Partial reinforcement can be delivered according to four different schedules of reinforcement, as shown here.

fixed-

FIXED-

variable-

VARIABLE-

fixed-

FIXED-

variable-

VARIABLE-

So far, we have learned about increasing desired behaviors through reinforcement, but not all behaviors are desirable. Let’s turn our attention to techniques used to suppress undesirable behaviors.

The Trouble with Punishment

punishment The application of a consequence that decreases the likelihood of a behavior recurring.

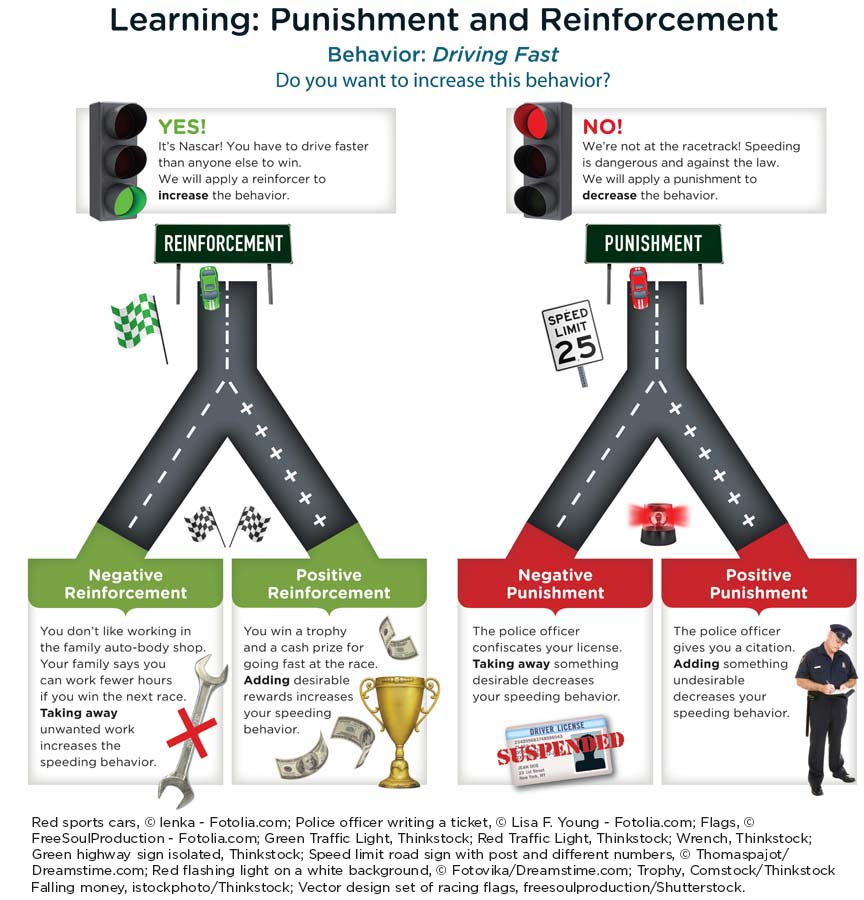

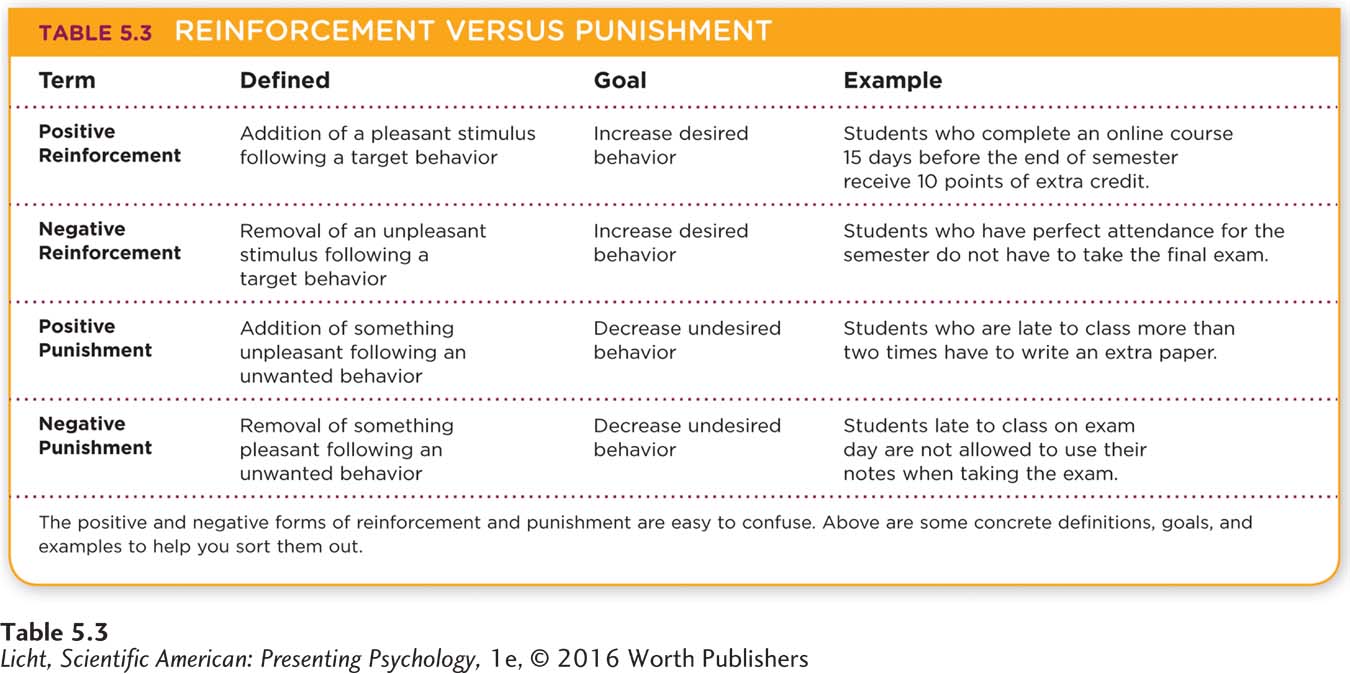

In contrast to reinforcement, which makes a behavior more likely to recur, the goal of punishment is to decrease or stop a behavior (Infographic 5.3). Punishment is used to reduce unwanted behaviors by instilling an association between a behavior and some unwanted consequence (for example, between stealing and going to jail, or between misbehaving and a spanking). Punishment isn’t always effective, however; people are often willing to accept unpleasant consequences to get something they really want.

INFOGRAPHIC 5.3

positive punishment The addition of something unpleasant following an unwanted behavior, with the intention of decreasing that behavior.

Sending a child to a corner for a “time-

POSITIVE AND NEGATIVE PUNISHMENT There are two major categories of punishment: positive and negative. With positive punishment, something aversive or disagreeable is applied following an unwanted behavior. For example, getting a ticket for speeding is a positive punishment, the aim of which is to decrease driving over the speed limit. Paying a late fine for overdue library books is a positive punishment, the goal of which is to decrease returning library books past their due date. In basketball, a personal foul (for example, inappropriate physical contact) might result in positive punishment, such as a free throw for the opposing team. Here, the addition of something aversive (the other team getting a wide open shot) is used with the intention of decreasing a behavior (pushing, shoving, and the like).

negative punishment The removal of something desirable following an unwanted behavior, with the intention of decreasing that behavior.

The goal of negative punishment is also to reduce an unwanted behavior, but in this case, it is done by taking away something desirable. A person who drives while inebriated runs the risk of negative punishment, as his driver’s license may be taken away. This loss of driving privileges is a punishment designed to reduce drunken driving. If you never return your library books, you might suffer the negative punishment of losing your borrowing privileges. The goal is to decrease behaviors that lead to lost or stolen library books. What kind of negative punishment might be used to rein in illegal conduct in basketball? Just ask one of Jeremy Lin’s former teammates, superstar Carmelo Anthony, one of many players suspended for participating in a 2006 brawl between the Knicks and the Denver Nuggets. Anthony, a Nuggets player at the time (how ironic that he was later traded to the Knicks), was dealt a 15-

Punishment may be useful for the purposes of basketball, but how does it figure into everyday life? Think about the last time you tried using punishment to reduce unwanted behavior. Perhaps you scolded your puppy for having an accident, or snapped at your housemate for leaving dirty dishes in the sink. If you are a parent or caregiver of a young child, perhaps you have tried to rein in misbehavior with various types of punishment, such as spanking.

test yourself

Which process matches each of the following examples?

Choose from positive reinforcement, negative reinforcement, positive punishment, and negative punishment.

Question 1

1. Carlos’ parents grounded him the last time he stayed out past his curfew, so tonight he came home right on time.

negative punishment

Question 2

2. Jinhee spent an entire week helping an elderly neighbor clean out her basement after a flood. The local newspaper caught wind of the story and ran it as an inspiring front-

positive reinforcement

Question 3

3. The trash stinks, so Sheri takes it out.

negative reinforcement

Question 4

4. Gabriel’s assistant had a bad habit of showing up late for work, so Gabriel docked his pay.

negative punishment

Question 5

5. During food drives, the basketball team offers to wash your car for free if you donate six items or more to the local homeless shelter.

positive reinforcement

Question 6

6. Claire received a stern lecture for texting in class. She doesn’t want to hear that again, so now she turns off her phone when she enters the classroom.

positive punishment

CONTROVERSIES

Spotlight on Spanking

Were you spanked as a child? Would you or do you spank your own children? Statistically speaking, there is a good chance your answer will be yes to both questions. Studies suggest that about two thirds of American parents use corporal (physical) punishment to discipline their young children (Gershoff, 2008; Regalado, Sareen, Inkelas, Wissow, & Halfon, 2004; Zolotor, Theodore, Runyan, Chang, & Laskey, 2011). But is spanking an effective and acceptable means of discipline?

TO SPANK OR NOT TO SPANK . . .

There is little doubt that spanking can provide a fast-

CONNECTIONS

In Chapter 2, we noted the primary roles of the frontal lobes: to organize information processed in other areas of the brain, orchestrate higher-

Apart from sending children the message that aggression is okay, corporal punishment may promote serious long-

Critics argue that studies casting a negative light on spanking are primarily correlational, meaning they show only a link—

Scholars on both sides make valid points, but the debate is somewhat lopsided, as an increasing number of studies suggest that spanking is ineffective and emotionally damaging (Gershoff & Bitensky, 2008; Smith, 2012; Straus, 2005).

LO 13 Explain how punishment differs from negative reinforcement.

PUNISHMENT VERSUS NEGATIVE REINFORCEMENT Punishment and negative reinforcement are two concepts that students often find difficult to distinguish (Table 5.3; also see Infographic 5.3 on p. 199). Remember that punishment (positive or negative) is designed to decrease the behavior that it follows, whereas reinforcement (positive or negative) aims to increase the behavior. Operant conditioning uses reinforcers (both positive and negative) to increase target behaviors, and punishment to decrease unwanted behaviors.

If all the positives and negatives are confusing you, just think in terms of math: Positive always means adding something, and negative means taking it away. Punishment can be positive, which means the addition of something viewed as unpleasant (“Because you made a mess of your room, you have to wash all the dishes!”), or negative, which involves the removal of something viewed as pleasant or valuable (“Because you made a mess of your room, no ice cream for you!”). For basketball players, a positive punishment might be adding more wind sprints to decrease errors on the free throw line. An example of negative punishment might be benching the players for brawling on the court: Taking away the players’ court time is intended to decrease their fighting behavior.
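The "think in terms of math" rule can be written out as a two-question lookup. The short Python sketch below is only an illustration; the function name `classify_consequence` and its two arguments are hypothetical labels invented for this example, not terms from the chapter. The first question asks whether something is added or removed, and the second asks whether the aim is to increase or decrease the behavior.

```python
def classify_consequence(stimulus_change: str, behavior_goal: str) -> str:
    """Name the operant process from two questions.

    stimulus_change: "add" if something is applied, "remove" if something is taken away.
    behavior_goal:   "increase" or "decrease" (the intended effect on the behavior).
    Both argument names are hypothetical, chosen for this illustration only.
    """
    # Positive means adding something; negative means taking something away.
    sign = {"add": "positive", "remove": "negative"}[stimulus_change]
    # Reinforcement aims to increase a behavior; punishment aims to decrease it.
    kind = {"increase": "reinforcement", "decrease": "punishment"}[behavior_goal]
    return f"{sign} {kind}"


# Examples taken from the text:
# extra dishes after a messy room -> something added, behavior should decrease
print(classify_consequence("add", "decrease"))
# no ice cream after a messy room -> something removed, behavior should decrease
print(classify_consequence("remove", "decrease"))
```

The same lookup covers reinforcement: taking out smelly trash removes something unpleasant and increases the behavior, so `classify_consequence("remove", "increase")` names it negative reinforcement.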

Apply This

THINK POSITIVE REINFORCEMENT

With all this talk of chickens, basketball players, and triathletes, you may be wondering how operant conditioning applies to you. Just think about the last time you earned a good grade on a test after studying really hard. How did this grade affect your preparation for the next test? If it made you study more, then it served as a positive reinforcer. A little dose of positive reinforcement goes a long way when it comes to increasing productivity. Let’s examine three everyday dilemmas and brainstorm ways we could use positive reinforcers to achieve better outcomes.

Problem 1: Your housemate frequently goes to sleep without washing his dinner dishes. Almost every morning, you walk into the kitchen and find a tower of dirty pans and plates sitting in the sink. No matter how much you nag and complain, he simply will not change his ways. Solution: Nagging and complaining are getting you nowhere. Try positive reinforcers instead. Wait until a day your housemate takes care of his dishes and then pour on the praise. You might be pleasantly surprised the next morning.

REINFORCEMENT STRATEGIES YOU CAN PUT TO GOOD USE

Problem 2: Your child is annoying you with her incessant whining. She whines for milk, so you give it to her. She whines for someone to play with, so you play with her. Why does your child continue to whine although you are responding to all her needs? Solution: Here, we have a case in which positive reinforcers are driving the problem. When you react to your child’s gripes and moans, you are reinforcing them. Turn off your ears to the whining. You might even want to say something like, “I can’t hear you when you’re whining. If you ask me in a normal voice, I’ll be more than happy to help.” Then reinforce her more mature behavior by responding attentively.

Problem 3: You just trained your puppy to sit. She was cooperating wonderfully until about a week after you stopped rewarding her with dog biscuits. You want her to sit on command, but you can’t keep doling out doggie treats forever. Solution: Once the dog has adopted the desired behavior, begin reinforcing unpredictably. Remember, continuous reinforcement is most effective for establishing behaviors, but a variable schedule (that is, giving treats intermittently) is a good bet if you want to make the behavior stick (Pryor, 2002).
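The difference between the two treat schedules in Problem 3 can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not a model from the research literature: the function names are hypothetical, and the "variable" schedule is approximated by giving a treat at random with probability 1 divided by `mean_ratio`, so that on average one sit in every `mean_ratio` sits is rewarded.

```python
import random


def continuous_schedule(num_responses: int) -> list[bool]:
    """Continuous reinforcement: every correct response earns a treat."""
    return [True] * num_responses


def variable_schedule(num_responses: int, mean_ratio: float, seed: int = 0) -> list[bool]:
    """Intermittent, unpredictable reinforcement (an assumed approximation).

    Each response is rewarded with probability 1 / mean_ratio, so treats
    arrive on average once every `mean_ratio` responses, but the dog
    cannot predict which sit will pay off.
    """
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    return [rng.random() < 1 / mean_ratio for _ in range(num_responses)]


sits = 20
print("continuous:", sum(continuous_schedule(sits)), "treats for", sits, "sits")
print("variable:  ", sum(variable_schedule(sits, mean_ratio=4)), "treats for", sits, "sits")
```

In this sketch, continuous reinforcement hands out a treat for every sit, which suits the early training phase, while the variable schedule hands out far fewer treats at unpredictable moments, which corresponds to the maintenance strategy described in the solution.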

Classical and Operant Conditioning: What’s the Difference?

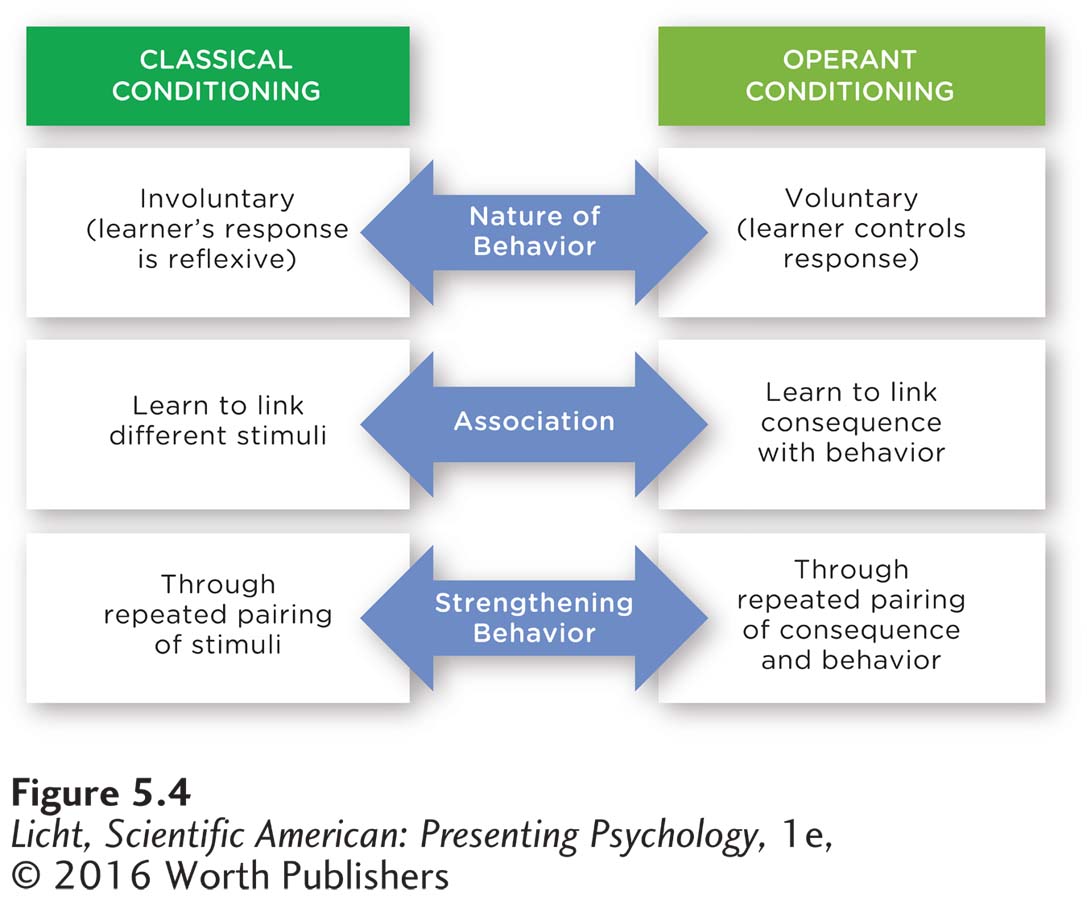

Students sometimes have trouble differentiating classical and operant conditioning (Figure 5.4). After all, both forms of conditioning—

But there are also key differences between classical and operant conditioning. In classical conditioning, the learned behaviors are involuntary, or reflexive. Ivonne cannot directly control her heart rate any more than Pavlov’s dogs can decide when to salivate. Operant conditioning, on the other hand, concerns voluntary behavior. Jeremy Lin had power over his decision to practice his shot, just as Skinner’s pigeons had control over swatting bowling balls with their beaks. In short, classical conditioning is an involuntary form of learning, whereas operant conditioning requires active effort.

Another important distinction is the way in which behaviors are strengthened. In classical conditioning, behaviors become more frequent with repeated pairings of stimuli. The more often Ivonne smells chlorine before swim practice, the tighter the association she makes between chlorine and swimming. Operant conditioning is also strengthened by repeated pairings, but in this case, the connection is between a behavior and its consequences. Reinforcers strengthen the behavior; punishment weakens it. The more benefits (reinforcers) Jeremy gains from succeeding in basketball, the more likely he will keep practicing.

Often classical conditioning and operant conditioning occur simultaneously. A baby learns that he gets fed when he cries; getting milk reinforces the crying behavior (operant conditioning). At the same time, the baby learns to associate milk with the appearance of the bottle. As soon as he sees his mom or dad take the bottle from the refrigerator, he begins salivating in anticipation of gulping it down (classical conditioning).

Classical and operant conditioning are not the only ways we learn. There is one major category of learning we have yet to cover. Use this hint to guess what it might be: How did you learn to peel a banana, open an umbrella, and throw a Frisbee? Somebody must have shown you.

show what you know

Question 1

1. According to Thorndike and the __________, behaviors are more likely to be repeated when they are followed by pleasurable outcomes.

law of effect

Question 2

2. A third-

a. classical conditioning.

b. an unconditioned response.

c. an unconditioned stimulus.

d. stimulus generalization.

d. stimulus generalization.

Question 3

3. A child disrupts class and the teacher writes her name on the board. For the rest of the week, the child does not act up. The teacher used __________ to decrease the child’s disruptive behaviors.

a. positive punishment

b. negative punishment

c. positive reinforcement

d. negative reinforcement

a. positive punishment

Question 4

4. Think about a behavior you would like to change (either yours or someone else’s). Devise a schedule of reinforcement using positive and negative reinforcement to change that behavior. Also contemplate how you might use successive approximations. What primary and secondary reinforcers would you use?

Answers will vary, but can be based on the following definitions. Reinforcers are consequences that increase the likelihood of a behavior reoccurring. Positive reinforcement is the process by which pleasant reinforcers are presented following a target behavior. Negative reinforcement occurs with the removal of an unpleasant stimulus following a target behavior. Successive approximation is a method for shaping that uses reinforcers to condition a series of small steps that gradually approach the target behavior.

Question 5

5. How do continuous and partial reinforcement differ?

Continuous reinforcement is a schedule of reinforcement in which every target behavior is reinforced. Partial reinforcement is a schedule of reinforcement in which target behaviors are reinforced intermittently, not continuously. Continuous reinforcement is generally more effective for establishing a behavior, whereas learning through partial reinforcement is more resistant to extinction and useful for maintaining behavior.