8.4 Reasoning, Judgment, and Decision Making

As you’ve just seen, people are good at language. Even young children understand and produce sentences expertly. Now, however, we’ll explore three types of thinking: reasoning, judgment, and decision making.

Reasoning

Preview Questions

What psychological processes get in the way of good logical reasoning?

Do people always have difficulty with logical reasoning?

We had all listened with the deepest interest to this sketch of the night’s doings, which Holmes had deduced from signs so subtle and minute that, even when he had pointed them out to us, we could scarcely follow him in his reasoning.

—Arthur Conan Doyle, The Treasury of Sherlock Holmes (2007, p. 267)

Do you read detective stories? If so, you can anticipate the climax. Before dazzled onlookers, the private eye reviews evidence, analyzes its implications logically, and draws conclusions so compelling that the criminal confesses on the spot.

The detective is engaged in reasoning, which is the process of drawing conclusions that are based on facts, beliefs, and experiences (Johnson-

Detective stories preview research findings in the psychology of reasoning. Notice not only the powers of the detective, but also the reactions of the onlookers: dazzled. In a good detective story, the facts are available to everyone—

Much psychological research suggests the same thing. A distinguished psychologist concluded that research on logical reasoning skills shows that people have only “a modicum of competence” (Johnson-

CONFIRMATION BIAS. Reasoning often is impaired by confirmation bias. Confirmation bias is the tendency to seek out information that is consistent with whatever initial conclusions you have drawn, and to disregard information that might contradict those conclusions.


Confirmation bias can impair reasoning because your initial conclusion might be wrong. To find out for sure, you should seek out disconfirming evidence, that is, evidence that could disprove your guess. If you think “the man who committed the midnight murder in the hotel was the butler,” you’d better be sure there’s no evidence that the butler was somewhere other than the hotel at midnight. If you look only for confirming evidence (“the butler had a motive”), you might miss critical disconfirming evidence (“witnesses saw the butler asleep in a movie theatre at midnight”).

You can see confirmation bias in action by trying an arithmetic problem (from Wason, 1960). Your job is to figure out the rule of arithmetic that generates sets of numbers. You first see three numbers that conform to the rule. You then need to suggest additional sets of three numbers that might help you determine what the rule is. After each set of three, you’re told whether your numbers conform to the rule. When you think you know what the rule is, you can take a guess.

Here are the first three numbers:

2 4 6

What do you think the rule might be? And what new set of three numbers would you suggest to find out what it is?

Here’s what a typical participant did. The participant would first think the rule is “a series of even numbers” and then suggest new sets of even numbers to see if they conform to the rule. “How about 8, 10, 12?” “14, 16, 18?” “20, 22, 24?” Each time, the participant learned that, yes, the numbers conform to the rule the experimenter had used. Yet the rule was not “a series of even numbers.” The actual rule was “any three numbers arranged in order of increasing magnitude.”

Participants thus displayed confirmation bias. They suggested numbers consistent with their initial guess, and failed to suggest other possibilities (e.g., 1, 3, 5) that could disconfirm their initial guess.
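The logic of the 2-4-6 task can be sketched in a few lines of Python. This is only an illustration, not part of Wason's procedure: the function names are hypothetical, and the participant's guess "a series of even numbers" is read here, as an assumption, as "all three numbers are even." A test triple is informative only when the guessed rule and the true rule disagree about it.

```python
def true_rule(triple):
    """The experimenter's rule: three numbers in increasing order."""
    a, b, c = triple
    return a < b < c


def guessed_rule(triple):
    """The participant's guess, read here (an assumption) as: all numbers are even."""
    return all(n % 2 == 0 for n in triple)


def is_informative(triple):
    """A test can separate the two rules only if they give different answers."""
    return true_rule(triple) != guessed_rule(triple)


# Triples the typical participant proposed, plus one that could disconfirm the guess.
confirming_tests = [(8, 10, 12), (14, 16, 18), (20, 22, 24)]
disconfirming_test = (1, 3, 5)

for t in confirming_tests + [disconfirming_test]:
    print(t, "| fits true rule:", true_rule(t), "| informative:", is_informative(t))
```

Every confirming test fits both rules, so a "yes" from the experimenter teaches nothing new. Only a triple such as 1, 3, 5, which the guess predicts should fail, can reveal that the guess is wrong.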

Confirmation bias is pervasive. Once people develop opinions and beliefs, they commonly seek out information that confirms their thinking (Nickerson, 1998). Social psychologists (see Chapter 12) propose, for example, that confirmation bias explains people’s tendency to believe media reports are biased against their own political attitudes. When people with either pro-

REASONING ABOUT EVOLUTIONARILY RELEVANT PROBLEMS. Do people always find reasoning difficult? The psychologist Leda Cosmides doesn’t think so. She notes that reasoning problems presented in research—

Do you believe in ESP? What kind of information might you tend to notice and remember in support of this belief?

One such problem is not getting cheated when exchanging goods (Cosmides, 1989). People have exchanged goods for thousands of years, either by trading or by using currency. In the past, they might have exchanged livestock for grain. Today, you exchange money for groceries. Throughout human history, it has been important to avoid being cheated. Evolution may have equipped people with the ability to reason accurately about problems that involve the possibility of cheating when goods are exchanged.


Cosmides tested this by presenting different problems with an identical logical structure—

Some problems involved the detection of cheating in the exchange of goods. For example, one described a hypothetical cultural situation: “If a man eats cassava root, then he must have a tattoo on his face.” (Participants were told that, in this culture, cassava root is an aphrodisiac and a tattoo is a sign of being married.) If you ate cassava root but had no tattoo, you would be cheating, that is, breaking the social rule of no sex outside of marriage.

Other problems had nothing to do with cheating. For example, people checked, for a clerical job, whether documents followed this rule: “If a person has a D rating, then his documents must be marked code ‘y.’”

Participants performed poorly on problems that had nothing to do with cheating (the document filing problem). However, they performed excellently when the problem involved potential cheating by the breaking of a social rule (the cassava root problem; Cosmides, 1989). On the latter task, they avoided confirmation bias.
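Both rules have the form "if P, then Q," and a rule of that form is broken in only one situation: P is true and Q is false. The short sketch below makes this explicit for the cassava rule. The function and variable names are hypothetical and chosen only for illustration.

```python
def violates_rule(eats_cassava, has_tattoo):
    """'If a man eats cassava root, then he must have a tattoo' is broken
    only when the 'if' part is true and the 'then' part is false."""
    return eats_cassava and not has_tattoo


# All four possible combinations of (eats_cassava, has_tattoo).
cases = [(True, True), (True, False), (False, True), (False, False)]
violations = [case for case in cases if violates_rule(*case)]
print(violations)
```

Only the man who eats cassava root without a tattoo breaks the rule. Checking the document rule works the same way: the only violation is a D rating without code "y."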

Why are people good at solving cheating-

Does Cosmides’s idea sound familiar? It should. Her idea about the origins of a brain mechanism for solving problems is essentially the same as Chomsky’s idea about the origins of a brain mechanism dedicated to processing grammar. Both claim that evolution gave rise to the brain mechanisms and associated mental abilities that people possess today (Buss, 2009).


Judgment Under Uncertainty

Preview Question

How do people judge the likelihood of uncertain events?

Life is full of guesses, big and small. “Will I like this new romantic comedy with computer-


For each question, uncertainty exists. You don’t know for sure how things will turn out. You don’t even know the probability that things will turn out one way or the other: Are the chances that you’ll enjoy the movie more like a 50-

The question for psychology is how people make these guesses. What are the mental processes through which people make judgments under conditions of uncertainty? There are two general types of possibilities.

Mind like a computer. One possibility is that the mind works like a computer program that processes statistical information. Maybe people perform mental calculations in which their minds automatically combine large amounts of information to estimate the chances of different outcomes occurring, and their judgments are based on these calculations.

Simplifying rules of thumb: Heuristics. The second possibility is that people cannot perform computer-like calculations because of memory limitations. Human short-term memory holds only a small amount of information (see Chapter 7). It thus may be impossible for people to keep track of the large numbers of facts and figures needed to make computations in the way a computer does (Simon, 1983). Instead, people may rely on heuristics that simplify the task of predicting events.

A heuristic is a rule of thumb, that is, a simple way of accomplishing something that otherwise would be done through a more complex procedure. For example, if you are in a residential area of a big city and wish to go downtown, you could follow step-

What heuristic do you apply for figuring out what to order in a restaurant whose cuisine is new to you?

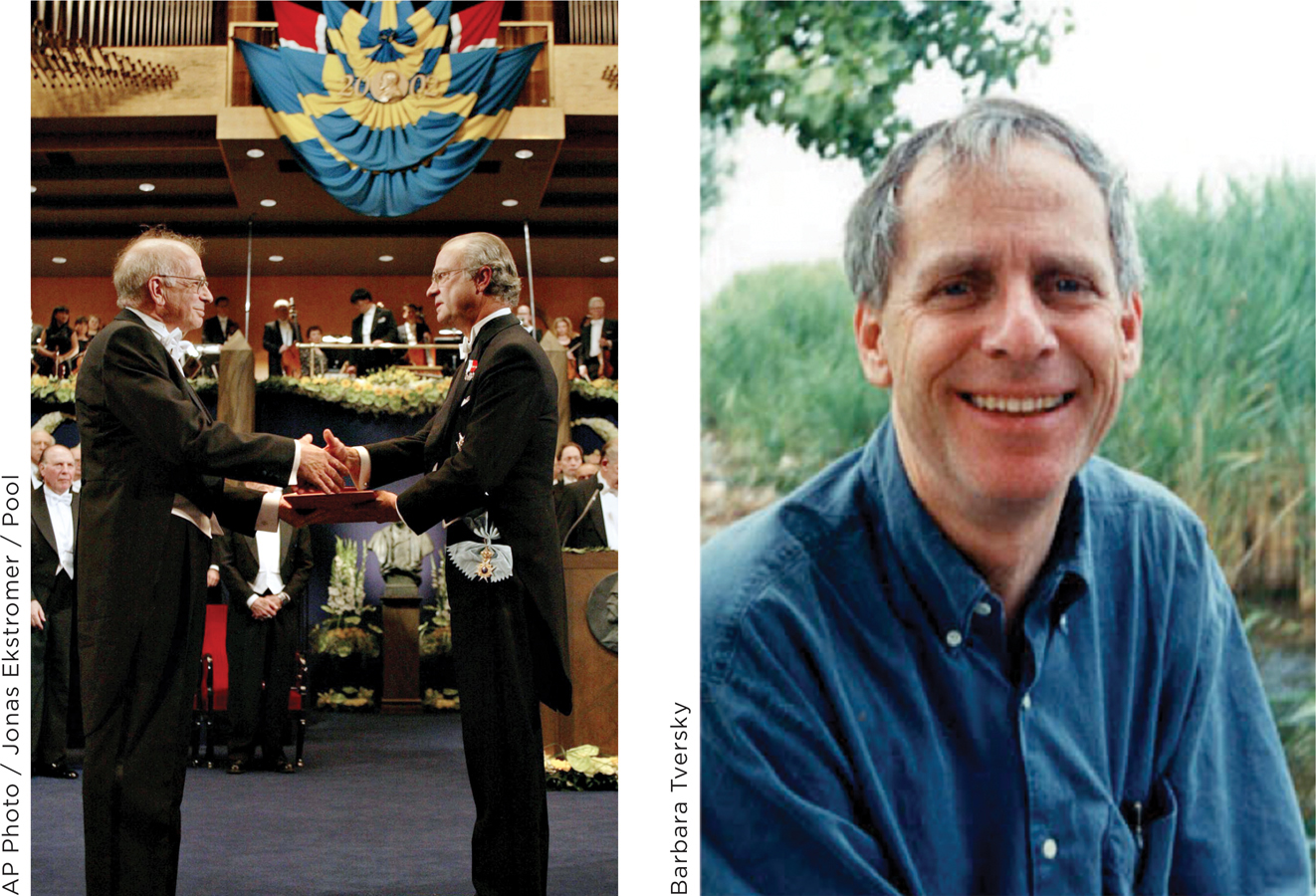

Judgmental heuristics are simple mental procedures for making judgments under conditions of uncertainty. Extraordinarily insightful research by Amos Tversky and Daniel Kahneman identified three judgmental heuristics that people use to make a wide variety of judgments: the availability, representativeness, and anchoring-and-adjustment heuristics.


AVAILABILITY. In the availability heuristic, people base judgments on the ease with which information comes to mind. The rule of thumb—

In general, then, the availability heuristic works well. But sometimes it creates errors. This happens when factors other than actual frequency affect availability. Here’s an example.

What do you think is more common: words (of three or more letters) that start with the letter k or that have k as the third letter in the word? (Take a moment to guess.)

You probably thought something like this: “Hmm, first letter k; that’s easy: kite, kitten, kayak, kazoo, keep, keeper, keeping, keepsake, knife, knifing, knit, knitting…. Geez, there’s millions of ’em. K in third position … um … Bake, rake, make … um … ark.” Words starting with k come to mind easily. Most people, relying on the availability heuristic, thus say that words starting with k are more common. But in reality, words with k as the third letter are more common. The fact that we organize our “mental dictionary” according to words’ first letters makes them more available mentally, despite their being less frequent in reality.

REPRESENTATIVENESS. The representativeness heuristic is a psychological process that comes into play when people have to decide whether a person or thing is a member of a given category. When relying on representativeness, people base these judgments on the degree to which the person or object resembles the category. Suppose you meet a shy, middle-

The representativeness heuristic often produces accurate judgments. However, like availability, it can cause people to make judgmental errors. Consider this example from Tversky and Kahneman (1983):

Linda is 31 years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice and also participated in anti-

A. Linda is a bank teller.

B. Linda is a bank teller and is active in the feminist movement.

Did you say “B”? Six out of seven people did when Tversky and Kahneman asked them. But “B” is wrong because everyone who is “a bank teller and active in the feminist movement” also is “a bank teller.” “B” therefore cannot be more probable than “A”; all “B”s are “A”s (all feminist bank tellers are bank tellers) and some “A”s are not “B”s (some bank tellers are not feminists). If the human mind worked like a statistical computer program, everyone would say “A.” However, the mind instead relies on representativeness and, since Linda resembles people’s conception of a feminist, most people say “B.”
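The reason the second statement can never be more probable than the first can be checked with a small count. The numbers below are invented purely for illustration (they are not data from Tversky and Kahneman), and the function name is hypothetical. Whatever counts you substitute, the feminist bank tellers are a subset of the bank tellers.

```python
# Hypothetical counts for 1,000 women who fit Linda's description.
hypothetical_counts = {
    ("teller", "feminist"): 40,
    ("teller", "not feminist"): 10,
    ("not teller", "feminist"): 800,
    ("not teller", "not feminist"): 150,
}


def probabilities(counts):
    """Return P(bank teller) and P(bank teller AND feminist)."""
    total = sum(counts.values())
    tellers = counts[("teller", "feminist")] + counts[("teller", "not feminist")]
    p_teller = tellers / total
    p_teller_and_feminist = counts[("teller", "feminist")] / total
    return p_teller, p_teller_and_feminist


p_teller, p_teller_and_feminist = probabilities(hypothetical_counts)
print("P(bank teller) =", p_teller)
print("P(bank teller and feminist) =", p_teller_and_feminist)
```

Even in this made-up population, where most of the bank tellers are feminists, the probability of "bank teller and feminist" stays below the probability of "bank teller."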

In general, the representativeness heuristic can produce errors when it distracts people from the base rates of categories, that is, the overall likelihood that any item would be in the category. Consider our librarian/construction worker example. There are 8 times more construction workers in the United States than there are librarians (www.bls.gov); the base rate of the category “construction worker” is much higher. So the man—


TRY THIS!

These judgment problems should sound familiar to you; they were in this chapter’s Try This! activity. If you haven’t completed that yet, go to www.pmbpsychology.com and do it now.

ANCHORING AND ADJUSTMENT. The third judgmental heuristic, anchoring and adjustment, comes into play when people estimate an amount (e.g., “What GPA am I likely to earn next semester?” “How many people will show up at the party I’m planning?”). In the anchoring-

Anchoring can create judgmental errors. This happens when irrelevant anchor values bias people’s judgments. Tversky and Kahneman asked participants to estimate the percentage of world nations that are African. Before participants made their estimates, the researchers spun a wheel of fortune containing numbers from 0 to 100 and asked participants to indicate whether the correct answer was more or less than the random value generated by the wheel. The anchor values were random.

Random anchor values can affect important judgments, such as people’s judgments about how much money to spend on a purchase. Just prior to an auction for an item whose value was less than $100, researchers asked bidders to write down the last two digits of their Social Security number. “Huh?”—you should be saying to yourself. “What difference would writing down a Social Security number make to an auction bid?” Due to anchoring effects, it made a big difference. People’s bids were correlated with their Social Security numbers; people with higher Social Security numbers bid more money (Ariely, Loewenstein, & Prelec, 2004). The numbers came to mind, and served as anchors, when they asked themselves, “How much should I bid?”

Table 8.1 summarizes these three heuristics and reasons why reliance on heuristics can sometimes produce judgmental errors.

Judgmental Heuristics

| Judgmental Heuristic | Question That People Typically Answer Using the Heuristic | Psychological Process | Source of Error |
|---|---|---|---|
| Availability | How frequently does it occur? (e.g., Do more words start with k or have k as the third letter?) | The ease with which information comes to mind. | Some factors that affect the ease with which information comes to mind are unrelated to frequency. |
| Representativeness | Is an item a member of a given category? (e.g., Is Linda a bank teller or a bank teller active in the feminist movement?) | The resemblance between the item and the category. | Representativeness can distract people from information about base rates. |
| Anchoring and Adjustment | How many are there? (e.g., What percentage of the world’s nations is African?) | Adjustment from an initial guess. | Even irrelevant anchor values are influential, and adjustment away from them is often insufficient. |


Decision Making

Preview Question

How logically do we make decisions?

Cherry or blueberry pie? Rent a DVD or go to a movie theatre? Vacation in an exciting metropolis or a relaxing wilderness? Have surgery for your broken bone or put it in a cast and see if it heals by itself? Life presents decisions, large and small.

Decision making is the process of making a choice, that is, selecting among alternatives. Sometimes the benefits of the alternatives cannot be known for sure; you can’t tell how well the broken bone will heal if put in a cast. Other times, the benefits are known with certainty, yet it’s still difficult to decide. You know what cherry pie and blueberry pie taste like, yet it’s hard to choose between them.

How do people make decisions? To understand the psychology of decision making, it’s best first to consider a “standard model” of decision making and then to look at psychological factors that make the standard model inadequate. (The standard model was, for many years, the explanation of decision making in economics.)

The standard model of decision making has two components. One involves subjective value, the degree of personal worth that an individual places on an outcome. In the standard model of decision making, people choose the alternative available to them that has the highest subjective value. Note that subjective value can differ from objective value. Consider two cases: (1) Two people offer you a temporary one-

The second component of the standard model is that, when making decisions, people consider how their choices will affect their net worth (i.e., the overall monetary assets they possess). You might enjoy a city vacation more than a wilderness vacation. But if either vacation would wipe out your personal savings, you’ll probably skip a vacation for this year.

In the standard model, people’s choices are highly logical. The decision maker compares subjective values, evaluates personal worth, and calculates a logical, rational decision. In reality, however, people aren’t as logical and rational as the standard model suggests. Research by Kahneman and Tversky (1979, 1984) undermined the standard model of decision making.


FRAMING EFFECTS. A major blow to the standard model was Kahneman and Tversky’s demonstration of framing effects. In a framing effect, decisions are influenced by the way alternative choices are described, or “framed.” According to the standard model, different framings should be inconsequential, because they don’t affect the actual value of alternatives. However, it turns out that framing has big effects. Consider the following two choices (from Kahneman & Tversky, 1984, p. 343):

Choice #1

Imagine that the United States is preparing for the outbreak of an unusual Asian disease, which is expected to kill 600 people. Two alternative programs to combat the disease have been proposed. Assume that the exact scientific estimates of the consequences of the programs are as follows:

If Program A is adopted, 200 people will be saved.

If Program B is adopted, there is a one-third probability that 600 people will be saved and a two-thirds probability that no people will be saved.

Which of the two programs would you favor, A or B?

Choice #2

Imagine the same circumstance as above (an unusual Asian disease is expected to kill 600 people) and consider the following two programs:

If Program C is adopted, 400 people will die.

If Program D is adopted, there is a one-third probability that nobody will die and a two-thirds probability that 600 people will die.

If you’re like most people, you chose Programs A and D. A seems better than B; if 200 people can be saved for sure (A), why choose a program whose most likely outcome is that no one will be saved (B)? D seems better than C; if one program leaves 400 people sure to die (C), why not choose an alternative that might prevent the deaths of everyone (D)?

About 3 out of 4 people chose Program A over B, and Program D over C. This seems sensible—

The standard model predicts that decisions would be the same in Choices #1 and 2, because the numbers of people saved are identical in both cases. But preferences actually reverse: People prefer the sure thing (save 200) in Choice #1, which is framed in terms of lives saved, and the risky option (maybe save 600, but a big chance of saving no one) in Choice #2, where choices are framed in terms of deaths. People think differently about gains and losses; framing reverses the preferences.
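The claim that the two choices are numerically identical can be verified directly. The sketch below restates each program as a list of (probability, lives saved) outcomes, converting the "deaths" framing of Programs C and D into lives saved out of 600. The data layout and the function name are illustrative assumptions, not part of the original study.

```python
from fractions import Fraction

TOTAL = 600  # people expected to die if nothing is done

# Each program is a list of (probability, lives saved) outcomes.
programs = {
    "A": [(Fraction(1), 200)],
    "B": [(Fraction(1, 3), 600), (Fraction(2, 3), 0)],
    "C": [(Fraction(1), TOTAL - 400)],                            # 400 die
    "D": [(Fraction(1, 3), TOTAL - 0), (Fraction(2, 3), TOTAL - 600)],
}


def expected_saved(outcomes):
    """Expected number of lives saved: sum of probability x lives saved."""
    return sum(p * saved for p, saved in outcomes)


for name, outcomes in programs.items():
    print(name, expected_saved(outcomes))
```

Program A describes exactly the same outcome as Program C, and Program B the same outcomes as Program D. All four have an expected value of 200 lives saved, so only the wording differs between the two choices.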

MENTAL ACCOUNTING. Kahneman and Tversky identified a second problem with the standard model. Recall an idea from that model: People base decisions on how alternative choices affect their net worth. This idea, too, fails to describe the way people actually make decisions. Consider the following choices (adapted from Kahneman & Tversky, 1984, p. 347):

Choice #1

Imagine that you have decided to see a play and paid the admission price of $20 per ticket. As you enter the theater, you discover that you have lost the ticket. The seat was not marked, and the ticket cannot be recovered. Would you pay $20 for another ticket?


Choice #2

Imagine that you have decided to see a play where admission is $20 per ticket. As you enter the theater, you discover that you have lost a $20 bill. Would you pay $20 for a ticket for the play?

In Choice #1, most people say no, they would not buy another ticket. In Choice #2, almost 9 out of 10 people say that, yes, they would buy one. Psychologically, then, the choices differ enormously. But in terms of how they affect net worth, they are identical. In both, if people see the play, they end up with $40 less net worth than when they started; $20 of that worth was lost and $20 was spent on the ticket to get in. Why, then, do people’s choices differ?
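The net-worth arithmetic behind the two choices can be written out step by step. This is a minimal sketch; the function names are hypothetical labels for the two scenarios.

```python
TICKET_PRICE = 20


def net_change_lost_ticket():
    """Choice #1: buy a ticket, lose it, then buy a replacement."""
    first_ticket = -TICKET_PRICE   # money spent on the ticket that was lost
    second_ticket = -TICKET_PRICE  # money spent on the replacement
    return first_ticket + second_ticket


def net_change_lost_bill():
    """Choice #2: lose a $20 bill, then buy a ticket."""
    lost_cash = -20
    ticket = -TICKET_PRICE
    return lost_cash + ticket


print(net_change_lost_ticket(), net_change_lost_bill())
```

Both scenarios leave the theatergoer $40 poorer, so a decision maker who tracked only net worth would treat them identically.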

As Kahneman and Tversky explain, when making decisions, people usually do not consider their overall net worth—

In summary, Tversky and Kahneman revolutionized science’s understanding of judgment and decision making by humanizing it. Rather than performing calculations like a computer, people use simplifying strategies—

WHAT DO YOU KNOW?…

Question 17

Consider the following. A hurricane is coming and you have to figure out which of two protocols to follow to convince the town’s 3000 citizens to evacuate to safety. If they do not evacuate, they will die.

If Protocol 1 is followed, 2000 people will die.

If Protocol 2 is followed, there is a one-third probability that nobody will die and a two-thirds probability that 3000 people will die.

Question 18

Consider the following two choices:

Choice #1: Imagine that you bought a voucher for $40 worth of food at a restaurant. When you get to the restaurant, you realize you have lost the voucher. Would you pay $40 for your meal?

Choice #2: Imagine that you go to a restaurant planning to spend about $40 for your meal. As you arrive, you realize you have lost two $20 bills. Would you still go to dinner as planned?