9.2 Attention: The Portal to Consciousness

Imagine one of our prehistoric hunting-and-gathering ancestors foraging for certain nutritious roots. Most of her conscious attention is devoted to perceiving and analyzing visual stimuli in the soil that tell her where the roots might be. At the same time, however, at some level of her mind, she must monitor many other sensory stimuli. The slight crackling of a twig in the distance could signal an approaching tiger. The stirring of the infant on her back could indicate that the baby needs comforting before it begins to cry and attract predators. A subtle darkening of the sky could foretell a dangerous storm. On the positive side, a visual clue in the foliage, unrelated to stimuli she is focusing on, could indicate that an even more nutritious form of vegetation exists nearby.

5

What two competing needs are met by our attentional system? How do the concepts of preattentive processing and top-down control of the attentive gate pertain to these two needs?

Natural selection endowed us with mechanisms of attention that can meet two competing needs. One need is to focus mental resources on the task at hand and not be distracted by irrelevant stimuli. The other, opposing need is to monitor stimuli that are irrelevant to the task at hand and to shift attention immediately to anything that signals some danger or benefit that outweighs that task. Cognitive psychologists have been much concerned with the question of how our mind manages these two competing needs.

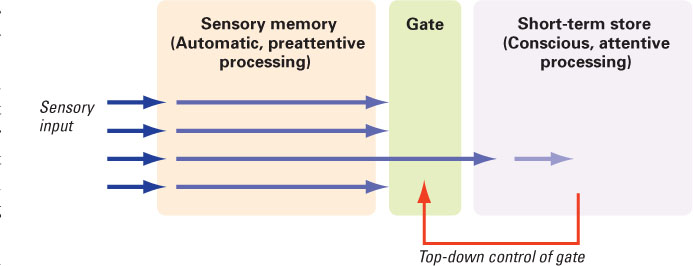

Figure 9.2 depicts a very general model, in which attention is portrayed as a gate standing between sensory memory and the short-term store. According to this model, all information that is picked up by the senses enters briefly into sensory memory and is analyzed to determine its relevance to the ongoing task and its potential significance for the person’s survival or well-being. That analysis occurs at an unconscious level and is referred to as preattentive processing. Logically, such processing must involve some comparison of the sensory input to information already stored in short-term or long-term memory. Without such comparison, there would be no basis for distinguishing what is relevant or significant from what is not. The portions of the brain that are involved in preattentive processing must somehow operate on the attention gate to help determine what items of information will be allowed to pass into the limited-capacity, conscious, short-term compartment at any given moment. In Figure 9.2, that top-down control is depicted by the arrow running from the short-term store compartment to the gate. The degree and type of preattentive processing that occurs, and the nature of the top-down control of the gate, are matters of much speculation and debate (Knudsen, 2007; Pashler, 1998).
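The gate model can be made concrete with a toy simulation. The sketch below is only an illustration of the logic in the preceding paragraph, not a model proposed by the researchers cited: the `Stimulus` class, the numeric relevance and significance scores, the `max` rule for combining them, and the capacity of three items are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Stimulus:
    label: str
    task_relevance: float  # hypothetical score, 0 to 1: relevance to the ongoing task
    significance: float    # hypothetical score, 0 to 1: danger or benefit it signals


def attention_gate(sensory_memory, capacity=3):
    """Return labels of the stimuli admitted to the short-term store.

    Every stimulus in sensory memory is scored (preattentive processing),
    and only the highest-priority items pass the limited-capacity gate.
    """
    def priority(stimulus):
        # Assumption: a stimulus gets through if it is EITHER task-relevant
        # OR significant, so its priority is the larger of the two scores.
        return max(stimulus.task_relevance, stimulus.significance)

    ranked = sorted(sensory_memory, key=priority, reverse=True)
    return [stimulus.label for stimulus in ranked[:capacity]]


# A made-up scene based on the foraging example that opens this section.
scene = [
    Stimulus("root-like shape in soil", 0.9, 0.2),
    Stimulus("twig crackling", 0.0, 0.95),
    Stimulus("rustling leaves", 0.1, 0.1),
    Stimulus("infant stirring", 0.0, 0.6),
    Stimulus("distant birdsong", 0.0, 0.05),
]

admitted = attention_gate(scene, capacity=3)
print(admitted)
# ['twig crackling', 'root-like shape in soil', 'infant stirring']
```

In this sketch the crackling twig passes the gate even though it has nothing to do with finding roots, which is the point of the second of the two competing needs: a task-irrelevant stimulus can still win access to consciousness if it is significant enough.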


With this general model in mind, let’s now consider some research findings that bear on the two competing problems that the attention system must solve: focusing attention narrowly on the task at hand and monitoring all stimuli for their potential significance.

The Ability to Focus Attention and Ignore the Irrelevant

6

What evidence from research shows that people very effectively screen out irrelevant sounds and sights when focusing on difficult perceptual tasks?

We are generally very good—in fact, surprisingly good—at attending to a relevant train of stimuli and ignoring stimuli that are irrelevant to the task we are performing. Here is some evidence for that.

Selective Listening

The pioneering research on attention, beginning in the 1940s and 1950s, centered on the so-called cocktail-party phenomenon, the ability to listen to and understand one person’s voice while disregarding other, equally loud or even louder voices nearby. In the laboratory, this ability is usually studied by playing recordings of two spoken messages at once and asking the subject to shadow one message—that is, to repeat immediately each of its words as they are heard—and ignore the other message. The experiments showed that people perform well at this as long as there is some physical difference between the two voices, such as in their general pitch levels or in the locations in the room from which they are coming (Hawkins & Presson, 1986; Haykin & Chen, 2005). When asked immediately after the shadowing task about the unattended voice, the subjects could usually report whether it was a woman’s voice or a man’s but were usually unaware of any of the message’s meaning or even whether the speaker switched to a foreign language partway through the session (Cherry, 1953; Cherry & Taylor, 1954).

Selective Viewing

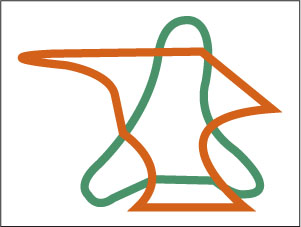

On the face of it, selective viewing seems to be a simpler task than selective listening; we can control what we see just by moving our eyes, whereas we have no easy control over what we hear. But we can also attend selectively to different, nearby parts of a visual scene without moving our eyes, as demonstrated in a classic experiment by Irvin Rock and Daniel Gutman (1981). These researchers presented, in rapid succession, a series of images to viewers whose eyes were fixed on a spot at the center of the screen. Each image contained two overlapping forms, one green and one red (see Figure 9.3), and subjects were given a task that required them to attend to just one color. Most of the forms were nonsense shapes, but some were shaped like a familiar object, such as a house or a tree. After viewing the sequence, subjects were tested for their ability to recognize which forms had been shown. The result was that they recognized most of the forms that had been presented in the attended color but performed only at chance level on those that had been presented in the unattended color, regardless of whether the form was nonsensical or familiar.


The most dramatic evidence of selective viewing comes from experiments in which subjects who are intent on a difficult visual task fail to see large, easily recognized objects directly in their line of sight. In one such experiment (Simons & Chabris, 1999), college students watched a 75-second video in which three black-shirted players tossed a basketball among themselves, and three white-shirted players tossed another basketball among themselves, while all six moved randomly around in the same small playing area. Each subject’s task was to count the number of passes made by one of the two groups of players while ignoring the other group. You can take this test for yourself. Before reading further about the results of this experiment, go to http://www.youtube.com/watch?v=vJG698U2Mvo or do an Internet search for “Simons & Chabris, 1999.” Watch the video, and count the number of times the players wearing white pass the basketball. Go ahead, we’ll wait.

Perhaps you noticed that midway through the video a woman dressed in a gorilla costume walked directly into the center of the two groups of players, faced the camera, thumped her chest, and then, several seconds later, continued walking across the screen and out of view. Remarkably, when questioned immediately after the video, 50 percent of the subjects claimed they had not seen the gorilla. When these subjects were shown the video again, without having to count passes, they expressed amazement—with such exclamations as, “I missed that?!”—when the gorilla came on screen.

Stage magicians and pickpockets have long made practical use of this phenomenon of inattentional blindness (Macknik et al., 2008). The skilled magician dramatically releases a dove with his right hand, while his left hand slips some new object into his hat. Nobody notices what his left hand is doing, even though it is in their field of view, because their attention is on the right hand and the dove. The skilled pickpocket creates a distraction with one hand while deftly removing your wallet with the other.

The Ability to Shift Attention to Significant Stimuli

There are limits to inattentional blindness. If our hunter-gatherer ancestors frequently missed real gorillas, or lions or tigers, because of their absorption in other tasks, our species would have become extinct long ago. Similarly, we would not be alive today if we didn’t shift our attention to unexpected dangers while crossing the street. We are good at screening out irrelevant stimuli when we need to focus intently on a task, but we are also good (although far from perfect) at shifting our attention to stimuli that signal danger or benefit or are otherwise significant to us.

Our ability to shift attention appears to depend, in part, on our capacity to listen or look backward in time and “hear” or “see” stimuli that were recorded a moment earlier in sensory memory. A major function of sensory memory, apparently, is to hold on to fleeting, unattended stimuli long enough to allow us to turn our attention to them and bring them into consciousness if they prove to be significant (Lachter et al., 2004). Here is some evidence for that idea.

Shifting Attention to Meaningful Information in Auditory Sensory Memory

7

How does sensory memory permit us to hear or see, retroactively, what we were not paying attention to? How have experimenters measured the duration of auditory and visual sensory memory?

Auditory sensory memory is also called echoic memory, and the brief memory trace for a specific sound is called the echo. Researchers have found that the echo fades over a period of seconds and vanishes within at most 10 seconds (Cowan et al., 2000; Gomes et al., 1999).

In a typical experiment on echoic memory, subjects are asked to focus their attention on a particular task, such as reading a prose passage that they will be tested on, and to ignore spoken words that are presented as they work. Occasionally, however, their work on the task is interrupted by a signal, and when that occurs they are to repeat the spoken words (usually digit names, such as “seven, two, nine, four”) that were most recently presented. Sometimes the signal comes immediately after the last word in the spoken list, and sometimes it comes after a delay of several seconds. The typical result is that subjects can repeat accurately the last few words from the spoken list if the signal follows immediately after the last word of the list, but performance drops off as the delay is increased, and it vanishes at about 8 to 10 seconds (Cowan et al., 2000). The subjective experience of people in such experiments is that they are only vaguely aware of the spoken words as they attend to their assigned task, but when the signal occurs, they can shift their attention back in time and “hear” the last few words as if the sounds were still physically present.


Shifting Attention to Meaningful Information in Visual Sensory Memory

Visual sensory memory is also called iconic memory, and the brief memory trace for a specific visual stimulus is called the icon. The first psychologist to suggest the existence of iconic memory was George Sperling (1960). In experiments on visual perception, Sperling found that when images containing rows of letters were flashed for one twentieth of a second, people could read the letters, as if they were still physically present, for up to one third of a second after the image was turned off. For instance, if an image was flashed containing three rows of letters and then a signal came on that told the subjects to read the third row, subjects could do that if the signal occurred within a third of a second after the image was flashed (see Figure 9.4). Such findings led Sperling to propose that a memory store must hold visually presented information, in essentially its original sensory form, for about a third of a second beyond the termination of the physical stimulus.
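The logic of this cueing procedure can be sketched in a few lines of code. This is a deliberately simplified illustration, not Sperling's analysis: the letter grid is invented, the function name `partial_report` is hypothetical, and the icon is treated as fully available up to one third of a second and completely gone afterward, whereas a real memory trace would fade gradually.

```python
ICON_DURATION_S = 1 / 3  # approximate duration of the icon, taken from the text


def partial_report(grid, cued_row, cue_delay_s):
    """Return the letters a subject could read from the cued row.

    Assumption: the icon is all-or-none. If the cue arrives while the icon
    is still available, the whole cued row can be read; otherwise nothing.
    """
    if cue_delay_s <= ICON_DURATION_S:
        return list(grid[cued_row])
    return []


# A hypothetical three-row display, flashed briefly and then removed.
grid = ["TDR", "SRN", "FZR"]

early = partial_report(grid, cued_row=2, cue_delay_s=0.1)  # cue within 1/3 s
late = partial_report(grid, cued_row=2, cue_delay_s=1.0)   # cue after icon fades

print(early)  # ['F', 'Z', 'R']
print(late)   # []
```

The key design point is that the cue arrives after the display is gone, so any successful report of the cued row must be read from memory rather than from the screen.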

A number of experiments have shown that people who are attending to one set of visual stimuli and successfully ignoring another set will tend to notice stimuli in the ignored set that have special meaning to them. For example, if the task is to identify names of types of animals in sets of words and to ignore pictures, people are more likely to notice a picture of an animal than other pictures (Koivisto & Revonsuo, 2007). Similarly, people commonly notice their own name in a set of stimuli that they are supposed to ignore (Frings, 2006; Mack et al., 2002). In such cases, preattentive processes apparently analyze the picture or name, recognize it as significant, and shift the person’s attention to it while it is still available in sensory memory.

Effect of Practice on Attentional Capacity

8

Can people improve their attentional capacity by playing action video games?

The gate between sensory memory and the short-term store is narrow: Only a few items of information can cross through it at any given instant. If an array of visual stimuli (such as digits or simple shapes) is flashed quickly on a screen, and then a bright patterned stimulus (called a masking stimulus) is immediately flashed so as to erase the iconic memory for the original array, most people can identify only about three of the stimuli in the original array. In the past, some psychologists regarded this limitation as an unalterable property of the nervous system, but others argued that the capacity to attend to several items at once can be increased with practice (Neisser, 1976). The best evidence that this capacity can indeed be improved comes from research on the effects of video-game playing.

In a series of experiments, C. Shawn Green and Daphne Bavelier (2003, 2006) compared the visual attentional capacity of young men who regularly played action video games with that of young men who never or rarely played such games. On every measure, the video-game players greatly outperformed the other group. In one test, for example, the video-game players correctly identified an average of 4.9 items in a briefly flashed array, compared to an average of 3.3 for the non-video-game players. In another test, in which target stimuli appeared in random places amid distracting stimuli on the video screen, video-game players correctly located twice as many target stimuli as did the nonplayers. The same researchers also showed that nonplayers improved dramatically on all measures of visual attention if they went through a training program in which they played an action video game (such as “Medal of Honor”) that required them to track known enemies while watching constantly for new enemies or other dangers.


Although this and other research (Feng et al., 2007) demonstrates that training can enhance attentional capacity, this is not an endorsement of the current societal trend of “multitasking,” such as doing homework while watching TV, or texting while listening to a lecture or while driving (see the discussion of texting and driving later in this chapter). In fact, research has shown that multitasking generally does not let people accomplish more things efficiently; rather, it impairs performance (Gingerich & Lineweaver, 2012).

Unconscious, Automatic Processing of Stimulus Input

The information-processing model depicted in Figure 9.1 (p. 322) is a useful beginning point for thinking about the mind, but it is far from complete. Perhaps its greatest deficiency is its failure to account for unconscious effects of sensory input. In the model, sensory information can influence behavior and consciousness only if it is attended to and enters the conscious short-term store compartment; otherwise, it is simply lost from the system. In fact, however, there is much evidence that sensory input can alter behavior and conscious thought without itself becoming conscious to the person. One means by which it can do this is called priming.

Unconscious Priming of Mental Concepts

Priming can be defined as the activation, by sensory input, of information that is already stored in long-term memory. The activated information then becomes more available to the person, altering the person’s perception or chain of thought. The activation is not experienced consciously, yet it influences consciousness. Most relevant for our present discussion, there is good evidence that such activation can occur even when the priming stimulus is not consciously perceived.

9

What is some evidence that concepts stored in long-term memory can be primed by stimuli that are not consciously perceived?

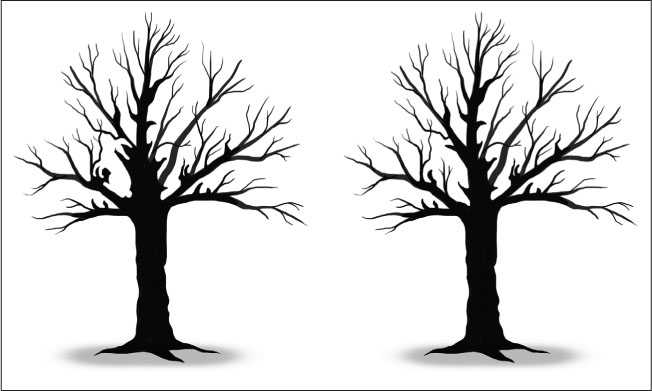

One of the earliest demonstrations of unconscious priming was an experiment in which researchers showed students either of two visual stimuli similar to those depicted in Figure 9.5 (Eagle et al., 1966). In this experiment, the left-hand stimulus contained the outline of a duck, formed by the tree trunk and its branches. The researchers found that subjects who were shown the duck-containing stimulus, in three 1-second flashes on a screen, did not consciously notice the duck. Yet when all subjects were subsequently asked to draw a nature scene, those who had been shown the duck-containing stimulus were significantly more likely to draw a scene containing a duck or a duck-related object (such as a pond) than were the other subjects.


Another early example involves a selective-listening procedure (MacKay, 1973). Subjects wore headphones that presented different messages to each ear and were instructed to shadow (repeat) what they heard in one ear and ignore what was presented to the other ear. The shadowed message included sentences, such as “They threw stones at the bank,” that contained words with two possible meanings. At the same time, the other ear was presented with a word that resolved the ambiguity (“river” or “money” in this example). After the shadowing task, the subjects were asked to choose from a pair of sentences the one that was most like the shadowed sentence. In the example just cited, the choice was between “They threw stones at the savings and loan association” and “They threw stones toward the side of the river.” Although the subjects could not report the nonshadowed word, they usually chose the sentence that was consistent with the meaning of that word. Thus, the unattended word influenced their interpretation of the shadowed message, even though they were unaware of having heard that word.

In everyday life, priming provides a means by which contextual information that we are not attending to can help us make sense of information that we are attending to. I might not consciously notice a slight frown on the face of a person I am listening to, yet that frown might prime my concept of sadness and cause me to experience more clearly the sadness in what he is saying. As you will discover later in the chapter, priming also helps us retrieve memories from our long-term store at times when those memories are most useful.

Automatic, Obligatory Processing of Stimuli

10

How is the concept of automatic, unconscious processing of stimuli used to help explain the Stroop interference effect?

A wonderful adaptive characteristic of the mind is its capacity to perform routine tasks automatically, which frees its limited, effortful, conscious working memory for more creative purposes or for dealing with emergencies. Such automatization depends at least partly on the mind’s ability to process relevant stimuli preattentively (unconsciously) and to use the results of that processing to guide behavior. When you were first learning to drive, for example, you probably had to devote most of your attention to such perceptual tasks as monitoring the car right ahead of you, watching for traffic signals, and manipulating the steering wheel, brake, and accelerator. With time, however, these tasks became automatic, allowing you to devote ever more attention to other tasks, such as carrying on a conversation or looking for a particular street sign.

Another example of a skill at which most of us are experts is reading. When you look at a common printed word, you read it automatically, without any conscious effort. You unconsciously (preattentively) use the word’s individual letters to make sense of the word. Because of that, when you read a book you can devote essentially all of your attention to the meaningful words and ideas in the book and essentially no attention to the task of recognizing the individual letters that make up the words. In fact, researchers have found that, in some conditions at least, reading is not only automatic but also obligatory (impossible to suppress). In certain situations, people can’t refrain from reading words that are in front of their open eyes, even if they try.

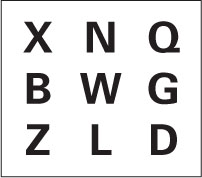

An often-cited demonstration of the obligatory nature of reading is the Stroop interference effect, named after J. Ridley Stroop (1935), who was the first to describe it. Stroop presented words or shapes printed in colored ink to subjects and asked them to name the ink color of each as rapidly as possible. In some cases each word was the name of the color in which it was printed (for example, the word red printed in red ink); in others it was the name of a different color (for example, the word blue printed in red ink); and in still others it was not a color name. Stroop found that subjects were slowest at naming the ink colors for words that named a color different from the ink color. To experience this effect yourself, follow the instructions in the caption of Figure 9.6.
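The three kinds of stimuli in this procedure can be expressed as a simple classification rule. The sketch below is illustrative only: the labels "congruent," "incongruent," and "neutral" are conventional shorthand rather than terms used in this section, and the set of color names and the sample trials are assumptions made for the example.

```python
# Assumed set of color words; a real experiment would define its own list.
COLOR_NAMES = {"red", "blue", "green", "yellow"}


def stroop_condition(word, ink_color):
    """Classify one trial by the relation between the word and its ink color."""
    word = word.lower()
    ink_color = ink_color.lower()
    if word not in COLOR_NAMES:
        return "neutral"       # the word is not a color name
    if word == ink_color:
        return "congruent"     # e.g., the word red printed in red ink
    return "incongruent"       # e.g., the word blue printed in red ink


# Hypothetical trials given as (printed word, ink color).
trials = [("red", "red"), ("blue", "red"), ("chair", "green")]
conditions = [stroop_condition(word, ink) for word, ink in trials]

print(conditions)  # ['congruent', 'incongruent', 'neutral']
```

In these terms, Stroop's finding is that color naming is slowest on the incongruent trials, where the word that is read conflicts with the ink color to be named.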


A variety of specific explanations of the Stroop effect have been offered (Luo, 1999; Melara & Algom, 2003), but all agree that it depends on people’s inability to prevent themselves from reading the color words. Apparently, the preattentive processes involved in reading are so automatic that we cannot consciously stop them from occurring when we are looking at a word. We find it impossible not to read a color name that we are looking at, and that interferes with our ability to think of and say quickly the ink color name when the two are different.

Brain Mechanisms of Preattentive Processing and Attention

For many years psychologists were content to develop hypothetical models about mental compartments and processes, based on behavioral evidence, without much concern about what was physically happening in the brain. In recent years, with the advent of fMRI and other new methods for studying the intact brain, that has changed. Many studies have been conducted to see how the brain responds during preattentive processing of stimuli, during attentive processing of stimuli, and at moments when shifts in attention occur. Much is still to be learned, but so far three general conclusions have emerged from such research:

11

What three general conclusions have emerged from studies of brain mechanisms of preattentive processing and attention?

- Stimuli that are not attended to nevertheless activate sensory and perceptual areas of the brain. Sensory stimuli activate specific sensory and perceptual areas of the cerebral cortex whether or not the person consciously notices those stimuli. Such activation is especially apparent in the primary sensory areas, but it also occurs, to some degree, in areas farther forward in the cortex that are involved in the analysis of stimuli for their meaning. For instance, in several fMRI studies, words that were flashed on a screen too quickly to be consciously seen activated neurons in portions of the occipital, parietal, and frontal cortex that are known, from other studies, to be involved in reading (Dehaene et al., 2001, 2004). Apparently, the unconscious, preattentive analysis of stimuli for meaning involves many of the same brain mechanisms that are involved in the analysis of consciously perceived stimuli.

- Attention magnifies the activity that task-relevant stimuli produce in sensory and perceptual areas of the brain, and it diminishes the activity that task-irrelevant stimuli produce. Attention, at the neural level, seems to be a process that temporarily sensitizes the relevant neurons in sensory and perceptual areas of the brain, increasing their responsiveness to the stimuli that they are designed to analyze, while having the opposite effect on neurons whose responses are irrelevant to the task (Reynolds et al., 2012). For example, in a task that requires attention to dots moving upward and inattention to dots moving downward, those neurons in the visual system that respond to upward motion become more responsive, and those neurons that respond to downward motion become less responsive, than they normally are (Yantis, 2008).

- Neural mechanisms in anterior (forward) portions of the cortex are responsible for control of attention. Many research studies have shown that areas in the frontal lobe and in anterior portions of the temporal and parietal lobes become active at moments when shifts in attention occur (Ruff et al., 2007; Shipp, 2004). Other studies have shown that the prefrontal cortex (the most anterior portion of the frontal lobe) is especially active during tasks, such as the Stroop task, that require intense concentration on relevant stimuli and screening out of irrelevant stimuli (van Veen & Carter, 2006). Such findings suggest that these anterior regions control attention by acting top-down on sensory and perceptual areas farther back in the cerebral cortex.


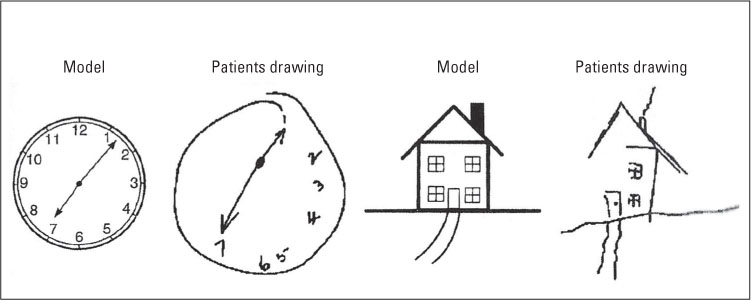

Other research has shown that some forms of brain damage can cause people to ignore, or not see, information in half of their visual field. Lesions in the parietal lobe, the frontal lobe, and the anterior cingulate cortex in one hemisphere can result in spatial neglect, with individuals being unable to “see” things in the contralateral visual field (the side opposite the brain injury) (Vallar, 1993). In such cases, a patient with a lesion on the left side of her brain may not see the food on the right side of her plate, the numbers on the right side of a clock, or the objects pictured on the right side of a photograph. Figure 9.7 shows drawings by a patient with spatial neglect. In both cases, the subject was asked to copy the model exactly (from Reynolds et al., 2012).

Attention is a state of the brain in which neural resources are shifted such that more resources are devoted to analyzing certain selected stimuli and fewer resources are devoted to analyzing other stimuli that are picked up by the senses. Apparently, the more neural activity a stimulus produces, the more likely it is that we will experience that stimulus consciously.

SECTION REVIEW

Attention is the means by which information enters consciousness.

Focused Attention

- Selective listening and viewing studies show that we can effectively focus attention, screening out irrelevant stimuli.

- In general, we are aware of the physical qualities, but not the meaning, of stimuli that we do not attend to.

Shifting Attention

- We unconsciously monitor unattended stimuli in sensory memory so that we can shift our attention if something significant occurs.

- Such monitoring includes preattentive processing for meaning.

- Practice can enhance the capacity to attend to several items of information at once.

Preattentive Processing

- Through preattentive processing, unattended sensory information can affect conscious thought and behavior.

- For example, in unconscious priming, stimuli that are not consciously perceived can activate information in long-term memory, which can influence conscious thought.

- Preattentive processing is automatic and in some cases obligatory, as exemplified by the Stroop interference effect.

Brain Mechanisms

- Many of the same brain areas are involved in preattentive processing and conscious processing of stimuli for meaning.

- However, attention causes greater activation of the relevant sensory and perceptual areas.

- Areas of the cerebral cortex anterior to (forward of) sensory and perceptual areas control shifts in attention.

- Lesions in one hemisphere of the brain can cause spatial neglect.
