Biological and Cognitive Aspects of Learning

In our discussion of the classical conditioning of emotional responses, we mentioned biological predispositions to learning certain fears. Humans seem prepared to learn fears of animals or heights much more easily than fears of toy blocks or curtains. Animals (snakes or spiders) are more dangerous to us than toy blocks, so such predispositions make evolutionary sense (Seligman, 1971). Are there any other predispositions that affect classical conditioning, and are there any biological constraints on operant conditioning? In addition to addressing these questions about the biological aspects of learning, we will also discuss cognitive research on latent learning and learning through modeling that questions whether reinforcement is necessary for learning. Let’s start with the biological research.

Biological Preparedness in Learning

Do you have any taste aversions? Have you stopped eating a certain food or drinking a certain liquid because you once got miserably sick after doing so? If you have a really strong aversion, you may feel ill even when you think about that food or drink. If the illness involved a specific food from a particular restaurant, you may have even generalized the aversion to the restaurant. Just as we are biologically prepared to learn certain fears more than others (Öhman & Mineka, 2001), we are also prepared to learn taste aversions. Our preparedness to learn to fear objects and situations dangerous to us (animals and heights) and to avoid foods and drinks that make us sick has adaptive significance. Such learning enhances our chances of survival. It makes biological sense then that we should be predisposed to such learning. To see how psychologists have studied such predispositions, let’s take a closer look at some of the early research on taste aversion.

Taste aversion.

John Garcia and his colleagues conducted some of the most important early research on taste aversion in the 1960s. Their research challenged the prevailing behaviorist argument that an animal’s capacity for conditioning is not limited by its biology (Garcia, 2003). Garcia benefited from an accidental discovery while studying the effects of radiation on rats (Garcia, Kimeldorf, Hunt, & Davies, 1956). The rats would be moved from their home cages to experimental chambers for the radiation experiments. The radiation made the rats nauseous, and they would get very sick later back in their home cages. The rats, however, would still go back into the experimental chambers where they had been radiated, but they would no longer drink the water in these chambers. Why? The water bottles in the chambers were made of a different substance than those in the home cages—plastic versus glass. So, the water the rats drank in the experimental chambers had a different taste than that in their home cages, and the rats had quickly learned an aversion to it. They paired the different taste with their later sickness. Rats do not have the cognitive capacity to realize that they were radiated and that it was the radiation that made them sick. Note that the rats did not get sick immediately following the drinking or the radiation. The nausea came hours later. This means that learning a taste aversion is a dramatic counterexample to the rule that the UCS (sickness) in classical conditioning must immediately follow the CS (the different-tasting water) for learning to occur (Etscorn & Stephens, 1973). In fact, if the CS-UCS interval is less than a few minutes, a taste aversion will not be learned (Schafe, Sollars, & Bernstein, 1995). This makes sense because spoiled or poisoned food typically does not make an animal sick until a longer time period has elapsed.

So how did Garcia and his colleagues use these taste aversion results for rats to demonstrate biological preparedness in learning? Garcia and Koelling (1966) showed that the rats would not learn such aversions for just any pairing of cue and consequences. Those that seemed to make more biological sense (different-tasting water paired with later sickness) were easily learned, but other pairings that didn’t make biological sense did not even seem learnable. For example, they examined two cues that were both paired with sickness through radiation: (1) sweet-tasting water, or (2) normal-tasting water accompanied by clicking noises and flashing lights when the rats drank. The rats who drank the sweet-tasting water easily learned the aversion to the water, but the rats who drank normal-tasting water with the accompanying clicking noises and flashing lights did not. The rats just couldn’t learn to pair these environmental auditory and visual cues with their later sickness; this pairing didn’t make any biological sense to the rats. It’s important for rats to learn to avoid food and water that will make them sick, but in a natural environment, noises and lights don’t typically cause sickness for rats.

This doesn’t mean that other animals might not be predisposed to learn auditory or visual aversions. For example, many birds, such as quail, seem to learn visual aversions rather easily. A clever study demonstrated this difference among animals in types of learning predispositions. In this study, both quail and rats drank dark blue, sour-tasting water prior to being made ill (Wilcoxon, Dragoin, & Kral, 1971). Later, the animals were given a choice between dark blue water that tasted normal and sour-tasting water that visually appeared normal. The birds only avoided the dark blue water, and the rats only avoided the sour-tasting water. In general, an animal is biologically predisposed to learn more easily those associations that are relevant to its environment and important to its survival (Staddon & Ettinger, 1989). Rats are scavengers, so they eat whatever is available. They encounter many novel foods, so it makes biological sense that they should be prepared to learn taste aversions easily to enhance their survival. Birds hunt by sight, so visual aversions are more relevant to their survival. There are also biological preparedness effects on operant conditioning. We’ll take a look at one of the most important—instinctual drift.

Instinctual drift.

Keller and Marian Breland, two of Skinner’s former students, discovered an important biological preparedness effect on operant conditioning (Breland & Breland, 1961). The Brelands, who became animal trainers, employed operant conditioning to train thousands of animals to do all sorts of tricks. In doing this training, they discovered what has become known as instinctual drift—the tendency of an animal to drift back from a learned operant response to an object to an innate, instinctual response. For example, the Brelands used food reinforcement to train some animals to pick up oversized coins and put them in a bank. The Brelands did this with both pigs and raccoons. However, they observed that once the coins became associated with the food reinforcement, both types of animal drifted back to instinctual responses that were part of their respective food-gathering behaviors. The pigs began to push the coins with their snouts, and the raccoons started to rub the coins together in their forepaws. These more natural responses interfered with the Brelands’ operant training.

The important point of these findings is that biologically instinctual responses sometimes limit or hinder our ability to condition other, less natural responses. The Brelands’ work demonstrates a biological preparedness effect upon operant conditioning. Biological predispositions show that animals will learn certain associations (those consistent with their natural behavior) more easily than others (those less consistent with their natural behavior). Also note that this “misbehavior” of the pigs and the raccoons (their instinctual responses) continued without reinforcement from the trainers. In fact, it prevented the animals from getting reinforcement. This aspect of the Brelands’ work relates to the more general question of whether we can learn without reinforcement, which we will discuss in the next section.

Latent Learning and Observational Learning

Cognitively oriented learning researchers are interested in the mental processes involved in learning. These researchers have examined the question of whether we can learn without reinforcement in their studies of latent learning and observational learning. We’ll consider some of the classic research on these two types of learning.

Latent learning.

Think about studying for an exam in one of your courses. What you have learned is not openly demonstrated until you are tested on it by the exam. You learn, but you do not demonstrate the learning until reinforcement for demonstrating it (a good grade on the exam) is available. This is an example of what psychologists call latent learning, learning that occurs but is not demonstrated until there is incentive to do so. This is what Edward Tolman was examining in his pioneering latent-learning research with rats.

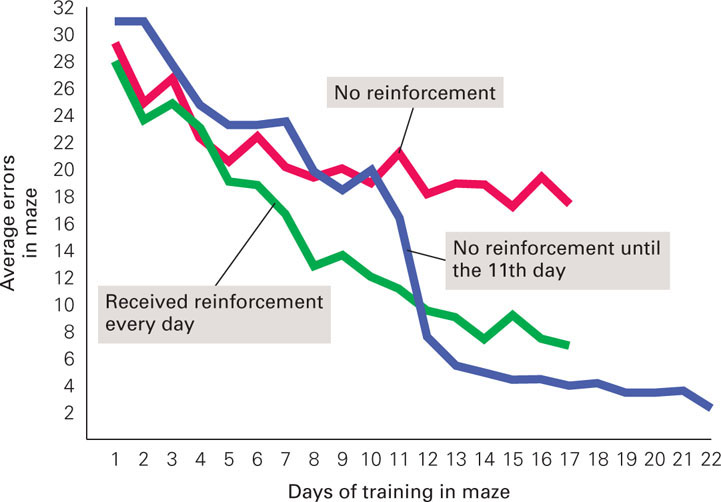

In this research, food-deprived rats had to negotiate a maze, and the number of wrong turns (errors) that a rat made was counted (Tolman & Honzik, 1930a, b, and c). In one study, there were three different groups of rats and about three weeks (one trial per day) of maze running. Food (reinforcement) was always available in the goal box at the maze’s end for one group but never available for another group. For the third group, there was no food available until the eleventh day. What happened? First, over the first 10 trials the number of wrong turns decreased, but it decreased much more rapidly for the group that always got food reinforcement. Second, the performance of the group that only started getting food reinforcement on the eleventh day improved immediately on the next day (and thereafter), equaling that of the group that had always gotten food reinforcement (see Figure 4.10). It appears that the third group of rats had been learning the maze all along, but did not demonstrate their learning until the food reinforcement was made available. The rats’ learning had been latent. They had learned a cognitive map (a mental representation) of the maze, and when they needed to use it (when reinforcement became available), they did. This explanation was tested further by blocking the optimal route to the goal box to see if the rats would use their cognitive map to take the next best route. The rats did.

Observational learning.

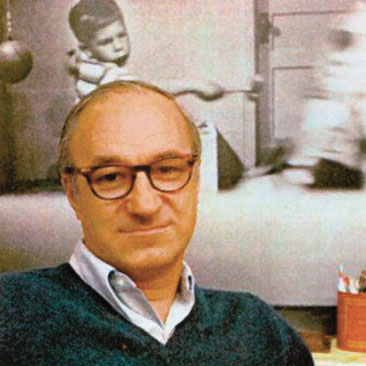

If such cognitive maps are within the abilities of rats, then it shouldn’t be surprising that much learning by humans doesn’t involve conditioning through direct experience. Observational learning (modeling)—learning by observing others and imitating their behavior—plays a major role in human learning (Bandura, 1973). For example, observational learning helps us learn how to play sports, write the letters of the alphabet, and drive a car. We observe others and then do our best to imitate their behavior. We also often learn our attitudes and appropriate ways to act out our feelings by observing good and bad models. Today’s sports and movie stars are powerful models for such learning.

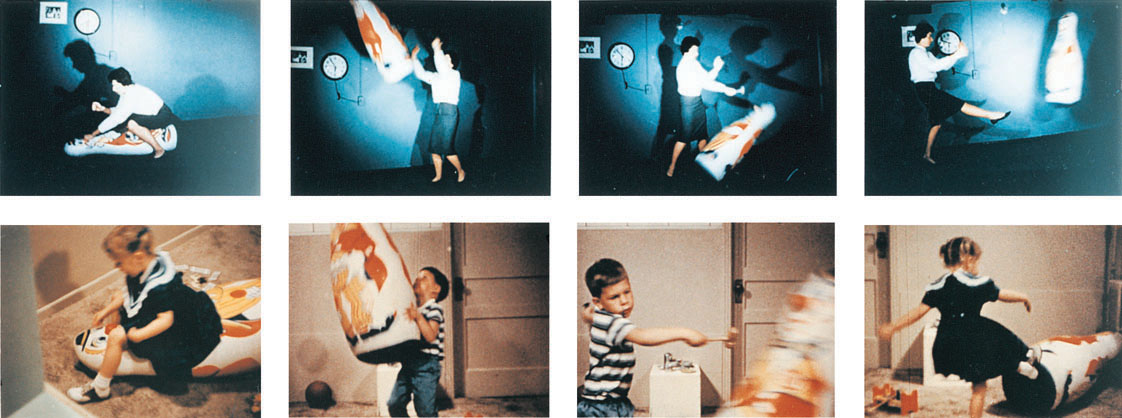

Albert Bandura’s famous experiments on learning through modeling involved a Bobo doll (a large inflated clown doll) and kindergarten-age children as participants (Bandura, 1965; Bandura, Ross, & Ross, 1961, 1963a, 1963b). In one experiment, some of the children in the study were exposed to an adult who beat, kicked, and yelled at the Bobo doll. After observing this behavior, each child was taken to a room filled with many appealing toys, but the experimenter upset the child by saying that these toys were being saved for other children. The child was then taken to a room that contained a few other toys, including the Bobo doll. Can you guess what happened? The child started beating on the Bobo doll just as the adult model had done. The children even repeated the same words that they heard the model use earlier while beating the doll. Children who had not observed the abusive adult were much less likely to engage in such behavior.

But what would happen if a child were exposed to a model who acted gently toward the Bobo doll? In another experiment, Bandura had children observe either an aggressive model, a gentle model, or no model. What happened when the children were allowed to play in a toy room that included a Bobo doll? The children exposed to the aggressive model acted more aggressively toward the doll than the children with no model, and the children with the gentle model acted more gently toward the doll than the children with no model. In general, the children’s behavior with the doll was guided by their model’s behavior. But the models in these experiments were not reinforced or punished for their behavior. Would this make a difference?

Bandura examined this question in another experiment. The young children watched a film of an adult acting aggressively toward the Bobo doll, but there were three different versions of the film. In one version, the adult’s behavior was reinforced; in another, the behavior was punished; and in the third, there were no consequences. Each child’s interactions with the Bobo doll varied depending upon which film the child had seen. The children who had watched the adult get reinforced for aggressive behavior acted more aggressively toward the Bobo doll than those who had seen the model act with no consequences. In addition, the children who had watched the adult get punished were less likely to act aggressively toward the doll than the children who had seen the model act with no consequences. The children’s behavior was affected by the consequences witnessed in the film. Then Bandura cleverly asked the children if they could imitate the behavior in the film for reinforcement (snacks). Essentially all of the children could do so. This is an important point. It means that the children all learned the behavior through observation regardless of whether the behavior was reinforced, punished, or neither.

Much of the research on observational learning since Bandura’s pioneering studies has focused on the question of whether exposure to violence in media leads people to behave more aggressively. There is clearly an abundance of violence on television and in other media. It has been estimated that the average child has viewed 8,000 murders and 100,000 other acts of violence on television alone by the time they finish elementary school (Huston et al., 1992), and more recent research indicates that the amount of violence on television and in other media has increased since Huston et al.’s study. For example, the Parents Television Council (2007) found that violence in prime-time television increased 75 percent between 1998 and 2006. In addition, the National Television Violence Study evaluated almost 10,000 hours of broadcast programming from 1995 through 1997 and found that 61 percent of the programming portrayed interpersonal violence, much of it in an entertaining or glamorized manner, and that the highest proportion of violence was in children’s shows (American Academy of Pediatrics, 2009). But do we learn to be more aggressive from observing all of this violence on television and in other media?

Literally hundreds of studies have addressed this question, and as you would expect, the findings are both complicated and controversial. Leading scientists reviewing this literature, however, came to the following general conclusion: “Research on violent television and films, video games, and music reveals unequivocal evidence that media violence increases the likelihood of aggressive and violent behavior in both immediate and long-term contexts” (Anderson et al., 2003). Similarly, the authors of a recent meta-analysis of over 130 studies of video game play with 130,296 participants across Eastern and Western cultures concluded that exposure to violent video games is a causal risk factor for increased aggressive behavior, aggressive cognition, and aggressive affect and for decreased empathy and prosocial behavior (Anderson et al., 2010). Huesmann (2010, p. 179) argues that this meta-analysis “proves beyond a reasonable doubt that exposure to video game violence increases the risk that the observer will behave more aggressively and violently in the future” (cf. Ferguson & Kilburn, 2010). Finally, based on a review of research findings on the exposure to violence in television, movies, video games, cell phones, and on the Internet since the 1960s, Huesmann (2007) concluded that such exposure significantly increases the risk of violent behavior on the viewer’s or game player’s part, and that the size of this effect is large enough to be considered a public health threat (cf. Ferguson & Kilburn, 2009). Among the effect sizes for many common threats to public health given in Bushman and Huesmann (2001), only one is greater than that for exposure to media violence and aggression: the effect size for smoking and lung cancer.

The empirical evidence linking exposure to media violence to increased risk of aggressive behavior seems clear-cut (e.g., Murray, 2008; cf. Grimes, Anderson, & Bergen, 2008). However, as with other learned behaviors, many factors at the individual, family, and broader community levels contribute to the development of aggression. We must also remember that media exposure is not a necessary and sufficient cause of violence—not all viewers will be led to violence, with some more susceptible than others; but this is also true for other public health threats, such as exposure to cigarette smoke and the increased risk of lung cancer. It is also very difficult to determine whether cumulative exposure leads to increased aggression over the long term (cf. Hasan, Bègue, Scharkow, & Bushman, 2013). Regardless, it seems clear that exposure to media violence is a risk factor for aggression. In this vein, the American Academy of Pediatrics (2009, p. 1222) concluded that “exposure to violence in media, including television, movies, music, and video games, represents a significant risk to the health of children and adolescents. Extensive research evidence indicates that media violence can contribute to aggressive behavior, desensitization to violence, nightmares, and fear of being harmed.”

Recent research has also identified neurons that provide a neural basis for observational learning. These mirror neurons are neurons that fire both when performing an action and when observing another person perform that same action. When you observe someone engaging in an action, similar mirror neurons are firing in both your brain and the other person’s brain. Thus, these neurons in your brain are “mirroring” the behavior of the person you are observing. Mirror neurons were first discovered in macaque monkeys via electrode recording by Giacomo Rizzolatti and his colleagues at the University of Parma in the mid-1990s (Iacoboni, 2009b), but it is not normally possible to study single neurons in humans because that would entail attaching electrodes directly to the brain; therefore, most evidence is indirect. For example, using fMRI and other scanning techniques, neuroscientists have observed that the same cortical areas in humans are involved when performing an action and observing that action (Rizzolatti & Craighero, 2004). These areas are referred to as mirror neuron systems because groups of neurons firing together rather than single neurons firing separately were observed in these studies. Recent research, however, has provided the first direct evidence for mirror neurons in humans by recording the activity of individual neurons (Mukamel, Ekstrom, Kaplan, Iacoboni, & Fried, 2010). Mukamel et al. recorded from the brains of 21 patients who were being treated for intractable epilepsy. The patients had been implanted with electrodes to identify seizure locations for surgical treatment. With the patients’ consent, the researchers used the same electrodes for their study. They found neurons that behaved as mirror neurons, showing their greatest activity both when the patients performed a task and when they observed a task. We now know for sure that humans have mirror neurons (Keysers & Gazzola, 2010).

Because both human and nonhuman primates learn much through observation and imitation, the mirror neuron systems provide a way through which observation can be translated into action (Cattaneo & Rizzolatti, 2009; Iacoboni, 2005, 2009b). In addition to providing a neural basis for observational learning, researchers speculate that mirror neuron systems may also play a major role in empathy and understanding the intentions and emotions of others (Iacoboni, 2009a) and represent the basic neural mechanism from which language evolved (Rizzolatti & Arbib, 1998; Rizzolatti & Craighero, 2004). Some researchers even think that there may be a link between mirror neuron deficiency and social disorders, namely autism, in which individuals have difficulty in social interactions (Dapretto et al., 2006). Lastly, mirror neurons have proved useful in the rehabilitation of motor deficits in people who have had strokes (Ertelt et al., 2007). For example, patients who watched videos of people demonstrating various arm and hand movements (action observation) improved faster than those who did not watch the videos (Binkofski & Buccino, 2007). Mirror neurons are also likely involved in observation inflation, in which observing someone performing an action induces false memories of having actually performed the action yourself (Lindner, Echterhoff, Davidson, & Brand, 2010).

Observational learning, like latent learning, emphasizes the role of cognitive processes in learning. As demonstrated by our examples, just as Tolman’s rats seemed to have a cognitive map of the maze, Bandura’s children seemed to have a cognitive model of the actions of the adult models and their consequences. Cognitive psychologists have studied the development of such mental representations and their storage in memory and subsequent retrieval from memory in their attempts to understand how the human memory system works. We will take a detailed look at the human memory system in Chapter 5.

Section Summary

In this section, we learned about some of the effects of biological preparedness on learning and about latent learning and observational learning in which direct reinforcement is not necessary. Research on taste aversion indicated that rats could easily learn aversion to different-tasting water when it was paired with later sickness, but could not learn to pair auditory and visual cues with such sickness. This finding indicates that animals may be biologically predisposed to learn those associations that are important to their survival more easily than arbitrary associations. Similarly, the Brelands found in their animal training work that animals drift back to their instinctual responses from conditioned arbitrary ones, a phenomenon called instinctual drift. Findings such as these highlight the impact of biological predispositions upon learning.

Research has shown that reinforcement is not necessary for learning to occur. Tolman’s research with rats running mazes showed that without reinforcement, rats could learn a cognitive map of the maze that they could then use very efficiently when reinforcement became available at the end of the maze. This was an instance of latent learning, learning that is not demonstrated until there is an incentive to do so. Albert Bandura’s pioneering research on observational learning showed that much of human learning also doesn’t involve direct experience. The children in his study all learned a model’s behavior through observation, regardless of whether the behavior was reinforced, punished, or neither. Research in observational learning since Bandura’s studies has extended his findings by linking exposure to media violence to an increased likelihood of aggression in viewers. Other recent research by neuroscientists has led to the discovery of mirror neuron systems that provide a neural basis for observational learning.

ConceptCheck | 3

Explain why the ease of learning taste aversions is biologically adaptive for humans.

Learning taste aversions quickly and easily is adaptive because it increases our chances of survival. If we eat or drink something that makes us terribly sick, it is adaptive to no longer ingest that food or drink because we might die. We have a greater probability of surviving if we learn such aversions easily.

Given Garcia and Koelling’s (1966) findings (discussed in this section) for rats’ pairing the cues of sweet-tasting water and normal-tasting water accompanied by clicking noises and flashing lights when the rats drank with a sickness consequence, what do you think they observed when they paired these two cues with an immediate electric shock consequence instead of a sickness consequence? Justify your answer in terms of biological preparedness in learning.

The rats easily learned (stopped drinking the water) when the normal-tasting water accompanied by clicking noises and flashing lights was paired with immediate electric shock, but they did not when the sweet-tasting water cue was paired with this consequence. In terms of biological preparedness, the former pairing makes biological sense to the rats whereas the latter pairing does not. In a natural environment, audiovisual changes typically signal possible external dangers, but sweet-tasting water typically does not. Pairing external cues (noises and flashing lights) with an external dangerous consequence (shock) makes more biological sense than pairing an internal cue (taste) with an external consequence (shock). For learning to occur, external cues should be paired with external consequences, and internal cues with internal consequences.

Explain why it would be easier to operantly condition a behavior that is “natural” for an animal than one that isn’t natural.

It would be easier to operantly condition a “natural” response because it would lower the probability that instinctual drift will interfere with the conditioning. Because an animal would already be making its natural response to the object, there would be no other response to drift back to. In addition, the natural response to the object would be easier to shape because it would be given sooner and more frequently than an unnatural response.

Explain the relationship between latent learning and reinforcement.

Latent learning occurs without direct reinforcement, but such learning is not demonstrated until reinforcement is made available for the learned behavior.

Explain how reinforcing and punishing models influenced observers in Bandura’s research.

In Bandura’s work, reinforcing the model increased the probability that the observed behavior would be displayed, and punishing the model decreased the probability that the observed behavior would be displayed. But Bandura demonstrated in both cases that the behavior was learned. The reinforcement or punishment only affected whether it was displayed.
