How Others Influence Our Behavior

Social influence research examines how other people and the social forces they create influence an individual’s behavior. There are many types of social influence, including conformity, compliance, obedience, and group influences. In this section, we will discuss all of these types of social influence. We start with conformity.

Why We Conform

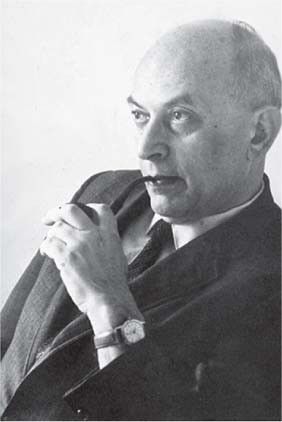

Conformity is usually defined as a change in behavior, belief, or both to align with a group norm as a result of real or imagined group pressure. The word conformity has a negative connotation. We don’t like to be thought of as conformists. However, conformity research indicates that humans have a strong tendency to conform. To understand the two major types of social influence leading to conformity (informational social influence and normative social influence), we’ll consider two classic studies on conformity: Muzafer Sherif’s study using the autokinetic effect and Solomon Asch’s study using a line-length judgment task.

The Sherif study and informational social influence.

In Sherif’s study, the participants thought that they were part of a visual perception experiment (Sherif, 1937). Participants in completely dark rooms were exposed to a stationary point of light and asked to estimate the distance the light moved. Thanks to an illusion called the autokinetic effect, a stationary point of light appears to move in a dark room because there is no frame of reference and our eyes spontaneously move. How far and in what direction the light appears to move varies widely among different people. During the first session in Sherif’s study, each participant was alone in the dark room when making his judgments. Then, during the next three sessions, he was in the room with two other participants and could hear the others’ estimates of the illusory light movement. What happened?

Participants’ individual estimates varied greatly during the first session. Over the next three group sessions, however, the individual estimates converged on a common group norm. See Figure 9.1. All of the participants in the group ended up making essentially the same estimates. What do you think would happen if you brought the participants back a year later and had them make estimates again while alone? Would their estimates revert to their earlier individual estimates or stay at the group norm? Surprisingly, they stayed at the group norm (Rohrer, Baron, Hoffman, & Swander, 1954).

To understand why conformity was observed in Sherif’s study, we need to consider informational social influence. This effect stems from our desire to be right in situations in which the correct action or judgment is not obvious and we need information. In Sherif’s study, participants needed information because of the illusory nature of the judgment; thus, their conformity was due to informational social influence. When a task is ambiguous or difficult and we want to be correct, we look to others for information. But what about conformity when information about the correct way to proceed is not needed? To understand this type of conformity, we need to consider Asch’s study on line-length judgment and normative social influence.

The Asch study and normative social influence.

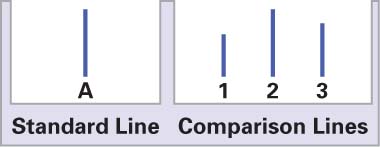

Student participants in Asch’s study made line-length judgments similar to the one in Figure 9.2 (Asch, 1955, 1956). These line-length judgments are easy. When participants are alone when making these judgments, they almost never make mistakes.

But in Asch’s study, they were not alone. There were others in the room who were Asch’s student confederates but who were playing the role of participants. Across various conditions in the study, the number of confederate participants varied from one to 15. On each trial, judgments were made orally, and Asch structured the situation so that confederate participants responded before the actual participant. Seating was arranged so that the actual participant was the next to last to respond. The confederate participants purposely made judgmental errors on certain trials. Asch wondered what the actual participant would do when confronted with other participants unanimously voicing an obviously incorrect judgment. For example, if all of the other participants said that “1” was the answer to the sample judgment in Figure 9.2, what would you say?

Surprisingly, a large number of the actual participants conformed to the obviously incorrect judgments offered by the confederates. About 75 percent of the participants conformed some of the time, and overall, participants conformed 37 percent of the time. Asch’s results have been replicated many times and in many different countries (Bond & Smith, 1996). The correct answers are clear in the Asch judgment task, and there are no obvious reasons to conform. The students in the experimental room didn’t know each other, and they were all of the same status. The judgment task was incredibly easy. Why, then, was conformity observed?

The reason for the conformity in Asch’s study is normative social influence, an effect stemming from our desire to gain the approval and to avoid the disapproval of others. We change our behavior to meet the expectations of others and to get the acceptance of others. We go along with the crowd. But Asch, who died in 1996, always wondered whether the subjects who conformed did so only out of normative needs, knowing that their answers were wrong, or whether the social pressure of the situation actually changed their perceptions to agree with the group; that is, whether what the confederates said changed what the participants saw (Blakeslee, 2005). Berns et al. (2005) found some evidence that the latter may be the case. Using fMRI (see Chapter 2), they scanned subjects’ brain activity while the subjects participated in an Asch-type conformity experiment that employed a mental rotation task instead of a line-length judgment task. As in Asch’s studies, conformity was observed: subjects gave the group’s incorrect answers (conformed) 41 percent of the time. Surprisingly, the brain activity for conforming responses was in the cortical areas dedicated to visual and spatial awareness, regions devoted to perception. In contrast, the brain activity for independent responses was in areas associated with emotion, such as the amygdala, suggesting that nonconformity carries an emotional cost. These findings led the study’s lead author, Gregory Berns, to conclude that, “We like to think that seeing is believing, but the study’s findings show that seeing is believing what the group tells you to believe” (Blakeslee, 2005). This conclusion seems too strong, given how difficult it is to determine the exact nature of the visual processing that produced the activity in these brain areas. While it can be concluded that different brain areas were active during conforming versus nonconforming responding, more research is needed before it can be concluded that the conformers actually changed their perceptions.

Situational, cultural, and gender factors that impact conformity.

Asch and other conformity researchers have found many situational factors that affect whether we conform. Let’s consider three. (1) Unanimity of the group is important. It’s difficult to be a minority of one, but much less so to be a minority of two. For example, Asch found that the amount of conformity drops considerably if just one of the confederate participants gives an answer, right or wrong, that differs from the rest of the group. (2) The mode of responding (voting aloud versus secret ballot) is also important. In Asch’s study, if the actual participant did not have to respond aloud after hearing the group’s responses, but rather could write her response, the amount of conformity dropped dramatically. So, in the various groups to which you belong, be sure to use secret ballots when voting on issues if you want the true opinions of your group members. (3) Finally, more conformity is observed in a person who is of lesser status than the other group members or who is attracted to the group and wants to be a part of it. These factors are especially effective in driving conformity when there is a probationary period for attaining group membership.

Cultural factors also seem to impact the amount of conformity that is observed. Bond and Smith (1996) conducted a meta-analysis of 133 conformity studies drawn from 17 countries using an Asch-type line-length judgment task to investigate whether the level of conformity has changed over time and whether it is related cross-culturally to individualism–collectivism. Broadly defined, individualism emphasizes individual needs and achievement. Collectivism, in contrast, emphasizes group needs, thereby encouraging conformity and discouraging dissent from the group. Analyzing just the studies conducted in the United States, they found that the amount of conformity has declined since the 1950s, paralleling the change in our culture toward more individualism. In addition, they found that collectivist countries (e.g., Hong Kong) tended to show higher levels of conformity than individualist countries (e.g., the United States). Thus, whereas Asch’s basic conformity findings have been replicated in many different countries, cultural factors do play a role in determining the amount of conformity that is observed.

In their meta-analysis, Bond and Smith (1996) also found evidence for gender differences in conformity. They observed a higher level of conformity for female participants, which is consistent with earlier reviews of conformity studies (e.g., Eagly & Carli, 1981). They also found that this gender difference in conformity had not narrowed over time. Mori and Arai (2010) recently replicated this gender difference using a clever presentation technique, called the fMORI technique, that eliminates the need for confederates. This technique allowed the researchers to present different stimuli to the minority participants and the majority participants on critical trials without their awareness. The top part of the standard lines appeared in either green or magenta, and because the two groups of participants wore different types of polarizing sunglasses that filtered either green or magenta, the lines appeared longer to one group and shorter to the other. Mori and Arai’s study, with its new presentation technique, testifies to the continuing interest in Asch’s conformity research, which is more than half a century old.

Why We Comply

Conformity is a form of social influence in which people change their behavior or attitudes in order to adhere to a group norm. Compliance is acting in accordance with a direct request from another person or group. Think about how often others (your parents, roommates, friends, salespeople, and so on) make requests of you. Social psychologists have identified many different techniques that help others to achieve compliance with such requests. Salespeople, fundraisers, politicians, and anyone else who wants to get people to say “yes” use these compliance techniques. After reading this section you should be much more aware of how other people, especially salespeople, attempt to get you to comply with their requests. Of course, you’ll also be better equipped to get other people to comply with your requests. As we discuss these compliance techniques, note how each one involves two requests, and how it is the second request with which the person wants compliance. We’ll start our discussion with a compliance technique that you have probably encountered: the foot-in-the-door technique.

The foot-in-the-door technique.

In the foot-in-the-door technique, compliance with a large request is gained by preceding it with a very small request. The tendency is for people who have complied with the small request to comply with the next, larger request. The principle is simply to start small and build. One classic experimental demonstration of this technique involved a large, ugly sign about driving carefully (Freedman & Fraser, 1966). People were asked directly if this ugly sign could be put in their front yards, and the vast majority of them refused. However, the majority of the people who had complied with a much smaller request two weeks earlier (for example, to sign a safe-driving petition) agreed to have the large, ugly sign put in their yard. The smaller request had served as the “foot in the door.”

In another study, people who were first asked to wear a pin publicizing a cancer fund-raising drive and then later asked to donate to a cancer charity were far more likely to donate to the cancer charity than were people who were asked only to contribute to the charity (Pliner, Hart, Kohl, & Saari, 1974). Why does the foot-in-the-door technique work? Its success seems to be partially due to our behavior (complying with the initial request) changing our attitudes, making us more positive about helping and leading us to view ourselves as generally charitable people. In addition, once we have made a commitment (such as signing a safe-driving petition), we feel pressure to remain consistent (putting up the large, ugly sign) with this earlier commitment.

This technique was used by the Chinese Communists during the Korean War to indoctrinate prisoners of war in Communism (Ornstein, 1991). Many prisoners returning after the war had praise for the Chinese Communists. This attitude had been cultivated by having the prisoners first do small things like writing out some questions and then the pro-Communist answers, which they might just copy from a notebook, and then later writing essays in the guise of students summarizing the Communist position on various issues such as poverty. Just as the participants’ attitudes changed in the Freedman and Fraser study and they later agreed to put the big, ugly sign in their yard, the POWs became more sympathetic to the Communist cause. The foot-in-the-door technique is very powerful. Watch out for compliance requests of increasing size. Say no before it is too late to do so.

The door-in-the-face technique.

The door-in-the-face technique is the opposite of the foot-in-the-door technique (Cialdini, Vincent, Lewis, Catalan, Wheeler, & Danby, 1975). In the door-in-the-face technique, compliance is gained by starting with a large, unreasonable request that is turned down and following it with a more reasonable, smaller request. The person who is asked to comply appears to be slamming the door in the face of the person making the large request. It is the smaller request, however, that the person making the two requests wants all along. For example, imagine that one of your friends asked you to watch his pet for a month while he is out of town. You refuse. Then your friend asks for what he really wanted, which was for you to watch the pet over the following weekend. You agree. What has happened? You’ve succumbed to the door-in-the-face technique.

The success of the door-in-the-face technique is probably due to our tendency toward reciprocity, that is, making mutual concessions. The person making the requests appears to have made a concession by moving to the much smaller request. Shouldn’t we reciprocate and comply with this smaller request? Fear that others won’t view us as fair, helpful, and concerned for others likely also plays a role in this compliance technique’s success. The door-in-the-face technique may even have been involved in G. Gordon Liddy’s getting the Watergate burglary approved by the Committee to Reelect the President (CREEP) (Cialdini, 1993). The committee approved Liddy’s plan with a bare-bones $250,000 budget, after it had rejected plans with proposed budgets of $1 million and $500,000. The only committee member who opposed approval had not been present for the two earlier, more costly proposal meetings. Thus, he was able to see the irrationality of the plan and was not subject to the door-in-the-face reciprocity influence felt by the other committee members.

The low-ball technique.

Consider the following scenario (Cialdini, 1993). You go to buy a new car. The salesperson gives you a great price, much better than you ever imagined. You go into the salesperson’s office and start filling out the sales forms and arranging for financing. The salesperson then says that she forgot that, before completing the forms, she has to get approval from her sales manager. She leaves for a few minutes and returns looking rather glum. She says, regretfully, that the sales manager said that he couldn’t give you that great price you thought you had. The sales price has to be a higher one. What do most people do in this situation? You probably are thinking that you wouldn’t buy the car. However, research on this compliance tactic, called the low-ball technique, indicates that it does work: people buy the car at the higher price (Cialdini, Cacioppo, Bassett, & Miller, 1978).

In the low-ball technique, compliance with a costly request is achieved by first getting compliance with an attractive, less costly request and then reneging on it. This is similar to the foot-in-the-door technique in that a second, larger request is the one desired. In the low-ball technique, however, the first request is one that is very attractive to you. You are not making a concession (as in the foot-in-the-door technique), but rather getting a good deal. However, the “good” part of the deal is then taken away. Why does the low-ball technique work? The answer is that many of us feel obligated to go through with the deal after we have agreed to the earlier deal (request), even if the deal has changed for the worse. This is similar to the pressure we feel to remain consistent in our commitment that helps drive the foot-in-the-door technique. So remember, if somebody tries to use the low-ball technique on you, walk away. You are not obligated to comply with the new request.

The that’s-not-all technique.

There’s another compliance technique that is often used in television infomercials. Just after the price for the product is given and before you can decide yes or no about it, the announcer says, “But wait, that’s not all, there’s more,” and the price is lowered or more merchandise is included, or both, in order to sweeten the deal. Sometimes an initial price is not even given. Rather, the announcer says something like, “How much would you pay for this incredible product?” and then goes on to sweeten the deal before you can answer. As in the low-ball technique, the final offer is the one that was planned from the start. However, you are more likely to comply and take the deal after all of the buildup than if this “better” deal were offered directly (Burger, 1986). This technique is called the that’s-not-all technique: to gain compliance, a planned second request with additional benefits is made before a response to a first request can be made. Salespeople use this technique, just as they use the door-in-the-face technique. For example, before you can answer yes or no to a price offered by a car salesperson, he throws in some “bonus options” for the car. As in the door-in-the-face technique, reciprocity is at work here. The seller has made a concession (the bonus options), so shouldn’t you reciprocate by taking the offer, complying? We often do.

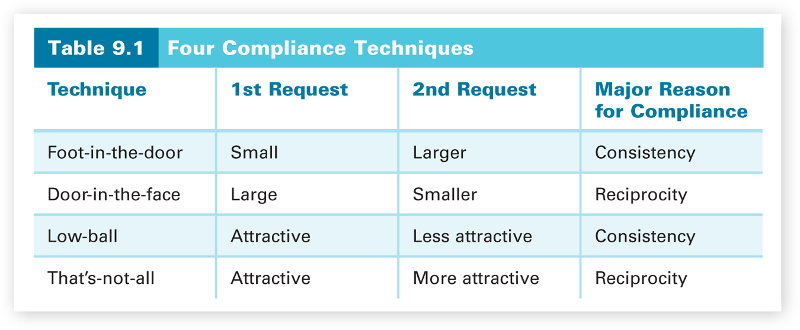

In summary, each of these compliance techniques involves two requests (see Table 9.1). In the foot-in-the-door technique, a small request is followed by a larger request. In the door-in-the-face technique, a large request is followed by a smaller request. In the low-ball technique, an attractive first request is taken back and followed by a less-attractive request. In the that’s-not-all technique, a more attractive request is made before a response can be given to an initial request. In all cases, the person making the requests is attempting to manipulate you with the first request. It is the second request for which compliance is desired. The foot-in-the-door and low-ball techniques both lead to commitment to the first request with the hope that the person will feel pressure to remain true to his initial commitment and accede to the second request. The other two techniques involve reciprocity. Once the other person has made a concession (accepted our refusal in the door-in-the-face technique) or done us a favor (an even better deal in the that’s-not-all technique), we think we should reciprocate and accede to the second request.

Why We Obey

Compliance is agreeing to a request from a person. Obedience is following the commands of a person in authority. Obedience is sometimes constructive and beneficial to us. It would be difficult for a society to exist, for instance, without obedience to its laws. Young children need to obey their caretakers for their own well-being. Obedience can also be destructive, though. There are many real-world examples of its destructive nature. Consider Nazi Germany, in which millions of innocent people were murdered, or the My Lai massacre in Vietnam, in which American soldiers killed hundreds of innocent children, women, and old people. In the My Lai massacre, the soldiers were ordered to shoot the innocent villagers, and they did. The phrase “I was only following orders” usually surfaces in such cases. When confronted with these atrocities, we wonder what type of person could do such horrible things. Often, however, it’s the situational social forces, and not the person, that should bear more of the responsibility for the actions. Just as we found that situational factors lead us to conform and comply, we find that social forces can lead us to commit acts of destructive obedience.

Milgram’s basic experimental paradigm.

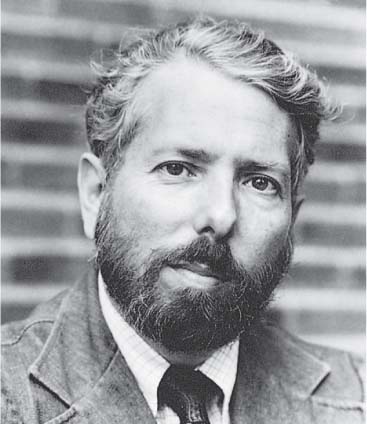

The experiments that point to social forces as causes of destructive obedience—probably the most famous and controversial ones in psychology—are Stanley Milgram’s obedience studies done at Yale University in the early 1960s (Milgram, 1963, 1965, 1974). We will describe Milgram’s experimental paradigm and findings in more detail than usual. It is also worth noting that Milgram was one of Asch’s graduate students and actually got the initial idea for his obedience studies from conducting some of Asch’s conformity studies (Blass, 2004). What exactly did Milgram do?

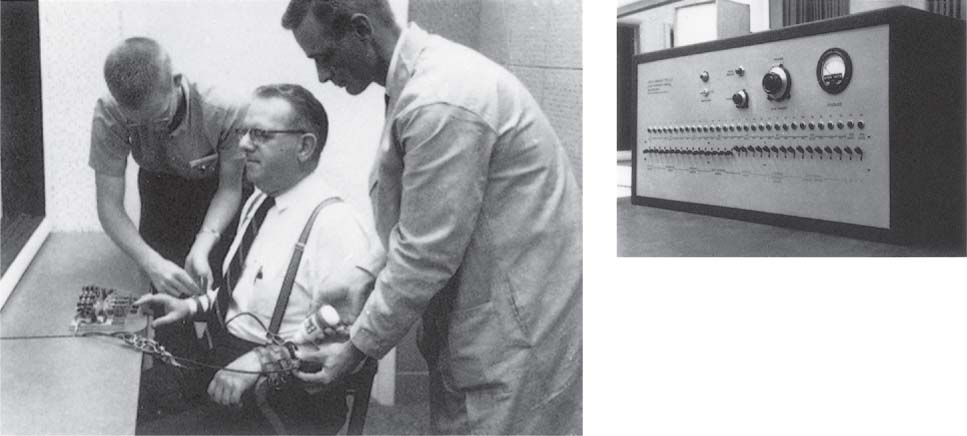

First, let’s consider Milgram’s basic experimental procedure from the perspective of a participant in this study. Imagine that you have volunteered to be in an experiment on learning and memory. You show up at the assigned time and place, where you encounter two men, the experimenter and another participant, a middle-aged man. The experimenter explains that the study is examining the effects of punishment by electric shock on learning, specifically learning a list of word pairs (for example, blue/box). When tested, the learner would have to indicate, for the first word of each pair, which of four words had originally been paired with it. He would press one of four switches, which would light up one of the four answer boxes located on top of the shock generator. The experimenter further explains that one participant will be the teacher and the other the learner. You draw slips for the two roles, and the other participant draws “learner,” making you the teacher. You accompany the learner to an adjoining room where he is strapped into a chair with one arm hooked up to the shock generator in the other room. The shock levels in the experiment will range from 15 volts to 450 volts. The experimenter explains that high levels of shock need to be used in order for the study to be a valid test of shock’s effectiveness as punishment. The experimenter gives you a sample shock of 45 volts so that you have some idea of the intensity of the various shock levels.

You return to the other room with the experimenter and sit down at the shock generator, which has a switch for each level of shock, starting at 15 volts and going up to 450 volts in 15-volt increments. There are also some labels below the switches—“Slight Shock,” “Very Strong Shock,” “Danger: Severe Shock,” and, under the last two switches, “XXX” in red. The experimenter reminds you that when the learner makes a mistake on the word pair task, you are to administer the shock by pushing the appropriate switch. You are to start with 15 volts and increase the shock level by 15 volts for each wrong answer.

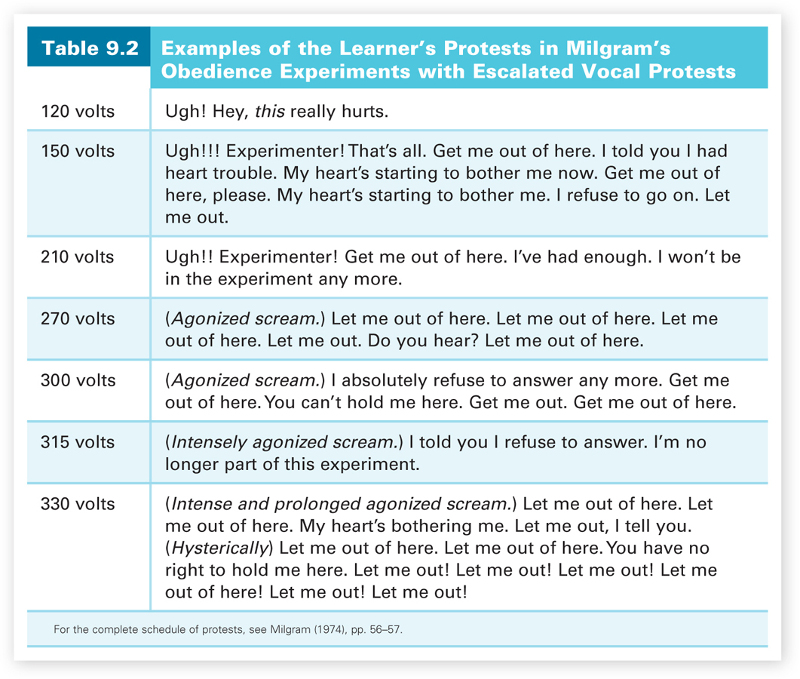

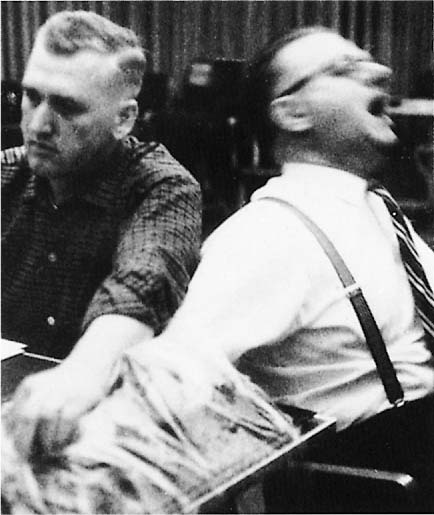

The experiment begins, and the learner makes errors. Nothing else happens except for a few “Ugh!”s until at 120 volts the learner cries out that the shocks really hurt. As the shock level increases, you continue hearing him cry out, and his screams escalate with the increasing voltage (see Table 9.2). At higher levels, he protests and says that he no longer wants to participate and that he isn’t going to respond anymore. After 330 volts, he doesn’t respond. You turn to the experimenter to see what to do. The experimenter says to treat a nonresponse as a wrong answer and to continue with the experiment. The learner never responds again.

This is basically the situation that Milgram’s participants confronted. What would you do? If you are like most people, you say you would stop at a rather low level of shock. Before this experiment was conducted, Milgram asked various types of people (college students, nonstudent adults, and psychiatrists) what they thought they and other people would do. Invariably, the response was that they would stop at a fairly low level of shock, less than 150 volts, that others would also do so, and that virtually no one would go to the end of the shock generator. The psychiatrists said that maybe one person in a thousand would go to the end of the shock generator.

Milgram’s initial obedience finding.

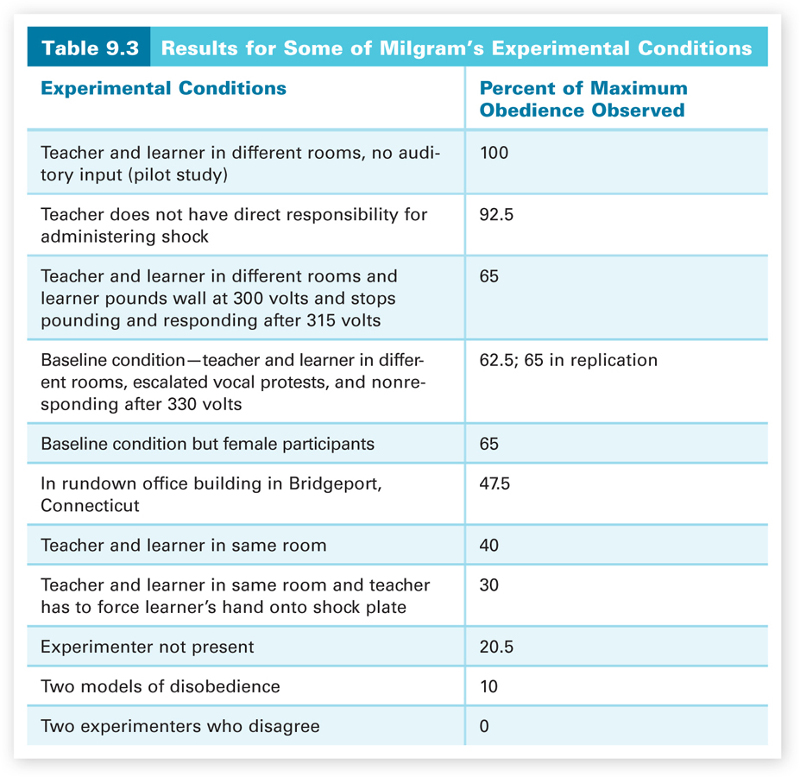

As you have probably already guessed, Milgram didn’t find what these people predicted. For the conditions just described (learner in another room, escalating moaning and screaming, and nonresponding at higher levels of shock), almost two out of every three participants (62.5 percent) continued to obey the experimenter and administered the maximum possible shock (450 volts). This is even worse than it appears, as the learner had mentioned a slight heart condition before the experiment began and mentioned it again during his protests (see Table 9.2). There is a sad irony here in that the learner did have a heart problem and died from it about three years later (Blass, 2009). Milgram replicated this finding with new participants and observed nearly the same result: 65 percent obeyed and administered the maximum shock. He also observed a 65 percent maximal obedience rate in another experiment in which the learner made no vocal protests but rather just pounded the laboratory wall in protest at 300 volts and stopped pounding and responding after 315 volts. Even more frightening is Milgram’s finding in a pilot study that without any auditory input (no vocal protests or pounding on the wall), virtually every participant continued to obey the experimenter and administer the maximum possible shock!

It is important to realize that the learner (a confederate of the experimenter) was never really shocked. The teacher only thought he was administering the shocks. The true participants, who always drew the role of teacher, were carefully debriefed after the experiment was over and told that they had not really shocked the other person. Critics of Milgram’s study, however, argued that Milgram inflicted irreparable harm on his participants (Baumrind, 1964). Participants were put into an emotional situation in which many of them appeared to be on the point of nervous collapse (many bit their lips, dug their fingernails into their own flesh, laughed hysterically, and pushed their fists into their foreheads). Participants also learned something disturbing about themselves: that they would follow the commands of an authority figure to harm another human being. In his rebuttal to Baumrind (1964) and his other critics, Milgram (1964) reported the findings of a survey sent to all 856 participants in the obedience experiments. The survey asked the participants to reflect on their experience during the experiment. Milgram ended up with a 92 percent return rate, a remarkable return rate for a mailed survey. What were the survey results? Most participants in the obedience experiments had positive feelings about their participation. For example, almost 84 percent said that they were glad to have participated, and only 1.3 percent said that they were sorry they had. Eighty percent agreed that more studies of this sort should be carried out. Milgram also had a psychiatrist interview 40 participants with the aim of identifying any who might have been harmed by the experiment. The psychiatrist found no harm in any of the participants he interviewed.

This difference between what we say we will do and what we actually do illustrates the power of situational social forces on our behavior. When we are not in the situation, we may say that we would act one way; however, when in the situation, we may act in the opposite way. Why does the situation have such a strong impact on our behavior? What situational factors are important? These are the questions that Milgram tried to answer in his 20 or so experiments with over 1,000 participants using variants of the procedure described above.

Do you realize how Milgram used the foot-in-the-door technique in achieving obedience (Gilbert, 1981)? He had the participant start by administering very small shocks (beginning at 15 volts) and increased the level slowly (in 15-volt increments). The learner didn’t protest these mild, early shocks. The teacher had already obeyed several times by the time the learner started his protests (at 120 volts), and by the time the shock level was high, the teacher had obeyed numerous times. Milgram’s results might have been very different if he had had participants start at a high level of shock or if the learner had protested the first few small shocks. It is also important to realize that if at any time the teacher asked the experimenter about stopping, the experimenter would respond with a series of four standardized prods, such as “Please go on” or “The experiment requires that you continue.” If the teacher still refused to go on, the experimenter would stop the experiment; therefore, the participants were not coerced or forced to obey.

It is also worth noting that the participants in Milgram’s experiments were not college students. He conducted the study at Yale University in New Haven, Connecticut, and used volunteer participants from the New Haven community. They were paid $4, plus bus fare. The experimenter and the confederate participants were always men. In the experiments that we have described and in all of his other experiments except one, Milgram used male participants from age 20 to age 50. In just one experiment, female participants served as teachers with male learners, and the maximal obedience rate was the same as with male teachers, 65 percent. This finding of no gender differences in obedience has been replicated by other researchers (Blass, 1999). Milgram’s basic obedience finding has also been replicated in many different countries, including Jordan, Spain, Italy, and Austria (Blass, 1999). This means that Milgram’s basic obedience-to-authority finding is highly reliable. With this in mind, let’s consider some of the situational factors that are critical to obtaining such obedience.

Situational factors that impact obedience.

Let’s label the Milgram experiment described earlier in this chapter as the baseline condition (the teacher and learner are in separate rooms, but the teacher can hear the learner’s escalating screams and protests and his refusal to continue at higher voltage levels, and the learner does not respond after 330 volts). One important factor is the physical presence of the experimenter (the person with authority). Milgram found that if the experimenter left the laboratory and gave his commands over the telephone, maximum obedience (administering the highest possible shock) dropped to 20.5 percent. The closeness of the teacher and the learner is also important, as the effect of auditory feedback suggests. Remember, virtually every participant administered the maximum shock when the learner did not vocally protest or pound the wall, but only about two of every three participants did so when the teacher could hear the learner’s protests or pounding. In another experiment, Milgram made the teacher and learner even closer by having them both in the same room instead of different rooms, and maximum obedience dropped to 40 percent. It dropped even further, to 30 percent, when the teacher had to administer the shock directly by forcing the learner’s hand onto a shock plate. This means that obedience decreases as the teacher and learner are physically closer to each other. Interestingly, though, the maximum obedience rate doesn’t drop to zero percent even when the teacher must touch the learner; it is still 30 percent.


Some critics thought that the location of the experiments, at prestigious Yale University, contributed to the high maximum obedience rate. To check this hypothesis, Milgram ran the same baseline condition in a rundown office building in nearby Bridgeport, Connecticut, completely dissociated from Yale. What do you think happened? The maximum obedience rate went down, but it didn’t go down much. Milgram found a maximum obedience rate of 47.5 percent. The prestige and authority of the university setting did contribute to the obedience rate, but not nearly as much as the presence of the experimenter or the closeness of the teacher and the learner. The presence of two confederate disobedient teachers dropped the obedience rate to 10 percent. To get the maximum obedience to zero percent, Milgram set up a situation with two experimenters who at some point during the experiment disagreed. One said to stop the experiment, while the other said to continue. In this case, when one of the people in authority said to stop, all of the teachers stopped.

What about getting the maximum obedience rate to increase from that observed in the baseline condition? Milgram tried to do that by taking the direct responsibility for shocking away from the teacher. Instead, the teacher only pushed the switch on the shock generator to indicate to another teacher (another confederate) in the room with the learner how much shock to administer. With this direct responsibility for shocking the learner lifted off of their shoulders, almost all of the participants (92.5 percent) obeyed the experimenter and administered the maximum shock level. This finding and all of the others that we have discussed are summarized in Table 9.3, so that you can compare them. For more detail on the experiments that were described, see Milgram (1974).

Do you think that Milgram’s findings could be replicated today? Aren’t people today more aware of the dangers of blindly following authority than they were in the early 1960s? If so, wouldn’t they disobey more often than Milgram’s participants? To test this question, Burger (2009) conducted a replication of Milgram’s baseline condition. His participants were men and women who responded to newspaper advertisements and flyers distributed locally. Their ages ranged from 20 to 81 years, with a mean age of 42.9 years. Some procedural changes were necessary to obtain permission to run the study, which otherwise would not have met the current American Psychological Association guidelines for ethical treatment of human subjects. The main procedural change of interest to us was that once participants pressed the 150-volt switch and started to read the next test item, the experiment was stopped, leading Elmes (2009) to refer to this procedure as “obedience lite.” The 150-volt point was chosen because in Milgram’s study, once participants went past 150 volts, the vast majority continued to obey up to the highest shock level (Burger, 2009). In a meta-analysis of data from eight of Milgram’s obedience experiments, Packer (2008) also found that the 150-volt point was the critical juncture for disobedience (the voltage level at which participants were most likely to disobey the experimenter). Thus, in Burger’s study, it is a reasonable assumption that the percentage of participants who went past 150 volts is a good estimate of the percentage who would have gone to the end of the shock generator. Of course, the experimenter also ended the experiment when a participant refused to continue after hearing all of the experimenter’s prods. What do you think Burger found for male participants? What about female participants? Burger found that 66.7 percent of the men continued after 150 volts, and 72.7 percent of the women did so.
Although these percentages have to be adjusted down slightly because not every participant in Milgram’s study who went past 150 volts maximally obeyed, these results are very close to Milgram’s finding of 65 percent obedience for both men and women in the baseline condition. Burger’s findings indicate that people today react in this laboratory obedience situation much as Milgram’s participants did almost 50 years ago.


Some European psychologists have found an alternative way to reopen the study of obedience using the Milgram experimental paradigm. They have gotten around the ethical limitations by placing participants in an immersive virtual environment that reprises Milgram’s experimental setting (Cheetham, Pedroni, Antley, Slater, & Jäncke, 2009; Dambrun & Vatiné, 2010; Slater et al., 2006). The evidence thus far indicates that people tend to respond to the situation as if it were real. For example, in Slater et al., participants wearing a virtual reality headset were instructed to administer shocks to a computerized female learner whenever she answered a word association memory test item incorrectly. In the Visible condition, the learner (the female avatar) was seen and heard; she responded to the shocks with increasing signs of discomfort, including screaming, and said that she wanted to stop. At the penultimate shock, her head slumped forward and she made no further responses. In the Hidden condition, her answers were communicated only through text and there were no protests. Replicating Milgram’s finding that the closeness of the teacher and learner impacts the amount of obedience, all 11 participants in the Hidden condition administered the strongest shock, whereas in the Visible condition, 17 of the 20 participants did so. Skin conductance analysis indicated that in spite of their knowledge that the situation was artificial, participants were aroused and distressed as if it were real. Using fMRI, Cheetham et al. found supportive evidence that this was indeed personal distress and not empathic concern. In sum, it appears that the immersive virtual environment procedure opens the door to future behavioral and neuroimaging studies of obedience and other extreme social situations.

Aside from ethical considerations, another criticism of Milgram’s experiments was that participants were in an experiment in which they had agreed to participate and were paid for their participation. Some thought that participants may have felt an obligation to obey the experimenter and continue in the experiment because of this agreement. However, the very different levels of obedience that were observed when various situational factors were manipulated show that these situational factors were more important in obtaining the obedience than a feeling of experimental obligation. In addition, Milgram’s results have also been replicated without paying people for their participation. Let’s see how this was done.

The “Astroten” study.

The fascinating aspect of the “Astroten” study is that the participants did not even know they were in the study! The results, then, could hardly be due to a feeling of experimental obligation. The participants in this study were nurses on duty alone in a hospital ward (Hofling, Brotzman, Dalrymple, Graves, & Pierce, 1966). Each nurse received a call from a person using the name of a staff doctor not personally known by the nurse. The caller ordered the nurse to give a dose exceeding the maximum daily dosage of an unauthorized medication (“Astroten”) to an actual patient in the ward. This order violated many hospital rules: medication orders need to be given in person and not over the phone, it was a clear overdose, and the medication was not even authorized. Twenty-two nurses, in different hospitals and at different times, were confronted with such an order. What do you think they did? Remember that this is not an experiment. These are real nurses who are on duty in hospitals doing their jobs.


Twenty-one of the twenty-two nurses did not question the order and went to give the medication, though they were intercepted before getting to the patient. Now what do you think that the researchers found when they asked other nurses and nursing students what they would do in such a situation? Of course, they said the opposite of what the nurses actually did. Nearly all of them (31 out of 33) said that they would have refused to give the medication, once again demonstrating the power of situational forces on obedience. These findings are far from isolated. For example, Krackow and Blass (1995) conducted a survey study of registered nurses on the subject of carrying out a physician’s order that could have harmful consequences for the patient. Nearly half (46 percent) of the nurses reported that they had carried out such orders, assigning most of the responsibility to the physicians and little to themselves. These findings are truly worrisome, because these were real patients who, through blind obedience, were placed into potentially life-threatening situations.

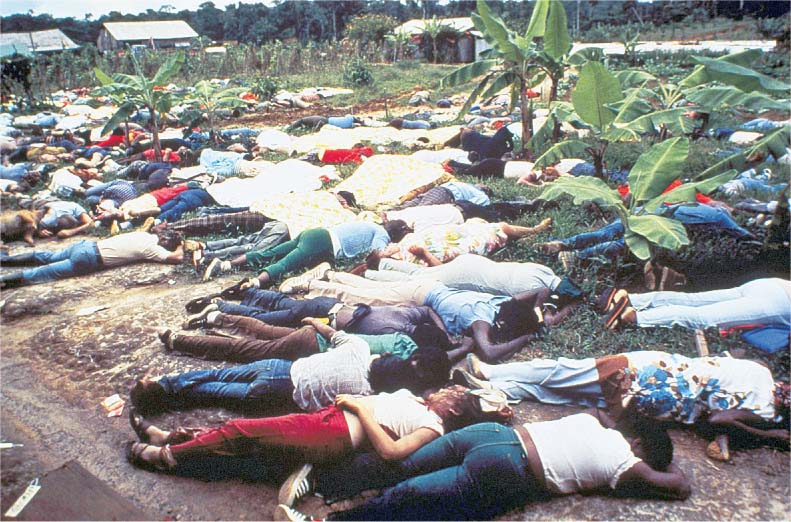

The Jonestown massacre.

Given all of this research pointing to our tendency to obey people in authority, even if it is destructive, the Jonestown mass suicide orchestrated by the charismatic Reverend Jim Jones should not be that surprising. People are willing to obey an authority, especially one with whom they are familiar and they view as their leader. Using various compliance techniques, Jones fostered unquestioned faith in himself as the cult leader and discouraged individualism. For example, using the foot-in-the-door technique, he slowly increased the financial support required of Peoples Temple members until they had turned over essentially everything they had (Levine, 2003). He even used the door-in-the-face and foot-in-the-door techniques to recruit members (Ornstein, 1991). He had his recruiters ask people walking by to help the poor. When they refused, the recruiters then asked them just to donate five minutes of their time to put letters in envelopes (door-in-the-face). Once they agreed to this small task, they were then given information about future, related charitable work. Having committed to the small task, they then returned later as a function of the consistency aspect of the foot-in-the-door technique. As they contributed more and more of their time, they became more involved in the Peoples Temple and were then easily persuaded to join.

There is one situational factor leading to the Jonestown tragedy that is not so obvious, though: Jones’s move of his almost 1,000 followers from San Francisco to the rain forests of Guyana, an alien environment in the jungle of an unfamiliar country (Cialdini, 1993). In such an uncertain environment, the followers would look to the actions of others (informational social influence) to guide their own actions. The Jonestown followers looked to the settlement leaders and other followers, which then helped Jones to manage such a large group of followers. With this in mind, think about the day of the commanded suicide. Why was the mass suicide so orderly, and why did the people seem so willing to commit suicide? The most fanatical followers stepped forward immediately and drank the poison. Because people looked to others to define the correct response, they followed the lead of those who quickly and willingly drank the poison. In other words, drinking the poison seemed to be the correct thing to do. This situation reflects a “herd mentality,” getting some members going in the right direction so that others will follow like cattle being led to the slaughterhouse. The phrase “drinking the Kool-Aid” later became synonymous with blind allegiance.


How Groups Influence Us

Usually when we think of groups, we think of formalized groups such as committees, sororities and fraternities, classes, or trial juries. Social psychologists, however, have studied the influences of all sorts of groups, from less formal ones, such as an audience at some event, to these more formal ones. Our discussion of the influences of such groups begins with one of the earliest to be studied, social facilitation.

Social facilitation.

How would your behavior be affected by the presence of other people, such as an audience? Do you think the audience would help or hinder? One of the earliest findings for such situations was social facilitation, improvement in performance in the presence of others. This social facilitative effect is limited, however, to familiar tasks for which the person’s response is automatic (such as doing simple arithmetic problems). When people are faced with difficult unfamiliar tasks that they have not mastered (such as solving a complex maze), performance is hindered by the presence of others. Why? One explanation proposes that the presence of others increases a person’s drive and arousal, and research studies have found that under increased arousal, people tend to give whatever response is dominant (most likely) in that situation (Bond & Titus, 1983; Zajonc, 1965). This means that when the task is very familiar or simple, the dominant response tends to be the correct one; thus performance improves. When the task is unfamiliar or complex, however, the dominant response is likely not the correct one; thus performance is hindered. This means that people who are very skilled at what they do will usually do better in front of an audience than by themselves, and those who are novices will tend to do worse. This is why it is more accurate to define social facilitation as facilitation of the dominant response on a task due to social arousal, leading to improvement on simple or well-learned tasks and worse performance on complex or unlearned tasks when other people are present.


Social loafing and the diffusion of responsibility.

Social facilitation occurs for people on tasks for which they can be evaluated individually. Social loafing occurs when people are pooling their efforts to achieve a common goal (Karau & Williams, 1993). Social loafing is the tendency for people to exert less effort when working toward a common goal in a group than when individually accountable. Social loafing is doing as little as you can get away with. Think about the various group projects that you have participated in, both in school and outside of school. Didn’t some members contribute very little to the group effort? Why? A major reason is the diffusion of responsibility—the responsibility for the task is diffused across all members of the group; therefore, individual accountability is lessened.

Behavior often changes when individual responsibility is lifted. Remember that in Milgram’s study the maximum obedience rate increased to 92.5 percent when the direct responsibility for administering the shock was lifted from the teacher’s shoulders. This diffusion of responsibility can also explain why social loafing tends to increase as the size of the group increases (Latané, Williams, & Harkins, 1979). The larger the group, the less likely it is that a social loafer will be detected, and the more the responsibility for the task gets diffused. Think about students working together on a group project for a shared grade. Social loafing would be greater when the group size is seven or eight than when it is only two or three. However, for group tasks in which individual contributions are identifiable and evaluated, social loafing decreases (Williams, Harkins, & Latané, 1981; Harkins & Jackson, 1985). Thus, in a group project for a shared grade, social loafing decreases if each group member is assigned and responsible for a specific part of the project.


The bystander effect and the Kitty Genovese case.

Now let’s think about the Kitty Genovese case described at the beginning of this chapter. Many people saw or heard the prolonged fatal attack. No one intervened until it was too late. Why? Was it big-city apathy, as many in the news media proposed? Experiments by John Darley, Bibb Latané, and other social psychologists indicate that it wasn’t apathy, but rather that the diffusion of responsibility, as in social loafing, played a major role in this failure to help (Latané & Darley, 1970; Latané & Nida, 1981). Conducting experiments in which people were faced with emergency situations, Darley and Latané found what they termed the bystander effect: the probability of an individual helping in an emergency is greater when there is only one bystander than when there are many bystanders.

To understand this effect, Darley and Latané developed a model of the intervention process in emergencies. According to this model, for a person to intervene in an emergency, he must make not just one, but a series of decisions, and only one set of choices will lead him to take action. In addition, these decisions are typically made under conditions of stress, urgency, and threat of possible harm. The decisions to be made are 1) noticing the relevant event or not, 2) defining the event as an emergency or not, 3) feeling personal responsibility for helping or not, and 4) having accepted responsibility for helping, deciding what form of assistance he should give (direct or indirect intervention). If the event is not noticed or not defined as an emergency or if the bystander does not take responsibility for helping, he will not intervene. Darley and Latané’s research (e.g., Latané & Darley, 1968, and Latané & Rodin, 1969) demonstrated that the presence of other bystanders negatively impacted all of these decisions, leading to the bystander effect.

Let’s take a closer look at one of these experiments (Darley & Latané, 1968) to help you better understand the bystander effect. Imagine that you are asked to participate in a study examining the adjustments you’ve experienced in attending college. You show up for the experiment, are led to a booth, and are told that you are going to participate in a round-robin discussion of adjustment problems over the laboratory intercom. You put on earphones so that you can hear the other participants, but you cannot see them. The experimenter explains that this is to guarantee each student’s anonymity. The experimenter tells you that when a light in the booth goes on, it is your turn to talk. She also says that she wants the discussion not to be inhibited by her presence, so she is not going to listen to the discussion. The study begins. The first student talks about how anxious he has been since coming to college and that sometimes the anxiety is so overwhelming, he has epileptic seizures. Another student talks about the difficulty she’s had in deciding on a major and how she misses her boyfriend who stayed at home to go to college. It’s your turn, and you talk about your adjustment problems. The discussion then returns to the first student, and as he is talking, he seems to be getting very anxious. Suddenly, he starts having a seizure and cries out for help. What would you do?


Like most people, you would likely say that you would go to help him. However, this is not what was found. Whether a participant went for help depended upon how many other students the participant thought were available to help the student having the seizure (the bystander effect). Darley and Latané manipulated this number, so there were zero, one, or four others. In actuality, there were no other students present; the dialogue was all tape-recorded. There was only one participant. The percentage of participants who attempted to help before the victim’s cries for help ended decreased dramatically as the presumed number of bystanders increased, from 85 percent when alone to only 31 percent when four other bystanders who could help were assumed to be present. The probability of helping decreased as the responsibility for helping was diffused across more participants. Those participants who did not go for help were not apathetic, however. They were very upset and seemed to be in a state of conflict, even though they did not leave the booth to help. They appeared to want to help, but the situational forces (the presumed presence of other bystanders and the resulting diffusion of responsibility) led them not to do so. The bystander effect has been replicated many times for many different types of emergencies. Latané and Nida (1981) analyzed almost 50 bystander intervention studies with thousands of participants and found that bystanders were more likely to help when alone than with others about 90 percent of the time.

Now let’s apply the bystander effect to the Kitty Genovese case. The responsibility for helping was diffused across all of the witnesses to the attack. Because the bystanders could not see or hear one another, each bystander likely assumed that someone else had called the police, so they didn’t need to do so. A situational factor, the presence of many bystanders, resulted in no one intervening until it was too late. Based on the results of the bystander intervention studies, Kitty Genovese might have received help and possibly might have survived if there had been only one bystander to her attack. With the total responsibility on the one bystander’s shoulders, intervention would have been far more likely.

Deindividuation.

Diffusion of responsibility also seems to play a role in deindividuation, the loss of self-awareness and self-restraint in a group situation that fosters arousal and anonymity. The responsibility for the group’s actions is diffused across all the members of the group. Deindividuation can be thought of as combining the increased arousal in social facilitation with the diminished sense of responsibility in social loafing. Deindividuated people feel less restrained, and therefore may forget their moral values and act spontaneously without thinking. The result can be damaging, as seen in mob violence, riots, and vandalism. In one experiment on deindividuation, college women wearing Ku Klux Klan–type white hoods and coats delivered twice as much shock to helpless victims as did similar women not wearing Klan clothing who were identifiable by name tags (Zimbardo, 1970). Once people lose their sense of individual responsibility, feel anonymous, and are aroused, they are capable of terrible things.


Group polarization and groupthink.

Two other group influences, group polarization and groupthink, apply to more structured, task-oriented group situations (such as committees and panels). Group polarization is the strengthening of a group’s prevailing opinion about a topic following group discussion of the topic. The group members already share the same opinion on an issue, and when they discuss it among themselves, this opinion is further strengthened as members gain additional information from other members in support of the opinion. This means that the initially held view becomes even more polarized following group discussion.

In addition to this informational influence of the group discussion, there is a type of normative influence. Because we want others to like us, we may express stronger views on a topic after learning that other group members share our opinion. Both informational and normative influences lead members to stronger, more extreme opinions. A real-life example is the accentuation phenomenon in college students: initial differences in college students become more accentuated over time (Myers, 2002). For example, students who do not belong to fraternities and sororities tend to be more liberal politically, and this difference grows during college at least partially because group members reinforce and polarize each other’s views. Group polarization for some groups may lead to destructive behavior, encouraging group members to go further out on a limb through mutual reinforcement. For example, group polarization within community gangs tends to increase the rate of their criminal behavior. Within terrorist organizations, group polarization leads to more extreme acts of violence. Alternatively, association with a quiet, nonviolent group, such as a quilters’ guild, strengthens a person’s tendency toward quieter, more peaceful behavior. In summary, group polarization may exaggerate prevailing attitudes among group members, leading to more extreme behavior.

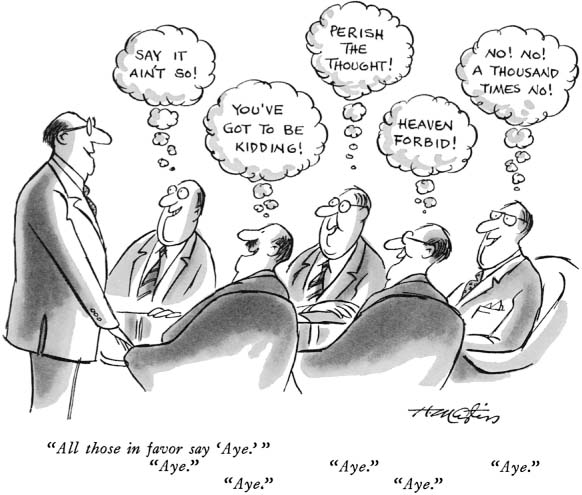

Groupthink is a mode of group thinking that impairs decision making; the desire for group harmony overrides a realistic appraisal of the possible decisions. The primary concern is to maintain group consensus. Pressure is put on group members to go along with the group’s view, and external information that disagrees with that view is suppressed, which leads to the illusion of unanimity. Groupthink also leads to an illusion of infallibility: the belief that the group cannot make mistakes.


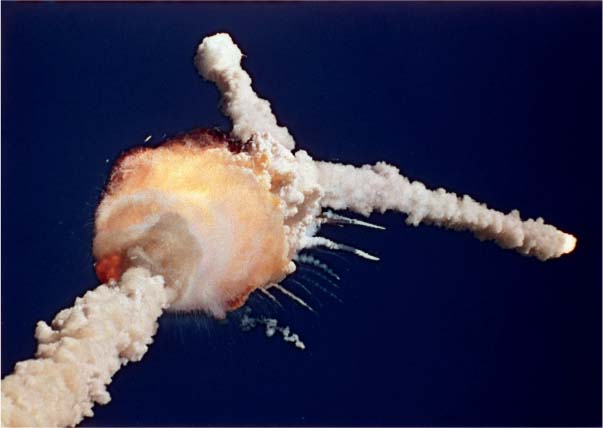

Given such illusory thinking, it is not surprising that groupthink often leads to very bad decisions and poor solutions to problems (Janis, 1972, 1983). The failure to anticipate Pearl Harbor, the disastrous Bay of Pigs invasion of Cuba, and the space shuttle Challenger disaster are a few examples of real-world bad decisions that have been linked to groupthink. In the case of the Challenger disaster, for example, the engineers who made the shuttle’s rocket boosters opposed the launch because of dangers posed by the cold temperatures to the seals between the rocket segments (Esser & Lindoerfer, 1989). However, the engineers were unsuccessful in arguing their case with the group of NASA officials, who were suffering from an illusion of infallibility. To maintain an illusion of unanimity, these officials didn’t bother to make the top NASA executive who made the final launch decision aware of the engineers’ concerns. The result was tragedy.

Sadly, the NASA groupthink mentality reared its head again with the space shuttle Columbia disaster. It appears that NASA management again ignored safety warnings from engineers about probable technical problems. The Columbia accident investigation board strongly recommended that NASA change its “culture of invincibility.” To prevent groupthink from impacting your group decisions, make your group aware of groupthink and its dangers, and then take explicit steps to ensure that minority opinions, critiques of proposed group actions, and alternative courses of action are openly presented and fairly evaluated.

Section Summary

In this section, we discussed many types of social influence, how people and the social forces they create influence a person’s thinking and behavior. Conformity—a change in behavior, belief, or both to conform to a group norm as a result of real or imagined group pressure—is usually due to either normative social influence or informational social influence. Normative social influence leads people to conform to gain the approval and avoid the disapproval of others. Informational social influence leads people to conform to gain information from others in an uncertain situation. Several situational factors impact the amount of conformity that is observed. For example, nonconsensus among group members reduces the amount of conformity, and responding aloud versus anonymously increases conformity. In addition, culture and gender impact the amount of conformity observed. Collectivist cultures tend to lead to more conformity than individualistic cultures, and women conform more than men.


In conformity, people change their behavior or attitudes to adhere to a group norm, but in compliance, people act in accordance with a direct request from another person or group. We discussed four techniques used to obtain compliance. Each technique involves two requests, and it is always the second request for which compliance is desired. In the foot-in-the-door technique, a small request is followed by the desired larger request. In the door-in-the-face technique, a large first request is followed by the desired second smaller request. In the low-ball technique, an attractive first request is followed by the desired and less attractive second request. In the that’s-not-all technique, the desired and more attractive second request is made before a response can be made to an initial request. The foot-in-the-door and low-ball techniques work mainly because the person has committed to the first request and complies with the second in order to remain consistent. The door-in-the-face and that’s-not-all techniques work mainly because of reciprocity. Because the other person has made a concession on the first request, we comply with the second in order to reciprocate.

Obedience, following the commands of a person in authority, was the subject of Stanley Milgram’s controversial experimental studies done at Yale University in the early 1960s. The studies are controversial because they demonstrate our tendency toward destructive obedience, bringing harm to others through our obedient behavior. Milgram identified numerous situational factors that determine the amount of obedience observed. For example, a very high rate of obedience is observed when the direct responsibility for one’s acts is removed. Less obedience is observed when we view models of disobedience, or when another authority commands us not to obey. The “Astroten” study and a survey study with nurses found high rates of obedience in the real world, indicating that it was not just a feeling of experimental obligation that led to Milgram’s results. The varying amounts of obedience in relation to varying situational factors in his experiments also argue against a feeling of experimental obligation leading to Milgram’s findings. In addition, Milgram’s baseline obedience finding has recently been replicated, demonstrating that people today react in the laboratory obedience situation like Milgram’s participants did almost 50 years ago.

Even the mere presence of other people can influence our behavior. This is demonstrated in social facilitation, an improvement in simple or well-learned tasks but worse performance on complex or unlearned tasks when other people are observing us. Some group influences occur when the responsibility for a task is diffused across all members of the group. For example, social loafing is the tendency for people to exert less effort when working in a group toward a common goal than when individually accountable. Social loafing increases as the size of the group increases and decreases when each group member feels more responsible for his contribution to the group effort. Diffusion of responsibility also contributes to the bystander effect, the greater probability of an individual helping in an emergency when there is only one bystander versus when there are many bystanders. Diffusion of responsibility also contributes to deindividuation, the loss of self-awareness and self-restraint in a group situation that promotes arousal and anonymity. The results of deindividuation can be tragic, such as mob violence and rioting.


Two other group influences, group polarization and groupthink, apply to more structured, task-oriented situations, and refer to effects on the group’s decision making. Group polarization is the strengthening of a group’s prevailing opinion following group discussion of the topic. Like-minded group members reinforce their shared beliefs, which leads to more extreme attitudes and behavior among all group members. Groupthink is a mode of group thinking that impairs decision making. It stems from the group’s illusion of infallibility and its desire for group harmony, which override a realistic appraisal of decision alternatives, often leading to bad decisions.

ConceptCheck | 1

Explain the difference between normative social influence and informational social influence.

The main difference between normative social influence and informational social influence concerns the need for information. When normative social influence is operating, information is not necessary for the judgment task. The correct answer or action is clear. People are conforming to gain the approval of others in the group and avoid their disapproval. When informational social influence is operating, however, people conform because they need information as to what the correct answer or action is. Conformity in this case is due to the need for information, which we use to guide our behavior.

Explain how both the door-in-the-face technique and the that’s-not-all technique involve reciprocity.

In the door-in-the-face technique, the other person accepts your refusal of the first request, so you reciprocate by agreeing to her second, smaller request, the one she actually wanted you to comply with. In the that's-not-all technique, you think that the other person has done you a favor by giving you an even better deal with the second request, so you reciprocate by doing her a favor and agreeing to the second request.

Milgram found that zero percent of the participants continued in the experiment when one of two experimenters said to stop. Based on this finding, predict what he found when he had two experimenters disagree, but the one who said to stop was substituting for a participant and serving as the learner. Explain the rationale for your prediction.

If you predicted that the result was the same (zero percent maximum obedience), you are wrong. The result was the same as in Milgram's baseline condition, 65 percent maximum obedience. An explanation involves how we view persons of authority who lose their authority (being demoted). In a sense, by agreeing to serve as the learner, the experimenter gave up his authority, and the teachers no longer viewed him as an authority figure. He had been demoted.

According to the bystander effect, explain why you would be more likely to be helped if your car broke down on a little-traveled country road than on an interstate highway.

According to the bystander effect, you would be more likely to receive help on the little-traveled country road, because any passing bystander would feel responsible for helping you. She would realize that no one else was available to help you, so she would do so. On a busy interstate highway, however, the responsibility for stopping to help is diffused across hundreds of passing drivers, each thinking that someone else will help you.