The Computer Revolution

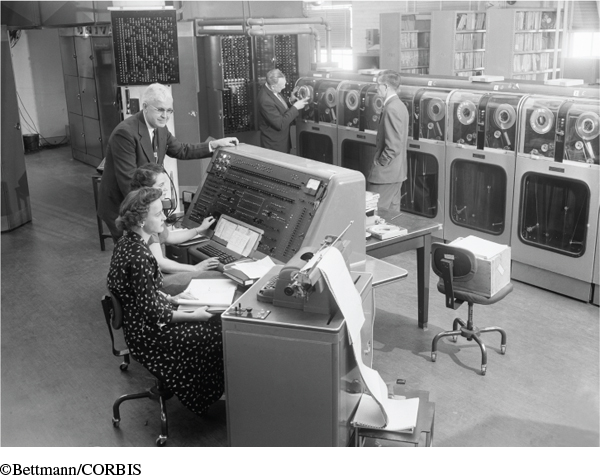

The first working computers, developed for military purposes during World War II and the Cold War, were enormous in both size and cost. Engineers began to solve the size problem with the transistor. Invented in the late 1940s, these small electronic devices came into widespread use in computers during the 1960s. Advances in integrated circuits during the 1970s led to the production of microcomputers, in which a silicon chip the size of a nail head did the work once performed by huge computers. Bill Gates was not the only one to recognize the potential market for microcomputers in homes and businesses. Steve Jobs, like Gates a college dropout, co-founded Apple Computer Company in 1976, turned it into a publicly traded corporation, and became a multimillionaire.

Microchips and digital technology also found a market beyond home and office computers. Over the last two decades of the twentieth century, computers came to operate everything from standard appliances such as televisions and telephones to new electronic devices such as CD players, fax machines, and cell phones. Computers controlled traffic lights on the streets and air traffic in the skies. They also changed the leisure patterns of young people: many preferred playing video games indoors to engaging in outdoor activities. Consumers purchased goods online, and companies such as Amazon sold merchandise over the Internet without operating any retail stores. Computers soon became the stars of movies such as The Matrix (1999), A.I. Artificial Intelligence (2001), and Iron Man (2008).

The Internet (an open, global system of interconnected computer networks that transmit data, information, electronic mail, and other services) grew out of military research in the 1970s, when the Department of Defense built a system of computer servers connected to one another across the United States. The network's main objective was to preserve military communications in the event of a Soviet nuclear attack. At the end of the Cold War, the Internet was repurposed for nonmilitary use, linking government, academic, business, and organizational systems. In 1991 the World Wide Web came into existence as a way to access information on the Internet, connecting documents and other resources to one another through hyperlinks. By 2015 about 84 percent of people in the United States used the Internet, up from 50 percent in 2000. Worldwide, Internet use leapt by more than 800 percent, from nearly 361 million people in 2000 to more than 3 billion in 2015.

Exploring American Histories

