7.2 Solving Problems and Making Decisions

KEY THEME

Problem solving refers to thinking and behavior directed toward attaining a goal that is not readily available.

KEY QUESTIONS

What are some advantages and disadvantages of each problem-solving strategy?

What is insight, and how does intuition work?

How can functional fixedness and mental set interfere with problem solving?

From fixing flat tires to figuring out how to pay for college classes, we engage in the cognitive task of problem solving so routinely that we often don’t even notice the processes we follow. Formally, problem solving refers to thinking and behavior directed toward attaining a goal that is not readily available (Novick & Bassok, 2005).

problem solving

Thinking and behavior directed toward attaining a goal that is not readily available.

Before you can solve a problem, you must develop an accurate understanding of the problem. Correctly identifying the problem is a key step in successful problem solving (Bransford & Stein, 1993). If your representation of the problem is flawed, your attempts to solve it will also be flawed.

Problem-Solving Strategies

As a general rule, people tend to attack a problem in an organized or systematic way. Usually, the strategy you select is influenced by the nature of the problem and your degree of experience, familiarity, and knowledge about the problem you are confronting (Chrysikou, 2006; Leighton & Sternberg, 2013). In this section, we’ll look at some of the common strategies used in problem solving.

TRIAL AND ERROR: A PROCESS OF ELIMINATION

The strategy of trial and error involves actually trying a variety of solutions and eliminating those that don’t work. When there is a limited range of possible solutions, trial and error can be a useful problem-solving strategy. If you were trying to develop a new spaghetti sauce recipe, for example, you might use trial and error to fine-tune the seasonings.

trial and error

A problem-solving strategy that involves attempting different solutions and eliminating those that do not work.

When the range of possible answers or solutions is large, however, trial and error can be very time-consuming. For example, your author Sandy has a cousin who hates reading written directions, especially for projects like assembling Ikea furniture or making minor household repairs. Rather than taking the time to read through the directions, he’ll spend hours trying to figure out how the pieces fit together.
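Trial and error can be sketched as a loop that tries a possible solution, keeps it if it works, and otherwise eliminates it. This is a minimal illustration, assuming the candidate solutions can be listed and each can be tested. The function name `trial_and_error`, the shuffled order (standing in for trying things without a plan), and the oregano amounts are all hypothetical choices, not part of the text.

```python
import random


def trial_and_error(candidates, works, rng=random):
    """Try candidate solutions one at a time, eliminating each one that fails.

    Candidates are shuffled first to mimic trying things without a system.
    Returns (solution, number_of_tries); solution is None if nothing worked.
    """
    remaining = list(candidates)
    rng.shuffle(remaining)
    tries = 0
    while remaining:
        guess = remaining.pop()  # try something...
        tries += 1
        if works(guess):         # ...keep it if it works;
            return guess, tries
        # ...otherwise it has been eliminated and is never tried again.
    return None, tries


def tastes_right(teaspoons):
    # Hypothetical test: only one amount of oregano tastes right.
    return teaspoons == 1


if __name__ == "__main__":
    oregano_amounts = [0.25, 0.5, 1, 1.5, 2]  # small range: few tries needed
    print(trial_and_error(oregano_amounts, tastes_right))

    # A large range of possibilities: the number of tries can pile up.
    print(trial_and_error(range(10_000), lambda n: n == 4_321))
```

With five candidates, the loop needs at most five tries; with 10,000, it may need thousands, which is the cost the text describes when the range of possible solutions is large.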

ALGORITHMS: GUARANTEED TO WORK

Unlike trial and error, an algorithm is a procedure or method that, when followed step by step, always produces the correct solution. Mathematical formulas are examples of algorithms. For instance, the formula used to convert temperatures from Celsius to Fahrenheit (multiply C by 9/5, then add 32) is an algorithm.

algorithm

A problem-solving strategy that involves following a specific rule, procedure, or method that inevitably produces the correct solution.
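The temperature formula above can be written as a tiny function: the same fixed steps (multiply by 9/5, then add 32) are applied every time, which is what makes it an algorithm. The function name is illustrative.

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert Celsius to Fahrenheit: multiply by 9/5, then add 32."""
    return celsius * 9 / 5 + 32


if __name__ == "__main__":
    for c in (-40, 0, 100):
        print(f"{c} °C = {celsius_to_fahrenheit(c)} °F")
```

Following the steps exactly gives 32 °F for 0 °C and 212 °F for 100 °C; no guessing is involved.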

Even though an algorithm may be guaranteed to eventually produce a solution, using an algorithm is not always practical. For example, imagine that while rummaging in a closet you find a combination lock with no combination attached. Using an algorithm will eventually produce the correct combination. You can start with 0–
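To see why systematically working through every combination is guaranteed but impractical, here is a minimal sketch of that algorithm. It assumes, purely for illustration, a hypothetical lock whose dial is numbered 0 to 39 and whose combination is three numbers long; the constants, the function name `crack_lock`, and the secret combination are all invented for this example.

```python
from itertools import product

# Assumptions for illustration only: a dial numbered 0-39, three-number combination.
DIAL_POSITIONS = 40
NUMBERS_IN_COMBINATION = 3


def crack_lock(opens):
    """Algorithm: try every combination in a fixed order (0-0-0, 0-0-1, 0-0-2, ...).

    `opens` reports whether a given combination works. Because every combination
    is eventually tried, success is guaranteed; the cost is the number of attempts.
    Returns (combination, attempts).
    """
    all_combinations = product(range(DIAL_POSITIONS), repeat=NUMBERS_IN_COMBINATION)
    for attempts, combination in enumerate(all_combinations, start=1):
        if opens(combination):
            return combination, attempts
    raise ValueError("no combination opened the lock")


if __name__ == "__main__":
    secret = (12, 31, 7)  # hypothetical combination
    print(crack_lock(lambda combo: combo == secret))
    print("worst case:", DIAL_POSITIONS ** NUMBERS_IN_COMBINATION, "attempts")
```

Under these assumptions the worst case is 40 × 40 × 40 = 64,000 attempts; the hypothetical secret above is found on attempt 20,448.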

HEURISTICS: RULES OF THUMB

In contrast to an algorithm, a heuristic is a general rule-of-thumb strategy that may or may not work. Although heuristic strategies are not guaranteed to solve a given problem, they tend to simplify problem solving because they let you reduce the number of possible solutions. With a more limited range of solutions, you can use trial and error to eventually arrive at the correct one. In this way, heuristics may serve an adaptive purpose by allowing us to use patterns of information to solve problems quickly and accurately (Gigerenzer & Goldstein, 2011).

heuristic

A problem-solving strategy that involves following a general rule of thumb to reduce the number of possible solutions.

Here’s an example. Creating footnotes is described somewhere in the onscreen “Help” documentation for a word-processing software program. If you use the algorithm of scrolling through every page of the Help program, you’re guaranteed to solve the problem eventually. But you can greatly simplify your task by using the heuristic of entering “footnotes” in the Help program’s search box. This strategy does not guarantee success, however, because the search term may not be indexed.
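The footnote example contrasts two ways of searching, which can be sketched side by side: reading every page in order (slow, but sure to succeed) versus a single search-box lookup (fast, but only as good as the index). The Help pages, the toy index, and all function names here are hypothetical stand-ins, not any real word processor's Help system.

```python
# Hypothetical Help pages; only their text matters for this sketch.
HELP_PAGES = [
    "Printing a document",
    "Changing fonts and styles",
    "Inserting footnotes and endnotes",
]


def scan_every_page(pages, topic):
    """Algorithm: read the pages in order; guaranteed to find the topic if any page mentions it."""
    for page_number, text in enumerate(pages, start=1):
        if topic in text.lower():
            return page_number
    return None


def build_search_index(pages, indexed_terms):
    """A toy search index that knows only the terms someone chose to index."""
    index = {}
    for page_number, text in enumerate(pages, start=1):
        for term in indexed_terms:
            if term in text.lower():
                index.setdefault(term, page_number)  # keep the first page for each term
    return index


def search_box(index, term):
    """Heuristic: one quick lookup, which fails (returns None) if the term was never indexed."""
    return index.get(term)


if __name__ == "__main__":
    print(scan_every_page(HELP_PAGES, "footnote"))                               # slow but sure
    print(search_box(build_search_index(HELP_PAGES, ["footnote"]), "footnote"))  # fast, succeeds
    print(search_box(build_search_index(HELP_PAGES, ["fonts"]), "footnote"))     # fast, fails
```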

One common heuristic is to break a problem into a series of subgoals. This strategy is often used in writing a term paper. Choosing a topic, locating information about the topic, organizing the information, and so on become a series of subproblems. As you solve each subproblem, you move closer to solving the larger problem. Another useful heuristic involves working backward from the goal. Starting with the end point, you determine the steps necessary to reach your final goal. For example, when making a budget, people often start off with the goal of spending no more than a certain total each month, then work backward to determine how much of the target amount they will allot for each category of expenses.
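The budgeting example of working backward can be sketched as starting from the end goal (a fixed monthly total) and deriving each category's amount from it. The function name, the categories, and the percentage shares are hypothetical; real budgets can be built in many other ways.

```python
def allot_backward(monthly_cap, shares):
    """Working backward: fix the end goal (the monthly cap) first, then derive
    each category's dollar amount from it.

    `shares` maps each category to its fraction of the cap. Any fraction left
    unassigned is reported as "unallocated".
    """
    if sum(shares.values()) > 1 + 1e-9:  # small tolerance for floating-point rounding
        raise ValueError("the category shares add up to more than the monthly cap")
    budget = {category: round(monthly_cap * share, 2) for category, share in shares.items()}
    budget["unallocated"] = round(monthly_cap - sum(budget.values()), 2)
    return budget


if __name__ == "__main__":
    # Hypothetical goal: spend no more than $2,000 a month.
    print(allot_backward(2000, {"rent": 0.50, "food": 0.25, "transportation": 0.10}))
```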

Perhaps the key to successful problem solving is flexibility. A good problem solver is able to recognize that a particular strategy is unlikely to yield a solution—and knows to switch to a different approach (Bilalić & others, 2008). And, sometimes, the reality is that a problem may not have a single “best” solution.

Remember Tom, whose story we told in the Prologue? One characteristic of autism spectrum disorder is cognitive rigidity and inflexible thinking (Kleinhans & others, 2005; Toth & King, 2008). Like Tom, many people can become frustrated when they are “stuck” on a problem. Unlike Tom, most people are able to sense when it’s time to switch to a new strategy, take a break for a few hours, seek assistance from experts or others who may be more knowledgeable, or accept defeat and give up. Tom, however, tends to persevere rather than give up on a problem or seek a different approach to solving it. For example, faced with a difficult homework problem in an advanced mathematics class, Tom often stayed up until 2:00 or 3:00 a.m., struggling to solve a single problem until he literally fell asleep at his desk.

Similarly, successful problem solving sometimes involves accepting a less-than-perfect solution to a particular problem—knowing when a solution is “good enough,” even if not perfect. But to many with autism spectrum disorder, things are either right or wrong—there is no middle ground (Toth & King, 2008). So when Tom got a 98 rather than 100 on a difficult math test, he was inconsolable. When he ranked in the top five in his class, he was upset because he wasn’t first. Tom would sometimes be unable to write an essay because he couldn’t think of a perfect opening sentence, or turn in an incomplete essay because he couldn’t think of the perfect closing sentence.

INSIGHT AND INTUITION

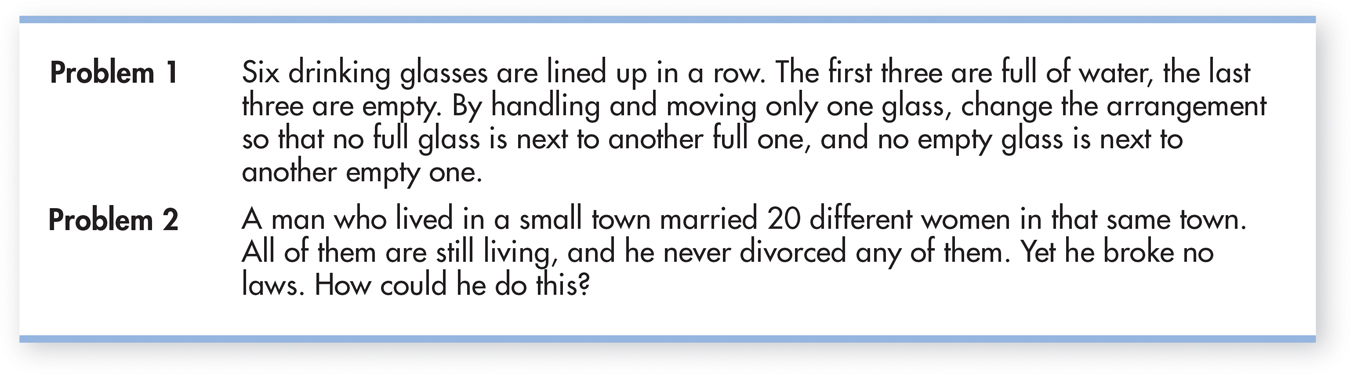

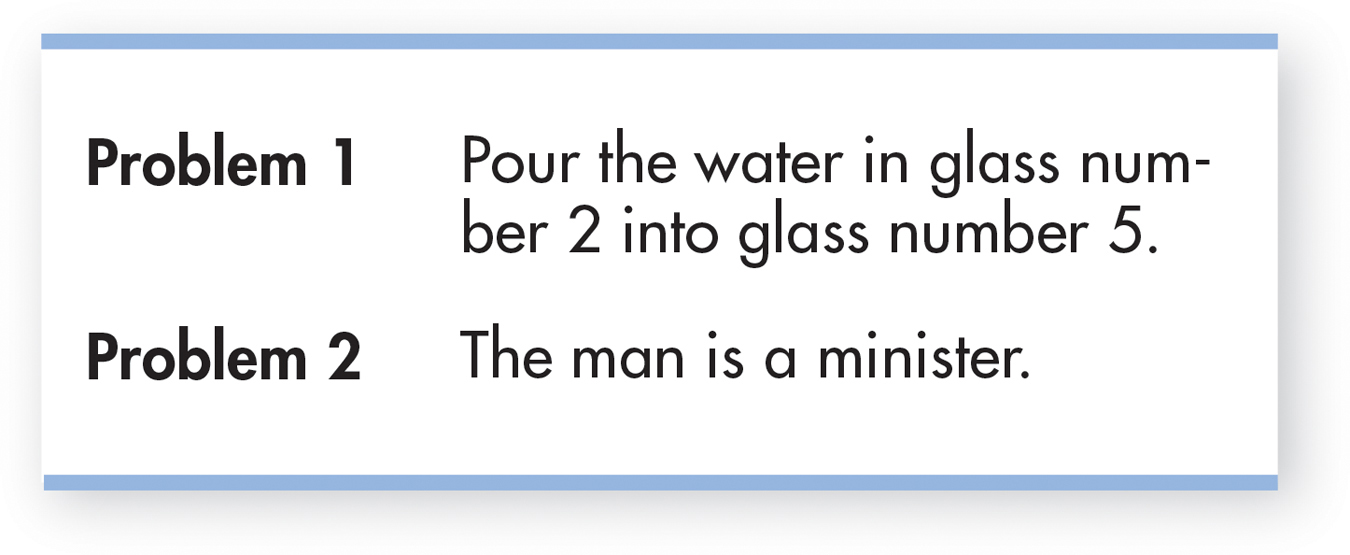

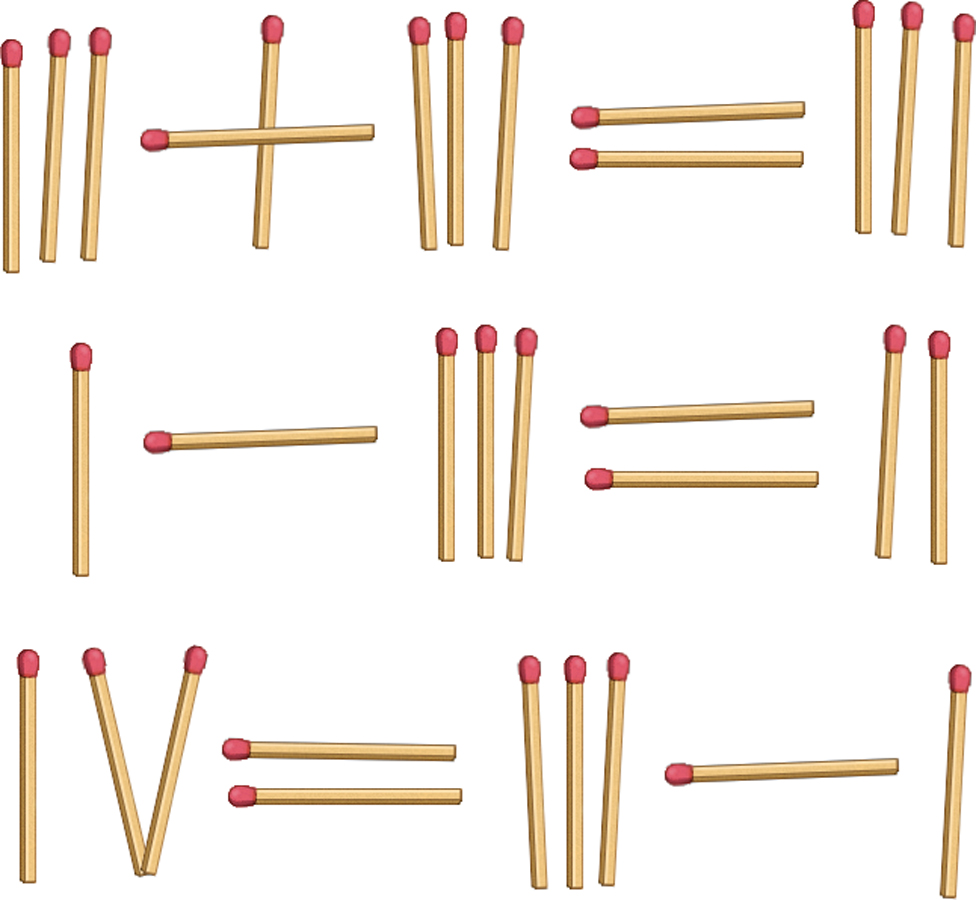

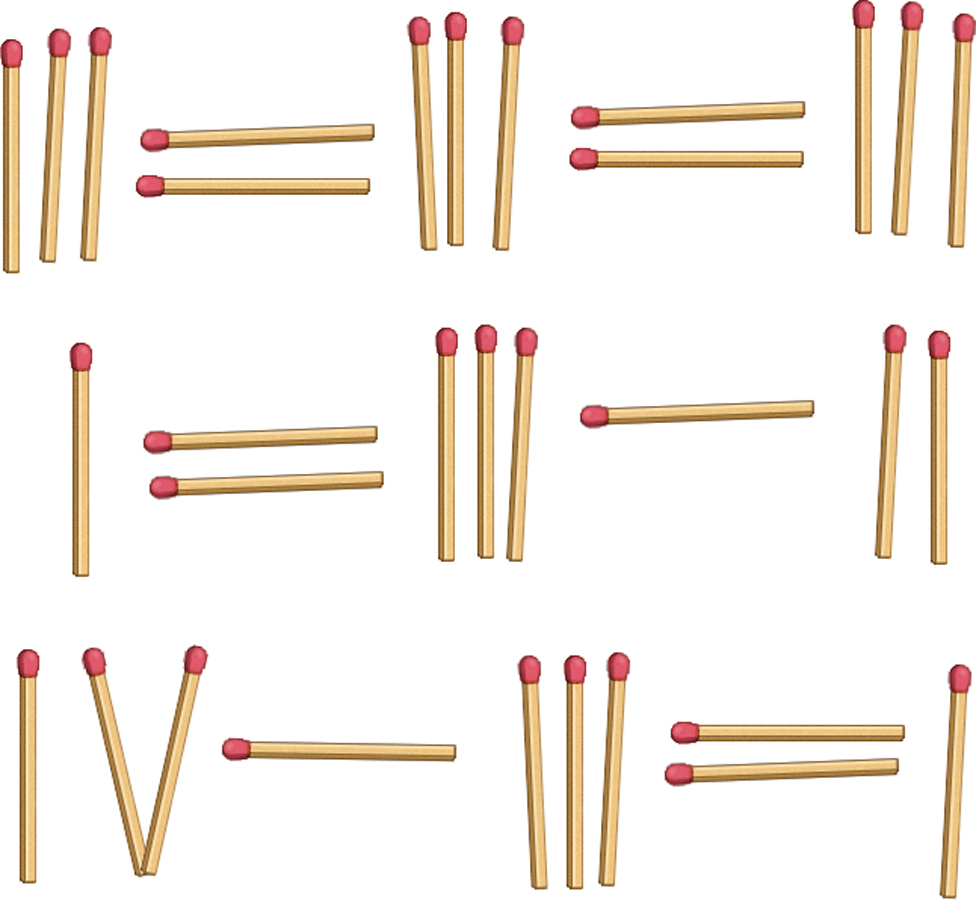

The solution to some problems seems to arrive in a sudden realization, or flash of insight, that happens after you mull a problem over (Ohlsson, 2010; Öllinger & others, 2008). Sometimes an insight will occur when you recognize how the problem is similar to a previously solved problem. Or an insight can involve the sudden realization that an object can be used in a novel way. Try your hand at the two problems in FIGURE 7.3. The solution to each of those problems is often achieved by insight.

insight

The sudden realization of how a problem can be solved.

Insights rarely occur through the conscious manipulation of concepts or information. In fact, you’re usually not aware of the thought processes that lead to an insight. Increasingly, cognitive psychologists and neuroscientists are investigating nonconscious processes, including unconscious problem solving, insight, and intuition (Hogarth, 2010). Intuition means coming to a conclusion or making a judgment without conscious awareness of the thought processes involved.

intuition

Coming to a conclusion or making a judgment without conscious awareness of the thought processes involved.

One influential model of intuition is the two-stage model (Bowers & others, 1990; Hodgkinson & others, 2008). In the first stage, called the guiding stage, you perceive a pattern in the information you’re considering, but not consciously. The perception of such patterns is based on your expertise in a given area and your memories of related information.

In the second stage, the integrative stage, a representation of the pattern becomes conscious, usually in the form of a hunch or hypothesis. At this point, conscious analytic thought processes take over. You systematically attempt to prove or disprove the hypothesis. For example, an experienced doctor might integrate both obvious and subtle cues to recognize a pattern in a patient’s symptoms, a pattern that takes the form of a hunch or an educated guess. Once the hunch is consciously formulated, she might order lab tests to confirm or disprove her tentative diagnosis.

An intuitive hunch, then, is a new idea that integrates new information with existing knowledge stored in long-term memory. Such hunches are likely to be accurate only in contexts in which you already have a broad base of knowledge and experience (Jones, 2003; M. Lieberman, 2000).

Obstacles to Solving Problems: THINKING OUTSIDE THE BOX

Sometimes, past experience or expertise in a particular domain can actually interfere with effective problem solving. If we’re used to always doing something in a particular way, we may not be open to new or better solutions. When we can’t move beyond old, inappropriate heuristics, ideas, or problem-solving strategies, fixation can block the generation of new, more effective approaches (Moss & others, 2011; Storm & Angello, 2010).

When we view objects as functioning only in the usual or customary way, we’re engaging in a tendency called functional fixedness. Functional fixedness often prevents us from seeing the full range of ways in which an object can be used. To get a feel for how functional fixedness can interfere with your ability to find a solution, try the problem in FIGURE 7.4.

functional fixedness

The tendency to view objects as functioning only in their usual or customary way.

For example, consider the problem of disposing of plastic bags, which take decades to centuries to degrade and which clog landfills and waterways. Hundreds of U.S. cities, including Los Angeles and Chicago, have dealt with the problem by passing ordinances banning or restricting the bags’ use. Functional fixedness kept people from thinking of the bags as anything but trash. But it turns out that the indestructible nature of these single-use bags can be advantageous: the bags can be turned into “plarn,” a plastic yarn that can be repurposed to create durable, waterproof sleeping mats for the homeless. A Chicago-based group, New Life for Old Bags, estimates that it takes between 600 and 700 plastic bags to create one six-by-two-foot sleeping mat; the mats are distributed to homeless shelters throughout the city (Stuart, 2013).

Another common obstacle to problem solving is mental set—the tendency to persist in solving problems with solutions that have worked in the past (Öllinger & others, 2008). Obviously, if a solution has worked in the past, there’s good reason to consider using it again. However, if we approach a problem with a rigid mental set, we may not see other possible solutions (Kershaw & Ohlsson, 2004).

mental set

The tendency to persist in solving problems with solutions that have worked in the past.

Ironically, mental set is sometimes most likely to block insight in areas in which you are already knowledgeable or well trained. Before you read any further, try solving the simple arithmetic problems in FIGURE 7.5. If you’re having trouble coming up with the answer, it’s probably because your existing training in solving arithmetic problems is preventing you from seeing the equations from a perspective other than the one you were taught (Knoblich & Öllinger, 2006; Öllinger & others, 2008).

Mental sets can sometimes suggest a useful heuristic. But they can also prevent us from coming up with new, and possibly more effective, solutions. If we try to be flexible in our thinking and overcome the tendency toward mental sets, we can often identify simpler solutions to many common problems.

Decision-Making Strategies

KEY THEME

Different cognitive strategies are used when making decisions, depending on the type and number of options available to us.

KEY QUESTIONS

What are the single-feature model, the additive model, and the elimination-by-aspects model of decision making?

Under what conditions is each strategy most appropriate?

How do we use the availability and representativeness heuristics to help us estimate the likelihood of an event?

Who hasn’t felt like flipping a coin when faced with an important or complicated decision? Fortunately, most of the decisions we make in everyday life are relatively minor. But every now and then we have to make a decision where much more is at stake. When a decision is important or complex, we’re more likely to invest time, effort, and other resources in considering different options.

The decision-making process becomes complicated when each option involves the consideration of several features. It’s rare that one alternative is superior in every category. So, what do you do when each alternative has pros and cons? In this section, we’ll describe three common decision-making strategies.

THE SINGLE-FEATURE MODEL

One decision-making strategy is called the single-feature model. In order to simplify the choice among many alternatives, you base your decision on a single feature. When the decision is a minor one, the single-feature model can be a good decision-making strategy. For example, faced with an entire supermarket aisle of laundry detergents, you could simplify your decision by deciding to buy the cheapest brand. When a decision is important or complex, however, making decisions on the basis of a single feature can increase the riskiness of the decision.
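The single-feature model amounts to ranking options on one attribute and ignoring everything else. The sketch below uses a made-up detergent aisle; the brands, prices, load counts, and the function name are hypothetical.

```python
# Hypothetical supermarket aisle.
DETERGENTS = [
    {"brand": "Brand A", "price": 12.49, "loads": 64},
    {"brand": "Brand B", "price": 8.99, "loads": 40},
    {"brand": "Brand C", "price": 10.75, "loads": 72},
]


def single_feature_choice(options, feature, best=min):
    """Decide on one feature only (e.g., lowest price) and ignore all the others."""
    return best(options, key=lambda option: option[feature])


if __name__ == "__main__":
    print(single_feature_choice(DETERGENTS, "price")["brand"])            # cheapest bottle
    print(single_feature_choice(DETERGENTS, "loads", best=max)["brand"])  # most loads
```

In this made-up data, Brand B is the cheapest bottle but not the cheapest per load (Brand C is), a small example of what focusing on a single feature can hide.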

THE ADDITIVE MODEL

A better strategy for complex decisions is to systematically evaluate the important features of each alternative. One such decision-making model is called the additive model.

In this model, you first generate a list of the factors that are most important to you. For example, suppose you need off-campus housing. Your list of important factors might include cost, proximity to campus, compatibility with roommates, or having a private bathroom. Then, you rate each alternative for each factor using an arbitrary scale, such as from –5 to +5. If a particular factor has strong advantages or appeal, such as compatible roommates, you give it the maximum rating (+5). If a particular factor has strong drawbacks or disadvantages, such as distance from campus, you give it the minimum rating (–5). Finally, you add up the ratings for each alternative. This strategy can often reveal the best overall choice. If some factors are more important to you than others, you can emphasize them by multiplying their ratings by a weight that reflects their importance.

Taking the time to apply the additive model to important decisions can greatly improve your decision making. By allowing you to evaluate the features of one alternative at a time, then comparing the alternatives, the additive model provides a logical strategy for identifying the most acceptable choice from a range of possible decisions. Although we seldom formally calculate the subjective value of individual features for different options, we often informally use the additive model by comparing two choices feature by feature. The alternative with the “best” collection of features is then selected.
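The additive model translates directly into a sum of ratings, with optional weights for factors that matter more. This sketch assumes the –5 to +5 scale described above; the housing options, their ratings, and the function names are hypothetical.

```python
RATING_SCALE = range(-5, 6)  # the -5 to +5 scale from the text


def additive_score(ratings, weights=None):
    """Add up an option's ratings; a weight above 1 emphasizes a factor (default weight 1)."""
    weights = weights or {}
    for factor, rating in ratings.items():
        if rating not in RATING_SCALE:
            raise ValueError(f"{factor}: rating {rating} is outside -5..+5")
    return sum(rating * weights.get(factor, 1) for factor, rating in ratings.items())


def best_by_additive(options, weights=None):
    """Return the name of the option with the highest (optionally weighted) total."""
    return max(options, key=lambda name: additive_score(options[name], weights))


# Hypothetical housing options rated on the factors mentioned in the text.
HOUSING = {
    "Maple St.": {"cost": 2, "distance": -5, "roommates": 5, "bathroom": 3},
    "Campus View": {"cost": -3, "distance": 5, "roommates": 1, "bathroom": -2},
    "Oak Ave.": {"cost": 4, "distance": 1, "roommates": -1, "bathroom": 0},
}

if __name__ == "__main__":
    print(best_by_additive(HOUSING))                           # every factor counts equally
    print(best_by_additive(HOUSING, weights={"distance": 3}))  # distance counts triple
```

Unweighted, Maple St. wins (total 5 versus 4 and 1). Tripling the weight on distance shifts the choice to Campus View, showing how weighting lets the more important factors dominate.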

THE ELIMINATION-BY-ASPECTS MODEL

Psychologist Amos Tversky (1972) proposed another decision-making model called the elimination-by-aspects model. Using this model, you evaluate all of the alternatives one characteristic at a time, typically starting with the feature you consider most important. If a particular alternative fails to meet that criterion, you scratch it off your list of possible choices, even if it possesses other desirable attributes. As the range of possible choices is narrowed down, you continue to compare the remaining alternatives, one feature at a time, until just one alternative is left.

For example, suppose you want to buy a new computer. You might initially eliminate all the models that aren’t powerful enough to run the software you need to use, then the models outside your budget, and so forth. Continuing in this fashion, you would progressively narrow down the range of possible choices to the one choice that satisfies all your criteria.
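The elimination-by-aspects procedure can be sketched as a series of filters applied in order of importance, stopping once one alternative remains. This follows the deterministic, most-important-first version described here; Tversky’s (1972) original model selects aspects probabilistically. The computer models, their specs, and the cutoffs are hypothetical.

```python
# Hypothetical computer models.
COMPUTERS = [
    {"model": "A", "ram_gb": 8, "price": 650},
    {"model": "B", "ram_gb": 16, "price": 1400},
    {"model": "C", "ram_gb": 16, "price": 900},
    {"model": "D", "ram_gb": 32, "price": 1100},
    {"model": "E", "ram_gb": 4, "price": 400},
]

# Aspects in order of importance: (description, test an option must pass).
ASPECTS = [
    ("powerful enough for my software", lambda c: c["ram_gb"] >= 16),
    ("within my budget", lambda c: c["price"] <= 1000),
]


def eliminate_by_aspects(options, aspects):
    """Check one aspect at a time, most important first. Scratch off every option
    that fails it, even if it has other attractive features. Stop at one option."""
    remaining = list(options)
    for _description, passes in aspects:
        if len(remaining) <= 1:
            break
        remaining = [option for option in remaining if passes(option)]
    return remaining


if __name__ == "__main__":
    print([c["model"] for c in eliminate_by_aspects(COMPUTERS, ASPECTS)])
```

The first aspect leaves B, C, and D; the budget then removes B and D (even though D has the most memory), leaving only C.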

Good decision makers adapt their strategy to the demands of the specific situation. If there are just a few choices and features to compare, people tend to use the additive method, at least informally. However, when the decision is complex, involving the comparison of many choices that have multiple features, people often use more than one strategy. That is, we usually begin by focusing on the critical features, using the elimination-by-aspects strategy to quickly narrow down the range of acceptable choices. Once we have narrowed the list of choices down to a more manageable short list, we tend to use the additive model to make a final decision.
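The two-stage approach (elimination by aspects to build a short list, then the additive model to choose among the finalists) can be sketched as follows. The apartments, their rents, lease lengths, –5 to +5 ratings, and the "must-have" rules are all hypothetical.

```python
def shortlist(options, must_haves):
    """Stage 1, elimination by aspects: drop every option that fails a critical feature."""
    for passes in must_haves:
        options = [option for option in options if passes(option)]
    return options


def additive_pick(options, weights=None):
    """Stage 2, additive model: total each finalist's ratings and return the best name."""
    weights = weights or {}

    def total(option):
        return sum(r * weights.get(f, 1) for f, r in option["ratings"].items())

    return max(options, key=total)["name"]


# Hypothetical apartments: hard facts for stage 1, -5..+5 ratings for stage 2.
APARTMENTS = [
    {"name": "Maple St.", "rent": 900, "lease_months": 9,
     "ratings": {"distance": -2, "roommates": 4, "bathroom": 3}},
    {"name": "Campus View", "rent": 1200, "lease_months": 9,
     "ratings": {"distance": 5, "roommates": 2, "bathroom": 4}},
    {"name": "Oak Ave.", "rent": 850, "lease_months": 12,
     "ratings": {"distance": 4, "roommates": 3, "bathroom": 2}},
    {"name": "Elm Ct.", "rent": 950, "lease_months": 9,
     "ratings": {"distance": 3, "roommates": 1, "bathroom": -1}},
]

MUST_HAVES = [
    lambda a: a["rent"] <= 1000,       # critical feature 1: affordable
    lambda a: a["lease_months"] <= 9,  # critical feature 2: academic-year lease
]

if __name__ == "__main__":
    finalists = shortlist(APARTMENTS, MUST_HAVES)
    print([a["name"] for a in finalists])
    print(additive_pick(finalists))
```

Campus View and Oak Ave. have the highest rating totals, but each fails a critical feature and never reaches the additive stage; between the two finalists, Maple St. scores 5 to Elm Ct.’s 3.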

Decisions Involving Uncertainty: ESTIMATING THE PROBABILITY OF EVENTS

Some decisions involve a high degree of uncertainty. In these cases, you need to make a decision, but you are unable to predict with certainty whether a given event will occur. Instead, you have to estimate the probability of an event occurring. But how do you actually make that estimation?

For example, imagine that you’re running late for a very important appointment. You may be faced with this decision: “Should I risk a speeding ticket to get to the appointment on time?” In this case, you would have to estimate the probability of a particular event occurring—getting pulled over for speeding.

In such instances, we often estimate the likelihood that certain events will occur, then gamble. In deciding what the odds are that a particular gamble will go our way, we tend to rely on two rule-of-thumb strategies to help us estimate the likelihood of events: the availability heuristic and the representativeness heuristic (Tversky & Kahneman, 1982; Kahneman, 2003).

THE AVAILABILITY HEURISTIC

When we use the availability heuristic, we estimate the likelihood of an event on the basis of how readily available other instances of the event are in our memory. When instances of an event are easily recalled, we tend to consider the event as being more likely to occur. So, we’re less likely to exceed the speed limit if we can readily recall that a friend recently got a speeding ticket.

availability heuristic

A strategy in which the likelihood of an event is estimated on the basis of how readily available other instances of the event are in memory.

However, when a rare event makes a vivid impression on us, we may overestimate its likelihood (Tversky & Kahneman, 1982). State lottery commissions capitalize on this cognitive tendency by running many TV commercials showing that lucky person who won the $100 million Powerball jackpot. A vivid memory is created, which leads viewers to an inaccurate estimate of the likelihood that the event will happen to them.

The key point here is that the less accurately our memory of an event reflects the actual frequency of the event, the less accurate our estimate of the event’s likelihood will be. That’s why the lottery commercials don’t show the other 50 million people staring dejectedly at their TV screens because they did not win the $100 million.

THE REPRESENTATIVENESS HEURISTIC

The other heuristic we often use to make estimates is called the representativeness heuristic (Kahneman & Tversky, 1982; Kahneman, 2003). Here, we estimate an event’s likelihood by comparing how similar its essential features are to our prototype of the event. Remember, a prototype is the most typical example of an object or an event.

representativeness heuristic

A strategy in which the likelihood of an event is estimated by comparing how similar it is to the prototype of the event.

To go back to our example of deciding whether to speed, we are more likely to risk speeding if we think that we’re somehow significantly different from the prototype of the driver who gets a speeding ticket. If our prototype of a speeder is a teenager driving a flashy, high-performance car, and we’re an adult driving a minivan with a baby seat, then we will probably estimate the likelihood of our getting a speeding ticket as low.

Like the availability heuristic, the representativeness heuristic can lead to inaccurate judgments. Consider the following description:

Maria is a perceptive, sensitive, introspective woman. She is very articulate, but measures her words carefully. Once she’s certain she knows what she wants to say, she expresses herself easily and confidently. She has a strong preference for working alone.

On the basis of this description, is it more likely that Maria is a successful fiction writer or that Maria is a registered nurse? Most people guess that she is a successful fiction writer. Why? Because the description seems to mesh with what many people think of as the typical characteristics of a writer.

However, when you compare the number of registered nurses (which is very large) to the number of successful female fiction writers (which is very small), it’s actually much more likely that Maria is a nurse. Thus, the representativeness heuristic can produce faulty estimates if (1) we fail to consider possible variations from the prototype or (2) we fail to consider approximately how many members of each category actually exist.
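Why the sheer number of nurses matters can be made concrete with Bayes’ rule in odds form: the odds that Maria is a nurse rather than a writer equal the base-rate odds (nurses per successful writer) divided by how many times better the description fits a writer. The two input numbers below are hypothetical, chosen only to show the arithmetic; they are not real statistics.

```python
def posterior_odds_nurse(nurses_per_writer, fit_writer_over_nurse):
    """Bayes' rule in odds form, for nurse versus writer.

    nurses_per_writer:     base-rate (prior) odds, i.e., nurses per successful writer
    fit_writer_over_nurse: how many times more likely the description is for a writer
    Returns the odds that Maria is a nurse rather than a writer.
    """
    return nurses_per_writer / fit_writer_over_nurse


def odds_to_probability(odds):
    """Convert odds of x to 1 into a probability."""
    return odds / (1 + odds)


if __name__ == "__main__":
    # Hypothetical: 100 nurses per successful female fiction writer, and the
    # description fits a writer 5 times better than it fits a nurse.
    odds = posterior_odds_nurse(100, 5)
    print(odds, round(odds_to_probability(odds), 3))
```

Even though the description favors "writer" five to one in this made-up case, the odds still favor "nurse" 20 to 1 (a probability of about 0.95), because the base rates are so lopsided.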

What determines which heuristic is more likely to be used? Research suggests that the availability heuristic is most likely to be used when people rely on information held in their long-term memory to determine the likelihood of events occurring. On the other hand, the representativeness heuristic is more likely to be used when people compare different variables to make predictions (Harvey, 2007).

The Critical Thinking box “The Persistence of Unwarranted Beliefs” below discusses some of the other psychological factors that can influence the way in which we evaluate evidence, make decisions, and draw conclusions.

CONCEPT REVIEW 7.1

Problem-Solving and Decision-Making Strategies

Identify the strategy used in each of the following examples. Choose from: trial and error, algorithm, heuristic, insight, representativeness heuristic, availability heuristic, and single-feature model.

1. As you try to spell the word deceive, you recite “i before e except after c.”

2. You are asked to complete the following sequence: O, T, T, F, F, _____, _____, _____, _____, _____. You suddenly realize that the answer is obvious: S, S, E, N, T.

3. One by one, Professor Goldstein tries each key on the key chain until she finds the one that opens the locked filing cabinet.

4. Laura is trying to construct a house out of LEGO building blocks. She tries blocks of different shapes until she finds one that fits.

5. After seeing news reports about a teenager being killed in a freak accident on a roller coaster, Angela refuses to allow her son to go to an amusement park with his friends.

6. Jacob decided to rent an apartment at the apartment complex that was closest to his college campus.

7. Jack, who works as a bank teller, is stunned when a well-dressed, elderly woman pulls out a gun and tells him to hand over all the money in his cash drawer.


CRITICAL THINKING

The Persistence of Unwarranted Beliefs

Throughout this text, we show that many pseudoscientific claims fail when subjected to scientific scrutiny. However, once a belief in a pseudoscience or paranormal phenomenon is established, the presentation of contradictory evidence often has little impact (Lester, 2000). Ironically, contradictory evidence can actually strengthen a person’s established beliefs (Lord & others, 1979). How do psychologists account for this?

Several psychological studies have explored how people deal with evidence, especially evidence that contradicts their beliefs (see Ross & Anderson, 1982; Zusne & Jones, 1989). The four obstacles to logical thinking described here can account for much of the persistence of unwarranted beliefs in pseudosciences or other areas (Risen & Gilovich, 2007).

Obstacle 1: The Belief-Bias Effect

The belief-bias effect occurs when people accept only the evidence that conforms to their belief, rejecting or ignoring any evidence that does not. For example, in a classic study conducted by Warren Jones and Dan Russell (1980), ESP believers and ESP disbelievers watched two attempts at telepathic communication. In each attempt, a “receiver” tried to indicate what card the “sender” was holding.

In reality, both attempts were rigged. One attempt was designed to appear to be a successful demonstration of telepathy, with a significant number of accurate responses. The other attempt was designed to convincingly demonstrate failure. In this case, the number of accurate guesses was no more than chance and could be produced by simple random guessing.

MYTH ▸ SCIENCE

Is it true that people tend to cling to their beliefs even when they are presented with solid evidence that contradicts those beliefs?

Following the demonstration, the participants were asked what they believed had taken place. Both believers and disbelievers indicated that ESP had occurred in the successful attempt. But only the believers said that ESP had also taken place in the clearly unsuccessful attempt. In other words, the ESP believers ignored or discounted the evidence in the failed attempt. This is the essence of the belief-bias effect.

Obstacle 2: Confirmation Bias

Confirmation bias is the strong tendency to search for information or evidence that confirms a belief, while making little or no effort to search for information that might disprove the belief (Gilovich, 1997; Masnick & Zimmerman, 2009). For example, we tend to visit Web sites that support our own viewpoints and read blogs and editorial columns written by people who interpret events from our perspective. At the same time, we avoid the Web sites, blogs, and columns written by people who don’t see things our way (Ruscio, 1998).

confirmation bias

The tendency to seek out evidence that confirms an existing belief while ignoring evidence that might contradict or undermine that belief.

People also tend to believe evidence that confirms what they want to believe is true, a bias that is sometimes called the wishful thinking bias (Bastardi & others, 2011). Faced with evidence that seems to contradict a hoped-for finding, people may object to the study’s methodology. And when evaluating evidence that seems to confirm a wished-for finding, people may overlook flaws in the research or argument. For example, parents with children in day care may be motivated to embrace research findings that emphasize the benefits of day care for young children and discount findings that emphasize the benefits of home-based care.

Obstacle 3: The Fallacy of Positive Instances

The fallacy of positive instances is the tendency to remember uncommon events that seem to confirm our beliefs and to forget events that disconfirm our beliefs. Often, the occurrence is really nothing more than coincidence. For example, you find yourself thinking of an old friend. A few moments later, the phone rings and it’s him. You remember this seemingly telepathic event but forget all the times that you’ve thought of your old friend and he did not call. In other words, you remember the positive instance but fail to notice the negative instances when the anticipated event did not occur (Gilovich, 1997).

Obstacle 4: The Overestimation Effect

The tendency to overestimate the rarity of events is referred to as the overestimation effect. Suppose a “psychic” comes to your class of 23 students. Using his psychic abilities, the visitor “senses” that two people in the class were born on the same day. A quick survey finds that, indeed, two people share the same month and day of birth. This is pretty impressive evidence of clairvoyance, right? After all, what are the odds that two people in a class of 23 would have the same birthday?

When we perform this “psychic” demonstration in class, our students usually estimate that it is very unlikely that two people in a class of 23 will share a birthday. In reality, the odds are 1 in 2, or 50–50.
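The 1-in-2 figure for a class of 23 can be checked directly. The usual calculation finds the probability that all birthdays are different and subtracts it from 1. This sketch assumes all 365 birthdays are equally likely and ignores leap days; the function name is illustrative.

```python
def prob_shared_birthday(n, days=365):
    """Probability that at least two of n people share a birthday.

    Assumes each of `days` birthdays is equally likely (leap days ignored).
    Computes the chance that all n birthdays differ, then subtracts it from 1.
    """
    p_all_different = 1.0
    for k in range(n):
        # Person k+1 must avoid the k birthdays already taken.
        p_all_different *= (days - k) / days
    return 1 - p_all_different


if __name__ == "__main__":
    for n in (10, 23, 30, 50):
        print(n, round(prob_shared_birthday(n), 3))
```

For 23 people the result is about 0.507, just over one half; the probability climbs quickly as the class grows, which is why a shared birthday in a class this size is not evidence of psychic ability.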

Thinking Critically About the Evidence

On the one hand, it is important to keep an open mind. Simply dismissing an idea as impossible shuts out the consideration of evidence for new and potentially promising ideas or phenomena. At one time, for example, scientists thought it impossible that rocks could fall from the sky (Hines, 2003).

On the other hand, the obstacles described here underscore the importance of choosing ways to gather and think about evidence that will help us avoid unwarranted beliefs and self-deception.

The critical thinking skills we described in Chapter 1 are especially useful in this respect. The boxes “What Is a Pseudoscience?” and “How to Think Like a Scientist” provided guidelines that can be used to evaluate all claims, including pseudoscientific or paranormal claims. In particular, it’s important to stress again that good critical thinkers strive to evaluate all the available evidence before reaching a conclusion, not just the evidence that supports what they want to believe.

CRITICAL THINKING QUESTIONS

How can using critical thinking skills help you avoid these obstacles to logical thinking?

Beyond the logical fallacies described here, what might motivate people to maintain beliefs in the face of contradictory evidence?