4.4 4.4 Means and Variances of Random Variables

When you complete this section, you will be able to:

• Use a probability distribution to find the mean of a discrete random variable.

• Apply the law of large numbers to describe the behavior of the sample mean as the sample size increases.

• Find means using the rules for means of linear transformations, sums, and differences.

• Use a probability distribution to find the variance and the standard deviation of a discrete random variable.

• Find variances and standard deviations using the rules for variances and standard deviations for linear transformations.

• Find variances and standard deviations using the rules for variances and standard deviations for sums of and differences between two random variables and for uncorrelated and for correlated random variables.

The probability histograms and density curves that picture the probability distributions of random variables resemble our earlier pictures of distributions of data. In describing data, we moved from graphs to numerical measures such as means and standard deviations. Now we will make the same move to expand our descriptions of the distributions of random variables. We can speak of the mean winnings in a game of chance or the standard deviation of the randomly varying number of calls a travel agency receives in an hour. In this section, we will learn more about how to compute these descriptive measures and about the laws they obey.

The mean of a random variable

In Chapter 1 (page 28), we learned that the mean is the average of the observations in a sample. Recall that a random variable X is a numerical outcome of a random process. Think about repeating the random process many times and recording the resulting values of the random variable. You can think of the value of a random variable as the average of a very large sample where the relative frequencies of the values are the same as their probabilities.

247

If we think of the random process as corresponding to the population, then the mean of the random variable is a characteristic of this population. Here is an example.

EXAMPLE 4.27

The Tri-State Pick 3 lottery. Most states and Canadian provinces have government-sponsored lotteries. Here is a simple lottery wager from the Tri-State Pick 3 game that New Hampshire shares with Maine and Vermont. You choose a three-digit number, 000 to 999. The state chooses a three-digit winning number at random and pays you $500 if your number is chosen.

Because there are 1000 three-digit numbers, you have probability 1/1000 of winning. Taking X to be the amount your ticket pays you, the probability distribution of X is

| Payoff X | $0 | $500 |

| Probability | 0.999 | 0.001 |

The random process consists of drawing a three-digit number. The population consists of the numbers 000 to 999. Each of these possible outcomes is equally likely in this example. In the setting of sampling in Chapter 3 (page 191), we can view the random process as selecting an SRS of size 1 from the population. The random variable X is 1 if the selected number is equal to the one that you chose and 0 if it is not.

What is your average payoff from many tickets? The ordinary average of the two possible outcomes $0 and $500 is $250, but that makes no sense as the average because $500 is much less likely than $0. In the long run, you receive $500 once in every 1000 tickets and $0 on the remaining 999 of 1000 tickets. The long-run average payoff is

or 50 cents. That number is the mean of the random variable X. (Tickets cost $1, so in the long run, the state keeps half the money you wager.)

If you play Tri-State Pick 3 several times, we would—as usual—call the mean of the actual amounts you win . The mean in Example 4.27 is a different quantity—it is the long-run average winnings you expect if you play a very large number of times.

USE YOUR KNOWLEDGE

Question 4.63

4.63 Find the mean of the probability distribution. You toss a fair coin. If the outcome is heads, you win $10.00; if the outcome is tails, you win nothing. Let X be the amount that you win in a single toss of a coin. Find the probability distribution of this random variable and its mean.

Just as probabilities are an idealized description of long-run proportions, the mean of a probability distribution describes the long-run average outcome. We can’t call this mean , so we need a different symbol. The common symbol for the mean of a probability distributionmean μ is μ, the Greek letter mu. We used μ in Chapter 1 for the mean of a Normal distribution, so this is not a new notation. We will often be interested in several random variables, each having a different probability distribution with a different mean.

248

To remind ourselves that we are talking about the mean of X, we often write μX rather than simply μ. In Example 4.27, μX = $0.50. Notice that, as often happens, the mean is not a possible value of X. You will often find the mean of a random variable X called the expected valueexpected value of X. This term can be misleading because we don’t necessarily expect one observation on X to be close to its expected value.

The mean of any discrete random variable is found just as in Example 4.27. It is an average of the possible outcomes, but a weighted average in which each outcome is weighted by its probability. Because the probabilities add to 1, we have total weight 1 to distribute among the outcomes. An outcome that occurs half the time has probability one-half and gets one-half the weight in calculating the mean. Here is the general definition.

MEAN OF A DISCRETE RANDOM VARIABLE

Suppose that X is a discrete random variable whose distribution is

| Value of X | x1 | x2 | x3 | . . . |

| Probability | p1 | p2 | p3 | . . . |

To find the mean of X, multiply each possible value by its probability, then add all the products:

EXAMPLE 4.28

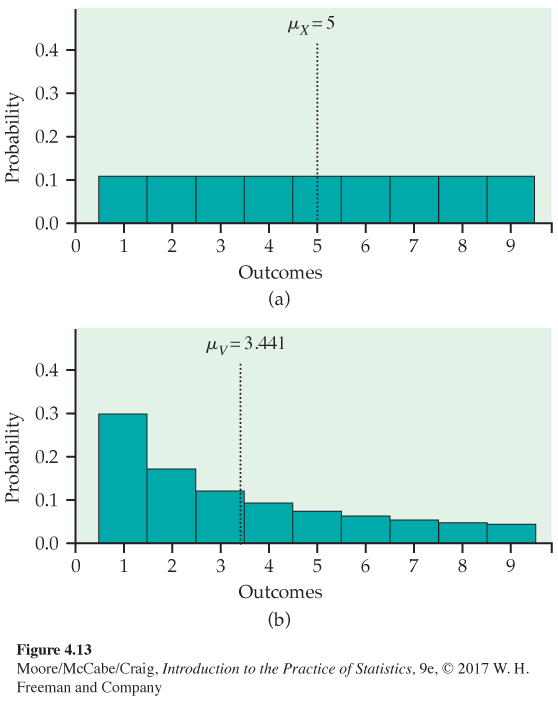

The mean of equally likely first digits. If first digits in a set of data all have the same probability, the probability distribution of the first digit X is then

| First digit X | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

| Probability | 1/9 | 1/9 | 1/9 | 1/9 | 1/9 | 1/9 | 1/9 | 1/9 | 1/9 |

The mean of this distribution is

Suppose that the random digits in Example 4.28 had a different probability distribution. In Example 4.12 (page 226), we described Benford’s law as a probability distribution that describes first digits of numbers in many real situations. Let’s calculate the mean for Benford’s law.

249

EXAMPLE 4.29

The mean of first digits that follow Benford’s law. Here is the distribution of the first digit for data that follow Benford’s law. We use the letter V for this random variable to distinguish it from the one that we studied in Example 4.28. The distribution of V is

| First digit V | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

| Probability | 0.301 | 0.176 | 0.125 | 0.097 | 0.079 | 0.067 | 0.058 | 0.051 | 0.046 |

The mean of V is

μV = (1)(0.301) + (2)(0.176) + (3)(0.125) + (4)(0.097) + (5)(0.079)

+ (6)(0.067) + (7)(0.058) + (8)(0.051) + (9)(0.046)

= 3.441

The mean reflects the greater probability of smaller first digits under Benford’s law than when first digits 1 to 9 are equally likely.

Figure 4.13 locates the means of X and V on the two probability histograms. Because the discrete uniform distribution of Figure 4.13(a) is symmetric, the mean lies at the center of symmetry. We can’t locate the mean of the right-skewed distribution of Figure 4.13(b) by eye—calculation is needed.

What about continuous random variables? The probability distribution of a continuous random variable X is described by a density curve. Chapter 1 (page 54) showed how to find the mean of the distribution: it is the point at which the area under the density curve would balance if it were made out of solid material. The mean lies at the center of symmetric density curves such as the Normal curves. Exact calculation of the mean of a distribution with a skewed density curve requires advanced mathematics.13 The idea that the mean is the balance point of the distribution applies to discrete random variables as well, but in the discrete case, we have a formula that gives us this point.

250

Statistical estimation and the law of large numbers

We would like to estimate the mean height μ of the population of all American women between the ages of 18 and 24 years. This μ is the mean μX of the random variable X obtained by choosing a young woman at random and measuring her height. To estimate μ, we choose an SRS of young women and use the sample mean to estimate the unknown population mean μ. In the language of Section 5.1 (page 282), μ is a parameter and is a statistic.

Statistics obtained from probability samples are random variables because their values vary in repeated sampling. The sampling distributions of statistics are just the probability distributions of these random variables.

It seems reasonable to use to estimate μ. An SRS should fairly represent the population, so the mean of the sample should be somewhere near the mean μ of the population. Of course, we don’t expect to be exactly equal to μ, and we realize that if we choose another SRS, the luck of the draw will probably produce a different .

If is rarely exactly right and varies from sample to sample, why is it nonetheless a reasonable estimate of the population mean μ? If we keep on adding observations to our random sample, the statistic is guaranteed to get as close as we wish to the parameter μ and then stay that close. We have the comfort of knowing that if we can afford to keep on measuring more women, eventually we will estimate the mean height of all young women very accurately. This remarkable fact is called the law of large numbers. It is remarkable because it holds for any population, not just for some special class such as Normal distributions.

LAW OF LARGE NUMBERS

Draw independent observations at random from any population with finite mean μ. Decide how accurately you would like to estimate μ. As the number of observations drawn increases, the mean of the observed values eventually approaches the mean μ of the population as closely as you specified and then stays that close.

The behavior of is similar to the idea of probability. In the long run, the proportion of outcomes taking any value gets close to the probability of that value, and the average outcome gets close to the distribution mean. Figure 4.1 (page 216) shows how proportions approach probability in one example. Here is an example of how sample means approach the distribution mean.

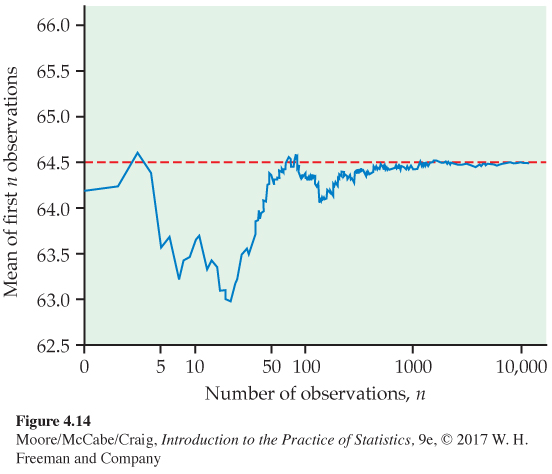

EXAMPLE 4.30

Heights of young women. The distribution of the heights of all young women is close to the Normal distribution with mean 64.5 inches and standard deviation 2.5 inches. Suppose that μ = 64.5 were exactly true.

251

Figure 4.14 shows the behavior of the mean height of n women chosen at random from a population whose heights follow the N(64.5, 2.5) distribution. The graph plots the values of as we add women to our sample. The first woman drawn had height 64.21 inches, so the line starts there. The second had height 64.35 inches, so for n = 2 the mean is

This is the second point on the line in the graph.

At first, the graph shows that the mean of the sample changes as we take more observations. Eventually, however, the mean of the observations gets close to the population mean μ = 64.5 and settles down at that value. The law of large numbers says that this always happens.

USE YOUR KNOWLEDGE

Question 4.64

![]()

4.64 Use the Law of Large Numbers applet. The Law of Large Numbers applet animates a graph like Figure 4.14 for rolling dice. Use it to better understand the law of large numbers by making a similar graph.

The mean μ of a random variable is the average value of the variable in two senses. By its definition, μ is the average of the possible values, weighted by their probability of occurring. The law of large numbers says that μ is also the long-run average of many independent observations on the variable. The law of large numbers can be proved mathematically starting from the basic laws of probability.

Thinking about the law of large numbers

The law of large numbers says broadly that the average results of many independent observations are stable and predictable. The gamblers in a casino may win or lose, but the casino will win in the long run because the law of large numbers says what the average outcome of many thousands of bets will be. An insurance company deciding how much to charge for life insurance and a fast-food restaurant deciding how many beef patties to prepare also rely on the fact that averaging over many individuals produces a stable result. It is worth the effort to think a bit more closely about so important a fact.

252

The “law of small numbers” Both the rules of probability and the law of large numbers describe the regular behavior of chance phenomena in the long run. Psychologists have discovered that our intuitive understanding of randomness is quite different from the true laws of chance.14 For example, most people believe in an incorrect “law of small numbers.” That is, we expect even short sequences of random events to show the kind of average behavior that in fact appears only in the long run.

Some teachers of statistics begin a course by asking students to toss a coin 50 times and bring the sequence of heads and tails to the next class. The teacher then announces which students just wrote down a random-looking sequence rather than actually tossing a coin. The faked tosses don’t have enough “runs” of consecutive heads or consecutive tails. Runs of the same outcome don’t look random to us but are, in fact, common. For example, the probability of a run of three or more consecutive heads or tails in just 10 tosses is greater than 0.8.15 The runs of consecutive heads or consecutive tails that appear in real coin tossing (and that are predicted by the mathematics of probability) seem surprising to us. Because we don’t expect to see long runs, we may conclude that the coin tosses are not independent or that some influence is disturbing the random behavior of the coin.

EXAMPLE 4.31

The “hot hand” in basketball. Belief in the law of small numbers influences behavior. If a basketball player makes several consecutive shots, both the fans and her teammates believe that she has a “hot hand” and is more likely to make the next shot. This is doubtful.

Careful study suggests that runs of baskets made or missed are no more frequent in basketball than would be expected if each shot were independent of the player’s previous shots. Baskets made or missed are just like heads and tails in tossing a coin. (Of course, some players make 30% of their shots in the long run and others make 50%, so a coin-toss model for basketball must allow coins with different probabilities of a head.) Our perception of hot or cold streaks simply shows that we don’t perceive random behavior very well.16

![]()

Our intuition doesn’t do a good job of distinguishing random behavior from systematic influences. This is also true when we look at data. We need statistical inference to supplement exploratory analysis of data because probability calculations can help verify that what we see in the data is more than a random pattern.

How large is a large number? The law of large numbers says that the actual mean outcome of many trials gets close to the distribution mean μ as more trials are made. It doesn’t say how many trials are needed to guarantee a mean outcome close to μ. That depends on the variability of the random outcomes. The more variable the outcomes, the more trials are needed to ensure that the mean outcome is close to the distribution mean μ. Casinos understand this: the outcomes of games of chance are variable enough to hold the interest of gamblers. Only the casino plays often enough to rely on the law of large numbers. Gamblers get entertainment; the casino has a business.

253

BEYOND THE BASICS

More Laws of Large Numbers

The law of large numbers is one of the central facts about probability. It helps us understand the mean μ of a random variable. It explains why gambling casinos and insurance companies make money. It assures us that statistical estimation will be accurate if we can afford enough observations. The basic law of large numbers applies to independent observations that all have the same distribution. Mathematicians have extended the law to many more general settings. Here are two of these.

Is there a winning system for gambling? Serious gamblers often follow a system of betting in which the amount bet on each play depends on the outcome of previous plays. You might, for example, double your bet on each spin of the roulette wheel until you win—or, of course, until your fortune is exhausted. Such a system tries to take advantage of the fact that you have a memory even though the roulette wheel does not. Can you beat the odds with a system based on the outcomes of past plays? No. Mathematicians have established a stronger version of the law of large numbers that says that, if you do not have an infinite fortune to gamble with, your long-run average winnings μ remain the same as long as successive trials of the game (such as spins of the roulette wheel) are independent.

What if observations are not independent? You are in charge of a process that manufactures video screens for computer monitors. Your equipment measures the tension on the metal mesh that lies behind each screen and is critical to its image quality. You want to estimate the mean tension μ for the process by the average of the measurements. Alas, the tension measurements are not independent. If the tension on one screen is a bit too high, the tension on the next is more likely to also be high. Many real-world processes are like this—the process stays stable in the long run, but two observations made close together are likely to both be above or both be below the long-run mean. Again the mathematicians come to the rescue: as long as the dependence dies out fast enough as we take measurements farther and farther apart in time, the law of large numbers still holds.

Rules for means

You are studying flaws in the painted finish of refrigerators made by your firm. Dimples and paint sags are two kinds of surface flaw. Not all refrigerators have the same number of dimples: many have none, some have one, some two, and so on. You ask for the average number of imperfections on a refrigerator. The inspectors report finding an average of 0.7 dimple and 1.4 sags per refrigerator. How many total imperfections of both kinds (on the average) are there on a refrigerator? That’s easy: if the average number of dimples is 0.7 and the average number of sags is 1.4, then counting both gives an average of 0.7 + 1.4 = 2.1 flaws.

In more formal language, the number of dimples on a refrigerator is a random variable X that varies as we inspect one refrigerator after another. We know only that the mean number of dimples is μX = 0.7. The number of paint sags is a second random variable Y having mean μX = 1.4. (As usual, the subscripts keep straight which variable we are talking about.) The total number of both dimples and sags is another random variable, the sum X + Y. Its mean μX+Y is the average number of dimples and sags together. It is just the sum of the individual means μX and μY. That’s an important rule for how means of random variables behave.

254

Here’s another rule. The crickets living in a field have mean length of 1.2 inches. What is the mean in centimeters? There are 2.54 centimeters in an inch, so the length of a cricket in centimeters is 2.54 times its length in inches. If we multiply every observation by 2.54, we also multiply their average by 2.54. The mean in centimeters must be 2.54 × 1.2, or about 3.05 centimeters. More formally, the length in inches of a cricket chosen at random from the field is a random variable X with mean μX. The length in centimeters is 2.54X, and this new random variable has mean 2.54μX.

The point of these examples is that means behave like averages. Here are the rules we need.

RULES FOR MEANS OF LINEAR TRANSFORMATIONS, SUMS, AND DIFFERENCES

Rule 1. If X is a random variable and a and b are fixed numbers, then

Rule 2. If X and Y are random variables, then

Rule 3. If X and Y are random variables, then

linear transformation, p. 44

Note that a + bX is a linear transformation of the random variable X.

EXAMPLE 4.32

How many courses? In Exercise 4.45 (page 244) you described the probability distribution of the number of courses taken in the fall by students at a small liberal arts college. Here is the distribution:

| Courses in the fall | 1 | 2 | 3 | 4 | 5 | 6 |

| Probability | 0.05 | 0.05 | 0.13 | 0.26 | 0.36 | 0.15 |

For the spring semester, the distribution is a little different.

| Courses in the spring | 1 | 2 | 3 | 4 | 5 | 6 |

| Probability | 0.06 | 0.08 | 0.15 | 0.25 | 0.34 | 0.12 |

For a randomly selected student, let X be the number of courses taken in the fall semester, and let Y be the number of courses taken in the spring semester. The means of these random variables are

255

μX = (1)(0.05) + (2)(0.05) + (3)(0.13) + (4)(0.26) + (5)(0.36) + (6)(0.15)

= 4.28

μY = (1)(0.06) + (2)(0.08) + (3)(0.15) + (4)(0.25) + (5)(0.34) + (6)(0.12)

= 4.09

The mean course load for the fall is 4.28 courses, and the mean course load for the spring is 4.09 courses. We assume that these distributions apply to students who earned credit for courses taken in the fall and the spring semesters. The mean of the total number of courses taken for the academic year is X + Y. Using Rule 2, we calculate the mean of the total number of courses:

μZ = μX + μY

= 4.28 + 4.09 = 8.37

Note that it is not possible for a student to take 8.37 courses in an academic year. This number is the mean of the probability distribution.

EXAMPLE 4.33

What about credit hours? In the previous exercise, we examined the number of courses taken in the fall and in the spring at a small liberal arts college. Suppose that we were interested in the total number of credit hours earned for the academic year. We assume that for each course taken at this college, three credit hours are earned. Let T be the mean of the distribution of the total number of credit hours earned for the academic year. What is the mean of the distribution of T? To find the answer, we can use Rule 1 with a = 0 and b = 3. Here is the calculation:

The mean of the distribution of the total number of credit hours earned is 25.11.

USE YOUR KNOWLEDGE

Question 4.65

4.65 Find μY. The random variable X has mean μX = 12. If Y = 12 + 6X, what is μY?

Question 4.66

4.66 Find μW. The random variable U has mean μU = 25, and the random variable V has mean μV = 25. If W = 0.5U + 0.5V, find μW.

The variance of a random variable

The mean is a measure of the center of a distribution. A basic numerical description requires, in addition, a measure of the spread or variability of the distribution. The variance and the standard deviation are the measures of spread that accompany the choice of the mean to measure center. Just as for the mean, we need a distinct symbol to distinguish the variance of a random variable from the variance s2 of a data set. We write the variance of a random variable X as . Once again, the subscript reminds us which variable we have in mind. The definition of the variance of a random variable is similar to the definition of the variance s2 given in Chapter 1 (page 38). That is, the variance is an average value of the squared deviation (X − μX)2 of the variable X from its mean μX. As for the mean, the average we use is a weighted average in which each outcome is weighted by its probability in order to take account of outcomes that are not equally likely. Calculating this weighted average is straightforward for discrete random variables but requires advanced mathematics in the continuous case. Here is the definition.

256

VARIANCE OF A DISCRETE RANDOM VARIABLE

Suppose that X is a discrete random variable whose distribution is

| Value of X | x1 | x2 | x3 | . . . |

| Probability | p1 | p2 | p3 | . . . |

and that μX is the mean of X. The variance of X is

The standard deviation σX of X is the square root of the variance.

EXAMPLE 4.34

Find the mean and the variance. In Example 4.32 (pages 254–255) , we saw that the distribution of the number X of fall courses taken by students at a small liberal arts college is

| Courses in the fall | 1 | 2 | 3 | 4 | 5 | 6 |

| Probability | 0.05 | 0.05 | 0.13 | 0.26 | 0.36 | 0.15 |

We can find the mean and variance of X by arranging the calculation in the form of a table. Both μX and are sums of columns in this table.

| xi | pi | xi pi | (xi − μX)2pi |

|---|---|---|---|

| 1 | 0.05 | 0.05 | (1 − 4.28)2(0.05) = 0.53792 |

| 2 | 0.05 | 0.10 | (2 − 4.28)2(0.05) = 0.25992 |

| 3 | 0.13 | 0.39 | (3 − 4.28)2 (0.13) = 0.21299 |

| 4 | 0.26 | 1.04 | (4 − 4.28)2(0.26) = 0.02038 |

| 5 | 0.36 | 1.80 | (5 − 4.28)2(0.36) = 0.18662 |

| 6 | 0.15 | 0.90 | (6 − 4.28)2(0.15) = 0.44376 |

| μX = 4.28 | = 1.662 | ||

We see that = 1.662. The standard deviation of X is . The standard deviation is a measure of the variability of the number of fall courses taken by the students at the small liberal arts college. As in the case of distributions for data, the standard deviation of a probability distribution is easiest to understand for Normal distributions.

257

USE YOUR KNOWLEDGE

Question 4.67

4.67 Find the variance and the standard deviation. The random variable X has the following probability distribution:

| Value of X | 0 | 3 |

| Probability | 0.3 | 0.7 |

Find the variance and the standard deviation σX for this random variable.

Rules for variances and standard deviations

![]()

What are the facts for variances that parallel Rules 1, 2, and 3 for means? The mean of a sum of random variables is always the sum of their means, but this addition rule is true for variances only in special situations. To understand why, take X to be the percent of a family’s after-tax income that is spent, and take Y to be the percent that is saved. When X increases, Y decreases by the same amount. Though X and Y may vary widely from year to year, their sum X + Y is always 100% and does not vary at all. It is the association between the variables X and Y that prevents their variances from adding.

If random variables are independent, this kind of association between their values is ruled out and their variances do add. Two random variables X and Y are independentindependence if knowing that any event involving X alone did or did not occur tells us nothing about the occurrence of any event involving Y alone.

Probability models often assume independence when the random variables describe outcomes that appear unrelated to each other. You should ask in each instance whether the assumption of independence seems reasonable.

When random variables are not independent, the variance of their sum depends on the correlationcorrelation between them as well as on their individual variances. In Chapter 2, we met the correlation r between two observed variables measured on the same individuals. We defined (page 101) the correlation r as an average of the products of the standardized x and y observations. The correlation between two random variables is defined in the same way, once again using a weighted average with probabilities as weights. We won’t give the details—it is enough to know that the correlation between two random variables has the same basic properties as the correlation r calculated from data. We use ρ, the Greek letter rho, for the correlation between two random variables. The correlation ρ is a number between −1 and 1 that measures the direction and strength of the linear relationship between two variables. The correlation between two independent random variables is zero.

Returning to family finances, if X is the percent of a family’s after-tax income that is spent and Y is the percent that is saved, then Y = 100 − X. This is a perfect linear relationship with a negative slope, so the correlation between X and Y is ρ = −1. With the correlation at hand, we can state the rules for manipulating variances.

258

RULES FOR VARIANCES AND STANDARD DEVIATIONS OF LINEAR TRANSFORMATIONS, SUMS, AND DIFFERENCES

Rule 1. If X is a random variable and a and b are fixed numbers, then

Rule 2. If X and Y are independent random variables, then

This is the addition rule for variances of independent random variables.

Rule 3. If X and Y have correlation ρ, then

This is the general addition rule for variances of random variables.

To find the standard deviation, take the square root of the variance.

![]()

Because a variance is the average of squared deviations from the mean, multiplying X by a constant b multiplies by the square of the constant. Adding a constant a to a random variable changes its mean but does not change its variability. The variance of X + a is, therefore, the same as the variance of X. Because the square of −1 is 1, the addition rule says that the variance of a difference between independent random variables is the sum of the variances. For independent random variables, the difference X − Y is more variable than either X or Y alone because variations in both X and Y contribute to variation in their difference.

![]()

As with data, we prefer the standard deviation to the variance as a measure of the variability of a random variable. Rule 2 for variances implies that standard deviations of independent random variables do not add. To combine standard deviations, use the rules for variances. For example, the standard deviations of 2X and −2X are both equal to 2σX because this is the square root of the variance .

EXAMPLE 4.35

Payoff in the Tri-State Pick 3 lottery. The payoff X of a $1 ticket in the Tri-State Pick 3 game is $500 with probability 1/1000 and 0 the rest of the time. Here is the combined calculation of mean and variance:

| xi | pi | xi pi | (xi − μX)2pi | |

|---|---|---|---|---|

| 0 | 0.999 | 0.0 | (0 − 0.5)2(0.999) = | 0.24975 |

| 500 | 0.001 | 0.5 | (500 − 0.5)2(0.001) = | 249.50025 |

| μX = 0.5 | = 249.75 | |||

The mean payoff is 50 cents. The standard deviation is 15.80. It is usual for games of chance to have large standard deviations because large variability makes gambling exciting.

259

If you buy a Pick 3 ticket, your winnings are W = X − 1 because the dollar you paid for the ticket must be subtracted from the payoff. Let’s find the mean and variance for this random variable.

EXAMPLE 4.36

Winnings in the Tri-State Pick 3 lottery. By the rules for means, the mean amount you win is

μW = μX − 1 = −$0.50

That is, you lose an average of 50 cents on a ticket. The rules for variances remind us that the variance and standard deviation of the winnings W = X −1 are the same as those of X. Subtracting a fixed number changes the mean but not the variance.

Suppose now that you buy a $1 ticket on each of two different days. The payoffs X and Y on the two tickets are independent because separate drawings are held each day. Your total payoff is X + Y. Let’s find the mean and standard deviation for this payoff.

EXAMPLE 4.37

Two tickets. The mean for the payoff for the two tickets is

μX+Y = μX + μY = $0.50 + $0.50 = $1.00

Because X and Y are independent, the variance of X + Y is

The standard deviation of the total payoff is

This is not the same as the sum of the individual standard deviations, which is $15.80 + $15.80 = $31.60. Variances of independent random variables add; standard deviations do not.

When we add random variables that are correlated, we need to use the correlation for the calculation of the variance but not for the calculation of the mean. Here is an example.

EXAMPLE 4.38

Utility bills. Consider a household where the monthly bill for natural-gas averages $125 with a standard deviation of $75, while the monthly bill for electricity averages $174 with a standard deviation of $41. The correlation between the two bills is −0.55.

Let’s compute the mean and standard deviation of the sum of the natural-gas bill and the electricity bill. We let X stand for the natural-gas bill and Y stand for the electricity bill. Then the total is X + Y. Using the rules for means, we have

μX+Y = μX + μY = 125 + 174 = 299

To find the standard deviation, we first find the variance and then take the square root to determine the standard deviation. From the general addition rule for variances of random variables,

260

Therefore, the standard deviation is

The total of the natural-gas bill and the electricity bill has mean $299 and standard deviation $63.

The negative correlation in Example 4.38 is due to the fact that, in this household, natural gas is used for heating and electricity is used for air-conditioning. So, when it is warm, the electricity charges are high and the natural-gas charges are low. When it is cool, the reverse is true. This causes the standard deviation of the sum to be less than it would be if the two bills were uncorrelated (see Exercise 4.79, page 263).

There are situations where we need to combine several of our rules to find means and standard deviations. Here is an example.

EXAMPLE 4.39

Calcium intake. To get enough calcium for optimal bone health, tablets containing calcium are often recommended to supplement the calcium in the diet. One study designed to evaluate the effectiveness of a supplement followed a group of young people for seven years. Each subject was assigned to take either a tablet containing 1000 milligrams of calcium per day (mg/d) or a placebo tablet that was identical except that it had no calcium.17 A major problem with studies like this one is compliance: subjects do not always take the treatments assigned to them.

In this study, the compliance rate declined to about 47% toward the end of the seven-year period. The standard deviation of compliance was 22%. Calcium from the diet averaged 850 mg/d with a standard deviation of 330 mg/d. The correlation between compliance and dietary intake was 0.68. Let’s find the mean and standard deviation for the total calcium intake. We let S stand for the intake from the supplement and D stand for the intake from the diet.

We start with the intake from the supplement. Because the compliance is 47% and the amount in each tablet is 1000 mg, the mean for S is

μS = 1000(0.47) = 470

Because the standard deviation of the compliance is 22%, the variance of S is

The standard deviation is

Be sure to verify which rules for means and variances are used in these calculations.

We can now find the mean and standard deviation for the total intake. The mean is

μS+D = μS + μD = 470 + 850 = 1320

the variance is

261

and the standard deviation is

The mean of the total calcium intake is 1320 mg/d and the standard deviation is 506 mg/d.

The correlation in this example illustrates an unfortunate fact about compliance and having an adequate diet. Some of the subjects in this study have diets that provide an adequate amount of calcium while others do not. The positive correlation between compliance and dietary intake tells us that those who have relatively high dietary intakes are more likely to take the assigned supplements. On the other hand, those subjects with relatively low dietary intakes, the ones who need the supplement the most, are less likely to take the assigned supplements.