10-1 Sound Waves: Stimulus for Audition


The nervous system produces movement within a perceptual world the brain constructs.

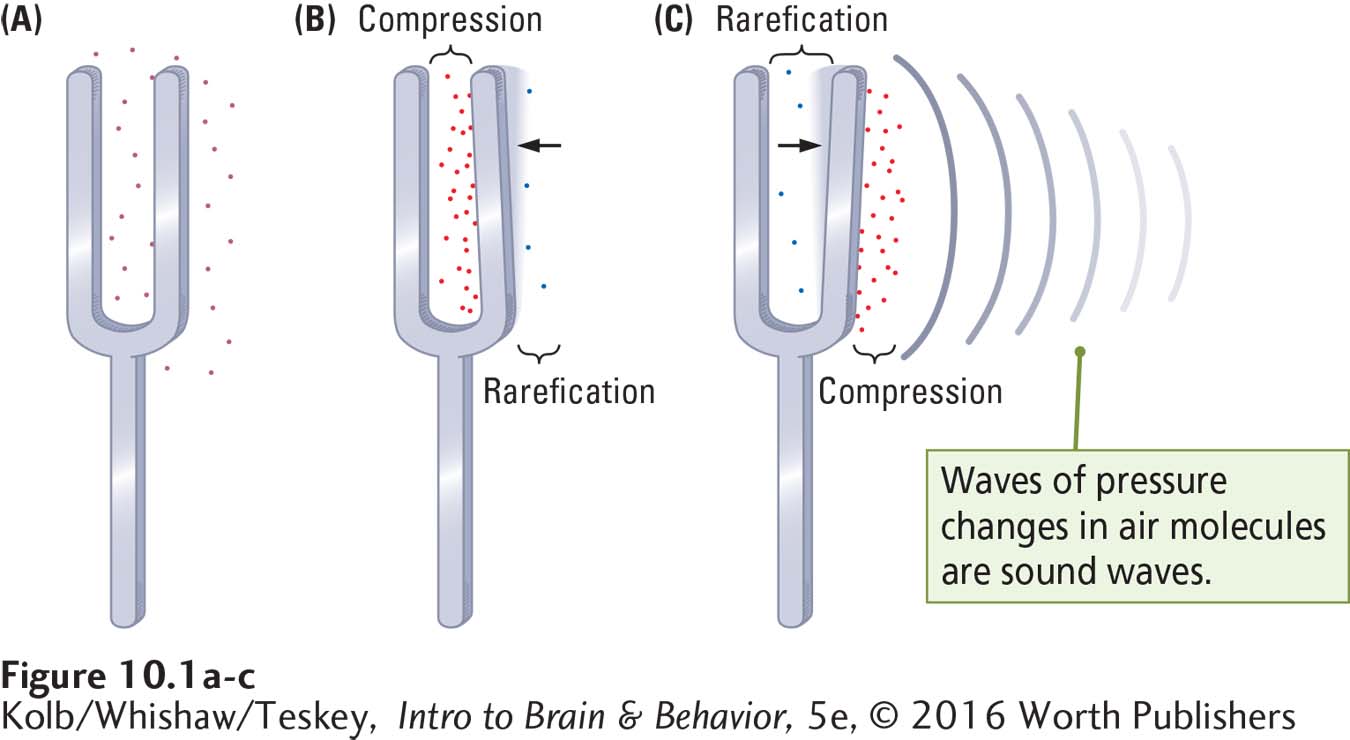

What we experience as sound is the brain’s construct, as is what we see. Without a brain, sound and sight do not exist. When you strike a tuning fork, the energy of its vibrating prongs displaces adjacent air molecules. Figure 10-1 shows how, as one prong moves to the left, air molecules to the left compress (grow more dense) and air molecules to the right become rarefied (grow less dense). The opposite happens when the prong moves to the right. The undulating energy generated by this displacement of molecules causes compression waves of changing air pressure to emanate from the fork. These sound waves move through compressible media, such as air and water.

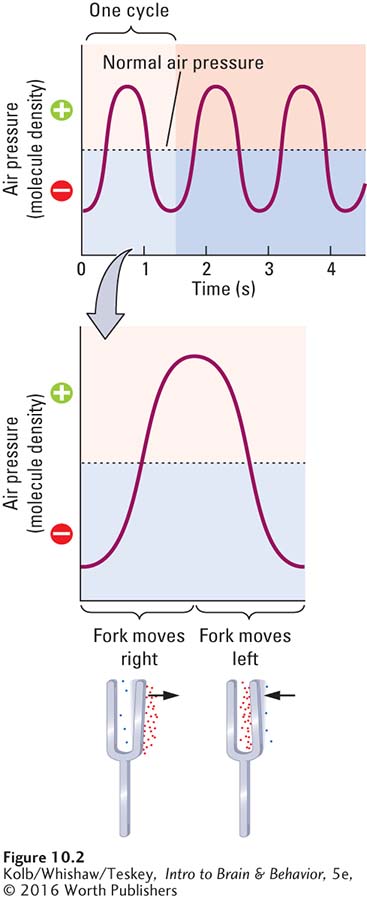

The top graph in Figure 10-2 represents waves of changing air pressure emanating from a tuning fork by plotting air molecule density against time at a single point. The bottom graph shows how the energy from the right-

Physical Properties of Sound Waves

Section 9-4 explains how we see shapes and colors.

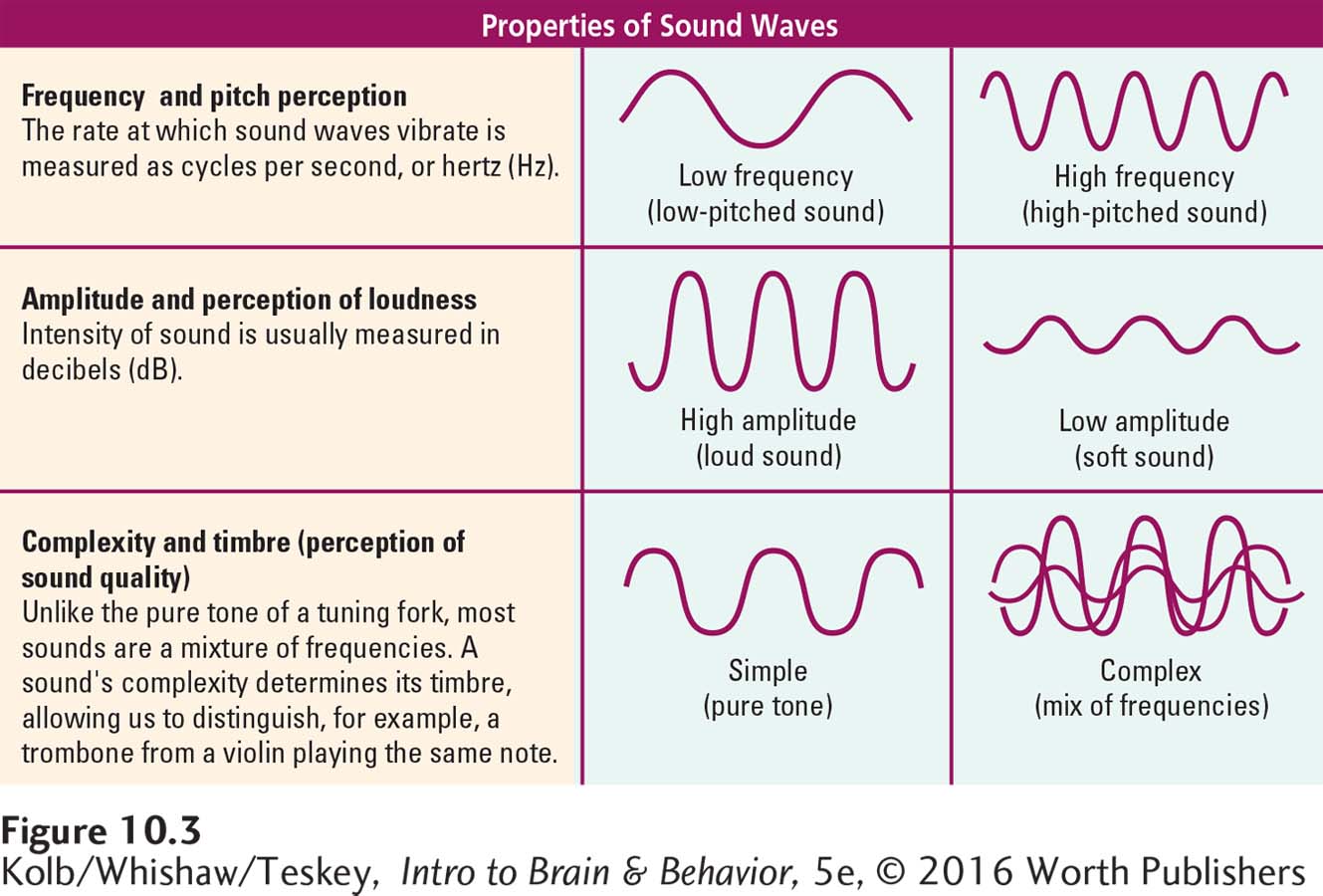

Light is electromagnetic energy we see; sound is mechanical energy we hear. Sound wave energy has three physical attributes: frequency, amplitude, and complexity.

Sound Wave Frequency

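Sound waves travel through air at about 1100 feet (343 meters) per second and more than four times faster through water. Within a given medium this speed is essentially fixed, but sound energy varies in wavelength. Frequency is the number of cycles a wave completes in a given amount of time. Because speed is constant, wavelength and frequency are inversely related: the more cycles a wave completes each second, the shorter each cycle must be. Sound wave frequencies are measured in cycles per second, called hertz (Hz), after the German physicist Heinrich Rudolf Hertz.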

One hertz is 1 cycle per second, 50 hertz is 50 cycles per second, 6000 hertz is 6000 cycles per second, and so on. Sounds we perceive as low pitched have lower frequencies (fewer cycles per second), whereas sounds we perceive as high pitched have higher frequencies (more cycles per second), as shown in the top panel of Figure 10-3.
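Because the speed of sound in a given medium is roughly constant, frequency and wavelength trade off: wavelength = speed ÷ frequency, and the duration of one cycle (the period) is 1 ÷ frequency. The short sketch below works through that arithmetic, assuming the text's figure of 343 meters per second for air; the function names are illustrative only.

```python
# Assumed speed of sound in air (about room temperature), as given in the text.
SPEED_IN_AIR_M_PER_S = 343.0


def wavelength_m(frequency_hz: float, speed_m_per_s: float = SPEED_IN_AIR_M_PER_S) -> float:
    """Length of one cycle in meters: speed divided by frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_m_per_s / frequency_hz


def period_s(frequency_hz: float) -> float:
    """Duration of one cycle in seconds: the reciprocal of frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1.0 / frequency_hz


if __name__ == "__main__":
    # Approximate limits of young adult human hearing, plus two mid-range tones.
    for f in (20, 264, 6000, 20_000):
        print(f"{f:>6} Hz: wavelength {wavelength_m(f):.3f} m, period {period_s(f) * 1000:.3f} ms")
```

By this arithmetic, a 20-hertz wave in air is about 17 meters long, while a 20,000-hertz wave is under 2 centimeters.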


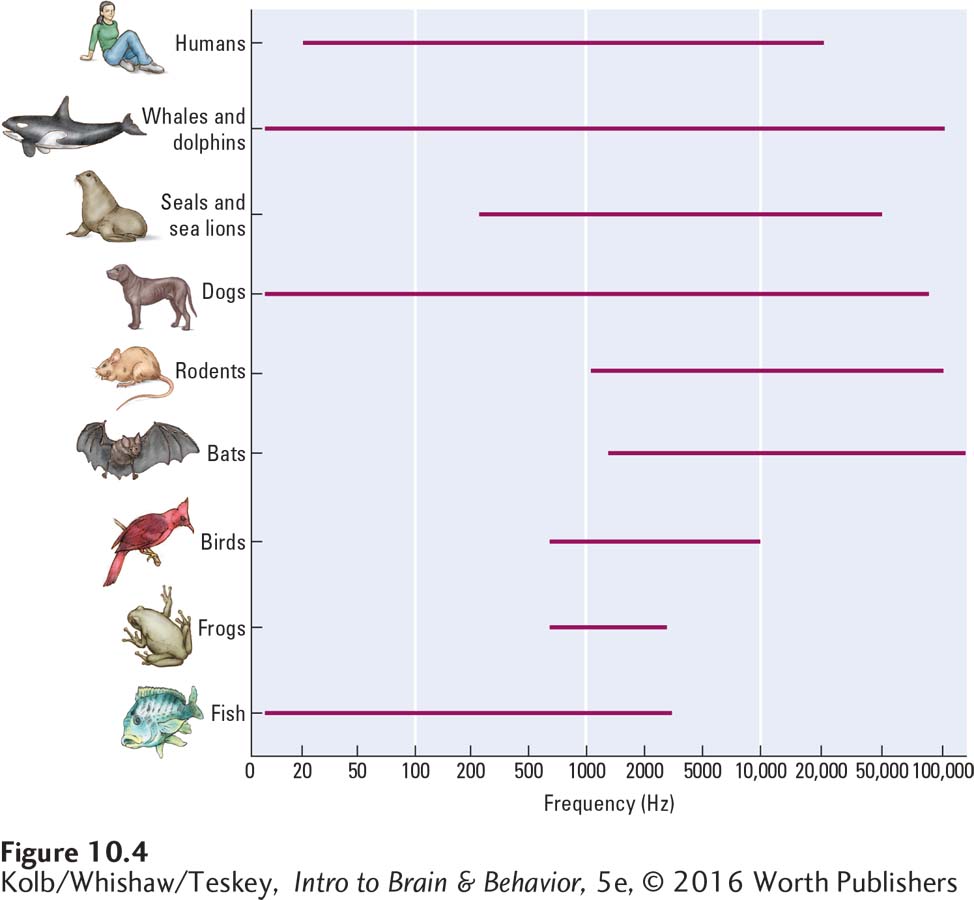

Just as we can perceive light only at visible wavelengths, we can perceive sound waves only in the limited range of frequencies plotted in Figure 10-4. Healthy young adult humans hear frequencies from about 20 to 20,000 hertz. Many animals communicate with sound; their auditory systems are designed to interpret their species-typical sounds.

The range of sound wave frequencies heard by different species varies extensively. Some (such as frogs and birds) have a rather narrow hearing range; others (such as dogs, whales, and humans) have a broad range. Some species use extremely high frequencies (bats are off the scale in Figure 10-4); others (fish, for example) use the low range.

The auditory systems of whales and dolphins respond to a remarkably wide range of sound wave frequencies. Characteristics at the extremes of this range allow marine mammals to use sound in different ways. Very low frequency sound waves travel long distances in water, and whales produce them to communicate over hundreds of miles. High-frequency sound waves, in contrast, produce echoes that dolphins use to locate objects, a form of sonar called echolocation.

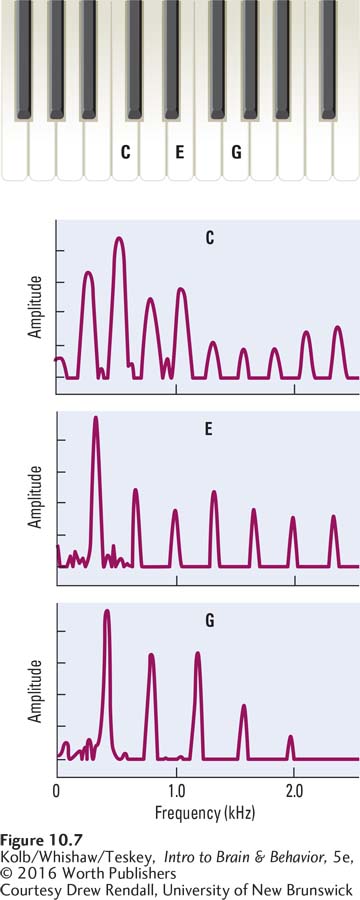

Differences in sound wave frequency are heard as differences in pitch. Each note in a musical scale has a different pitch because each has a different frequency. Middle C on the piano, for instance, has a frequency of 264 hertz.
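The link between notes and frequencies can be made concrete. On the equal-tempered scale used for most modern pianos, each semitone step multiplies frequency by the twelfth root of 2, so an octave doubles it. Assuming the common concert-pitch reference of A = 440 hertz and MIDI note numbering (middle C = 60, the A above it = 69), that rule puts middle C near 261.6 hertz. The 264-hertz figure corresponds to just intonation, in which A is a major sixth (frequency ratio 5:3) above C, giving 440 × 3/5 = 264. This sketch computes both; the reference pitch and numbering are assumptions.

```python
A4_HZ = 440.0  # assumed concert-pitch reference for the A above middle C
MIDI_A4 = 69   # MIDI note number of that A; middle C is MIDI note 60


def equal_tempered_hz(midi_note: int, a4_hz: float = A4_HZ) -> float:
    """Frequency on the equal-tempered scale: each semitone multiplies by 2**(1/12)."""
    return a4_hz * 2 ** ((midi_note - MIDI_A4) / 12)


def just_middle_c_hz(a4_hz: float = A4_HZ) -> float:
    """Middle C in just intonation: A sits a major sixth (ratio 5/3) above C."""
    return a4_hz * 3 / 5


if __name__ == "__main__":
    print(f"middle C, equal temperament: {equal_tempered_hz(60):.2f} Hz")
    print(f"middle C, just intonation:   {just_middle_c_hz():.2f} Hz")
    print(f"C one octave higher:          {equal_tempered_hz(72):.2f} Hz")
```

Either way, the octave relation holds: the C above middle C has twice its frequency.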

Section 8-4 suggests a critical period in brain development most sensitive to musical training. Figure 15-

Most people can discriminate between one musical note and another, but some can actually name any note they hear (A, B flat, C sharp, and so forth). This perfect (or absolute) pitch runs in families, suggesting a genetic influence. On the side of experience, most people who develop perfect pitch also receive musical training in matching pitch to note from an early age.

Sound Wave Amplitude

Sound waves vary not only in frequency, which causes differences in perceived pitch, but also in amplitude (strength), which causes differences in perceived intensity, or loudness. If you hit a tuning fork lightly, it produces a tone with a frequency of, say, 264 hertz (middle C). If you hit it harder, the frequency remains 264 hertz, but you also transfer more energy into the vibrating prong, increasing its amplitude.


The fork now moves farther left and right but at the same frequency. Increased air molecule compression intensifies the energy in a sound wave, which amps the sound, making it louder.
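The tuning-fork example can be simulated: model the tone as a sine wave, double its amplitude, and check that its frequency does not change. In this hypothetical sketch the sample rate and function names are assumptions, and frequency is estimated simply by counting upward zero crossings, one per cycle.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed; a common audio rate)


def pure_tone(freq_hz: float, amplitude: float, duration_s: float = 1.0,
              sample_rate: int = SAMPLE_RATE) -> list[float]:
    """Samples of a sine wave with the given frequency and amplitude."""
    n = int(duration_s * sample_rate)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def estimate_frequency(samples: list[float], sample_rate: int = SAMPLE_RATE) -> float:
    """Count upward zero crossings (one per cycle) and divide by the duration."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    return crossings * sample_rate / len(samples)


def peak_amplitude(samples: list[float]) -> float:
    """Largest displacement from zero."""
    return max(abs(s) for s in samples)


if __name__ == "__main__":
    light = pure_tone(264, amplitude=1.0)  # tap the fork lightly
    hard = pure_tone(264, amplitude=2.0)   # strike it harder
    for label, wave in (("light", light), ("hard", hard)):
        print(f"{label}: ~{estimate_frequency(wave):.0f} Hz, peak {peak_amplitude(wave):.2f}")
```

The crossing count can miss one cycle at the edge of the recording, so the estimate lands within about 1 hertz of 264; what matters is that it is identical for both strikes while the peak doubles.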

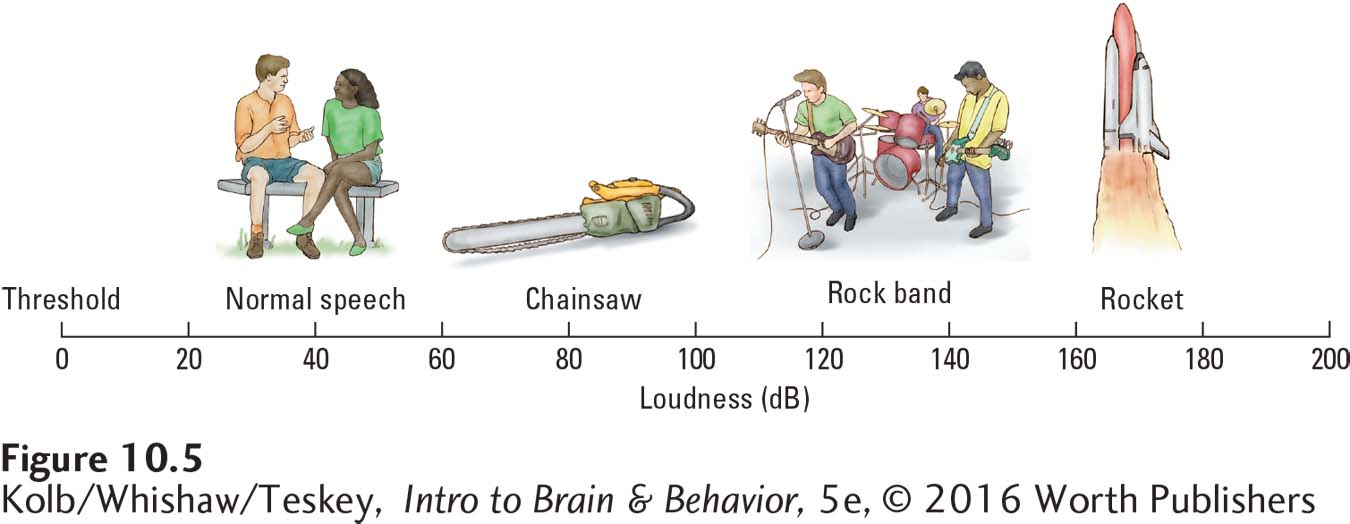

Sound wave amplitude is usually measured in decibels (dB), which express a sound’s strength relative to a standard: the threshold of human hearing, pegged at 0 decibels (Figure 10-5). The decibel scale is logarithmic, so each 20-dB increase corresponds to a tenfold increase in sound pressure. Typical speech sounds, for example, measure about 40 decibels. We perceive sounds that register more than about 70 dB as loud and those of less than about 20 dB as soft, or quiet.
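Because the decibel scale is logarithmic, a little arithmetic shows how large the differences behind these numbers are. This sketch assumes the conventional reference for sound pressure level, 20 micropascals (roughly the threshold of hearing for a 1000-hertz tone), and the standard formula L = 20 log10(p / p_ref); sound intensity, which grows with pressure squared, uses a factor of 10 instead of 20. The function names are hypothetical.

```python
import math

REFERENCE_PRESSURE_PA = 20e-6  # 20 micropascals: the conventional 0-dB SPL reference


def spl_db(pressure_pa: float, reference_pa: float = REFERENCE_PRESSURE_PA) -> float:
    """Sound pressure level in decibels: 20 * log10(p / p_ref)."""
    if pressure_pa <= 0:
        raise ValueError("pressure must be positive")
    return 20 * math.log10(pressure_pa / reference_pa)


def pressure_ratio(db_difference: float) -> float:
    """How many times greater the sound pressure is for a given difference in dB."""
    return 10 ** (db_difference / 20)


def intensity_ratio(db_difference: float) -> float:
    """Intensity grows with pressure squared, so the exponent divides by 10, not 20."""
    return 10 ** (db_difference / 10)


if __name__ == "__main__":
    speech_db, concert_db = 40, 120  # figures quoted in this section
    gap = concert_db - speech_db
    print(f"{gap} dB louder = {pressure_ratio(gap):,.0f}x the sound pressure")
    print(f"{gap} dB louder = {intensity_ratio(gap):,.0f}x the sound intensity")
```

On these assumptions, a 120-decibel concert carries 10,000 times the sound pressure, and 100 million times the intensity, of 40-decibel speech.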

The human nervous system evolved to be sensitive to soft sounds and so is actually blown away by extremely loud ones. People regularly damage their hearing through exposure to very loud sounds (such as rifle fire at close range) or even by prolonged exposure to sounds that are only relatively loud (such as at a live concert). Prolonged exposure to sounds louder than 100 decibels is likely to damage our hearing.

Rock bands, among others, routinely play music that registers higher than 120 decibels and sometimes as high as 135 decibels. Drake-

Sound Wave Complexity


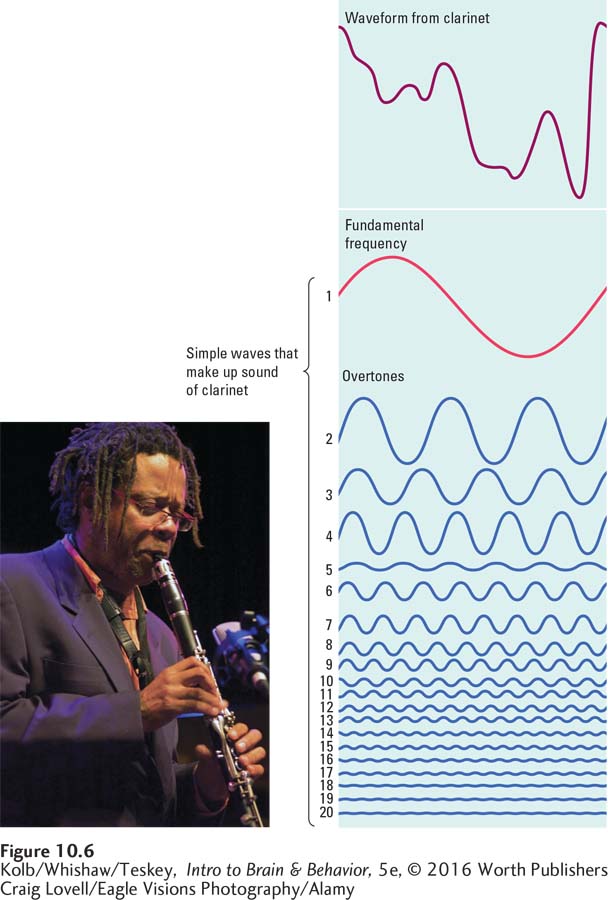

Sounds with a single frequency wave are pure tones, much like those that emanate from a tuning fork or pitch pipe, but most sounds mix wave frequencies together in combinations called complex tones (see Figure 10-3, bottom panel). To better understand the blended nature of a complex tone, picture a clarinetist, such as Don Byron in Figure 10-6, playing a steady note. The upper graph in Figure 10-6 represents the sound wave a clarinet produces.

The waveform pattern is more complex than the simple, regular waves visualized in Figures 10-2 and 10-3. Even when a musician plays a single note, the instrument produces a complex tone. Using a mathematical technique known as Fourier analysis, we can break this complex tone down into its many component pure tones, the numbered waves traced at the bottom of Figure 10-6.

The fundamental frequency (wave 1) is the rate at which the complex waveform pattern repeats. Waves 2 through 20 are overtones, a set of higher-frequency sound waves that vibrate at whole-number multiples of the fundamental frequency.
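Fourier analysis can be demonstrated on a synthetic complex tone. The sketch below builds a tone from a 200-hertz fundamental plus two overtones at whole-number multiples of it (the amplitudes are made up, not a measured clarinet spectrum), then recovers each component with a discrete Fourier transform. To stay self-contained it uses a naive O(n²) transform rather than a fast Fourier transform library; the sample rate, window length, and detection threshold are assumptions chosen so that each component falls exactly on a frequency bin.

```python
import cmath
import math

SAMPLE_RATE = 8_000  # samples per second (assumed)
N_SAMPLES = 800      # 0.1 s of sound, so each DFT bin spans 10 Hz


def complex_tone(fundamental_hz, amplitudes, n=N_SAMPLES, sample_rate=SAMPLE_RATE):
    """Sum of pure tones; amplitudes[k] belongs to (k + 1) * fundamental_hz."""
    return [
        sum(a * math.sin(2 * math.pi * (k + 1) * fundamental_hz * i / sample_rate)
            for k, a in enumerate(amplitudes))
        for i in range(n)
    ]


def dft_amplitudes(samples):
    """Naive discrete Fourier transform: amplitude of each bin from 0 up to n/2."""
    n = len(samples)
    result = []
    for k in range(n // 2 + 1):
        total = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(samples))
        scale = 1 if k in (0, n // 2) else 2  # DC and Nyquist bins are not doubled
        result.append(scale * abs(total) / n)
    return result


def components(samples, sample_rate=SAMPLE_RATE, threshold=0.05):
    """(frequency in Hz, amplitude) for every bin above the threshold."""
    n = len(samples)
    return [(k * sample_rate / n, round(a, 3))
            for k, a in enumerate(dft_amplitudes(samples)) if a > threshold]


if __name__ == "__main__":
    tone = complex_tone(200, [1.0, 0.5, 0.25])  # fundamental plus two overtones
    for freq, amp in components(tone):
        print(f"{freq:6.1f} Hz  amplitude {amp}")
```

The recovered frequencies are the fundamental and its first two overtones, the same decomposition Figure 10-6 shows for a real instrument with many more components.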

As primary colors blend into near-

Perception of Sound

Visualize what happens when you toss a pebble into a pond. Waves of water emanate from the point where the pebble enters the water. These waves produce no audible sound. But if your skin were able to convert the water wave energy (sensation) into neural activity that stimulated your auditory system, you would hear the waves when you placed your hand in the rippling water (perception). When you removed your hand, the sound would stop.

The pebble hitting the water is much like a tree falling to the ground, and the waves that emanate from the pebble’s entry point are like the air pressure waves that emanate from the place where the tree strikes the ground. The frequency of the waves determines the pitch of the sound heard by the brain, whereas the height (amplitude) of the waves determines the sound’s loudness.


1 picometer = one-trillionth of a meter

Our sensitivity to sound waves is extraordinary. At the threshold of human hearing, we can detect the displacement of air molecules of about 10 picometers. We are rarely in an environment where we can detect such a small air pressure change: there is usually too much background noise. A quiet, rural setting is probably as close as we ever get to an environment suitable for testing the acuteness of our hearing. The next time you visit the countryside, take note of the sounds you can hear. If there is no sound competition, you can often hear a single car engine miles away.

In addition to detecting minute changes in air pressure, the auditory system is also adept at simultaneously perceiving different sounds. As you read this chapter, you can differentiate all sorts of sounds around you—

You can perceive more than one sound simultaneously because each frequency of change in air pressure (each different sound wave) stimulates different neurons in your auditory system. Sound perception is only the beginning of your auditory experience. Your brain interprets sounds to obtain information about events in your environment, and it analyzes a sound’s meaning. Your use of sound to communicate with other people through both language and music clearly illustrates these processes.

Properties of Language and Music as Sounds


Language and music differ from other auditory sensations in fundamental ways. Both convey meaning and evoke emotion. Analyzing meaning in sound is a considerably more complex behavior than simply detecting a sound and identifying it. The brain has evolved systems that analyze sounds for meaning: speech in the left temporal lobe and music in the right.

Infants are receptive to speech and musical cues before these cues have any obvious utility, which suggests both the innate presence of these skills and the effects of prenatal experiences. Humans have an amazing capacity for learning and remembering linguistic and musical information. We are capable of learning a vocabulary of tens of thousands of words, often in several languages, and of recognizing thousands of songs.

Language facilitates communication. We can organize our complex perceptual worlds by categorizing information with words. We can tell others what we think, know, and imagine. Consider the efficiency that gestures and language added to the cooperative hunting and food gathering of early humans.

All these benefits of language seem obvious, but the benefits of music may seem less straightforward. In fact, music helps us to regulate our own emotions and to affect the emotions of others. After all, when do people most commonly make music? We sing and play music to communicate with infants and put children to sleep. We play music to enhance social interactions and gatherings and romance. We use music to bolster group identification—

Another characteristic that distinguishes speech and musical sounds from other auditory inputs is their delivery speed. Nonspeech and nonmusical noise produced at a rate of about five segments per second is perceived as a buzz. (A sound segment is a distinct unit of sound.) Normal speed for speech is on the order of 8 to 10 segments per second, and we are capable of understanding speech at nearly 30 segments per second. Speech perception at these higher rates is astounding, because the input speed far exceeds the auditory system’s ability to transmit all the speech segments as separate pieces of information.

Properties of Language

Experience listening to a particular language helps the brain to analyze rapid speech, which is one reason people speaking languages unfamiliar to you often seem to be talking incredibly fast. Your brain does not know where the foreign words begin and end, so they seem to run together in a rapid-fire stream.

Auditory constancy is reminiscent of the visual system’s capacity for object constancy; see Section 9-4.

A unique characteristic of our perception of speech sounds is our tendency to hear variations of a sound as if they were identical, even though the sound varies considerably from one context to another. For instance, the English letter d is pronounced differently in the words deep, deck, and duke, yet a listener perceives the pronunciations to be the same d sound.

The auditory system must therefore have a mechanism for categorizing sounds as being the same despite small differences in pronunciation. Experience must affect this mechanism, because different languages categorize speech sounds differently. A major obstacle to mastering a foreign language after age 10 is the difficulty of learning which sound categories are treated as equivalent.

Properties of Music

Like other sounds, musical sounds differ from one another in the subjective properties that people perceive. One subjective property is loudness, the magnitude of the sound as judged by a person. Loudness is related to the amplitude of a sound wave measured in decibels, but loudness is also subjective. What is very loud music for one person may be only moderately loud for another, whereas music that seems soft to one listener may not seem at all soft to someone else. Your perception of loudness also changes with context. After you’ve slowed down from driving fast on a highway, for example, your car’s music system seems louder: the reduction in road noise alters your perception of the music’s loudness.

Another subjective property of musical sounds is pitch, the position of each tone on a musical scale as judged by the listener. Although pitch is clearly related to sound wave frequency, there is more to it than that. Consider the note middle C as played on a piano. This note can be described as a pattern of sound frequencies, as is the clarinet note in Figure 10-6.

Like the note played on the piano, any musical note is defined by its fundamental frequency, the rate at which its complex waveform pattern repeats.

An important feature of the human brain’s analysis of music is that middle C is perceived as being the same note whether it is played on a piano or on a guitar, even though the sounds of these instruments differ widely. The right temporal lobe extracts pitch from sound, whether the sound is speech or music. In speech, pitch contributes to the perceived melodic tone of a voice, or prosody.
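The idea that a note's pitch follows its fundamental frequency, whatever the mix of overtones, can be illustrated with autocorrelation, a common signal-processing method for estimating the fundamental: find the smallest time shift at which the waveform lines up with itself. The sketch compares two synthetic tones with the same 200-hertz fundamental but different overtone strengths, standing in for two instruments. It is not a model of how the temporal lobe extracts pitch; the 0.9 peak ratio, search range, and function names are assumptions.

```python
import math

SAMPLE_RATE = 8_000  # samples per second (assumed)


def harmonic_tone(f0_hz, amplitudes, n=800, sample_rate=SAMPLE_RATE):
    """Sum of sine waves at whole-number multiples of f0_hz."""
    return [
        sum(a * math.sin(2 * math.pi * (k + 1) * f0_hz * i / sample_rate)
            for k, a in enumerate(amplitudes))
        for i in range(n)
    ]


def estimate_f0(samples, sample_rate=SAMPLE_RATE, fmin=50.0, fmax=1000.0, ratio=0.9):
    """Estimate the fundamental frequency from the first strong autocorrelation peak."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    # Average product of the signal with a copy of itself shifted by `lag` samples.
    scores = []
    for lag in range(min_lag, max_lag + 1):
        overlap = len(samples) - lag
        scores.append(sum(samples[i] * samples[i + lag] for i in range(overlap)) / overlap)
    best = max(scores)
    # The first local peak close to the best score marks one period; later peaks
    # at two or three periods would wrongly suggest a lower pitch.
    for j in range(1, len(scores) - 1):
        if scores[j] >= ratio * best and scores[j - 1] <= scores[j] >= scores[j + 1]:
            return sample_rate / (min_lag + j)
    return sample_rate / (min_lag + scores.index(best))


if __name__ == "__main__":
    mellow = harmonic_tone(200, [1.0, 0.5, 0.25])  # weak overtones
    bright = harmonic_tone(200, [1.0, 0.9, 0.8])   # strong overtones
    print(f"mellow: {estimate_f0(mellow):.1f} Hz, bright: {estimate_f0(bright):.1f} Hz")
```

Both tones yield the same fundamental even though their waveforms, and so their timbres, differ.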

A final property of musical sound is quality, or timbre, the perceived characteristics that distinguish a particular sound from all others of similar pitch and loudness. We can easily distinguish the timbre of a violin from that of a trombone, even if both instruments are playing the same note at the same loudness. The quality of their sounds differs.

10-1 REVIEW

Sound Waves: Stimulus for Audition

Before you continue, check your understanding.

Question 1

The physical stimulus for audition, produced by changes in ____________, is a form of mechanical energy converted in the ear to neural activity.


Question 2

Sound waves have three physical attributes: ____________, ____________, and ____________.

Question 3

Four properties of musical sounds are ____________, ____________, ____________, and ____________.

Question 4

Sound is processed in the ____________ lobes.

Question 5

What distinguishes speech and musical sounds from other auditory inputs?

Answers appear in the Self Test section of the book.