10-3 Neural Activity and Hearing

We now turn to the neuronal activity in the auditory system that produces our perception of sound. Neurons at different levels in this system serve different functions. To get an idea of what individual hair cells and cortical neurons do, we consider how the auditory system codes sound wave energy so that we perceive pitch, loudness, location, and pattern.

Hearing Pitch

Tonotopic literally means "of a tone place."

Recall that perception of pitch corresponds to the frequency (repetition rate) of sound waves, measured in hertz (cycles per second). Hair cells in the cochlea code frequency as a function of their location on the basilar membrane. In this tonotopic representation, hair cell cilia at the base of the cochlea are maximally displaced by high-frequency sound waves, and those at the apex by low-frequency waves.

A hair cell’s frequency range parallels a photoreceptor’s response to light wavelengths. See Figure 9-6.

Recordings from single fibers in the cochlear nerve reveal that although each axon transmits information about only a small part of the auditory spectrum, each cell does respond to a range of sound wave frequencies, some far more strongly than others.

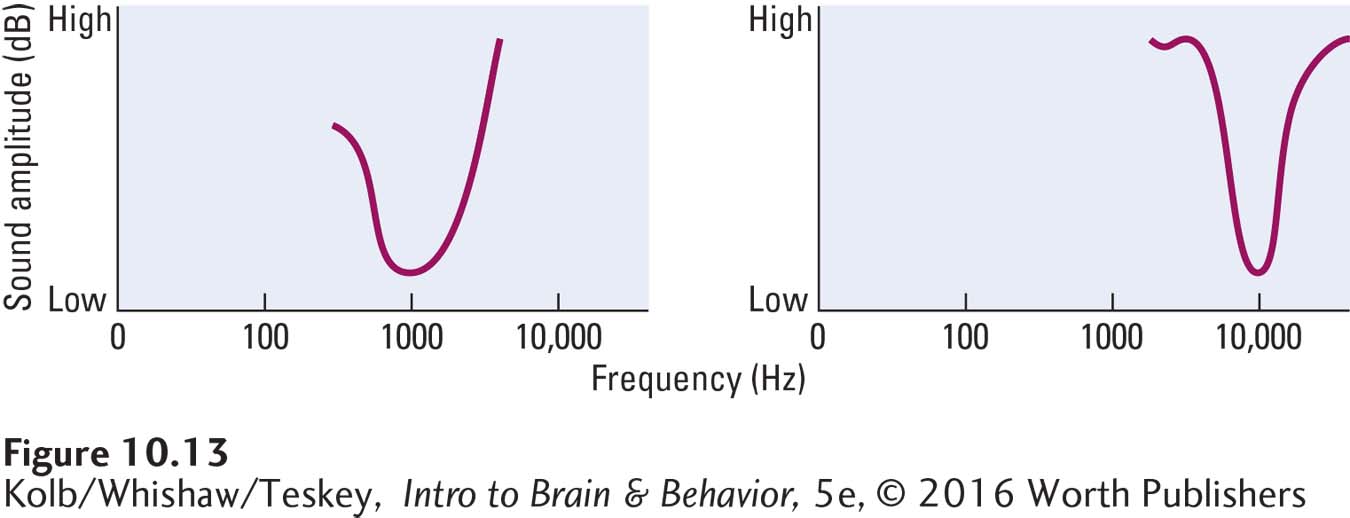

We can plot this range of hair cell responses to different frequencies at different amplitudes as a tuning curve. As graphed in Figure 10-13, each hair cell receptor is maximally sensitive to a particular frequency but still responds somewhat to nearby frequencies.
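To make the idea of a tuning curve concrete, here is a minimal sketch of one. It assumes a hypothetical fiber whose threshold (the sound level, in dB, needed to make it respond) is lowest at its characteristic frequency and rises by a fixed, made-up number of decibels for every octave the tone lies away from it. The function names and parameter values are illustrative assumptions, not measured data, and real tuning curves are asymmetric rather than a symmetric "V."

```python
import math


def threshold_db(freq_hz, cf_hz, min_threshold_db=10.0, slope_db_per_octave=40.0):
    # Hypothetical symmetric "V" on a log-frequency axis: the sound level needed
    # to drive the fiber rises by slope_db_per_octave for every octave the tone
    # lies away from the fiber's characteristic frequency (cf_hz).
    octaves_away = abs(math.log2(freq_hz / cf_hz))
    return min_threshold_db + slope_db_per_octave * octaves_away


def responds(freq_hz, level_db, cf_hz):
    # The fiber fires only if the tone is at least as loud as its threshold
    # at that frequency.
    return level_db >= threshold_db(freq_hz, cf_hz)


if __name__ == "__main__":
    cf = 1000.0  # hypothetical fiber tuned to 1 kHz
    for f in (250, 500, 1000, 2000, 4000):
        print(f"{f:>5} Hz needs {threshold_db(f, cf):5.1f} dB")
    # A quiet 1-kHz tone drives the fiber; a 2-kHz tone must be much louder.
    print(responds(1000, 20, cf), responds(2000, 20, cf), responds(2000, 60, cf))
```

The printed thresholds trace out the curve: the fiber is most sensitive at its characteristic frequency, yet a loud enough tone an octave away still drives it, which is why each axon responds to a range of frequencies.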

Bipolar cell axons in the cochlea project to the cochlear nucleus in an orderly manner (see Figure 10-11). Axons entering from the base of the cochlea connect with one location; those entering from the middle connect to another location; and those entering from the apex connect to yet another. Thus the basilar membrane’s tonotopic representation is reproduced in the hindbrain cochlear nucleus.

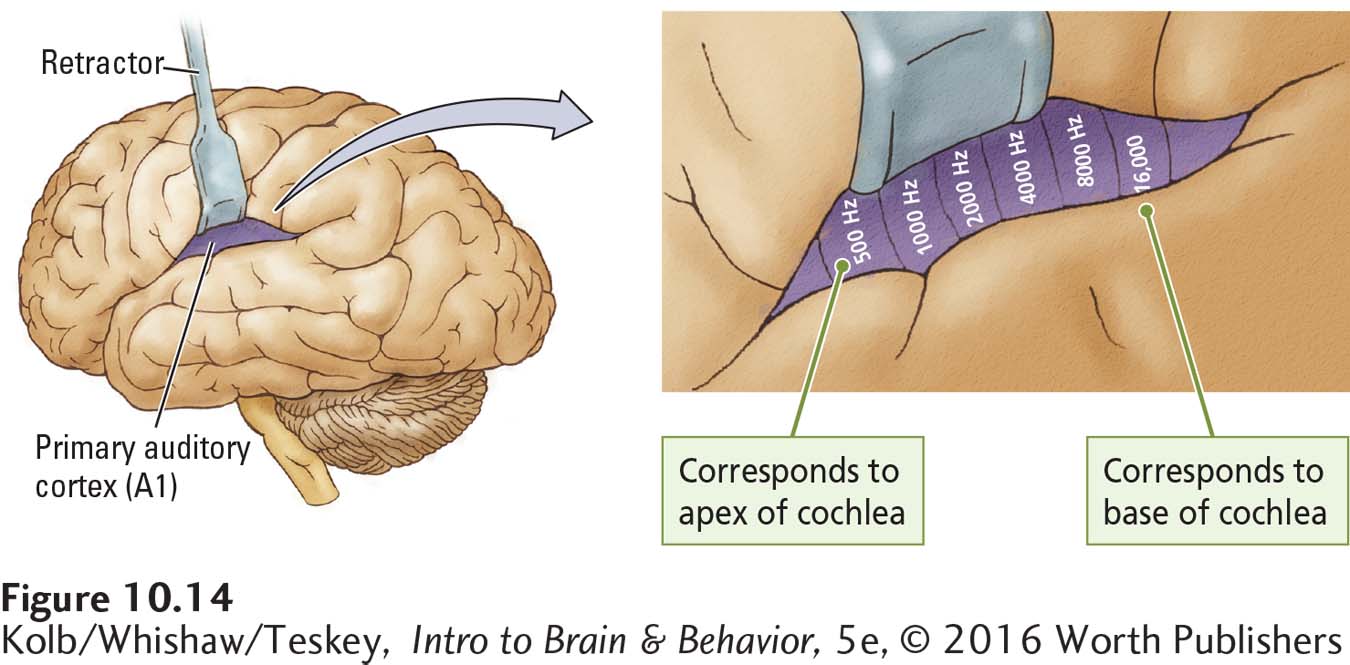

This systematic representation is maintained throughout the auditory pathways and into the primary auditory cortex. Figure 10-14 shows the distribution of projections from the base and apex of the cochlea across area A1. Similar tonotopic maps can be constructed for each level of the auditory system.

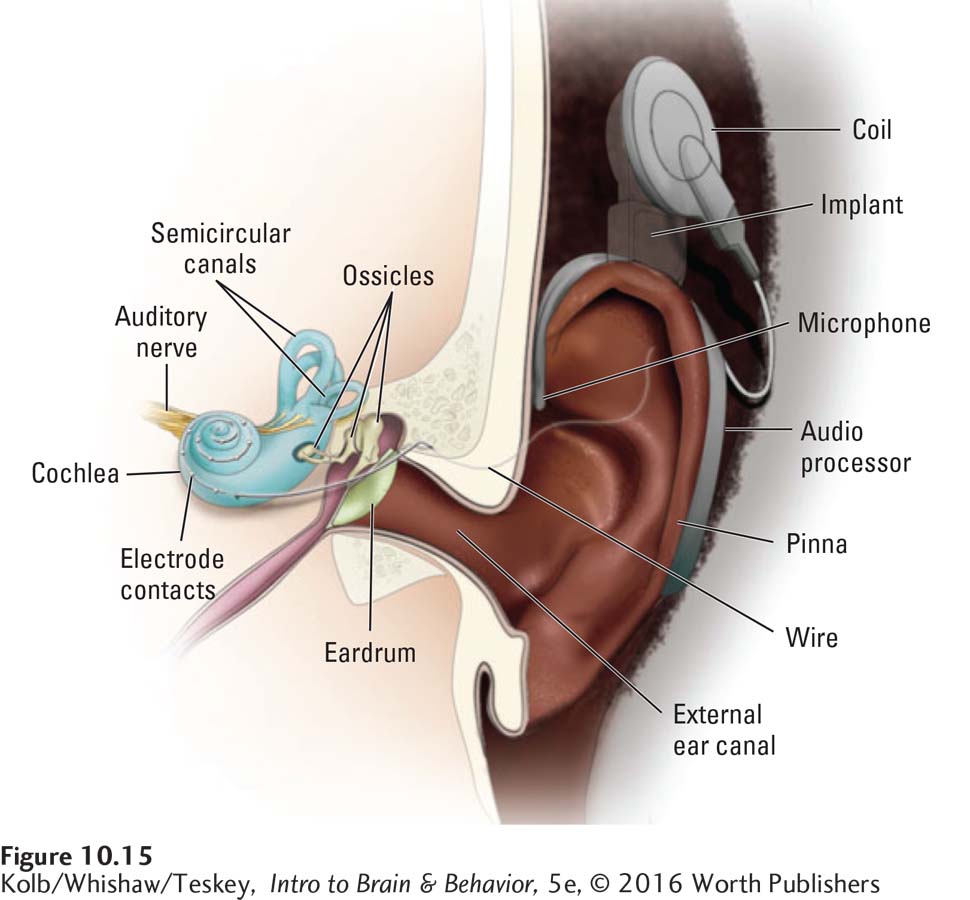

This systematic auditory organization has enabled the development of cochlear implants—electronic devices surgically inserted in the inner ear that serve as prostheses to allow deaf people to hear (see Loeb, 1990). Cochlear implants are no cure for deafness but rather are a hearing substitute. In Figure 10-15, a miniature microphonelike processor secured to the skull detects the component frequencies of incoming sound waves and sends them to the appropriate place on the basilar membrane through tiny wires. The nervous system does not distinguish between stimulation coming from this artificial device and stimulation coming through the middle ear.

As long as appropriate signals go to the correct locations on the basilar membrane, the brain will hear. Cochlear implants work well, allowing deaf people to detect even the fluctuating pitches of speech. Their success corroborates the tonotopic representation of pitch in the basilar membrane.
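The routing step described for the implant, sending each component frequency to its matching place on the basilar membrane, can be sketched as follows. This is a simplified illustration, not how any particular device works: it assumes the component frequencies have already been extracted, and the electrode count, the 200 to 8000 Hz range, and the even spacing on a logarithmic frequency scale are all hypothetical choices.

```python
import math


def electrode_for_frequency(freq_hz, n_electrodes=8, low_hz=200.0, high_hz=8000.0):
    # Hypothetical processor: electrodes are spread evenly along a
    # log-frequency scale. Index 0 sits nearest the apex (lowest frequencies),
    # index n_electrodes - 1 nearest the base (highest frequencies).
    if not low_hz <= freq_hz <= high_hz:
        return None  # outside this sketch's frequency range
    position = math.log(freq_hz / low_hz) / math.log(high_hz / low_hz)  # 0..1
    return min(int(position * n_electrodes), n_electrodes - 1)


def route_components(components, n_electrodes=8):
    # components: (frequency_hz, amplitude) pairs that the processor has
    # already extracted from the incoming sound. Each amplitude is added to
    # the electrode whose place matches that frequency.
    levels = [0.0] * n_electrodes
    for freq_hz, amplitude in components:
        idx = electrode_for_frequency(freq_hz, n_electrodes)
        if idx is not None:
            levels[idx] += amplitude
    return levels


if __name__ == "__main__":
    # A low tone plus a softer high tone stimulate opposite ends of the array.
    print(route_components([(250.0, 1.0), (4000.0, 0.5)]))
```

The key design point mirrors the text: the device does not need to encode pitch in any new way; it only has to deliver each frequency band to the place that the tonotopic map already associates with it.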

Even so, the quality of sound cochlear implants create is impoverished relative to natural hearing. Adults who lose their hearing and then get cochlear implants describe the sounds as “computerized” and “weird.” Many people with implants find music unpleasant and difficult to listen to. Graeme Clark (2015) developed a prototype high-

One minor difficulty with frequency detection is that the human cochlea does not respond in a tonotopic manner to frequencies below about 200 Hz, yet we can hear frequencies as low as 20 Hz. At the apex of the cochlea, all the hair cells respond to movement of the basilar membrane, but their output varies in proportion to the frequency of the incoming wave (see Figure 10-9B). Higher rates of bipolar cell firing signal a higher frequency, whereas lower rates of firing signal a lower frequency.
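This rate code for very low frequencies can be illustrated with a toy model. The sketch assumes a hypothetical apical fiber that fires exactly once per cycle of the incoming wave, at the wave's peak; real fibers do not fire on every cycle, so this is a deliberate simplification. Counting spikes over one second then reads the frequency straight back out.

```python
def spike_times(freq_hz, duration_s=1.0):
    # Toy apical fiber that fires once per cycle, at the peak of each
    # sine-wave cycle (a quarter period into the cycle).
    period_s = 1.0 / freq_hz
    times = []
    n = 0
    while (n + 0.25) * period_s < duration_s:
        times.append((n + 0.25) * period_s)
        n += 1
    return times


def decode_frequency(times, duration_s=1.0):
    # Read the frequency back out as spikes per second.
    return len(times) / duration_s


if __name__ == "__main__":
    for f in (20, 50, 100, 150):
        print(f, "Hz ->", decode_frequency(spike_times(f)), "spikes/s")
```

In this model, a 20-Hz wave yields a low firing rate and a 150-Hz wave a much higher one, so firing rate alone distinguishes pitches that the basilar membrane's place code cannot separate.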

Why the cochlea uses a different system to differentiate pitch within this range of very low frequency sound waves is not clear. It probably has to do with the physical limitations of the basilar membrane. Discriminating among low-

Detecting Loudness

The simplest way for cochlear (bipolar) cells to indicate sound wave intensity is to fire at a higher rate when amplitude is greater, which is exactly what happens. More intense air pressure changes produce more intense basilar membrane vibrations and therefore greater shearing of the cilia. Increased shearing leads to more neurotransmitter being released onto bipolar cells. As a result, the bipolar axons fire more frequently, telling the auditory system that the sound is getting louder.
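The relation between loudness and firing rate can be sketched as a rate-level function. This is a minimal illustration assuming a hypothetical logistic curve: the fiber has a low spontaneous rate in silence, fires faster as sound level rises, and levels off at a maximum rate, since a neuron cannot fire arbitrarily fast. The spontaneous rate, maximum rate, midpoint, and width are made-up values.

```python
import math


def firing_rate(level_db, spontaneous=5.0, max_rate=250.0, midpoint_db=40.0, width_db=8.0):
    # Hypothetical rate-level function (spikes per second): a logistic curve
    # that rises from the spontaneous rate toward max_rate as sound level
    # increases, reaching the halfway point at midpoint_db.
    return spontaneous + (max_rate - spontaneous) / (
        1.0 + math.exp(-(level_db - midpoint_db) / width_db)
    )


if __name__ == "__main__":
    for level in (0, 20, 40, 60, 80):
        print(f"{level:>3} dB -> {firing_rate(level):6.1f} spikes/s")
```

Within the curve's steep middle range, each increase in sound level produces a clearly higher firing rate, which is the signal the auditory system reads as "louder."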

Detecting Location

Psychologist Albert Bregman devised a visual analogy to describe what the auditory system is doing when it detects sound location:

Imagine a game played at the side of a lake. Two small channels are dug, side by side, leading away from the lake, and the lake water is allowed to fill them up. Partway up each channel, a cork floats, moving up and down with the waves. You stand with your back to the lake and are allowed to look only at the two floating corks. Then you are asked questions about what is happening on the lake. Are there two motorboats on the lake or only one? Is the nearer one going from left to right or right to left? Is the wind blowing? Did something heavy fall into the water? You must answer these questions just by looking at the two corks. This would seem to be an impossible task. Yet consider an exactly analogous problem. As you sit in a room, a lake of air surrounds you. Running off this lake, into your head, are two small channels – your ear canals. At the end of each is a membrane (the ear drum) that acts like the floating corks in the channels running off the lake, moving in and out with the sound waves that hit it. Just as the game at the lakeside offered no information about the happenings on the lake except for the movements of the corks, the sound-

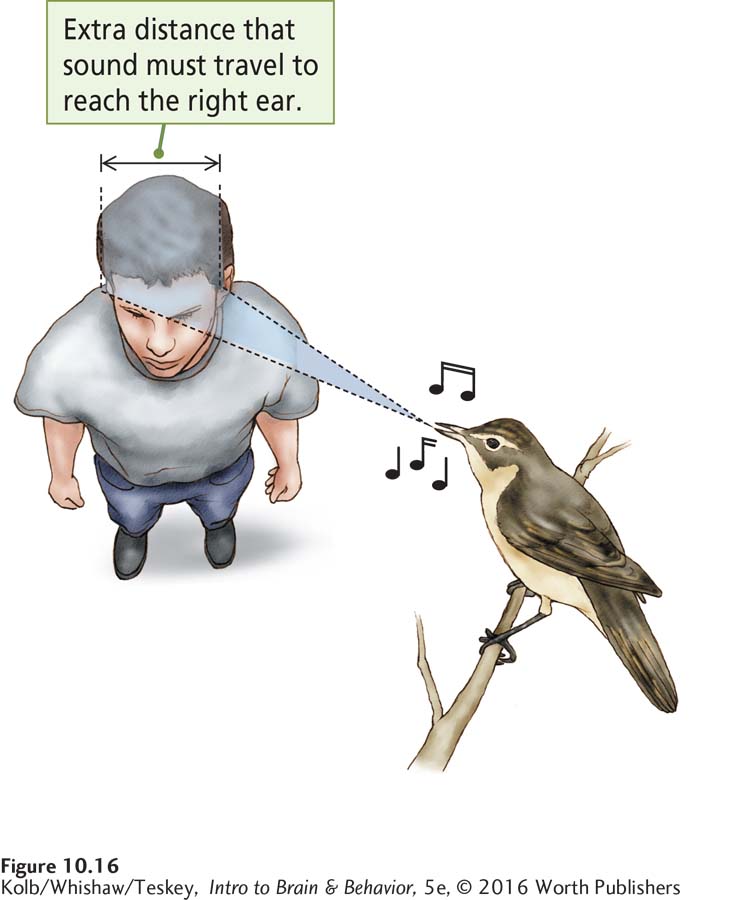

We estimate the location of a sound both by taking cues derived from one ear and by comparing cues received at both ears. Because each cochlear nerve synapses on both sides of the brain, the brain can compare the input from the two ears to locate a sound source. In one mechanism, neurons in the brainstem compute the difference in a sound wave’s arrival time at each ear, the interaural time difference (ITD).

This computation of left-

Figure 10-16 shows how sound waves originating on the left reach the left ear slightly before they reach the right ear. As the sound source moves from the side of the head toward the middle, a person has greater and greater difficulty locating it: the ITD becomes smaller and smaller until there is no difference at all. When we detect no difference, we infer that the sound is either directly in front of us or directly behind us. To locate it, we turn our head, making the sound waves strike one ear sooner. We have a similar problem distinguishing between sounds directly above and below us. Again, we solve the problem by tilting our head, thus causing the sound waves to strike one ear before the other.
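The geometry behind the interaural time difference (ITD) can be worked through numerically. The sketch below uses a simple straight-line model, ITD = d sin(θ) / c, where d is the distance between the ears, θ is the source's direction measured from straight ahead, and c is the speed of sound. The 0.20-m ear separation is a hypothetical round number, and the model ignores the extra path sound takes around the head, so the values are approximate.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 degrees C
EAR_SEPARATION_M = 0.20     # hypothetical round-number head width


def itd_seconds(azimuth_deg, ear_separation_m=EAR_SEPARATION_M):
    # Interaural time difference for a distant source.
    # azimuth_deg: 0 = straight ahead, +90 = directly right, -90 = directly
    # left, 180 = directly behind. A positive result means the sound reaches
    # the right ear first; a negative result, the left ear first.
    path_difference_m = ear_separation_m * math.sin(math.radians(azimuth_deg))
    return path_difference_m / SPEED_OF_SOUND_M_S


if __name__ == "__main__":
    for az in (-90, -45, -10, 0, 10, 45, 90):
        print(f"{az:>4} deg: {itd_seconds(az) * 1e6:7.1f} microseconds")
    # Front/back ambiguity: a source 30 deg right of straight ahead and one
    # 30 deg right of straight behind (azimuth 150) give the same ITD.
    print(math.isclose(itd_seconds(30), itd_seconds(150)))
```

The output shows the pattern described for Figure 10-16: a source on the left gives a negative ITD (left ear first), the ITD shrinks toward zero as the source moves toward the midline, and sources in front and behind can produce identical ITDs, which is why turning the head helps.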

Another mechanism used by the auditory system to detect the source of a sound is the sound’s relative loudness on the left and the right—

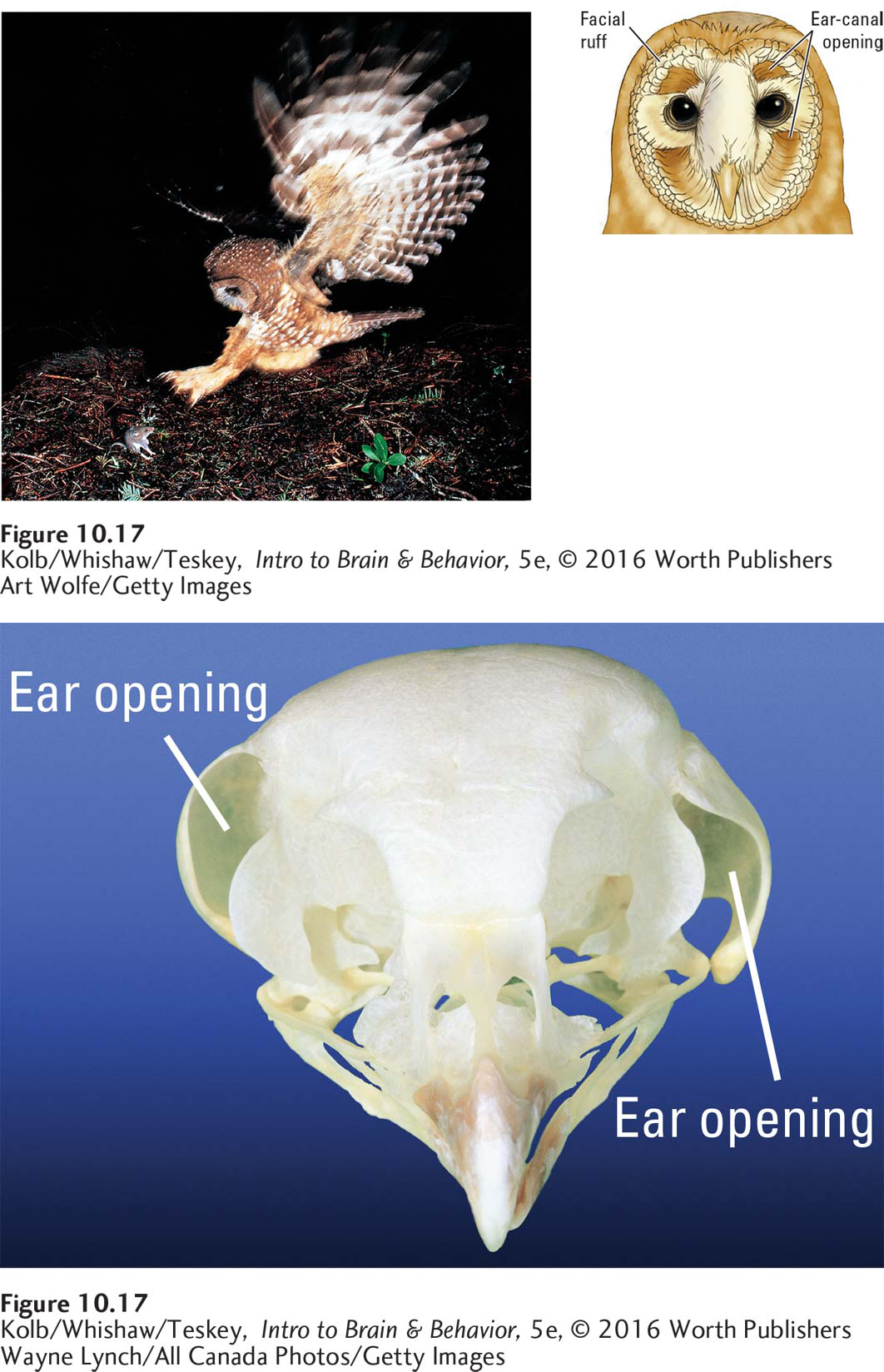

Head tilting and turning take time, which is important for animals, such as owls, that hunt using sound. Owls need to know the location of a sound simultaneously in at least two directions—

Wayne Lynch/All Canada Photos/Getty Images

Detecting Patterns in Sound

Music and language are perhaps the primary sound wave patterns that humans recognize, so perceiving sound wave patterns as meaningful units is fundamental to our auditory analysis. Because music perception and language perception are lateralized in the right and left temporal lobes, respectively, we can guess that neurons in the right and left temporal cortex take part in recognizing and analyzing these two kinds of auditory pattern. Studying the activity of auditory neurons in humans is not easy, however.

Audition for action parallels unconscious visually guided movements by the dorsal stream; see Figure 9-42.

Most of what neuroscientists know comes from studies of how individual neurons respond in nonhuman primates. Both human and nonhuman primates have a ventral and dorsal cortical pathway for audition. Neurons in the ventral pathway decode spectrally complex sounds—

10-3 REVIEW

Neural Activity and Hearing

Before you continue, check your understanding.

Question 1

Bipolar neurons in the cochlea form ____________ maps that code sound wave frequencies.

Question 2

Loudness is decoded by the firing rate of cells in the ____________.

Question 3

Detecting the location of a sound is a function of neurons in the ____________ and ____________ of the brainstem.

Question 4

The function of the dorsal auditory pathway can be described as ____________.

Question 5

Explain how the brain detects a sound’s location.

Answers appear in the Self Test section of the book.