How to sample badly

For many years in Rapides Parish, Louisiana, only one company had been allowed to provide ambulance service. In 1999, the local paper, the Town Talk, asked readers to call in to offer their opinion on whether the company should keep its monopoly. Call-in polls are generally automated: call one telephone number to vote Yes and call another number to vote No. Telephone companies often charge callers to these numbers.


The Town Talk got 3763 calls, which suggests unusual interest in ambulance service. Investigation showed that 638 calls (about 17% of the total) came from the ambulance company office or from the homes of its executives. Many more, no doubt, came from lower-level employees. “We’ve got employees who are concerned about this situation, their job stability, and their families and maybe called more than they should have,” said a company vice president. Other sources said employees were told to, as they say in Chicago, “vote early and often.”

As the Town Talk learned, it is easier to sample badly than to sample well. The paper relied on voluntary response, allowing people to call in rather than actively selecting its own sample. The result was biased—the sample was overweighted with people favoring the ambulance monopoly. Voluntary response samples attract people who feel strongly about the issue in question. These people, like the employees of the ambulance company, may not fairly represent the opinions of the entire population.
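A small simulation can make the effect of voluntary response concrete. The sketch below is illustrative only: the population size, the true share favoring the monopoly (30%), and the calling probabilities (5% for supporters, 1% for opponents) are invented assumptions, not figures from the Town Talk poll, and the function name `simulate_call_in_poll` is hypothetical. It also ignores repeat calling, which would only make the bias worse.

```python
import random


def simulate_call_in_poll(population_size=100_000, true_yes_share=0.30,
                          p_call_yes=0.05, p_call_no=0.01, seed=0):
    """Simulate a call-in poll in which supporters are more motivated to call.

    All default values are assumptions chosen for illustration.
    Returns the number of Yes calls and the number of No calls.
    """
    rng = random.Random(seed)
    yes_calls = 0
    no_calls = 0
    for _ in range(population_size):
        # Each person's true opinion is Yes with probability true_yes_share.
        favors = rng.random() < true_yes_share
        # Whether the person bothers to call depends on that opinion.
        p_call = p_call_yes if favors else p_call_no
        if rng.random() < p_call:
            if favors:
                yes_calls += 1
            else:
                no_calls += 1
    return yes_calls, no_calls


yes_calls, no_calls = simulate_call_in_poll()
poll_yes_share = yes_calls / (yes_calls + no_calls)
print(f"Calls received: {yes_calls + no_calls}")
print(f"Share voting Yes in the poll: {poll_yes_share:.2f}")
print("Share favoring Yes in the population: 0.30")
```

Under these assumptions the expected counts are 1500 Yes calls and 700 No calls, so the poll would report roughly 68% support even though only 30% of the population favors the monopoly. The people who call choose themselves, and the poll has no way to correct for that.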

There are other ways to sample badly. Suppose that we sell your company several crates of oranges each week. You examine a sample of oranges from each crate to determine the quality of our oranges. It is easy to inspect a few oranges from the top of each crate, but these oranges may not be representative of the entire crate. Those on the bottom are more often damaged in shipment. If we were less than honest, we might make sure that the rotten oranges are packed on the bottom, with some good ones on top for you to inspect. If you sample from the top, your sample results are again biased—the sample oranges are systematically better than the population they are supposed to represent.
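The orange crate can be simulated in the same spirit. This is a minimal sketch under invented assumptions: each crate has 5 layers of 20 oranges, and the chance that an orange is damaged rises steadily from 2% in the top layer to 30% in the bottom layer. The function name `build_crate` and all of the numbers are hypothetical; the point is only to compare what the top layer shows with what the whole crate contains.

```python
import random


def build_crate(rng, layers=5, per_layer=20, p_top=0.02, p_bottom=0.30):
    """Return a crate as a list of layers (index 0 is the top layer).

    Each orange is True if damaged. The damage probability rises linearly
    from p_top in the top layer to p_bottom in the bottom layer.
    All default values are assumptions chosen for illustration.
    """
    crate = []
    for layer in range(layers):
        depth = layer / (layers - 1)  # 0.0 at the top, 1.0 at the bottom
        p_damaged = p_top + depth * (p_bottom - p_top)
        crate.append([rng.random() < p_damaged for _ in range(per_layer)])
    return crate


rng = random.Random(1)
crates = [build_crate(rng) for _ in range(200)]

# Convenience sample: inspect only the top layer of every crate.
top_sample = [orange for crate in crates for orange in crate[0]]
# The population the sample is supposed to represent: every orange.
all_oranges = [orange for crate in crates for layer in crate for orange in layer]

top_damage_rate = sum(top_sample) / len(top_sample)
true_damage_rate = sum(all_oranges) / len(all_oranges)
print(f"Damage rate seen in top-layer sample: {top_damage_rate:.3f}")
print(f"Damage rate across all oranges:       {true_damage_rate:.3f}")
```

With these settings the top layer has an expected damage rate of 2%, while the expected rate across the whole crate is 16%. Inspecting more crates does not help: the top-layer sample stays too optimistic no matter how many oranges are examined, because the error comes from how the oranges are chosen.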

Biased sampling methods

The design of a statistical study is biased if it systematically favors certain outcomes.

Selection of whichever individuals are easiest to reach is called convenience sampling.

A voluntary response sample chooses itself by responding to a general appeal. Write-in or call-in opinion polls are examples of voluntary response samples.

Convenience samples and voluntary response samples are often biased.


EXAMPLE 1 Interviewing at the mall

Squeezing the oranges on the top of the crate is one example of convenience sampling. Mall interviews are another. Manufacturers and advertising agencies often use interviews at shopping malls to gather information about the habits of consumers and the effectiveness of ads. A sample of mall shoppers is fast and cheap. But people contacted at shopping malls are not representative of the entire U.S. population. They are richer, for example, and more likely to be teenagers or retired. Moreover, the interviewers tend to select neat, safe-looking individuals from the stream of customers. Mall samples are biased: they systematically overrepresent some parts of the population (prosperous people, teenagers, and retired people) and underrepresent others. The opinions of such a convenience sample may be very different from those of the population as a whole.

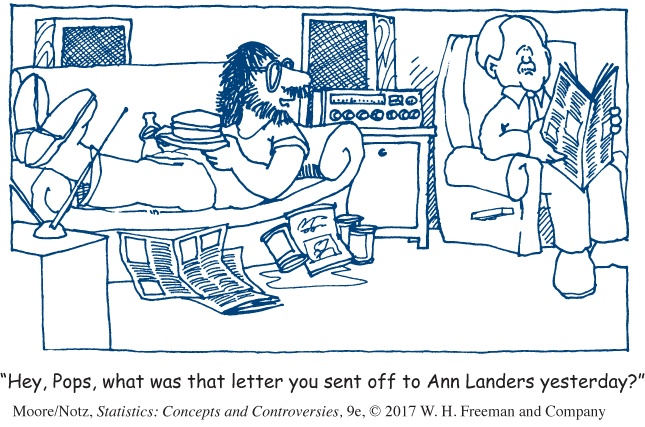

EXAMPLE 2 Write-in opinion polls

Ann Landers once asked the readers of her advice column, “If you had it to do over again, would you have children?” She received nearly 10,000 responses, almost 70% saying, “NO!” Can it be true that 70% of parents regret having children? Not at all. This is a voluntary response sample. People who feel strongly about an issue, particularly people with strong negative feelings, are more likely to take the trouble to respond. Ann Landers’s results are strongly biased—the percentage of parents who would not have children again is much higher in her sample than in the population of all parents.

On August 24, 2011, Abigail Van Buren (the niece of Ann Landers) revisited this question in her column “Dear Abby.” A reader asked, “I’m wondering when the information was collected and what the results of that inquiry were, and if you asked the same question today, what the majority of your readers would answer.”

Ms. Van Buren responded, “The results were considered shocking at the time because the majority of responders said they would NOT have children if they had it to do over again. I’m printing your question because it will be interesting to see if feelings have changed over the intervening years.”

In October 2011, Ms. Van Buren wrote that this time the majority of respondents would have children again. That is encouraging, but this was, again, a write-in poll.


Write-in and call-in opinion polls are almost sure to lead to strong bias. In fact, only about 15% of the public have ever responded to a call-in poll, and these tend to be the same people who call radio talk shows. That’s not a representative sample of the population as a whole.