2.4 The Ethics of Science: First, Do No Harm

Somewhere along the way, someone probably told you that it isn’t nice to treat people like objects. And yet, it may seem that psychologists do just that by creating situations that cause people to feel fearful or sad, to do things that are embarrassing or immoral, and to learn things about themselves and others that they might not really want to know. Don’t be fooled by appearances. The fact is that psychologists go to great lengths to protect the well-

Respecting People

During World War II, Nazi doctors performed truly barbaric experiments on human subjects, such as removing their organs or submerging them in ice water just to see how long it would take them to die. When the war ended, the international community developed the Nuremberg Code of 1947 and then the Declaration of Helsinki in 1964, which spelled out rules for the ethical treatment of human subjects. Unfortunately, not everyone obeyed them. For example, from 1932 until 1972, the U.S. Public Health Service conducted the infamous Tuskegee experiment in which 399 African American men with syphilis were denied treatment so that researchers could observe the progression of the disease. As one journalist noted, the government “used human beings as laboratory animals in a long and inefficient study of how long it takes syphilis to kill someone” (Coontz, 2008).

What are three features of ethical research?

In 1974, the U.S. Congress created the National Commission for the Protection of Human Subjects of Biomedical and Behavioral Research. In 1979, the U.S. Department of Health, Education and Welfare released what came to be known as the Belmont Report, which described three basic principles that all research involving human subjects should follow. First, research should show respect for persons and their right to make decisions for and about themselves without undue influence or coercion. Second, research should be beneficent, which means that it should attempt to maximize benefits and reduce risks to the participant. Third, research should be just, which means that it should distribute benefits and risks equally to participants without prejudice toward particular individuals or groups.

The specific ethical code that psychologists follow incorporates these basic principles and expands them. (You can find the American Psychological Association’s Ethical Principles of Psychologists and Code of Conduct (2002) at http:/

Informed consent: Participants may not take part in a psychological study unless they have given informed consent, which is a written agreement to participate in a study made by an adult who has been informed of all the risks that participation may entail. This doesn’t mean that the person must know everything about the study (e.g., the hypothesis), but it does mean that the person must know about anything that might potentially be harmful or painful. If people cannot give informed consent (e.g., because they are minors or are mentally incapable), then informed consent must be obtained from their legal guardians. And even after people give informed consent, they always have the right to withdraw from the study at any time without penalty.

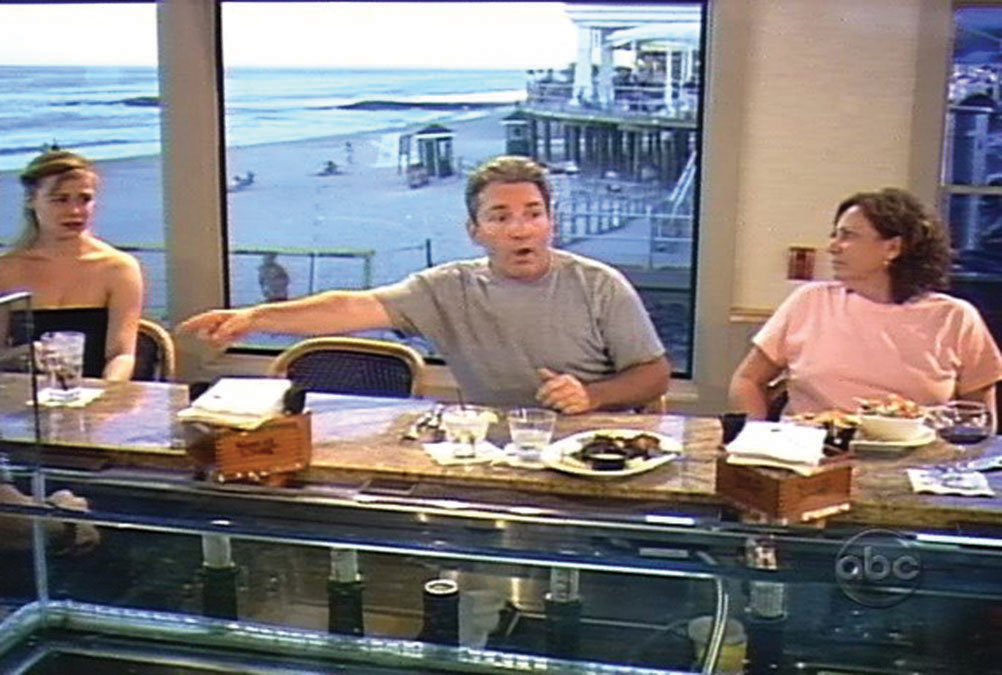

The man at this bar is upset. He just saw another man slip a drug into a woman’s drink, and he is alerting the bartender. What he doesn’t know is that all the people at the bar are actors and that he is being filmed for the television show What Would You Do? Was it ethical for ABC to put this man in such a stressful situation without his consent? And how did men who didn’t alert the bartender feel when they turned on their televisions months later and were confronted by their own shameful behavior? © AMERICAN BROADCASTING COMPANIES, INC.

Freedom from coercion: Psychologists may not coerce participation. Coercion means not only physical and psychological coercion but monetary coercion as well. It is unethical to offer people large amounts of money to persuade them to do something that they might otherwise decline to do. College students may be invited to participate in studies as part of their training in psychology, but they are ordinarily offered the option of learning the same things by other means.

Protection from harm: Psychologists must take every possible precaution to protect their research participants from physical or psychological harm. If there are two equally effective ways to study something, the psychologist must use the safer method. If no safe method is available, the psychologist may not perform the study.

Risk-benefit analysis: Although participants may be asked to accept small risks, such as a minor shock or a small embarrassment, they may not even be asked to accept large risks, such as severe pain, psychological trauma, or any risk that is greater than the risks they would ordinarily take in their everyday lives. Furthermore, even when participants are asked to take small risks, the psychologist must first demonstrate that these risks are outweighed by the social benefits of the new knowledge that might be gained from the study.

Deception: Psychologists may use deception only when it is justified by the study’s scientific, educational, or applied value and when alternative procedures are not feasible. They may never deceive participants about any aspect of a study that could cause them physical or psychological harm or pain.

Debriefing: If a participant is deceived in any way before or during a study, the psychologist must provide a debriefing, which is a verbal description of the true nature and purpose of a study. If the participant was changed in any way (e.g., made to feel sad), the psychologist must attempt to undo that change (e.g., ask the person to do a task that will make him or her happy) and restore the participant to the state he or she was in before the study.

Confidentiality: Psychologists are obligated to keep private and personal information obtained during a study confidential.

These are just some of the rules that psychologists must follow. But how are those rules enforced? Almost all psychology studies are done by psychologists who work at colleges and universities. These institutions have institutional review boards (IRBs) that are composed of instructors and researchers, university staff, and laypeople from the community (e.g., business leaders or members of the clergy). If the research is federally funded (as much research is), then the law requires that the IRB include at least one nonscientist and one person who is not affiliated with the institution. A psychologist may conduct a study only after the IRB has reviewed and approved it.

As you can imagine, the code of ethics and the procedure for approval are so strict that many studies simply cannot be performed anywhere, by anyone, at any time. For example, psychologists would love to know how growing up without exposure to language affects a person’s subsequent ability to speak and think, but they cannot ethically manipulate that variable in an experiment. They can only study the natural correlations between language exposure and speaking ability, and so may never be able to firmly establish the causal relationships between these variables. Indeed, there are many questions that psychologists will never be able to answer definitively because doing so would require unethical experiments that violate basic human rights.

Respecting Animals

Not all research participants have human rights because not all research participants are human. Some are chimpanzees, rats, pigeons, or other nonhuman animals. The American Psychological Association’s code specifically describes the special rights of these nonhuman participants, and some of the more important ones are these:

All procedures involving animals must be supervised by psychologists who are trained in research methods and experienced in the care of laboratory animals and who are responsible for ensuring appropriate consideration of the animal’s comfort, health, and humane treatment.

Psychologists must make reasonable efforts to minimize the discomfort, infection, illness, and pain of animals.

Psychologists may use a procedure that subjects an animal to pain, stress, or privation only when an alternative procedure is unavailable and when the procedure is justified by the scientific, educational, or applied value of the study.

Psychologists must perform all surgical procedures under appropriate anesthesia and must minimize an animal’s pain during and after surgery.

What steps must psychologists take to protect nonhuman subjects?

That’s good—

We have seen our cars and homes firebombed or flooded, and we have received letters packed with poisoned razors and death threats via e-

Where do most people stand on this issue? The vast majority of Americans consider it morally acceptable to use nonhuman animals in research and say they would reject a governmental ban on such research (Kiefer, 2004; Moore, 2003). Indeed, most Americans eat meat, wear leather, and support the rights of hunters, which is to say that most Americans see a sharp distinction between animal and human rights. Science is not in the business of resolving moral controversies, and every individual must draw his or her own conclusions about this issue. But whatever position you take, it is important to note that only a small percentage of psychological studies involve animals, and only a small percentage of those studies cause animals pain or harm. Psychologists mainly study people, and when they do study animals, they mainly study their behavior.

Respecting Truth

Institutional review boards ensure that data are collected ethically. But once the data are collected, who ensures that they are ethically analyzed and reported? No one does. Psychology, like all sciences, works on the honor system. No authority is charged with monitoring what psychologists do with the data they’ve collected, and no authority is charged with checking to see if the claims they make are true. You may find that a bit odd. After all, we don’t use the honor system in stores (“Take the television set home and pay us next time you’re in the neighborhood”), banks (“I don’t need to look up your account, just tell me how much money you want to withdraw”), or courtrooms (“If you say you’re innocent, well then, that’s good enough for me”), so why would we expect it to work in science? Are scientists more honest than everyone else?

Definitely! Okay, we just made that up. The honor system doesn’t depend on scientists being especially honest; it depends on the fact that science is a community enterprise. When scientists claim to have discovered something important, other scientists don’t just applaud; they start studying it too. When physicist Jan Hendrik Schön announced in 2001 that he had produced a molecular-

What are psychologists expected to do when they report the results of their research?

What exactly are psychologists on their honor to do? At least three things. First, when they write reports of their studies and publish them in scientific journals, psychologists are obligated to report truthfully on what they did and what they found. They can’t fabricate results (e.g., claiming to have performed studies that they never really performed) or fudge results (e.g., changing records of data that were actually collected), and they can’t mislead by omission (e.g., by reporting only the results that confirm their hypothesis and saying nothing about the results that don’t). Second, psychologists are obligated to share credit fairly by including as co-

Institutional review boards ensure that the rights of human beings who participate in scientific research are protected, in keeping with the principles of respect for persons, beneficence, and justice.

Psychologists are obligated to uphold these principles by getting informed consent from participants, not coercing participation, protecting participants from harm, weighing benefits against risks, avoiding deception, and keeping information confidential.

Psychologists are obligated to respect the rights of animals and treat them humanely. Most people are in favor of using animals in scientific research.

Psychologists are obligated to tell the truth about their studies, to share credit appropriately, and to grant others access to their data.

OTHER VOICES: Can We Afford Science?

Who pays for all the research described in textbooks like this one? The answer is you. By and large, scientific research is funded by governmental agencies, such as the National Science Foundation, which give scientists grants (also known as money) to do particular research projects that the scientists proposed. Of course, this money could be spent on other things, for example, feeding the poor, housing the homeless, caring for the ill and elderly, and so on. Does it make sense to spend taxpayer dollars on psychological science when some of our fellow citizens are cold and hungry?

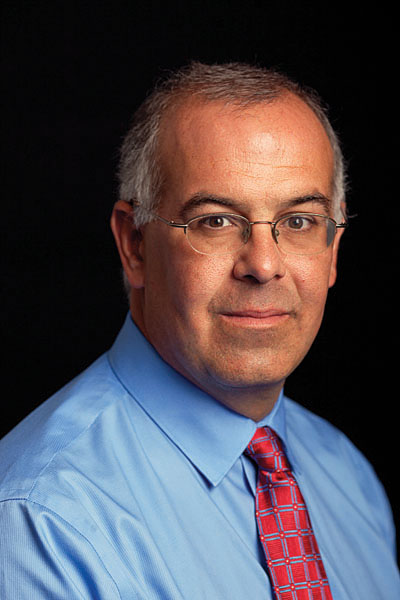

Journalist and author David Brooks (2011) argued that research in the behavioral sciences is not an expenditure—

Over the past 50 years, we’ve seen a number of gigantic policies produce disappointing results—

Fortunately, today we are in the middle of a golden age of behavioral research. Thousands of researchers are studying the way actual behavior differs from the way we assume people behave. They are coming up with more accurate theories of who we are, and scores of real-

When you renew your driver’s license, you have a chance to enroll in an organ donation program. In countries like Germany and the U.S., you have to check a box if you want to opt in. Roughly 14 percent of people do. But behavioral scientists have discovered that how you set the defaults is really important. So in other countries, like Poland or France, you have to check a box if you want to opt out. In these countries, more than 90 percent of people participate.

This is a gigantic behavior difference cued by one tiny and costless change in procedure.

Yet in the middle of this golden age of behavioral research, there is a bill working through Congress that would eliminate the National Science Foundation’s Directorate for Social, Behavioral and Economic Sciences. This is exactly how budgets should not be balanced—

Let’s say you want to reduce poverty. We have two traditional understandings of poverty. The first presumes people are rational. They are pursuing their goals effectively and don’t need much help in changing their behavior. The second presumes that the poor are afflicted by cultural or psychological dysfunctions that sometimes lead them to behave in shortsighted ways. Neither of these theories has produced much in the way of effective policies.

Eldar Shafir of Princeton and Sendhil Mullainathan of Harvard have recently, with federal help, been exploring a third theory, that scarcity produces its own cognitive traits.

A quick question: What is the starting taxi fare in your city? If you are like most upper-

These questions impose enormous cognitive demands. The brain has limited capacities. If you increase demands on one sort of question, it performs less well on other sorts of questions.

Shafir and Mullainathan gave batteries of tests to Indian sugar farmers. After they sell their harvest, they live in relative prosperity. During this season, the farmers do well on the I.Q. and other tests. But before the harvest, they live amid scarcity and have to think hard about a thousand daily decisions. During these seasons, these same farmers do much worse on the tests. They appear to have lower I.Q.’s. They have more trouble controlling their attention. They are more shortsighted. Scarcity creates its own psychology.

Princeton students don’t usually face extreme financial scarcity, but they do face time scarcity. In one game, they had to answer questions in a series of timed rounds, but they could borrow time from future rounds. When they were scrambling amid time scarcity, they were quick to borrow time, and they were nearly oblivious to the usurious interest rates the game organizers were charging. These brilliant Princeton kids were rushing to the equivalent of payday lenders, to their own long-

Shafir and Mullainathan have a book coming out next year, exploring how scarcity—

People are complicated. We each have multiple selves, which emerge or don’t depending on context. If we’re going to address problems, we need to understand the contexts and how these tendencies emerge or don’t emerge. We need to design policies around that knowledge. Cutting off financing for this sort of research now is like cutting off navigation financing just as Christopher Columbus hit the shoreline of the New World.

What do you think? Is Brooks right? Is psychological science a wise use of public funds, or is it a luxury that we simply can’t afford?

From the New York Times, July 7, 2011 © 2011 The New York Times. All rights reserved. Used by permission and protected by the Copyright Laws of the United States. The printing, copying, redistribution, or retransmission of this Content without express written permission is prohibited. http:/
