4.1 Sensation and Perception Are Distinct Activities

To us, sensation and perception appear to be one seamless event. However, they are two distinct activities.

Sensation is simple stimulation of a sense organ. It is the basic registration of light, sound, pressure, odor, or taste as parts of your body interact with the physical world.

After a sensation registers in your central nervous system, perception takes place at the level of your brain: the organization, identification, and interpretation of a sensation in order to form a mental representation.

As an example, your eyes are coursing across these sentences right now. The sensory receptors in your eyeballs are registering different patterns of light reflecting off the page. Your brain, however, is integrating and processing that light information into the meaningful perception of words, such as meaningful, perception, and words. Your eyes—

What role does the brain play in what we see and hear?

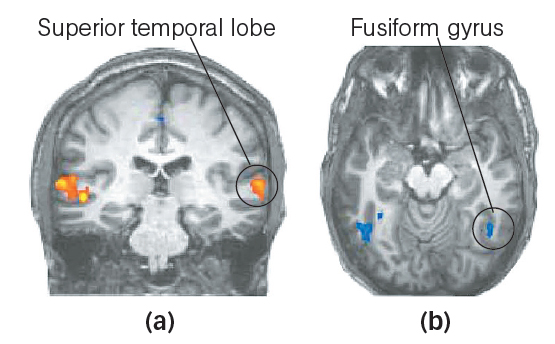

If all of this sounds a little peculiar, it’s because, from the vantage point of your conscious experience, it seems as if you’re reading words directly. If you think of the discussion of brain damage in the Neuroscience and Behavior chapter, you’ll recall that sometimes a person’s eyes can work just fine, yet the individual is still “blind” to faces she has seen for many years. Damage to the visual processing centers in the brain can interfere with the interpretation of information coming from the eyes: The senses are intact, but perceptual ability is compromised. Sensation and perception are related—

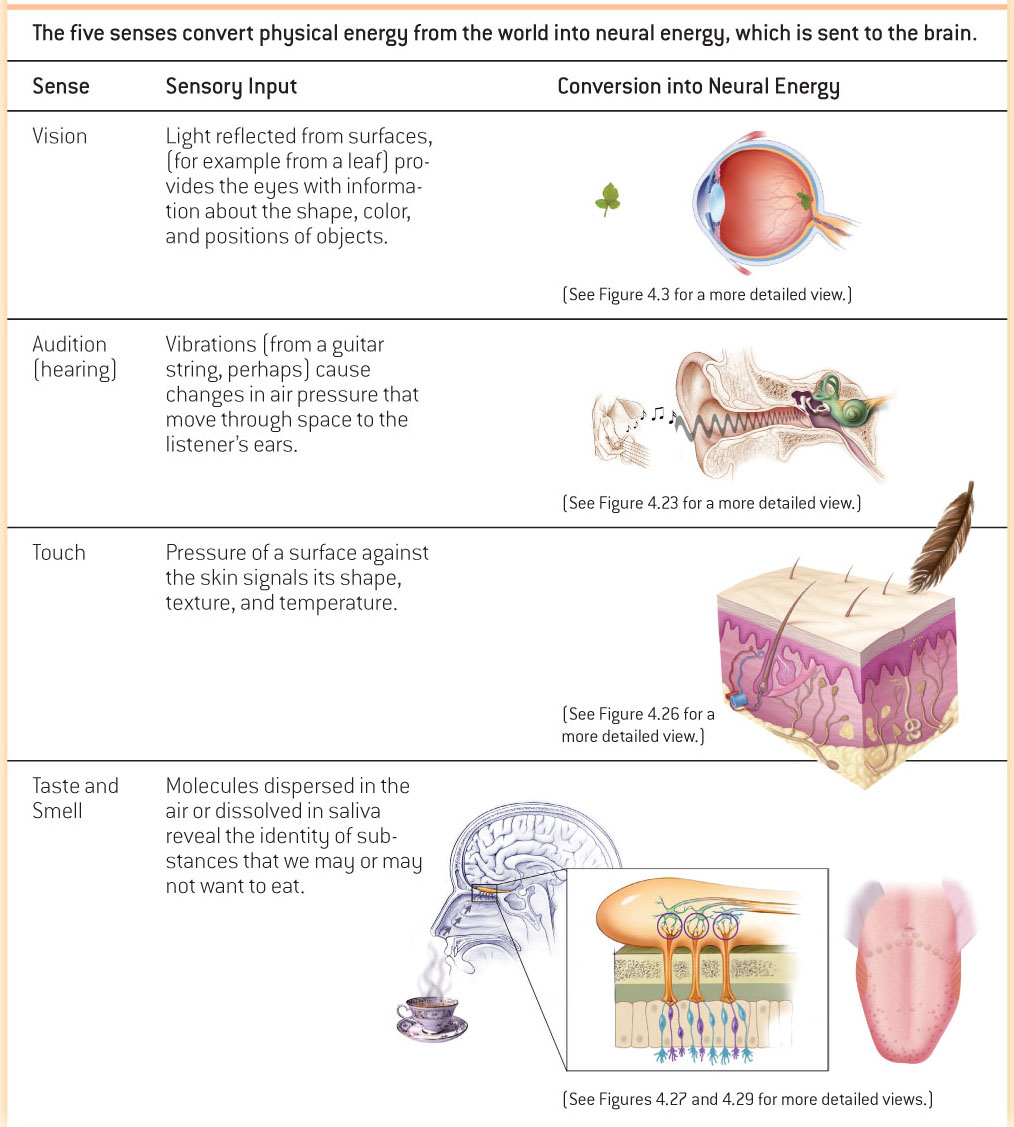

How do sensory receptors communicate with the brain? It all depends on the process of transduction, which occurs when many sensors in the body convert physical signals from the environment into encoded neural signals sent to the central nervous system. In vision, light reflected from surfaces provides the eyes with information about the shape, color, and position of objects. In audition, vibrations (from vocal cords or a guitar string, perhaps) cause changes in air pressure that propagate through space to a listener’s ears. In touch, the pressure of a surface against the skin signals its shape, texture, and temperature. In taste and smell, molecules dispersed in the air or dissolved in saliva reveal the identity of substances that we may or may not want to eat. In each case physical energy from the world is converted to neural energy inside the central nervous system (see TABLE 4.1). We’ll discuss in more detail how transduction works with each of the five primary senses—

Psychophysics

Knowing that perception takes place in the brain, you might wonder if two people see the same colors in the sunset when looking at the evening sky. It’s intriguing to consider the possibility that our basic perceptions of sights or sounds might differ fundamentally from those of other people. How can we measure such a thing objectively? Measuring the physical energy of a stimulus, such as the wavelength of a light, is easy enough: You can probably buy the necessary instruments online to do that yourself. But how do you quantify a person’s private, subjective perception of that light?

Why isn’t it enough for a psychophysicist to measure only the strength of a stimulus?

The structuralists, led by Wilhelm Wundt and Edward Titchener, tried using introspection to measure perceptual experiences (see the Psychology: Evolution of a Science chapter). They failed miserably at this task. After all, two people may both describe their experience of the sunset in the same words (“orange” and “beautiful”), but neither can directly perceive the other’s experience of the same event. In the mid-

Measuring Thresholds

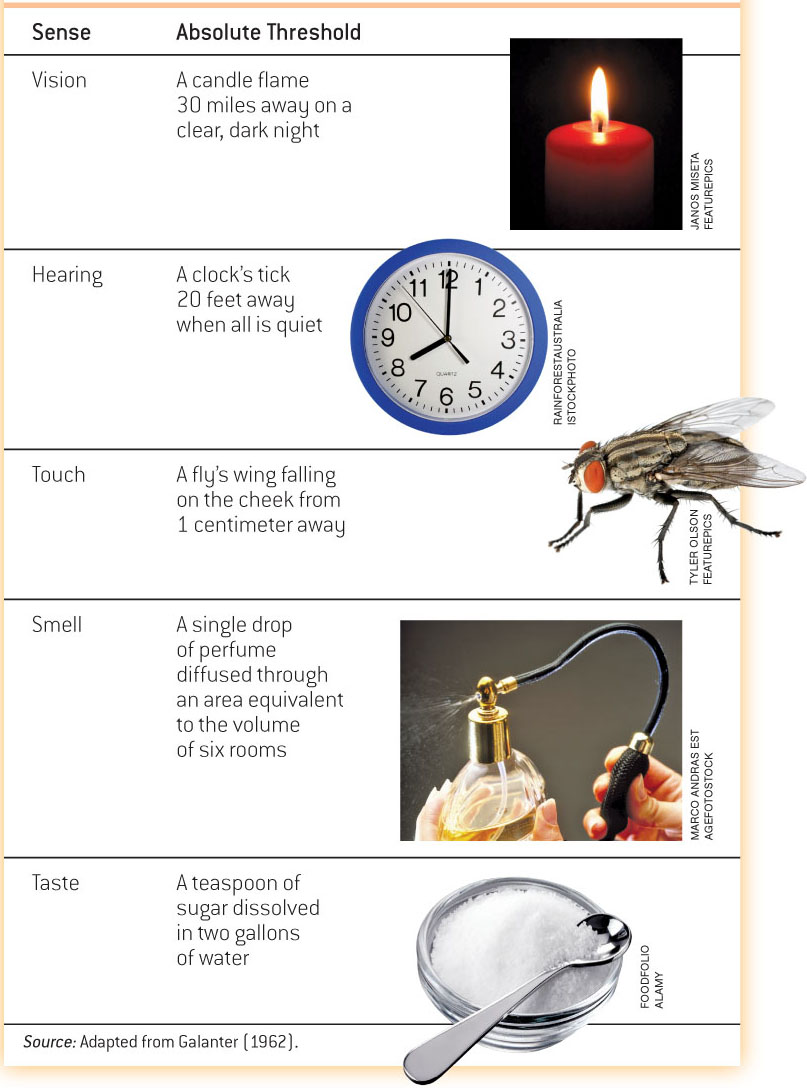

Psychophysicists begin the measurement process with a single sensory signal to determine precisely how much physical energy is required for an observer to become aware of a sensation. The simplest quantitative measurement in psychophysics is the absolute threshold, the minimal intensity needed to just barely detect a stimulus in 50% of the trials. A threshold is a boundary. The doorway that separates the inside from the outside of a house is a threshold, as is the boundary between two psychological states (awareness and unawareness, for example). In finding the absolute threshold for sensation, the two states in question are sensing and not sensing some stimulus. TABLE 4.2 lists the approximate sensory thresholds for each of the five senses.
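The 50% definition can be made concrete with a psychometric function, the curve that relates stimulus intensity to the proportion of trials on which the observer reports detecting the stimulus. The sketch below assumes a logistic shape for that curve. The function name `psychometric`, its parameters `threshold` and `slope`, and the numbers used are hypothetical and chosen only for illustration; they are not measurements for any real sense.

```python
import math


def psychometric(intensity: float, threshold: float, slope: float) -> float:
    """Probability of a "yes, I detected it" response at a given intensity.

    Hypothetical logistic model: the curve rises smoothly and passes through
    exactly 0.5 when intensity equals the threshold, matching the 50% definition
    of the absolute threshold.
    """
    return 1.0 / (1.0 + math.exp(-slope * (intensity - threshold)))


# Hypothetical observer: threshold of 10 units, slope of 0.8 per unit.
for level in (4, 8, 10, 12, 16):
    p = psychometric(level, threshold=10.0, slope=0.8)
    print(f"intensity {level:>2}: detected on {p:.0%} of trials")
```

A larger `slope` makes the rise from "never detected" to "always detected" steeper, but the threshold is still read off at the 50% point.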

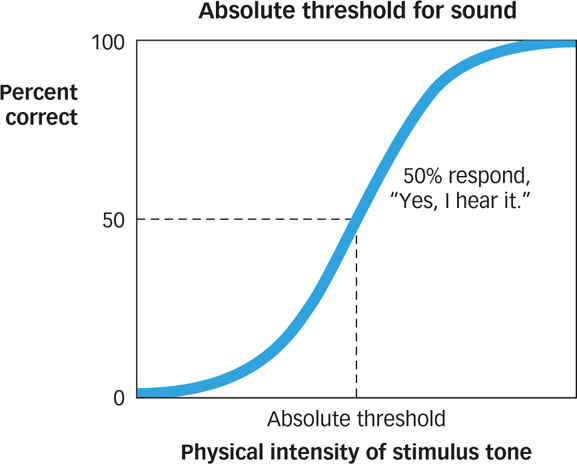

To measure the absolute threshold for detecting a sound, for example, an observer sits in a soundproof room wearing headphones linked to a computer. The experimenter presents a pure tone (the sort of sound made by striking a tuning fork), using the computer to vary the loudness or the length of time each tone lasts, and records how often the observer reports hearing that tone under each condition. The outcome of such an experiment is graphed in FIGURE 4.1. Notice from the shape of the curve that the transition from not hearing to hearing is gradual rather than abrupt.
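Because the transition is gradual, the 50% point usually falls between two of the tested levels. One simple way to estimate it, assumed here rather than taken from the text, is linear interpolation between the two levels that bracket 50% detection. The function name `estimate_threshold` and the listener data below are hypothetical.

```python
def estimate_threshold(levels, proportions, criterion=0.5):
    """Interpolate the intensity at which detection first reaches `criterion`.

    `levels` must be sorted in increasing order; `proportions` holds the fraction
    of trials on which the observer reported hearing the tone at each level.
    Returns the first crossing found, scanning from the quietest level upward.
    """
    pairs = list(zip(levels, proportions))
    for (x0, p0), (x1, p1) in zip(pairs, pairs[1:]):
        if p0 <= criterion <= p1 and p1 > p0:
            return x0 + (criterion - p0) * (x1 - x0) / (p1 - p0)
    raise ValueError("detection never crosses the criterion in this range")


# Hypothetical data from one listener (loudness in arbitrary units).
levels = [1, 2, 3, 4, 5, 6]
proportions = [0.02, 0.10, 0.35, 0.65, 0.90, 0.98]
print(estimate_threshold(levels, proportions))
```

Fitting a smooth curve to all of the data points is a common alternative; interpolation is shown here only because it is short and easy to follow.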

If we repeat this experiment for many different tones, we can observe and record the thresholds for tones ranging from very low to very high pitch. It turns out that people tend to be most sensitive to the range of tones corresponding to human conversation. If the tone is low enough, such as the lowest note on a pipe organ, most humans cannot hear it at all; we can only feel it. If the tone is high enough, we likewise cannot hear it, but dogs and many other animals can.

The absolute threshold is useful for assessing how sensitive we are to faint stimuli, but the human perceptual system is better at detecting changes in stimulation than the simple onset or offset of stimulation. When parents hear their infant’s cry, it’s useful to be able to differentiate the “I’m hungry” cry from the “I’m cranky” cry from the “something is biting my toes” cry. The just noticeable difference (JND) is the minimal change in a stimulus that can just barely be detected.

The JND is not a fixed quantity; rather, it depends on how intense the stimuli being measured are and on the particular sense being measured. Consider measuring the JND for a bright light. An observer in a dark room is shown a light of fixed intensity, called the standard (S), next to a comparison light that is slightly brighter or dimmer than the standard. When S is very dim, observers can see even a very small difference in brightness between the two lights: The JND is small. But if S is bright, a much larger increment is needed to detect the difference: The JND is larger.

What is the importance of proportion to the measurement of just noticeable difference?

When calculating a difference threshold, it is the proportion between stimuli that is important. This relationship was first noticed in 1834 by German physiologist Ernst Weber (Watson, 1978). Fechner applied Weber’s insight directly to psychophysics, resulting in a formal relationship called Weber's law: The just noticeable difference of a stimulus is a constant proportion despite variations in intensity. As an example, if you picked up a 1-
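In symbols, Weber’s law says that JND = k × S, where S is the intensity of the standard and k is a constant proportion (often called the Weber fraction) that differs from one sense to another. The sketch below assumes an illustrative k of 0.02; that value and the function names `weber_jnd` and `is_noticeable` are hypothetical, not measured values.

```python
def weber_jnd(standard: float, weber_fraction: float) -> float:
    """Weber's law: the JND is a constant proportion of the standard intensity."""
    return weber_fraction * standard


def is_noticeable(standard: float, comparison: float, weber_fraction: float) -> bool:
    """Predict whether the comparison differs from the standard by at least one JND."""
    return abs(comparison - standard) >= weber_jnd(standard, weber_fraction)


# Hypothetical Weber fraction of 0.02 (2%), for illustration only.
k = 0.02
for s in (50.0, 500.0):
    print(f"standard {s}: JND = {weber_jnd(s, k):.2f}")

print(is_noticeable(50.0, 51.5, k))    # a 1.5-unit difference vs a JND of 1.0
print(is_noticeable(500.0, 501.5, k))  # the same 1.5-unit difference vs a JND of 10.0
```

The same absolute difference is noticeable against a weak standard but not against a strong one, which is the pattern described for dim and bright lights.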

Signal Detection

Measuring absolute and difference thresholds requires a critical assumption: that a threshold exists! But much of what scientists know about biology suggests that such a discrete, all-

How accurate and complete are our perceptions of the world?

Our accurate perception of a sensory stimulus, then, can be somewhat haphazard. Whether in the psychophysics lab or out in the world, sensory signals face a lot of competition, or noise, which refers to all the other stimuli coming from the internal and external environment. Memories, moods, and motives intertwine with what you are seeing, hearing, and smelling at any given time. This internal “noise” competes with your ability to detect a stimulus with perfect, focused attention. Other sights, sounds, and smells in the world at large also compete for attention; you rarely have the luxury of attending to just one stimulus apart from everything else. As a consequence of noise, you may not perceive everything that you sense, and you may even perceive things that you haven’t sensed. Think of the last time you had a hearing test. You no doubt missed some of the quiet beeps that were presented, but you also probably said you heard beeps that weren’t really there.

An approach to psychophysics called signal detection theory holds that the response to a stimulus depends both on a person’s sensitivity to the stimulus in the presence of noise and on a person’s decision criterion. That is, observers consider the sensory evidence evoked by the stimulus and compare it to an internal decision criterion (Green & Swets, 1966; Macmillan & Creelman, 2005). If the sensory evidence exceeds the criterion, the observer responds by saying, “Yes, I detected the stimulus,” and if it falls short of the criterion, the observer responds by saying, “No, I did not detect the stimulus.”
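The decision rule itself is simple enough to write down. The sketch below assumes the common equal-variance Gaussian model, in which the sensory evidence on each trial is random noise that is shifted upward when a stimulus is present. The function names `respond` and `sensory_evidence`, along with the sensitivity and criterion values, are hypothetical.

```python
import random


def respond(evidence: float, criterion: float) -> str:
    """Decision rule: say "yes" only if the sensory evidence exceeds the criterion."""
    return "yes" if evidence > criterion else "no"


def sensory_evidence(signal_present: bool, sensitivity: float, rng: random.Random) -> float:
    """Hypothetical evidence model: unit-variance Gaussian noise, shifted up by
    `sensitivity` when the stimulus is actually present."""
    mean = sensitivity if signal_present else 0.0
    return rng.gauss(mean, 1.0)


rng = random.Random(1)  # fixed seed so the run is repeatable
for present in (True, True, False, False):
    e = sensory_evidence(present, sensitivity=1.5, rng=rng)
    print(f"stimulus present={present}, evidence={e:+.2f}, response={respond(e, criterion=0.75)}")
```

Because the evidence is noisy, a present stimulus can fall below the criterion and an absent one can rise above it, which is how noise produces both missed and imagined beeps.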

Signal detection theory allows researchers to quantify an observer’s response in the presence of noise. In a signal detection experiment, a stimulus, such as a dim light, is randomly presented or not. If you’ve ever taken an eye exam that checks your peripheral vision, you have an idea about this kind of setup: Lights of varying intensity are flashed at various places in the visual field, and your task is to respond anytime you see one. Observers in a signal detection experiment must decide whether they saw the light or not. If the light is presented and the observer correctly responds yes, the outcome is a hit. If the light is presented and the observer says no, the result is a miss. However, if the light is not presented and the observer nonetheless says it was, a false alarm has occurred. Finally, if the light is not presented and the observer responds no, a correct rejection has occurred: The observer accurately detected the absence of the stimulus.
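The four outcomes form a simple two-by-two table: whether the stimulus was presented, crossed with whether the observer said yes. The sketch below tallies a hypothetical session and computes the two rates usually reported from such a table: the hit rate (hits out of all stimulus-present trials) and the false-alarm rate (false alarms out of all stimulus-absent trials). The trial data and the name `classify` are invented for illustration.

```python
from collections import Counter


def classify(signal_present: bool, said_yes: bool) -> str:
    """Map one trial onto the four signal detection outcomes."""
    if signal_present:
        return "hit" if said_yes else "miss"
    return "false alarm" if said_yes else "correct rejection"


# Hypothetical session: (was the light presented?, did the observer say yes?)
trials = [(True, True), (True, False), (False, True), (False, False), (True, True)]
tally = Counter(classify(present, yes) for present, yes in trials)

hit_rate = tally["hit"] / (tally["hit"] + tally["miss"])
fa_rate = tally["false alarm"] / (tally["false alarm"] + tally["correct rejection"])
print(tally)
print(f"hit rate {hit_rate:.2f}, false-alarm rate {fa_rate:.2f}")
```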

Signal detection theory proposes a way to measure perceptual sensitivity (how effectively the perceptual system represents sensory events) separately from the observer’s decision-
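Under the equal-variance Gaussian model, the standard indices in the signal detection literature are d′ (d-prime), which is z(hit rate) − z(false-alarm rate) and reflects sensitivity, and c, which is −[z(hit rate) + z(false-alarm rate)] / 2 and reflects the criterion. Here z is the inverse of the standard normal cumulative distribution. The sketch below uses Python’s `statistics.NormalDist` (Python 3.8+); the two observers’ rates are hypothetical.

```python
from statistics import NormalDist


def sdt_measures(hit_rate: float, fa_rate: float) -> tuple[float, float]:
    """Return (d_prime, c) under the equal-variance Gaussian model.

    d' = z(H) - z(FA) indexes sensitivity; c = -(z(H) + z(FA)) / 2 indexes the
    criterion (positive c = conservative, negative c = liberal).
    Both rates must lie strictly between 0 and 1.
    """
    z = NormalDist().inv_cdf
    zh, zf = z(hit_rate), z(fa_rate)
    return zh - zf, -(zh + zf) / 2


# Two hypothetical observers with similar sensitivity but different criteria.
print(sdt_measures(0.84, 0.16))  # balanced responder
print(sdt_measures(0.98, 0.50))  # says "yes" much more readily
```

The second observer has more hits, but d′ shows that this comes mostly from a more liberal criterion (a negative c) rather than from better perception.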

Signal detection theory has practical applications at home, school, work, and even while driving. For example, a radiologist may have to decide whether a mammogram shows that a woman has breast cancer. The radiologist knows that certain features, such as a mass of a particular size and shape, are associated with the presence of cancer. But noncancerous features can have a very similar appearance to cancerous ones. The radiologist may decide on a strictly liberal criterion and check every possible case of cancer with a biopsy. This decision strategy minimizes the possibility of missing a true cancer but leads to many unnecessary biopsies. A strictly conservative criterion will cut down on unnecessary biopsies but will miss some treatable cancers.
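The trade-off between liberal and conservative criteria can be sketched numerically. The example assumes the equal-variance Gaussian model, with noise evidence distributed as N(0, 1) and signal evidence as N(d′, 1), and a hypothetical reader with d′ = 1.5. Sensitivity stays fixed; only the criterion moves. The function name and all of the numbers are illustrative assumptions.

```python
from statistics import NormalDist


def rates_for_criterion(d_prime: float, criterion: float) -> tuple[float, float]:
    """Hit and false-alarm rates when "yes" means evidence > criterion.

    Assumes noise evidence ~ N(0, 1) and signal evidence ~ N(d_prime, 1).
    """
    noise, signal = NormalDist(0.0, 1.0), NormalDist(d_prime, 1.0)
    hit_rate = 1.0 - signal.cdf(criterion)
    fa_rate = 1.0 - noise.cdf(criterion)
    return hit_rate, fa_rate


# Same hypothetical reader (d' = 1.5) using three different criteria.
for label, crit in (("liberal", 0.0), ("neutral", 0.75), ("conservative", 1.5)):
    h, fa = rates_for_criterion(1.5, crit)
    print(f"{label:>12}: hits {h:.0%}, misses {1 - h:.0%}, false alarms {fa:.0%}")
```

Moving the criterion down raises hits and false alarms together, just as checking every possible case with a biopsy catches more cancers at the cost of more unnecessary procedures.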

As another example, imagine that police are on the lookout for a suspected felon who they have reason to believe will be at a crowded soccer match. Although the law enforcement agency provided a fairly good description (6 feet tall, sandy brown hair, beard, glasses), there are still thousands of people to scan. Rounding up all men between 5′5″ and 6′5″ would probably produce a hit (the felon is caught), but at the expense of an extraordinary number of false alarms (many innocent people are detained and questioned).

These different types of errors have to be weighed against one another in setting the decision criterion. Signal detection theory offers a practical way to choose among criteria that permit decision makers to take into account the consequences of hits, misses, false alarms, and correct rejections (McFall & Treat, 1999; Swets, Dawes, & Monahan, 2000). For an example of a common everyday task that can interfere with signal detection, see the Real World box.
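One way to make this weighing concrete, assumed here rather than taken from the cited sources, is to assign a cost to each kind of error and choose the criterion with the lowest expected cost per case. The sketch keeps the equal-variance Gaussian assumption, treats hits and correct rejections as costing nothing, and finds the best criterion by a simple grid search. All names, probabilities, and costs are hypothetical.

```python
from statistics import NormalDist


def expected_cost(criterion, d_prime, p_signal, cost_miss, cost_false_alarm):
    """Average cost per case for one criterion (hits and correct rejections cost 0 here)."""
    noise, signal = NormalDist(0.0, 1.0), NormalDist(d_prime, 1.0)
    miss_rate = signal.cdf(criterion)       # signal present, evidence at or below criterion
    fa_rate = 1.0 - noise.cdf(criterion)    # signal absent, evidence above criterion
    return p_signal * miss_rate * cost_miss + (1 - p_signal) * fa_rate * cost_false_alarm


def best_criterion(d_prime, p_signal, cost_miss, cost_false_alarm):
    """Grid search over candidate criteria from -3.00 to +5.00 in steps of 0.01."""
    candidates = [i / 100 for i in range(-300, 501)]
    return min(
        candidates,
        key=lambda c: expected_cost(c, d_prime, p_signal, cost_miss, cost_false_alarm),
    )


# Hypothetical: the signal is present in 10% of cases, and d' = 1.5.
print(best_criterion(1.5, p_signal=0.10, cost_miss=20.0, cost_false_alarm=1.0))
print(best_criterion(1.5, p_signal=0.10, cost_miss=1.0, cost_false_alarm=1.0))
```

When a miss is made far more costly than a false alarm, the best criterion shifts toward the liberal end, which matches the radiologist’s reasoning about biopsies.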

THE REAL WORLD: Multitasking

By one estimate, using a cell phone while driving makes having an accident four times more likely (McEvoy et al., 2005). In response to highway safety experts and statistics such as this, state legislatures are passing laws that restrict, and sometimes ban, using mobile phones while driving. You might think that’s a fine idea…for everyone else on the road. But surely you can manage to punch in a number on a phone, carry on a conversation, or maybe even text-

The issue here is selective attention, or perceiving only what’s currently relevant to you. Perception is an active, moment-

This kind of multitasking creates problems when you need to react suddenly while driving. Researchers have tested experienced drivers in a highly realistic driving simulator, measuring their response times to brake lights and stop signs while they listened to the radio or carried on phone conversations about a political issue, among other tasks (Strayer, Drews, & Johnston, 2003). These experienced drivers reacted significantly more slowly during phone conversations than during the other tasks. This is because a phone conversation requires memory retrieval, deliberation, and planning what to say and often carries an emotional stake in the conversation topic. Tasks such as listening to the radio require far less attention.

The tested drivers became so engaged in their conversations that their minds no longer seemed to be in the car. Their slower braking response translated into an increased stopping distance that, depending on the driver’s speed, would have resulted in a rear-
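The link between reaction time and stopping distance is simple arithmetic: during any extra reaction time, the car keeps moving at full speed before the brakes engage. The sketch assumes that the braking distance itself is unchanged and that only the delay grows. The 0.2-second delay and the function name are hypothetical illustrations, not values reported by Strayer, Drews, and Johnston (2003).

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def extra_stopping_distance(speed_mph: float, extra_reaction_s: float) -> float:
    """Feet travelled at constant speed during the added reaction time."""
    feet_per_second = speed_mph * FEET_PER_MILE / SECONDS_PER_HOUR
    return feet_per_second * extra_reaction_s


# Hypothetical extra delay of 0.2 s while on the phone (illustrative only).
for speed in (30, 60):
    print(f"{speed} mph: {extra_stopping_distance(speed, 0.2):.1f} ft farther before braking")
```

Because the extra distance grows in direct proportion to speed, the same slowdown in reaction time matters more on the highway than on a city street.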

Interestingly, people who report that they multitask frequently in everyday life have difficulty in laboratory tasks that require focusing attention in the face of distractions compared with individuals who do not multitask much in daily life (Ophir, Nass, & Wagner, 2009). So how well do we multitask in several thousand pounds of metal hurtling down the highway? Unless you have two heads with one brain each—

Sensory Adaptation

What conditions have you already adapted to today? Sounds? Smells?

When you walk into a bakery, the aroma of freshly baked bread overwhelms you, but after a few minutes the smell fades. If you dive into cold water, the temperature is shocking at first, but after a few minutes you get used to it. When you wake up in the middle of the night for a drink of water, the bathroom light blinds you, but after a few minutes you no longer squint. These are all examples of sensory adaptation: Sensitivity to prolonged stimulation tends to decline over time as an organism adapts to current conditions.

Sensory adaptation is a useful process for most organisms. Imagine what your sensory and perceptual world would be like without it. When you put on your jeans in the morning, the feeling of rough cloth against your bare skin would be as noticeable hours later as it was in the first few minutes. The stink of garbage in your apartment when you first walk in would never dissipate. If you had to be constantly aware of how your tongue feels while it is resting in your mouth, you’d be driven to distraction. Our sensory systems respond more strongly to changes in stimulation than to constant stimulation. A stimulus that doesn’t change usually doesn’t require any action; your car probably emits a certain hum all the time that you’ve gotten used to. But a change in stimulation often signals a need for action. If your car starts making different kinds of noises, you’re not only more likely to notice them but also more likely to do something about them.
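A minimal way to capture both points is a detector whose response is the difference between the current stimulus and a slowly updating adaptation level. This model is assumed here for illustration and is not proposed in the text. The function name, the `rate` parameter, and the input values are hypothetical.

```python
def adapting_response(stimulus, rate=0.3):
    """Respond to the gap between the current stimulus and an adaptation level.

    On each time step the adaptation level moves a fraction `rate` of the way
    toward the current stimulus, so a constant input produces a shrinking
    response while a change produces a fresh, larger one.
    """
    level = 0.0
    responses = []
    for s in stimulus:
        responses.append(s - level)
        level += rate * (s - level)
    return responses


# Hypothetical input: a steady car hum at intensity 1.0, then a new noise raises it to 2.0.
hum = [1.0] * 8 + [2.0] * 4
for t, r in enumerate(adapting_response(hum)):
    print(f"t={t:2d} response={r:.2f}")
```

The response to the steady hum fades step by step, then jumps when the input changes, mirroring how you stop noticing a familiar sound but immediately notice a new one.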

Sensation and perception are critical to survival. Sensation is the simple stimulation of a sense organ, whereas perception organizes, identifies, and interprets sensation at the level of the brain.

All of our senses depend on the process of transduction, which converts physical signals from the environment into neural signals carried by sensory neurons into the central nervous system. In the 19th century, researchers developed psychophysics, an approach to studying perception that measures the strength of a stimulus and an observer’s sensitivity to that stimulus. Psychophysicists have developed procedures for measuring an observer’s absolute threshold, or the smallest intensity needed to just barely detect a stimulus, and the just noticeable difference (JND), or the smallest change in a stimulus that can just barely be detected.

Signal detection theory allows researchers to distinguish between an observer’s perceptual sensitivity to a stimulus and the observer’s criterion for making decisions about the stimulus. Sensory adaptation is the tendency for sensitivity to prolonged stimulation to decline over time.

Sensory adaptation occurs because sensitivity to lengthy stimulation tends to decline over time.