4.2 Operant Conditioning

We are pulled as well as pushed by events in our environment. That is, we do not just respond to stimuli, we also respond for stimuli. We behave in ways that are designed to obtain certain stimuli, or changes in our environment. My dog rubs against the door to be let out. I flip a switch to illuminate a room, press keys on my computer to produce words on a screen, and say, “Please pass the potatoes” to get potatoes. Most of my day seems to consist of behaviors of this sort, and I expect that most of my dog’s day would, too, if there were more things that he could control. Surely, if Pavlov’s dogs had had some way to control the delivery of food into their mouths, they would have done more than salivate; they would have pushed a lever, bitten open a bag, or done whatever was required to get the food.

Such actions are called operant responses because they operate on the world to produce some effect. They are also called instrumental responses because they function like instruments, or tools, to bring about some change in the environment. The process by which people or other animals learn to make operant responses is called operant conditioning, or instrumental conditioning. Operant conditioning can be defined as a learning process by which the effect, or consequence, of a response influences the future rate of production of that response. In general, operant responses that produce effects that are favorable to the animal increase in rate, and those that produce effects that are unfavorable to the animal decrease in rate.

From the Law of Effect to Operant Conditioning: From Thorndike to Skinner

Although the labels “operant” and “instrumental” were not coined until the 1930s, this learning process was studied well before that time, most notably by E. L. Thorndike.

Thorndike’s Puzzle-Box Procedure

17

How did Thorndike train cats to escape from a puzzle box? How did this research contribute to Thorndike’s formulation of the law of effect?

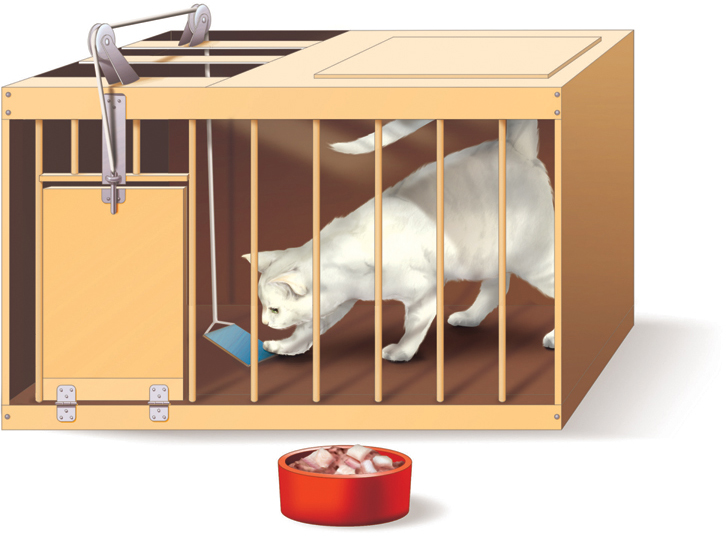

At about the same time that Pavlov began his research on conditioning, a young American student of psychology, Edward Lee Thorndike (1898), published a report on his own learning experiments with various animals, including cats. Thorndike’s training method was quite different from Pavlov’s, and so was his description of the learning process. His apparatus, which he called a puzzle box, was a small cage that could be opened from inside by some relatively simple act, such as pulling a loop or pressing a lever (see Figure 4.7).

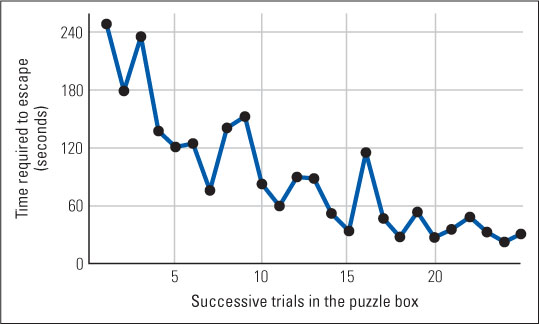

In one experiment, Thorndike deprived cats of food long enough to make them hungry and then placed them inside the puzzle box, one at a time, with food just outside it. When first placed inside, a cat would engage in many actions—such as clawing at the bars or pushing at the ceiling—in an apparent attempt to escape from the box and get at the food. Finally, apparently by accident, the cat would pull the loop or push the lever that opened the door to freedom and food. Thorndike repeated this procedure many times with each cat. He found that in early trials the animals made many useless movements before happening on the one that released the latch, but, on average, they escaped somewhat more quickly with each successive trial. After about 20 to 30 trials, most cats would trip the latch to freedom and food almost as soon as they were shut in (see Figure 4.8).

An observer who joined Thorndike on trial 31 might have been quite impressed by the cat’s intelligence; but, as Thorndike himself suggested, an observer who had sat through the earlier trials might have been more impressed by the creature’s stupidity. In any event, Thorndike came to view learning as a trial-and-error process, through which an individual gradually becomes more likely to make responses that produce beneficial effects.

Thorndike’s Law of Effect

Thorndike’s basic training procedure differed fundamentally from Pavlov’s. Pavlov produced learning by controlling the relationship between two stimuli in the animal’s environment so that the animal learned to use one stimulus to predict the occurrence of the other. Thorndike, in contrast, produced learning by altering the consequence of some aspect of the animal’s behavior. Thorndike’s cats, unlike Pavlov’s dogs, had some control over their environment. They could do more than merely predict when food would come; they could gain access to it through their own efforts.

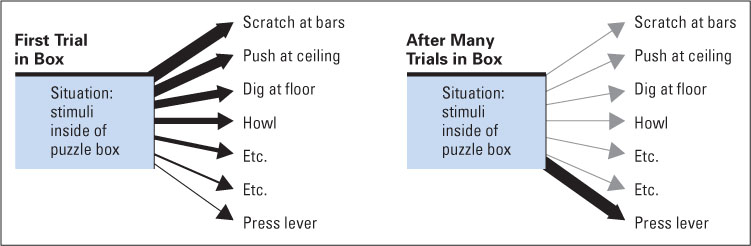

Partly on the basis of his puzzle-box experiments, Thorndike (1898) formulated the law of effect, which can be stated briefly as follows: Responses that produce a satisfying effect in a particular situation become more likely to occur again in that situation, and responses that produce a discomforting effect become less likely to occur again in that situation. In Thorndike’s puzzle-box experiments, the situation presumably consisted of all the sights, sounds, smells, internal feelings, and so on that were experienced by the hungry animal in the box. None of them elicited the latch-release response in reflex-like fashion; rather, taken as a whole, they set the occasion for many possible responses to occur, only one of which would release the latch. Once the latch was released, the satisfying effect, including freedom from the box and access to food, caused that response to strengthen; so the next time the cat was in the same situation, the probability of that response’s recurrence was increased (see Figure 4.9).
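Thorndike stated the law of effect in words, but its logic can be made concrete with a small simulation. The sketch below is a hypothetical toy model, not Thorndike’s own formulation. It assumes three things. Each action available in the puzzle box has a numeric strength. The simulated cat chooses among actions with probability proportional to their strengths. Only the latch-releasing action (here called `pull_loop`) produces the satisfying effect, which adds a fixed increment to that action’s strength. The action names and the parameters `trials` and `increment` are illustrative assumptions.

```python
import random


def simulate_puzzle_box(trials=30, increment=1.0, seed=0):
    """Hypothetical law-of-effect toy model.

    Returns (responses made before escaping on each trial, final strengths).
    """
    rng = random.Random(seed)
    # Illustrative repertoire; only "pull_loop" opens the door.
    strengths = {
        "claw_bars": 1.0,
        "push_ceiling": 1.0,
        "bite_bars": 1.0,
        "pace": 1.0,
        "pull_loop": 1.0,
    }
    actions = list(strengths)
    responses_per_trial = []
    for _ in range(trials):
        responses = 0
        while True:
            responses += 1
            # Choose an action with probability proportional to its strength.
            weights = [strengths[a] for a in actions]
            action = rng.choices(actions, weights=weights)[0]
            if action == "pull_loop":
                # Escape and food follow: the satisfying effect strengthens
                # the response that produced it.
                strengths["pull_loop"] += increment
                break
            # Other actions have no effect, so their strengths stay unchanged.
        responses_per_trial.append(responses)
    return responses_per_trial, strengths


if __name__ == "__main__":
    counts, _ = simulate_puzzle_box()
    print("first 5 trials:", counts[:5])
    print("last 5 trials: ", counts[-5:])
```

With the default settings, the loop’s strength on trial k is k while the other four actions stay at 1. The chance that any single response is a loop pull is therefore k/(k + 4). As a result, the expected number of responses per trial falls from 5 on the first trial toward 1 on later ones. In this toy model, improvement is gradual and comes from trial and error, not from a sudden insight.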

Skinner’s Method of Studying and Describing Operant Conditioning

18

How did Skinner’s method for studying learning differ from Thorndike’s, and why did he prefer the term reinforcement to Thorndike’s satisfaction?

The psychologist who, over a career spanning more than half a century, did the most to extend and popularize the law of effect was B. F. (Burrhus Fredric) Skinner. Like Watson, Skinner was a confirmed behaviorist. He believed that principles of learning are the most fundamental principles in psychology, and he wanted to study and describe learning in ways that do not entail references to mental events. Unlike Watson, however, he did not consider the stimulus-response reflex to be the fundamental unit of all behavior. Thorndike’s work provided Skinner with a model of how nonreflexive behaviors could be altered through learning.

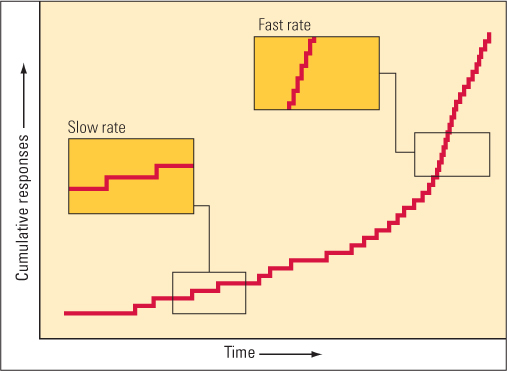

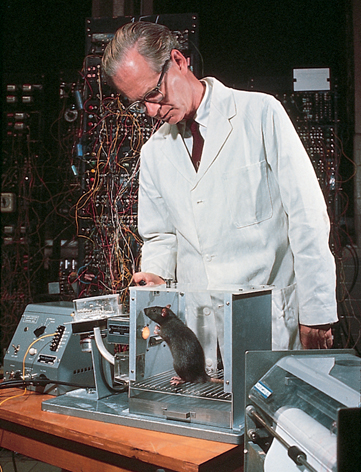

As a graduate student at Harvard University around 1930, Skinner developed an apparatus for studying animals’ learning that was considerably more efficient than Thorndike’s puzzle boxes. His device, commonly called a “Skinner box,” is a cage with a lever or another mechanism in it that the animal can operate to produce some effect, such as delivery of a pellet of food or a drop of water (see Figure 4.10). The advantage of Skinner’s apparatus is that the animal, after completing a response and experiencing its effect, is still in the box and free to respond again. With Thorndike’s puzzle boxes and similar apparatuses such as mazes, the animal has to be put back into the starting place at the end of each trial. With Skinner’s apparatus, the animal is simply placed in the cage and left there until the end of the session. Throughout the session, there are no constraints on when the animal may or may not respond. Responses (such as lever presses) can easily be counted automatically, and the learning process can be depicted as changes in the rate of responses (see Figure 4.11). Because each pellet of food or drop of water is very small, the hungry or thirsty animal makes many responses before becoming satiated.

Skinner developed not only a more efficient apparatus for studying such learning but also a new vocabulary for talking about it. He coined the terms operant response to refer to any behavioral act that has some effect on the environment and operant conditioning to refer to the process by which the effect of an operant response changes the likelihood of the response’s recurrence. Thus, in a typical experiment with a Skinner box, pressing the lever is an operant response, and the increased rate of lever pressing that occurs when the response is followed by a pellet of food exemplifies operant conditioning. Applying the same terms to Thorndike’s experiments, the movement that opens the latch is an operant response, and the increase from trial to trial in the speed with which that movement is made exemplifies operant conditioning.

Skinner (1938) proposed the term reinforcer, as a replacement for such words as satisfaction and reward, to refer to a stimulus change that follows a response and increases the subsequent frequency of that response. Skinner preferred this term because it makes no assumptions about anything happening in the mind; it merely refers to the effect that the presentation of the stimulus has on the animal’s subsequent behavior. Thus, in a typical Skinner-box experiment, the delivery of a pellet of food or drop of water following a lever-press response is a reinforcer. In Thorndike’s experiment, escape from the cage and access to the food outside it were reinforcers. Some stimuli, such as food for a food-deprived animal or water for a water-deprived one, are naturally reinforcing. Other stimuli have reinforcing value only because of previous learning, and Skinner referred to these as conditioned reinforcers. An example of a conditioned reinforcer for humans is money. Once a person learns what money can buy, he or she will learn to behave in ways that yield more of it.

One fundamental difference between Skinner’s theory of operant conditioning and Pavlov’s theory of classical conditioning is this: In operant conditioning the individual emits, or generates, a behavior that has some effect on the environment, whereas in classical conditioning a stimulus elicits a response from the organism. From this perspective, Skinner’s theory of learning, like Darwin’s theory of evolution, is a selectionist theory (Skinner, 1974). Animals (including humans) emit behaviors, some of which get reinforced (selected) by the environment. Those that get reinforced increase in frequency in those environments, and those that do not get reinforced decrease in frequency in those environments. Skinner’s selectionist thinking applies Darwin’s logic not to change over evolutionary time but to change over the lifetime of an individual.

Operant Conditioning Without Awareness

To Skinner and his followers, operant conditioning is not simply one kind of learning to be studied; it represents an entire approach to psychology. In his many books and articles, Skinner argued that essentially all the things we do, from the moment we arise in the morning to the moment we fall asleep at night, can be understood as operant responses that occur because of their past reinforcement. In some cases, we are clearly aware of the relationship between our responses and reinforcers, as when we place money in a vending machine for a candy bar or study to obtain a good grade on a test. In other cases, we may not be aware of the relationship, yet it exists and, according to Skinner (1953, 1966), is the real reason for our behavior. Skinner argued that awareness—which refers to a mental phenomenon—is not a useful construct for explaining behavior. We can never be sure what a person is aware of, but we can see directly the relationship between responses and reinforcers and use that to predict what a person will learn to do.

19

What is some evidence that people can be conditioned to make an operant response without awareness of the conditioning process? How is this relevant for understanding how people acquire motor skills?

An illustration of conditioning without awareness is found in an experiment conducted many years ago, in which adults listened to music over which static was occasionally superimposed (Hefferline et al., 1959). Unbeknownst to the subjects, they could turn off the static by making an imperceptibly small twitch of the left thumb. Some subjects (the completely uninformed group) were told that the experiment had to do with the effect of music on body tension; they were told nothing about the static or how it could be turned off. Others (the partly informed group) were told that static would sometimes come on, that they could turn it off with a specific response, and that their task was to discover that response.

The result was that all subjects in both groups learned to make the thumb-twitch response, thereby keeping the static off for increasingly long periods. But, when questioned afterward, none were aware that they had controlled the static with thumb twitches. Subjects in the uninformed group said that they had noticed the static decline over the session but were unaware that they had caused the decline. Most in the partly informed group said that they had not discovered how to control the static. Only one participant believed that he had discovered the effective response, and he claimed that it involved “subtle rowing movements with both hands, infinitesimal wriggles of both ankles, a slight displacement of the jaw to the left, breathing out, and then waiting” (Hefferline et al., 1959, p. 1339)! While he was consciously making this superstitious response, he was unconsciously learning to make the thumb twitch.

If you think about it, the results of this experiment should not come as a great surprise. We constantly learn finely tuned muscle movements as we develop skill at riding a bicycle, hammering nails, making a jump shot, playing a musical instrument, or any other activity. The probable reinforcers are, respectively, the steadier movement on the bicycle, the straight downward movement of the nail we are pounding, the “swoosh” when the ball goes through the hoop, and the improved sound from the instrument; but often we do not know just what we are doing differently to produce these good effects. Our knowledge is often similar to that of the neophyte carpenter who said, after an hour’s practice at hammering, “The nails you’re giving me now don’t bend as easily as the ones you were giving me before.”

Principles of Reinforcement

Skinner and his followers identified and studied many behavioral phenomena associated with operant conditioning, including the ones described in this section.

Shaping of New Operant Responses

20

How can we use operant conditioning to get an animal to do something that it currently doesn’t do?

Suppose you put a rat in a Skinner box, and it never presses the lever. Or you place a cat in a puzzle box, and it never pulls the loop. Or you want to train your dog to jump through a hoop, but it never makes that leap. In operant conditioning, the reinforcer comes after the subject produces the desired response. But if the desired response never occurs, it can never be reinforced. The solution to this problem is a technique called shaping, in which successively closer approximations to the desired response are reinforced until the desired response finally occurs and can be reinforced.

Imagine that you want to shape a lever-press response in a rat whose initial rate of lever pressing is zero. To begin, you might present the reinforcer (such as a pellet of food) whenever the rat goes anywhere near the lever. As a result, the rat will soon be spending most of its time near the lever and will occasionally touch it. When that begins to happen, you might present the reinforcer only when the rat touches the lever, which will increase the rate of touching. Some touches will be more vigorous than others and will produce the desired lever movement. When that has happened a few times, you can stop reinforcing any other response; your animal’s lever pressing has now been shaped.
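The shaping procedure just described is essentially a loop: reinforce any behavior that meets the current criterion, then require a slightly closer approximation. The sketch below is a hypothetical simplification, not a laboratory protocol. It reduces the rat’s behavior to a single number, its distance in centimeters from the lever, and counts a distance at or below `press_zone` as a press. It also assumes that the rat’s behavior varies randomly around whatever it was last reinforced for doing. All parameter names and values are illustrative.

```python
import random


def shape_lever_press(start_distance=50.0, shrink=0.8, noise=5.0,
                      press_zone=1.0, max_steps=10_000, seed=1):
    """Hypothetical shaping sketch.

    Behavior is reduced to one number: the rat's distance (cm) from the
    lever on each response; a distance <= press_zone counts as a press.
    Returns (responses observed, reinforcers delivered, lever pressed?).
    """
    rng = random.Random(seed)
    typical = start_distance    # where the rat's behavior is currently centered
    criterion = start_distance  # reinforce anything at least this close
    reinforcers = 0
    for step in range(1, max_steps + 1):
        # Behavior varies naturally around its current center.
        distance = max(0.0, rng.gauss(typical, noise))
        if distance <= criterion:
            reinforcers += 1
            if distance <= press_zone:
                return step, reinforcers, True  # the target response occurred
            # The reinforced approximation becomes the rat's new typical behavior,
            typical = distance
            # and the trainer now requires a somewhat closer approximation.
            criterion = max(press_zone, criterion * shrink)
    return max_steps, reinforcers, False
```

Calling `shape_lever_press(noise=0.0, max_steps=100)` returns `(100, 1, False)`. A simulated rat whose behavior never varies earns one reinforcer and then never meets the tightened criterion again. In this toy model, shaping works only because some responses happen to come closer to the target than others, just as some of the rat’s touches are more vigorous than others.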

Animal trainers regularly use this technique in teaching domestic or circus animals to perform new tricks or tasks (Pryor, 2006), and we all tend to use it, more or less deliberately and not always skillfully, when we teach new skills to other people. For example, when teaching a novice to play tennis, we tend at first to offer praise for any swing of the racket that propels the ball in the right general direction, but as improvement occurs, we begin to bestow our praise only on closer and closer approximations of an ideal swing.

Extinction of Operantly Conditioned Responses

21

In what ways is extinction in operant conditioning similar to extinction in classical conditioning?

An operantly conditioned response declines in rate and eventually disappears if it no longer results in a reinforcer. Rats stop pressing levers if no food pellets appear, cats stop scratching at doors if nobody responds by letting them out, and people stop smiling at those who don’t smile back. The absence of reinforcement of the response and the consequent decline in response rate are both referred to as extinction. Extinction in operant conditioning is analogous to extinction in classical conditioning. Just as in classical conditioning, extinction in operant conditioning is not true “unlearning” of the response. Passage of time following extinction can lead to spontaneous recovery of responding, and a single reinforced response following extinction can lead the individual to respond again at a rapid rate.

Schedules of Partial Reinforcement

In many cases, in the real world as well as in laboratory experiments, a particular response only sometimes produces a reinforcer. This is referred to as partial reinforcement, to distinguish it on the one hand from continuous reinforcement, where the response is always reinforced, and on the other hand from extinction, where the response is never reinforced. Continuous reinforcement is the most efficient way to establish a new response, but once the response has been learned, an animal will continue to perform it under partial reinforcement. Skinner and other operant researchers have described the following four basic types of partial-reinforcement schedules:

22

How do the four types of partial-reinforcement schedules differ from one another, and why do responses generally occur faster to ratio schedules than to interval schedules?

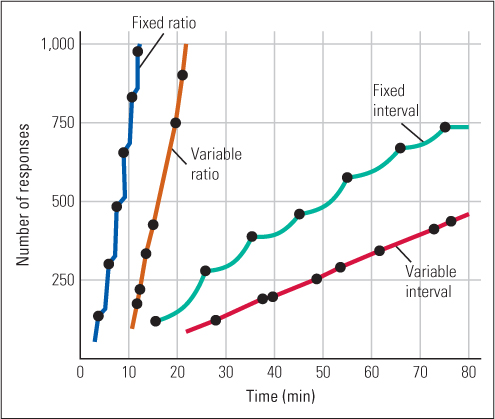

- In a fixed-ratio schedule, a reinforcer occurs after every nth response, where n is some whole number greater than 1. For example, in a fixed-ratio 5 schedule every fifth response is reinforced.

- A variable-ratio schedule is like a fixed-ratio schedule except that the number of responses required before reinforcement varies unpredictably around some average. For example, in a variable-ratio 5 schedule reinforcement might come after 7 responses one time, after 3 the next, and so on, in random order, but the average number of responses required for reinforcement would be 5.

- In a fixed-interval schedule, a fixed period of time must elapse between one reinforced response and the next. Any response occurring before that time elapses is not reinforced. For example, in a fixed-interval 30-second schedule the first response that occurs at least 30 seconds after the last reinforcer is reinforced.

- A variable-interval schedule is like a fixed-interval schedule except that the period that must elapse before a response will be reinforced varies unpredictably around some average. For example, in a variable-interval 30-second schedule the average period required before the next response will be reinforced is 30 seconds.
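Each of the four schedules is a simple rule that decides whether a given response is reinforced. The sketch below expresses them as small Python classes with a shared `respond(t)` method, where `t` is the time of the response in seconds since the session began. The class and method names are illustrative. The variable schedules also rest on an assumption: they draw their requirements from uniform distributions with the stated average, whereas actual experiments use a variety of distributions.

```python
import random


class FixedRatio:
    """Reinforce every n-th response."""

    def __init__(self, n):
        self.n, self.count = n, 0

    def respond(self, t):
        self.count += 1
        if self.count == self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reinforce after an unpredictable number of responses averaging `mean`.

    Assumption: each requirement is drawn uniformly from 1 .. 2*mean - 1.
    """

    def __init__(self, mean, seed=None):
        self.mean, self.count = mean, 0
        self.rng = random.Random(seed)
        self.required = self.rng.randint(1, 2 * mean - 1)

    def respond(self, t):
        self.count += 1
        if self.count == self.required:
            self.count = 0
            self.required = self.rng.randint(1, 2 * self.mean - 1)
            return True
        return False


class FixedInterval:
    """Reinforce the first response at least `interval` s after the last reinforcer."""

    def __init__(self, interval):
        self.interval, self.last = interval, 0.0  # timing starts at session onset

    def respond(self, t):
        if t - self.last >= self.interval:
            self.last = t
            return True
        return False


class VariableInterval:
    """Like FixedInterval, but each required wait varies around `mean` seconds.

    Assumption: each wait is drawn uniformly from 0 .. 2*mean seconds.
    """

    def __init__(self, mean, seed=None):
        self.mean, self.last = mean, 0.0
        self.rng = random.Random(seed)
        self.wait = self.rng.uniform(0, 2 * mean)

    def respond(self, t):
        if t - self.last >= self.wait:
            self.last = t
            self.wait = self.rng.uniform(0, 2 * self.mean)
            return True
        return False
```

For example, feeding ten responses to `FixedRatio(5)` yields reinforcement on the 5th and 10th responses only. `FixedInterval(30)` reinforces a response at t = 30 s but not a following one at t = 45 s, because only 15 s have passed since the last reinforcer.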

The different schedules produce different response rates in ways that make sense if we assume that the person or animal is striving to maximize the number of reinforcers and minimize the number of unreinforced responses (see Figure 4.12). Ratio schedules (whether fixed or variable) produce reinforcers at a rate that is directly proportional to the rate of responding, so, not surprisingly, such schedules typically induce rapid responding. With interval schedules, in contrast, the maximum number of reinforcers available is set by the clock, and such schedules result—also not surprisingly—in relatively low response rates that depend on the length of the fixed or average interval.
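A little arithmetic shows why ratio schedules reward fast responding and interval schedules do not. For simplicity, the sketch below assumes perfectly evenly spaced responses and uses a fixed-interval schedule for the interval case. The function names are illustrative, and real animals respond in bursts and pauses rather than at a steady rate.

```python
import math


def ratio_payoff(responses_per_min, ratio):
    """Reinforcers per hour on a ratio schedule (fixed or variable).

    On average one reinforcer is earned per `ratio` responses, so the payoff
    is directly proportional to the response rate.
    """
    return responses_per_min * 60 / ratio


def interval_payoff(responses_per_min, interval_s):
    """Reinforcers per hour on a fixed-interval schedule.

    Assumes perfectly evenly spaced responses. The first response at or after
    `interval_s` seconds since the last reinforcer is reinforced, so each
    cycle lasts a whole number of inter-response gaps.
    """
    gap = 60 / responses_per_min                # seconds between responses
    cycle = math.ceil(interval_s / gap) * gap   # time from one reinforcer to the next
    return 3600 / cycle


if __name__ == "__main__":
    for rate in (1, 10, 60, 120):
        print(f"{rate:>3} responses/min: FR-5 -> {ratio_payoff(rate, 5):6.0f}/h, "
              f"FI-30 s -> {interval_payoff(rate, 30):4.0f}/h")
```

With a ratio of 5, doubling the response rate from 60 to 120 responses per minute doubles the payoff from 720 to 1,440 reinforcers per hour. On a fixed-interval 30-second schedule, the same change leaves the payoff at 120 per hour, the ceiling set by the clock (3,600 s divided by 30 s). Only very slow responding, such as one response per minute, pulls the interval payoff below that ceiling (to 60 per hour).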

23

How do variable-ratio and variable-interval schedules produce behavior that is highly resistant to extinction?

Behavior that has been reinforced on a variable-ratio or variable-interval schedule is often very difficult to extinguish. If a rat is trained to press a lever only on continuous reinforcement and then is shifted to extinction conditions, the rat will typically make a few bursts of lever-press responses and then quit. But if the rat has been shifted gradually from continuous reinforcement to an ever-stingier variable schedule and then finally to extinction, it will often make hundreds of unreinforced responses before quitting. Rats and humans who have been reinforced on stingy variable schedules have experienced reinforcement after long, unpredictable periods of no reinforcement, so they have learned (for better or worse) to be persistent.

Skinner (1953) and others (Rachlin, 1990) have used this phenomenon to explain why gamblers often persist at the slot machine or dice game even after long periods of losing: They are hooked by the variable-ratio schedule of payoff that characterizes nearly every gambling device or game. From a cognitive perspective, we could say that the gambler keeps playing because of his or her knowledge that the very next bet or throw of the dice might be the one that pays off.

Distinction Between Positive and Negative Reinforcement

24

How does negative reinforcement differ from positive reinforcement?

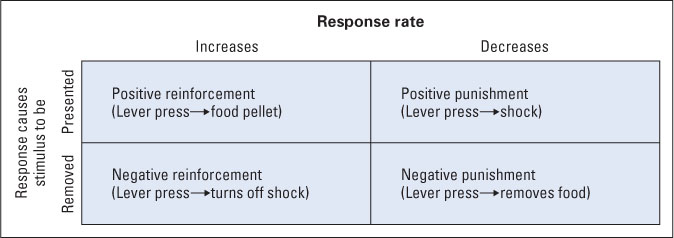

In Skinner’s terminology, reinforcement refers to any process that increases the likelihood that a particular response will occur. Reinforcement can be positive or negative. Positive reinforcement occurs when the arrival of some stimulus following a response makes the response more likely to recur. The stimulus in this case is called a positive reinforcer. Food pellets, money, words of praise, and anything else that organisms will work to obtain can be used as positive reinforcers. Negative reinforcement, in contrast, occurs when the removal of some stimulus following a response makes the response more likely to recur. The stimulus in this case is called a negative reinforcer. Electric shocks, loud noises, unpleasant company, scoldings, and everything else that organisms will work to get away from can be used as negative reinforcers. The one example of negative reinforcement discussed so far was the experiment in which a thumb-twitch response was reinforced by the temporary removal of unpleasant static.

Notice that positive and negative here do not refer to the direction of change in the response rate; that increases in either case. Rather, the terms indicate whether the reinforcing stimulus appears (positive) or disappears (negative) as a result of the operant response. Negative reinforcement is not the same as punishment, although many students of psychology confuse the two.

Distinction Between Reinforcement and Punishment

25

How does punishment differ from reinforcement, and how do the two kinds of punishment parallel the two kinds of reinforcement?

In Skinner’s terminology, punishment is the opposite of reinforcement. It is the process through which the consequence of a response decreases the likelihood that the response will recur. As with reinforcement, punishment can be positive or negative. In positive punishment, the arrival of a stimulus, such as electric shock for a rat or scolding for a person, decreases the likelihood that the response will occur again. In negative punishment, the removal of a stimulus, such as taking food away from a hungry rat or money away from a person, decreases the likelihood that the response will occur again. Both types of punishment can be distinguished from extinction, which, you recall, is the decline in a previously reinforced response when it no longer produces any effect.

To picture the distinction between positive and negative punishment, and to see their relation to positive and negative reinforcement, look at Figure 4.13. The terms are easy to remember if you recall that positive and negative always refer to the arrival or removal of a stimulus and that reinforcement and punishment always refer to an increase or decrease in the likelihood that the response will recur.

The figure also makes it clear that the same stimuli that can serve as positive reinforcers when presented can serve as negative punishers when removed, and the same stimuli that can serve as positive punishers when presented can serve as negative reinforcers when removed. It is easy to think of the former as “desired” stimuli and the latter as “undesired,” but Skinner avoided such mentalistic terms. He argued that the only way to tell whether a stimulus is desired or undesired is by observing its reinforcing or punishing effects, so these mentalistic terms add nothing to our understanding. For instance, an adult who scolds a child for misbehaving may think that the scolding is an undesired stimulus that will punish the behavior, but, in fact, it may be a desired stimulus to the child who seeks attention. To Skinner, the proof is in the result. If scolding causes the undesired behavior to become less frequent, then scolding is acting as a positive punisher; if scolding causes the behavior to become more frequent, it is acting as a positive reinforcer.
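The two-by-two logic of Figure 4.13 reduces to two independent questions, which the small function below makes explicit. It is an illustrative sketch with hypothetical argument names. One argument records whether a stimulus was added or removed after the response. The other records the observed change in how often the response occurs. In keeping with Skinner’s approach, the function never asks whether the stimulus was “desired”; the label follows only from what actually happened to the behavior.

```python
def name_consequence(stimulus, behavior):
    """Name an operant process in Skinner's terms.

    stimulus: "added" or "removed" after the response (positive vs. negative)
    behavior: observed change in response frequency, "increased" or
              "decreased" (reinforcement vs. punishment)
    """
    sign = {"added": "positive", "removed": "negative"}
    effect = {"increased": "reinforcement", "decreased": "punishment"}
    if stimulus not in sign or behavior not in effect:
        raise ValueError("expected stimulus in {'added', 'removed'} and "
                         "behavior in {'increased', 'decreased'}")
    return f"{sign[stimulus]} {effect[behavior]}"
```

For the thumb-twitch experiment (static removed, twitching increased), the function returns `'negative reinforcement'`. For a scolding followed by more misbehavior (scolding added, misbehavior increased), it returns `'positive reinforcement'`, which matches Skinner’s conclusion about the attention-seeking child.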

Discrimination Training in Operant Conditioning

26

How can an animal be trained to produce an operant response only when a specific cue is present?

Through discrimination training, an animal can be conditioned to make an operant response to a stimulus more specific than the entire inside of a Skinner box. Discrimination training in operant conditioning is analogous to discrimination training in classical conditioning. The essence of the procedure is to reinforce the animal’s response when a specific stimulus is present and to extinguish the response when the stimulus is absent. Thus, to train a rat to respond to a tone by pressing a lever, a trainer would alternate between reinforcement periods with the tone on (during which the animal gets food pellets for responding) and extinction periods with the tone off. After considerable training of this sort, the rat will begin pressing the lever as soon as the tone comes on and stop as soon as it goes off. The tone in this example is called a discriminative stimulus.
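The alternating procedure can be illustrated with a toy model. The sketch below uses a simple linear update rule, an assumption chosen for illustration rather than Skinner’s own account. During each tone-on period, responses are reinforced, so the tendency to respond moves a fixed fraction `rate` of the way toward 1. During each tone-off period, responses go unreinforced, so the tendency moves the same fraction toward 0. For simplicity, the model also assumes that learning in one condition does not generalize to the other.

```python
def discrimination_training(periods=20, rate=0.2, start=0.5):
    """Hypothetical toy model of operant discrimination training.

    Returns the tendency to respond (0 to 1) with the tone on and off.
    Periods alternate, beginning with the tone on.
    """
    tendency = {"tone_on": start, "tone_off": start}
    for i in range(periods):
        if i % 2 == 0:
            # Tone on: lever presses are reinforced, so responding strengthens.
            tendency["tone_on"] += rate * (1.0 - tendency["tone_on"])
        else:
            # Tone off: presses produce nothing (extinction), so responding weakens.
            tendency["tone_off"] -= rate * tendency["tone_off"]
    return tendency
```

With the default values, after ten tone-on and ten tone-off periods the tendency to respond is about 0.95 with the tone on and about 0.05 with it off (exactly `1 - 0.5 * 0.8**10` and `0.5 * 0.8**10`). The simulated rat therefore presses when the tone comes on and stops when it goes off.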

Operant discrimination training, like the analogous procedure in classical conditioning, can be used to study the sensory abilities of animals and human infants, who cannot describe their sensations in words. In one experiment, for example, researchers trained newborns to change their rate of sucking on a nipple in order to hear (through headphones) either a story that their mothers had read aloud during the last few weeks of pregnancy or a different story (DeCasper & Spence, 1986). Thus, the babies learned to make two different responses (increasing or decreasing their sucking rate) to two different discriminative stimuli. This demonstrated, among other things, that the newborns could hear the difference between the two stories and would alter their sucking rate to hear the one their mothers had read to them while they were still in the womb. Operant discrimination training can also be used to study animals’ understanding of concepts.

Discrimination and Generalization as Indices of Concept Understanding

In classical conditioning, as you may recall, animals that have learned to respond to a conditioned stimulus will respond also to new stimuli that they perceive as similar to the original conditioned stimulus, a phenomenon referred to as generalization. Generalization also occurs in operant conditioning. After operant discrimination training, animals will respond to new stimuli that they perceive as similar to the discriminative stimulus. Such generalization can be used to test an animal’s understanding of a concept.

Consider an experiment conducted by Richard Herrnstein (1979), who operantly conditioned pigeons to peck a key for grain, using photographs of natural scenes as discriminative stimuli. Herrnstein divided the photos into two categories—those that had at least one tree or portion of a tree somewhere in the scene and those that didn’t. The pigeons received grain (on a variable-ratio schedule of reinforcement) for pecking the key whenever a “tree” photo was shown and nothing when a “no tree” photo was shown. In the first phase, 80 photos were presented each day, 40 of which contained trees and 40 of which didn’t. By the end of 5 days of such training—that is, after five presentations of all 80 photos—all the birds were successfully discriminating between the two categories of photos, pecking when the photo contained a tree and not pecking otherwise (see Figure 4.14).

27

How was discrimination training used to demonstrate that pigeons understand the concept of a tree?

Now, the question is: What had Herrnstein’s pigeons learned? Did they learn each photo as a separate stimulus, unrelated to the others, or did they learn a rule for categorizing the photos? Such a rule might be stated by a person as follows: “Respond whenever a photo includes a tree or part of a tree, and don’t respond otherwise.” To determine whether the pigeons had acquired such a rule, Herrnstein tested them with new photos, which they had never seen before, under conditions in which no grain was given. He found that the pigeons immediately pecked at a much higher rate when a new photo contained a tree than when it did not. In fact, the pigeons were as accurate with new photos as they were with photos that had been used during training. The birds apparently based their responses on a concept of trees (Herrnstein, 1990). A concept, as the term is used here, can be defined as a rule for categorizing stimuli into groups. The pigeons’ tree concept, in this case, must have guided their decision to peck or not to peck.

How might one describe the pigeons’ tree concept? That question is not easily answered. It is not the case, for example, that the pigeons simply learned to peck at photos that included a patch of green. Many of the “no tree” photos had green grass, and some of the tree photos were of fall or winter scenes in New England, where the trees had red and yellow leaves or none at all. In some photos, only a small portion of a tree was apparent or the tree was in the distant background. So the method by which the pigeons distinguished tree photos from others cannot be easily stated in stimulus terms, although ultimately it must have been based on the birds’ analysis of the stimulus material. Similar experiments have shown that pigeons can acquire concepts pertaining to such objects as cars, chairs, the difference between male and female human faces, and even abstract symbols (Cook & Smith, 2006; Loidolt et al., 2003; Wasserman, 1995). The point is that, even for pigeons—and certainly for humans—sophisticated analysis of the stimulus information occurs before the stimulus is used to guide behavior.

When Rewards Backfire: The Overjustification Effect in Humans

28

Why might a period of reward lead to a subsequent decline in response rate when the reward is no longer available?

In human beings, rewards have a variety of effects, either positive or negative, depending on the conditions in which they are used and the meanings that they engender in those who receive them.

Consider an experiment conducted with nursery-school children (Lepper & Greene, 1978). Children in one group were rewarded with attractive “Good Player” certificates for drawing with felt-tipped pens. This had the immediate effect of leading them to spend more time at this activity than did children in the other group (who were not rewarded). Later, however, when certificates were no longer given, the previously rewarded children showed a sharp drop in their use of the pens—to a level well below that of children in the unrewarded group. So, the long-term effect of the period of reward was to decrease the children’s use of felt-tipped pens.

Many subsequent experiments, with people of various ages, have produced similar results. For example, explicitly rewarding children for sharing leads to less, not more, sharing later on. In studies with 20-month-old toddlers (Warneken & Tomasello, 2008) and with 6- to 12-year-old children (Fabes et al., 1989), children who were rewarded for sharing (for example, by being permitted to play with a special toy) later shared less when given the opportunity than did children who received only praise or no reward. Other research shows that rewards tied to specific performance or to completion of a task are negatively associated with creativity (Byron & Khazanchi, 2012). The drop in performance following a period of reward is particularly likely to occur when the task is something that is initially enjoyed for its own sake and the reward is given in such a manner that it seems deliberately designed to motivate the participants to engage in the task (Lepper & Henderlong, 2000). This decline is called the overjustification effect because the reward presumably provides an unneeded extra justification for engaging in the behavior. The result is that people come to regard the task as something that they do for an external reward rather than for its own sake (that is, as work rather than play). When they come to regard the task as work, they stop doing it once they no longer receive a payoff for it, even though they would otherwise have continued to do it for fun.

Such findings suggest that some rewards used in schools may have negative long-term effects. For example, rewarding children for reading might cause them to think of reading as work rather than fun, which would lead them to read less on their own. The broader point is that one must take into account the cognitive consequences of rewards in predicting their long-term effects, especially when dealing with human beings.

Behavior Analysis

29

How are Skinner’s techniques of operant conditioning being used to deal with “problem” behaviors?

Following in the tradition of Skinner, the field of behavior analysis uses principles of operant conditioning to predict behavior (Baer et al., 1968). Recall from earlier in this chapter that, in operant conditioning, discrimination and generalization serve as indicators of whether the subject has acquired concept understanding. From this perspective, one has achieved “understanding” to the degree to which one can predict and influence future occurrences of behavior. Behavioral techniques are frequently used to address a variety of real-world situations and problems, including dealing with phobias, developing classroom curricula (for college courses such as General Psychology; Chase & Houmanfar, 2009), educating children and adults with special needs (for example, behavioral techniques used with children with autism and intellectual impairments), helping businesses and other organizations run more effectively (Dickinson, 2000), and helping parents deal with misbehaving children. When behavior analysis is applied specifically to modify problem behaviors, especially as part of a learning or treatment process, it is referred to as applied behavior analysis (often abbreviated as ABA; Bijou & Baer, 1961).

Consider the implications for behavior analysis of “reality” TV programs in which experts (and TV cameras) go into people’s homes to help them deal with their unruly children or pets. In one show, Supernanny, parents are taught to establish and enforce rules. When giving their child a “time-out,” for example, they are told to make certain that the child spends the entire designated time in the “naughty corner.” To accomplish this, parents have sometimes had to chase the escaping child repeatedly until the child finally gave in. By enforcing the time-out period, even if it took over an hour to do so, parents extinguished the child’s “escape” behavior; coupled with warmth and perhaps something to distract the child from the offending behavior, this made discipline easier.

The first thing one does in behavior analysis is to define some socially significant behaviors that are in need of changing. Target behaviors might include studying for tests (for a fourth-grade student), dressing oneself (for a person with intellectual impairment), nail biting (for a school-age child), or head banging (for a child with autism). Then, a schedule of reinforcement is implemented to increase, decrease, or maintain the targeted behavior (Baer et al., 1968).

Teachers use behavioral techniques as a way of managing their classrooms (Alberto & Troutman, 2005). For instance, experienced teachers know that desirable behavior can be shaped by reinforcing successive approximations: they initially provide praise when a child’s performance in a subject improves by even a marginal amount (“That’s great! Much better than before!”), then insist on further improvement before giving subsequent reinforcement (in this case, social praise), until eventually performance is mostly correct. At other times, teachers remove all possible reinforcement for disruptive behavior, gradually extinguishing it, and may use praise to reinforce more desirable behavior.

Behavioral techniques have been especially useful for working with people with certain developmental disabilities, including intellectual impairment, attention-deficit/hyperactivity disorder (ADHD), and autism. For example, token economies are often used with people with intellectual impairment. In these systems, teachers or therapists deliver a series of tokens, or artificial reinforcers (for example, a “gold” coin), when a target behavior is performed. Tokens can be given for brushing one’s teeth, making eye contact with a therapist, or organizing the contents of one’s desk. The tokens can later be used to buy products or privileges (for instance, 3 gold coins = 1 pad of paper; 6 gold coins = a snack; 20 gold coins = an excursion out of school).

Behavior analysis has been used extensively in treating children with autism. Recall from Chapter 2 that autism is a disorder characterized by deficits in emotional connections and communication. In severe cases, it may involve absent or abnormal speech, low IQ, ritualistic behaviors, aggression, and self-injury. Ivar Lovaas and his colleagues were the first to develop behavioral techniques for dealing with children with severe autism (Lovaas, 1987, 2003; McEachin et al., 1993). For example, Lovaas reported that 47 percent of children with severe autism receiving his behavioral intervention attained first-grade-level academic performance and a normal level of IQ by 7 years of age, in contrast to only 2 percent of children with autism in a control group. Although many studies report success using behavior analysis with children with autism (Sallows & Graupner, 2005), others claim that the effects are negligible or even harmful, making the therapy controversial (Dawson, 2004).

Behavior analysis provides an excellent framework for assessing and treating many “problem” behaviors, ranging from the everyday difficulties of parents dealing with a misbehaving child, to the often self-destructive behaviors of children with severe developmental disabilities.

SECTION REVIEW

Operant conditioning reflects the impact of an action’s consequences on its recurrence.

Work of Thorndike and Skinner

- An operant response is an action that produces an effect.

- Thorndike’s puzzle box experiments led him to postulate the law of effect.

- Skinner defined a reinforcer as a stimulus change that follows an operant response and increases the frequency of that response.

- Operant conditioning can occur without awareness.

Variations in Availability of Reinforcement

- Shaping occurs when successive approximations to the desired response are reinforced.

- Extinction is the decline in response rate that occurs when an operant response is no longer reinforced.

- Partial reinforcement can be contrasted with continuous reinforcement and can occur on various schedules of reinforcement. The type of schedule affects the response rate and resistance to extinction.

Reinforcement vs. Punishment

- Reinforcement increases response rate; punishment decreases response rate.

- Reinforcement can be either positive (for example, praise is given) or negative (for example, pain goes away).

- Punishment can be either positive (for example, a reprimand is given) or negative (for example, computer privileges are taken away).

Operant conditioning entails learning about conditions and consequences.

Discrimination Training

- If reinforcement is available only when a specific stimulus is present, that stimulus becomes a discriminative stimulus. Subjects learn to respond only when it is present.

Discrimination and Generalization as Indices of Concept Understanding

- Learners generalize to stimuli that they perceive as similar to the discriminative stimulus, but can be trained to discriminate.

- Discrimination and generalization of an operantly conditioned behavior can be used to identify concepts a subject has learned.

Overjustification

- In humans, rewards can have a positive or negative effect, depending on the conditions in which they are used and the meanings that they engender in those who receive them.

- In the overjustification effect, previously reinforced behavior declines because the reward presumably provides an unneeded extra justification for engaging in the behavior.

Behavior analysis uses Skinner’s principles of operant conditioning.

- Teachers use behavioral techniques as a way of managing their classrooms.

- Token economies involve delivering a series of tokens, or artificial reinforcers, for performing target behaviors. They are often used with people with intellectual impairment.

- Work by Lovaas and his colleagues demonstrated the usefulness of behavior analysis for children with severe forms of autism.