7.6 Hearing

Among mammals, those with the keenest hearing are the bats. In complete darkness, and even if blinded, bats can flit around obstacles such as tree branches and capture insects that themselves are flying in erratic patterns to escape. Bats navigate and hunt by sonar, that is, by reflected sound waves. They send out high-pitched chirps, above the frequency range that humans can hear, and analyze the echoes in a way that allows them to hear such characteristics as the size, shape, position, direction of movement, and texture of a target insect (Feng & Ratnam, 2000).

Hearing may not be as well developed or as essential for humans as it is for bats, but it is still enormously effective and useful. It allows us to detect and respond to potentially life-threatening or life-enhancing events that occur in the dark, or behind our backs, or anywhere out of view. We use it to identify animals and such natural events as upcoming storms. And, perhaps most important, it is the primary sensory modality of human language. We learn from each other through our ears.

Sound and Its Transduction by the Ear

If a tree falls in the forest and no one is there to hear it, does it make a sound? This old riddle plays on the fact that the term sound refers both to a type of physical stimulus and to the sensation produced by that stimulus.

Sound as a Physical Stimulus

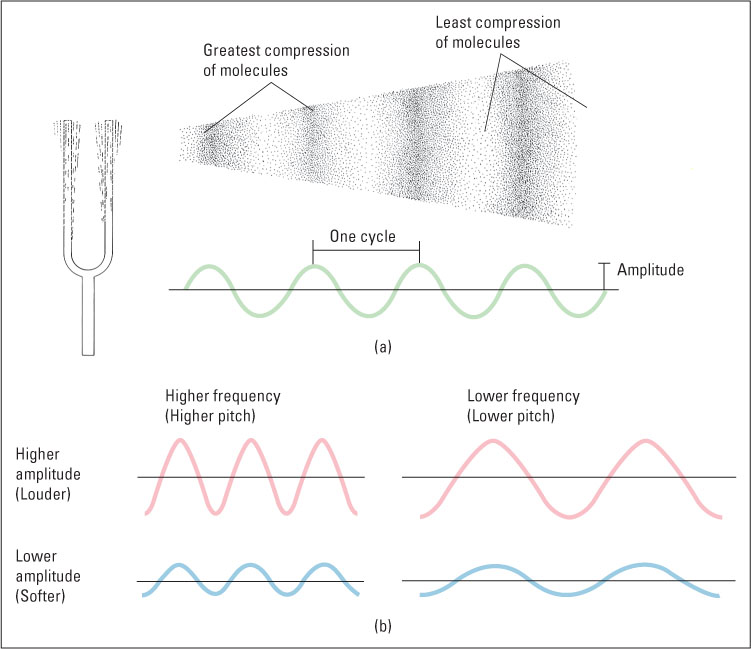

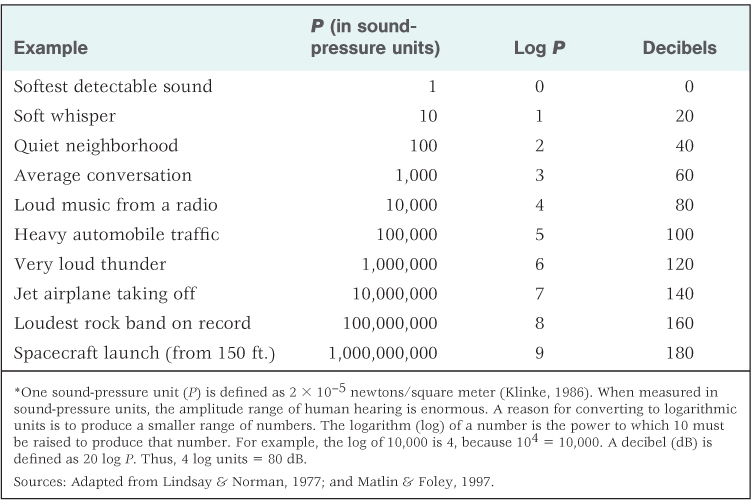

As a physical stimulus, sound is the vibration of air or some other medium produced by an object such as a tuning fork, one’s vocal cords, or a falling tree. The vibration moves outward from the sound source in a manner that can be described as a wave (see Figure 7.11). The height of the wave indicates the total pressure exerted by the molecules of air (or another medium) as they move back and forth. This is referred to as the sound’s amplitude, or intensity, and corresponds to what we hear as the sound’s loudness. Sound amplitude is usually measured in logarithmic units of pressure called decibels (abbreviated dB). (See Table 7.3, which further defines decibels and contains the decibel ratings for various common sounds.)
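The logarithmic relationship between pressure and decibels can be made concrete with a short sketch. It uses the standard acoustics definition of sound pressure level. The reference pressure of 20 micropascals is the conventional value and is an assumption here, since the text does not state one. The function names are illustrative only.

```python
import math

# Conventional reference pressure for sound in air (assumption: not given in the text).
REFERENCE_PRESSURE_PA = 20e-6

def sound_pressure_level_db(pressure_pa):
    """Convert a sound-pressure amplitude in pascals to decibels."""
    return 20 * math.log10(pressure_pa / REFERENCE_PRESSURE_PA)

def pressure_from_db(level_db):
    """Inverse conversion: decibels back to pascals."""
    return REFERENCE_PRESSURE_PA * 10 ** (level_db / 20)

# Each tenfold increase in pressure adds 20 dB.
for pressure in (20e-6, 200e-6, 2e-3, 2.0):
    print(f"{pressure:g} Pa is about {sound_pressure_level_db(pressure):.0f} dB")
```

Because the scale is logarithmic, a sound rated 100 dB exerts 100,000 times the pressure of a sound rated 0 dB, not 100 times.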

Table 7.3 Sound-pressure amplitudes of various sounds, and conversion to decibels

In addition to varying in amplitude, sound waves vary in frequency, which we hear as the sound’s pitch. The frequency of a sound is the rate at which the molecules of air or another medium move back and forth. Frequency is measured in hertz (abbreviated Hz), which is the number of complete waves (or cycles) per second generated by the sound source. Sounds that are audible to humans have frequencies ranging from about 20 to 20,000 Hz. To give you an idea of the relationship between frequency and pitch, the dominant (most audible) frequency of the lowest note on a piano is about 27 Hz, that of middle C is about 262 Hz, and that of the highest piano note is about 4,186 Hz. The simplest kind of sound is a pure tone, which is a constant-frequency sound wave that can be described mathematically as a sine wave (depicted in Figure 7.11). Pure tones, which are useful in auditory experiments, can be produced in the laboratory, but they rarely if ever occur in other contexts. Natural sources of sound, including even musical instruments and tuning forks, vibrate at several frequencies at once and thus produce more complex waves than that shown in Figure 7.11. The pitch that we attribute to a natural sound depends on its dominant (largest-amplitude) frequency.
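The piano frequencies quoted above can be reproduced with a short calculation, and a pure tone can be written directly as a sine function. The sketch below assumes standard equal-temperament tuning with the A above middle C set to 440 Hz and an 88-key keyboard numbered from 1. Neither assumption is stated in the text, and the function names are illustrative.

```python
import math

A4_KEY = 49      # position of the A above middle C on an 88-key piano
A4_HZ = 440.0    # assumed tuning reference (not stated in the text)

def piano_key_frequency(key_number):
    """Dominant frequency of a piano key under equal temperament."""
    return A4_HZ * 2 ** ((key_number - A4_KEY) / 12)

def pure_tone_sample(freq_hz, time_s, amplitude=1.0):
    """Pressure of a pure (sine-wave) tone at a given moment in time."""
    return amplitude * math.sin(2 * math.pi * freq_hz * time_s)

for name, key in [("lowest note", 1), ("middle C", 40), ("highest note", 88)]:
    print(f"{name}: {piano_key_frequency(key):.1f} Hz")
```

Each step of 12 keys (one octave) doubles the frequency, which is why the 88 keys span from about 27 Hz to about 4,186 Hz.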

How the Ear Works

23

What are the functions of the outer ear, middle ear, and inner ear?

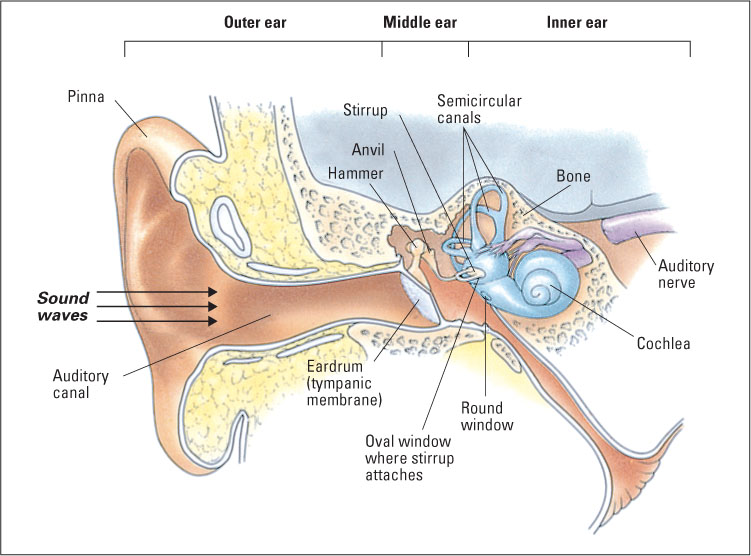

Hearing originated, in the course of evolution, from the sense of touch. Touch is sensitivity to pressure on the skin, and hearing is sensitivity to pressure on a special sensory tissue in the ear. In some animals, such as moths, sound is sensed through modified touch receptors located on flexible patches of skin that vibrate in response to sound waves. In humans and other mammals, the special patches of skin for hearing have migrated to a location inside the head, and special organs, the ears, have developed to magnify the pressure exerted by sound waves as they are transported inward. A diagram of the human ear is shown in Figure 7.12. To review its structures and their functions, we will begin from the outside and work inward.

The outer ear consists of the pinna, which is the flap of skin and cartilage forming the visible portion of the ear, and the auditory canal, which is the opening into the head that ends at the eardrum. The whole outer ear can be thought of as a funnel for receiving sound waves and transporting them inward. The vibration of air outside the head (the physical sound) causes air in the auditory canal to vibrate, which, in turn, causes the eardrum to vibrate.

The middle ear is an air-filled cavity, separated from the outer ear by the eardrum (technically called the tympanic membrane). The middle ear’s main structures are three tiny bones collectively called ossicles (and individually called the hammer, anvil, and stirrup, because of their respective shapes), which are linked to the eardrum at one end and to another membrane called the oval window at the other end. When sound causes the eardrum to vibrate, the ossicles vibrate and push against the oval window. Because the oval window has only about one-thirtieth the area of the tympanic membrane, the pressure (force per unit area) that is funneled to it by the ossicles is about 30 times greater than the pressure on the eardrum. Thus, the main function of the middle ear is to increase the amount of pressure that sound waves exert upon the inner ear so that transduction can occur.
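The pressure gain described above follows from the definition of pressure as force divided by area. The sketch below is a simplification: it assumes the ossicles pass the force from the eardrum to the oval window unchanged, and it uses arbitrary units in which the oval window has area 1 and the eardrum has area 30. The decibel conversion uses the standard formula for pressure ratios.

```python
import math

def pressure(force, area):
    """Pressure is force per unit area."""
    return force / area

EARDRUM_AREA = 30.0      # arbitrary units, chosen to match the 30:1 ratio in the text
OVAL_WINDOW_AREA = 1.0
force = 1.0              # same force at both membranes (simplifying assumption)

gain = pressure(force, OVAL_WINDOW_AREA) / pressure(force, EARDRUM_AREA)
gain_db = 20 * math.log10(gain)

print(f"Pressure gain: {gain:.0f}x, or about {gain_db:.1f} dB")
```

Concentrating the same force onto one-thirtieth of the area multiplies the pressure by 30, which corresponds to a gain of roughly 30 dB.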

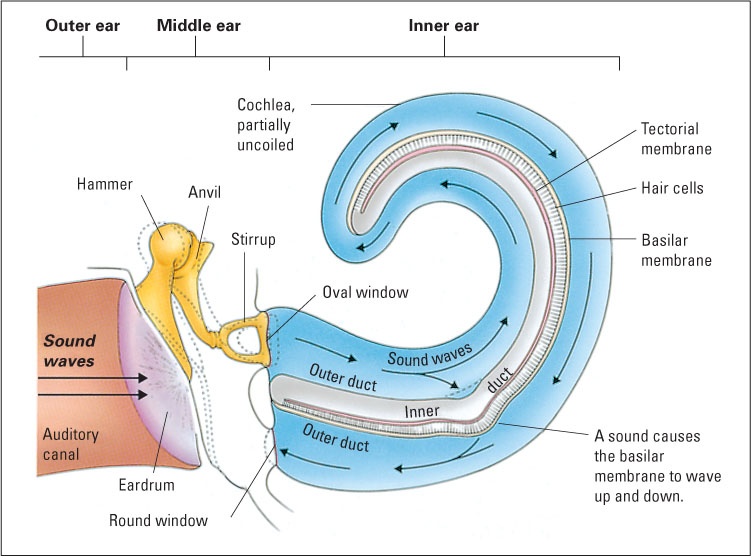

The oval window separates the middle ear from the inner ear, which consists primarily of the cochlea, a coiled structure where transduction takes place. As depicted in the partially uncoiled view in Figure 7.13, the cochlea contains a fluid-filled outer duct, which begins at the oval window, runs to the tip of the cochlea, and then runs back again to end at another membrane, the round window. Sandwiched between the outgoing and incoming portions of the outer duct is another fluid-filled tube, the inner duct. Forming the floor of the inner duct is the basilar membrane, on which are located the receptor cells for hearing, called hair cells. There are four rows of hair cells (three outer rows and one inner row), each row running the length of the basilar membrane. Tiny hairs (called cilia) protrude from each hair cell into the inner duct and abut against another membrane, called the tectorial membrane. At the other end from its hairs, each hair cell forms synapses with several auditory neurons, whose axons form the auditory nerve (eighth cranial nerve), which runs to the brain.

24

How does transduction occur in the inner ear?

The process of transduction in the cochlea can be summarized as follows: The sound-induced vibration of the ossicles against the oval window initiates vibration in the fluid in the outer duct of the cochlea, which produces an up-and-down waving motion of the basilar membrane, which is very flexible. The tectorial membrane, which runs parallel to the basilar membrane, is less flexible and does not move when the basilar membrane moves. The hairs of the hair cells are sandwiched between the basilar membrane and the tectorial membrane, so they bend each time the basilar membrane moves toward the tectorial membrane. This bending causes tiny channels to open up in the hair cell’s membrane, which leads to a change in the electrical charge across the membrane (the receptor potential) (Brown & Santos-Sacchi, 2012; Corey, 2007). This in turn causes each hair cell to release neurotransmitter molecules at its synapses upon auditory neurons, thereby increasing the rate of action potentials in those neurons (Hudspeth, 2000b).

Deafness and Hearing Aids

25

How do two kinds of deafness differ in their physiological bases and in possible treatment?

Knowing how the ear works helps us understand the physiological bases of deafness. There are two general categories of deafness. The first, called conduction deafness, occurs when the ossicles of the middle ear become rigid and cannot carry sounds inward from the tympanic membrane to the cochlea. People with conduction deafness can hear vibrations that reach the cochlea by routes other than the middle ear. A conventional hearing aid is helpful for such people because it magnifies the sound pressure sufficiently for vibrations to be conducted by other bones of the face into the cochlea.

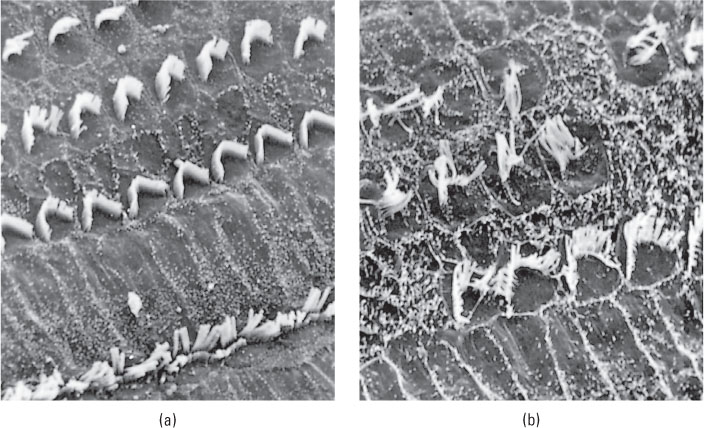

The other form of deafness is sensorineural deafness, which results from damage to the hair cells of the cochlea or damage to the auditory neurons. Damage to hair cells is particularly likely to occur among people who are regularly exposed to loud sounds (see Figure 7.14). Indeed, some experts on human hearing are concerned that the regular use of portable music players, which can play music at sound pressure levels of more than 100 decibels through their stock earbuds, may cause partial deafness in many young people today as they grow older (Nature Neuroscience editorial, 2007). Congenital deafness (deafness present at birth) may involve damage to either the hair cells or the auditory neurons.

People with complete sensorineural deafness are not helped by a conventional hearing aid, but can in many cases regain hearing with a surgically implanted hearing aid called a cochlear implant (Niparko et al., 2000). This device performs the transduction task normally done by the ear’s hair cells (though not nearly as well). It transforms sounds into electrical impulses and sends the impulses through thin wires permanently implanted into the cochlea, where they stimulate the terminals of auditory neurons directly. A cochlear implant may enable adults who became deaf after learning language to regain much of their ability to understand speech (Gifford et al., 2008). Some children who were born deaf are able to acquire some aspects of vocal language at a nearly normal rate through the combination of early implantation, improvements in implant technology, and aggressive post-implant speech therapy. However, outcomes remain highly variable, and most children receiving these interventions still do not attain age-appropriate performance in speaking or listening (Ertmer, 2007; Svirsky et al., 2000). It is important to note that cochlear implants are effective when deafness has resulted from the destruction of hair cells, but they do not help individuals whose auditory nerve has been destroyed.

Pitch Perception

The aspect of hearing that allows us to tell how high or low a given tone is, and to recognize a melody, is pitch perception. The first step in perceiving pitch is that receptor cells on the basilar membrane respond differently to different sound frequencies. How does that occur? The first real breakthrough in answering that question came in the 1920s, with the work of Georg von Békésy, which eventually earned him a Nobel Prize.

The Traveling Wave as a Basis for Frequency Coding

26

How does the basilar membrane of the inner ear operate to ensure that different neurons are maximally stimulated by sounds of different frequencies?

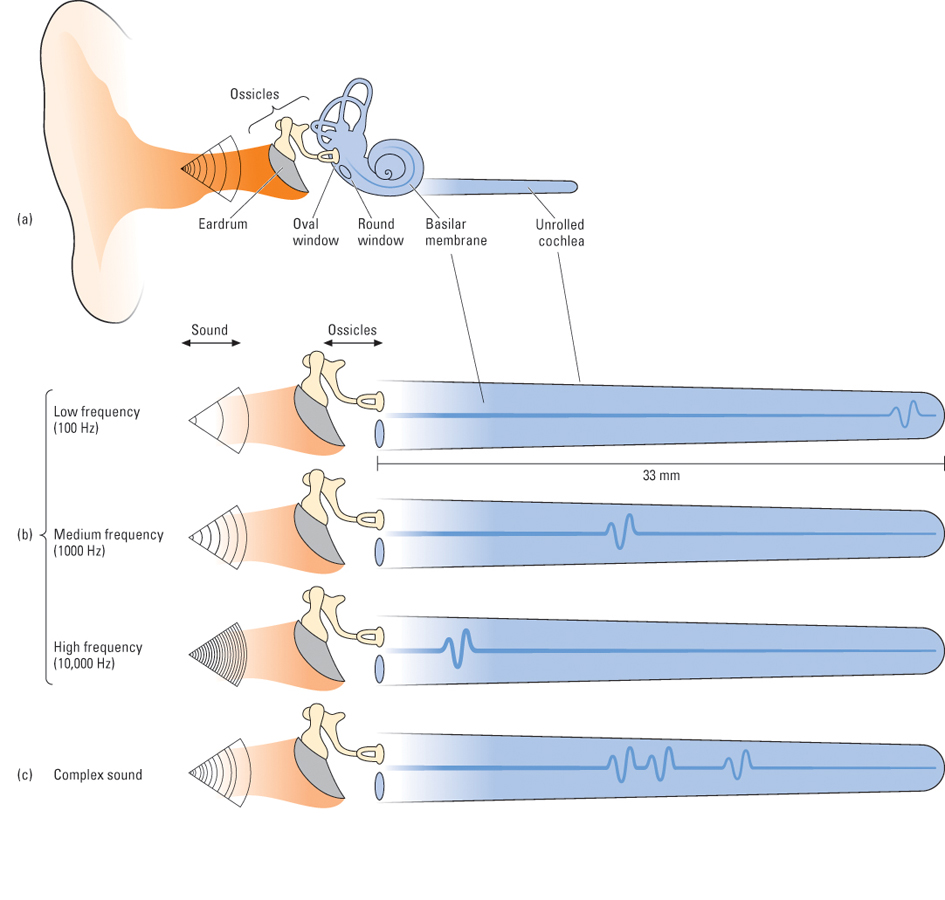

In order to study frequency coding, von Békésy developed a way to observe directly the action of the basilar membrane. He discovered that sound waves entering the cochlea set up traveling waves on the basilar membrane, which move from the proximal end (closest to the oval window) toward the distal end (farthest away from the oval window), like a bedsheet when someone shakes it at one end. As each wave moves, it increases in amplitude up to a certain maximum and then rapidly dissipates. Of most importance, von Békésy found that the position on the membrane at which the waves reach their peak amplitude depends on the frequency of the tone. High frequencies produce waves that travel only a short distance, peaking near the proximal end, not far from the oval window. Low frequencies produce waves that travel farther, peaking near the distal end, nearer to the round window. For an illustration of the effects that different tones and complex sounds have on the basilar membrane, see Figure 7.15.
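The relationship between frequency and peak position can be sketched numerically. The example below uses an empirical fit often called the Greenwood function, which relates frequency to place along the cochlea. This formula and its constants are the values commonly quoted for the human cochlea. They are an outside assumption, not taken from this chapter, and the function name is illustrative.

```python
import math

# Commonly quoted human constants for the Greenwood function (outside assumption).
A, SLOPE, K = 165.4, 2.1, 0.88

def peak_distance_from_oval_window(freq_hz):
    """Approximate place of the traveling wave's peak, as a fraction of the
    membrane's length: 0.0 = proximal end (oval window), 1.0 = distal end."""
    fraction_from_distal_end = math.log10(freq_hz / A + K) / SLOPE
    return 1.0 - fraction_from_distal_end

for freq in (8000, 1000, 200):
    place = peak_distance_from_oval_window(freq)
    print(f"{freq} Hz peaks about {place:.0%} of the way along the membrane")
```

Under these assumptions, higher frequencies peak closer to the oval window and lower frequencies peak farther along, which matches the pattern von Békésy observed.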

From this observation, von Békésy hypothesized that (a) rapid firing in neurons that come from the proximal end of the membrane, accompanied by little or no firing in neurons coming from more distal parts, is interpreted by the brain as a high-pitched sound; and (b) rapid firing in neurons coming from a more distal portion of the membrane is interpreted as a lower-pitched sound.

Subsequent research has confirmed the general validity of von Békésy’s hypothesis and has shown that the waves on the intact, living basilar membrane are in fact much more sharply defined than those that von Békésy had observed. There is now good evidence that the primary receptor cells for hearing are the inner row of hair cells and that the outer three rows serve mostly a different function. They have the capacity to stiffen when activated, and they do so in a manner that amplifies and sharpens the traveling wave (Géléoc & Holt, 2003).

Two Sensory Consequences of the Traveling-Wave Mechanism

27

How does the traveling-wave theory explain (a) an asymmetry in auditory masking and (b) the pattern of hearing loss that occurs as we get older?

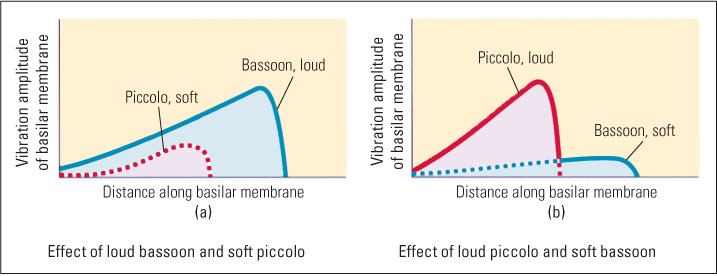

The manner by which the basilar membrane responds to differing frequencies helps us make sense of a number of auditory phenomena. One such phenomenon is asymmetry in auditory masking, which is especially noticeable in music production. Auditory masking refers to the ability of one sound to mask (prevent the hearing of) another sound. Auditory masking is asymmetrical in that low-frequency sounds mask high-frequency sounds much more effectively than the reverse (Scharf, 1964). For example, a bassoon can easily drown out a piccolo, but a piccolo cannot easily drown out a bassoon. Figure 7.16 illustrates the waves the two instruments produce on the basilar membrane. The wave produced by a low-frequency bassoon note encompasses the entire portion of the basilar membrane that is encompassed by the piccolo note (and more). Thus, if the bassoon note is of sufficient amplitude, it can interfere with the effect of the piccolo note; but the piccolo note, even at great amplitude, cannot interfere with the effect that the bassoon note has on the more distal part of the membrane because the wave produced by the piccolo note never travels that far down the membrane.

Another effect of the traveling-wave mechanism concerns the pattern of hearing loss that occurs as we get older. We lose our sensitivity to high frequencies to a much greater degree than to low frequencies. Thus, young children can hear frequencies as high as 30,000 Hz, and young adults can hear frequencies as high as 20,000 Hz, but a typical 60-year-old cannot hear frequencies above about 15,000 Hz. This decline is greatest for people who live or work in noisy environments and is caused by the wearing out of hair cells with repeated use (Kryter, 1985). But why should cells responsible for coding high frequencies wear out faster than those for coding low frequencies? The most likely answer is that the cells coding high frequencies are acted upon by all sounds (as shown in Figure 7.16), while those coding low frequencies are acted upon only by low-frequency sounds.
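Both consequences follow from one geometric fact: every wave starts at the proximal end and travels only as far as its own peak. The toy model below captures just that fact. The logarithmic place map, the example frequencies for the bassoon and piccolo, and the function names are all illustrative assumptions, and the model ignores amplitude entirely.

```python
import math

LOW_HZ, HIGH_HZ = 20.0, 20000.0  # audible range quoted earlier in the chapter

def peak_position(freq_hz):
    """Toy place map: 0.0 = proximal end (oval window), 1.0 = distal end.
    The log spacing is an illustrative assumption, not a measured map."""
    return 1.0 - math.log(freq_hz / LOW_HZ) / math.log(HIGH_HZ / LOW_HZ)

def can_mask(masker_hz, target_hz):
    """A masker can interfere only if its wave passes through the place
    where the target's wave peaks."""
    return peak_position(masker_hz) >= peak_position(target_hz)

def exposure_count(place, sound_frequencies_hz):
    """Number of sounds whose traveling wave passes over a given place."""
    return sum(1 for f in sound_frequencies_hz if peak_position(f) >= place)

BASSOON_HZ, PICCOLO_HZ = 100.0, 3000.0  # hypothetical note frequencies
print(can_mask(BASSOON_HZ, PICCOLO_HZ))  # True: the bassoon wave covers the piccolo's place
print(can_mask(PICCOLO_HZ, BASSOON_HZ))  # False: the piccolo wave stops short

sounds = [100.0, 500.0, 2000.0, 8000.0]
print(exposure_count(peak_position(8000.0), sounds))  # 4: high-frequency cells see every sound
print(exposure_count(peak_position(100.0), sounds))   # 1: low-frequency cells see only low sounds
```

In this sketch the cells near the proximal end are stimulated by every sound in the list, which is the proposed reason they wear out first.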

Another Code for Frequency

28

How does the timing of action potentials code sound frequency? How do cochlear implants produce perception of pitch?

Although the traveling-wave theory of frequency coding has been well validated, it is not the whole story. For frequencies below about 4,000 Hz (which include most of the frequencies in human speech), perceived pitch depends not just on which part of the basilar membrane is maximally active but also on the timing of that activity (Moore, 1997). The electrical activity triggered in sets of auditory neurons tends to be locked in phase with sound waves, such that a separate burst of action potentials occurs each time a sound wave peaks. The frequency at which such bursts occur contributes to the perception of pitch.
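The timing code can be illustrated with an idealized sketch in which one burst of action potentials occurs at every peak of a pure tone. Real neurons are noisier and do not fire on every cycle, so this is a simplification, and the function names are illustrative. The point is only that the interval between bursts carries the frequency.

```python
def wave_peak_times(freq_hz, duration_s):
    """Times (in seconds) at which a sine wave of the given frequency peaks.
    A sine wave first peaks one quarter of the way through each cycle."""
    period = 1.0 / freq_hz
    times = []
    cycle = 0
    while (cycle + 0.25) * period < duration_s:
        times.append((cycle + 0.25) * period)
        cycle += 1
    return times

def frequency_from_bursts(burst_times):
    """Estimate sound frequency from the average interval between bursts."""
    intervals = [later - earlier for earlier, later in zip(burst_times, burst_times[1:])]
    return 1.0 / (sum(intervals) / len(intervals))

bursts = wave_peak_times(440.0, 0.05)   # idealized: one burst per wave peak
print(f"Estimated frequency: {frequency_from_bursts(bursts):.1f} Hz")
```

A downstream neuron that measured these intervals could recover the tone's frequency without knowing which part of the basilar membrane was active.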

Consistent with what is known about normal auditory coding, modern cochlear implants use both place and timing to produce pitch perception (Dorman & Wilson, 2004). These devices break a sound signal into several (typically six) different frequency ranges and send electrical pulses from each frequency range through a thin wire to a different portion of the basilar membrane. Pitch perception is best when the electrical signal sent to a given locus of the membrane is pulsed at a frequency similar to that of the sound wave that would normally act at that location.
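The combination of place and timing can be sketched as a simple channel assignment. The example below is not a description of any actual device. The six channels follow the text, but the 250 to 8,000 Hz range, the logarithmic spacing of the bands, and all function names are assumptions made for illustration.

```python
import math

def band_edges(low_hz, high_hz, n_channels):
    """Boundaries of n logarithmically spaced frequency bands."""
    ratio = high_hz / low_hz
    return [low_hz * ratio ** (i / n_channels) for i in range(n_channels + 1)]

def channel_for_frequency(freq_hz, low_hz=250.0, high_hz=8000.0, n_channels=6):
    """Place code: channel 0 is the lowest band (most distal electrode),
    channel n-1 the highest band (most proximal electrode)."""
    if freq_hz <= low_hz:
        return 0
    if freq_hz >= high_hz:
        return n_channels - 1
    position = math.log(freq_hz / low_hz) / math.log(high_hz / low_hz)
    return min(n_channels - 1, int(position * n_channels))

def stimulation_plan(freq_hz):
    """Timing code: pulse the chosen electrode at the sound's own frequency."""
    return {"channel": channel_for_frequency(freq_hz), "pulse_rate_hz": freq_hz}

print([round(edge) for edge in band_edges(250.0, 8000.0, 6)])
print(stimulation_plan(300.0))
print(stimulation_plan(7000.0))
```

The channel number stands in for the place code and the pulse rate for the timing code, which mirrors the two cues the text describes.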

Further Pitch Processing in the Brain

29

How is tone frequency represented in the primary auditory cortex? What evidence suggests a close relationship between musical pitch perception and visual space perception?

Auditory sensory neurons send their output to nuclei in the brainstem, which in turn send axons upward, ultimately to the primary auditory area of the cerebral cortex, located in each temporal lobe (refer back to Figure 7.1, on p. 247). Neurons in the primary auditory cortex are tonotopically organized. That is, each neuron there is maximally responsive to sounds of a particular frequency, and the neurons are systematically arranged such that high-frequency tones activate neurons at one end of this cortical area and low-frequency tones activate neurons at the other end. Ultimately, the pitch or set of pitches we hear depends largely on which neurons in the auditory cortex are most active.

As is true of other sensory areas in the cerebral cortex, the response characteristics of neurons in the primary auditory cortex are very much influenced by experience. When experimental animals are trained to use a particular tone frequency as a cue guiding their behavior, the number of auditory cortical neurons that respond to that frequency greatly increases (Bakin et al., 1996). Heredity determines the general form of the tonotopic map, but experience determines the specific amount of cortex that is devoted to any particular range of frequencies. A great deal of research, with humans as well as with laboratory animals, shows that the brain’s response to sound frequencies, and to other aspects of sound as well, is very much affected by previous auditory experience. For example, musicians’ brains respond more strongly to the sounds of the instruments they play than to the sounds of other instruments (Kraus & Banai, 2007).

Our capacity to distinguish pitch depends not just upon the primary auditory cortex, but also upon activity in an area of the parietal lobe of the cortex called the intra-parietal sulcus, which receives input from the primary auditory cortex. This part of the brain is involved in both music perception and visual space perception. In one research study, people who described themselves as “tone deaf” and performed poorly on a test of ability to distinguish among different musical notes also performed poorly on a visual-spatial test that required them to mentally rotate pictured objects in order to match them to pictures of the same objects from other viewpoints (Douglas & Bilkey, 2007). Perhaps it is no coincidence that we (and also people who speak other languages) describe pitch in spatial terms—“high” and “low.” Our brain may, in some way, interpret a high note as high and a low note as low, using part of the same neural system as is used to perceive three-dimensional space.

Making Sense of Sounds

Think of the subtlety and complexity of our auditory perception. With no cues but sound, we can locate a sound source within about 5 to 10 degrees of its true direction (Hudspeth, 2000a). At a party we can distinguish and listen to one person’s voice in a noisy environment that includes not only many other voices but also a band playing in the background. To comprehend speech, we hear the tiny differences between plot and blot, while ignoring the much larger differences between two different voices that speak either of those words. All sounds set up patterns of waves on our basilar membranes, and from those seemingly chaotic patterns our nervous system extracts all the information needed for auditory perception.

Locating Sounds

The ability to detect the direction of a sound source contributes greatly to the usefulness of hearing. When startled by an unexpected rustling, we reflexively turn toward it to see what might be causing the disturbance. Even newborn infants do this (Muir & Field, 1979), indicating that the ability to localize a sound does not require learning, although this ability improves markedly by the end of the first year (Johnson et al., 2005). Such localization is also a key component of our ability to keep one sound distinct from others in a noisy environment. People can attend to one voice and ignore another much more easily if the two voices come from different locations in the room than if they come from the same location (Feng & Ratnam, 2000).

30

How does the difference between the two ears in their distance from a sound source contribute to our ability to locate that source?

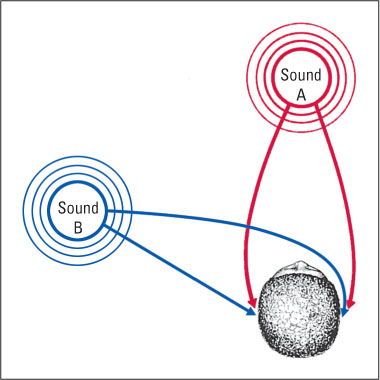

Sound localization depends at least partly, and maybe mostly, on the time at which each sound wave reaches one ear compared to the other. A wave of sound coming from straight ahead reaches the two ears simultaneously, but a wave from the right or the left reaches one ear slightly before the other (see Figure 7.17). A sound wave that is just slightly to the left of straight ahead reaches the left ear a few microseconds (millionths of a second) before it reaches the right ear, and a sound wave that is 90 degrees to the left reaches the left ear about 700 microseconds before it reaches the right ear. Many auditory neurons in the brainstem receive input from both ears. Some of these neurons respond most to waves that reach both ears at once; others respond most to waves that reach one ear some microseconds—ranging from just a few on up to about 700—before, or after, reaching the other ear. These neurons, presumably, are part of the brain’s mechanism for perceiving the direction from which a sound is coming (Recanzone & Sutter, 2008; Thompson et al., 2006).
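The arrival-time figures above can be approximated with a simple geometric sketch. It assumes that the time difference grows with the sine of the source's angle from straight ahead, scaled so that a source at 90 degrees gives the 700 microseconds quoted in the text. The sine rule is a common simplification that ignores the shape of the head, and the function names are illustrative.

```python
import math

MAX_ITD_US = 700.0  # time difference for a source at 90 degrees, from the text

def interaural_time_difference_us(azimuth_deg):
    """Approximate arrival-time difference between the ears, in microseconds.
    0 degrees is straight ahead; positive angles are toward the leading ear."""
    return MAX_ITD_US * math.sin(math.radians(azimuth_deg))

def azimuth_from_itd_deg(itd_us):
    """Inverse mapping: estimate the source angle from a time difference."""
    ratio = max(-1.0, min(1.0, itd_us / MAX_ITD_US))
    return math.degrees(math.asin(ratio))

for angle in (0, 1, 30, 90):
    print(f"{angle} degrees: about {interaural_time_difference_us(angle):.0f} microseconds")
```

Under this approximation a source just 1 degree off center produces a difference of only about 12 microseconds, which shows how fine the timing comparison must be.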

Analyzing Patterns of Auditory Input

In the real world (outside of the laboratory), sounds rarely come in the form of pure tones. The sounds we hear, and from which we extract meaning, consist of highly complex patterns of waveforms. How is it that you identify the sweet word psychology regardless of whether it is spoken in a high-pitched or low-pitched voice, or spoken softly or loudly? You identify the word not from the absolute amplitude or frequency of the sound waves, but from certain patterns of change in these that occur over time as the word is spoken.

Beyond the primary auditory area are cortical areas for analyzing such patterns (Lomber & Malhotra, 2008; Poremba et al., 2003). For example, some neurons in areas near the primary auditory area respond only to certain combinations of frequencies, others only to rising or falling pitches, others only to brief clicks or bursts of sound, and still others only to sound sources that are moving in a particular direction (Baumgart et al., 1999; Phillips, 1989). In macaque monkeys, some cortical neurons respond selectively to particular macaque calls (Rauschecker et al., 1995). In the end, activity in some combination of neurons in your cerebral cortex must provide the basis for each of your auditory experiences, but researchers are only beginning to learn how that is accomplished.

Phonemic Restoration: An Auditory Illusion

In certain situations our auditory system provides us with the perception of sounds that are not really present as physical stimuli. A well-documented example is the sensory illusion of phonemic restoration. Phonemes are the individual vowel and consonant sounds that make up words, and phonemic restoration is an illusion in which people hear phonemes that have been deleted from words or sentences as if they were still there. The perceptual experience is that of really hearing the missing sound, not that of inferring what sound must be there.

31

Under what conditions does our auditory system fill in a missing sound? What might be the value of such an illusion?

Richard Warren (1970) first demonstrated this illusion in an experiment in which he removed an s sound and spliced in a coughing sound of equal duration in the following tape-recorded sentence at the place marked by an asterisk: The state governors met with their respective legi*latures convening in the capital city. People listening to the doctored recording could hear the cough, but it did not seem to coincide with any specific portion of the sentence or block out any sound in the sentence. Even when they listened repeatedly, with instructions to determine what sound was missing, subjects were unable to detect that any sound was missing. After they were told that the first “s” sound in “legislatures” was missing, they still claimed to hear that sound each time they listened to the recording. In other experiments, thousands of practice trials failed to improve people’s abilities to judge which phoneme was missing (Samuel, 1991). Not surprisingly, phonemic restoration has been found to be much more reliable for words that are very much expected to occur in the sentence than for words that are less expected (Sivonen et al., 2006).

Which sound is heard in phonemic restoration depends on the surrounding phonemes and the meaningful words and phrases they produce. The restored sound is always one that turns a partial word into a whole word that is consistent in meaning with the rest of the sentence. Most remarkably, even words that occur after the missing phoneme can influence which phoneme is heard. For example, people heard the stimulus sound *eel (again, the asterisk represents a cough-like sound) as peel, heel, or wheel, depending on whether it occurred in the phrase The *eel was on the orange, The *eel was on the shoe, or The *eel was on the axle (Warren, 1984). The illusory phoneme was heard as occurring at its proper place, in the second word of the sentence, even though it depended on the words that followed it.

One way to make sense of this phenomenon is to assume that much of our perceptual experience of hearing derives from a brief auditory sensory memory, which lasts for a matter of seconds and is modifiable. The later words generate a momentary false memory of having heard, earlier, a phoneme that wasn’t actually present, and that memory is indistinguishable in type from memories of phonemes that actually did occur. Illusory restoration has also been demonstrated in music perception. People hear a missing note in a familiar tune as if it were present (DeWitt & Samuel, 1990).

A limiting factor in these illusions is that the gap in the sentence or tune must be filled with noise; it can’t be a silent gap. In everyday life the sounds we listen to are often masked by bits of noise, never by bits of silence, so perhaps illusory sound restorations are an evolutionary adaptation by which our auditory system allows us to hear meaningful sound sequences in a relatively uninterrupted stream. When a burst of noise masks a phoneme or a note, our auditory system automatically, after the fact, in auditory memory, replaces that burst with the auditory experience that, according to our previous experience, belongs there. Auditory restoration nicely exemplifies the general principle that our perceptual systems often modify sensory input in ways that help to make sense of that input. We will present more examples of this principle in Chapter 8, which deals with vision.

SECTION REVIEW

Hearing allows us to glean information from patterns of vibrations carried by air.

Basic Facts of Hearing

- Physically, sound is the vibration of air or another medium caused by a vibrating object. A sound wave’s amplitude is related to its perceived loudness, and its frequency is related to its perceived pitch.

- The outer ear funnels sound inward, the middle ear amplifies it, and the inner ear transduces and codes it.

- Conduction deafness is due to middle ear rigidity; sensorineural deafness is due to inner ear or auditory nerve damage.

Pitch Perception

- Sounds set up traveling waves on the basilar membrane, which peak at different positions depending on frequency. We decode frequency as pitch.

- The traveling-wave theory helps to explain the asymmetry of auditory masking and the typical pattern of age-related hearing loss.

- For frequencies below 4,000 Hz, the timing of action potentials also codes sound frequency.

- The primary auditory cortex is tonotopically organized. The pitch we hear depends on which cortical neurons become most active.

Making Sense of Sounds

- Brain neurons that compare the arrival time of sound waves at our two ears enable us to locate a sound’s source.

- Most sounds are complex waveforms requiring analysis in cortical areas beyond the primary auditory area.

- The phonemic restoration effect illustrates the idea that context and meaning influence sensory experience.