America’s History: Printed Page 985

America: A Concise History: Printed Page 896

America’s History: Value Edition: Printed Page 875

Morning in America

During his first run for governor of California in 1966, Reagan held a revelatory conversation with a campaign consultant. “Politics is just like the movies,” Reagan told him. “You have a hell of an opening, coast for a while, and then have a hell of a close.” Reagan indeed had a “hell of an opening”: in 1981 he staged one of the most lavish and expensive presidential inaugurations in American history (and another in 1985), showing that he was unafraid to celebrate luxury and opulence even with millions of Americans unemployed.

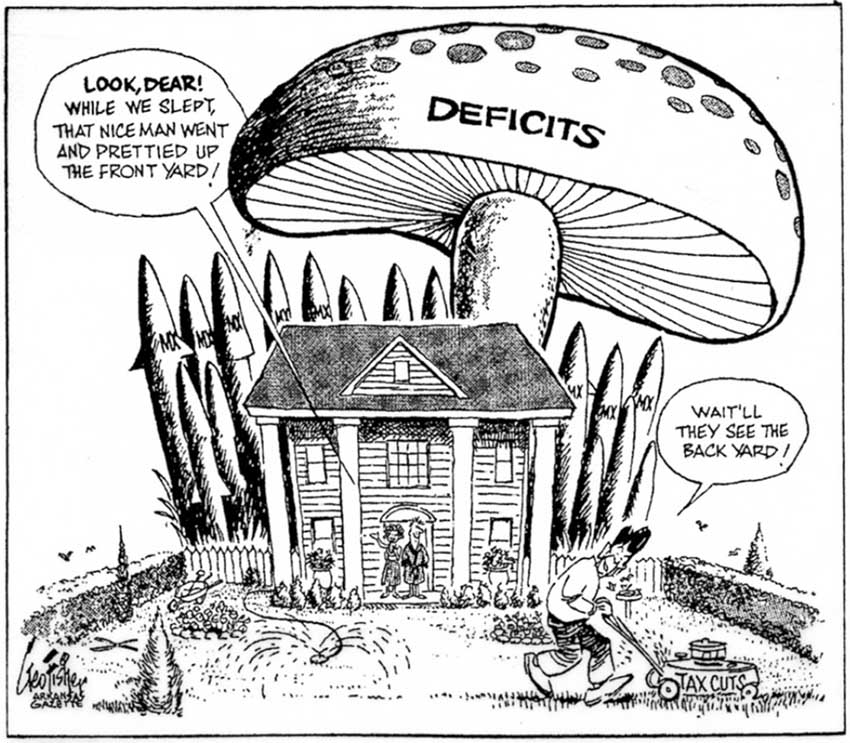

Following his spectacular inauguration, Reagan quickly won passage of his tax reduction bill and launched his plan to bolster the Pentagon. But then a long “coasting” period descended on his presidency, during which he retreated on tax cuts and navigated a major foreign policy scandal. Finally, toward the end of his two-term presidency, Reagan found his “hell of a close,” leaving office as major reforms — which he encouraged from afar — had begun to tear apart the Soviet Union and bring an end to the Cold War. Through all the ups and downs, Reagan remained a master of the politics of symbolism, championing a resurgent American economy and reassuring the country that the pursuit of wealth was noble and that he had the reins of the nation firmly in hand.

Reagan’s tax cuts had barely taken effect when he was forced to reverse course. High interest rates set by the Federal Reserve Board had cut the runaway inflation of the Carter years. But these rates — as high as 18 percent — sent the economy into a recession in 1981–1982 that put 10 million Americans out of work and shuttered 17,000 businesses. Unemployment neared 10 percent, the highest rate since the Great Depression. These troubles, combined with the booming deficit, forced Reagan to negotiate a tax increase with Congress in 1982 — to the loud complaints of supply-side diehards. The president’s job rating plummeted, and in the 1982 midterm elections Democrats picked up twenty-six seats in the House of Representatives and seven state governorships.

Election of 1984 Fortunately for Reagan, the economy had recovered by 1983, restoring the president’s job approval rating just in time for the 1984 presidential election. During the campaign, Reagan emphasized the economic resurgence, touring the country promoting his tax policies and the nation’s new prosperity. The Democrats nominated former vice president Walter Mondale of Minnesota. With strong ties to labor unions, ethnic and racial minority groups, and party leaders, Mondale epitomized the New Deal coalition. He selected Representative Geraldine Ferraro of New York as his running mate — the first woman to run on the presidential ticket of a major political party. Neither Ferraro’s presence nor Mondale’s credentials made a difference, however: Reagan won a landslide victory, losing only Minnesota and the District of Columbia. Still, Democrats retained their majority in the House and, in 1986, regained control of the Senate.

Reagan’s 1984 campaign slogan, “It’s Morning in America,” projected the image of a new day dawning on a confident people. In Reagan mythology, the United States was an optimistic nation of small towns, close-knit families, and kindly neighbors. “The success story of America,” he once said, “is neighbor helping neighbor.” The mythology may not have reflected the actual nation, which was overwhelmingly urban and suburban and in which the hard knocks of capitalism held down more people than opportunity lifted up, but that mattered little. Reagan’s remarkable ability to evoke positive associations and feelings, alongside robust economic growth after the 1981–1982 recession, helped make the 1980s a decade characterized by both backward-looking nostalgia and aggressive capitalism.

Return to Prosperity Between 1945 and the 1970s, the United States was the world’s leading exporter of agricultural products, manufactured goods, and investment capital. Then American manufacturers lost market share, undercut by cheaper and better-designed products from West Germany and Japan. By 1985, for the first time since 1915, the United States registered a negative balance of international payments. It now imported more goods and capital than it exported, and the country became a debtor (rather than a creditor) nation. The rapid ascent of the Japanese economy to become the world’s second largest was a key factor in this historic reversal (America Compared). More than one-third of the American annual trade deficit of $138 billion in the 1980s came from trade with Japan, whose corporations exported huge quantities of electronic goods and made nearly one-quarter of all cars bought in the United States.

Meanwhile, American businesses grappled with a worrisome slowdown in productivity growth. Between 1973 and 1992, American productivity (the amount of goods or services produced per hour of work) grew at the meager rate of 1 percent a year, a far cry from the post–World War II rate of 3 percent. Because managers wanted to cut costs, the wages of most employees stagnated. Further, because of foreign competition, the number of high-paying, union-protected manufacturing jobs shrank. By 1985, more people in the United States worked for McDonald’s slinging Big Macs than rolled out rails, girders, and sheet steel in the nation’s steel industry.

A brief return to competitiveness in the second half of the 1980s masked the steady long-term transformation of the economy that had begun in the 1970s. The nation’s heavy industries (steel, autos, chemicals) continued to lose market share to global competitors. Nevertheless, the U.S. economy grew at the impressive average rate of 2 to 3 percent per year for much of the late 1980s and 1990s (with a short recession in 1990–1991). What had changed was the direction of growth and its beneficiaries. Increasingly, financial services, medical services, and computer technology (service industries, broadly speaking) were the leading sectors of growth. This shift in the underlying foundation of the American economy, from making things to providing services, would have long-term consequences for the global competitiveness of U.S. industries and the value of the dollar.

Culture of Success The economic growth of the second half of the 1980s popularized the materialistic values championed by the free marketeers. Every era has its capitalist heroes, but Americans in the 1980s celebrated wealth accumulation in ways unseen since the 1920s. When the president christened self-made entrepreneurs “the heroes for the eighties,” he probably had people like Lee Iacocca in mind. Born to Italian immigrants and trained as an engineer, Iacocca rose through the ranks to become president of the Ford Motor Company. In 1978, he took over the ailing Chrysler Corporation and made it profitable again by securing a crucial $1.5 billion federal loan guarantee, pushing the development of new cars, and selling them on TV. His patriotic commercials in the 1980s echoed Reagan’s rhetoric: “Let’s make American mean something again.” Iacocca’s restoration would not endure, however: in 2009, Chrysler declared bankruptcy and eventually came under the control of the Italian automaker Fiat.

If Iacocca symbolized a resurgent corporate America, high-profile financial wheeler-dealers also captured Americans’ imagination. One was Ivan Boesky, a white-collar criminal convicted of insider trading (buying or selling stock based on information from corporate insiders). “I think greed is healthy,” Boesky told a business school graduating class. Boesky inspired the fictional film character Gordon Gekko, who proclaimed “Greed is good!” in 1987’s Wall Street. A new generation of Wall Street executives, of which Boesky was one example, pioneered the leveraged buyout (LBO). In a typical LBO, a financier used heavily leveraged (borrowed) capital to buy a company, quickly restructured that company to make it appear spectacularly profitable, and then sold it at a higher price.

To see a movie still from Wall Street, along with other primary sources from this period, see Sources for America’s History.

Americans had not set aside the traditional work ethic, but the Reagan-era public was fascinated with money and celebrity. (The television show Lifestyles of the Rich and Famous began its run in 1984.) One of the most enthralling of the era’s money moguls was Donald Trump, a real estate developer who craved publicity. In 1983, the flamboyant Trump built the equally flamboyant Trump Tower in New York City. At the entrance of the $200 million building stood two enormous bronze T’s, a display of self-promotion that the media reinforced. Calling him “The Donald,” a nickname used by Trump’s first wife, TV reporters and magazines commented relentlessly on his marriages, divorces, and glitzy lifestyle.

The Computer Revolution While Trump grabbed headlines and made splashy real estate investments, a handful of less flamboyant entrepreneurs were busy changing the face of the American economy. Bill Gates, Paul Allen, Steve Jobs, and Steve Wozniak pioneered the computer revolution in the late 1970s and 1980s (Thinking Like a Historian). They took a technology that had been used exclusively by large institutions, such as the military and multinational corporations, and made it accessible to individual consumers. Scientists had devised the first computers for military purposes during World War II, and Cold War military research subsequently funded the construction of large mainframe computers. But these first-generation government and private-sector computers were bulky, cumbersome machines that had to be housed in large air-conditioned rooms.

From the 1950s through the 1970s, each generation of computers grew faster and smaller, a trend that culminated in the development of the microprocessor in 1971. By the mid-1970s, a few microchips the size of the letter O on this page provided as much processing power as a World War II–era computer. The day of the personal computer (PC) had arrived. Working in the San Francisco Bay Area, Jobs and Wozniak founded Apple Computer in 1976 and within a year were producing small computers that could be easily used by a single person. When Apple enjoyed success, other companies scrambled to get into the market. International Business Machines (IBM) offered its first personal computer in 1981, but Apple’s 1984 Macintosh computer (later shortened to “Mac”) became the first runaway commercial success for a personal computer.

Meanwhile, two former high school classmates, Gates and Allen, had set a goal in the early 1970s of putting “a personal computer on every desk and in every home.” They recognized that software was the key. In 1975, when Gates was nineteen and Allen twenty-two, they founded Microsoft, whose MS-DOS and Windows operating systems soon dominated the software industry. By 2000, the company’s products ran nine out of every ten personal computers in the United States and a majority of those around the world. Gates and Allen became billionaires, and Microsoft exploded into a huge company with 57,000 employees and annual revenues of $38 billion. In three decades, the computer had moved from a few military research centers to thousands of corporate offices and then to millions of people’s homes. Ironically, in an age that celebrated free-market capitalism, government research and government funding had played an enormous role in the development of the most important technology since television.

UNDERSTAND POINTS OF VIEW

Question

In what ways did American society embrace economic success and individualism in the 1980s?