12.2 Comparing the Means

When you complete this section, you will be able to:

• Distinguish between the use of contrasts to examine particular versions of the alternative hypothesis and the use of a multiple-comparisons method to compare pairs of means.

• Construct a level C confidence interval for a comparison of means expressed as a contrast.

• Perform a t significance test for a contrast and summarize the results.

• Summarize the trade-off of a multiple-comparisons method in terms of controlling false rejections and not detecting true differences in means.

• Describe and use the Bonferroni method to control the probability of a false rejection.

• Interpret statistical software ANOVA output and draw conclusions regarding differences in population means.

• Determine the power of the ANOVA F test for a given set of population means and sample sizes.

The ANOVA F test gives a general answer to a general question: are the differences among observed group means statistically significant? Unfortunately, a small P-value simply tells us that the group means are not all the same. It does not tell us specifically which means differ from each other. Plotting and inspecting the means give us some indication of where the differences lie, but we would like to supplement inspection with formal inference. This section presents two approaches to the task of comparing group means.

Contrasts

In the ideal situation, specific questions regarding comparisons among the means are posed before the data are collected. We can answer specific questions of this kind and attach a level of confidence to the answers we give. We now explore these ideas through a different Facebook study.

EXAMPLE 12.17

How do users spend their time on Facebook? An online study was designed to compare the amount of time a Facebook user devotes to reading positive, negative, and neutral Facebook profiles. Each participant was randomly assigned to one of five Facebook profile groups:

1. Positive female

2. Positive male

3. Negative female

4. Negative male

5. Gender neutral with neutral content

and provided an email link to a survey on Survey Monkey. As part of the survey, the participant was directed to view the assigned Facebook profile page and then answer some additional questions. The amount of time (in minutes) the participant spent viewing the profile was recorded as the response.⁷

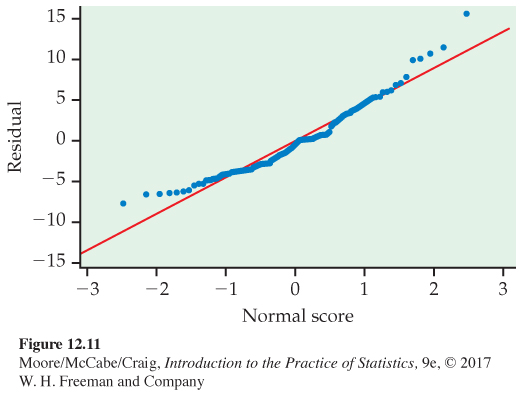

We begin our analysis with a check of the data. Time-

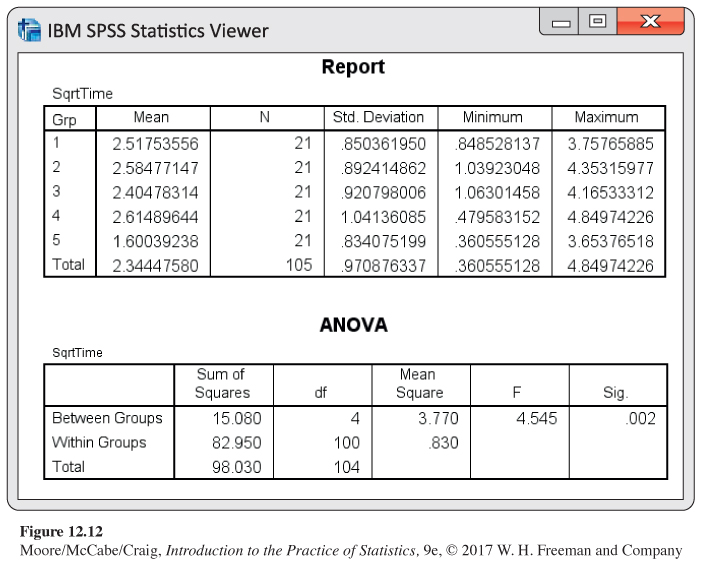

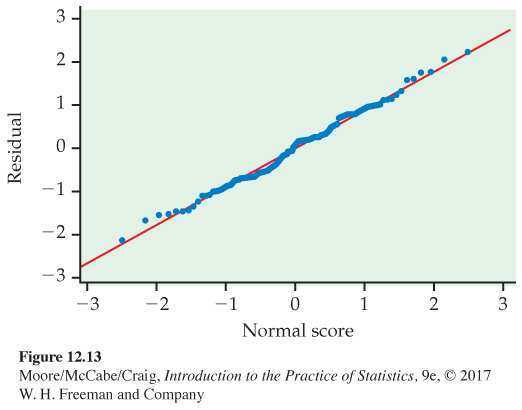

As a result, we consider the square root of time for analysis. These results are summarized in Figures 12.12 and 12.13. The residuals appear Normal (Figure 12.13), and our rule for examining standard deviations indicates we can assume equal population standard deviations (1.041 < 2(0.834)). The F test is significant with a P-value of 0.002. It tests the null hypothesis

H0: μ1 = μ2 = μ3 = μ4 = μ5

versus the alternative that the five population means are not all the same. Because the P-value is very small, there is strong evidence against H0 and we can conclude that the five population means are not all the same (F(4,100) = 4.55 with P = 0.002).

However, having evidence that the five population means are not the same does not tell us all we’d like to know. We would really like our analysis to provide us with more specific information. For example, the alternative hypothesis is true if

μ1 < μ2 = μ3 = μ4 = μ5

or if

μ1 = μ2 > μ3 = μ4 > μ5

or if

μ1 < μ3 < μ4 < μ2 < μ5


When you reject the ANOVA null hypothesis, additional analyses are required to clarify the nature of the differences between the means.

For this study, the researcher predicted that participants would spend more time viewing the negative Facebook pages compared to the positive or neutral pages because the negative pages would stand out more and thus garner more attention (this is called cognitive salience). How do we take these predictions and translate them into testable hypotheses?

EXAMPLE 12.18

A comparison of interest. The researcher hypothesizes that participants exposed to a negative Facebook profile would spend more time viewing the page than would participants who are exposed to a positive Facebook profile. Because two groups are exposed to negative profiles and two are exposed to positive profiles, we can consider the following null hypothesis:

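$$H_{01}\colon \tfrac{1}{2}(\mu_3 + \mu_4) = \tfrac{1}{2}(\mu_1 + \mu_2)$$

versus the two-sided alternative

$$H_{a1}\colon \tfrac{1}{2}(\mu_3 + \mu_4) \neq \tfrac{1}{2}(\mu_1 + \mu_2)$$

We could argue that the one-sided alternative

$$H_{a1}\colon \tfrac{1}{2}(\mu_3 + \mu_4) > \tfrac{1}{2}(\mu_1 + \mu_2)$$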

is appropriate for this problem provided other evidence suggests this direction and is not just what the researcher wants to see.

In the preceding example, we used H01 and Ha1 to designate the null and alternative hypotheses. The reason for this is that there is an additional set of hypotheses to assess. We use H02 and Ha2 for this set.

EXAMPLE 12.19

Another comparison of interest. This comparison tests if there is a difference in time between groups exposed to a negative page and the group exposed to the neutral page. Here are the null and alternative hypotheses:

$$H_{02}\colon \tfrac{1}{2}(\mu_3 + \mu_4) = \mu_5$$

$$H_{a2}\colon \tfrac{1}{2}(\mu_3 + \mu_4) \neq \mu_5$$

Each of H01 and H02 says that a combination of population means is 0. These combinations of means are called contrasts because the coefficients sum to zero. We use ψ, the Greek letter psi, for contrasts among population means. For our first comparison, we have

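$$\psi_1 = -\tfrac{1}{2}(\mu_1 + \mu_2) + \tfrac{1}{2}(\mu_3 + \mu_4) = (-0.5)\mu_1 + (-0.5)\mu_2 + (0.5)\mu_3 + (0.5)\mu_4$$

and for the second comparison

$$\psi_2 = \tfrac{1}{2}(\mu_3 + \mu_4) - \mu_5 = (0.5)\mu_3 + (0.5)\mu_4 + (-1)\mu_5$$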


In each case, the value of the contrast is 0 when H0 is true. Note that we have chosen to define the contrasts so that they will be positive when the alternative of interest (what we expect) is true. Whenever possible, this is a good idea because it makes some computations easier.

A contrast expresses an effect in the population as a combination of population means. To estimate the contrast, form the corresponding sample contrast by using sample means in place of population means. Under the ANOVA assumptions, a sample contrast is a linear combination of independent Normal variables and, therefore, has a Normal distribution (page 304). We can obtain the standard error of a contrast by using the rules for variances. Inference is based on t statistics. Here are the details.

rules for variances, p. 258

CONTRASTS

A contrast is a combination of population means of the form

$$\psi = \sum a_i \mu_i$$

where the coefficients $a_i$ sum to 0. The corresponding sample contrast is

$$c = \sum a_i \bar{x}_i$$

The standard error of c is

$$\mathrm{SE}_c = s_p\sqrt{\sum \frac{a_i^2}{n_i}}$$

To test the null hypothesis

H0: ψ = 0

use the t statistic

$$t = \frac{c}{\mathrm{SE}_c}$$

with degrees of freedom DFE that are associated with $s_p$. The alternative hypothesis can be one-sided or two-sided.

A level C confidence interval for ψ is

$$c \pm t^* \mathrm{SE}_c$$

where t* is the value for the t(DFE) density curve with area C between −t* and t*.

Because each $\bar{x}_i$ estimates the corresponding $\mu_i$, the addition rule for means tells us that the mean $\mu_c$ of the sample contrast c is ψ. In other words, c is an unbiased estimator of ψ. Testing the hypothesis that a contrast is 0 assesses the significance of the effect measured by the contrast. It is often more informative to estimate the size of the effect using a confidence interval for the population contrast.
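The standard error in the box can be traced back to the rules for variances. The following is a brief sketch, assuming independent samples that share a common population standard deviation σ (the usual ANOVA assumptions); the symbol $\sigma_c$ for the standard deviation of c is introduced here only for illustration.

```latex
\begin{align*}
\sigma_c^2 &= \operatorname{Var}\Bigl(\sum a_i \bar{x}_i\Bigr)
            = \sum a_i^2 \operatorname{Var}(\bar{x}_i)
            = \sum a_i^2 \frac{\sigma^2}{n_i}
            = \sigma^2 \sum \frac{a_i^2}{n_i} \\
\mathrm{SE}_c &= s_p \sqrt{\sum \frac{a_i^2}{n_i}}
\end{align*}
```

The second line replaces the unknown σ with its pooled estimate $s_p$, which is why the t statistic uses the degrees of freedom DFE.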

addition rule for means, p. 254

EXAMPLE 12.20

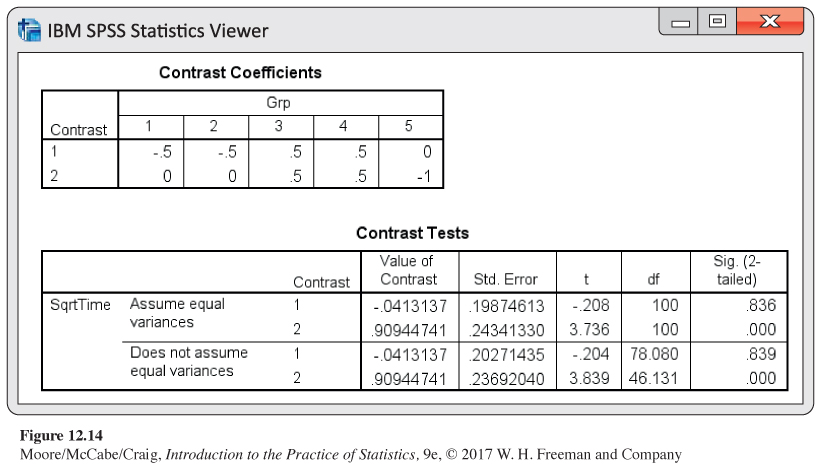

The contrast coefficients. In our example the coefficients in the contrasts are

a1 = −0.5, a2 = −0.5, a3 = 0.5, a4 = 0.5, a5 = 0, for ψ1

and

a1 = 0, a2 = 0, a3 = 0.5, a4 = 0.5, a5 = −1, for ψ2

where the subscripts 1, 2, 3, 4, and 5 correspond to the profiles listed in Example 12.17, respectively. In each case the sum of the ai is 0. We look at inference for each of these contrasts in turn.
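As a quick check of the defining property, the coefficients can be written as lists and summed. This is a minimal sketch; the names `psi1`, `psi2`, and `is_contrast` are illustrative and not part of the study or of any software package.

```python
# Contrast coefficients from Example 12.20, in the profile order of Example 12.17.
psi1 = [-0.5, -0.5, 0.5, 0.5, 0.0]  # negative profiles versus positive profiles
psi2 = [0.0, 0.0, 0.5, 0.5, -1.0]   # negative profiles versus the neutral profile


def is_contrast(coefs, tol=1e-12):
    """Return True when the coefficients sum to zero (within a small tolerance)."""
    return abs(sum(coefs)) < tol


print(is_contrast(psi1), is_contrast(psi2))
```

A set of coefficients that does not sum to zero, such as one that simply picks out a single mean, is a linear combination but not a contrast.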

EXAMPLE 12.21

Testing the first contrast of interest. The sample contrast that estimates ψ1 is

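$$c_1 = (-0.5)\bar{x}_1 + (-0.5)\bar{x}_2 + (0.5)\bar{x}_3 + (0.5)\bar{x}_4 = (-0.5)(2.518) + (-0.5)(2.585) + (0.5)(2.405) + (0.5)(2.615) = -0.0415$$

with standard error

$$\mathrm{SE}_{c_1} = 0.911\sqrt{\frac{(-0.5)^2}{21} + \frac{(-0.5)^2}{21} + \frac{(0.5)^2}{21} + \frac{(0.5)^2}{21}} = 0.1988$$

The t statistic for testing H01: ψ1 = 0 versus Ha1: ψ1 > 0 is

$$t = \frac{c_1}{\mathrm{SE}_{c_1}} = \frac{-0.0415}{0.1988} = -0.21$$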

Because sp has 100 degrees of freedom, software using the t(100) distribution gives the two-

We use the same method for the second contrast.

EXAMPLE 12.22

Testing the second contrast of interest. The sample contrast that estimates ψ2 is

$$c_2 = (0.5)\bar{x}_3 + (0.5)\bar{x}_4 + (-1)\bar{x}_5 = (0.5)(2.405) + (0.5)(2.615) + (-1)(1.600) = 1.2025 + 1.3075 - 1.600 = 0.91$$

with standard error

$$\mathrm{SE}_{c_2} = 0.911\sqrt{\frac{(0.5)^2}{21} + \frac{(0.5)^2}{21} + \frac{(-1)^2}{21}} = 0.2435$$

The t statistic for assessing the significance of this contrast is

$$t = \frac{0.91}{0.2435} = 3.74$$

The P-value for the two-

We have strong evidence to conclude that time viewing a negative content page is different from the time viewing a neutral content page. The size of the difference can be described with a confidence interval.
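The hand calculations for both contrasts can be reproduced in a few lines. This is a minimal sketch using the sample means, the common group size of 21, and the pooled standard deviation 0.911 quoted in the examples; the function name `contrast_t` is illustrative, and P-values are left to statistical software.

```python
import math


def contrast_t(means, sizes, coefs, sp):
    """Return (c, SE_c, t) for the sample contrast with the given coefficients."""
    c = sum(a * m for a, m in zip(coefs, means))
    se = sp * math.sqrt(sum(a * a / n for a, n in zip(coefs, sizes)))
    return c, se, c / se


# Sample means of square-root viewing time, in the group order of Example 12.17.
means = [2.518, 2.585, 2.405, 2.615, 1.600]
sizes = [21] * 5
sp = 0.911  # pooled standard deviation, with DFE = 100

c1, se1, t1 = contrast_t(means, sizes, [-0.5, -0.5, 0.5, 0.5, 0.0], sp)
c2, se2, t2 = contrast_t(means, sizes, [0.0, 0.0, 0.5, 0.5, -1.0], sp)

print(f"psi1: c = {c1:.4f}, SE = {se1:.4f}, t = {t1:.2f}")
print(f"psi2: c = {c2:.4f}, SE = {se2:.4f}, t = {t2:.2f}")
```

Writing the calculation as one function of the coefficient list makes it easy to test any other contrast that was specified before the data were collected.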

EXAMPLE 12.23

Confidence interval for the second contrast. To find the 95% confidence interval for ψ2, we combine the estimate with its margin of error:

$$c_2 \pm t^* \mathrm{SE}_{c_2} = 0.91 \pm (1.984)(0.24) = 0.91 \pm 0.48$$

The interval is (0.43, 1.39); here t* = 1.984 is the 95% critical value for the t(100) distribution. Unfortunately, this interval is difficult to interpret because the units are √minutes. We can obtain an approximate 95% interval on the original units scale by back-

SPSS output for the contrasts is given in Figure 12.14. The results agree with the calculations that we performed in Examples 12.21 and 12.22 except for minor differences due to roundoff error in our calculations. Note that the output does not give the confidence interval that we calculated in Example 12.23. This is easily computed, however, from the contrast estimate and standard error provided in the output.

Some statistical software packages report the test statistics associated with contrasts as F statistics rather than t statistics. These F statistics are the squares of the t statistics described previously. As with much statistical software output, P-values for significance tests are reported for the two-


If the software you are using gives P-values for the two-

Questions about population means are expressed as hypotheses about contrasts. A contrast should express a specific question that we have in mind when designing the study. Because the ANOVA F test answers a very general question, it is less powerful than tests for contrasts designed to answer specific questions.


When contrasts are formulated before seeing the data, inference about contrasts is valid whether or not the ANOVA H0 of equality of means is rejected. Specifying the important questions before the analysis is undertaken enables us to use this powerful statistical technique.

USE YOUR KNOWLEDGE

Question 12.27

12.27 Defining a contrast. Refer to Example 12.17 (page 670). Suppose the researcher was also interested in comparing the viewing time between male and female profile pages. Specify the coefficients for this contrast.

12.27 a1 = 0.5, a2 = −0.5, a3 = 0.5, a4 = −0.5, a5 = 0 (female profiles minus male profiles; reversing all signs gives an equivalent contrast).

Question 12.28

12.28 Defining different coefficients. Refer to Example 12.22 (page 675). Suppose we had selected the coefficients a1 = 0, a2 = 0, a3 = −1, a4 = −1, and a5 = 2. Would this choice of coefficients alter our inference in this example? Explain your answer.

Multiple comparisons

In many studies, specific questions cannot be formulated in advance of the analysis. If H0 is not rejected, we conclude that the population means are indistinguishable on the basis of the data given. On the other hand, if H0 is rejected, we would like to know which pairs of means differ. Multiple-

EXAMPLE 12.24

Comparing each pair of groups. Let’s return once more to the Facebook friends data with five groups (page 648). We can make 10 comparisons between pairs of means. We can write a t statistic for each of these pairs. For example, the statistic

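$$t_{12} = \frac{\bar{x}_1 - \bar{x}_2}{s_p\sqrt{\dfrac{1}{n_1} + \dfrac{1}{n_2}}} = \frac{3.82 - 4.88}{1.10\sqrt{\dfrac{1}{24} + \dfrac{1}{33}}} = -3.59$$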

compares profiles with 102 and 302 friends. The subscripts on t specify which groups are compared.

The t statistics for two other pairs are

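$$t_{23} = \frac{\bar{x}_2 - \bar{x}_3}{s_p\sqrt{\dfrac{1}{n_2} + \dfrac{1}{n_3}}} = \frac{4.88 - 4.56}{1.10\sqrt{\dfrac{1}{33} + \dfrac{1}{26}}} = 1.11$$

$$t_{25} = \frac{\bar{x}_2 - \bar{x}_5}{s_p\sqrt{\dfrac{1}{n_2} + \dfrac{1}{n_5}}} = \frac{4.88 - 3.99}{1.10\sqrt{\dfrac{1}{33} + \dfrac{1}{21}}} = 2.90$$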
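The three statistics above can be checked with a short script. This is a minimal sketch that uses only the means and sample sizes quoted in this example (group 4 is omitted because its summary statistics are not given here); the function name `pooled_t` and the dictionary layout are illustrative.

```python
import math


def pooled_t(mean_i, mean_j, n_i, n_j, sp):
    """Pairwise t statistic that uses the pooled standard deviation from all groups."""
    return (mean_i - mean_j) / (sp * math.sqrt(1 / n_i + 1 / n_j))


sp = 1.10  # pooled standard deviation for the Facebook friends data
# group number -> (sample mean, sample size), as quoted in the example
groups = {1: (3.82, 24), 2: (4.88, 33), 3: (4.56, 26), 5: (3.99, 21)}

t_stats = {}
for i, j in [(1, 2), (2, 3), (2, 5)]:
    (mean_i, n_i), (mean_j, n_j) = groups[i], groups[j]
    t_stats[(i, j)] = pooled_t(mean_i, mean_j, n_i, n_j, sp)
    print(f"t{i}{j} = {t_stats[(i, j)]:.2f}")
```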

These 10 t statistics are very similar to the pooled two-


Because we do not have any specific ordering of the means in mind as an alternative to equality, we must use a two-

MULTIPLE COMPARISONS

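To perform a multiple-comparisons procedure, compute t statistics for all pairs of means using the formula

$$t_{ij} = \frac{\bar{x}_i - \bar{x}_j}{s_p\sqrt{\dfrac{1}{n_i} + \dfrac{1}{n_j}}}$$

If

$$|t_{ij}| \ge t^{**}$$

we declare that the population means μi and μj are different. Otherwise, we conclude that the data do not distinguish between them. The value of t** depends upon which multiple-comparisons procedure we choose.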

One obvious choice for t** is the upper ⍺/2 critical value for the t(DFE) distribution. This choice simply carries out as many separate significance tests of fixed level ⍺ as there are pairs of means to be compared. The procedure based on this choice is called the least-significant differences method, or simply LSD.


LSD has some undesirable properties, particularly if the number of means being compared is large. Suppose, for example, that there are I = 20 groups and we use LSD with ⍺ = 0.05. There are 190 different pairs of means. If we perform 190 t tests, each with an error rate of 5%, our overall error rate will be unacceptably large. We expect about 5% of the 190 to be significant even if the corresponding population means are the same. Because 5% of 190 is 9.5, we expect 9 or 10 false rejections.

The LSD procedure fixes the probability of a false rejection for each single pair of means being compared. It does not control the overall probability of some false rejection among all pairs. Other choices of t** control possible errors in other ways. The choice of t** is, therefore, a complex problem, and a detailed discussion of it is beyond the scope of this text. Many choices for t** are used in practice. Most statistical packages provide several to choose from.

Bonferroni procedure, p. 391

We will discuss only one of these, called the Bonferroni method. Use of this procedure with ⍺ = 0.05, for example, guarantees that the probability of any false rejection among all comparisons made is no greater than 0.05. This is much stronger protection than controlling the probability of a false rejection at 0.05 for each separate comparison.
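The arithmetic behind the LSD and Bonferroni comparison can be sketched briefly. The sketch assumes the standard form of the Bonferroni method, in which each of the m comparisons is tested at level ⍺/m so that the overall probability of any false rejection is at most ⍺; the function names are illustrative.

```python
import math


def number_of_pairs(groups):
    """Number of distinct pairs of means among the given number of groups."""
    return math.comb(groups, 2)


def expected_false_rejections(groups, alpha):
    """Expected false rejections under LSD when all population means are equal."""
    return alpha * number_of_pairs(groups)


def bonferroni_level(groups, alpha):
    """Per-comparison significance level alpha / m used by the Bonferroni method."""
    return alpha / number_of_pairs(groups)


print(number_of_pairs(20))                  # pairs among 20 groups
print(expected_false_rejections(20, 0.05))  # expected false rejections with LSD
print(bonferroni_level(5, 0.05))            # per-comparison level for 5 groups
```

With five groups there are 10 pairs, so each Bonferroni comparison is carried out at level 0.005 rather than 0.05. This is the price of the stronger protection: a larger t** and, therefore, less ability to detect true differences.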

EXAMPLE 12.25

Applying the Bonferroni method. We apply the Bonferroni multiple-

Of course, we prefer to use software for the calculations.

EXAMPLE 12.26

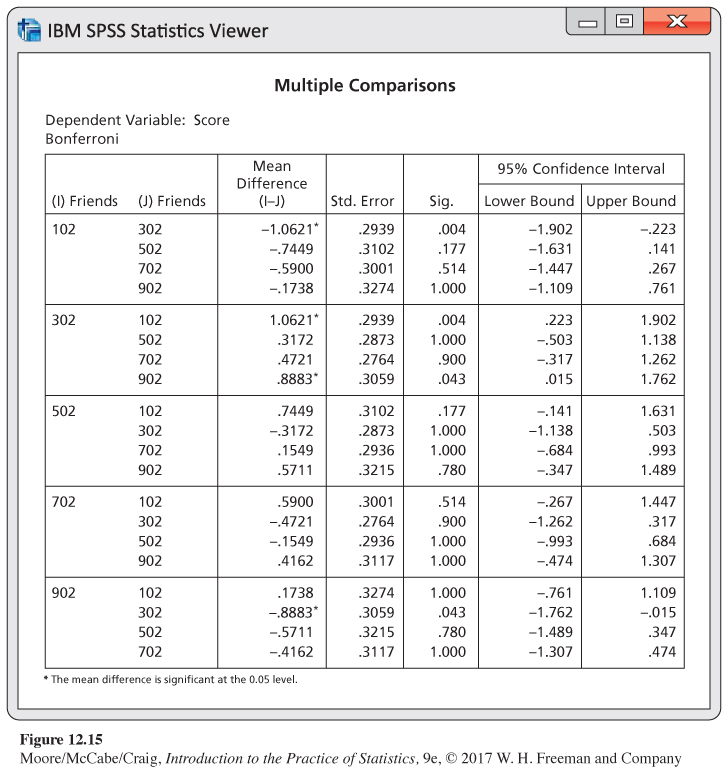

Interpreting software output. The output generated by SPSS for Bonferroni comparisons appears in Figure 12.15. The software uses an asterisk to indicate that the difference in a pair of means is statistically significant. Here, all 10 comparisons are reported. These results agree with the calculations that we performed in Examples 12.24 and 12.25. There are no significant differences except those already mentioned. Note that each comparison is given twice in the output.

The data in the Facebook friends study provide a clear result: the social attractiveness score increases as the number of friends increases to a point and then decreases. Unfortunately with these data, we cannot accurately describe this relationship in more detail. This lack of clarity is not unusual when performing a multiple-

Here, the mean associated with 302 friends is significantly different from the means for the 102-


This kind of apparent contradiction points out dramatically the nature of the conclusions of statistical tests of significance. The conclusion appears to be illogical. If μ1 is the same as μ3 and if μ3 is the same as μ2, doesn’t it follow that μ1 is the same as μ2? Logically, the answer must be Yes.

Some of the difficulty can be resolved by noting the choice of words used. In describing the inferences, we talk about failing to detect a difference or concluding that two groups are different. In making logical statements, we say things such as “is the same as.” There is a big difference between the two modes of thought. Statistical tests ask, “Do we have adequate evidence to distinguish two means?” It is not illogical to conclude that we have sufficient evidence to distinguish μ1 from μ2, but not μ1 from μ3 or μ2 from μ3.

One way to deal with these difficulties of interpretation is to give confidence intervals for the differences. The intervals remind us that the differences are not known exactly. We want to give simultaneous confidence intervals, that is, intervals for all differences among the population means at once. Again, we must face the problem that there are many competing procedures—

SIMULTANEOUS CONFIDENCE INTERVALS FOR DIFFERENCES BETWEEN MEANS

Simultaneous confidence intervals for all differences μi − μj between population means have the form

$$(\bar{x}_i - \bar{x}_j) \pm t^{**} s_p\sqrt{\frac{1}{n_i} + \frac{1}{n_j}}$$

The critical values t** are the same as those used for the multiple-comparisons procedure chosen.

The confidence intervals generated by a particular choice of t** are closely related to the multiple-

EXAMPLE 12.27

Interpreting software output, continued. The SPSS output for the Bonferroni multiple-

USE YOUR KNOWLEDGE

Question 12.29

12.29 Why no multiple comparisons? Any pooled two-

12.29 Because there are only two groups, if the ANOVA test establishes that there are differences in means, then we already know that the two means we have must be different. In this case, contrasts and multiple comparisons provide no further useful information.

Question 12.30

12.30 Growth of Douglas fir seedlings. An experiment was conducted to compare the growth of Douglas fir seedlings under three different levels of vegetation control (0%, 50%, and 100%). Sixteen seedlings were randomized to each level of control. The resulting sample means for stem volume were 58, 73, and 105 cubic centimeters (cm³), respectively, with sp = 17 cm³. The researcher hypothesized that the average growth at 50% control would be less than the average of the 0% and 100% levels.

(a) What are the coefficients for testing this contrast?

(b) Perform the test and report the test statistic, degrees of freedom, and P-value. Do the data provide evidence to support this hypothesis?

Power

Recall that the power of a test is the probability of rejecting H0 when Ha is, in fact, true. Power measures how likely a test is to detect a specific alternative. When planning a study in which ANOVA will be used for the analysis, it is important to perform power calculations to check that the sample sizes are adequate to detect differences among means that are judged to be important.

Power calculations also help evaluate and interpret the results of studies in which H0 was not rejected. We sometimes find that the power of the test was so low against reasonable alternatives that there was little chance of obtaining a significant F.

power, p. 392

In Chapter 7, we found the power for the two-

Here are the steps that are needed:

1. Specify

(a) An alternative (Ha) that you consider important; that is, values for the true population means μ1, μ2, . . . , μI.

(b) Sample sizes n1, n2, . . . , nI; usually these will all be equal to the common value n.

(c) A level of significance ⍺, usually equal to 0.05.

(d) A guess at the standard deviation σ.

2. Use the degrees of freedom DFG = I − 1 and DFE = N − I to find the critical value that will lead to the rejection of H0. This value, which we denote by F*, is the upper ⍺ critical value for the F(DFG,DFE) distribution.

3. Calculate the noncentrality parameter⁸

$$\lambda = \frac{\sum n_i(\mu_i - \bar{\mu})^2}{\sigma^2}$$

where $\bar{\mu}$ is a weighted average of the group means

$$\bar{\mu} = \sum \frac{n_i}{N}\mu_i$$

If the means are all equal (the ANOVA H0), then λ = 0. The noncentrality parameter measures how unequal the given set of means is. Large λ points to an alternative far from H0, and we expect the ANOVA F test to have high power.

4. Find the power, which is the probability of rejecting H0 when the alternative hypothesis is true; that is, the probability that the observed F is greater than F*. Under Ha, the F statistic has a distribution known as the noncentral F distribution. SAS, for example, has a function for this distribution. Using this function, the power is


Power = 1 − PROBF (F*, DFG, DFE, λ)
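The parts of Steps 2 and 3 that need no special distribution function can be sketched directly. This is a minimal illustration with hypothetical means and unequal sample sizes chosen only to show the weighted average; the function names are not from any package, and the final noncentral F probability in Step 4 is left to statistical software.

```python
def noncentrality(means, sizes, sigma):
    """Noncentrality parameter: sum of n_i * (mu_i - mu_bar)^2, divided by sigma^2."""
    total = sum(sizes)
    # mu_bar is the sample-size-weighted average of the group means.
    mu_bar = sum(n * m for n, m in zip(sizes, means)) / total
    return sum(n * (m - mu_bar) ** 2 for n, m in zip(sizes, means)) / sigma ** 2


def degrees_of_freedom(sizes):
    """Return (DFG, DFE) = (I - 1, N - I) for the given group sizes."""
    return len(sizes) - 1, sum(sizes) - len(sizes)


# Hypothetical alternative: three groups with unequal sample sizes.
means = [10, 12, 14]
sizes = [5, 10, 5]
sigma = 2

print(noncentrality(means, sizes, sigma))
print(degrees_of_freedom(sizes))
```

When all the specified means are equal, the function returns 0, matching the ANOVA H0.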

Software makes calculation of the power quite easy. The software does Steps 2, 3, and 4, so our task simplifies to just Step 1. Some software doesn’t request the alternative means, but rather a difference in means that is judged important. Most software will also assume a constant sample size. Let’s run through an example doing the calculations ourselves and then compare the results with output from two software programs.

EXAMPLE 12.28

Power of a reading comprehension study. Suppose that a study on reading comprehension for three different teaching methods has 10 students in each group. How likely is this study to detect differences in the mean responses? A previous study performed in a different setting found sample means of 41, 47, and 44, and the pooled standard deviation was 7. Based on these results, we will use μ1 = 41, μ2 = 47, μ3 = 44, and σ = 7 in a calculation of power. The ni are equal, so $\bar{\mu}$ is simply the average of the μi:

$$\bar{\mu} = \frac{41 + 47 + 44}{3} = 44$$

The noncentrality parameter is, therefore,

$$\lambda = \frac{n\sum(\mu_i - \bar{\mu})^2}{\sigma^2} = \frac{(10)[(41 - 44)^2 + (47 - 44)^2 + (44 - 44)^2]}{49} = \frac{(10)(18)}{49} = 3.67$$

Because there are three groups with 10 observations per group, DFG = 2 and DFE = 27. The critical value for ⍺ = 0.05 is F* = 3.35. The power is, therefore,

1 − PROBF(3.35, 2, 27, 3.67) = 0.3486

The chance that we reject the ANOVA H0 at the 5% significance level given these population means and standard deviation is slightly less than 35%.

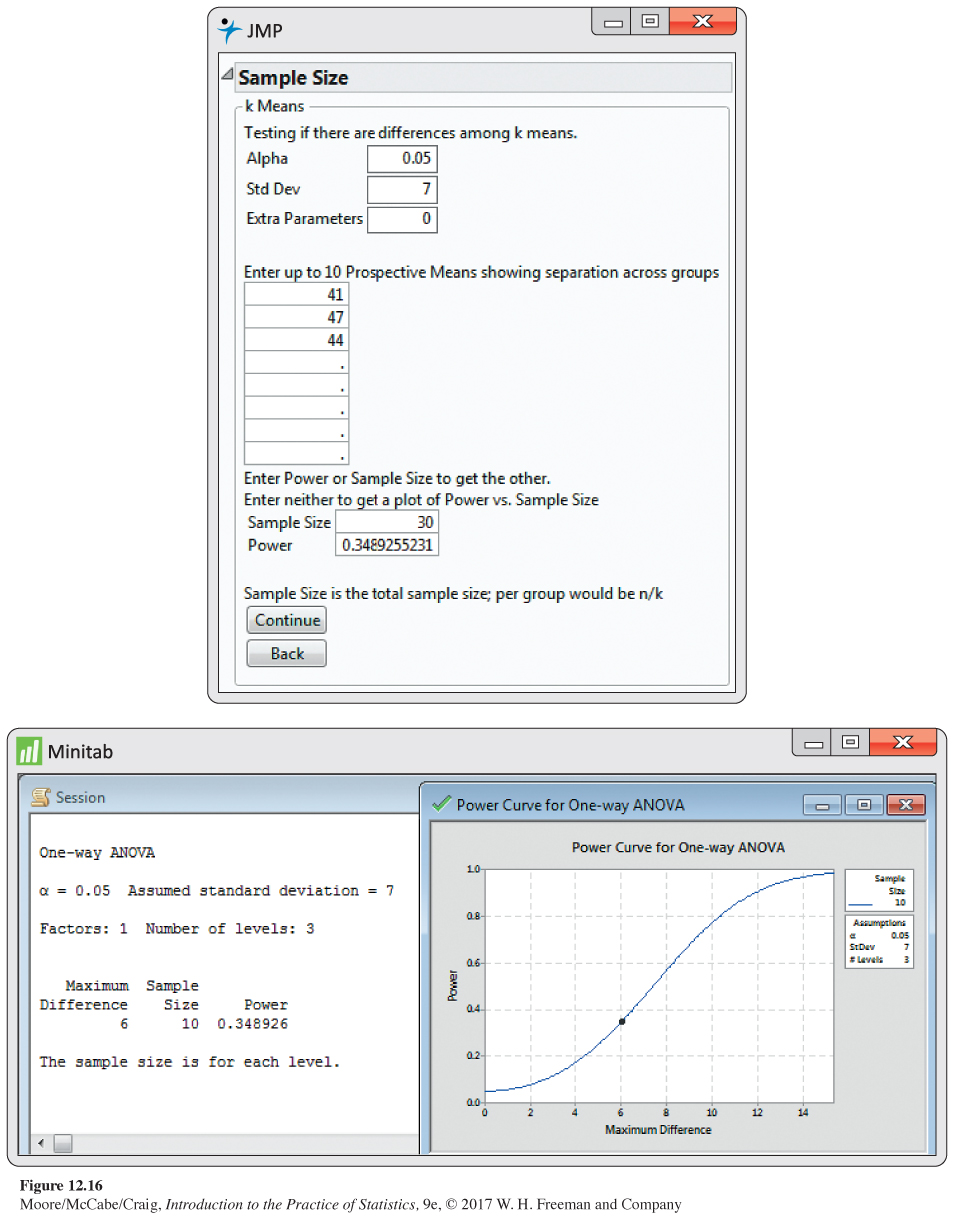

Figure 12.16 shows the power calculation output from JMP and Minitab. For JMP, you specify the alternative means, standard deviation, and the total sample size N. The power is calculated once the “Continue” button is clicked. Notice that this result is the same as the result in Example 12.28. For Minitab, you enter the common sample size n, standard deviation σ, and the difference between means that is deemed important. For the alternative means specified in Example 12.28, the largest difference is 6 = 47 − 41, so that was entered. The power is again the same as the result in Example 12.28. This won’t always be the case. Specifying an important difference will often give a power value that is smaller. This is because the software then computes a noncentrality parameter that is always less than or equal to the noncentrality value based on knowing all the alternative means.

If the assumed values of the μi in this example describe differences among the groups that the experimenter wants to detect, then we would want to use more than 10 subjects per group. Although H0 is false for these μi, the chance of rejecting it at the 5% level is only about 35%. This chance can be increased to acceptable levels by increasing the sample sizes.

EXAMPLE 12.29

Changing the sample size. To decide on an appropriate sample size for the experiment described in the previous example, we repeat the power calculation for different values of n, the number of subjects in each group. Here are the results:

| n | DFG | DFE | F* | λ | Power |
|---|---|---|---|---|---|
| 20 | 2 | 57 | 3.16 | 7.35 | 0.65 |
| 30 | 2 | 87 | 3.10 | 11.02 | 0.84 |
| 40 | 2 | 117 | 3.07 | 14.69 | 0.93 |
| 50 | 2 | 147 | 3.06 | 18.37 | 0.97 |
| 100 | 2 | 297 | 3.03 | 36.73 | ≈ 1 |
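The DFG, DFE, and λ columns of the table can be reproduced without special software. This is a minimal sketch, assuming equal group sizes and the alternative means and σ of Example 12.28; the function name `table_rows` is illustrative. The F* and power columns require F and noncentral F distribution functions and are not computed here.

```python
def table_rows(means, sigma, group_sizes):
    """Return (n, DFG, DFE, lambda) for each common group size n."""
    groups = len(means)
    mu_bar = sum(means) / groups  # simple average, since group sizes are equal
    spread = sum((m - mu_bar) ** 2 for m in means)
    rows = []
    for n in group_sizes:
        dfg = groups - 1
        dfe = groups * n - groups
        lam = n * spread / sigma ** 2
        rows.append((n, dfg, dfe, round(lam, 2)))
    return rows


rows = table_rows([41, 47, 44], 7, [20, 30, 40, 50, 100])
for row in rows:
    print(row)
```

Because λ is proportional to n when the group sizes are equal, doubling the sample size doubles the noncentrality parameter, which is what drives the increase in power.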

Try using JMP to verify these calculations. With n = 40, the experimenters have a 93% chance of rejecting H0 with ⍺ = 0.05 and thereby demonstrating that the groups have different means. In the long run, 93 out of every 100 such experiments would reject H0 at the ⍺ = 0.05 level of significance. Using 50 subjects per group increases the chance of finding significance to 97%. With 100 subjects per group, the experimenters are virtually certain to reject H0. The exact power for n = 100 is 0.99990. In most real-

USE YOUR KNOWLEDGE

Question 12.31

12.31 Understanding power calculations. Refer to Example 12.28. Suppose that the researcher decided to use μ1 = 39, μ2 = 44, and μ3 = 49 in the power calculations. With n = 10 and σ = 7, would the power be larger or smaller than 35%? Explain your answer.

12.31 The power would be larger. For larger differences between alternative means, λ gets bigger, increasing our power to see these differences.

Question 12.32

12.32 Understanding power calculations, continued. If all the group means are equal (H0 is true), what is the power of the F test? Explain your answer.