Language Development

comprehension  with regard to language, understanding what others say (or sign or write)

What is the average kindergartener almost as good at as you are? Not much, with one important exception: using language. By 5 years of age, most children have mastered the basic structure of their native language or languages (the possibility of bilingualism is to be assumed whenever we refer to “native language”), whether spoken or manually signed. Although their vocabulary and powers of expression are less sophisticated than yours, their sentences are as grammatically correct as those produced by the average college student. This is a remarkable achievement.

production  with regard to language, speaking (or writing or signing) to others

Language use requires comprehension, which refers to understanding what others say (or sign or write), and production, which refers to actually speaking (or signing or writing). As we have observed for other areas of development, infants’ and young children’s ability to understand precedes their ability to produce. Children understand words and linguistic structures that other people use months or even years before they include them in their own utterances. This is, of course, not unique to young children; you no doubt understand many words that you never actually use. In our discussion, we will be concerned with the developmental processes involved in both comprehension and production, as well as the relation between them.

The Components of Language

generativity  refers to the idea that through the use of the finite set of words and morphemes in humans’ vocabulary, we can put together an infinite number of sentences and express an infinite number of ideas

How do languages work? Despite the fact that there are thousands of human languages, they share overarching similarities. All human languages are similarly complex, with different pieces combined at different levels to form a hierarchy: sounds are combined to form words, words are combined to form sentences, and sentences are combined to form stories, conversations, and other kinds of narratives. Children must acquire all of these facets of their native language. The enormous benefit that emerges from this combinatorial process is generativity; by using the finite set of words in our vocabulary, we can generate an infinite number of sentences, expressing an infinite number of ideas.
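The combinatorial point can be illustrated with a minimal sketch. It assumes a toy grammar that is not from the text: a handful of nouns and verbs (hypothetical word lists) plus a single embedding rule, "X said that ...". Each extra level of embedding multiplies the number of possible sentences, so a fixed vocabulary yields a count that grows without bound.

```python
from itertools import product

# Hypothetical toy vocabulary, chosen only for illustration.
NOUNS = ["Lila", "the lobster", "the dog"]
VERBS = ["ate", "saw"]


def sentences(depth):
    """Return every sentence with at most `depth` levels of embedding."""
    # Simple sentences: subject + verb + object.
    simple = [f"{s} {v} {o}" for s, v, o in product(NOUNS, VERBS, NOUNS)]
    if depth == 0:
        return simple
    # Embedding rule: any sentence can be placed inside "X said that ...".
    embedded = [
        f"{s} said that {inner}"
        for s in NOUNS
        for inner in sentences(depth - 1)
    ]
    return simple + embedded


# The count keeps growing as deeper embedding is allowed.
for depth in range(4):
    print(depth, len(sentences(depth)))
```

With five words and one rule, allowing three levels of embedding already gives several hundred distinct sentences, and nothing in the rule sets an upper limit on depth.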

phonemes  the elementary units of meaningful sound used to produce languages

However, the generative power of language carries a cost for young language learners: they must deal with its complexity. To appreciate the challenge presented to children learning their first language, imagine yourself as a stranger in a strange land. Someone walks up to you and says, “Jusczyk daxly blickets Nthlakapmx.” You would have absolutely no idea what this person had just said. Why?

phonological development  the acquisition of knowledge about the sound system of a language

First, you would probably have difficulty perceiving some of the phonemes that make up what the speaker is uttering. Phonemes are the units of sound in speech; a change in phoneme changes the meaning of a word. For example, “rake” and “lake” differ by only one phoneme (/r/ versus /l/), but the two words have quite different meanings to English speakers. Different languages employ different sets of phonemes; English uses just 45 of the roughly 200 sounds found across the world’s languages. The phonemes that distinguish meaning in any one language overlap with, but also differ from, those in other languages. For example, the sounds /r/ and /l/ are a single phoneme in Japanese, and do not carry different meanings. Furthermore, combinations of sounds that are common in one language may never occur in others. When you read the stranger’s utterance in the preceding paragraph, you probably had no idea how to pronounce the word Nthlakapmx, because some of its sound combinations do not occur in English (though they do occur in other languages). Thus, the first step in children’s language learning is phonological development: the mastery of the sound system of their language.

morphemes  the smallest units of meaning in a language, composed of one or more phonemes

Another reason you would not know what the stranger had said to you, even if you could have perceived the sounds being uttered, is that you would have had no idea what the sounds mean. The smallest units of meaning are called morphemes. Morphemes, alone or in combination, constitute words. The word dog, for example, contains one morpheme. The word dogs contains two morphemes, one designating a familiar furry entity (dog) and the second indicating the plural (-s). Thus, the second component in language acquisition is semantic development, that is, learning the system for expressing meaning in a language, including word learning.

semantic development  the learning of the system for expressing meaning in a language, including word learning

However, even if you were told the meaning of each individual word the stranger had used, you would still not understand the utterance unless you knew how words are put together in the stranger’s language. To express an idea of any complexity, we combine words into sentences, but only certain combinations are allowed in any given language. Syntax refers to the permissible combinations of words from different categories (nouns, verbs, adjectives, etc.). In English, for example, the order in which words can appear in a sentence is crucial: “Lila ate the lobster” does not mean the same thing as “The lobster ate Lila.” Other languages indicate which noun did the eating and which noun was eaten by adding morphemes like suffixes to the nouns. For example, a Russian noun ending in “a” is likely to refer to the entity doing the eating, while the same noun ending in “u” is likely to refer to the thing that was eaten. The third component in language learning, then, is syntactic development, that is, learning how words and morphemes are combined.

syntax  rules in a language that specify how words from different categories (nouns, verbs, adjectives, and so on) can be combined

Finally, a full understanding of the interaction with the stranger would necessitate having some knowledge of the cultural rules and contextual variations for using language. In some societies, for example, it would be quite bizarre to be addressed by a stranger in the first place, whereas in others it would be commonplace. You would also need to know how to go beyond the speaker’s specific words to understand what the speaker was really trying to communicate—to use factors such as the context and the speaker’s emotional tone to read between the lines and to learn how to hold a conversation. Acquiring an understanding of how language is typically used is referred to as pragmatic development.

syntactic development  the learning of the syntax of a language

Our example of the bewilderment one experiences when listening to an unfamiliar language is useful for delineating the components of language use. However, when we, as adults, hear someone speaking an unfamiliar language, we already know what a language is. We know that the sounds the person is uttering constitute words, that words are combined to form sentences, that only certain combinations are acceptable, and so on. In other words, in contrast with young language learners, adults have considerable metalinguistic knowledge—that is, knowledge about language and its properties.

pragmatic development  the acquisition of knowledge about how language is used

Thus, learning language involves phonological, semantic, syntactic, and pragmatic development, as well as metalinguistic knowledge. The same factors are involved in learning a sign language, in which the basic linguistic elements are gestures rather than sounds. There are more than 200 languages, including American Sign Language (ASL), that are based on gestures, both manual and facial. They are true languages and are as different from one another as spoken languages are. The course of acquisition of a sign language is remarkably similar to that of a spoken language.

What Is Required for Language?

metalinguistic knowledge  an understanding of the properties and function of language—that is, an understanding of language as language

What does it take to be able to learn a language in the first place? Full-fledged language is achieved only by humans, so, obviously, one requirement is the human brain. But a single human, isolated from all sources of linguistic experience, could never learn a language; hearing (or seeing) language is a crucial ingredient for successful language development.

A Human Brain

The key to full-fledged language development lies in the human brain. Language is a species-specific behavior: only humans acquire language in the normal course of development. Furthermore, it is species-universal: language learning is achieved by typically developing infants across the globe.

In contrast, no other animals naturally develop anything approaching the complexity or generativity of human language, even though they can communicate with one another. For example, birds claim territorial rights by singing (Marler, 1970), and vervet monkeys can reveal the presence and identity of predators through specific calls (Seyfarth & Cheney, 1993).

Researchers have had limited success in training nonhuman primates to use complex communicative systems. One early effort was an ambitious project in which a dedicated couple raised a chimpanzee (Vicki) with their own children (Hayes & Hayes, 1951). Although Vicki learned to comprehend some words and phrases, she produced virtually no recognizable words. Subsequent researchers attempted to teach nonhuman primates sign language. Washoe, a chimpanzee, and Koko, a gorilla, became famous for their ability to communicate with their human trainers and caretakers using manual signs (Gardner & Gardner, 1969; Patterson & Linden, 1981). Washoe could label a variety of objects and could make requests (“more fruit,” “please tickle”). The general consensus is that, however impressive Washoe’s and Koko’s “utterances” were, they do not qualify as language, because they contained little evidence of syntactic structure (Terrace et al., 1979; Wallman, 1992).

The most successful sign-learning nonhuman is Kanzi, a great ape of the bonobo species. Kanzi’s sign-learning began when he observed researchers trying to teach his mother to communicate with them by using a lexigram board, a panel composed of a few graphic symbols representing specific objects and actions (“give,” “eat,” “banana,” “hug,” and so forth) (Savage-Rumbaugh et al., 1993). Kanzi’s mother never caught on, but Kanzi did, and over the years his lexigram vocabulary increased from 6 words to more than 350. He is now very adept at using his lexigram board to answer questions, to make requests, and even to offer comments. He often combines signs, but whether they can be considered syntactically structured sentences is not clear.

There are also several well-documented cases of nonprimate animals that have learned to respond to spoken language. Kaminski, Call, and Fischer (2004) found that Rico, a border collie, knew more than 200 words and could learn and remember new words using the same kinds of processes that toddlers use. Alex, an African Grey parrot, learned to produce and understand basic English utterances, although his skills remained at a toddler level (Pepperberg, 1999).

Whatever the ultimate decision regarding the extent to which trained nonhuman animals should be credited with language, several things are clear. Even their most basic linguistic achievements come only after a great deal of concentrated human effort, whereas human children master the rudiments of their language with little explicit teaching. Furthermore, while the most advanced nonhuman communicators do combine symbols, their utterances show limited evidence of syntactic structure, which is a defining feature of language (Tomasello, 1994). In short, only the human brain acquires a communicative system with the complexity, structure, and generativity of language. Correspondingly, we humans are notoriously poor at learning the communicative systems of other species (Harry Potter’s ability to speak Parseltongue with snakes aside). There is an excellent match between the brains of animals of different species and their respective communicative systems.

Brain–language relations

A vast amount of research has examined the relationship between language and brain function. It is clear that language processing involves a substantial degree of functional localization. At the broadest level, there are hemispheric differences in language functioning that we discussed to some extent in Chapter 3. For the 90% of people who are right-handed, language is primarily represented and controlled by the left hemisphere.

Left-hemisphere specialization appears to emerge very early in life. Studies using neuroimaging techniques have demonstrated that newborns and 3-month-olds show greater activity in the left hemisphere when exposed to normal speech than when exposed to reversed speech or silence (Bortfeld, Fava, & Boas, 2009; Dehaene-Lambertz, Dehaene, & Hertz-Pannier, 2002; Pena et al., 2003). In addition, EEG studies show that infants exhibit greater left-hemisphere activity when listening to speech but greater right-hemisphere activity when listening to nonspeech sounds (Molfese & Betz, 1988). An exception to this pattern of lateralization occurs in the detection of pitch in speech, which in infants, as in adults, tends to involve the right hemisphere (Homae et al., 2006).

Although it is evident that the left hemisphere predominantly processes speech from birth, the reasons for this are not yet known. One possibility is that the left hemisphere is innately predisposed to process language but not other auditory stimuli. Another possibility is that speech is localized to the left hemisphere because of its acoustic properties. In this view, the auditory cortex in the left hemisphere is tuned to detect small differences in timing, whereas the auditory cortex in the right hemisphere is tuned to detect small differences in pitch (e.g., Zatorre et al., 1992; Zatorre & Belin, 2001; Zatorre, Belin, & Penhune, 2002). Because speech turns on small differences in timing (as you will see when we discuss voice onset time), it may be a more natural fit for the left hemisphere.

critical period for language  the time during which language develops readily and after which (sometime between age 5 and puberty) language acquisition is much more difficult and ultimately less successful

Critical period for language development

If you were to survey your classmates who have studied another language, we predict you would discover that those who learned a foreign language in adolescence found the task to be much more challenging than did those who learned the foreign language in early childhood. A considerable body of evidence suggests that, in fact, the early years constitute a critical period during which language develops readily. After this period (which ends sometime between age 5 and puberty), language acquisition is much more difficult and ultimately less successful.

Relevant to this hypothesis, there are several reports of children who barely developed language at all after being deprived of early linguistic experience. The most famous case in modern times is Genie, who was discovered in appalling conditions in Los Angeles in 1970. From the age of approximately 18 months until she was rescued at age 13, Genie’s parents kept her tied up and locked alone in a room. During her imprisonment, no one spoke to her; when her father brought her food, he growled at her like an animal. At the time of her rescue, Genie’s development was stunted—physically, motorically, and emotionally—and she could barely speak. With intensive training, she made some progress, but her language ability never developed much beyond the level of a toddler’s: “Father take piece wood. Hit. Cry” (Curtiss, 1977, 1989; Rymer, 1993).

Does this extraordinary case support the critical-period hypothesis? Possibly, but it is difficult to know for sure. Genie’s failure to develop full, rich language after her discovery might have resulted as much from the bizarre and inhumane treatment she suffered as from linguistic deprivation.

Other areas of research provide much stronger evidence for the critical-period hypothesis. As noted in Chapter 3, adults, who are well beyond the critical period, are more likely to suffer permanent language impairment from brain damage than are children, presumably because other areas of the young brain (but not the older brain) are able to take over language functions (see M. H. Johnson, 1998). Moreover, adults who learned a second language after puberty use different neural mechanisms to process that language than do adults who learned their second language from infancy (e.g., Kim et al., 1997; Pakulak & Neville, 2011). These results strongly suggest that the neural circuitry supporting language learning operates differently (and better) during the early years.

In an important behavioral study, Johnson and Newport (1989) tested the English proficiency of Chinese and Korean immigrants to the United States who had begun learning English either as children or as adults. The results, shown in Figure 6.1, reveal that knowledge of key aspects of English grammar was related to the age at which these individuals began learning English, but not to the length of their exposure to the language. The most proficient were those who had begun learning English before the age of 7.

A similar pattern has been described for first-language acquisition in the Deaf community: individuals who acquired ASL as a first language when they were children become more proficient signers than do individuals who acquired ASL as a first language as teens or adults (Newport, 1990). Johnson and Newport also observed a great deal of variability among “late learners”—those who were acquiring a second language, or a sign language as their first formal language, at puberty or beyond. As in the findings we predicted for your survey of classmates, some individuals achieved nativelike skills, whereas the language outcomes for others were quite poor. For reasons that are still unknown, some individuals continue to be talented language learners even after puberty, while most do not.

Newport (1990) proposed an intriguing hypothesis to explain these results and, more generally, to explain why children are usually better language learners than adults. According to her “less is more” hypothesis, perceptual and memory limitations cause young children to extract and store smaller chunks of the language than adults do. Because the crucial building blocks of language (the meaning-carrying morphemes) tend to be quite small, young learners’ limited cognitive abilities may actually facilitate the task of analyzing and learning language.

The evidence for a critical period in language acquisition has some very clear practical implications. For one thing, deaf children should be exposed to sign language as early as possible. For another, foreign-language exposure at school, discussed in Box 6.1, should begin in the early grades in order to maximize children’s opportunity to achieve native-level skills in a second language.

A Human Environment

Possession of a human brain is not enough for language to develop. Children must also be exposed to other people using language—any language, signed or spoken. Adequate experience hearing others talk is readily available in the environment of almost all children around the world. Much of the speech directed to infants occurs in the context of daily routines—during thousands of mealtimes, diaper changes, baths, and bedtimes, as well as in countless games like peekaboo and nursery rhymes like the “Itsy Bitsy Spider.”

Box 6.1: applications

Two Languages Are Better Than One

bilingualism  the ability to use two languages

The topic of bilingualism, the ability to use two languages, has attracted substantial attention in recent years as increasing numbers of children are developing bilingually. Indeed, almost half the children in the world are regularly exposed to more than one language, often at home with parents who speak different languages. Remarkably, despite the fact that bilingual children have twice as much to learn as monolingual children, they show little confusion and language delay. In fact, there is evidence to suggest that being bilingual improves aspects of cognitive functioning in childhood and beyond.

Bilingual learning can begin in the womb. Newborns prenatally exposed to just their native language prefer it over other languages, whereas newborns whose mothers spoke two languages during pregnancy prefer both languages equally (Byers-Heinlein, Burns, & Werker, 2010). Bilingual infants are able to discriminate the speech sounds of their two languages at roughly the same pace that monolingual infants distinguish the sounds of their one language (e.g., Albareda-Castellot, Pons, & Sebastián-Gallés, 2011; Sundara, Polka, & Molnar, 2008). How might this be, given that bilingual infants have twice as much to learn? One possibility is that bilinguals’ attention to speech cues is heightened relative to that of monolinguals. For example, bilingual infants are better than monolingual infants at using purely visual information (a silent talking face) to discriminate between unfamiliar languages (Sebastián-Gallés et al., 2012).

For the most part, children who are acquiring two languages do not seem to confuse them; indeed, they appear to build two separate linguistic systems. When language mixing does occur, it usually reflects a gap of knowledge in one language that the child is trying to fill in with the other, rather than a confusing of the two language systems (e.g., Deuchar & Quay, 1999; Paradis, Nicoladis, & Genesee, 2000).

Children developing bilingually may appear to lag behind slightly in each of their languages because their vocabulary is distributed across two languages (Oller & Pearson, 2002). That is, a bilingual child may know how to express some concepts in one language but not the other. However, both the course and the rate of language development are generally very similar for bilingual and monolingual children (Genesee & Nicoladis, 2006). And as we have noted, there are cognitive benefits to bilingualism: children who are competent in two languages perform better on a variety of measures of executive function and cognitive control than do monolingual children (Bialystok & Craik, 2010; Costa, Hernandez, & Sebastián-Gallés, 2008). Recent results reveal similar effects for bilingual toddlers (Poulin-Dubois et al., 2011). Even bilingual infants appear to show greater cognitive flexibility in learning tasks (Kovács & Mehler, 2009a, b). The link between bilingualism and improved cognitive flexibility likely lies in the fact that bilingual individuals have had to learn to rapidly switch between languages, both in comprehension and production.
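The point about vocabulary being distributed across two languages can be made concrete with a minimal sketch. The word lists below are hypothetical and invented purely for illustration; each set holds the concepts a child can express in one language (named in English here for readability). Counted one language at a time the child appears to know fewer words, but the number of concepts expressible in at least one language is larger.

```python
# Hypothetical example data: concepts a bilingual child can express
# in each language (all names are illustrative assumptions).
english_concepts = {"dog", "milk", "ball", "shoe"}
spanish_concepts = {"dog", "milk", "moon", "water", "bread"}

# Concepts the child can express in at least one language.
either_language = english_concepts | spanish_concepts

# Concepts the child can express in both languages.
both_languages = english_concepts & spanish_concepts

print("English only count:", len(english_concepts))
print("Spanish only count:", len(spanish_concepts))
print("Expressible in at least one language:", len(either_language))
print("Expressible in both languages:", len(both_languages))
```

Comparing either single-language count with a monolingual child's vocabulary would understate what this hypothetical child knows, which is the sense in which bilingual children may only appear to lag.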

More difficult issues arise with respect to second-language learning in the school setting. Some countries with large but distinct language communities, like Canada, have embraced bilingual education. Others, including the United States, have not. The debate over bilingual education in the United States is tied up with a host of political, ethnic, and racial issues. One side of this debate advocates total immersion, in which children are communicated with and taught exclusively in English, the goal being to help them become proficient in English as quickly as possible. The other side recommends an approach that initially provides children with instruction in basic subjects in their native language and gradually increases the amount of instruction provided in English (Castro et al., 2011).

In support of the latter view, there is evidence that (1) children often fail to master basic subject matter when it is taught in a language they do not fully understand; and (2) when both languages are integrated in the classroom, children learn the second language more readily, participate more actively, and are less frustrated and bored (August & Hakuta, 1998; Crawford, 1997; Hakuta, 1999). This approach also helps prevent situations where children might become less proficient in their original language as a result of being taught a second one in school.

Infants identify speech as something important very early. When given the choice, newborns prefer listening to speech rather than to artificial sounds (Vouloumanos et al., 2010). Intriguingly, newborns also prefer nonhuman primate (rhesus macaque) vocalizations to nonspeech sounds, and show no preference for speech over macaque vocalizations until 3 months of age (Vouloumanos et al., 2010). These results suggest that infants’ auditory preferences are fine-tuned through experience with human language during their earliest months.

infant-directed speech (IDS)  the distinctive mode of speech that adults adopt when talking to babies and very young children

Infant-directed speech

Imagine yourself on a bus listening to a stranger who is seated behind you and speaking to someone. Could you guess whether the stranger was addressing an infant or an adult? We have no doubt that you could, even if the stranger was speaking an unfamiliar language. The reason is that in virtually all cultures, adults adopt a distinctive mode of speech when talking to babies and very young children. This special way of speaking was originally dubbed “motherese” (Newport, Gleitman, & Gleitman, 1977). The current term, infant-directed speech (IDS), recognizes the fact that this style of speech is used by both males and females, including parents and nonparents alike. Indeed, even young children adopt it when talking to babies (Shatz & Gelman, 1973).

CHARACTERISTICS OF INFANT-DIRECTED SPEECH

The most obvious quality of IDS is its emotional tone. It is speech suffused with affection: “the sweet music of the species,” as Darwin (1877) put it. Another obvious characteristic of IDS is exaggeration. When people speak to babies, their speech is slower, and their voice is often higher pitched, than when they speak to adults, and they swoop abruptly from high pitches to low pitches and back again. Even their vowels are clearer (Kuhl et al., 1997). All this exaggerated speech is accompanied by exaggerated facial expressions. Many of these characteristics have been noted in adults speaking such languages as Arabic, French, Italian, Japanese, Mandarin Chinese, and Spanish (see de Boysson-Bardies, 1996/1999), as well as in deaf mothers signing to their infants (Masataka, 1992).

Beyond expressing emotional tone, caregivers can use various pitch patterns of IDS to communicate important information to infants even when infants don’t know the meaning of the words uttered. For example, a word uttered with sharply falling intonation tells the baby that their caregiver disapproves of something, whereas a cooed warm sound indicates approval. These pitch patterns serve the same function in language communities ranging from English and Italian to Japanese (Fernald et al., 1989). Interestingly, infants exhibit appropriate facial emotion when listening to these pitch patterns, even when the language is unfamiliar (Fernald, 1993).

IDS also seems to aid infants’ language development. To begin with, it draws infants’ attention to speech itself. Indeed, infants prefer IDS to adult-directed speech (Cooper & Aslin, 1994; Pegg, Werker, & McLeod, 1992), even when it is in a language other than their own. For example, both Chinese and American infants listened longer to a recording of a Cantonese-speaking woman talking to a baby in IDS than to the same woman speaking normally to an adult friend (Werker, Pegg, & McLeod, 1994). Some studies suggest that infants’ preference for IDS may emerge because it is “happy speech”; when speakers’ affect is held constant, the preference disappears (Singh, Morgan, & Best, 2002). Perhaps because they pay greater attention to IDS, infants learn and recognize words better when the words are presented in IDS than when they are presented in adult-directed speech (Ma et al., 2011; Singh et al., 2009; Thiessen, Hill, & Saffran, 2005).

Although IDS is very common throughout the world, it is not universal. In some cultures, such as the Kwara’ae of the Solomon Islands, the Ifaluk of Micronesia, and the Kaluli of Papua New Guinea, it is believed that because infants cannot understand what is said to them, there is no reason for caregivers to speak to them (Le, 2000; Schieffelin & Ochs, 1987; Watson-Gegeo & Gegeo, 1986). For example, young Kaluli infants are carried facing outward so that they can engage with other members of the group (but not with their caregiver), and if they are spoken to by older siblings, the mother will speak for them (Schieffelin & Ochs, 1987). Thus, even if they are not addressed directly by their caregivers, these infants are still immersed in language.

That infants begin life equipped with the two basic necessities for acquiring language—a human brain and a human environment—is, of course, only the beginning of the story. Of all the things we learn as humans, languages are arguably the most complex; so complex, in fact, that scientists have yet to be able to program computer systems to acquire a human language. The overwhelming complexity of language is further reflected in the difficulty most people have in learning a new language after puberty. How, then, do infants and young children manage to acquire their native language with such astounding success? We turn now to the many steps through which that remarkable accomplishment proceeds.

The Process of Language Acquisition

Acquiring a language involves listening and speaking (or watching and signing) and requires both comprehending what other people communicate and producing intelligible speech (or signs). Infants start out paying attention to what people say or sign, and they know a great deal about language long before their first linguistic productions.

Speech Perception

prosody  the characteristic rhythm, tempo, cadence, melody, intonational patterns, and so forth with which a language is spoken

The first step in language learning is figuring out the sounds of one’s native language. As you saw in Chapter 2, the task usually begins in the womb, as fetuses develop a preference for their mother’s voice and the language they hear her speak. The basis for this very early learning is prosody, the characteristic rhythmic and intonation patterns with which a language is spoken. Differences in prosody are in large part responsible for why languages—from Japanese to French to Swahili—sound so different from one another.

Speech perception also involves distinguishing among the speech sounds that make a difference in a given language. To learn English, for example, one must distinguish between bat and pat, dill and kill, Ben and bed. As you will see next, young infants do not have to learn to hear these differences: they perceive many speech sounds in very much the same way that adults do.

categorical perception  the perception of speech sounds as belonging to discrete categories

Categorical perception of speech sounds Both adults and infants perceive speech sounds as belonging to discrete categories. This phenomenon, referred to as categorical perception, has been established by studying people’s response to speech sounds. In this research, a speech synthesizer is used to gradually and continuously change one speech sound, such as /b/, into a related one, such as /p/. These two phonemes are on an acoustic continuum; they are produced in exactly the same way, except for one crucial difference—the length of time between when air passes through the lips and when the vocal cords start vibrating. This lag, referred to as voice onset time (VOT), is shorter for /b/ (less than 25 milliseconds [ms]) than for /p/ (greater than 25 ms). (Try saying “ba” and “pa” alternately several times, with your hand on your throat, and you will likely experience this difference in VOT.)

voice onset time (VOT)  the length of time between when air passes through the lips and when the vocal cords start vibrating

To study the perception of VOT, researchers create recordings of speech sounds that vary along this VOT continuum, so that each successive sound is slightly different from the one before, with /b/ gradually changing into /p/. What is surprising is that adult listeners do not perceive this series of sounds as changing continuously (Figure 6.2). Instead, they hear /b/ repeated several times and then hear an abrupt switch to /p/. All the sounds in this continuum that have a VOT of less than 25 ms are perceived as /b/, and all those that have a VOT greater than 25 ms are perceived as /p/. Thus, adults automatically divide the continuous signal into two discontinuous categories—/b/ and /p/. This perception of a continuum as two categories is a very useful perceptual ability because it allows one to pay attention to sound differences that are meaningful in one’s native language, such as, in English, the difference between /b/ and /p/, while allowing meaningless differences, such as the difference between a /b/ with a 10 ms VOT versus a /b/ with a 20 ms VOT, to be ignored.
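
The idea of a continuous signal being heard as two categories can be illustrated with a small sketch. It is a deliberately idealized model, not a description of how perception is actually computed: it assumes a perfectly sharp boundary at 25 ms, and the function names are hypothetical, introduced only for illustration. Real listeners respond less consistently to sounds close to the boundary.

```python
# Idealized sketch of categorical perception along the VOT continuum.
# The 25 ms boundary comes from the text; the sharp step is an assumption.
VOT_BOUNDARY_MS = 25


def perceived_category(vot_ms):
    """Return the phoneme an idealized listener reports for a given VOT."""
    return "/b/" if vot_ms < VOT_BOUNDARY_MS else "/p/"


def heard_as_different(vot_a_ms, vot_b_ms):
    """Two sounds are told apart only if they fall in different categories."""
    return perceived_category(vot_a_ms) != perceived_category(vot_b_ms)


# A continuum in equal 10 ms steps (avoiding the boundary value itself).
continuum = [0, 10, 20, 30, 40, 50]
labels = [perceived_category(v) for v in continuum]
print(list(zip(continuum, labels)))

# Same 10 ms physical difference, different perceptual outcome.
print(heard_as_different(10, 20))  # within /b/
print(heard_as_different(20, 30))  # across the boundary
```

In this toy model, the 10 ms and 20 ms sounds receive the same label, whereas the 20 ms and 30 ms sounds do not, even though each pair differs by the same amount of VOT.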

Young infants draw the same sharp distinctions between speech sounds. This remarkable fact was established using the habituation technique familiar to you from previous chapters. In the original, classic study (one of the 100 most frequently cited studies in psychology), 1- and 4-month-olds sucked on a pacifier hooked up to a computer (Eimas et al., 1971). The harder they sucked, the more often they’d hear repetitions of a single speech sound. After hearing the same sound repeatedly, the babies gradually sucked less enthusiastically (habituation). Then a new sound was played. If the infants’ sucking rate increased in response to the new sound, the researchers inferred that the infants discriminated the new sound from the old one (dishabituation).

The crucial factor in this study was the relation between the new and old sounds—specifically, whether they were from the same or different phonemic categories. For one group of infants, the new sound was from a different category; thus, after habituation to a series of sounds that adults perceive as /b/, sucking now produced a sound that adults identify as /p/. For the second group, the new sound was within the same category as the old one (i.e., adults perceive them both as /b/). A critical feature of the study is that for both groups, the new and old sounds differed equally in terms of VOT.

As Figure 6.3 shows, after habituating to /b/, the infants increased their rate of sucking when the new sound came from a different phonemic category (/p/ instead of /b/). Habituation continued, however, when the new sound was within the same category as the original one. Since this classic study, researchers have established that infants show categorical perception of numerous speech sounds (Aslin, Jusczyk, & Pisoni, 1998).

A fascinating outcome of this research is the discovery that young infants actually make more distinctions than adults do. This rather surprising phenomenon occurs because any given language uses only a subset of the large variety of phonemic categories that exist. As noted earlier, the sounds /r/ and /l/ make a difference in English, but not in Japanese. Similarly, speakers of Arabic, but not of English, perceive a difference between the /k/ sounds in “keep” and “cool.” Adults simply do not perceive differences in speech sounds that are not important in their native language, which partly accounts for why it is so difficult for adults to become fluent in a second language.

In contrast, infants can distinguish between phonemic contrasts made in all the languages of the world—about 600 consonants and 200 vowels. For example, Kikuyu infants in Africa are just as good as American babies at discriminating English contrasts not found in Kikuyu (Streeter, 1976). Studies done with infants from English-speaking homes have shown that they can discriminate non-English distinctions made in languages ranging from German and Spanish to Thai, Hindi, and Zulu (Jusczyk, 1997).

This research reveals an ability that is innate, in the sense that it is present at birth, and experience-independent, because infants can discriminate between speech sounds they have never heard before. Being born able to distinguish speech sounds of any language is enormously helpful to infants, priming them to start learning whichever of the world’s languages they hear around them. Indeed, the crucial role of early speech perception is reflected in a positive correlation between infants’ speech-perception skills and their later language skills. Babies who were better at detecting differences between speech sounds at 6 months scored higher on measures of vocabulary and grammar at 13 to 24 months of age (Tsao, Liu, & Kuhl, 2004).

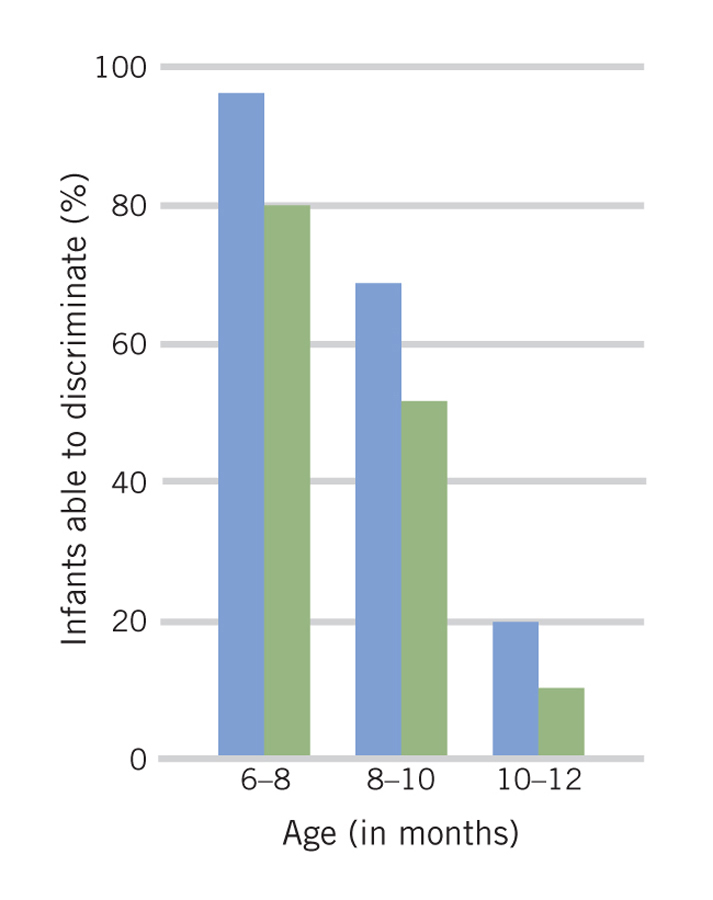

Developmental changes in speech perception During the last months of their first year, infants increasingly home in on the speech sounds of their native language, and by 12 months of age, they have “lost” the ability to perceive the speech sounds that are not part of it. In other words, their speech perception has become adultlike. This shift was first demonstrated by Werker and her colleagues (Werker, 1989; Werker & Lalonde, 1988; Werker & Tees, 1984), who studied infants ranging in age from 6 to 12 months. The infants, all from English-speaking homes, were tested on their ability to discriminate speech contrasts that are not used in English but that are important in two other languages—Hindi and Nthlakapmx (a language spoken by indigenous First Nations people in the Canadian Pacific Northwest). The researchers used a simple conditioning procedure, shown in Figure 6.4. The infants learned that if they turned their head toward the sound source when they heard a change in the sounds they were listening to, they would be rewarded by an interesting visual display. If the infants turned their heads in the correct direction immediately following a sound change, the researchers inferred that they had detected the change.

Figure 6.5 shows that at 6 to 8 months of age, English-learning infants readily discriminated between the sounds they heard; they could tell one Hindi syllable from another, and they could also distinguish between two sounds in Nthlakapmx. At 10 to 12 months of age, however, the infants no longer perceived the differences they had detected a few months before. Two Hindi syllables that had previously sounded different to them now sounded the same. Other research indicates that a similar change occurs slightly earlier for the discrimination of vowels (Kuhl et al., 1992; Polka & Werker, 1994). Interestingly, this perceptual narrowing does not appear to be an entirely passive process. Kuhl, Tsao, and Liu (2003) found that infants learned more about the phonetic structure of Mandarin from a live interaction with a Mandarin speaker than from watching a videotape of one.

Is this process of perceptual narrowing limited to speech? To answer this question, a recent study asked whether this narrowing process also occurs in ASL (Palmer et al., 2012). The researchers began by determining whether infants who had never been exposed to ASL were able to discriminate between highly similar ASL signs that are differentiated by the shape of the hand. They found that 4-month-olds could, in fact, discriminate between the signs. However, by 14 months of age, only infants who were learning ASL were able to detect the difference between the hand shapes; those who were not learning ASL had lost their ability to make this perceptual discrimination. Perceptual narrowing is thus not limited to speech. Indeed, this narrowing process may be quite broad; recall the discussion in Chapter 5 of perceptual narrowing in the domains of face perception and musical rhythm.

Thus, after the age of 8 months or so, infants begin to specialize in their discrimination of speech sounds, retaining their sensitivity to sounds in the native language they hear every day, while becoming increasingly less sensitive to nonnative speech sounds. Indeed, becoming a native listener is one of the greatest accomplishments of the infant’s first year of postnatal life.

Word Segmentation

word segmentation  the process of discovering where words begin and end in fluent speech

As infants begin to tune in to the speech sounds of their language, they also begin to discover another crucial feature of the speech surrounding them: words. This is no easy feat. Unlike the words typed on this page, there are no spaces between words in speech, even in IDS. What this means is that most utterances infants hear are strings of words without pauses between them, like “Lookattheprettybaby! Haveyoueverseensuchaprettybabybefore?” They then have to figure out where the words start and end. Remarkably, they begin the process of word segmentation during the second half of the first year.

In the first demonstration of infant word segmentation, Jusczyk & Aslin (1995) used a head-turn procedure designed to assess infants’ auditory preferences. In this study, 7-month-olds first listened to passages of speech in which a particular word was repeated from sentence to sentence—for example, “The cup was bright and shiny. A clown drank from the red cup. His cup was filled with milk.” After listening to these sentences several times, infants were tested using the head-turn preference procedure to see whether they recognized the words repeated in the sentences. In this method, flashing lights mounted near two loudspeakers located on either side of an infant are used to draw the infant’s attention to one side or the other. As soon as the infant turns to look at the light, an auditory stimulus is played through the speaker, and it continues as long as the infant is looking in that direction. The length of time the infant spends looking at the light—and hence listening to the sound—provides a measure of the degree to which the infant is attracted to that sound.

Infants in this study were tested on repetitions of words that had been presented in the sentences (such as cup) or words that had not (such as bike). The researchers found that infants listened longer to words that they had heard in the passages of fluent speech, as compared with words that never occurred in the passages. This result indicates that the infants were able to pull the words out of the stream of speech—a task so difficult that even sophisticated speech-recognition software often fails at it.

How do infants find words in pause-free speech? They appear to be remarkably good at picking up regularities in their native language that help them to find word boundaries. One example is stress patterning, an element of prosody. In English, the first syllable in two-syllable words is much more likely to be stressed than the second syllable (as in “English,” “often,” and “second”). By 8 months of age, English-learning infants expect stressed syllables to begin words and can use this information to pull words out of fluent speech (Curtin, Mintz, & Christiansen, 2005; Johnson & Jusczyk, 2001; Jusczyk, Houston, & Newsome, 1999; Thiessen & Saffran, 2003).

distributional properties  the phenomenon that in any language, certain sounds are more likely to appear together than are others

Another regularity to which infants are surprisingly sensitive concerns the distributional properties of the speech they hear. In every language, certain sounds are more likely to appear together than are others. Sensitivity to such regularities in the speech stream was demonstrated in a series of statistical-learning experiments in which babies learned new words based purely on regularities in how often a given sound followed another (Aslin, Saffran, & Newport, 1998; Saffran, Aslin, & Newport, 1996). The infants listened to a 2-minute recording of four different three-syllable “words” (e.g., tupiro, golabu, bidaku, padoti) repeated in random order with no pauses between the “words.” Then, on a series of test trials, the babies were presented with the “words” they had heard (e.g., bidaku, padoti) and with sequences that were not words (such as syllable sequences that spanned a word boundary—for example, kupado, made up from the end of bidaku and the beginning of padoti).

Using the same kind of preferential listening test described above for the Jusczyk & Aslin (1995) study, the researchers found that infants discriminated between the words and the sequences that were not words. To do so, the babies must have registered that certain syllables often occurred together in the sample of speech they heard. For example, “bi” was always followed by “da” and “da” was always followed by “ku,” whereas “ku” could be followed by “tu,” “go,” or “pa.” Thus, the infants used recurrent sound patterns to fish words out of the passing stream of speech. This ability to learn from distributional properties extends to real languages as well; English-learning infants, for example, can track similar statistical patterns when listening to Italian IDS (Pelucchi, Hay, & Saffran, 2009).
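
The regularity described here can be made concrete by counting how often one syllable follows another. The sketch below is only an illustration of that counting, not the researchers' procedure or a claim about what infants compute: the stream length, the random seed, and the function names are assumptions made for the example, and the "words" are split into two-letter syllables purely for convenience.

```python
import random
from collections import Counter

# The four made-up "words" from the text.
WORDS = ["tupiro", "golabu", "bidaku", "padoti"]


def syllables(word):
    """Split a six-letter 'word' into three two-letter syllables."""
    return [word[i:i + 2] for i in range(0, len(word), 2)]


def make_stream(n_words, seed=0):
    """Build a pause-free syllable stream; no word repeats back to back."""
    rng = random.Random(seed)
    stream, previous = [], None
    for _ in range(n_words):
        word = rng.choice([w for w in WORDS if w != previous])
        stream.extend(syllables(word))
        previous = word
    return stream


def transitional_probabilities(stream):
    """Estimate P(next syllable | current syllable) from adjacent pairs."""
    pair_counts = Counter(zip(stream, stream[1:]))
    first_counts = Counter(stream[:-1])
    return {pair: n / first_counts[pair[0]] for pair, n in pair_counts.items()}


stream = make_stream(300)  # 300 words is an arbitrary, assumed length
tp = transitional_probabilities(stream)

# Within a word, the next syllable is fully predictable.
print(tp[("bi", "da")], tp[("da", "ku")])
# Across a word boundary, several continuations are possible.
print(tp.get(("ku", "pa"), 0.0))
```

Syllable pairs inside a word have a transitional probability of 1.0 in this artificial stream, whereas pairs that span a word boundary, such as “ku” followed by “pa,” are much less predictable. A dip in predictability is therefore a usable cue to where one word ends and the next begins.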

Identifying these regularities in speech sounds supports the learning of words. After repeatedly hearing novel “words” such as timay and dobu embedded in a long stream of speech sounds, 17-month-olds readily learned those sounds as labels for objects (Graf Estes et al., 2007). Similarly, after hearing Italian words like mela and bici embedded in fluent Italian speech, 17-month-olds who had no prior exposure to Italian readily mapped those labels to objects (Hay et al., 2011). Having already learned the sound sequences that made up the words apparently made it easier for the infants to associate the words with their referents.

Probably the most salient regularity for infants is their own name. Infants as young as 4½ months will listen longer to repetitions of their own name than to repetitions of a different but similar name (Mandel, Jusczyk, & Pisoni, 1995). Just a few weeks later, they can pick their own name out of background conversations (Newman, 2005). This ability helps them to find new words in the speech stream. After hearing “It’s Jerry’s cup!” a number of times, 6-month-old Jerry is more likely to learn the word cup than if he had not heard it right after his name (Bortfeld et al., 2005). Over time, infants recognize more and more familiar words, making it easier to pluck new ones out of the speech that they hear.

Infants are exceptional in their ability to identify patterns in the speech surrounding them. They start out with the ability to make crucial distinctions among speech sounds but then narrow their focus to the sounds and sound patterns that make a difference in their native language. This process lays the groundwork for their becoming not just native listeners but also native speakers.

Preparation for Production

In their first months, babies are getting ready to talk. The repertoire of sounds they can produce is initially extremely limited. They cry, sneeze, sigh, burp, and smack their lips, but their vocal tract is not sufficiently developed to allow them to produce anything like real speech sounds. Then, at around 6 to 8 weeks of age, infants begin to coo—producing long, drawn-out vowel sounds, such as “ooohh” or “aaahh.” Young infants entertain themselves with vocal gymnastics, switching from low grunts to high-pitched cries, from soft murmurs to loud shouts. They click, smack, blow raspberries, squeal, all with apparent fascination and delight. Through this practice, infants gain motor control over their vocalizations.

While their sound repertoire is expanding, infants become increasingly aware that their vocalizations elicit responses from others, and they begin to engage in dialogues of reciprocal ooohing and aaahing, cooing and gooing with their parents. With improvement in their motor control of vocalization, they imitate the sounds of their “conversational” partners, even producing higher-pitched sounds when interacting with their mothers and lower-pitched sounds when interacting with their fathers (de Boysson-Bardies, 1996/1999).

babbling  repetitive consonant–vowel sequences (“bababa…”) or hand movements (for learners of signed languages) produced during the early phases of language development

Babbling Sometime between 6 and 10 months of age, but on average at around 7 months, a major milestone occurs: babies begin to babble. Standard babbling involves producing syllables made up of a consonant followed by a vowel (“pa,” “ba,” “ma”) that are repeated in strings (“papapa”). Contrary to the long-held belief that infants babble a wide range of sounds from their own and other languages (Jakobson, 1941/1968), research has revealed that babies actually babble a fairly limited set of sounds, some of which are not part of their native language (de Boysson-Bardies, 1996/1999).

Native language exposure is a key component in the development of babbling. Although congenitally deaf infants produce vocalizations similar to those of hearing babies until around 5 or 6 months of age, their vocal babbling occurs very late and is quite limited (Oller & Eilers, 1988). However, some congenitally deaf babies do “babble” right on schedule—those who are regularly exposed to sign language. Infants exposed to ASL babble manually. They produce repetitive hand movements that are components of full ASL signs, just as vocally babbled sounds are repeated components of spoken words (Petitto & Marentette, 1991). Thus, like infants learning a spoken language, infants learning signed languages seem to experiment with the elements that are combined to make meaningful words in their native language (Figure 6.6).

As their babbling becomes more varied, it gradually takes on the sounds, rhythm, and intonational patterns of the language infants hear daily. In a simple but clever experiment, French adults listened to the babbling of a French 8-month-old and an 8-month-old from either an Arabic- or Cantonese-speaking family. When asked to identify which baby was the French one in each pair, the adults chose correctly 70% of the time (de Boysson-Bardies, Sagart, & Durand, 1984). Thus, before infants utter their first meaningful words, they are, in a sense, native speakers of a language.

Early interactions Before we turn to the next big step in language production—uttering recognizable words—it is important to consider the social context that promotes language development in most societies. Even before infants start speaking, they display the beginnings of communicative competence: the ability to communicate intentionally with another person.

The first indication of communicative competence is turn-taking. In a conversation, mature participants alternate between speaking and listening. Jerome Bruner and his colleagues (Bruner, 1977; Ratner & Bruner, 1978) have proposed that learning to take turns in social interactions is facilitated by parent–infant games, such as peekaboo and “give and take,” in which caregiver and baby take turns giving and receiving objects. In these “dialogues,” the infant has the opportunity to alternate between an active and a passive role, as in a conversation in which one alternates between speaking and listening. These early interactions give infants practice in bidirectional communication, providing infants with a scaffold to learn how to use language to converse with others. Indeed, recent research suggests that caregivers’ responses to infant babbling may serve a similar function. When an adult labels an object for an infant just after the infant babbles, the infant learns the label better than when the labeling occurs in the absence of babbling (Goldstein et al., 2010). The results of this study suggest that babbling may serve as a signal to the caregiver that the infant is attentive and ready to learn. This early back-and-forth may also provide infants with practice in conversational turn-taking.

As discussed in Chapter 4, successful communication also requires intersubjectivity, in which two interacting partners share a mutual understanding. The foundation of intersubjectivity is joint attention, which, early on, is established by the parent’s following the baby’s lead, looking at and commenting on whatever the infant is looking at. By 12 months of age, infants have begun to understand the communicative nature of pointing, with many also being capable of meaningful pointing themselves (Behne et al., 2012).

We have thus seen that infants spend a good deal of time getting ready to talk. Through babbling, they gain some initial level of control over the production of sounds that are necessary to produce recognizable words. As they do so, they already begin to sound like their parents. Through early interactions with their parents, they develop interactive routines similar to those required in the use of language for communication. We will now turn our attention to the processes that lead to infants’ first real linguistic productions: words.

First Words

When babies first begin to segment words from fluent speech, they are simply recognizing familiar patterns of sounds without attaching any meaning to them. But then, in a major revolution, they begin to recognize that words have meaning.

reference  in language and speech, the associating of words and meaning

The problem of reference The first step for infants in acquiring the meanings of words is to address the problem of reference, that is, to start associating words and meaning. Figuring out which of the multitude of possible referents is the right one for a particular word is, as the philosopher Willard Quine (1960) pointed out, a very complex problem. If a child hears someone say “bunny” in the presence of a rabbit, how does the child know whether this new word refers to the rabbit itself, to its fuzzy tail, to the whiskers on the right side of its nose, or to the twitching of its nose? That the problem of reference is a real problem is illustrated by the case of a toddler who thought “Phew!” was a greeting, because it was the first thing her mother said on entering the child’s room every morning (Ferrier, 1978).

Early Word Recognition

Infants begin associating highly familiar words with their highly familiar referents surprisingly early on. When 6-month-olds hear either “Mommy” or “Daddy,” they look toward the appropriate person (Tincoff & Jusczyk, 1999). Infants gradually come to understand the meaning of less frequently heard words, with the pace of their vocabulary-building varying greatly from one child to another. Remarkably, parents are often unaware of just how many words their infants recognize. Using a computer monitor, Bergelson and Swingley (2012) showed infants pairs of pictures of common foods and body parts and tracked the infants’ eye gaze when one of the pictures was named. They found that even 6-month-olds looked to the correct picture significantly more often than would be expected by chance, demonstrating that they recognized the names of these items. Strikingly, most of their parents reported that the infants did not know the meanings of these words. So not only do infants understand far more words than they can produce; they also understand far more words than even their caregivers realize.

One of the remarkable features of infants’ early word recognition is how rapidly they understand what they are hearing. To illuminate the age-related dynamics of this understanding, Fernald and her colleagues presented infants with images depicting pairs of familiar objects, such as a dog and a baby, and observed how quickly the infants moved their eyes to the correct object after hearing its label used (e.g., “Where’s the baby?”). The researchers found that whereas 15-month-olds waited until they had heard the whole word to look at the target object, 24-month-olds looked at the correct object after hearing only the first part of its label, just as adults do (Fernald et al., 1998; Fernald, Perfors, & Marchman, 2006; Fernald, Swingley, & Pinto, 2001). Older infants can also use context to help them recognize words. For example, those who are learning a language that has a grammatical gender system (like Spanish or French) can use the gender of the article preceding the noun (la versus el in Spanish; la versus le in French) to speed their recognition of the noun itself (Lew-Williams & Fernald, 2007; Van Heugten & Shi, 2009). Other visual-fixation research has shown that older infants can even recognize familiar words when they are mispronounced (e.g., “vaby” for “baby,” “gall” for “ball,” “tog” for “dog,” etc.), though their recognition is slower than when they hear the words pronounced correctly (Swingley & Aslin, 2000).

Early word production Gradually, infants begin to say some of the words they understand, with most producing their first words between 10 and 15 months of age. The words a child is able to say are referred to as the child’s productive vocabulary.

What counts as an infant’s “first word”? It can be any specific utterance consistently used to refer to something or to express something. Even with this loose criterion, identification of an infant’s earliest few words can be problematic. For one thing, doting parents often misconstrue their child’s babbling as words. For another, early words may differ from their corresponding adult forms. For example, Woof was one of the first words spoken by the boy whose linguistic progress was illustrated at the beginning of this chapter. It was used to refer to the dog next door—both to excitedly name the animal when it appeared in the neighbors’ yard and to wistfully request the dog’s presence when it was absent.

Infants’ early word productions are limited by their ability to pronounce words clearly enough that an adult can recognize them. To make life easier for themselves, infants adopt a variety of simplification strategies (Gerken, 1994). For example, they leave out the difficult bits of words, turning banana into “nana,” or they substitute easier sounds for hard-to-say ones—“bubba” for brother, “wabbit” for rabbit. Sometimes they reorder parts of words to put an easier sound at the beginning of the word, as in the common “pasketti” (for spaghetti) or the more idiosyncratic “Cagoshin” (the way the child quoted at the beginning of the chapter continued for several years to say Chicago).

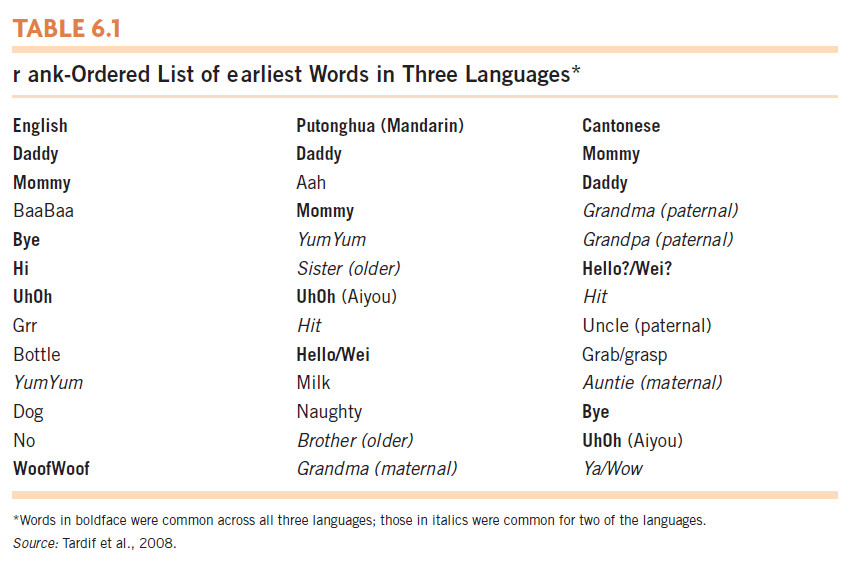

Once children start talking, what do they talk about? The early productive vocabularies of children in the United States include names for people, objects, and events from the child’s everyday life (Clark, 1979; K. Nelson, 1973). Children name their parents, siblings, pets, and themselves, as well as other personally important objects such as cookies, juice, and balls. Frequent events and routines are also labeled—“up,” “bye-bye,” “night-night.” Important modifiers are also used—“mine,” “hot,” “all gone.” Table 6.1 reveals substantial cross-linguistic similarities in the content of the first 10 words of children in the United States, Hong Kong, and Beijing. As the table shows, many of infants’ first words in the three societies referred to specific people or were sound effects (Tardif et al., 2008).

In the early productive vocabularies of children learning English, nouns predominate. One reason may be that because nouns label entities—whereas verbs represent relations among entities—the meanings of nouns are easier to pick up from observation than are the meanings of verbs (Gentner, 1982). Similarly, words that are easier to picture—that are more imageable—are easier for infants and toddlers to learn (McDonough et al., 2011). Another reason is that middle-class American mothers (the group most frequently studied) engage in frequent bouts of object-labeling for their infants—“Look, there’s a turtle! Do you see the turtle?” (Fernald & Morikawa, 1993)—and the proportion of nouns in very young children’s vocabularies is related to the proportion of nouns in their mother’s speech to them (Pine, 1994). Significantly, the pattern of object-labeling by mothers differs across cultures and contexts. For example, Japanese mothers label objects far less often than do American mothers (Fernald & Morikawa, 1993). In the context of toy play, Korean mothers use more verbs than nouns, a pattern very different from that observed in English-speaking mothers (Choi, 2000). And indeed, infants in Korea learn nouns and verbs at the same rate, unlike English-learning infants (Choi & Gopnik, 1995).

holophrastic period  the period when children begin using the words in their small productive vocabulary one word at a time

Initially, infants say the words in their small productive vocabulary only one word at a time. This phase is referred to as the holophrastic period, because the child typically expresses a “whole phrase”—a whole idea—with a single word. For example, a child might say “Drink!” to express the desire for a glass of juice. Children who produce only one-word utterances are not limited to single ideas; they manage to express themselves by stringing together successive one-word utterances. An example is a little girl with an eye infection who pointed to her eye, saying “Ow,” and then after a pause, “Eye” (Hoff, 2001).

overextension  the use of a given word in a broader context than is appropriate

What young children want to talk about quickly outstrips the number of words in their limited vocabularies, so they make the words they do know perform double duty. One way they do this is through overextension—using a word in a broader context than is appropriate, as when children use dog for any four-legged animal, daddy for any man, moon for a dishwasher dial, or hot for any reflective metal (Table 6.2). Most overextensions represent an effort to communicate rather than a lack of knowledge, as demonstrated by research in which children who overextended some words were given comprehension tests (Naigles & Gelman, 1995). In one study, children were shown pairs of pictures of entities for which they generally used the same label—for instance, a dog and a sheep, both of which they normally referred to as “dog.” However, when asked to point to the sheep, they chose the correct animal. Thus, these children understood the meaning of the word sheep, but because it was not in their productive vocabulary, they used a related word that they knew how to say in order to talk about the animal.

Box 6.2: Individual Differences

The Role of Family and School Context in Early Language Development

Within a family, parents often notice significant linguistic differences between their children. Within a given community, such differences can be greatly magnified. In a single kindergarten classroom, for example, there may be a tenfold difference in the number of words used by different children. What accounts for these differences?

The number of words children know is intimately related to the number of words that they hear, which, in turn, is linked to their caregivers’ vocabularies. One of the key determinants of the language children hear is the socioeconomic status of their parents. In a seminal study, Hart and Risley (1994) recorded the speech that 42 parents used with their children over the course of 2½ years, from before the infants were talking until they were 3 years of age. Some of the parents were upper-middle class, others were working class, and others were on welfare. The results were astonishing: the received linguistic experience of the average child whose parents were on welfare (616 words per hour) was half that of the average child of working-class parents (1251 words per hour) and less than one-third that of the average child in a professional family (2153 words per hour). The researchers did the math and suggested that after 4 years, an average child with upper-middle-class parents would have accumulated experience with almost 45 million words, compared with 26 million for an average child in a working-class family and 13 million for an average child with parents on welfare.
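The extrapolation from hourly rates to 4-year totals can be checked with a short calculation. The sketch below uses the hourly word counts reported above. The passage does not state how many hours per week a child is exposed to speech, so the figure of about 100 waking hours per week (5,200 hours per year) is an assumption made here for illustration, as are all the variable and function names.

```python
# Hourly word counts reported in the passage (words heard per hour).
WORDS_PER_HOUR = {
    "professional": 2153,
    "working_class": 1251,
    "welfare": 616,
}

# ASSUMPTION (not stated in the passage): about 100 waking hours per week.
WAKING_HOURS_PER_WEEK = 100
WEEKS_PER_YEAR = 52
YEARS = 4


def cumulative_words(words_per_hour: int, years: int = YEARS) -> int:
    """Extrapolate total words heard over `years` at a constant hourly rate."""
    hours = WAKING_HOURS_PER_WEEK * WEEKS_PER_YEAR * years
    return words_per_hour * hours


totals = {group: cumulative_words(rate) for group, rate in WORDS_PER_HOUR.items()}

for group, total in totals.items():
    print(f"{group}: about {total / 1e6:.1f} million words")

# Ratios behind the "half" and "less than one-third" comparisons.
print(f"welfare / working class: {616 / 1251:.2f}")
print(f"welfare / professional: {616 / 2153:.2f}")
```

Under this assumption the totals come to roughly 44.8, 26.0, and 12.8 million words, which is consistent with the rounded figures of 45, 26, and 13 million quoted in the text. A different assumption about waking hours would scale all three totals by the same factor and leave the ratios between groups unchanged.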

How do these differences affect children’s language development? Not surprisingly, children from higher-SES groups have larger vocabularies than those of children from lower-SES groups (Fenson et al., 1994; Huttenlocher et al., 1991). Indeed, a study of high-SES and mid-SES mothers and their 2-year-old children found that SES differences in maternal speech (e.g., number and length of utterances, richness of vocabulary, and sentence complexity) predicted some of the differences in children’s spoken vocabularies (Hoff, 2003). For instance, higher-SES mothers tended to use longer utterances with their children than did mid-SES mothers, giving their children access not only to more words but also to more complex grammatical structures (Hoff, 2003). Such differences in maternal speech even affect how quickly toddlers recognize familiar words: children whose mothers provided more speech at 18 months were faster at recognizing words at 24 months than were children whose mothers provided less input (Hurtado, Marchman, & Fernald, 2008).

Similar findings emerge in the school context. For example, when preschool children with low language skills are placed in classrooms with peers who also have low language skills, they show less language growth than do their counterparts who are placed with classmates who have high language skills (Justice et al., 2011). These peer effects have important implications for programs like Head Start (discussed fully in Chapter 8), which are designed to enhance language development and early literacy for children living in poverty. Unfortunately, congregating children from lower-SES families together in the same preschool classrooms may limit their ability to “catch up.” However, there is the possibility that negative peer effects may be offset by positive teacher effects. For example, one study found that children whose preschool teachers used a rich vocabulary showed better reading comprehension in 4th grade than did children whose preschool teachers used a more limited vocabulary (Dickinson & Porche, 2011).

These results suggest that for a variety of reasons, parents’ SES affects the way they talk to their children; in turn, those individual differences have a substantial influence on the way their children talk. These differences can be intensified by the linguistic abilities of children’s peers and teachers. For children in low-SES environments, the potential negative effects of these influences may be offset by interventions ranging from increased access to children’s books (which provide enriched linguistic environments) to enhancing teacher training in low-income preschool settings (Dickinson, 2011). Regardless of the source, what goes in is what comes out: we can only learn words and grammatical structures that we hear (or see or read) in the language surrounding us.

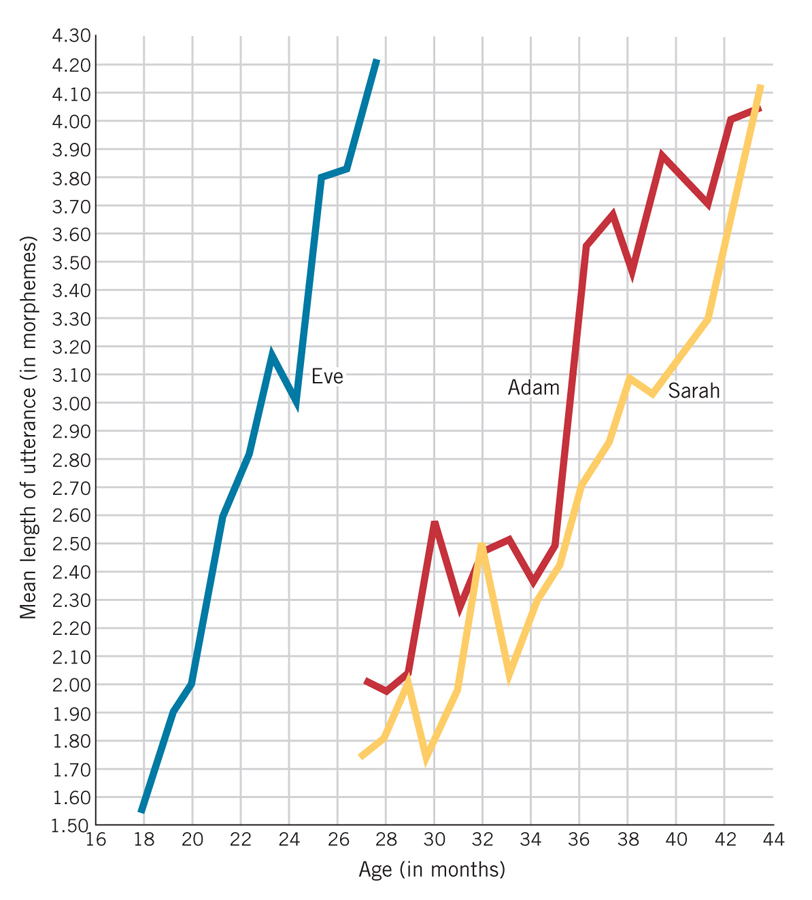

Word learning

After the appearance of their first words, children typically plod ahead slowly, reaching a productive vocabulary of 50 or so words by around 18 months of age. At this point, the rate of learning appears to accelerate, producing what is often described as a “vocabulary spurt” (e.g., L. Bloom, 1973; McMurray, 2007). Although scholars disagree about whether learning actually speeds up for all or even most children (Bloom, 2000), it is clear that children’s communicative abilities are growing rapidly (Figure 6.7).

What accounts for the skill with which young children learn words? When we look closely, we see that there are multiple sources of support for learning new words, some coming from the people around them, and some generated by the children themselves.

ADULT INFLUENCES ON WORD LEARNING In addition to using IDS, which makes word learning easier for infants, adults facilitate word learning by highlighting new words. For example, they stress new words, or place them at the end of a sentence. They also tend to label objects that are already the focus of the child’s attention, thereby reducing uncertainty about the referent (Masur, 1982; Tomasello, 1987; Tomasello & Farrar, 1986). Their repetition of words also helps; young children are more likely to acquire words their parents use frequently (Huttenlocher et al., 1991). Another stimulus to word learning that adults provide involves naming games, in which they ask the child to point to a series of named items—“Where’s your nose?” “Where’s your ear?” “Where’s your tummy?” There is also some evidence that parents may facilitate their children’s word learning by maintaining spatial consistency with the objects they are labeling. For instance, in a study in which parents labeled novel objects for their infants, the infants learned the names of the objects more readily when the objects were in the same location each time they were labeled (Samuelson et al., 2011). Presumably, consistency in the visual environment helps children map words onto objects and events in that environment. In Box 6.3, we discuss recent research focused on a currently popular means by which some parents try to “outsource” word learning: technology.

fast mapping  the process of rapidly learning a new word simply from hearing the contrastive use of a familiar word and an unfamiliar word

CHILDREN’S CONTRIBUTIONS TO WORD LEARNING When confronted with words they haven’t heard before, children actively exploit the context in which the new word was used in order to infer its meaning. A classic study by Carey and Bartlett (1978) demonstrated fast mapping—the process of rapidly learning a new word simply from hearing the contrastive use of a familiar word and the unfamiliar word. In the course of everyday activities in a preschool classroom, an experimenter drew a child’s attention to two trays—one red, the other an uncommon color the child would not know by name—and asked the child to get “the chromium tray, not the red one.” The child was thus provided with a contrast between a familiar term (red) and an unfamiliar one (chromium). From this simple contrast, the participants inferred which tray they were supposed to get and that the name of the color of that tray was “chromium.” After this single exposure to a novel word, about half the children showed some knowledge of it 1 week later by correctly picking out “chromium” from an array of paint chips.

Some theorists have proposed that the many inferences children make in the process of learning words are guided by a number of assumptions (sometimes referred to as principles, constraints, or biases) that limit the possible meanings children entertain for a new word. For example, children expect that a given entity will have only one name, an expectancy referred to as the mutual exclusivity assumption by Woodward and Markman (1998). Early evidence for this assumption came from a study in which 3-year-olds saw pairs of objects—a familiar object for which the children had a name and an unfamiliar one for which they had no name. When the experimenter said, “Show me the blicket,” the children mapped the novel label to the novel object, the one for which they had no name (Markman & Wachtel, 1988). Even 16-month-old infants do the same (Graham, Poulin-Dubois, & Baker, 1998). (See Figure 6.8a.) Interestingly, bilingual and trilingual infants, who are accustomed to hearing more than one name for a given object, are less likely to follow the mutual exclusivity principle (Byers-Heinlein & Werker, 2009).

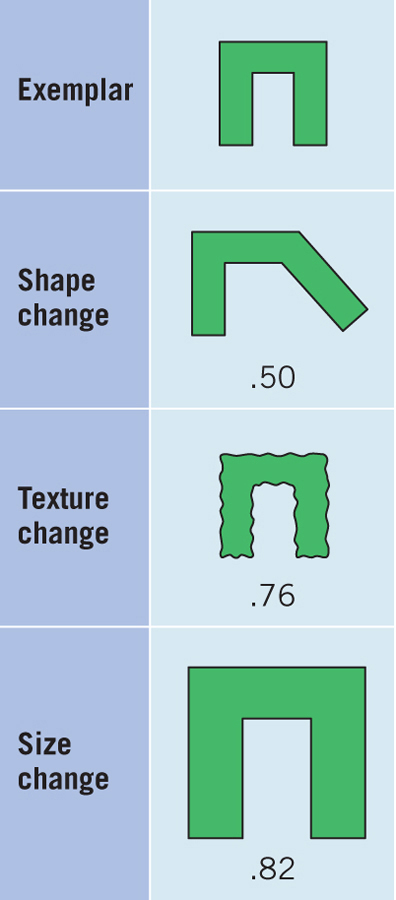

Markman and Woodward (Markman, 1989; Woodward & Markman, 1998) also proposed the whole-object assumption, according to which children expect a novel word to refer to a whole object rather than to a part, property, action, or other aspect of the object. Thus, in the case of Quine’s rabbit problem (see page 231), the whole-object assumption leads children to map the label “bunny” to the whole animal, not just to its tail or the twitching of its nose.

pragmatic cues  aspects of the social context used for word learning

When confronted with novel words, children also exploit a variety of pragmatic cues to their meaning by paying attention to the social contexts in which the words are used. For example, children use an adult’s focus of attention as a cue to word meaning. In a study by Baldwin (1993), an experimenter showed 18-month-olds two novel objects and then concealed them in separate containers. Next, the experimenter peeked into one of the containers and commented, “There’s a modi in here.” The adult then removed both objects from the containers and gave them to the child. When asked for the “modi,” the children picked the object that the experimenter had been looking at when saying the label. Thus, the infants used the relation between eye gaze and labeling to learn a novel name for an object before they had ever seen it (see Figure 6.8b).

Another pragmatic cue that children use to draw inferences about a word’s meaning is intentionality (Tomasello, 2007). For instance, in one study, 2-year-olds heard an experimenter announce, “Let’s dax Mickey Mouse.” The experimenter then performed two actions on a Mickey Mouse doll, one carried out in a coordinated and apparently intentional way, followed by a pleased comment (“There!”), and the other carried out in a clumsy and apparently accidental way, followed by an exclamation of surprise (“Oops!”). The children interpreted the novel verb dax as referring to the action the adult apparently intended to perform (Tomasello & Barton, 1994). Infants can even use an adult’s emotional response to infer the name of a novel object that they cannot see (Tomasello, Strosberg, & Akhtar, 1996). In a study establishing this fact, an adult announced her intention to “find the gazzer.” She then picked up one of two objects and showed obvious disappointment with it. When she gleefully seized the second object, the infants inferred that it was a “gazzer.” (Figure 6.8c depicts another instance in which a child infers the name of an unseen object from an adult’s emotional expression.)

The degree to which preschool children take a speaker’s intention into account is shown by the fact that if an adult’s labeling of an object conflicts with their knowledge of that object, they will nevertheless accept the label if the adult clearly used it intentionally (Jaswal, 2004). When an experimenter simply used the label “dog” in referring to a picture of a catlike animal, preschool children were reluctant to extend the label to other catlike stimuli. However, they were much more willing to do so when the experimenter made it clear that he really intended his use of the unexpected label by saying, “You’re not going to believe this, but this is actually a dog.” Similarly, if a child has heard an adult refer to a cat as “dog,” the child will be reluctant to subsequently learn a new word used by that “untrustworthy” adult (e.g., Koenig & Harris, 2005; Koenig & Woodward, 2010; Sabbagh & Shafman, 2009).

Another way young children can infer the meaning of novel words is by taking cues from the linguistic context in which the words are used. In one of the first experiments on language acquisition, Brown (1957) established that the grammatical form of a novel word influences children’s interpretation of it. He showed preschool children a picture of a pair of hands kneading a mass of material in a container (Figure 6.9). The picture was described to one group of children as “sibbing,” to another as “a sib,” and to a third as “some sib.” The children subsequently interpreted sib as referring to the action, the container, or the material, depending on which grammatical form (verb, count noun, or mass noun) of the word they had heard.
