10-2 Functional Anatomy of the Auditory System

To understand how the nervous system analyzes sound waves, we begin by tracing the pathway sound energy takes to and through the brain. The ear collects sound waves from the surrounding air and converts their mechanical energy to electrochemical neural energy, which begins a long route through the brainstem to the auditory cortex.

Before we can trace the journey from ear to cortex, we must ask what the auditory system is designed to do. Because sound waves have the properties frequency, amplitude, and complexity, we can predict that the auditory system is structured to decode these properties. Most animals can tell where a sound comes from, so some mechanism must locate sound waves in space. Finally, many animals, including humans, not only analyze sounds for meaning but also make sounds. Because the sounds they produce are often the same as the ones they hear, we can infer that the neural systems for sound production and analysis must be closely related.

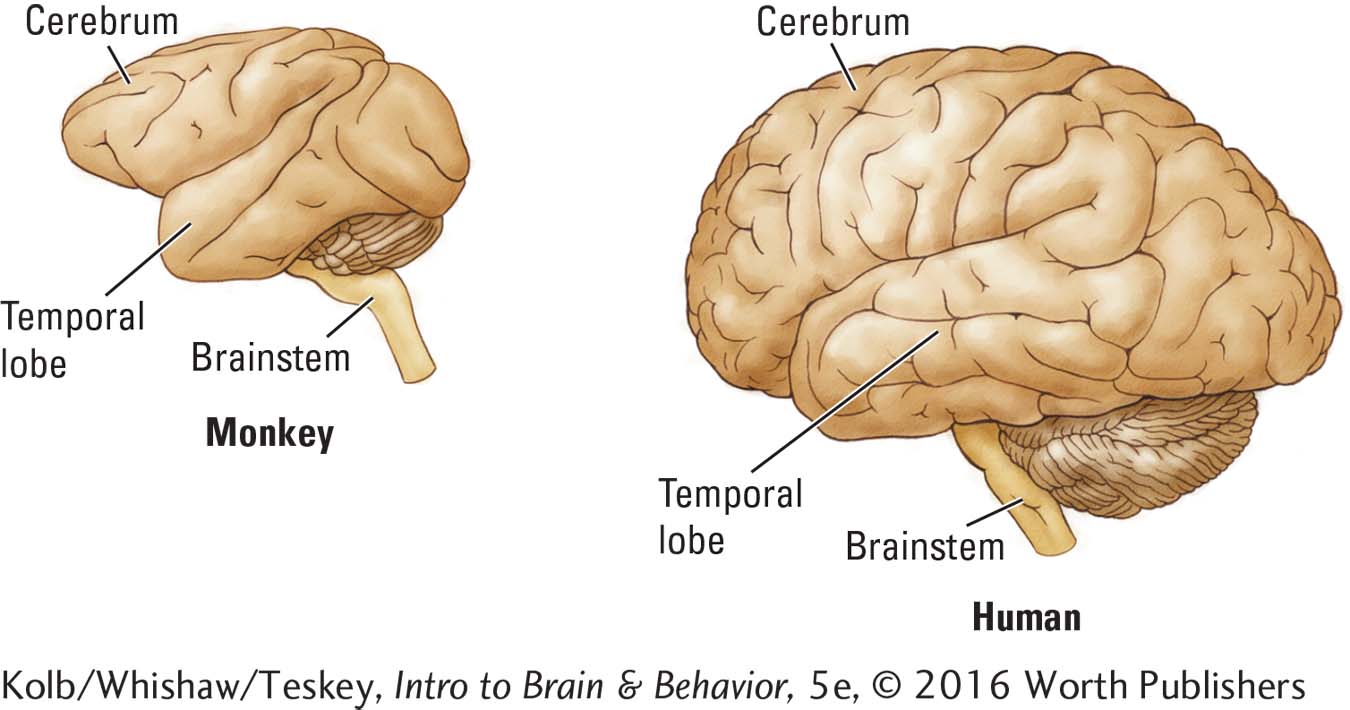

In humans, the evolution of sound-

Structure of the Ear

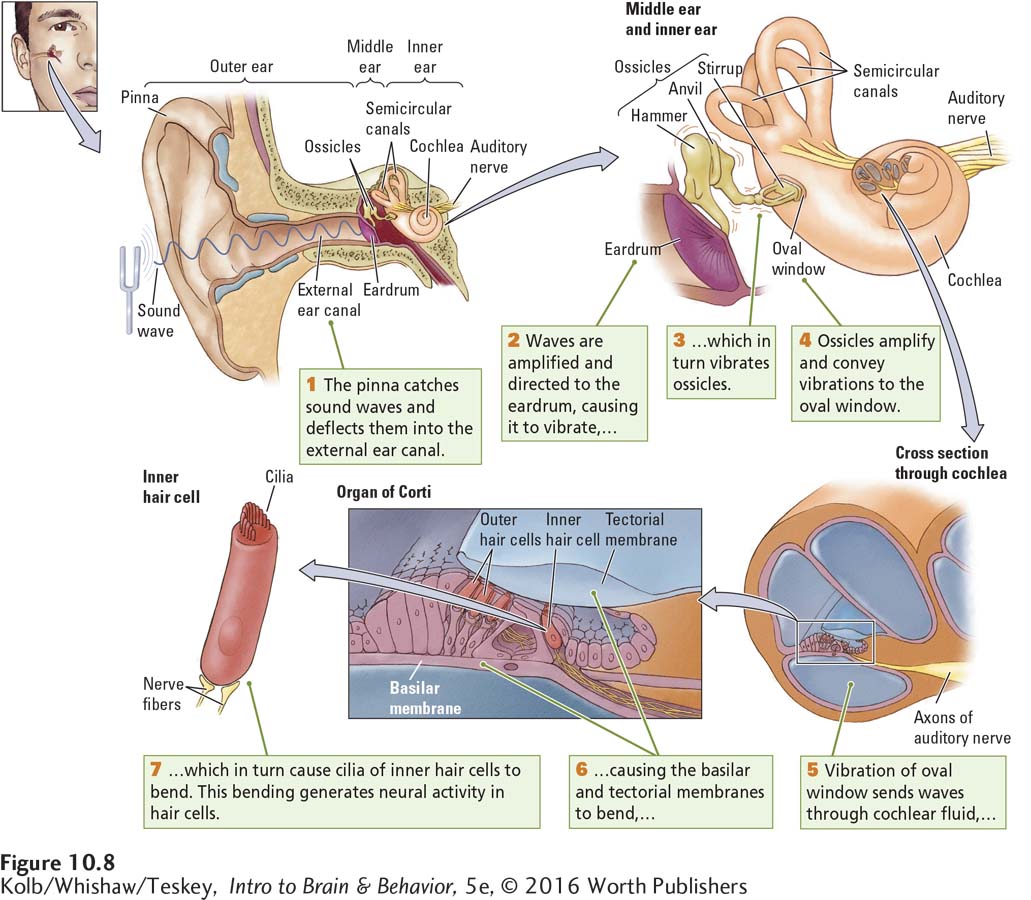

The ear is a biological masterpiece in three acts: outer ear, middle ear, and inner ear, all illustrated in Figure 10-8.

Processing Sound Waves

Both the pinna, the funnel-

Because it narrows from the pinna, the external canal amplifies sound waves and directs them to the eardrum at its inner end. When sound waves strike the eardrum, it vibrates, the rate of vibration varying with the frequency of the waves. On the inner side of the eardrum, as depicted in Figure 10-8, is the middle ear, an air-

These three ossicles are called the hammer, the anvil, and the stirrup because of their distinctive shapes. The ossicles attach the eardrum to the oval window, an opening in the bony casing of the cochlea, the inner ear structure containing the auditory receptor cells. These receptor cells and the cells that support them are collectively called the organ of Corti, shown in detail in Figure 10-8.

Cochlea actually means snail shell in Latin.

When sound waves vibrate the eardrum, the vibrations are transmitted to the ossicles. The leverlike action of the ossicles amplifies the vibrations and conveys them to the membrane that covers the cochlea’s oval window. As Figure 10-8 shows, the cochlea coils around itself and looks a bit like a snail shell. Inside its bony exterior, the cochlea is hollow, as the cross-

The hollow cochlear compartments are filled with lymphatic fluid, and floating in its midst is the thin basilar membrane. Embedded in a part of the basilar membrane are outer and inner hair cells. At the tip of each hair cell are several filaments called cilia, and the cilia of the outer hair cells are embedded in the overlying tectorial membrane. The inner hair cells loosely contact this tectorial membrane.

Pressure from the stirrup on the oval window makes the cochlear fluid move because a second membranous window in the cochlea (the round window) bulges outward as the stirrup presses inward on the oval window. In a chain reaction, the waves traveling through the cochlear fluid bend the basilar and tectorial membranes, and the bending membranes stimulate the cilia at the tips of the outer and inner hair cells.

Transducing Sound Waves into Neural Impulses

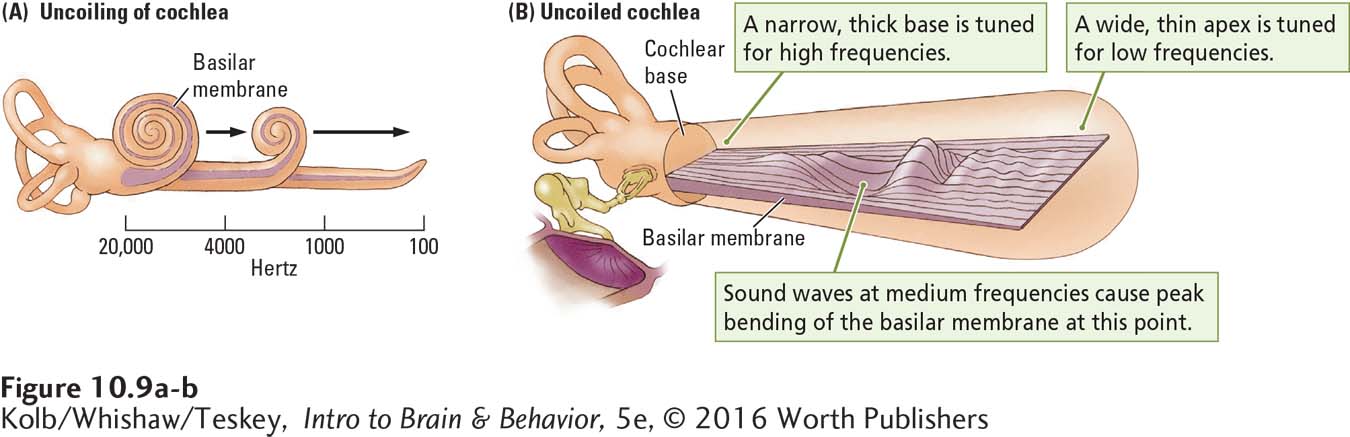

How does the conversion of sound waves into neural activity code the various properties of sound that we perceive? In the late 1800s, the German physiologist Hermann von Helmholtz proposed that sound waves of different frequencies cause different parts of the basilar membrane to resonate. Von Helmholtz was partly correct. Actually, all parts of the basilar membrane bend in response to incoming waves of any frequency. The key is where the peak displacement takes place (Figure 10-9).

This solution to the coding puzzle was determined in 1960, when George von Békésy observed the basilar membrane directly. He saw a traveling wave moving along the membrane all the way from the oval window to the membrane’s apex. The coiled cochlea in Figure 10-9A maps the frequencies to which each part of the basilar membrane is most responsive. When the oval window vibrates in response to the vibrations of the ossicles, shown beside the uncoiled membrane in Figure 10-9B, it generates waves that travel through the cochlear fluid. Békésy placed little grains of silver along the basilar membrane and watched them jump in different places to different frequencies of incoming waves. Higher wave frequencies caused maximum peaks of displacement near the base of the basilar membrane; lower wave frequencies caused maximum displacement peaks near the membrane’s apex.

As a rough analogy, consider what happens when you shake a rope. If you shake it quickly, the waves in the rope are small and short and the peak of activity remains close to the base—

This same response pattern holds for the basilar membrane and sound wave frequency. All sound waves cause some displacement along the entire length of the membrane, but the amount of displacement at any point varies with the sound wave’s frequency. In the human cochlea, shown uncoiling in Figure 10-9A, the basilar membrane near the oval window is maximally affected by frequencies as high as about 20,000 hertz (Hz), the upper limit of our hearing range. The most effective frequencies at the membrane’s apex register less than 100 Hz, closer to our lower limit of about 20 Hz (see Figure 10-4).

Intermediate frequencies maximally displace points on the basilar membrane between its ends, as shown in Figure 10-9B. When a wave of a certain frequency travels down the basilar membrane, hair cells at the point of peak displacement are stimulated, resulting in a maximal neural response in those cells. An incoming signal composed of many frequencies causes several points along the basilar membrane to vibrate and excites hair cells at all these points.
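The place code just described can be sketched numerically. The sketch below is an illustration rather than part of the account above: it assumes the Greenwood place-frequency function, an empirical fit for the human cochlea, with its commonly cited constants (A = 165.4, a = 2.1, k = 0.88). The function names are hypothetical. Position is expressed as a fraction of the basilar membrane's length, from the apex (0) to the base near the oval window (1).

```python
import math

# Assumed constants: Greenwood's empirical fit for the human cochlea.
# They are not given in the text and serve only as an illustration.
A, ALPHA, K = 165.4, 2.1, 0.88

def best_frequency(x):
    """Frequency (Hz) that maximally displaces the membrane at position x,
    where x = 0 is the apex and x = 1 is the base near the oval window."""
    return A * (10 ** (ALPHA * x) - K)

def peak_position(freq_hz):
    """Inverse map: fractional position (0 = apex, 1 = base) of peak displacement."""
    return math.log10(freq_hz / A + K) / ALPHA

def excited_positions(component_freqs):
    """A complex sound produces one displacement peak per frequency component."""
    return {f: round(peak_position(f), 2) for f in component_freqs}

if __name__ == "__main__":
    print(round(best_frequency(0.0)), "Hz at the apex")
    print(round(best_frequency(1.0)), "Hz at the base")
    # A sound made of three components excites three separate places.
    print(excited_positions([200, 2000, 8000]))
```

Under these assumptions, the two ends of the map fall close to the roughly 20 Hz and 20,000 Hz limits of human hearing, and higher frequencies peak progressively nearer the base.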

Not surprisingly, the basilar membrane is much more sensitive to changes in frequency than is the rope in our analogy, because the basilar membrane varies in thickness along its entire length. It is narrow and thick at the base, near the oval window, and wider and thinner at its tightly coiled apex. The combination of varying width and thickness enhances the effect of small frequency differences. As a result, the cochlear receptors can code small differences in sound wave frequency as neural impulses.

Auditory Receptors

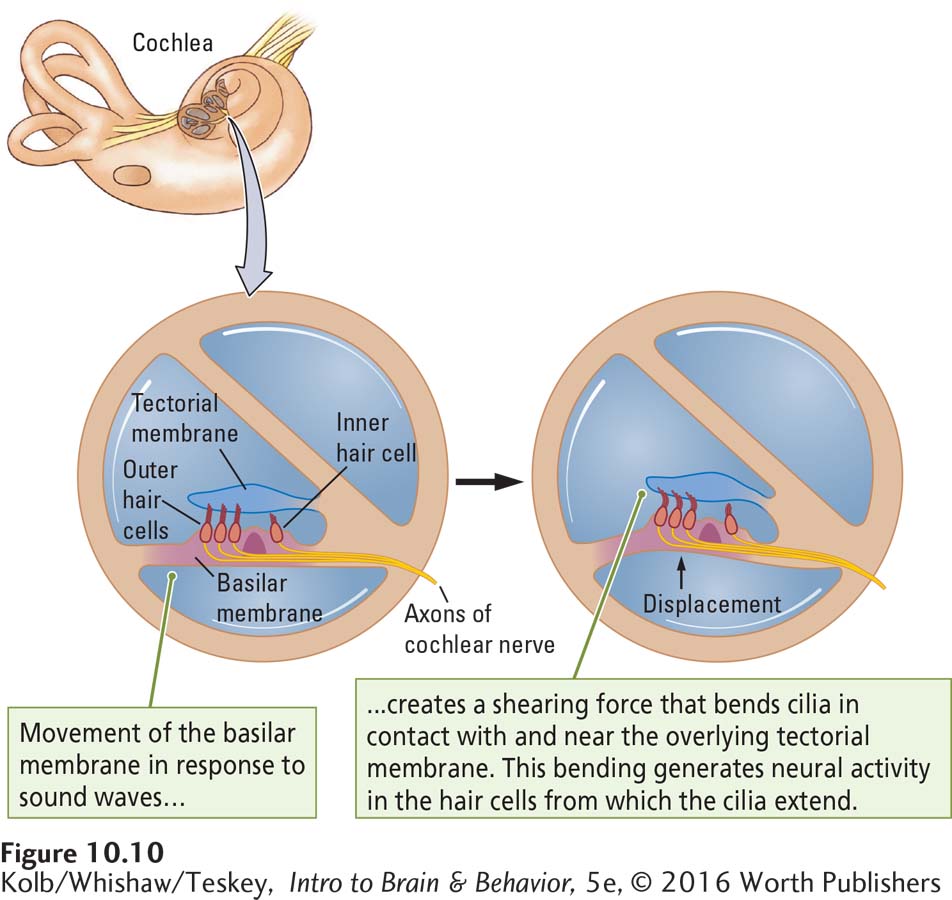

Two kinds of hair cells transform sound waves into neural activity. Figure 10-8 (bottom left) shows the anatomy of the inner hair cells; Figure 10-10 illustrates how sound waves stimulate them. A young person’s cochlea has about 12,000 outer and 3,500 inner hair cells. The numbers fall off with age. Only the inner hair cells act as auditory receptors, and their numbers are small considering how many different sounds we can hear. As diagrammed in Figure 10-10, both outer and inner hair cells are anchored in the basilar membrane. The tips of the cilia of outer hair cells are attached to the overlying tectorial membrane, but the cilia of the inner hair cells only loosely touch that membrane. Nevertheless, the movement of the basilar and tectorial membranes causes the cochlear fluid to flow past the cilia of the inner hair cells, bending them back and forth.

Animals with intact outer hair cells but no inner hair cells are effectively deaf. That is, they can perceive only very loud, low-

Outer hair cells function by sharpening the cochlea’s resolving power, contracting or relaxing and thereby changing tectorial membrane stiffness. That’s right: the outer hair cells have a motor function. While we typically think of sensory input preceding motor output, in fact, motor systems can influence sensory input. The pupil contracts or dilates to change the amount of light that falls on the retina, and the outer hair cells contract or relax to alter the physical stimulus detected by the inner hair cells.

How this outer hair cell function is controlled is puzzling. What stimulates these cells to contract or relax? The answer seems to be that through connections with axons in the auditory nerve, the outer hair cells send a message to the brainstem auditory areas and receive a reply that causes the cells to alter tension on the tectorial membrane. In this way, the brain helps the hair cells to construct an auditory world. The outer cells are also part of a mechanism that modulates auditory nerve firing, especially in response to intense sound pressure waves, and thus offers some protection against their damaging effects.

Section 4-2 reviews phases of the action potential and its propagation as a nerve impulse.

A final question remains: How does movement of the inner hair cell cilia alter neural activity? The neurons of the auditory nerve have a spontaneous baseline rate of firing action potentials, and this rate is changed by the amount of neurotransmitter the hair cells release. It turns out that movement of the cilia changes the inner hair cell’s polarization and its rate of neurotransmitter release. Inner hair cells continuously leak calcium, and this leakage causes a small but steady amount of neurotransmitter release into the synapse. Movement of the cilia in one direction results in depolarization: calcium channels open, and the cell releases more neurotransmitter onto the dendrites of the cells that form the auditory nerve, generating more nerve impulses. Movement of the cilia in the other direction hyperpolarizes the cell membrane, and transmitter release decreases, thus decreasing activity in auditory neurons.
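The two-way modulation described here can be captured in a toy model. The sketch below is only an illustration: the linear relationship, the parameter values (`resting_release`, `gain`, `baseline_hz`), and the function names are all hypothetical and are not measurements from the text. It shows one idea: a steady resting release sets a baseline firing rate, and bending the cilia raises or lowers firing around that baseline.

```python
def release_rate(deflection, resting_release=1.0, gain=0.5):
    """Relative neurotransmitter release from an inner hair cell (toy model).

    deflection > 0: cilia bent in the depolarizing direction (more release)
    deflection < 0: cilia bent in the hyperpolarizing direction (less release)
    All numbers are hypothetical; release cannot fall below zero.
    """
    return max(0.0, resting_release + gain * deflection)

def firing_rate(deflection, baseline_hz=50.0):
    """Auditory nerve firing, assumed here to scale with transmitter release."""
    return baseline_hz * release_rate(deflection)

if __name__ == "__main__":
    for d in (-1.0, 0.0, 1.0):
        print(f"deflection {d:+.1f} -> {firing_rate(d):.1f} impulses per second")
```

Because the resting rate is above zero, the same neuron can signal movement in either direction: an increase for depolarizing deflections and a decrease for hyperpolarizing ones.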

Inner hair cells are amazingly sensitive to the movement of their cilia. A movement sufficient to allow sound wave detection is only about 0.3 nm, about the diameter of a large atom! Such sensitivity helps to explain why our hearing is so incredibly sensitive. Clinical Focus 10-2, Otoacoustic Emissions, describes a consequence of cochlear function.

Clinical Focus 10-2

Otoacoustic Emissions

The ear is exquisitely designed to amplify sound waves and convert them into action potentials, but it is also unique among the sensory organs: it produces the physical stimulus it is designed to detect! A healthy cochlea produces sound waves called otoacoustic emissions.

The cochlea acts as an amplifier. The outer hair cells amplify sound waves, providing an energy source that enhances cochlear sensitivity and frequency selectivity. Not all the energy the cochlea generates is dissipated within it. Some escapes toward the middle ear, which works efficiently in both directions, thus setting the eardrum in motion. The eardrum then acts as a loudspeaker, radiating sound waves—

Sensitive microphones placed in the external ear canal can detect both types of otoacoustic emissions, spontaneous and evoked. As the name implies, spontaneous otoacoustic emissions occur without external stimulation. Evoked otoacoustic emissions, generated in response to sound waves, are important because they are useful for assessing hearing impairments.

A simple, noninvasive test can detect and evaluate evoked otoacoustic emissions in newborns and children who are too young to take conventional hearing tests, as well as in people of any age. A small speaker and microphone are inserted into the ear. The speaker emits a click sound, and the microphone detects the resulting evoked emission without damaging the delicate workings of the inner ear. Missing or abnormal evoked emissions predict a hearing deficit. Many wealthy countries now sponsor universal programs to test the hearing of all newborn babies using otoacoustic emissions.

Otoacoustic emissions serve a useful purpose, but even so, they play no direct role in hearing. They are considered an epiphenomenon—

Pathways to the Auditory Cortex

Figure 2-27 lists and locates the cranial nerves, and in its caption is a mnemonic for remembering them in order.

Inner hair cells in the organ of Corti synapse with neighboring bipolar cells, the axons of which form the auditory (cochlear) nerve. The auditory nerve in turn forms part of the eighth cranial nerve, the auditory vestibular nerve that governs hearing and balance. Whereas ganglion cells in the eye receive inputs from many receptor cells, bipolar cells in the ear receive input from but a single inner hair cell receptor.

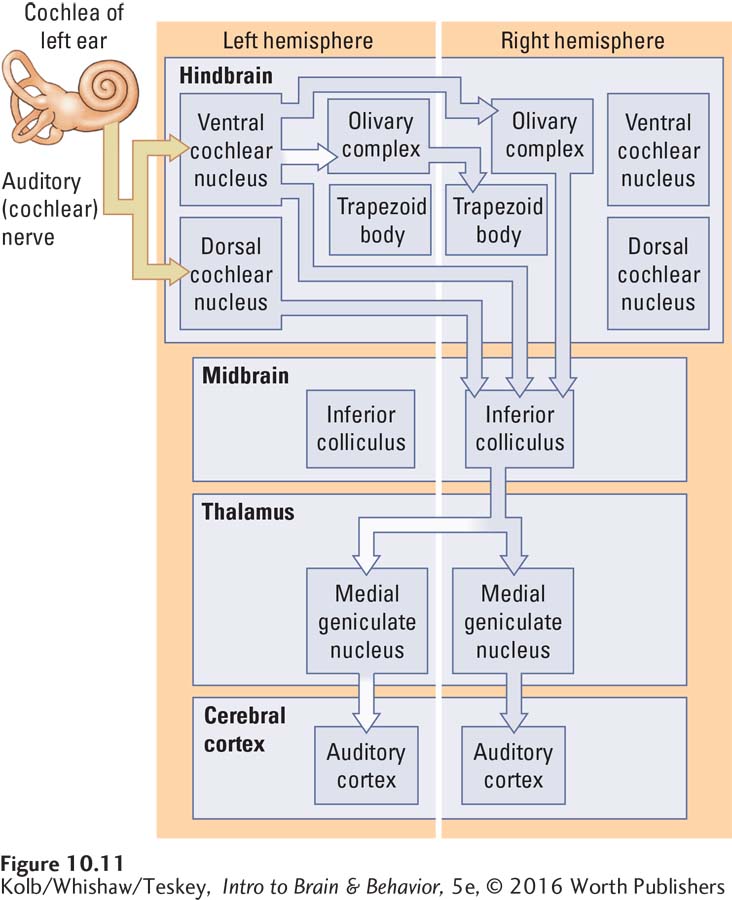

Cochlear-

Both the cochlear nucleus and the superior olive send projections to the inferior colliculus in the dorsal midbrain. Two distinct pathways emerge from the inferior colliculus, coursing to the medial geniculate nucleus in the thalamus. The ventral region of the medial geniculate nucleus projects to the primary auditory cortex (area A1), whereas the dorsal region projects to the auditory cortical regions adjacent to area A1.

Analogous to the two distinct visual pathways—

Figure 9-13 maps the visual pathways through the cortex.

Relatively little is known about the what–

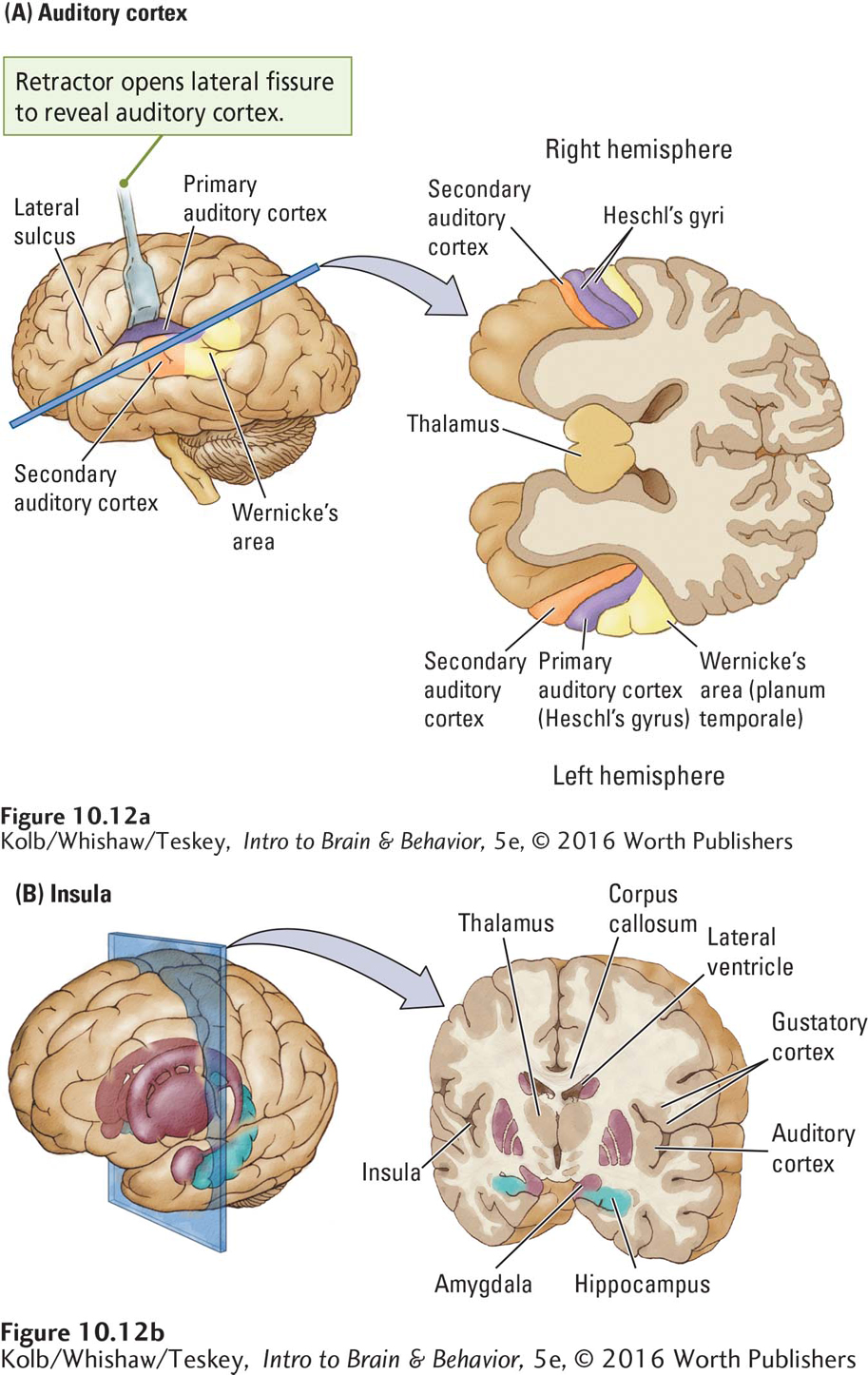

Auditory Cortex

In humans, the primary auditory cortex (A1) lies within Heschl’s gyrus, surrounded by secondary cortical areas (A2), as shown in Figure 10-12A. The secondary cortex lying behind Heschl’s gyrus is called the planum temporale (Latin for temporal plane).

In right-

These hemispheric differences mean that the auditory cortex is anatomically and functionally asymmetrical. Although cerebral asymmetry is not unique to the auditory system, it is most obvious here because auditory analysis of speech takes place only in the left hemisphere of right-

The remaining 30 percent of left-

10-3

Seeing with Sound

As detailed in Section 10-5, echolocation, the ability to use sound to locate objects in space, has been extensively studied in species such as bats and dolphins. But it was reported more than 50 years ago that some blind people also echolocate.

More recently, anecdotal reports have surfaced of blind people who navigate around the world by making clicks with their tongues and mouths and then listening to the returning echoes. Videos, such as the 45-

Behavioral studies of blind people reveal that echolocators make short, spectrally broad clicks by moving the tongue backward and downward from the roof of the mouth directly behind the teeth. Skilled echolocators can identify properties of objects that include position, distance, size, shape, and texture (Teng & Whitney, 2011).

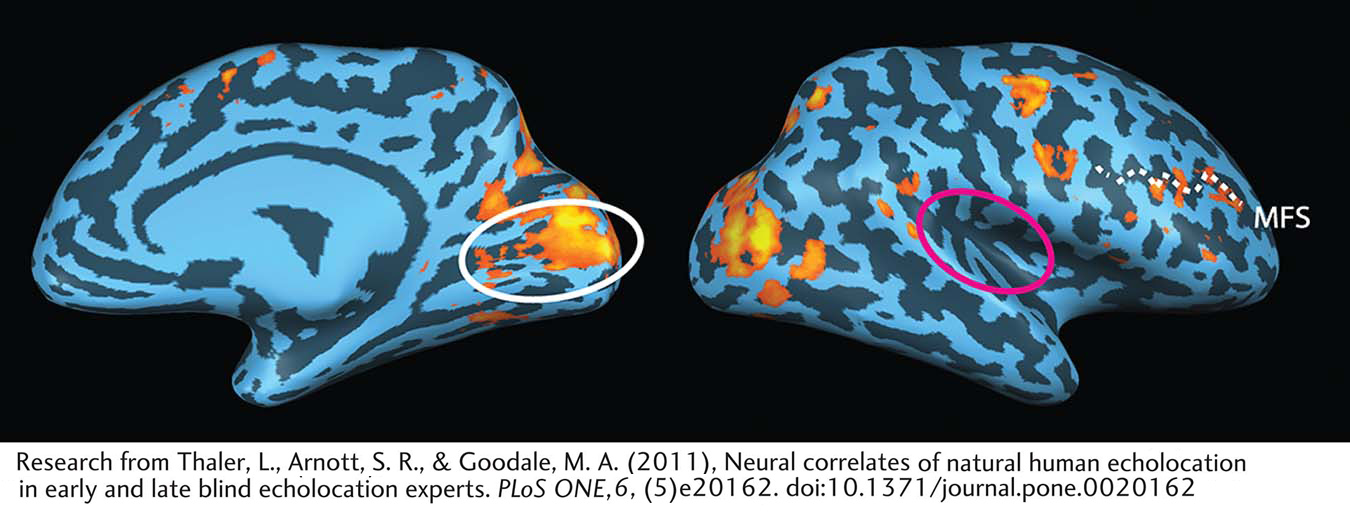

Thaler and colleagues (2011) investigated the neural basis of this ability using fMRI. They studied two blind echolocation experts and compared brain activity for sounds that contain both clicks and returning echoes with brain activity for control sounds that did not contain the echoes. The participants use echolocation to localize objects in the environment, but more important, they also perceive the object’s shape, motion—

When the blind participants listened to recordings of their echolocation clicks and echoes compared to silence, both the auditory cortex and the primary visual cortex showed activity. Sighted controls showed activation only in the auditory cortex. Remarkably, when the investigators compared the controls’ brain activity to recordings that contained echoes versus those that did not, the auditory activity disappeared. By contrast, as illustrated in the figure, the blind echolocators showed activity only in the visual cortex when sounds with and without echoes were compared. Sighted controls (findings not shown) showed no activity in either the visual or auditory cortex in this comparison.

These results suggest that blind echolocation experts process click–

Future research may determine how this process works. More immediately, the study suggests that echolocation could be taught to blind and visually impaired people to provide them increased independence in their daily life.

Localization of language on one side of the brain is an example of lateralization. Note here simply that, in neuroanatomy, if one hemisphere is specialized for one type of analysis—

The temporal lobe sulci enfold a volume of cortical tissue far more extensive than the auditory cortex (Figure 10-12B). Buried in the lateral fissure, cortical tissue called the insula contains not only lateralized regions related to language but also areas controlling taste perception (the gustatory cortex) and areas linked to the neural structures underlying social cognition. As you might expect, injury to the insula can produce such diverse deficits as disturbance of both language and taste.

10-2 REVIEW

Functional Anatomy of the Auditory System

Before you continue, check your understanding.

Question 1

Incoming sound wave energy vibrates the eardrum, which in turn vibrates the ____________.

Question 2

The auditory receptors are the ____________, found in the ____________.

Question 3

The motion of the cochlear fluid causes displacement of the ____________ and ____________ membranes.

Question 4

The axons of bipolar cells from the cochlea form the ____________ nerve, which is part of the ____________ cranial nerve.

Question 5

The auditory nerve originating in the cochlea projects to various nuclei in the brainstem; then it projects to the ____________ in the midbrain and the ____________ in the thalamus.

Question 6

Describe the asymmetrical structure and functions of the auditory cortex.

Answers appear in the Self Test section of the book.