10-4 Anatomy of Language and Music

This chapter began with the evolutionary implications of discovering a flute made by Neanderthals (see Focus 10-1). That Neanderthals made flutes implies not only that they processed musical sound wave patterns but also that they made music. In our brain, musical ability is generally a right-

Section 7-4 surveys functional brain imaging methods; Section 7-2 reviews methods for measuring the brain’s electrical activity.

No one knows whether these complementary systems evolved together in the hominid brain, but it is highly likely that they did. Language and music abilities are highly developed in the modern human brain. Although little is known about how each is processed at the cellular level, electrical stimulation and recording and blood flow imaging studies yield important insights into the cortical regions that process them. We investigate such studies next, focusing first on how the brain processes language.

Processing Language

An estimated 5000 to 7000 human languages are spoken in the world today, and probably many more have gone extinct in past millennia. Researchers have wondered whether the brain has a single system for understanding and producing any language, regardless of its structure, or whether disparate languages, such as English and Japanese, are processed differently. To answer this question, it helps to analyze languages to determine just how fundamentally similar they are, despite their obvious differences.

Uniformity of Language Structure

Foreign languages often seem impossibly complex to those who do not speak them. Their sounds alone may seem odd and difficult to make. If you are a native English speaker, for instance, Asian languages, such as Japanese, probably sound especially melodic and almost without obvious consonants to you, whereas European languages, such as German or Dutch, may sound heavily guttural.

Even within such related languages as Spanish, Italian, and French, marked differences can make learning one of them challenging, even if the student already knows another. Yet as real as all these linguistic differences may be, they are superficial. The similarities among human languages, although not immediately apparent, are actually far more fundamental than their differences.

Noam Chomsky (1965) is usually credited as the first linguist to stress similarities over differences in human language structure. In a series of books and papers written over the past half-

Chomsky was greeted with deep skepticism when he first proposed this idea in the 1960s, but it has since become clear that the capacity for human language is indeed genetic. An obvious piece of evidence: language is universal in human populations. All people everywhere use language.

A language’s complexity is unrelated to its culture’s technological complexity. The languages of technologically unsophisticated peoples are every bit as complex and elegant as the languages of postindustrial cultures. Nor is the English of Shakespeare’s time inferior or superior to today’s English; it is just different.

A 1-

Another piece of evidence that Chomsky adherents cite for the genetic basis of human language is that humans learn language early in life and seemingly without effort. By about 12 months of age, children everywhere have started to speak words. By 18 months, they are combining words, and by age 3 years, they have a rich language capability.

Perhaps the most amazing thing about language development is that children are not formally taught the structure of their language, just as they are not taught to crawl or walk. They just do it. As toddlers, they are not painstakingly instructed in the rules of grammar. In fact, their early errors—

Focus 8-3 describes how cortical activation differs for second languages learned later in life; Section 15-6 covers research on bilingualism and intelligence.

At the most basic level, children learn the language or languages that they hear spoken. In an English household, they learn English; in a Japanese home, Japanese. They also pick up the language structure—

Both the universality of language and its natural acquisition favor the theory of a genetic basis for human language. A third piece of evidence is the many basic structural elements common to all languages. Granted, every language has its own particular grammatical rules specifying exactly how various parts of speech are positioned in a sentence (syntax), how words are inflected to convey different meanings, and so forth. But an overarching set of rules also applies to all human languages, and the first rule is that there are rules.

For instance, all languages employ parts of speech that we call subjects, verbs, and direct objects. Consider the sentence Jane ate the apple. Jane is the subject, ate is the verb, and apple is the direct object. Syntax is not specified by any universal rule but rather is a characteristic of the particular language. In English, syntactical order (usually) is subject, verb, object; in Japanese, the order is subject, object, verb; in Gaelic, the order is verb, subject, object. Nonetheless, all have both syntax and grammar.
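The word-order contrast can be made concrete with a minimal sketch in Python. It assumes the sentence has already been split into its three roles; the table `WORD_ORDERS` and the function `arrange` are illustrative names invented for this example, and the output keeps the English words only to show position, not actual Japanese or Gaelic.

```python
# Illustrative sketch: the same three roles, ordered by each language's syntax.
# WORD_ORDERS and arrange() are hypothetical names chosen for this example.
WORD_ORDERS = {
    "English": ("subject", "verb", "object"),
    "Japanese": ("subject", "object", "verb"),
    "Gaelic": ("verb", "subject", "object"),
}


def arrange(roles, language):
    """Return the sentence's parts in the order the given language uses."""
    return [roles[role] for role in WORD_ORDERS[language]]


sentence = {"subject": "Jane", "verb": "ate", "object": "the apple"}

for language in WORD_ORDERS:
    print(language, "->", " ".join(arrange(sentence, language)))
```

The roles stay the same in every case; only their positions change, which is the sense in which syntax belongs to the particular language while the parts of speech are shared.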

The existence of these two structural pillars in all human languages is seen in the phenomenon of creolization—the development of a new language from what was formerly a rudimentary language, or pidgin. Creolization took place in the seventeenth century in the Americas when slave traders and colonial plantation owners brought together, from various parts of West Africa, people who lacked a common language. The newly enslaved needed to communicate, and they quickly created a pidgin based on whatever language the plantation owners spoke—

The pidgin had a crude syntax (word order) but lacked a real grammatical structure. The children of the slaves who invented this pidgin grew up with caretakers who spoke only pidgin to them. Yet within a generation, these children had developed their own creole, a language complete with a genuine syntax and grammar.

Clearly, the pidgin invented of necessity by adults was not a learnable language for children. Their innate biology shaped a new language similar in basic structure to all other human languages. All creolized languages seem to evolve in a similar way, even though the base languages are unrelated. This phenomenon can happen only because there is an innate biological component to language development.

Localizing Language in the Brain

Finding a universal basic language structure set researchers on the search for an innate brain system that underlies language use. By the late 1800s, it had become clear that language functions were at least partly localized—

Section 7-1 links Broca’s observations to his contributions to neuropsychology.

Then, in 1861, the French physician Paul Broca confirmed that certain language functions are localized in the left hemisphere. Broca concluded, on the basis of several postmortem examinations, that language is localized in the left frontal lobe, in a region just anterior to the central fissure. A person with damage in this area is unable to speak despite both an intact vocal apparatus and normal language comprehension. The confirmation of Broca’s area was significant because it triggered the idea that the left and right hemispheres might have different functions.

Other neurologists of the time believed that Broca’s area might be only one of several left-

In Section 10-2 we identified Wernicke’s area as a speech zone (see Figure 10-12A). Damage to any speech area produces some form of aphasia, the general term for any inability to comprehend or produce language despite the presence of otherwise normal comprehension and intact vocal mechanisms. At one extreme, people who suffer Wernicke’s aphasia can speak fluently, but their language is confused and makes little sense, as if they have no idea what they are saying. At the other extreme, a person with Broca’s aphasia cannot speak despite normal comprehension and intact physiology.

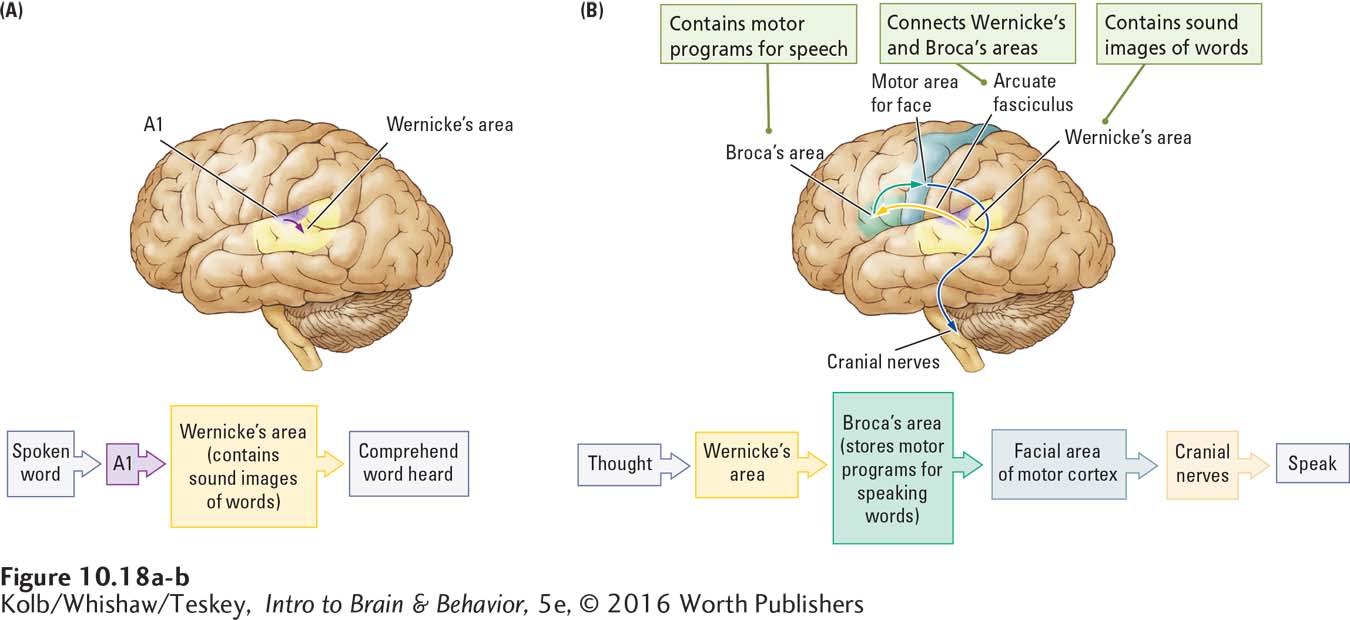

Wernicke went on to propose a model, diagrammed in Figure 10-18A, for how the two language areas of the left hemisphere interact to produce speech. He theorized that images of words are encoded by their sounds and stored in the left posterior temporal cortex. When we hear a word that matches one of those sound images, we recognize it, which is how Wernicke’s area contributes to speech comprehension.

To speak words, Broca’s area in the left frontal lobe must come into play, because the motor program to produce each word is stored in this area. Messages travel to Broca’s area from Wernicke’s area through the arcuate fasciculus, a fiber pathway that connects the two regions. Broca’s area in turn controls articulation of words by the vocal apparatus, as diagrammed in Figure 10-18B.

Wernicke’s model provided a simple explanation both for the existence of two major language areas in the brain and for the contribution each area makes to the control of language. But the model was based on postmortem examinations of patients with brain lesions that were often extensive. Not until neurosurgeon Wilder Penfield’s pioneering studies, begun in the 1930s, were the left hemisphere language areas clearly and accurately mapped.

Auditory and Speech Zones Mapped by Brain Stimulation

Among Penfield’s discoveries was that Broca’s area is not the independent site of speech production, nor is Wernicke’s area the independent site of language comprehension. Electrical stimulation of either region disrupts both processes.

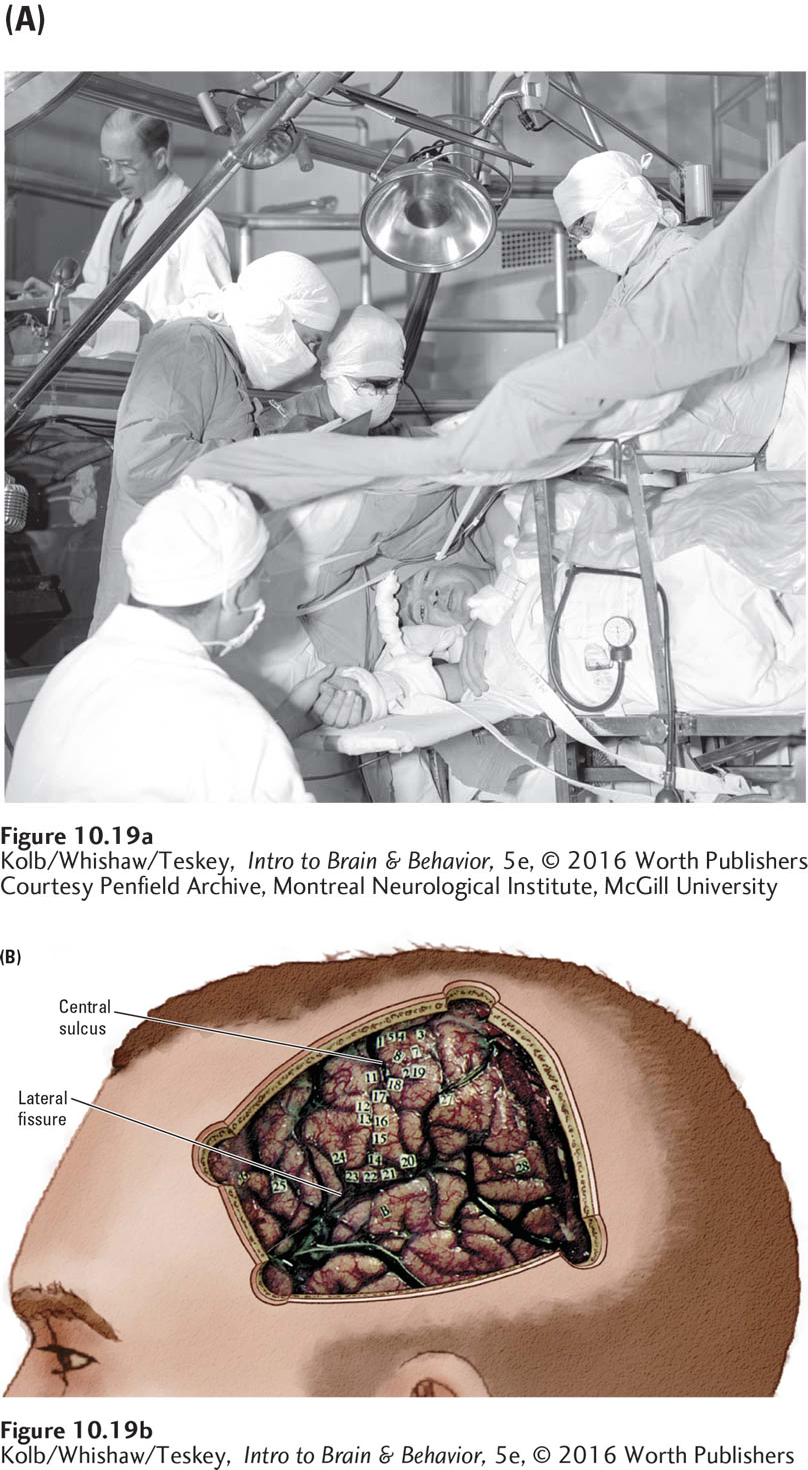

Penfield took advantage of the chance to map the brain’s auditory and language areas when he operated on patients undergoing elective surgery to treat epilepsy unresponsive to antiseizure medication. The goal of this surgery is to remove tissues where the abnormal discharges are initiated without damaging the areas responsible for linguistic ability or vital sensory or motor functioning. To determine the locations of these critical regions, Penfield used a weak electrical current to stimulate the brain surface. By monitoring the patient’s responses during stimulation in different locations, Penfield could map brain functions along the cortex.

Typically, two neurosurgeons perform the operation under local anesthesia applied to the skin, skull, and dura mater (Penfield is shown operating in Figure 10-19A) as a neurologist analyzes the electroencephalogram in an adjacent room. Patients, who are awake, are asked to contribute during the procedure, and the effects of brain stimulation in specific regions can be determined in detail and mapped. Penfield placed tiny numbered tickets on different parts of the brain’s surface where the patient noted that stimulation had produced some noticeable sensation or effect, producing the cortical map shown in Figure 10-19B.

When Penfield stimulated the auditory cortex, patients often reported hearing such sounds as a ringing that sounded like a doorbell, a buzzing noise, or a sound like birds chirping. This result is consistent with later single-

Penfield also found that stimulation in area A1 seemed to produce simple tones—

Sometimes, however, stimulation of the auditory cortex produced effects other than sound perceptions. Stimulation of one area, for example, might cause a patient to feel deaf, whereas stimulation of another area might produce a distortion of sounds actually being heard. As one patient exclaimed after a certain region had been stimulated, “Everything you said was mixed up!”

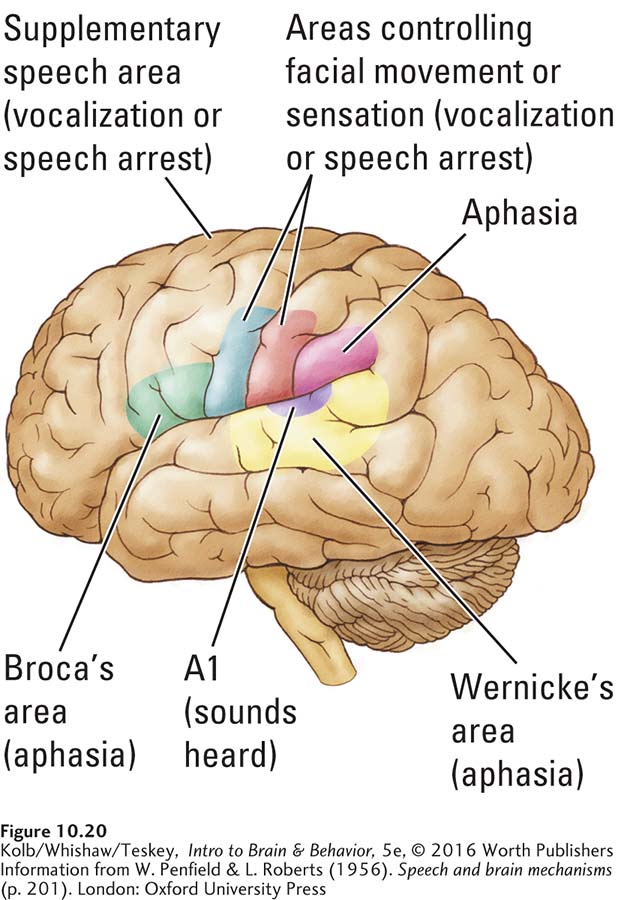

Penfield was most interested in the effects of brain stimulation not on simple sound wave processing but on language. He and later researchers used electrical stimulation to identify four important cortical regions that control language. The two classic regions—

Clearly, much of the left hemisphere takes part in audition. Figure 10-20 shows those areas that Penfield found engaged in some way in processing language. In fact, Penfield mapped cortical language areas in two ways, first by disrupting speech, then by eliciting speech. Not surprisingly, damage to any speech area produces some form of aphasia.

DISRUPTING SPEECH Penfield expected that electrical current might disrupt ongoing speech by effectively short-

Electrical stimulation of the supplementary speech area on the dorsal surface of the frontal lobe (shown in Figure 10-20, that control facial movements. This exception makes sense because talking requires movement of facial, tongue, and throat muscles.

Focus 10-4

Left-

Susan S., a 25-

Medication can usually control such seizures, but the drugs were ineffective for Susan. The attacks disrupted her life: they prevented her from driving and restricted the types of jobs she could hold. So Susan decided to undergo neurosurgery to remove the region of abnormal brain tissue that was causing the seizures.

The procedure has a high success rate. Susan’s surgery entailed removal of a part of the left temporal lobe, including most of the cortex in front of the auditory areas. Although it may seem a substantial amount of the brain to cut away, the excised tissue is usually abnormal, so any negative consequences typically are minor.

After the surgery, Susan did well for a few days; then she started to have unexpected and unusual complications. As a result, she lost the remainder of her left temporal lobe, including the auditory cortex and Wernicke’s area. The extent of lost brain tissue resembles that shown in the accompanying MRI.

Susan no longer understood language, except to respond to the sound of her name and to speak just one phrase: I love you. Susan was also unable to read, showing no sign that she could even recognize her own name in writing.

To find ways to communicate with Susan, Bryan Kolb tried humming nursery rhymes to her. She immediately recognized them and could say the words. We also discovered that her singing skill was well within the normal range and she had a considerable repertoire of songs.

Susan did not seem able to learn new songs, however, and she did not understand messages that were sung to her. Apparently, Susan’s musical repertoire was stored and controlled independently of her language system.

ELICITING SPEECH The second way Penfield mapped language areas was to stimulate the cortex when a patient was not speaking. Here the goal was to see if stimulation caused the person to utter a speech sound. Penfield did not expect to trigger coherent speech; cortical electrical stimulation is not physiologically normal and so probably would not produce actual words or word combinations. His expectation was borne out.

Stimulation of regions on both sides of the brain—

Auditory Cortex Mapped by Positron Emission Tomography

Section 7-4 details procedures used to obtain a PET scan.

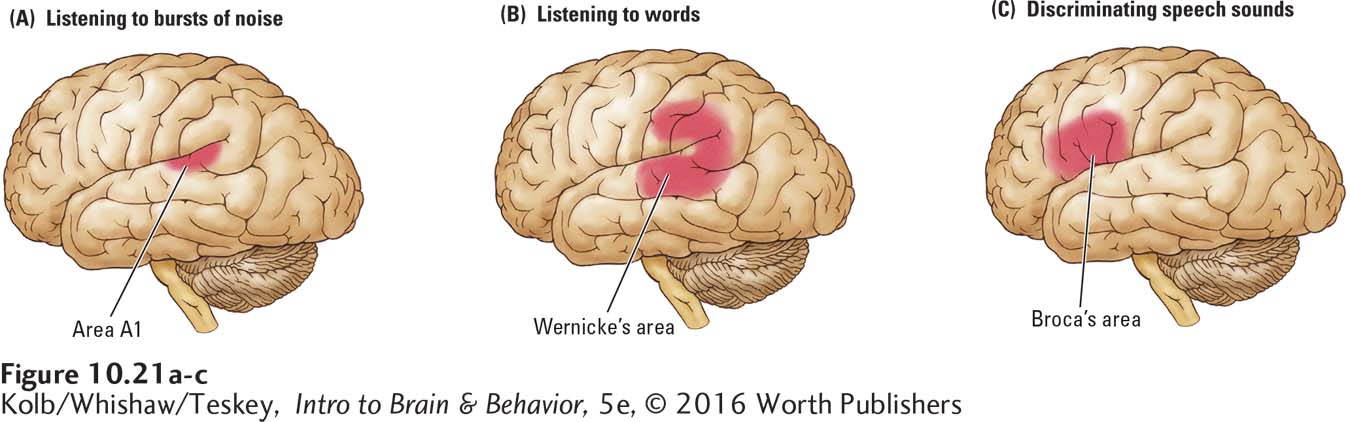

To study the metabolic activity of brain cells engaged in tasks such as processing language, researchers use PET, a brain-

The researchers also hypothesized that performing a discrimination task for speech sounds would selectively activate left-

Both types of stimuli produced responses in both hemispheres but with greater activation in the left hemisphere for the speech syllables. These results imply that area A1 analyzes all incoming auditory signals, speech and nonspeech, whereas the secondary auditory areas are responsible for some higher-

As Figure 10-21C shows, the speech sound discrimination task yielded an intriguing additional result: Broca’s area in the left hemisphere was also activated. This frontal lobe region’s involvement during auditory analysis may seem surprising. In Wernicke’s model, Broca’s area is considered the storage area for motor programs needed to produce words. It is not usually a region thought of as a site of speech sound discrimination.

A possible explanation is that to determine that the g in bag and the one in pig are the same speech sound, the auditory stimulus must be related to how the sound is actually articulated. That is, speech sound perception requires a match with the motor behaviors associated with making the sound.

This role for Broca’s area in speech analysis is confirmed further when investigators ask people to determine whether a stimulus is a word or a nonword (e.g., tid versus tin or gan versus tan). In this type of study, information about how the words are articulated is irrelevant, and Broca’s area need not be recruited. Imaging reveals that it is not.

Processing Music

Although Penfield did not study the effect of brain stimulation on musical analysis, many researchers study musical processing in brain-

Localizing Music in the Brain

A famous patient, the French composer Maurice Ravel (1875–

Skills that had to do with producing music, however, were among those destroyed. Ravel could no longer recognize written music, play the piano, or compose. This dissociation of music perception and music production may parallel the dissociation of speech comprehension and speech production in language. Apparently, the left hemisphere plays at least some role in certain aspects of music processing, especially those that have to do with making music.

Focus 10-5

Cerebral Aneurysms

C. N. was a 35-

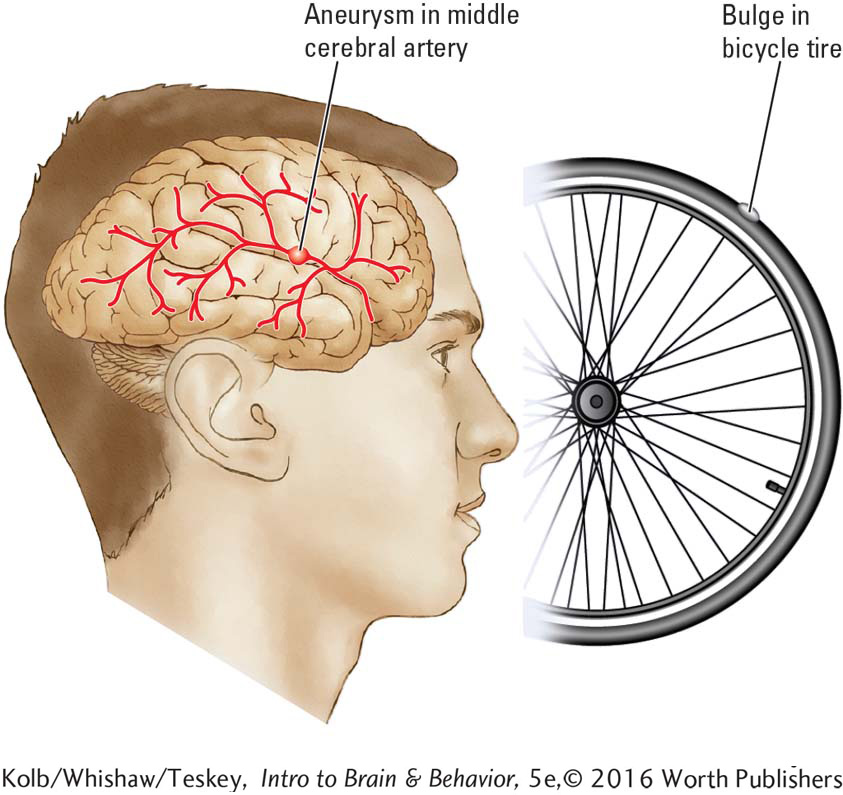

An aneurysm is a bulge in a blood vessel wall caused by weakening of the tissue, much like the bulge that appears in a bicycle tire at a weakened spot. Aneurysms in a cerebral artery are dangerous: if they burst, severe bleeding and consequent brain damage result.

In February 1987, C. N.’s aneurysm was surgically repaired, and she appeared to have few adverse effects. Postoperative brain imaging revealed, however, that a new aneurysm had formed in the same location but in the middle cerebral artery on the opposite side of the brain. This second aneurysm was repaired 2 weeks later.

After her surgery, C. N. had temporary difficulty finding the right word when she spoke, but more important, her perception of music was deranged. She could no longer sing, nor could she recognize familiar tunes. In fact, singers sounded to her as if they were talking instead of singing. But C. N. could still dance to music.

A brain scan revealed damage along the lateral fissure in both temporal lobes. The damage did not include the primary auditory cortex, nor did it include any part of the posterior speech zone. For these reasons, C. N. could still recognize nonmusical sound patterns and showed no evidence of language disturbance. This finding reinforces the hypothesis that nonmusical sounds and speech sounds are analyzed in parts of the brain separate from those that process music.

To find out more about how the brain carries out the perceptual side of music processing, Zatorre and his colleagues (1994) conducted PET studies. When participants listened simply to bursts of noise, Heschl’s gyrus became activated (Figure 10-22A), but perception of melody triggers major activation in the right-

In another test, participants listened to the same melodies. The investigators asked them to indicate whether the pitch of the second note was higher or lower than that of the first note. During this task, which necessitates short-

The brain may be tuned prenatally to the language it will hear at birth; see Focus 7-1.

As noted earlier, the capacity for language is innate. Sandra Trehub and her colleagues (1999) showed that music may be innate as well, as we hypothesized at the beginning of the chapter. Trehub found that infants show learning preferences for musical scales versus random notes. Like adults, children are sensitive to musical errors, presumably because they are biased for perceiving regularity in rhythms. Thus, it appears that the brain is prepared at birth for hearing both music and language, and presumably it selectively attends to these auditory signals.

Focus 10-6

The Brain’s Music System

Nonmusicians enjoy music and have musical ability. Musicians show an enormous range of ability: some have perfect pitch and some do not, for example. About 4 percent of the population is tone deaf. Their difficulties, characterized as amusia—an inability to distinguish between musical notes—

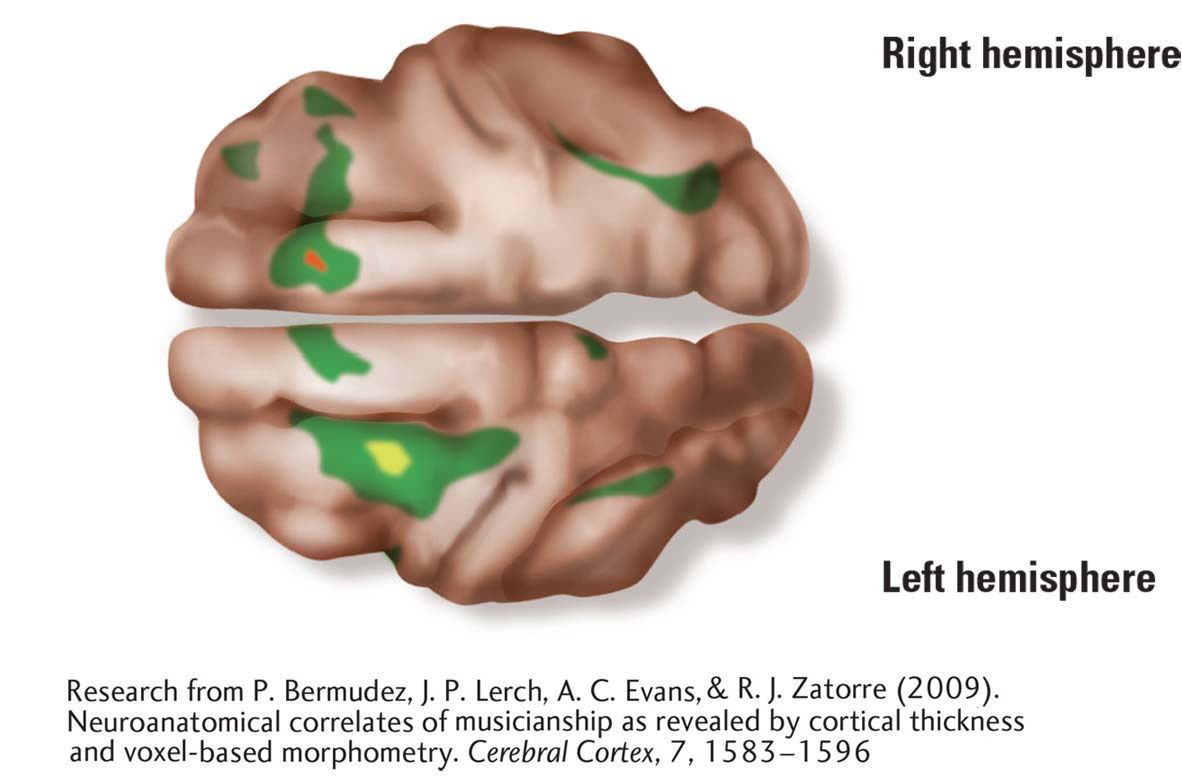

Robert Zatorre and his colleagues (Bermudez et al., 2009; Hyde et al., 2007) have used MRI to look at differences among the brains of musicians, nonmusicians, and amusics. MRIs of the left and right hemispheres show that compared to nonmusicians, musicians’ cortical thickness is greater in dorsolateral frontal and superior temporal regions. Curiously, musicians with perfect pitch have thinner cortex in the posterior part of the dorsolateral frontal lobe. Thinner appears to be better for some music skills.

Compared to nonmusicians then, musicians with thicker than normal cortex must have enhanced neural networks in the right-

Analysis of amusic participants’ brains showed thicker cortex in the right frontal area and in the right auditory cortex regions. Some abnormality in neuronal migration during brain development is likely to have led to an excess of neurons in the right frontal–

Music as Therapy

At https://www.youtube.com/watch?v=eNpoVeLfMKg, watch as Parkinson patients step to the beat of music to improve their gait length and walking speed.

The power of music to engage the brain has led to its use as a therapeutic tool for brain dysfunctions. The best evidence of its effectiveness lies in studies of motor disorders such as stroke and Parkinson disease (Johansson, 2012). Listening to rhythm activates the motor and premotor cortex and can improve gait and arm training after stroke. Musical experience reportedly also enhances the ability to discriminate speech sounds and to distinguish speech from background noise in patients with aphasia.

More on music as therapy in Focus 5-2 and the dance class for Parkinson patients pictured on page 160. Sections 16-2 and 16-3 revisit music therapy.

Music therapy also appears to be a useful complement to more traditional therapies, especially when there are problems with mood, such as in depression or brain injury. This may prove important in the treatment of stroke and traumatic brain injury, with which depression is a common complication in recovery. Music therapy also has positive effects following major surgery, both in adults and children, by reducing both their pain perception and the amount of pain medication they use (Sunitha Suresh et al., 2015). With all these applications, perhaps researchers will decide to use noninvasive imaging to determine which brain areas music therapy recruits.

10-4 REVIEW

Anatomy of Language and Music

Before you continue, check your understanding.

Question 1

The human auditory system has complementary specialization for the perception of sounds: left for ____________ and right for ____________.

Question 2

The three frontal lobe regions that participate in producing language are ____________, ____________, and ____________.

Question 3

____________ area identifies speech syllables and words and stores their representations in that location.

Question 4

____________ area matches speech sounds to the motor programs necessary to articulate them.

Question 5

At one end of the spectrum for musical ability are people with ____________ and at the other are people who are ____________.

Question 6

What evidence supports the idea that language is innate?

Answers appear in the Self Test section of the book.