18.1 SOLUTIONS TO ODD-NUMBERED END-OF-CHAPTER PROBLEMS

SOLUTIONS TO ODD-

Chapter 1

1.1 Descriptive statistics organize, summarize, and communicate a group of numerical observations. Inferential statistics use sample data to make general estimates about the larger population.

1.3 The four types of variables are nominal, ordinal, interval, and ratio. A nominal variable is used for observations that have categories, or names, as their values. An ordinal variable is used for observations that have rankings (i.e., 1st, 2nd, 3rd) as their values. An interval variable has numbers as its values; the distance (or interval) between pairs of consecutive numbers is assumed to be equal. A ratio variable meets the criteria for interval variables but also has a meaningful zero point. Interval and ratio variables are both often referred to as scale variables.

1.5 Discrete variables can only be represented by specific numbers, usually whole numbers; continuous variables can take on any values, including those with great decimal precision (e.g., 1.597).

1.7 A confounding variable (also called a confound) is any variable that systematically varies with the independent variable so that we cannot logically determine which variable affects the dependent variable. Researchers attempt to control confounding variables in experiments by randomly assigning participants to conditions. The hope with random assignment is that the confounding variable will be spread equally across the different conditions of the study, thus neutralizing its effects.

1.9 An operational definition specifies the operations or procedures used to measure or manipulate an independent or dependent variable.

1.11 When conducting experiments, the researcher randomly assigns participants to conditions or levels of the independent variable. When random assignment is not possible, such as when studying something like gender or marital status, correlational research is used. Correlational research allows us to examine how variables are related to each other; experimental research allows us to make assertions about how an independent variable causes an effect in a dependent variable.

1.13

“This was an experiment” (not “This was a correlational study.”)

“the independent variable of caffeine” (not “the dependent variable of caffeine”)

“A university assessed the validity” (not “A university assessed the reliability”)

“In a between-

groups experiment” (not “In a within- groups experiment”)

1.15 The sample is the 2500 Canadians who work out every week. The population is all Canadians.

1.17 The sample is the 100 customers who completed the survey. The population is all of the customers at the grocery store.

1.19

73 people

All people who shop in grocery stores similar to the one where data were collected

Inferential statistic

Answer may vary, but here is one way that the amount of fruit and vegetable items purchased could be operationalized as a nominal variable. People could be labeled as having a “healthy diet” or an “unhealthy diet.”

Answers may vary, but there could be groupings such as “no items,” “a minimal number of items,” “some items,” and “many items.”

Answers may vary, but the number of items could be counted or weighed.

1.21

The independent variables are physical distance and emotional distance. The dependent variable is accuracy of memory.

There are two levels of physical distance (within 100 miles and 100 miles or farther) and three levels of emotional distance (knowing no one who was affected, knowing people who were affected but lived, and knowing someone who died).

Answers may vary, but accuracy of memory could be operationalized as the number of facts correctly recalled.

1.23

The average weight for a 10-

year- old girl was 77.4 pounds in 1963 and nearly 88 pounds in 2002. No; the CDC would not be able to weigh every single girl in the United States because it would be too expensive and time consuming.

It is a descriptive statistic because it is a numerical summary of a sample. It is an inferential statistic because the researchers drew conclusions about the population’s average weight based on this information from a sample.

1.25

Ordinal

Scale

Nominal

1.27

Discrete

Continuous

Discrete

Discrete

1.29

The independent variables are temperature and rainfall. Both are continuous scale variables.

The dependent variable is experts’ ratings. This is a discrete scale variable.

The researchers wanted to know if the wine experts are consistent in their ratings—

that is, if they’re reliable. This observation would suggest that Robert Parker’s judgments are valid. His ratings seem to be measuring what they intend to measure—

wine quality.

1.31

Forbes is operationalizing earnings as all of a comedian’s pretax gross income from all sources, provided that he earned the majority of his money from live performances.

Erin Gloria Ryan likely has a problem with this definition because not all comedians perform live as their primary source of income. In her article, she explains: “The Forbes list isn’t a brofest because men 100% dominate the top echelons of comedy. . . [It] employs an outdated definition of what comedy is and who is earning money from it that is always going to skew male. The game is rigged.”

Forbes could operationalize the earnings of comedians as pretax gross income, as they are already doing, but they could include all comedians, whether they earned most of their money from concerts, TV or internet shows, movies, books, MP3 sales, or any other comedy-

related source. This would remove the restriction that most income must come from concert sales. According to Ryan, this broader definition would have put Ellen DeGeneres in first place; she earned $53 million in 2013. Other female comedians who would have leaped onto this list include Sofía Vergara, Tina Fey, Amy Poehler, and Chelsea Handler.

1.33

An experiment requires random assignment to conditions. It would not be ethical to randomly assign some people to smoke and some people not to smoke, so this research had to be correlational.

Other unhealthy behaviors have been associated with smoking, such as poor diet and infrequent exercise. These other unhealthy behaviors might be confounded with smoking.

The tobacco industry could claim it was not the smoking that was harming people, but rather the other activities in which smokers tend to engage or fail to engage.

You could randomly assign people to either a smoking group or a nonsmoking group, and assess their health over time.

1.35

This is experimental because students are randomly assigned to one of the incentive conditions for recycling.

Answers may vary, but one hypothesis could be “Students fined for not recycling will report a lower level of concern about the environment, on average, than those rewarded for recycling.”

1.37

Researchers could have randomly assigned some people who are HIV-

positive to take the oral vaccine and other people who are HIV- positive not to take the oral vaccine. The second group would likely take a placebo. This would have been a between-

groups experiment because the people who are HIV- positive would have been in only one group: either vaccine or no vaccine. This limits the researchers’ ability to draw causal conclusions because the participants who received the vaccine may have been different in some way from those who did not receive the vaccine. There may have been a confounding variable that led to these findings. For example, those who received the vaccine might have had better access to health care and better sanitary conditions to begin with, making them less likely to contract cholera regardless of the vaccine’s effectiveness.

The researchers might not have used random assignment because it would have meant recruiting participants, likely immunizing half, then following up with all of them. The researchers likely did not want to deny the vaccine to people who were HIV-

positive because they might have contracted cholera and died without it.

1.39

A “good charity” is operationally defined as one that spends more of its money for the cause it is supporting and less for fundraising or administration.

The rating is a scale variable, as it has a meaningful zero point, has equal distance between intervals, and is continuous.

The tier is an ordinal variable, as it involves ranking the organizations into categories (1st, 2nd, 3rd, 4th, or 5th tier) and it is discrete.

The type of charity is a nominal variable, as it uses names or categories to classify the values (e.g., health and medical needs) and it is discrete.

Measuring finances is more objective and easier to measure than some of the criteria mentioned by Ord, such as importance of the problem and competency and honesty.

Charity Navigator’s ratings are more likely to be reliable than GiveWell’s ratings because they are based on an objective measure. It is more likely that different assessors would come up with the same rating for Charity Navigator than for GiveWell.

GiveWell’s ratings are likely to be more valid than Charity Navigator’s, provided that they can attain some level of reliability. GiveWell’s more comprehensive rating system incorporates a better-

rounded assessment of a charity. This would be a correlational study because donation funds, the independent variable, would not be randomly assigned based on country but measured as they naturally occur.

This would be an experiment because the levels of donation funds, the independent variable, are randomly assigned to different regions to determine the effect on death rate.

Chapter 2

2.1 Raw scores are the original data, to which nothing has been done.

2.3 A frequency table is a visual depiction of data that shows how often each value occurred; that is, it shows how many scores are at each value. Values are listed in one column, and the numbers of individuals with scores at that value are listed in the second column. A grouped frequency table is a visual depiction of data that reports the frequency within each given interval, rather than the frequency for each specific value.

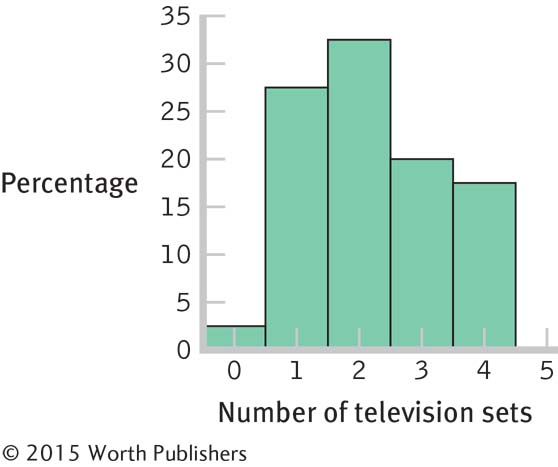

2.5 Bar graphs typically provide scores for nominal data, whereas histograms typically provide frequencies for scale data. Also, the categories in bar graphs do not need to be arranged in a particular order and the bars should not touch, whereas the intervals in histograms are arranged in a meaningful order (lowest to highest) and the bars should touch each other.

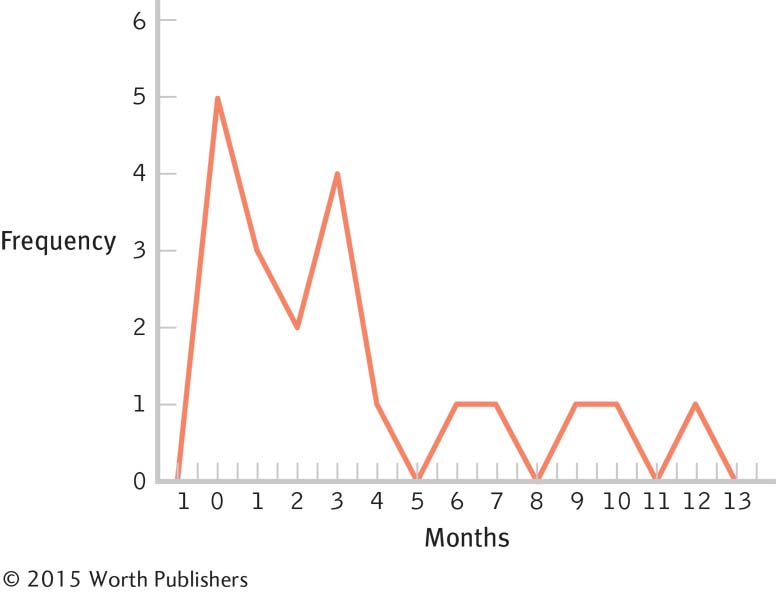

2.7 A histogram looks like a bar graph but is usually used to depict scale data, with the values (or midpoints of intervals) of the variable on the x-axis and the frequencies on the y-axis. A frequency polygon is a line graph, with the x-axis representing values (or midpoints of intervals) and the y-axis representing frequencies; a dot is placed at the frequency for each value (or midpoint), and the points are connected.

2.9 In everyday conversation, you might use the word distribution in a number of different contexts, from the distribution of food to a marketing distribution. A statistician would use distribution only to describe the way that a set of scores, such as a set of grades, is distributed. A statistician is looking at the overall pattern of the data—

2.11 With positively skewed data, the distribution’s tail extends to the right, in a positive direction, and with negatively skewed data, the distribution’s tail extends to the left, in a negative direction.

2.13 A ceiling effect occurs when there are no scores above a certain value; a ceiling effect leads to a negatively skewed distribution because the upper part of the distribution is constrained.

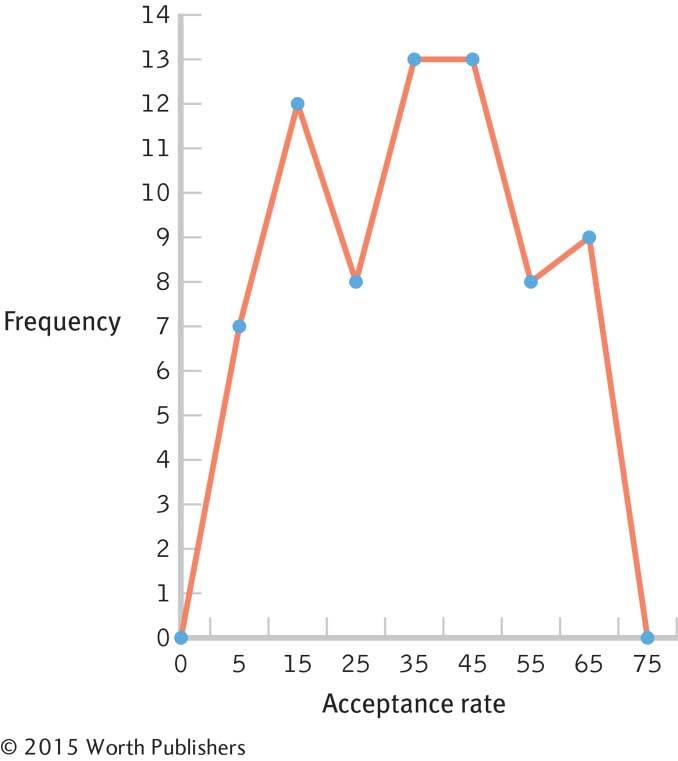

2.15 17.95% and 40.67%

2.17 0.10% and 96.77%

2.19 0.04, 198.22, and 17.89

2.21 The full range of data is 68 minus 2, plus 1, or 67. The range (67) divided by the desired seven intervals gives us an interval size of 9.57, or 10 when rounded. The seven intervals are: 0–

2.23 26 shows

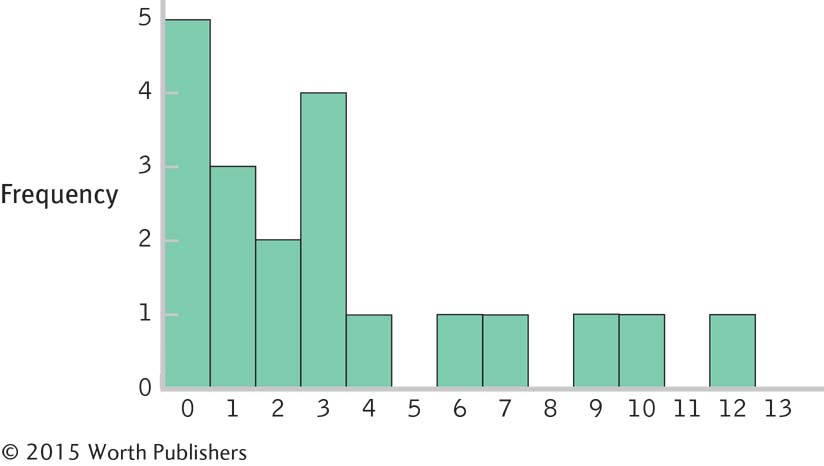

2.25 Serial killers would create positive skew, adding high numbers of murders to the data that are clustered around 1.

2.27

For the college population, the range of ages extends farther to the right (with a larger number of years) than to the left, creating positive skew.

The fact that youthful prodigies have limited access to college creates a sort of floor effect that makes low scores less possible.

2.29

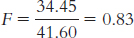

Percentage Frequency Percentage 10 1 5.26 9 0 0.00 8 0 0.00 7 0 0.00 6 0 0.00 5 2 10.53 4 2 10.53 3 4 21.05 2 4 21.05 1 5 26.32 0 1 5.26 In 10.53% of these schools, exactly 4% of the students reported that they wrote between 5 and 10 twenty-

page papers that year. This is not a random sample. It includes schools that chose to participate in this survey and opted to have their results made public.

One

The data are clustered around 1% to 4%, with a high outlier, 10%.

2.31

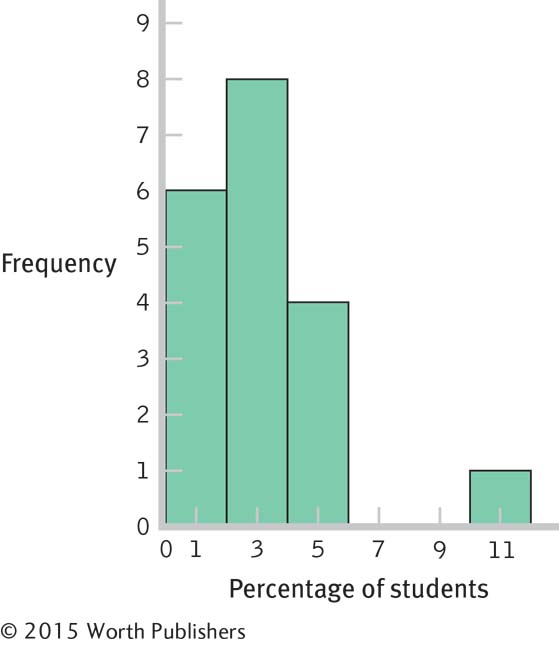

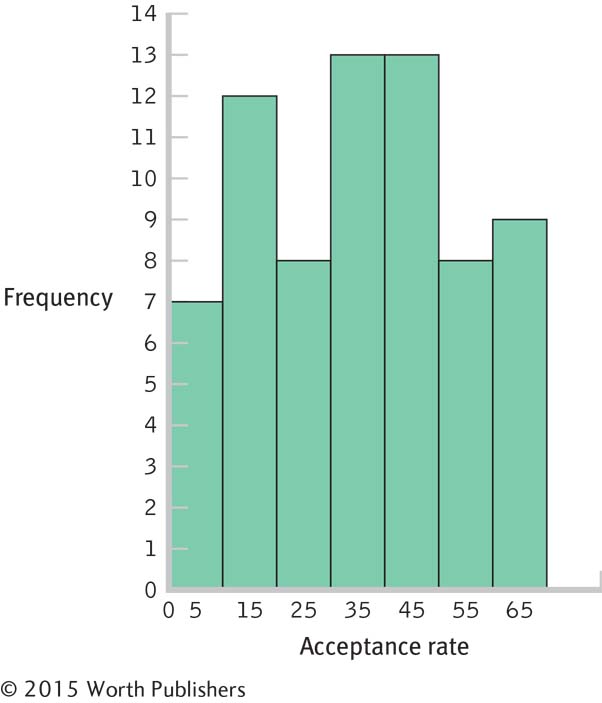

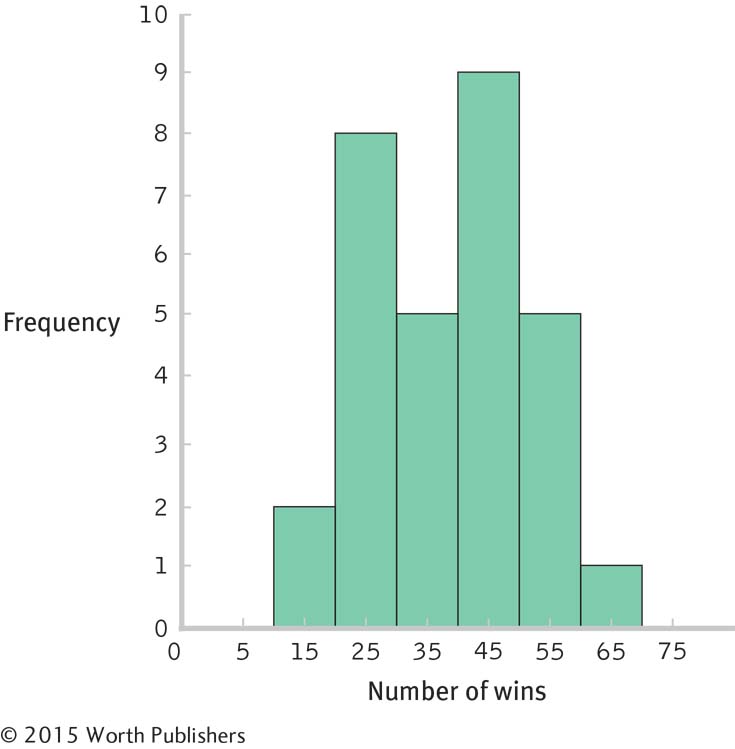

Interval Frequency 60– 69 9 50– 59 8 40– 49 13 30– 39 13 20– 29 8 10– 19 12 0– 9 7 Page C-4There are many possible answers to this question. For example, we might ask whether the prestige of the university or the region of the country is a factor in acceptance rate.

There are no unusual scores, as the distribution is fairly uniform, with frequencies between 6 and 13. The center of the distribution seems to be in the 20–

49 range.

2.33

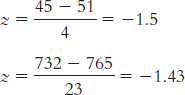

Extroversion scores are most likely to have a normal distribution. Most people would fall toward the middle, with some people having higher levels and some having lower levels.

The distribution of finishing times for a marathon is likely to be positively skewed. The floor is the fastest possible time, a little over 2 hours; however, some runners take as long as 6 hours or more. Unfortunately for the very, very slow but unbelievably dedicated runners, many marathons shut down the finish line 6 hours after the start of the race.

The distribution of numbers of meals eaten in a dining hall in a semester on a three-

meal- a- day plan is likely to be negatively skewed. The ceiling is three times per day, multiplied by the number of days; most people who choose to pay for the full plan would eat many of these meals. A few would hardly ever eat in the dining hall, pulling the tail in a negative direction.

2.35

2.37

A frequency polygon based on these data is likely to be negatively skewed. The scale is 1–

10 and most films are rated above the midpoint. Very few are as low as Gunday. There is more likely to be a ceiling effect. With most films earning high ratings, it seems that the limiting factor is the top score of 10. No film earned the lowest possible score of 1, and few were as low as Gunday’s 1.4. So, there doesn’t seem to be a floor effect of 1.

IMDb ratings don’t seem to be a good way to operationalize movie quality. Audience ratings may be based on something other than how good the film is. In this case, many of those who rated Gunday based their scores on politics rather than on the qualities of the film itself. Another way to operationalize movie quality is a rating based on critics’ reviews, such as the system used by rottentomatoes.com. This site provides an average rating from critics, based on published reviews, in addition to one by movie audiences. Critics are unlikely to rate a movie simply based on politics.

2.39

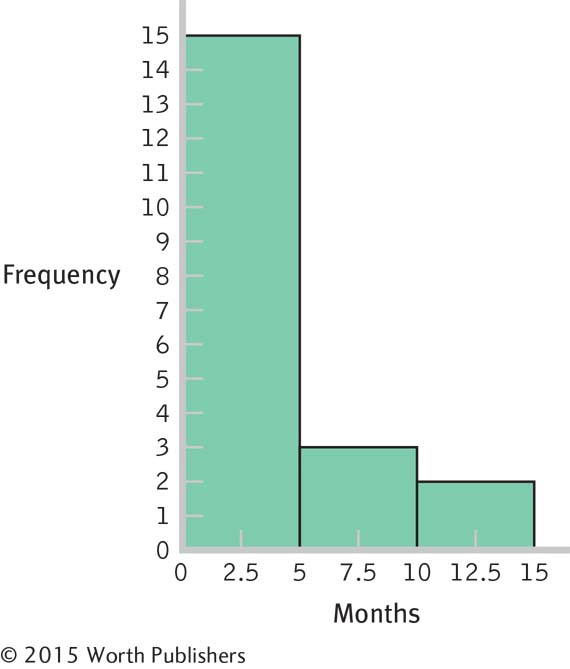

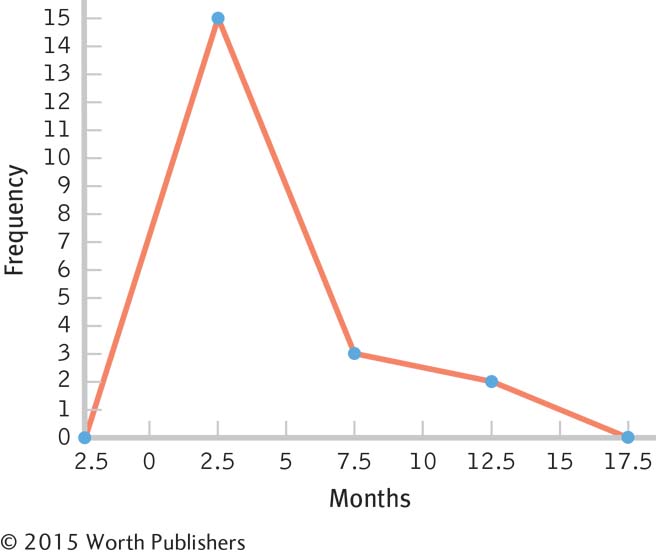

Months Frequency Percentage 12 1 5 11 0 0 10 1 5 9 1 5 8 0 0 7 1 5 6 1 5 5 0 0 4 1 5 3 4 20 2 2 10 1 3 15 0 5 25 Page C-5

Interval Frequency 10– 14 months 2 5– 9 months 3 0– 4 months 15

These data are centered around the 3-

month period, with positive skew extending the data out to the 12- month period. The bulk of the data would need to be shifted from the 3-

month period to approximately 12 months, so the women who have breast- fed for 3 months so far might be the focus of attention. Perhaps early contact at the hospital and at follow- up visits after birth would help encourage mothers to breast- feed, and to breast- feed longer. One could also consider studying the women who create the positive skew to learn what unique characteristics or knowledge they have that influenced their behavior.

2.41

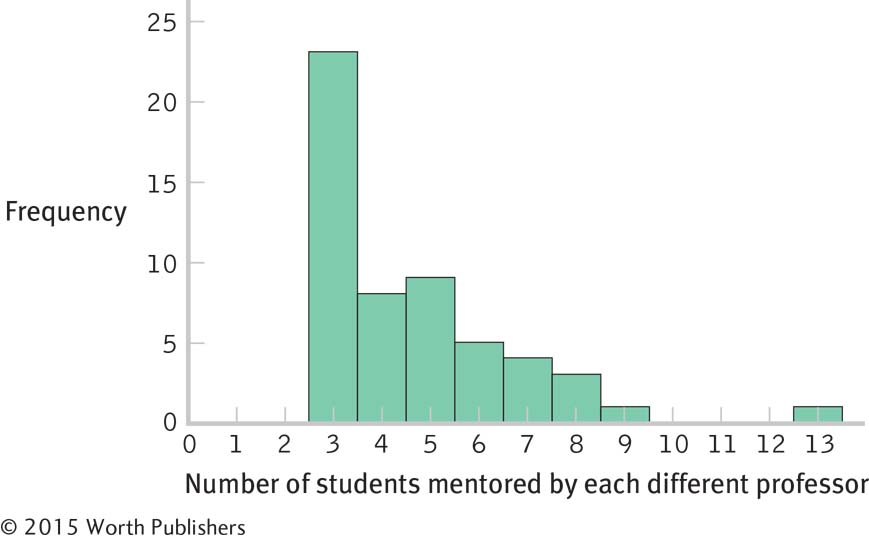

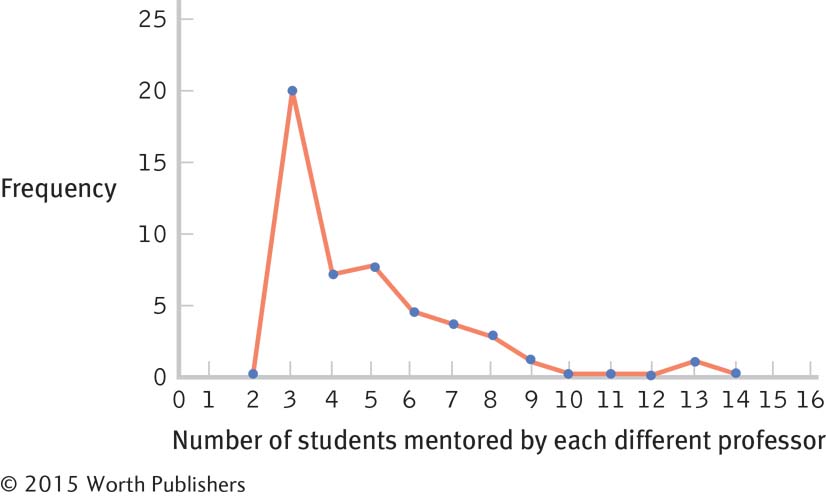

Former Students Now in Top Jobs Frequency Percentage 13 1 1.85 12 0 0.00 11 0 0.00 10 0 0.00 9 1 1.85 8 3 5.56 7 4 7.41 6 5 9.26 5 9 16.67 4 8 14.81 3 23 42.59 Page C-6

This distribution is positively skewed.

The researchers operationalized the variable of mentoring success as numbers of students placed into top professorial positions. There are many other ways this variable could have been operationalized. For example, the researchers might have counted numbers of student publications while in graduate school or might have asked graduates to rate their satisfaction with their graduate mentoring experiences.

The students might have attained their positions as professors because of the prestige of their advisor, not because of his mentoring.

There are many possible answers to this question. For example, the attainment of a top professorial position might be predicted by the prestige of the institution, the number of publications while in graduate school, or the graduate student’s academic ability.

Chapter 3

3.1 The five techniques for misleading with graphs are the biased scale lie, the sneaky sample lie, the interpolation lie, the extrapolation lie, and the inaccurate values lie.

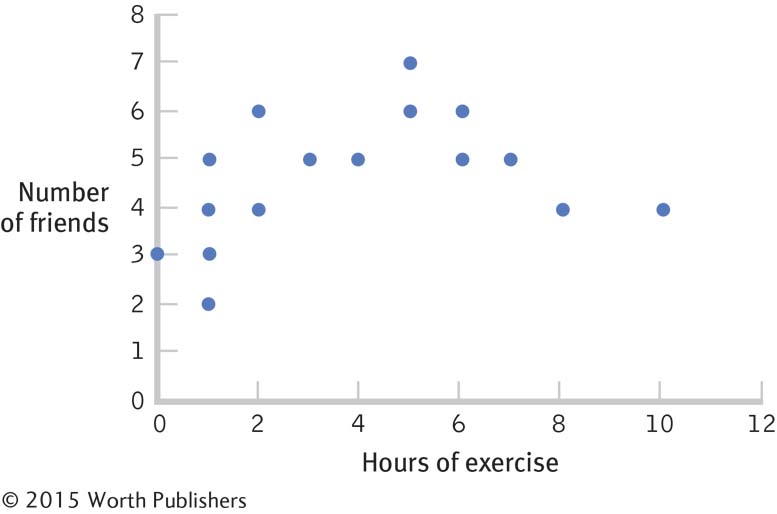

3.3 To convert a scatterplot to a range-

3.5 With scale data, a scatterplot allows for a helpful visual analysis of the relation between two variables. If the data points appear to fall approximately along a straight line, the variables may have a linear relation. If the data form a line that changes direction along its path, the variables may have a nonlinear relation. If the data points show no particular relation, it is possible that the two variables are not related.

3.7 A bar graph is a visual depiction of data in which the independent variable is nominal or ordinal and the dependent variable is scale. Each bar typically represents the mean value of the dependent variable for each category. A Pareto chart is a specific type of bar graph in which the categories along the x-axis are ordered from highest bar on the left to lowest bar on the right.

3.9 A pictorial graph is a visual depiction of data typically used for a nominal independent variable with very few levels (categories) and a scale dependent variable. Each level uses a picture or symbol to represent its value on the scale dependent variable. A pie chart is a graph in the shape of a circle, with a slice for every level. The size of each slice represents the proportion (or percentage) of each category. In most cases, a bar graph is preferable to a pictorial graph or a pie chart.

3.11 The independent variable typically goes on the horizontal x-axis and the dependent variable goes on the vertical y-axis.

3.13 Moiré vibrations are any visual patterns that create a distracting impression of vibration and movement. A grid is a background pattern, almost like graph paper, on which the data representations, such as bars, are superimposed. Ducks are features of the data that have been dressed up to be something other than merely data.

3.15 Like a traditional scatterplot, the locations of the points on the bubble graph simultaneously represent the values that a single case (or country) has on two scale variables. The graph as a whole depicts the relation between these two variables.

3.17 Total dollars donated per year is scale data. A time plot would nicely show how donations varied across years.

3.19

The independent variable is gender and the dependent variable is video game score.

Nominal

Scale

The best graph for these data would be a bar graph because there is a nominal independent variable and a scale dependent variable.

3.21 Linear, because the data could be fit with a line drawn from the upper-

3.23

Bar graph

Line graph; more specifically, a time plot

The y-axis should go down to 0.

The lines in the background are grids, and the three-

dimensional effect is a type of duck. 3.20%, 3.22%, 2.80%

If the y-axis started at 0, all of the bars would appear to be about the same height. The differences would be minimized.

3.25 The minimum value is 0.04 and the maximum is 0.36, so the axis could be labeled from 0.00 to 0.40. We might choose to mark every 0.05 value:

0.00 0.05 0.10 0.15 0.20 0.25 0.30 0.35 0.40

3.27 The relation between physical health and positive emotions seems to be positive, with the data fitting a line moving from the lower-

3.29

The independent variable is height and the dependent variable is attractiveness. Both are scale variables.

The best graph for these data would be a scatterplot (which also might include a line of best fit if the relation is linear) because there are two scale variables.

It would not be practical to start the axis at 0. With the data clustered from 58 to 71 inches, a 0 start to the axis would mean that a large portion of the graph would be empty. We would use cut marks to indicate that the axis did not include all values from 0 to 58. (However, we would include the full range of data—

0 to 71— if omitting some of these numbers would be misleading.)

3.31

The independent variable is country and the dependent variable is male suicide rate.

Country is a nominal variable and suicide rate is a scale variable.

The best graph for these data would be a bar graph or a Pareto chart. Because there are six categories or countries to list along the x-axis, it may be best to arrange them in order from highest to lowest using a Pareto chart.

A time series plot could show year on the x-axis and suicide rate on the y-axis. Each country would be represented by a different color line.

3.33

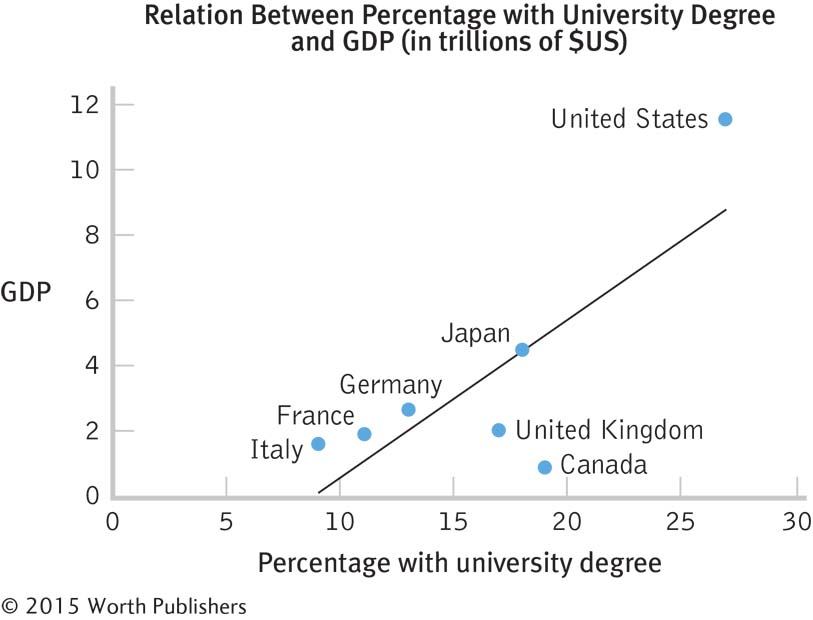

The percentage of residents with a university degree appears to be related to GDP. As the percentage with a university degree increases, so does GDP.

It is possible that an educated populace has the skills to make that country productive and profitable. Conversely, it is possible that a productive and profitable country has the money needed for the populace to be educated.

3.35

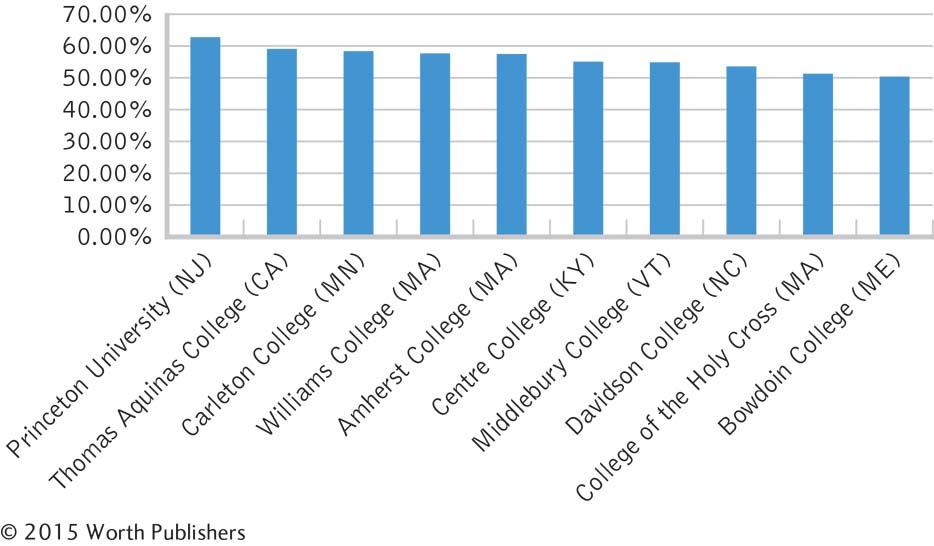

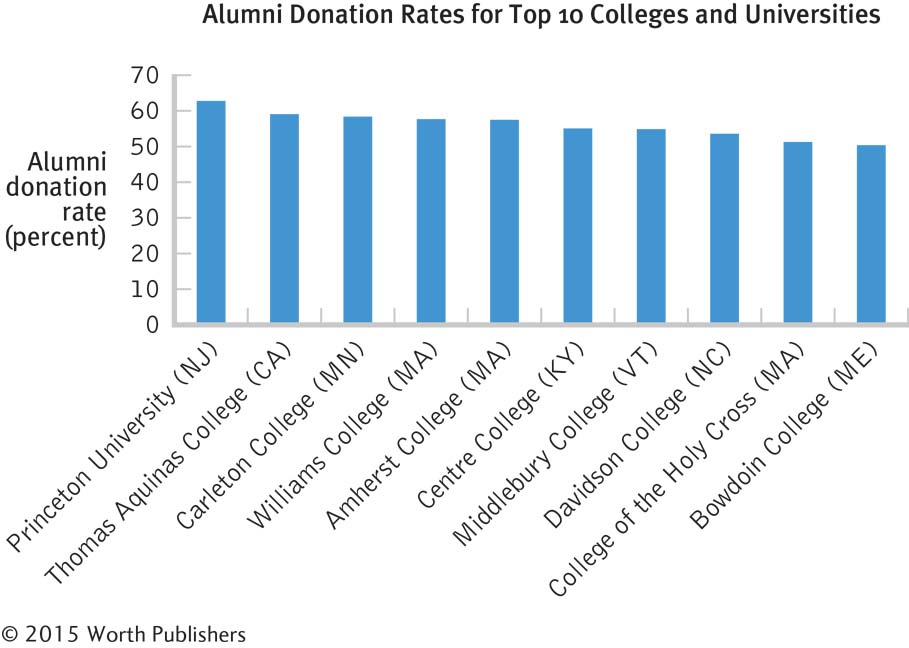

The independent variable is the academic institution. It is nominal; the levels are the 10 colleges.

The dependent variable is alumni donation rate. It is a scale variable; the units are percentages, and the range of values is from 50.2 to 62.6.

The defaults will differ, depending on which software is used. Here is one example.

The redesigns will differ, depending on which software is used. In this example, we added a clear title and labeled the y-axis (being sure that it reads from left to right). We also eliminated the unnecessary lines in the background and the decimal places of each number on the y-axis.

There are many possible answers to this question. The researcher might want to identify characteristics of alumni who donate, methods of soliciting donations that result in the best outcomes, or characteristics of universities that have the highest donation rates.

Pictures could be used instead of bars. For example, dollar signs might be used to represent the donation rate for each college.

If the dollar signs become wider as they get taller, as often happens with pictorial graphs, the overall size would be proportionally larger than the increase in donation rate it is meant to represent. A bar graph is not subject to this problem because graphmakers are not likely to make bars wider as they get taller.

3.37

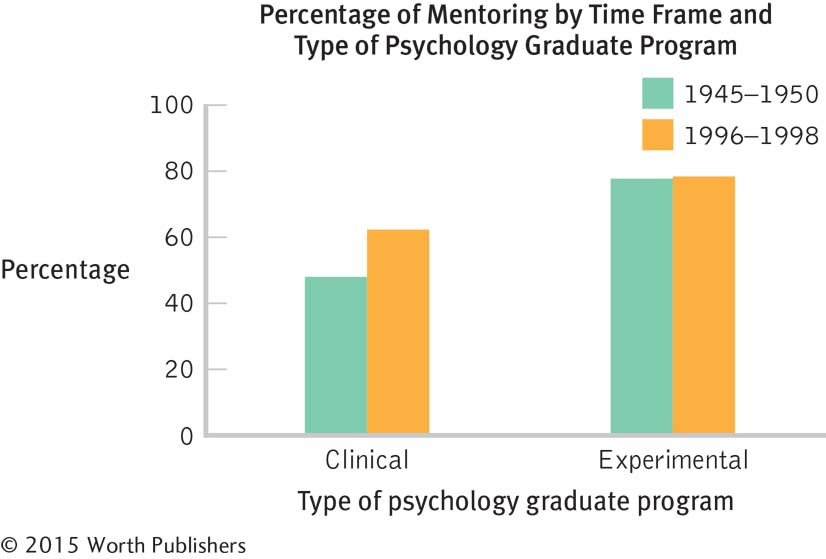

One independent variable is time frame; it has two levels: 1945–

1950 and 1996– 1998. The other independent variable is type of graduate program; it also has two levels: clinical psychology and experimental psychology. The dependent variable is percentage of graduates who had a mentor while in graduate school.

Page C-8

These data suggest that clinical psychology graduate students were more likely to have been mentored if they were in school in the 1996–

1998 time frame than if they were in school during the 1945– 1950 time frame. There does not appear to be such a difference among experimental psychology students. This was not a true experiment. Students were not randomly assigned to time period or type of graduate program.

A time series plot would be inappropriate with so few data points. It would suggest that we could interpolate between these data points. It would suggest a continual increase in the likelihood of being mentored among clinical psychology students, as well as a stable trend, albeit at a high level, among experimental psychology students.

The story based on two time points might be falsely interpreted as a continual increase of mentoring rates for the clinical psychology students and a plateau for the experimental psychology students. The expanded data set suggests that the rates of mentoring have fluctuated over the years. Without the four time points, we might be seduced by interpolation into thinking that the two scores represent the end points of a linear trend. We cannot draw conclusions about time points for which we have no data—

especially when we have only two points, but even when we have more points.

3.39

The details will differ, depending on the software used. Here is one example.

The default options that students choose to override will differ. For the bar graph below, we (1) added a title, (2) labeled the x-axis, (3) labeled the y-axis, (4) rotated the y-axis label so that it reads from left to right, and (5) eliminated the unnecessary key.

3.41

The graph is a scatterplot: individual points are identified for two scale variables—

academic standing and “hotness.” The variables are academic standing and “hotness.”

The graph could be redesigned to get rid of moiré vibrations, such as the colored background; and the grid (the background pattern of graph paper) and duck (the woman in the background image) could be eliminated.

3.43 Each student’s advice will differ. The following are examples of advice.

Business and women: Eliminate all the pictures, including the woman, piggy banks, the dollar signs in the background, and the icons to the right (e.g., house). The two bars near the top could mislead us into thinking they indicated quantity, even though they are the same length for two different median wages. Either eliminate the bars or size them so that they are appropriate to the dollars they represent. Ideally, the two median wages would be presented in a bar graph. Eliminate unnecessary words (e.g., “The Mothers of Business Invention”).

Workforce participation: Eliminate all the pictures. A falling line in the art shown indicates an increase in percentage; notice that 40% is at the top and 80% is at the bottom. Make the y-axis go from highest to lowest, starting from 0. Make the lines easier to compare by eliminating the three-

dimensional effect. Make it clear where the data point for each year falls by including a tick mark for each number on the x-axis.

3.45

The graph proposes that Type I regrets of action are initially intense but decline over the years, while Type II regrets of inaction are initially mild but become more intense over the years.

There are two independent variables: type of regret (a nominal variable) and age (a scale variable).There is one dependent variable: intensity of regrets (also a scale variable).

Page C-9This is a graph of a theory. No data have been collected, so there are no statistics of any kind.

The story that this theoretical relation suggests is that regrets over things a person has done are intense shortly after the actual behavior but decline over the years. In contrast, regrets over things a person has not done but wishes they had are initially low in intensity but become more intense as the years go by.

3.47

These data tell us that most domestic Canadian students—

59%—strongly agreed or somewhat agreed that international students have improved their universities’ reputations. Far fewer— 15%—strongly disagreed or somewhat disagreed with this statement, and 27% reserved judgment for some reason. To understand this pie chart, we have to look back and forth between the label and each “pie slice” that it describes. We then need to mentally compare the various percentages in the graph. A bar graph would allow for easier comparisons among the possible responses.

In this case, it makes sense to keep the possible responses in order from most negative to most positive (with the catch-

all “other” category at the end). If we arranged the bars in order of height— somewhat disagree, not sure/don’t know/N/A, strongly disagree, somewhat agree, and strongly agree— the story is not as easy to understand. This graph allows us to “see” that Canadian students tend to hold positive opinions toward the effect of international students on their universities’ reputations.

3.49

Data can almost always be presented more clearly in a bar graph or table than in a pie chart.

Answers to this question should include revising the data to add up to 100%, removing chartjunk (e.g., colors, shading, background images), and more clearly labeling categories with candidate names only. The graph also should not have 3-

D features.

3.51

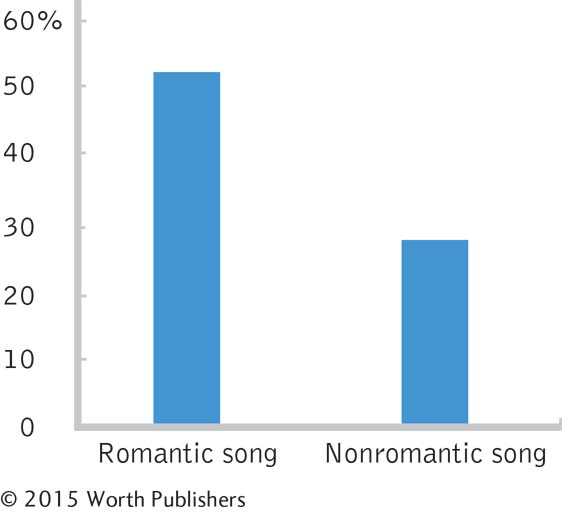

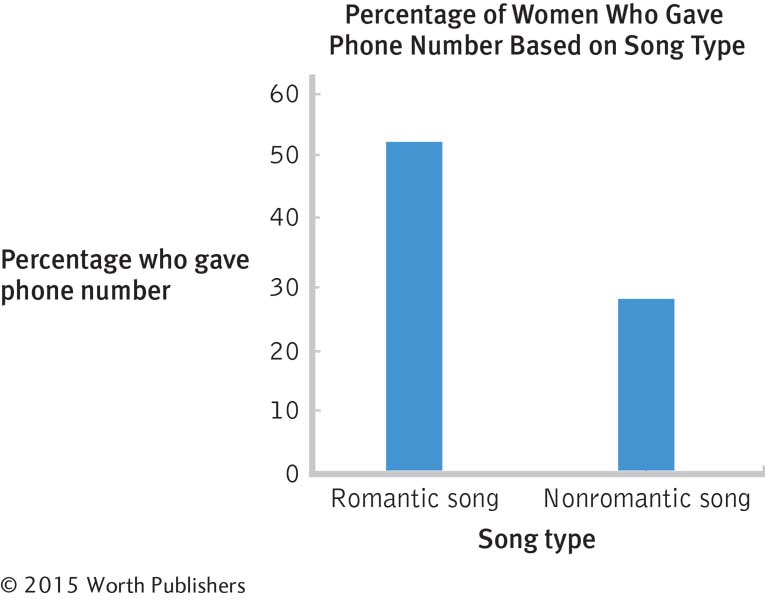

The independent variable is song type, with two levels: romantic song and nonromantic song.

The dependent variable is dating behavior.

This is a between-

groups study because each participant is exposed to only one level or condition of the independent variable. Dating behavior was operationalized by giving one’s phone number to an attractive person of the opposite sex. This may not be a valid measure of dating behavior, as we do not know if the participant actually intended to go on a date with the researcher. Giving one’s phone number might not necessarily indicate an intention to date.

We would use a bar graph because there is one nominal independent variable and one scale dependent variable.

The default graph will differ, depending on which software is used. Here is one example:

The default options that students choose to override will differ. Here is one example.

Chapter 4

4.1 The mean is the arithmetic average of a group of scores; it is calculated by summing all the scores and dividing by the total number of scores. The median is the middle score of all the scores when a group of scores is arranged in ascending order. If there is no single middle score, the median is the mean of the two middle scores. The mode is the most common score of all the scores in a group of scores.

4.3 The mean takes into account the actual numeric value of each score. The mean is the mathematic center of the data. It is the center balance point in the data, such that the sum of the deviations (rather than the number of deviations) below the mean equals the sum of deviations above the mean.

4.5 The mean might not be useful in a bimodal or multimodal distribution because in a bimodal or multimodal distribution the mathematical center of the distribution is not the number that describes what is typical or most representative of that distribution.

4.7 The mean is affected by outliers because the numeric value of the outlier is used in the computation of the mean. The median typically is not affected by outliers because its computation is based on the data in the middle of the distribution, and outliers lie at the extremes of the distribution.

4.9 The standard deviation is the typical amount each score in a distribution varies from the mean of the distribution.

4.11 The standard deviation is a measure of variability in terms of the values of the measure used to assess the variable, whereas the variance is squared values. Squared values simply don’t make intuitive sense to us, so we take the square root of the variance and report this value, the standard deviation.

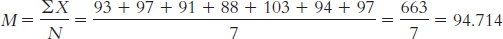

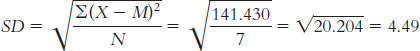

4.13

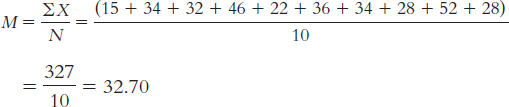

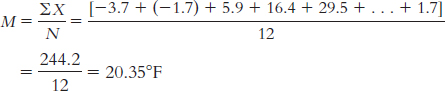

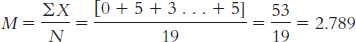

The mean is calculated:

The median is found by arranging the scores in numeric order—

15, 22, 28, 28, 32, 34, 34, 36, 46, 52— then dividing the number of scores, 10, by 2 and adding 1/2 to get 5.5. The mean of the 5th and 6th score in the ordered list of scores is the median— (32 + 34)/2 = 33— so 33 is the median. The mode is the most common score. In these data, two scores appear twice, so we have two modes, 28 and 34.

Adding the value of 112 to the data changes the calculation of the mean in the following way:

(15 + 34 + 32 + 46 + 22 + 36 + 34 + 28 + 52 + 28 + 112)/11 = 439/11 = 39.91

The mean gets larger with this outlier.

There are now 11 data points, so the median is the 6th value in the ordered list, which is 34.

The modes are unchanged at 28 and 34.

This outlier increases the mean by approximately 7 values; it increases the median by 1; and it does not affect the mode at all.

The range is: Xhighest − Xlowest = 52 − 15 = 37

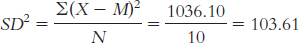

The variance is:

We start by calculating the mean, which is 32.70. We then calculate the deviation of each score from the mean and the square of that deviation.

X X − M (X − M )2 15 − 17.70 313.29 34 1.30 1.69 32 − 0.70 0.49 46 13.30 176.89 22 − 10.70 114.49 36 3.30 10.89 34 1.30 1.69 28 − 4.70 22.09 52 19.30 372.49 28 − 4.70 22.09

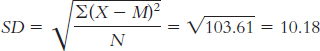

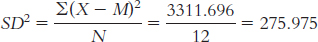

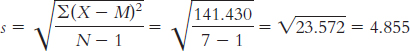

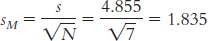

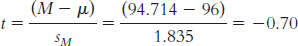

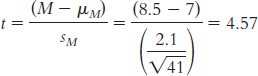

The standard deviation is:

or

or

4.15

The mean is calculated as:

The median is found by arranging the temperatures in numeric order:

− 3.7, − 1.7, 1.7, 5.9, 13.6, 16.4, 24, 29.5, 34.6, 38.5, 42.1, 43.3

There are 12 data points, so the mean of the 6th and 7th data points gives us the median: (16.4 + 24)/2 = 20.20°F.

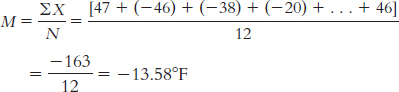

The mean is calculated as:

The median is found by arranging the temperatures in numeric order:

− 47, − 46, − 46, − 38, − 20, − 20, − 5, − 2, 8, 9, 20, 24

There are 12 data points, so the mean of the 6th and 7th data points gives us the median: [ − 20 + − 5]/2 = − 25/2 = − 12.50°F.

There are two modes: both − 46 and − 20 were recorded twice.

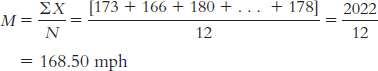

The mean is calculated as:

Page C-11

Page C-11The median is found by arranging the wind gusts in numeric order:

136, 142, 154, 161, 163, 164, 166, 173, 174, 178, 180, 231

There are 12 data points, so the mean of the 6th and 7th data points gives us the median: (164 + 166)/2 = 165 mph.

There is no mode among these wind gusts.

For the wind gust data, we could create 10 mph intervals and calculate the mode as the interval that occurs most often. There are four recorded gusts in the 160–

169 mph interval, three in the 170– 179 interval, and only one in the other intervals. So, the 160– 169 mph interval could be presented as the mode. The range is: Xhighest − Xlowest = 43.3 − ( − 3.7) = 47°F

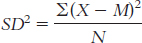

The variance is:

We start by calculating the mean, which is 20.35°F. We then calculate the deviation of each score from the mean and the square of that deviation.

X X − M (X − M )2 − 3.7 − 24.05 578.403 − 1.7 − 22.05 486.203 5.9 − 14.45 208.803 16.4 − 3.95 15.603 29.5 9.15 83.723 38.5 18.15 329.423 43.3 22.95 526.703 42.1 21.75 473.063 34.6 14.25 203.063 24 3.65 13.323 13.6 − 6.75 45.563 1.7 − 18.65 347.823 The variance is:

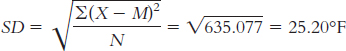

The standard deviation is:

or

or

The range is Xhighest − Xlowest = 24 − ( − 47) = 71°F

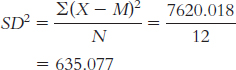

The variance is:

We already calculated the mean, − 13.583°F. We now calculate the deviation of each score from the mean and the square of that deviation.

X X − M (X − M )2 − 47 − 33.417 1116.696 − 46 − 32.417 1050.862 − 38 − 24.417 596.190 − 20 − 6.417 41.178 − 2 11.583 134.166 8 21.583 465.826 24 37.583 1412.482 20 33.583 1127.818 9 22.583 509.992 − 5 8.583 73.668 − 20 − 6.417 41.178 − 46 − 32.417 1050.862 The variance is:

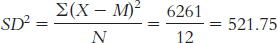

The standard deviation is:

or

or

For the peak wind gust data, the range is Xhighest − Xlowest = 231 − 136 = 95 mph

The variance is:

We start by calculating the mean, which is 168.50 mph. We then calculate the deviation of each score from the mean and the square of that deviation.

X X − M (X − M )2 173 4.50 20.25 166 − 2.50 6.25 180 11.50 132.25 231 62.50 3906.25 164 − 4.50 20.25 136 − 32.50 1056.25 154 − 14.50 210.25 142 − 26.50 702.25 174 5.50 30.25 161 − 7.50 56.25 163 − 5.50 30.25 178 9.50 90.25 Page C-12The variance is:

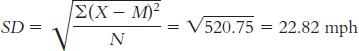

The standard deviation is:

or

or

4.17 The mean for salary is often greater than the median for salary because the high salaries of top management inflate the mean but not the median. If we are trying to attract people to our company, we may want to present the typical salary as whichever value is higher—

4.19 There are few participants in this study (only seven) so a single extreme score would influence the mean more than it would influence the median. The median is a more trustworthy indicator than the mean when there is only a handful of scores.

4.21 In April 1934, a wind gust of 231 mph was recorded. This data point is rather far from the next closest record of 180 mph. If this extreme score were excluded from analyses of central tendency, the mean would be lower, the median would change only slightly, and the mode would be unaffected.

4.23 There are many possible answers to this question. All answers will include a distribution that is skewed, perhaps one that has outliers. A skewed distribution would affect the mean but not the median. One example would be the variable of number of foreign countries visited; the few jet-

4.25

These ads are likely presenting outlier data.

To capture the experience of the typical individual who uses the product, the ad could include the mean result and the standard deviation. If the distribution of outcomes is skewed, it would be best to present the median result.

4.27

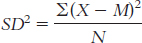

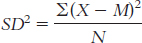

The formula for variance is

We start by creating three columns: one for the scores, one for the deviations of the scores from the mean, and one for the squares of the deviations.

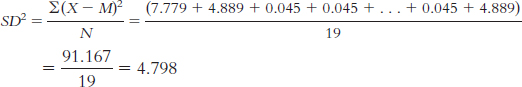

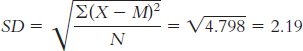

We can now calculate variance:

X X − M (X − M )2 0 − 2.789 7.779 5 2.211 4.889 3 0.211 0.045 3 0.211 0.045 1 − 1.789 3.201 10 7.211 51.999 2 − 0.789 0.623 2 − 0.789 0.623 3 0.211 0.045 1 − 1.789 3.201 2 − 0.789 0.623 4 1.211 1.467 2 − 0.789 0.623 1 − 0.789 3.201 1 − 0.789 3.201 1 − 0.789 3.201 4 1.211 1.467 3 0.211 0.045 5 2.211 4.889 We calculate standard deviation the same way we calculate variance, but we then take the square root:

The typical score is around 2.79, and the typical deviation from 2.79 is around 2.19.

4.29 There are many possible answers to these questions. The following are only examples.

70, 70. There is no skew; the mean is not pulled away from the median.

80, 70. There is positive skew; the mean is pulled up, but the median is unaffected.

60, 70. There is negative skew; the mean is pulled down, but the median is unaffected.

4.31

Because the policy for which violations were issued changed during this time frame, we cannot make accurate comparisons before and after Hurricane Sandy. The conditions for issuing violations were not constant; thus, the policy change would be a likely explanation for a change in the data.

Page C-13The removal of violations in Zone A, which appears to have been most affected by infestations after the hurricane, would result in eliminating an otherwise extreme number, or outlier, of issued violations. This would lead to inaccurate data as it does not accurately portray the number of rat violations, only the number of rat violations issued under the current policy.

4.33 It would probably be appropriate to use the mean because the data are scale; we would assume we have a large number of data points available to us; and the mean is the most commonly used measure of central tendency. Because of the large amount of data available, the effect of outliers is minimized. All of these factors would support the use of the mean for presenting information about the heights or weights of large numbers of people.

4.35 We cannot directly compare the mean ages reported by Canada with the median ages reported by the United States because it is likely that there were some older outliers in both Canada and the United States, and these outliers would affect the means reported by Canada much more than they would affect the medians reported by the United States.

4.37

The researchers reported an increase in early literacy among students in the intervention group (those whose parents received the text messages) as compared with the students who were not in the intervention group (those whose parents did not receive texts). The intervention seemed to work. That is, those in the intervention group as a whole ended up higher in literacy skills as compared with the mean for the nonintervention group. The increase was between 0.21 and 0.34 deviations. We know that the standard deviation indicates the difference of a typical student from the mean. So, the shift for the group as a whole is not as big as the amount that the typical student differs from the mean. It’s just part of a standard deviation.

The researchers used a between-

groups design because each student could only be in one group— either the group in which parents received the text messages or the group in which the parents did not receive the text messages.

4.39

Interval Frequency 60– 69 1 50– 59 5 40– 49 9 30– 39 5 20– 29 8 10– 19 2

With 30 scores, the median would be between the 15th and 16th scores: (30/2) + 0.5 + 15.5. The 15th and 16th scores are 39 and 40, respectively, so the median is 39.50. The mode is 29; there are three scores of 29.

Software reports that the range is 42 and the standard deviation is 11.59.

The summary will differ for each student but should include the following information: The data appear to be roughly symmetric and unimodal, maybe a bit negatively skewed. There are no glaring outliers.

Answers will vary. One example is whether number of wins is related to the average age of a team’s players.

Chapter 5

5.1 It is rare to have access to an entire population. That is why we study samples and use inferential statistics to estimate what is happening in the population.

5.3 Generalizability refers to the ability of researchers to apply findings from one sample or in one context to other samples or contexts.

5.5 Random sampling means that every member of a population has an equal chance of being selected to participate in a study. Random assignment means that each selected participant has an equal chance of being in any of the experimental conditions.

5.7 Random assignment is a process in which every participant (regardless of how he or she was selected) has an equal chance of being in any of the experimental conditions. This avoids bias across experimental conditions.

5.9 An illusory correlation is a belief that two events are associated when in fact they are not.

5.11 Students’ answers will vary. Personal probability is a person’s belief about the probability of an event occurring; for example, someone’s belief about the likelihood that she or he will complete a particular task.

5.13 In reference to probability, the term trial refers to each occasion that a given procedure is carried out. For example, each time we flip a coin, it is a trial. Outcome refers to the result of a trial. For coin-

5.15 The independent variable is the variable the researcher manipulates. Independent trials or events are those that do not affect each other; the flip of a coin is independent of another flip of a coin because the two events do not affect each other.

5.17 A null hypothesis is a statement that postulates that there is no mean difference between populations or that the mean difference is in a direction opposite of that anticipated by the researcher. A research hypothesis, also called an alternative hypothesis, is a statement that postulates that there is a mean difference between populations or sometimes, more specifically, that there is a mean difference in a certain direction, positive or negative.

5.19 We commit a Type I error when we reject the null hypothesis but the null hypothesis is true. We commit a Type II error when we fail to reject the null hypothesis but the null hypothesis is false.

5.21 In each of the six groups of 10 passengers that go through the checkpoint, we would check the 9th, 9th, 10th, 1st, 10th, and 8th passengers, respectively.

5.23 Only recording the numbers 1 to 5, the sequence appears as 5, 3, 5, 5, 2, 2, and 2. So, the first person is assigned to the fifth condition, the second person to the third condition, and so on.

5.25 Illusory correlation is particularly dangerous because people might perceive there to be an association between two variables that does not in fact exist. Because we often make decisions based on associations, it is important that those associations be real and be based on objective evidence. For example, a parent might perceive an illusory correlation between body piercings and trustworthiness, believing that a person with a large number of body piercings is untrustworthy. This illusory correlation might lead the parent to unfairly eliminate anyone with a body piercing from consideration when choosing babysitters.

5.27 The probability of winning is estimated as the number of people who have already won out of the total number of contestants, or 8/266 = 0.03.

5.29

0.627

0.003

0.042

5.31

Expected relative-

frequency probability Personal probability

Personal probability

Expected relative-

frequency probability

5.33 Most of us believe we can think randomly. However, it is extremely difficult for us to come up with a string of four numbers in which we determined each of the numbers completely independently. We may choose numbers with some meaning for us, perhaps without even realizing we are doing so. We also tend to consider the previous numbers when we come up with each new one. As the BBC article reported, people are lazy when it comes to choosing PINs and passwords. “They use birthdays, wedding days, the names of siblings or children or pets. They use their house number, street name or pick on a favourite pop star” (Ward, 2013). So, the best advice would be to let a random numbers table choose your PIN.

5.35

The independent variable is type of news information, with two levels: information about an improving job market and information about a declining job market.

The dependent variable is psychologists’ attitudes toward their careers.

The null hypothesis would be that, on average, the psychologists who received the positive article about the job market have the same attitude toward their career as those who read a negative article about the job market. The research hypothesis would be that a difference, on average, exists between the two groups.

5.37 Although we all believe we can think randomly if we want to, we do not, in fact, generate numbers independently of the ones that came before. We tend to glance at the preceding numbers in order to make the next ones “random.” Yet once we do this, the numbers are not independent and therefore are not random. Moreover, even if we can keep ourselves from looking at the previous numbers, the numbers we generate are not likely to be random. For example, if we were born on the 6th of the month, then we may be more likely to choose 6’s than other digits. Humans just don’t think randomly.

5.39

The typical study volunteer is likely someone who cares deeply about U.S. college football. Moreover, it is particularly the fans of the top ACC teams, who themselves are likely extremely biased, who are most likely to vote.

External validity refers to the ability to generalize beyond the current sample. In this case, it is likely that fans of the top ACC teams are voting and that the poll results do not reflect the opinions of U.S. college football fans at large.

There are several possible answers to this question. As one example, only eight options were provided. Even though one of these options was “other,” this limited the range of possible answers that respondents would be likely to provide. The sample is also biased in favor of those who know about and would spend time at the USA Today Web site in the first place.

5.41

These numbers are likely not representative. This is a volunteer sample.

Those most likely to volunteer are those who have stumbled across, or searched for, this Web site: a site that advocates for self-

government. Those who respond are more likely to tend toward supporting self- government than are those who do not respond (or even find this Web site). Page C-15This description of libertarians suggests they would advocate for self-

government— as that is part of the name of the group that hosts this quiz— a likely explanation for the predominance of libertarians who responded to this survey. The repeated use of the word “Libertarian” (in the heading and in the icon) likely helps preselect who would come to this Web site in the first place. It doesn’t matter how large a sample is if it’s not representative. With respect to external validity, it would be far preferable to have a smaller but representative sample than a very large but unrepresentative sample.

5.43 Your friend’s bias is an illusory correlation—

5.45 If a depressed person has negative thoughts about himself or herself and about the world, confirmation bias may make it difficult to change those thoughts because confirmation bias would lead this person to pay more attention to and better remember negative events than positive events. For example, he or she might remember the one friend who slighted him or her at a party but not the many friends who were excited to see him or her.

5.47

Probability refers to the proportion of Waldos that we expect to see in these two 1.5-

inch bands in the long run. In the long run, given 53% of Waldos falling in these bands, we would expect the proportion of Waldos to be 0.53. Proportion refers to the observed fraction of Waldos in these bands—

the number of successes (Waldo in one of these bands) divided by the number of trials (total Waldo illustrations used). In this case, the proportion of Waldos in one of these bands is 0.53. Percentage refers to the proportion multiplied by 100: 0.53(100) = 53%, as reported by Blatt in this case. The media often report percentage versions of probabilities.

Although 0.53 is far from 0.3%, Blatt did not analyze every Where’s Waldo? illustration that exists. It does seem that this is more than coincidence, but we might expect a fluctuation in the short run. We can’t know for certain that the Where’s Waldo? game has a bias.

5.49 These polls could be considered independent trials if they were conducted for each state individually, and if the state currently being polled did not have any information about the polling results from other states. However, these are not truly independent trials, as state-

5.51

The null hypothesis is that the average tendency to develop false memories is either unchanged or is lowered by the repetition of false information. The research hypothesis is that false memories are higher, on average, when false information is repeated than when it is not.

The null hypothesis is that the average outcome is the same or worse whether or not structured assessments are used. The research hypothesis is that the average outcome is better when structured assessments are used than when they are not used.

The null hypothesis is that average employee morale is the same whether employees work in enclosed offices or in cubicles. The research hypothesis is that average employee morale is different when employees work in enclosed offices versus in cubicles.

The null hypothesis is that ability to speak one’s native language is the same, on average, whether or not a second language is taught from birth. The research hypothesis is that the ability to speak one’s native language is different, on average, when a second language is taught from birth than when no second language is taught.

5.53

If this conclusion is incorrect, the researcher has made a Type I error. The researcher rejected the null hypothesis when the null hypothesis is really true. (Of course, he or she never knows whether there has been an error! She or he just has to acknowledge the possibility.)

If this conclusion is incorrect, the researcher has made a Type I error. She has rejected the null hypothesis when the null hypothesis is really true.

If this conclusion is incorrect, the researcher has made a Type II error. He has failed to reject the null hypothesis when the null hypothesis is not true.

If this conclusion is incorrect, the researcher has made a Type II error. She has failed to reject the null hypothesis when the null hypothesis is not true.

5.55

Confirmation bias has guided his logic in that he looked for specific events that occurred during the day to fit the horoscope but ignored the events that did not fit the prediction.

If this conclusion is incorrect, they have made a Type I error. Dean and Kelly would have failed to reject the null hypothesis when the null hypothesis is not true.

If an event occurs regularly or a research finding is replicated many times and by other researchers and in a range of contexts, then it is likely the event or finding is not occurring in error or by chance alone.

5.57

The population in which you would be interested is all people who already had read Harry Potter and the Half-

Blood Prince .The sample would be just bel 78. It is dangerous to rely on just one review, bel 78’s testimonial. She clearly felt strongly about the book if she spent the time to post her review. She is not likely to be representative of the typical reader of this book.

This is a large sample, but it is not likely representative of those who had read this book. Not only does this sample consist solely of Amazon users, but it consists of readers who chose to post a review. It is likely that those who took the time to write and post a review were those who felt more strongly about the book than did the typical reader.

In this case, the population of interest would be all Amazon users who had read this book. We would need Amazon to generate a list of everyone who bought the book (something that they would not do because of ethical considerations), and we would have to randomly select a sample from this population. We would then have to identify the people who actually read the book (who may not be the buyers) and elicit the ratings from the randomly selected sample.

Page C-16We could explain that testimonials are typically written by those who feel most strongly about a book. The sample of reviewers, therefore, is unlikely to be representative of the population of readers.

5.59

The population of interest is male students with alcohol problems. The sample is the 64 students who were ordered to meet with a school counselor.

Random selection was not used. The sample was comprised of 64 male students who had been ordered to meet with a school counselor; they were not chosen out of all male students with alcohol problems.

Random assignment was used. Each participant had an equal chance of being assigned to either of the two conditions.

The independent variable is type of counseling. It has two levels: BMI and AE. The dependent variable is number of alcohol-

related problems at follow- up. The null hypothesis is that the mean number of alcohol-

related problems at follow- up is the same, regardless of type of counseling (BMI or AE).The research hypothesis is that students who undergo a BMI have different mean numbers of alcohol- related problems at follow- up than do students who participate in AE. The researchers rejected the null hypothesis.

If the researchers were incorrect in their decision, then they made a Type I error, rejecting the null hypothesis when the null hypothesis is true. The consequences of this type of error are that a new treatment that is no better, on average, than the standard treatment would be implemented. This might lead to unnecessary costs to train counselors to implement the new treatment.

Chapter 6

6.1 In everyday conversation, the word normal is used to refer to events or objects that are common or that typically occur. Statisticians use the word to refer to distributions that conform to a specific bell-

6.3 The distribution of sample scores approaches normal as the sample size increases, assuming the population is normally distributed.

6.5 A z score is a way to standardize data; it expresses how far a data point is from the mean of its distribution in terms of standard deviations.

6.7 The mean is 0 and the standard deviation is 1.0.

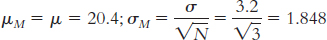

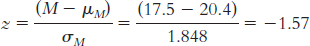

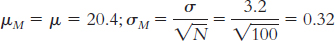

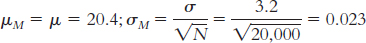

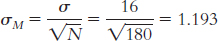

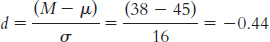

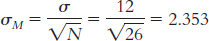

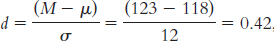

6.9 The symbol μM stands for the mean of the distribution of means. The μ indicates that it is the mean of a population, and the subscript M indicates that the population is composed of sample means—the means of all possible samples of a given size from a particular population of individual scores.

6.11 Standard deviation is the measure of spread for a distribution of scores in a single sample or in a population of scores. Standard error is the standard deviation (or measure of spread) in a distribution of means of all possible samples of a given size from a particular population of individual scores.

6.13 The z statistic tells us how many standard errors a sample mean is from the population mean.

6.15

As the sample size increases, the distribution approaches the shape of the normal curve.

Page C-17

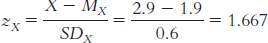

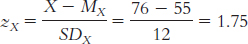

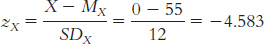

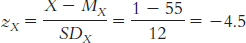

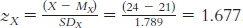

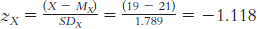

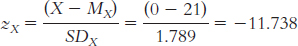

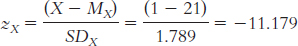

6.17

6.19

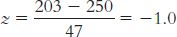

Each of these scores is 47 points away from the mean, which is the value of the standard deviation. The z scores of –1.0 and 1.0 express that the first score, 203, is 1 standard deviation below the mean, whereas the other score, 297, is 1 standard deviation above the mean.

6.21

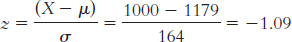

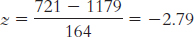

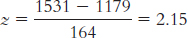

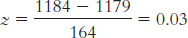

X = z(σ) + μ = − 0.23(164) + 1179 = 1141.28

X = 1.41(164) + 1179 = 1410.24

X = 2.06(164) + 1179 = 1516.84

X = 0.03(164) + 1179 = 1183.92

6.23

X = z(σ) + μ = 1.5(100) + 500 = 650

X = z(σ) + μ = − 0.5(100) + 500 = 450

X = z(σ) + μ = − 2.0(100) + 500 = 300

6.25

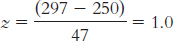

Both of these scores fall below the means of their distributions, resulting in negative z scores. One score (45) is a little farther below its mean than the other (732).

6.27

50%

82% (34 + 34 + 14)

4% (2 + 2)

48% (34 + 14)

100% or nearly 100%

6.29

6.31

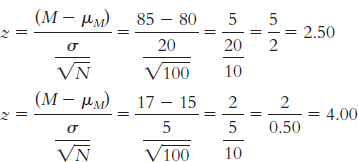

The first sample had a mean that was 2.50 standard deviations above the population mean, whereas the second sample had a mean that was 4 standard deviations above the mean. Compared to the population mean (as measured by this scale), both samples are extreme scores; however, a z score of 4.0 is even more extreme than a z score of 2.5.

6.33

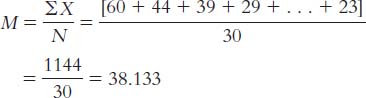

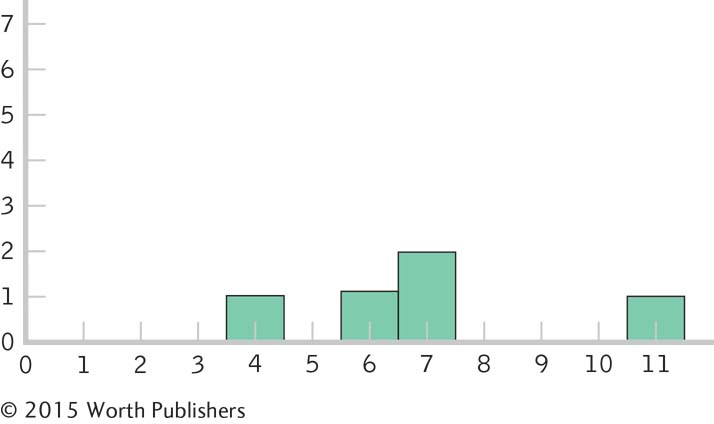

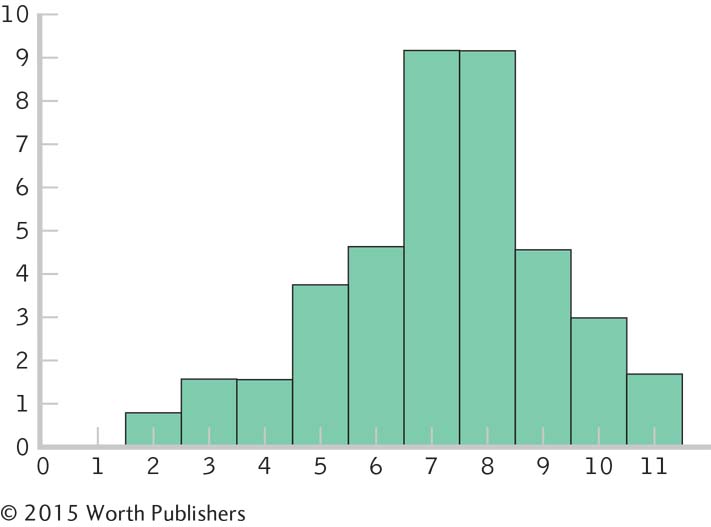

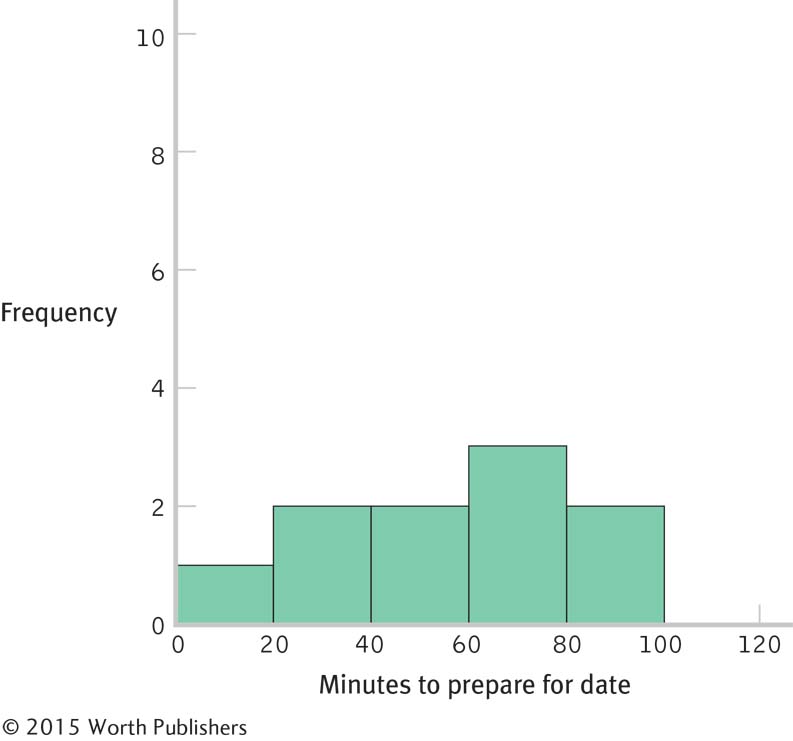

Histogram for the 10 scores:

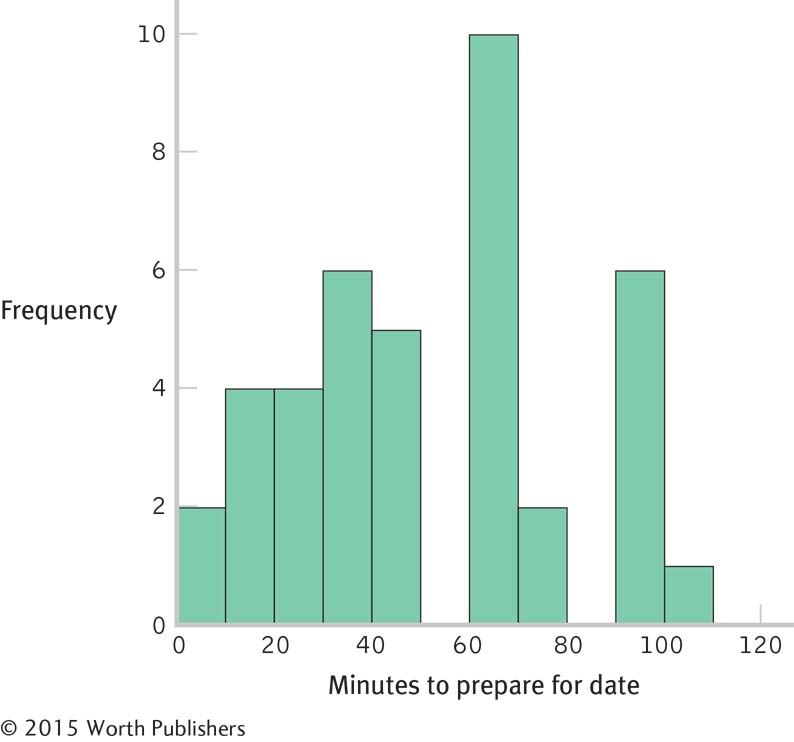

Histogram for the 40 scores:

The shape of the distribution became more normal as the number of scores increased. If we added more scores, the distribution would become more and more normal. This happens because many physical, psychological, and behavioral variables are normally distributed. With smaller samples, this might not be clear. But as the sample size approaches the size of the population, the shape of the sample distribution approaches that of the population.

Page C-18These are distributions of scores, as each individual score is represented in the histograms on its own, not as part of a mean.

There are several possible answers to this question. For example, instead of using retrospective self-

reports, we could have had students call a number or send an e- mail as they began to get ready; they would then have called the same number or sent another e- mail when they were ready. This would have led to scores that would be closer to the actual time it took the students to get ready. There are several possible answers to this question. For example, we could examine whether there was a mean gender difference in time spent getting ready for a date.

6.35

The mean of the z distribution is always 0.

The standard deviation of the z distribution is always 1.

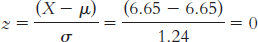

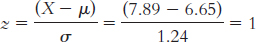

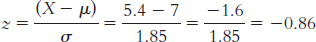

A student 1 standard deviation above the mean would have a score of 6.65 + 1.24 = 7.89. This person’s z score would be:

The answer will differ for each student but will involve substituting one’s own score for X in this equation:

6.37

It would not make sense to compare the mean of this sample to the distribution of individual scores because, in a sample of means, the occasional extreme individual score is balanced by less extreme scores that are also part of the sample. Thus, there is less variability.

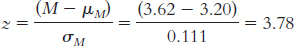

The null hypothesis would state that the population from which the sample was drawn has a mean of 3.20. The research hypothesis would state that the mean for the population from which our sample was drawn is not 3.20.

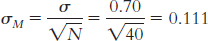

μM = μ = 3.20

6.39

Yes, the distribution of the number of movies college students watch in a year would likely approximate a normal curve. You can imagine that a small number of students watch an enormous number of movies and that a small number watch very few but that most watch a moderate number of movies between these two extremes.

Yes, the number of full-

page advertisements in magazines is likely to approximate a normal curve. We could find magazines that have no or just one or two full- page advertisements and some that are chock full of them, but most magazines have some intermediate number of full- page advertisements. Yes, human birth weights in Canada could be expected to approximate a normal curve. Few infants would weigh in at the extremes of very light or very heavy, and the weight of most infants would cluster around some intermediate value.

6.41 Household income is positively skewed. Most households cluster around a relatively low central tendency, but the 1-

6.43

According to these data, the Falcons had a better regular season (they had a higher z score) than did the Braves.

The Braves would have had to have won 101 regular season games to have a slightly higher z score than the Falcons:

There are several possible answers to this question. For example, we could have summed the teams’ scores for every game (as compared to other teams’ scores within their leagues).

6.45

X = z(σ) + μ = −1.705(11.733) + 81.00 = 61 games (rounded to a whole number)

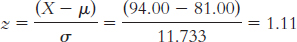

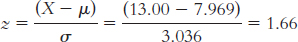

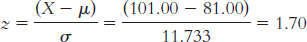

X = z(σ) + μ = −0.319(3.036) + 7.969 = 7 games (rounded to a whole number)

Fifty percent of scores fall below the mean, so 34% (84 − 50 = 34) fall between the mean and the Colts’ score. We know that 34% of scores fall between the mean and a z score of 1.0, so the Colts have a z score of 1.0. X = z(σ) + μ = 1(3.036) + 7.969 = 11 games (rounded to a whole number).

We can examine our answers to be sure that negative z scores match up with answers that are below the mean and positive z scores match up with answers that are above the mean.

6.47

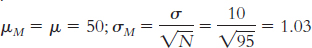

μ = 50; σ = 10

When we calculate the mean of the scores for 95 individuals, the most extreme MMPI-

2 depression scores will likely be balanced by scores toward the middle. It would be rare to have an extreme mean of the scores for 95 individuals. Thus, the spread is smaller than is the spread for all of the individual MMPI- 2 depression scores.

6.49

These are the data for a distribution of scores rather than means because they have been obtained by entering each individual score into the analysis.

Comparing the sizes of the mean and the standard deviation suggests that there is positive skew. A person can’t have fewer than zero friends, so the distribution would have to extend in a positive direction to have a standard deviation larger than the mean.

Page C-19Because the mean is larger than either the median or the mode, it suggests that the distribution is positively skewed. There are extreme scores in the positive end of the distribution that are causing the mean to be more extreme than the median or mode.

You would compare this person to the distribution of scores. When making a comparison of an individual score, we must use the distribution of scores.

You would compare this sample to a distribution of means. When making a comparison involving a sample mean, we must use a distribution of means because it has a different pattern of variability from a distribution of scores (it has less variability).

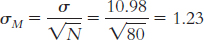

μM = μ = 7.44. The number of individuals in the sample is 80. Substituting 80 in the standard error equation yields

The distribution of means is likely to be a normal curve. Because the sample of 80 is well above the 30 recommended to see the central limit theorem at work, we expect that the distribution of the sample means will approximate a normal distribution.

6.51

You would compare this sample mean to a distribution of means. When we are making a comparison involving a sample mean, we need to use the distribution of means because it is this distribution that indicates the variability we are likely to see in sample means.

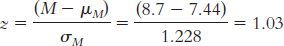

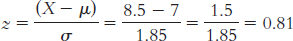

This z statistic of 1.03 is approximately 1 standard deviation above the mean. Because 50% of the sample are below the mean and 34% are between the mean and 1 standard deviation above it, this sample would be at approximately the 84th percentile.

It does make sense to calculate a percentile for this sample. Given the central limit theorem and the size of the sample used to calculate the mean (80), we would expect the distribution of the sample means to be approximately normal.

6.53

The population is all patients treated for blocked coronary arteries in the United States. The sample is Medicare patients in Elyria, Ohio, who received angioplasty.

Medicare and the commercial insurer compared the angioplasty rate in Elyria to that in other towns. Given that the rate was so far above that of other towns, they decided that such a high angioplasty rate was unlikely to happen just by chance. Thus, they used probability to make a decision to investigate.

Medicare and the commercial insurer could look at the z distribution of angioplasty rates in cities from all over the country. Locating the rate of Elyria within that distribution would indicate exactly how extreme or unlikely its angioplasty rates are.

The error made would be a Type I error, as they would be rejecting the null hypothesis that there is no difference among the various towns in rates of angioplasty, and concluding that there is a difference, when there really is no difference.

Elyria’s extremely high rates do not necessarily mean the doctors are committing fraud. One could imagine that an area with a population composed mostly of retirees (that is, more elderly people) would have a higher rate of angioplasty. Conversely, perhaps Elyria has a talented set of surgeons who are renowned for their angioplasty skills and people from all over the country come there to have angioplasty.

6.55

The researchers are operationally defining cheating as the change in standardized test score for a given classroom. This variable is a scale variable.

Researchers could establish a cut-

off z statistic at which those who had a mean change larger than that z statistic would be considered “suspicious.” For example, a classroom with a z statistic of 2 or more may have cheated on this year’s test. A histogram or frequency polygon would provide an easy visual to see where a given classroom falls on the distribution. A researcher could even draw lines indicating the cutoffs and see which classrooms fall beyond them.

They would be committing a Type I error, because they would be rejecting the null hypothesis that there is no difference in a classroom’s test scores from one year to the next when there really is no difference and they should have failed to reject the null hypothesis.

Chapter 7

7.1 A percentile is the percentage of scores that fall below a certain point on a distribution.

7.3 We add the percentage between the mean and the positive z score to 50%, which is the percentage of scores below the mean (50% of scores are on each side of the mean).

7.5 In statistics, assumptions are the characteristics we ideally require the population from which we are sampling to have so that we can make accurate inferences.

7.7 Parametric tests are statistical analyses based on a set of assumptions about the population. By contrast, nonparametric tests are statistical analyses that are not based on assumptions about the population.

7.9 Critical values, often simply called cutoffs, are the test statistic values beyond which we reject the null hypothesis. The critical region refers to the area in the tails of the distribution in which the null hypothesis will be rejected if the test statistic falls there.

7.11 A statistically significant finding is one in which we have rejected the null hypothesis because the pattern in the data differed from what we would expect by chance. The word significant has a particular meaning in statistics. “Statistical significance” does not mean that the finding is necessarily important or meaningful. Statistical significance only means that we are justified in believing that the pattern in the data is likely to reoccur; that is, the pattern is likely genuine.

7.13 Critical region may have been chosen because values of a test statistic describe the area beneath the normal curve that represents a statistically significant result.

7.15 For a one-

7.17 The following are the two options for one-

Null hypothesis: H0: μ1 ≥ μ2

Research hypothesis: H1: μ1 ≺ μ2

Null hypothesis: H0: μ1 ≤ μ1

Research hypothesis: H1: μ1 ≻ μ2

7.19

If 22.96% are beyond this z score (in the tail), then 77.04% are below it (100% − 22.96%).

If 22.96% are beyond this z score, then 27.04% are between it and the mean (50% − 22.96%).

Because the curve is symmetric, the area beyond a z score of 20.74 is the same as that beyond 0.74. Expressed as a proportion, 22.96% appears as 0.2296.

7.21

The percentage above is the percentage in the tail, 4.36%.

The percentage below is calculated by adding the area below the mean, 50%, and the area between the mean and this z score, 45.64%, to get 95.64%.

The percentage at least as extreme is computed by doubling the amount beyond the z score, 4.36%, to get 8.72%.

7.23

19%

4%

92%

7.25

2.5% in each tail

5% in each tail

0.5% in each tail

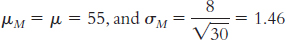

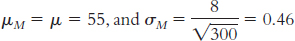

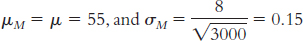

7.27 μM = μ = 500

7.29

Fail to reject the null hypothesis because 1.06 does not exceed the cutoff of 1.96.

Reject the null hypothesis because − 2.06 is more extreme than − 1.96.

Fail to reject the null hypothesis because a z statistic with 7% of the data in the tail occurs between ±1.48 and ±1.47, which are not more extreme than ±1.96.

7.31

Fail to reject the null hypothesis because 0.95 does not exceed 1.65.

Reject the null hypothesis because − 1.77 is more extreme than − 1.65.

Reject the null hypothesis because the critical value resulting in 2% in the tail falls within the 5% cutoff region in each tail.

7.33

The percentage below is 19.49%.

The percentage below is 50% + 29.10% = 79.10%.

The percentage below is 50% + 34.85% = 84.85%.

The percentage below is 39.36%.

7.35

44.18% of scores are between this z score and the mean. We need to add this to the area below the mean, 50%, to get the percentile score of 94.18%.

94.18% of boys are shorter than Kona at this age.

If 94.18% of boys are shorter than Kona, that leaves 5.82% in the tail. To compute how many scores are at least as extreme, we double this to get 11.64%.

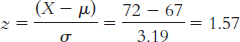

We look at the z table to find a critical value that puts 30% of scores in the tail, or as close as we can get to 30%. A z score of − 0.52 puts 30.15% in the tail. We can use that z score to compute the raw score for height:

X = − 0.52(3.19) + 67 = 65.34 inches

At 72 inches tall, Kona is 6.66 inches taller than Ian.

7.37

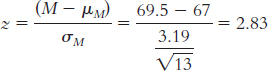

The z statistic indicates that this sample mean is 2.83 standard deviations above the expected mean for samples of size 13. In other words, this sample of boys is, on average, exceptionally tall.

The percentile rank is 99.77%, meaning that 99.77% of sample means would be of lesser value than the one obtained for this sample.

7.39

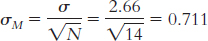

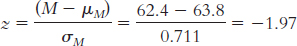

μM = μ = 63.8

2.44% of sample means would be shorter than this mean.

We double 2.44% to account for both tails, so we get 4.88% of the time.

The average height of this group of 15-

year- old females is rare, or statistically significant.

7.41

This is a nondirectional hypothesis because the researcher is predicting that it will alter skin moisture, not just decrease it or increase it.

This is a directional hypothesis because better grades are expected.

This hypothesis is nondirectional because any change is of interest, not just a decrease or an increase in closeness of relationships.

7.43

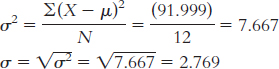

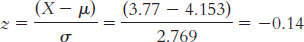

X (X − μ) (X − μ)2 January 4.41 0.257 0.066 February 8.24 4.087 16.704 March 4.69 0.537 0.288 April 3.31 − 0.843 0.711 May 4.07 − 0.083 0.007 June 2.52 − 1.633 2.667 July 10.65 6.497 42.211 August 3.77 − 0.383 0.147 September 4.07 − 0.083 0.007 October 0.04 − 4.113 16.917 November 0.75 − 3.403 11.580 December 3.32 − 0.833 0.694 μ = 4.153; SS = osX − μd2 = 91.999;

August: X = 3.77

The table tells us that 44.43% of scores fall in the tail beyond a z score of −0.14. So, the percentile for August is 44.43%. This is surprising because it is below the mean, and it was the month in which a devastating hurricane hit New Orleans. (Note: It is helpful to draw a picture of the curve when calculating this answer.)

Paragraphs will be different for each student but will include the fact that a monthly total based on missing data is inaccurate. The mean and the standard deviation based on this population, therefore, are inaccurate. Moreover, even if we had these data points, they would likely be large and would increase the total precipitation for August; August would likely be an outlier, skewing the overall mean. The median would be a more accurate measure of central tendency than the mean under these circumstances.

We would look up the z score that has 10% in the tail. The closest z score is 1.28, so the cutoffs are 21.28 and 1.28. (Note: It is helpful to draw a picture of the curve that includes these z scores.) We can then convert these z scores to raw scores. X = z(σ) + μ = − 1.28(2.769) + 4.153 = 0.61; X = z(σ) + μ = 1.28(2.769) + 4.153 = 7.70. Only October (0.04) is below 0.61. Only February (8.24) and July (10.65) are above 7.70. These data are likely inaccurate, however, because the mean and the standard deviation of the population are based on an inaccurate mean from August. Moreover, it is quite likely that August would have been in the most extreme upper 10% if there were complete data for this month.

7.45

The independent variable is the division. Teams were drawn from either the Football Bowl Subdivision (FBS) or the Football Championship Division (FCS). The dependent variable is the spread.

Random selection was not used. Random selection would entail having some process for randomly selecting FCS games for inclusion in the sample. We did not describe such a process and, in fact, took all the FCS teams from one league within that division.

The populations of interest are football games between teams in the upper divisions of the NCAA (FBS and FCS).

The comparison distribution would be the distribution of sample means.

The first assumption—

that the dependent variable is a scale variable— is met in this example. The dependent variable is point spread, which is a scale measure. The second assumption— that participants are randomly selected— is not met. As described in part (b), the teams for inclusion in the sample were not randomly selected. The third assumption— that the distribution of scores in the population of interest must be normal— is not likely to have been met. The standard deviation is almost as large as the mean, an indication that one or more outliers are creating positive skew. Moreover, we only have a sample size of 4, not the 30 we would need to have a normal distribution of means.

7.47 Because we have a population mean and a population standard deviation, we can use a z test. To conduct this study, we would need a sample of red-

7.49

The independent variable is whether a patient received the video with information about orthodontics. One group received the video; the other group did not. The dependent variable is the number of hours per day patients wore their appliances.

The researcher did not use random selection when choosing his sample. He selected the next 15 patients to come into his clinic.

Step 1: Population 1 is patients who did not receive the video. Population 2 is patients who received the video. The comparison distribution will be a distribution of means. The hypothesis test will be a z test because we have only one sample and we know the population mean and the standard deviation. This study meets the assumption that the dependent variable is a scale measure. We might expect the distribution of number of hours per day people wear their appliances to be normally distributed, but from the information provided it is not possible to tell for sure. Additionally, the sample includes fewer than 30 participants, so the central limit theorem may not apply here. The distribution of sample means may not approach normality. Finally, the participants were not randomly selected. Therefore, we may not want to generalize the results beyond this sample.

Page C-22Step 2: Null hypothesis: Patients who received the video do not wear their appliances a different mean number of hours per day than patients who did not receive the video: H0: μ1 = μ2.

Research hypothesis: Patients who received the video wear their appliances a different mean number of hours per day than patients who did not receive the video: H1: μ1 ≠ μ2.

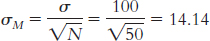

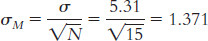

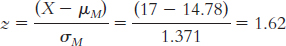

Step 3: μM = μ = 14.78;

Step 4: The cutoff z statistics, based on a p level of 0.05 and a two-